Ijraset Journal For Research in Applied Science and Engineering Technology

From Pixels to Predictions: A Comprehensive Survey of Image Classification

Authors: Lokesh Kumar Boran, Anwar Husain Joya

DOI Link: https://doi.org/10.22214/ijraset.2024.65113

Abstract

Image classification, a fundamental task in computer vision, has undergone significant evolution over the years, driven by advancements in deep learning and machine learning techniques. This paper presents a comprehensive survey of image classification techniques, covering its journey from early methods to state-of-the-art approaches and future directions. We delve into the fundamentals of image classification, including traditional methods and the pivotal role of Convolutional Neural Networks (CNNs). The survey explores advanced techniques such as transfer learning, attention mechanisms, and multimodal learning, along with their applications across various domains including healthcare, autonomous vehicles, social media, and more. Additionally, future trends and directions in image classification are discussed, focusing on weakly supervised learning, multimodal learning, continual learning, and ethical considerations. Through this survey, we aim to provide insights into the past, present, and future of image classification, highlighting its significance, challenges, and promising avenues of research and application.

I. INTRODUCTION

A. Background and Motivation

Image classification [1], a fundamental task in computer vision, involves categorizing images into predefined classes based on their visual content. This capability has become increasingly important across a wide range of fields including medical diagnostics, autonomous driving, social media, and security. The ability to accurately classify images is crucial for applications such as identifying diseases from medical images, detecting objects in autonomous driving, and recognizing faces on social media platforms. Image classification has evolved significantly since its inception. Early methods relied heavily on manual feature extraction, requiring domain expertise and extensive preprocessing. The advent of machine learning introduced more sophisticated techniques, but it was the breakthrough of deep learning that truly revolutionized the field. Deep learning, particularly Convolutional Neural Networks (CNNs), has enabled machines to automatically learn features from data, leading to unprecedented levels of accuracy in image classification tasks [1] [2].

B. Evolution of Image Classification

The field of image classification has witnessed remarkable advancements over the past few decades. Initially, researchers focused on hand-crafted features and traditional machine learning algorithms. These methods, although innovative at the time, faced limitations in handling complex and high-dimensional data. The emergence of deep learning, specifically the development of CNNs, marked a significant turning point. CNNs demonstrated superior performance by learning hierarchical feature representations directly from images [3]. This shift was driven by the availability of large-scale annotated datasets and advancements in computational power, particularly the use of Graphics Processing Units (GPUs). The success of CNNs in competitions such as the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) highlighted their potential and accelerated research in the field. Since then, numerous innovative architectures and techniques have been developed, pushing the boundaries of what is possible in image classification.

C. Objective of the Survey

This survey aims to provide a comprehensive overview of the journey of image classification, tracing its evolution from early manual methods to the state-of-the-art deep learning techniques. The objective is to highlight significant milestones, key challenges, and future directions in the field. By exploring the historical context and the latest advancements, this survey seeks to offer insights into the ongoing developments and emerging trends in image classification.

Specifically, this paper will:

- Review Early Techniques and Foundations: Discuss the initial methods of image classification, focusing on manual feature extraction and classical machine learning approaches.

- Examine the Advent of Deep Learning: Explore the impact of deep learning on image classification, with an emphasis on CNNs and their transformative effect.

- Analyze Advanced Techniques and Architectures: Delve into recent advancements, including transfer learning, generative models, attention mechanisms, and transformers.

- Address Challenges and Solutions: Identify the key challenges faced in image classification and discuss potential solutions.

- Highlight Applications and Real-World Implementations: Provide examples of how image classification is applied in various domains.

- Discuss Future Trends and Directions: Offer insights into future research directions and the potential integration of image classification with other technologies.

D. Significance of the Survey

As image classification continues to evolve, it is crucial for researchers, practitioners, and students to understand its journey and the transformative impact of various techniques. This survey serves as a valuable resource for those looking to gain a comprehensive understanding of the field, offering a structured analysis of past and present methodologies and providing a foundation for future research and development.

In summary, the journey of image classification is a testament to the rapid advancements in computer vision and machine learning. By tracing its evolution, this survey aims to provide a holistic view of the field, highlighting the progress made and the exciting possibilities that lie ahead.

II. EARLY TECHNIQUES AND FOUNDATIONS

A. Types of Image Classification

Depending on the problem at hand, different types of image classification methodologies are employed. These include binary, multiclass, multilabel, and hierarchical classification.

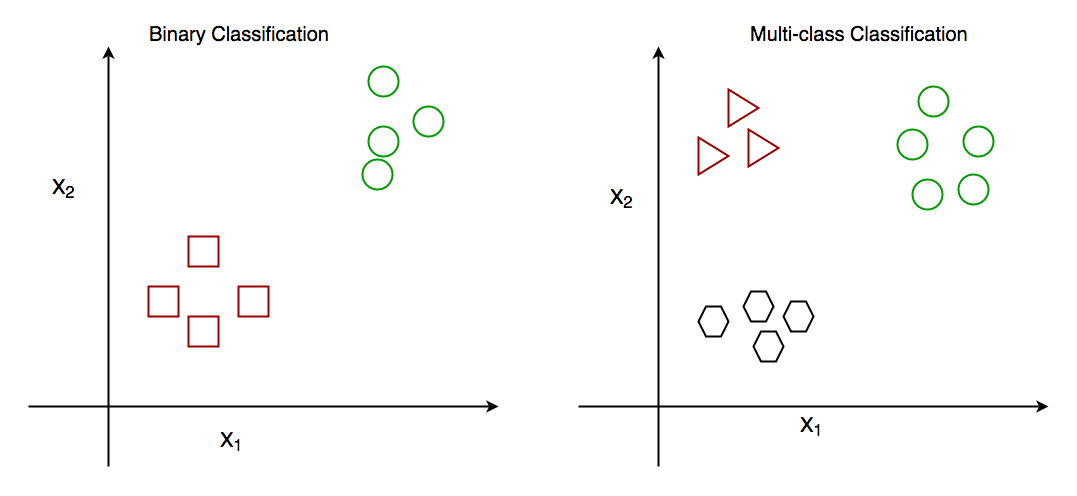

Binary Classification: Binary classification follows an either-or logic to label images and categorizes unknown data points into two categories as shown in figure 1. It is used when the task requires a yes/no answer or distinguishing between two classes. Binary classification is used to categorize benign or malignant tumors in medical imaging, analyze product quality to detect defects, or classify spam and non-spam emails.

Multiclass Classification: Multiclass classification categorizes items into three or more classes. It is used when the task involves distinguishing between multiple classes or categories as shown in figure 1. In natural language processing, sentiment analysis involves categorizing text into multiple emotions or sentiments. In medical diagnosis, diseases may be classified into different categories.

Figure 1: Binary and Multiclass Classification

- Multilabel Classification: Multilabel classification [4] allows an item to be assigned multiple labels instead of just one. It is used when each item can belong to more than one class simultaneously. An example is classifying the colors in an image, where a single image may contain multiple colors. For instance, a picture of a fruit salad may have labels such as red, orange, yellow, and purple based on the fruits present.

- Hierarchical Classification: Hierarchical classification [4] organizes classes into a hierarchical structure based on their similarities. Higher-level classes represent broader categories, while lower-level classes are more specific. In a hierarchical classification of fruits, the top level might include categories like citrus fruits, berries, and tropical fruits. Subcategories under citrus fruits could include oranges, lemons, and limes, and so on.
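The difference between binary, multiclass, and multilabel classification shows up in how raw model scores are turned into labels. The following is a minimal sketch, assuming a model has already produced one score per class; the scores, the 0.5 threshold, and the function names are illustrative and not taken from any cited work.

```python
import math

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(score):
    """Map one raw score to an independent probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-score))

def predict_binary(score, threshold=0.5):
    """Binary: a single yes/no decision from one score."""
    return sigmoid(score) >= threshold

def predict_multiclass(scores, class_names):
    """Multiclass: exactly one label, the class with the highest probability."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return class_names[best]

def predict_multilabel(scores, class_names, threshold=0.5):
    """Multilabel: every class whose own probability passes the threshold."""
    return [name for s, name in zip(scores, class_names) if sigmoid(s) >= threshold]

# Hypothetical scores for the fruit salad color example
colors = ["red", "orange", "yellow", "purple"]
scores = [2.0, -1.0, 0.5, 1.2]
single = predict_multiclass(scores, colors)
multi = predict_multilabel(scores, colors)
```

With these scores the multiclass rule returns a single color, while the multilabel rule keeps every color whose individual probability is at least 0.5.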

B. Manual Feature Extraction

In the early days of image classification, before the rise of deep learning, feature extraction was predominantly a manual and heuristic-driven process. Researchers relied on domain knowledge to design algorithms that could extract discriminative features from images. These features aimed to capture essential characteristics such as edges, textures, colors, and shapes.

- Edge Detection and Shape Analysis: Techniques like Sobel, Canny edge detectors, and Hough transform were commonly used to identify edges and contours in images. Shape analysis methods were employed for object recognition tasks [5].

- Texture and Statistical Features: Textural features such as Haralick features, Gabor filters, and Local Binary Patterns (LBP) were utilized to characterize surface textures in images. Statistical features like histograms of intensity or color distributions were also popular [6].

- Color Histograms and Moments: Color information was represented using histograms of color distributions or moments such as mean, variance, and skewness. These features were effective for tasks where color played a significant role [7].
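To make the idea of handcrafted features concrete, the sketch below computes two of the descriptors named above on a toy grayscale image: a Sobel gradient magnitude map and a normalized intensity histogram. The image, the bin count, and the function names are assumptions chosen for illustration.

```python
def sobel_magnitude(image):
    """Gradient magnitude at the interior pixels of a 2-D grayscale image."""
    gx_kernel = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # horizontal changes
    gy_kernel = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # vertical changes
    rows, cols = len(image), len(image[0])
    out = []
    for r in range(1, rows - 1):
        out_row = []
        for c in range(1, cols - 1):
            gx = gy = 0
            for i in range(3):
                for j in range(3):
                    pixel = image[r + i - 1][c + j - 1]
                    gx += gx_kernel[i][j] * pixel
                    gy += gy_kernel[i][j] * pixel
            out_row.append((gx * gx + gy * gy) ** 0.5)
        out.append(out_row)
    return out

def intensity_histogram(image, bins=4, max_value=256):
    """Fraction of pixels falling into each of `bins` equal intensity ranges."""
    counts = [0] * bins
    for row in image:
        for pixel in row:
            counts[min(pixel * bins // max_value, bins - 1)] += 1
    total = sum(counts)
    return [c / total for c in counts]

# Toy 4x4 image: dark left half, bright right half (a vertical edge)
image = [[0, 0, 255, 255] for _ in range(4)]
edges = sobel_magnitude(image)
hist = intensity_histogram(image)
```

The resulting edge map and histogram would then be concatenated into a feature vector and passed to a classical classifier.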

Manual feature extraction had several limitations, including:

- Dependency on Expertise: Designing effective features required domain expertise and manual tuning, making it time-consuming and often subjective.

- Limited Generalization: Handcrafted features might not generalize well across different datasets or domains.

- Difficulty in Handling Variability: These methods struggled with variations in scale, rotation, illumination, and viewpoint.

C. Classical Machine Learning Approaches

With extracted features, classical machine learning algorithms were applied for classification tasks:

- Support Vector Machines (SVM): SVMs gained popularity for their ability to find optimal decision boundaries in high-dimensional feature spaces. They were widely used for binary and multiclass classification tasks [8].

- k-Nearest Neighbors (k-NN): k-NN algorithms classified images based on similarity measures in the feature space. While simple and intuitive, they incurred a high computational cost at prediction time, because each query must be compared against the stored training samples [9].

- Decision Trees and Random Forests: Decision trees recursively split the feature space to make decisions, and Random Forests aggregated multiple trees for improved performance [10].

- Bayesian Classifiers: Bayesian methods, including Naive Bayes classifier, made probabilistic predictions based on feature distributions and class priors [11].
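As an illustration of how a classical classifier operates on extracted features, here is a minimal k-NN sketch. The two-dimensional feature vectors and the benign/malignant labels are hypothetical; in practice the features would come from a step such as edge, texture, or color extraction.

```python
import math
from collections import Counter

def knn_predict(train_features, train_labels, query, k=3):
    """Label the query by majority vote among its k nearest training vectors."""
    # Every prediction scans the whole training set, which is why the
    # method becomes expensive as the dataset grows.
    distances = sorted(
        (math.dist(features, query), label)
        for features, label in zip(train_features, train_labels)
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]

# Hypothetical 2-D feature vectors for two classes
train_features = [
    (0.10, 0.20), (0.20, 0.10), (0.15, 0.15),
    (0.90, 0.80), (0.80, 0.90), (0.85, 0.95),
]
train_labels = ["benign"] * 3 + ["malignant"] * 3

near_first_cluster = knn_predict(train_features, train_labels, (0.2, 0.2))
near_second_cluster = knn_predict(train_features, train_labels, (0.7, 0.9))
```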

Despite their usefulness, these classical methods had limitations:

- Handcrafted Features: Performance heavily relied on the quality of manually engineered features.

- Scalability: Computational demands grew with the increase in feature dimensionality and dataset size.

- Limited Representation: Difficulty in capturing complex patterns and hierarchical structures in data.

D. Transition to Deep Learning

While manual feature extraction and classical methods had their merits, they struggled to cope with the increasing complexity and variability of real-world data. The transition to deep learning, particularly Convolutional Neural Networks (CNNs) [1-2], addressed many of these challenges, marking a significant shift in the landscape of image classification.

The subsequent section will delve into the transformative impact of deep learning and CNNs on image classification.

III. THE ADVENT OF DEEP LEARNING

The advent of deep learning, particularly Convolutional Neural Networks (CNNs), has revolutionized the field of image classification. Deep learning techniques have demonstrated unprecedented performance in learning hierarchical representations directly from raw data, eliminating the need for handcrafted features. This section explores the transformative impact of deep learning on image classification.

A. Introduction to Deep Learning

Deep learning is a subfield of machine learning that focuses on learning representations of data through neural networks with multiple layers. Unlike traditional machine learning approaches, deep learning algorithms automatically learn hierarchical features from data, allowing for more efficient representation learning [12].

- Neural Networks: Deep learning models are inspired by the structure of the human brain, composed of interconnected layers of artificial neurons. Each layer extracts increasingly abstract features from the input data.

- Deep Architectures: Deep learning architectures consist of multiple layers, enabling the model to learn complex patterns and relationships in the data.

- End-to-End Learning: Deep learning models are capable of end-to-end learning, where raw data is directly inputted into the network, and the model learns to map inputs to outputs without the need for manual feature engineering [2].

B. Convolutional Neural Networks (CNNs)

Convolutional Neural Networks (CNNs) [1-2] have emerged as the cornerstone of deep learning for image-related tasks. CNNs are specifically designed to handle grid-like data such as images and excel at capturing spatial hierarchies of features.

- Convolutional Layers: Convolutional layers apply learnable filters (kernels) across the input image to detect various features such as edges, textures, and patterns.

- Pooling Layers: Pooling layers down-sample the feature maps, reducing computational complexity and providing a degree of invariance to small translations.

- Fully Connected Layers: Fully connected layers at the end of the network combine extracted features to make predictions.
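The three layer types above can be sketched as a tiny forward pass in plain Python. This is a simplified illustration under stated assumptions: a single hand-picked 2x2 filter instead of learned filters, one channel, no padding, and made-up fully connected weights.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding) and sum elementwise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

def relu(feature_map):
    """Keep positive responses and zero out negative ones."""
    return [[max(0, v) for v in row] for row in feature_map]

def max_pool2x2(feature_map):
    """Non-overlapping 2x2 max pooling; halves each spatial dimension."""
    return [
        [
            max(feature_map[r][c], feature_map[r][c + 1],
                feature_map[r + 1][c], feature_map[r + 1][c + 1])
            for c in range(0, len(feature_map[0]) - 1, 2)
        ]
        for r in range(0, len(feature_map) - 1, 2)
    ]

def fully_connected(features, weights, bias):
    """Weighted sum of the flattened features, producing one class score."""
    return sum(f * w for f, w in zip(features, weights)) + bias

# Toy 5x5 image with a vertical edge between columns 1 and 2
image = [[0, 0, 1, 1, 1] for _ in range(5)]
vertical_edge_filter = [[-1, 1], [-1, 1]]  # hand-picked, not learned

feature_map = relu(conv2d(image, vertical_edge_filter))   # 4x4
pooled = max_pool2x2(feature_map)                         # 2x2
flat = [v for row in pooled for v in row]
score = fully_connected(flat, [0.5, -0.5, 0.5, -0.5], bias=0.1)
```

In a trained CNN the filter values and the fully connected weights are learned by gradient descent rather than set by hand.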

C. Key Milestones in CNN Development

- LeNet-5: Proposed by Yann LeCun et al. [12] in 1998, LeNet-5 was one of the earliest CNN architectures, designed for handwritten digit recognition. It consisted of convolutional and pooling layers followed by fully connected layers.

- AlexNet: Introduced by Alex Krizhevsky et al. [13] in 2012, AlexNet marked a breakthrough in image classification by significantly outperforming traditional methods in the ImageNet challenge. It utilized deeper architectures, ReLU activation functions, dropout regularization, and GPU acceleration.

- VGG: The Visual Geometry Group (VGG) model, proposed by Simonyan and Zisserman [14] in 2014, emphasized deeper networks with small convolutional filters. VGG-16 and VGG-19 architectures became popular for their simplicity and effectiveness.

- GoogLeNet (Inception): Introduced by Szegedy et al. [15] in 2014, GoogLeNet introduced the concept of inception modules, allowing for efficient use of computational resources and deeper networks while maintaining accuracy.

- ResNet: Residual Network, proposed by He et al. [16] in 2015, introduced skip connections to address the vanishing gradient problem in very deep networks. ResNet architectures enabled training of extremely deep networks (50, 101, or 152 layers).
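The skip connection that ResNet introduced can be illustrated with a small sketch. It assumes dense layers in place of the convolutions and batch normalization used in real residual blocks, and the weights are made up; the point is only that the block computes F(x) + x, so it passes its input through unchanged when the learned part F contributes nothing.

```python
def relu(vec):
    return [max(0.0, v) for v in vec]

def linear(vec, weights):
    """Multiply a weight matrix (given as a list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vec)) for row in weights]

def residual_block(x, w1, w2):
    """Compute relu(F(x) + x), where F is two linear layers with a ReLU between."""
    hidden = relu(linear(x, w1))
    fx = linear(hidden, w2)
    # The skip connection adds the unchanged input back to the learned residual.
    return relu([f + xi for f, xi in zip(fx, x)])

x = [1.0, 2.0]
zero_weights = [[0.0, 0.0], [0.0, 0.0]]
small_weights = [[0.1, 0.0], [0.0, 0.1]]

identity_out = residual_block(x, zero_weights, zero_weights)
adjusted_out = residual_block(x, small_weights, small_weights)
```

With all-zero weights the block reduces to the identity for non-negative inputs, which suggests why stacking many such blocks does not by itself degrade the signal.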

D. Transfer Learning and Pre-trained Models

Transfer learning has been a significant development in deep learning for image classification. Pre-trained models, trained on large datasets like ImageNet, can be fine-tuned on smaller datasets for specific tasks, enabling effective learning with limited labeled data.

- Transfer Learning: Transfer learning involves leveraging knowledge from pre-trained models to new tasks with small datasets, thus accelerating training and improving performance [16].

- Popular Pre-trained Models: Models like VGG, ResNet, Inception, and EfficientNet trained on large-scale datasets have become popular choices for transfer learning due to their generalization capabilities [18].

E. Impact and Advantages

The adoption of deep learning techniques for image classification has brought several advantages:

- Automatic Feature Learning: Deep learning models automatically learn hierarchical features from raw data, eliminating the need for manual feature engineering.

- Better Generalization: Given sufficiently large and diverse training data, deep learning models tend to generalize better across datasets and domains than handcrafted pipelines, capturing intricate patterns and variations.

- Scalability: Deep learning architectures scale effectively with increasing data and computational resources.

The advent of deep learning has propelled image classification to new heights, enabling applications across various domains with unprecedented accuracy and efficiency.

IV. ADVANCED TECHNIQUES AND ARCHITECTURES

A. Transfer Learning and Fine-Tuning

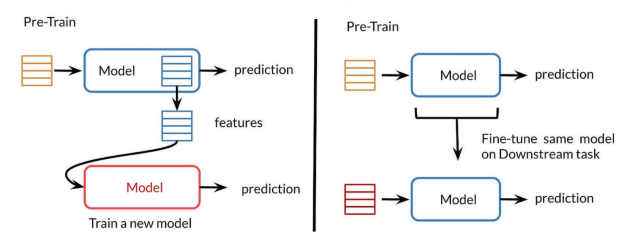

Transfer learning has emerged as a fundamental technique in image classification, leveraging pre-trained models to boost performance on new tasks with limited labeled data. Fine-tuning, a common practice in transfer learning, involves taking a pre-trained model and adapting it to a specific task by retraining some or all of the model's parameters on the new dataset. This approach allows the model to quickly learn task-specific features while retaining the knowledge and representations learned from the original dataset. Additionally, domain adaptation techniques have been developed to address domain shifts between the source and target datasets, improving the model's robustness across different data distributions. The concepts of transfer learning and fine-tuning are illustrated in Figure 2.

Figure 2: Conceptual illustration of the difference between transfer learning and fine-tuning
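One common way to apply transfer learning is to freeze the pre-trained feature extractor and train only a new classification head. The sketch below illustrates that workflow under strong simplifications: `frozen_backbone` is a hypothetical stand-in for a pre-trained network (it just computes mean brightness and left/right contrast), and the head is a logistic regression trained by stochastic gradient descent on four toy images.

```python
import math

def frozen_backbone(image):
    """Hypothetical stand-in for a pre-trained feature extractor (never updated)."""
    flat = [p for row in image for p in row]
    half = len(image[0]) // 2
    left = sum(p for row in image for p in row[:half])
    right = sum(p for row in image for p in row[half:])
    return [sum(flat) / len(flat), (right - left) / len(flat)]

def predict_proba(features, w, b):
    """Probability of class 1 from the trainable head."""
    z = sum(wi * xi for wi, xi in zip(w, features)) + b
    return 1.0 / (1.0 + math.exp(-z))

def train_head(feature_rows, labels, epochs=200, lr=0.5):
    """Fit only the new head; the backbone's features are treated as fixed inputs."""
    w, b = [0.0] * len(feature_rows[0]), 0.0
    for _ in range(epochs):
        for x, y in zip(feature_rows, labels):
            err = predict_proba(x, w, b) - y  # gradient of log loss w.r.t. the logit
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b

# Small target dataset: label 1 means the right half is brighter
images = [
    [[0.0, 1.0], [0.0, 1.0]],
    [[1.0, 0.0], [1.0, 0.0]],
    [[0.1, 0.9], [0.2, 0.8]],
    [[0.9, 0.1], [0.8, 0.2]],
]
labels = [1, 0, 1, 0]

features = [frozen_backbone(img) for img in images]
w, b = train_head(features, labels)

new_image = [[0.2, 0.7], [0.1, 0.9]]
prob_bright_right = predict_proba(frozen_backbone(new_image), w, b)
```

Fine-tuning differs in that some or all backbone parameters would also receive gradient updates, usually with a smaller learning rate.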

B. Generative Models and Data Augmentation

Generative models, particularly Generative Adversarial Networks (GANs) [19], have become instrumental in augmenting training datasets for image classification tasks. GANs can generate synthetic images that closely resemble real data, which can be used to augment the training set, thereby increasing its size and diversity. Alongside generative models, data augmentation techniques play a crucial role by applying various transformations to existing images, such as rotation, scaling, flipping, and adding noise. Data augmentation helps in exposing the model to different variations of the same image, improving its robustness and generalization to unseen data.
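The transformation-based augmentations mentioned above can be sketched on a small image stored as a list of rows. The noise scale, the 0 to 255 intensity range, and the function names are assumptions for illustration; scaling and GAN-based generation are not covered here.

```python
import random

def horizontal_flip(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]

def rotate90_clockwise(image):
    """Rotate the image by 90 degrees clockwise."""
    return [list(col) for col in zip(*image[::-1])]

def add_noise(image, rng, scale=10, max_value=255):
    """Add uniform integer noise in [-scale, scale], clipped to the valid range."""
    return [
        [min(max_value, max(0, p + rng.randint(-scale, scale))) for p in row]
        for row in image
    ]

def augment(image, rng):
    """Return the original image plus three transformed variants."""
    return [
        image,
        horizontal_flip(image),
        rotate90_clockwise(image),
        add_noise(image, rng),
    ]

rng = random.Random(0)  # fixed seed so the example is repeatable
image = [[1, 2], [3, 4]]
variants = augment(image, rng)
```

Each variant keeps the label of the original image, so one labeled example yields several training samples.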

C. Attention Mechanisms and Transformers

Attention mechanisms have shown remarkable success in improving the performance of image classification models by enabling the model to focus on relevant parts of the input image. Self-attention mechanisms, originally developed for natural language processing, have been adapted to process visual inputs effectively. These mechanisms allow the model to selectively attend to important regions while suppressing irrelevant ones, enhancing the model's ability to capture long-range dependencies and fine-grained details. Transformers, which utilize self-attention mechanisms, have gained popularity in image classification tasks. Vision Transformers (ViTs) [20] replace traditional convolutional layers with self-attention mechanisms, achieving competitive performance on various datasets.
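The core computation of self-attention can be sketched in a few lines. This is a simplified illustration, assuming the queries, keys, and values are the token vectors themselves; a real Vision Transformer applies learned projection matrices to patch embeddings and uses multiple heads, none of which is shown here.

```python
import math

def softmax(scores):
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """Scaled dot-product self-attention with Q = K = V = tokens (an assumption)."""
    dim = len(tokens[0])
    outputs, all_weights = [], []
    for query in tokens:
        # Similarity of this token to every token, scaled by sqrt(dim)
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        weights = softmax(scores)
        # Output is the attention-weighted average of all token vectors
        outputs.append([
            sum(w * value[j] for w, value in zip(weights, tokens))
            for j in range(dim)
        ])
        all_weights.append(weights)
    return outputs, all_weights

# Three hypothetical patch embeddings; the first and third are identical
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
outputs, attention = self_attention(tokens)
```

Because every token attends to every other token regardless of position, distant image regions can influence each other in a single layer.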

D. Novel Architectures

Recent years have seen the development of novel architectures aimed at improving efficiency, accuracy, and parameter optimization in image classification models. EfficientNet [18], for instance, introduces a scalable architecture by balancing network depth, width, and resolution, achieving state-of-the-art performance with fewer parameters. DenseNet [18] connects each layer to every other layer in a feed-forward fashion, promoting feature reuse and strengthening feature propagation. MobileNet [18] and SqueezeNet [18] focus on model efficiency and compression techniques to deploy models on resource-constrained devices without sacrificing performance.

E. Multimodal Approaches

Multimodal approaches integrate information from multiple modalities such as images, text, and audio to enhance classification performance and enable richer understanding of data. Techniques for multimodal fusion combine information from different modalities, enabling tasks like image captioning, visual question answering, and image-text retrieval. Cross-modal pre-training has gained traction, where models are pre-trained jointly on multiple modalities before fine-tuning on specific tasks, leading to improved performance and robustness across domains.

F. Continual Learning and Few-Shot Learning

Continual learning techniques aim to enable models to learn from new data over time without forgetting previously learned tasks. Meta-learning algorithms [21] allow models to quickly adapt to new tasks with limited data by learning how to learn. Memory-augmented networks equipped with external memory enable continual learning by storing information from past tasks and experiences. Few-shot learning techniques focus on learning from a limited number of labeled examples, which is crucial for tasks where labeled data is scarce. Each of these advanced techniques and architectures contributes to the ongoing progress in image classification, addressing various challenges and opening up new possibilities for real-world applications.

V. CHALLENGES AND SOLUTIONS

Image classification faces various challenges, ranging from data-related issues to model interpretability. However, researchers have proposed several solutions to mitigate these challenges and improve the effectiveness of image classification systems.

A. Data-Related Challenges

Data is fundamental to the success of image classification models, but several challenges arise in acquiring, preprocessing, and utilizing data effectively.

- Data Quality and Quantity: Obtaining large and high-quality labeled datasets can be expensive and time-consuming, especially for niche domains. Noisy or biased data can negatively impact model performance.

- Data Diversity: The diversity of data, including variations in illumination, viewpoint, and object occlusion, poses challenges for model generalization.

Solutions:

- Data Augmentation: Techniques like rotation, scaling, cropping, and adding noise can artificially increase the diversity of training data, helping models generalize better.

- Synthetic Data Generation: Generating synthetic data using techniques like GANs can supplement real data, especially in cases where labeled data is scarce.

B. Model Interpretability and Explainability

Understanding why a model makes certain predictions is crucial for trust and adoption, particularly in sensitive domains like healthcare and criminal justice.

- Black-box Models: Deep learning models are often perceived as black boxes, making it challenging to interpret their decisions.

- Bias and Fairness: Models may exhibit biases learned from the training data, leading to unfair or discriminatory outcomes.

Solutions:

- Interpretability Techniques: Methods such as Grad-CAM (Gradient-weighted Class Activation Mapping) [22] and LIME (Local Interpretable Model-agnostic Explanations) [23] provide insights into model predictions by highlighting important regions in the input image.

- Fairness-aware Training: Techniques to mitigate biases and ensure fairness in models by carefully designing loss functions and evaluating model performance across different demographic groups.
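Evaluating performance across groups, as mentioned above, can start with something as simple as per-group accuracy. The sketch below assumes each example carries a group identifier; the labels, predictions, and group names are invented for illustration, and accuracy gap is only one of several possible fairness measures.

```python
from collections import defaultdict

def accuracy_by_group(y_true, y_pred, groups):
    """Accuracy computed separately for each group identifier."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {group: correct[group] / total[group] for group in total}

def max_accuracy_gap(per_group):
    """Largest difference in accuracy between any two groups."""
    values = list(per_group.values())
    return max(values) - min(values)

# Hypothetical evaluation data
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 0, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

per_group = accuracy_by_group(y_true, y_pred, groups)
gap = max_accuracy_gap(per_group)
```

A large gap signals that the model serves one group worse than another and that the training data or objective may need adjustment.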

C. Computational and Resource Constraints

Training and deploying deep learning models for image classification often require significant computational resources, which can be prohibitive for many applications.

- Computational Cost: Deep neural networks with millions of parameters demand high computational power for training.

- Model Size and Deployment: Large model sizes may not be practical for deployment on resource-constrained devices.

Solutions:

- Model Compression: Techniques like pruning, quantization, and knowledge distillation reduce the size of models without significantly sacrificing performance.

- Hardware Optimization: Specialized hardware such as TPUs (Tensor Processing Units) and efficient model architectures like MobileNet [18] are designed to improve inference speed and reduce computational cost.
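Two of the compression techniques listed above, pruning and quantization, can be sketched on a flat list of weights. This is a minimal illustration assuming magnitude-based pruning and symmetric 8-bit quantization; the weight values are made up, and knowledge distillation is not shown.

```python
def magnitude_prune(weights, sparsity):
    """Set the smallest-magnitude fraction of weights to zero."""
    n_prune = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned

def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(w) for w in weights) or 1.0
    scale = max_abs / 127
    return [round(w / scale) for w in weights], scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]

weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
pruned = magnitude_prune(weights, sparsity=0.5)
quantized, scale = quantize_int8(pruned)
restored = dequantize(quantized, scale)
```

Each restored weight differs from its pruned value by at most half the quantization step, which is the accuracy traded for storing one byte per weight instead of a full float.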

D. Ethical and Societal Implications

Image classification technologies raise ethical concerns regarding privacy, bias, and societal impact.

- Privacy: Models trained on sensitive data may compromise individual privacy if not handled carefully.

- Bias and Discrimination: Biases in training data can lead to unfair treatment of certain groups or individuals.

Solutions:

- Data Governance and Regulation: Establishing guidelines and regulations for data collection, model training, and deployment to ensure privacy protection and fairness.

- Bias Mitigation Techniques: Techniques to detect and mitigate biases in data and models, such as fairness constraints during training and bias-aware evaluation metrics.

Addressing these challenges is crucial for the responsible development and deployment of image classification systems, ensuring they are fair, transparent, and trustworthy.

VI. APPLICATIONS AND REAL-WORLD IMPLEMENTATIONS

A. Medical Imaging

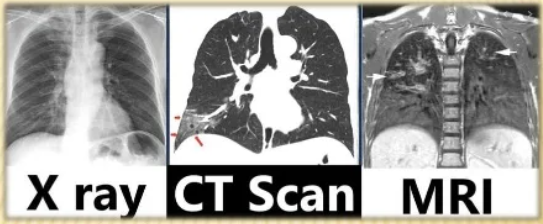

In the field of medical imaging, image classification is paramount for aiding diagnosis and treatment planning, assisting healthcare professionals in interpreting various types of medical images. These include X-rays, MRI scans, CT scans, and histopathology slides as shown in figure 3. Image classification models are utilized to detect and classify diseases such as cancer, pneumonia, diabetic retinopathy, and Alzheimer's disease from medical images. Moreover, segmentation techniques combined with classification help in identifying and delineating regions of interest within medical images, facilitating precise diagnosis and surgical interventions.

Figure 3: Different medical scans

B. Autonomous Vehicles

Autonomous vehicles rely extensively on image classification for understanding their environment and making informed decisions while navigating. Object detection and recognition are crucial tasks where image classification is applied to identify pedestrians, vehicles, traffic signs, and obstacles on the road. Semantic segmentation techniques classify each pixel in an image, enabling vehicles to understand road scenes, detect lanes, and navigate safely, contributing to the development of self-driving technology.

C. Social Media and Content Moderation

Social media platforms leverage image classification for various purposes, including content recommendation, image tagging, and content moderation. Automated tagging of images based on their content assists users in organizing and searching for images efficiently. Moreover, image classification models are employed for content moderation, automatically detecting and filtering inappropriate or harmful content such as violence, nudity, and hate speech, ensuring a safer online environment for users.

D. Agriculture and Environmental Monitoring

Image classification plays a significant role in agriculture and environmental monitoring tasks. In agriculture, it helps in monitoring crop health, identifying diseases, pests, and nutrient deficiencies from drone or satellite images. In environmental monitoring, image classification techniques are used for land cover classification, deforestation detection, and wildlife conservation. These technologies aid in assessing environmental impact, managing natural resources, and preserving biodiversity.

E. Security and Surveillance

Security and surveillance systems utilize image classification for threat detection, facial recognition, and monitoring public spaces. Image classification algorithms can identify suspicious objects or activities in public areas, airports, and critical infrastructure, enhancing security measures. Facial recognition technology enables the recognition of individuals for access control, surveillance, and law enforcement applications. Additionally, anomaly detection algorithms can identify abnormal behavior or events in surveillance footage for crime prevention and public safety.

F. Retail and E-Commerce

In the retail and e-commerce sectors, image classification enhances various aspects of the shopping experience. It enables product recognition, allowing for improved search and recommendation systems based on uploaded images. Visual search capabilities empower users to search for products using images, streamlining the shopping process. Moreover, image classification is employed in quality control processes, automating the inspection of products for defects and ensuring high-quality standards in manufacturing.

Image classification technologies continue to drive innovation and efficiency across diverse industries, revolutionizing processes, improving decision-making, and enhancing user experiences in numerous applications.

VII. FUTURE TRENDS AND DIRECTIONS

The future of image classification is shaped by ongoing advancements in technology and emerging research directions, offering exciting possibilities and challenges.

A. Deep Learning Advancements

Deep learning will continue to be at the forefront of image classification research, with a focus on improving model efficiency, interpretability, and generalization. Future architectures will aim to develop more efficient models that achieve higher accuracy with fewer parameters, enabling deployment on resource-constrained devices. Additionally, efforts will be directed towards enhancing the interpretability of deep learning models, which is crucial for understanding model decisions and building trust in automated systems.

B. Weakly Supervised and Self-Supervised Learning

Efforts in weakly supervised and self-supervised learning will intensify to reduce reliance on large labeled datasets, making image classification more accessible and scalable. Weakly supervised learning will enable models to learn from weak supervision signals such as image-level labels or bounding boxes, while self-supervised learning techniques will allow models to learn from the data itself without explicit supervision, leading to better feature representations.

C. Multimodal and Cross-Modal Learning

Integration of information from multiple modalities and learning across modalities will play a crucial role in handling complex data. Techniques for multimodal fusion will enable combining vision with other modalities such as text, audio, and sensor data, facilitating richer understanding and more robust classification. Moreover, models capable of cross-modal learning will enable joint learning from multiple modalities, advancing tasks like image-text understanding and enabling more human-like comprehension.

D. Lifelong and Continual Learning

Future efforts will focus on developing models capable of continual learning, adapting to new data and tasks over time without forgetting previous knowledge. Lifelong learning approaches will enable models to continuously learn from new data streams, accumulating knowledge and adapting to changing environments. Additionally, techniques for few-shot learning will empower models to generalize better to new classes or tasks with limited labeled data.

E. Robustness and Adversarial Defense

Ensuring robustness against adversarial attacks and handling real-world variability will remain critical for deploying image classification systems in practical applications. Techniques for adversarial defense, such as adversarial training and robust optimization, will continue to be developed. Moreover, models robust to domain shifts and variations will be essential for real-world deployment across diverse environments and conditions.

F. Ethical and Fair AI

Addressing ethical concerns and ensuring fairness and transparency in image classification systems will be paramount for responsible AI development. Techniques to detect and mitigate biases in data and models will be integrated into image classification pipelines. Moreover, efforts to make image classification models more interpretable and explainable will increase, enabling users to understand and trust model decisions.

G. Edge Computing and Deployment

The deployment of image classification models on edge devices will become more prevalent, leading to challenges and opportunities in model optimization and efficiency. Optimizing models for deployment on edge devices with limited computational resources will be crucial for real-time inference. Federated learning approaches will enable collaborative model training across edge devices while preserving data privacy and security.
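One widely used aggregation rule for federated learning is a weighted average of client model parameters, in which only parameters (not images) leave each device. The sketch below assumes that rule; the client weights and dataset sizes are invented, and local training, communication, and privacy mechanisms are omitted.

```python
def federated_average(client_weights, client_sizes):
    """Average client parameters, weighting each client by its local dataset size."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(weights[j] * size for weights, size in zip(client_weights, client_sizes)) / total
        for j in range(n_params)
    ]

# Two hypothetical edge devices with locally trained parameters
client_weights = [[1.0, 2.0], [3.0, 4.0]]
client_sizes = [1, 3]  # number of local training images per device

global_weights = federated_average(client_weights, client_sizes)
```

The server would send `global_weights` back to the devices for the next round of local training.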

H. Human-AI Collaboration

Advancements will focus on enhancing human-AI collaboration, where humans and AI systems complement each other's strengths. Interactive image classification systems that incorporate human feedback to improve model performance will become more common. Moreover, designing AI systems with human-centric principles in mind, considering usability, transparency, and user feedback, will be emphasized.
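One way to incorporate human feedback is to ask annotators to label only the images on which the model is least certain. The sketch below assumes entropy-based uncertainty sampling, a choice made here for illustration; the helper name `select_for_labeling` is hypothetical, and each prediction is assumed to be a list of class probabilities.

```python
import math


def entropy(probs):
    """Shannon entropy of a probability vector, in nats."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(predictions, k):
    """Indices of the k predictions with the highest entropy."""
    ranked = sorted(
        range(len(predictions)),
        key=lambda i: entropy(predictions[i]),
        reverse=True,
    )
    return ranked[:k]


# Assumed model outputs for three unlabeled images.
predictions = [
    [0.98, 0.01, 0.01],  # confident
    [0.34, 0.33, 0.33],  # nearly uniform, most uncertain
    [0.60, 0.30, 0.10],  # moderately uncertain
]
print(select_for_labeling(predictions, 2))
```

The selected images would be sent to a human annotator, and the newly labeled examples added to the training set before the next round.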

The future of image classification holds tremendous potential, with advancements in technology, methodologies, and applications paving the way for more capable, interpretable, and ethical systems.

Conclusion

Image classification has advanced remarkably over the years, driven by innovations in deep learning, computer vision, and machine learning techniques. This survey has traced that journey, from early techniques to state-of-the-art methods and future directions. Early techniques laid the foundation, but it was the advent of deep learning, particularly Convolutional Neural Networks (CNNs), that revolutionized the field. Deep learning enabled automatic feature learning directly from raw data, leading to unprecedented accuracy in image classification tasks.

Advanced techniques and architectures such as transfer learning, attention mechanisms, and multimodal learning have further improved the capabilities of image classification systems. These advancements have enabled applications across diverse domains including healthcare, autonomous vehicles, social media, agriculture, security, and retail.

Looking ahead, the future of image classification is promising. Emerging trends such as weakly supervised learning, multimodal learning, and continual learning are poised to address open challenges and push the boundaries of what's possible. Ensuring robustness, fairness, and ethical deployment of image classification systems will be crucial for their widespread adoption and societal impact. As image classification technologies continue to evolve, collaboration between researchers, practitioners, and policymakers will be essential to harness their potential for positive impact while addressing challenges such as bias, interpretability, and privacy. In summary, image classification remains a vibrant and rapidly advancing field with vast opportunities for innovation and application, promising to shape the future of AI-driven technologies and their integration into various aspects of our lives.

References

[1] Xin, M., Wang, Y. Research on image classification model based on deep convolution neural network. J Image Video Proc. 2019, 40 (2019). https://doi.org/10.1186/s13640-019-0417-8
[2] Luo, L. (2021). Research on Image Classification Algorithm Based on Convolutional Neural Network. In Journal of Physics: Conference Series (Vol. 2083, Issue 3, p. 032054). IOP Publishing. https://doi.org/10.1088/1742-6596/2083/3/032054
[3] Alzubaidi, L., Zhang, J., Humaidi, A.J. et al. Review of deep learning: concepts, CNN architectures, challenges, applications, future directions. J Big Data 8, 53 (2021). https://doi.org/10.1186/s40537-021-00444-8
[4] Ferrandin, M., & Cerri, R. (2022). A multi-label classification approach via hierarchical multi-label classification. Research Square Platform LLC. https://doi.org/10.21203/rs.3.rs-1793069/v1
[5] Ansari, Mohd. A., Kurchaniya, D., & Dixit, M. (2017). A Comprehensive Analysis of Image Edge Detection Techniques. In International Journal of Multimedia and Ubiquitous Engineering (Vol. 12, Issue 11, pp. 1–12). Global Vision Press.
[6] Armi, L., & Fekri-Ershad, S. (2019). Texture image analysis and texture classification methods - A review. arXiv. https://doi.org/10.48550/ARXIV.1904.06554
[7] Chakravarti, R., & Meng, X. (2009). A Study of Color Histogram Based Image Retrieval. In 2009 Sixth International Conference on Information Technology: New Generations. IEEE. https://doi.org/10.1109/itng.2009.126
[8] Evgeniou, T., & Pontil, M. (2001). Support Vector Machines: Theory and Applications. In Machine Learning and Its Applications (pp. 249–257). Springer Berlin Heidelberg. https://doi.org/10.1007/3-540-44673-7_12
[9] Uddin, S., Haque, I., Lu, H. et al. Comparative performance analysis of K-nearest neighbour (KNN) algorithm and its different variants for disease prediction. Sci Rep 12, 6256 (2022). https://doi.org/10.1038/s41598-022-10358-x
[10] Talekar, B. (2020). A Detailed Review on Decision Tree and Random Forest. In Bioscience Biotechnology Research Communications (Vol. 13, Issue 14, pp. 245–248). Society for Science and Nature. https://doi.org/10.21786/bbrc/13.14/57
[11] Friedman, N., Geiger, D. & Goldszmidt, M. Bayesian Network Classifiers. Machine Learning 29, 131–163 (1997). https://doi.org/10.1023/A:1007465528199
[12] Rawat, W., & Wang, Z. (2017). Deep Convolutional Neural Networks for Image Classification: A Comprehensive Review. In Neural Computation (Vol. 29, Issue 9, pp. 2352–2449). MIT Press - Journals. https://doi.org/10.1162/neco_a_00990
[13] Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2017). ImageNet classification with deep convolutional neural networks. In Communications of the ACM (Vol. 60, Issue 6, pp. 84–90). Association for Computing Machinery (ACM). https://doi.org/10.1145/3065386
[14] Simonyan, K., & Zisserman, A. (2014). Very Deep Convolutional Networks for Large-Scale Image Recognition (Version 6). arXiv. https://doi.org/10.48550/ARXIV.1409.1556
[15] Szegedy, C., Liu, W., Jia, Y., Sermanet, P., Reed, S., Anguelov, D., Erhan, D., Vanhoucke, V., & Rabinovich, A. (2014). Going Deeper with Convolutions (Version 1). arXiv. https://doi.org/10.48550/ARXIV.1409.4842
[16] He, K., Zhang, X., Ren, S., & Sun, J. (2015). Deep Residual Learning for Image Recognition (Version 1). arXiv. https://doi.org/10.48550/ARXIV.1512.03385
[17] Hosna, A., Merry, E., Gyalmo, J. et al. Transfer learning: a friendly introduction. J Big Data 9, 102 (2022). https://doi.org/10.1186/s40537-022-00652-w
[18] Shrimali, V. (2019, June 3). Pre trained models for Image Classification – PyTorch for beginners. LearnOpenCV – Learn OpenCV, PyTorch, Keras, Tensorflow with Code, & Tutorials; Satya Mallick. https://learnopencv.com/pytorch-for-beginners-image-classification-using-pre-trained-models/
[19] Zheng, C., Wu, G., & Li, C. (2023). Toward Understanding Generative Data Augmentation (Version 1). arXiv. https://doi.org/10.48550/ARXIV.2305.17476
[20] Mia, M. S., Arnob, A. B. H., Naim, A., Voban, A. A. B., & Islam, M. S. (2023). ViTs are Everywhere: A Comprehensive Study Showcasing Vision Transformers in Different Domain. In 2023 International Conference on the Cognitive Computing and Complex Data (ICCD). IEEE. https://doi.org/10.1109/iccd59681.2023.10420683
[21] Benhur, S. (2023, May 31). Guide to meta learning. Built In. https://builtin.com/machine-learning/meta-learning
[22] Selvaraju, R. R., Cogswell, M., Das, A., Vedantam, R., Parikh, D., & Batra, D. (2019). Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. In International Journal of Computer Vision (Vol. 128, Issue 2, pp. 336–359). Springer Science and Business Media LLC. https://doi.org/10.1007/s11263-019-01228-7
[23] Local Interpretable Model-agnostic Explanations – InterpretML documentation. (n.d.). Interpret.Ml. Retrieved June 19, 2024, from https://interpret.ml/docs/lime.html

Copyright

Copyright © 2024 Lokesh Kumar Boran, Anwar Husain Joya. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET65113

Publish Date : 2024-11-09

ISSN : 2321-9653

Publisher Name : IJRASET
