Ijraset Journal For Research in Applied Science and Engineering Technology

Generative AI in Healthcare Industry: Implementations and Challenges

Authors: Biswajit Bhattacharya, Chiranjeev Dutta, Saugat Kumar Behura

DOI Link: https://doi.org/10.22214/ijraset.2024.63815

Abstract

Generative AI (artificial intelligence) refers to algorithms and models that can be prompted to generate various types of content. It has quickly become a major factor in several industries, including healthcare. It has the potential to transform the sector, but we must understand how to use this technology in order to capitalize on that potential while avoiding the risks of applying it to patient care. These models have played a crucial role in tasks spanning medical imaging (encompassing image reconstruction, image-to-image translation, image generation, and image classification), clinical documentation, diagnostic assistance, clinical decision support, medical coding and billing, as well as software engineering, testing, and user data safety and security. In this review, we briefly discuss associated issues such as trust, veracity, clinical safety and reliability, and privacy, as well as opportunities such as AI-driven conversational user interfaces for friendlier human-computer interaction.

I. INTRODUCTION

In recent years, artificial intelligence (AI) has driven transformative progress across various sectors, and its impact on healthcare is especially significant. Among the rapidly advancing AI technologies, generative models such as ChatGPT, based on the Generative Pre-trained Transformer (GPT) architecture developed by OpenAI, stand out. These models have the potential to revolutionize healthcare by leveraging their impressive natural language processing (NLP) capabilities.

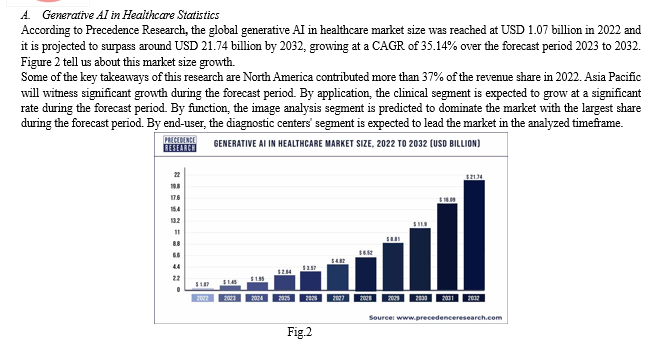

Generative AI models, such as GPT, have significant applications in healthcare. They can aid clinical decision-making by analyzing medical data, improving disease diagnosis, and facilitating personalized treatment strategies. Additionally, GPT models assist radiologists in interpreting medical images and play a role in drug discovery by predicting potentially effective and safe drug candidates. Figure 1 shows some of the use cases of generative AI that are currently in use in the healthcare industry.

While generative models hold significant promise for healthcare, their adoption faces challenges and ethical complexities. Ensuring accurate and reliable AI-driven decisions is crucial, especially in critical medical scenarios. The opaque nature of some AI models, including generative ones, underscores the need for transparency and interpretability. Additionally, ethical concerns related to data privacy, patient confidentiality, and potential biases demand careful handling. Safeguarding patient privacy and data security is essential for maintaining trust in AI-driven healthcare solutions.

B. Current Uses of GenAI and LLMs

Current research initiatives explore the application of Generative AI and LLMs in medical practice. These efforts aim to enhance clinical administration, support professional education, and alleviate the workload on healthcare providers. The integration of these technologies holds significant promise for improving patient care and advancing medical research. Exploring recent advancements, we aim to understand how cutting-edge AI language models can revolutionize healthcare. These state-of-the-art models have the potential to shape the future of medical practice.

C. Clinical Administration Support

A prominent application of generative AI models in healthcare is the automation of clinical documentation, which provides valuable clinical administration support. Overburdened clinicians can benefit from ChatGPT’s capabilities to swiftly and accurately generate draft clinical notes. By inputting a brief verbal summary or relevant patient data (while respecting data privacy), comprehensive and contextually relevant clinical documentation can be produced, saving clinicians time. Microsoft Copilot, an enterprise tool, integrates generative AI into everyday applications such as Word, PowerPoint, Excel, Teams, and web browsers to enhance productivity. This integration holds significant potential for fostering multidisciplinary collaboration among healthcare teams. For instance, in complex cases involving multiple specialties, a generative AI-based meeting tool can assist in creating agendas, identifying suitable team members for follow-up actions, and summarizing key meeting points.
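The note-drafting workflow described above, with its caveat about data privacy, can be sketched as a small pre-processing step. This is only an illustration under stated assumptions: the `PATTERNS` table, `redact`, and `build_note_prompt` are hypothetical names, the three regular expressions cover only dates, phone numbers, and e-mail addresses, and no model is actually called. Real de-identification of clinical text needs much broader coverage and formal validation.

```python
import re

# Hypothetical patterns for illustration only; real de-identification must
# also handle names, addresses, record numbers, and many other identifiers.
PATTERNS = {
    "[DATE]": re.compile(r"\b\d{4}-\d{2}-\d{2}\b"),
    "[PHONE]": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "[EMAIL]": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def redact(text: str) -> str:
    """Replace each matched identifier with its placeholder tag."""
    for tag, pattern in PATTERNS.items():
        text = pattern.sub(tag, text)
    return text


def build_note_prompt(summary: str) -> str:
    """Wrap a redacted summary in instructions for a draft clinical note."""
    return (
        "Draft a clinical note with the sections Subjective, Objective, "
        "Assessment, and Plan. Use only the facts given and mark anything "
        "missing as 'not documented'.\n\n"
        f"Summary: {redact(summary)}"
    )


summary = (
    "Seen on 2024-03-02 for fatigue and shortness of breath. "
    "Call 555-123-4567 or mail pat@example.org with results."
)
prompt = build_note_prompt(summary)
print(prompt)
```

The prompt text would then be passed to whichever generative model the organization has approved, and the returned draft would still need review by the clinician before it enters the record.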

Google Bard (now Gemini), powered by Med-PaLM 2, plays a vital role in healthcare, offering continuous patient support and aiding clinicians. Med-PaLM 2, trained on diverse medical data, enhances its ability to generate medical content. The tool assists with patient queries, potential diagnoses, and treatment plans. In hematology, it provides immediate information and recommends professional attention for blood disorders. However, it’s essential to use AI-generated responses for information only, not as a substitute for professional medical advice. As Google Bard (now Gemini) evolves, its potential to transform healthcare interactions and improve patient care remains promising.

D. Clinical Decision Support

Given their advanced understanding of human language and their fine-tuned domain knowledge, GPT models also have the potential to support clinical decision-making. Glass AI, an experimental tool powered by LLMs, is one example. As a diagnostic assistant, it generates a customized list of potential diagnoses and treatment plans for clinicians.

For example, when faced with a patient experiencing symptoms like fatigue, shortness of breath, and paleness, a provider can input these symptoms into Glass AI. The system then offers a comprehensive differential diagnosis, including conditions such as anemia, leukemia, or myelodysplastic syndromes. Additionally, Glass AI aids in formulating clinical plans, guiding hematologists toward further tests or treatment.

RedBrick AI’s Fast Automated Segmentation Tool (F.A.S.T.) has significant applications in medical imaging. It assists healthcare professionals in annotating and segmenting CT scans, MRI images, and ultrasounds. By utilizing Meta’s Segment Anything model, F.A.S.T. aims to enhance diagnostic accuracy and speed in healthcare. Its adaptive nature simplifies accurate segmentation without requiring additional data, making it valuable for segmenting visible objects and features in radiology. Clinicians can watch the mask being computed in real time, which streamlines the segmentation process. Overall, F.A.S.T. automates much of the manual segmentation work in radiology.

Regard, an AI tool integrated with Electronic Health Record (EHR) systems, swiftly suggests diagnoses and generates clinical notes by analyzing patient data. It automates EHR-related tasks, freeing up clinicians’ time. Regard’s generative AI assists in diagnosing by providing a list of differential diagnoses based on patient data. For primary care physicians and specialists, it offers evidence-based suggestions, enhancing diagnostic accuracy. Regard complements EHR use, emphasizing support for clinical judgment. Pilot programs have shown time-saving benefits and improved accuracy.

E. Patient Interaction

Hippocratic AI is dedicated to developing a large language model (LLM) specifically for healthcare. Its focus lies in creating patient-centered interactions, emphasizing empathy, care, and compassion. By generating patient-friendly responses, Hippocratic AI enhances patient engagement and outreach. The concept of “generative AI empathy” is crucial here. A study conducted by Ayers et al. demonstrated that responses from LLM-powered chatbots (such as ChatGPT) were preferred over physician responses and received significantly higher empathy ratings. This highlights the potential impact of AI in fostering better patient experiences.

Gridspace is an enterprise solution powered by generative AI. Its primary purpose is to automate patient outreach by handling phone calls, answering questions, and performing routine administrative tasks such as appointment scheduling, patient reminders, and insurance verification. By delegating these inquiries to voice bots, healthcare professionals can save time and concentrate on critical patient care. Additionally, Gridspace triages and directs patient inquiries in real time, offering valuable insights. Its applications showcase the potential of generative AI in transforming patient interactions, streamlining administrative processes, and enhancing overall healthcare efficiency and patient satisfaction.

F. Synthetic Data Generation

Syntegra Medical Mind uses generative AI to create realistic synthetic patient records from real healthcare data, preserving statistical characteristics, including rare cases. Healthcare professionals can access and analyze this data for research and decision-making while maintaining patient privacy. Syntegra addresses data bias and promotes equitable treatment plans. It fosters innovation by breaking down data access barriers, as demonstrated in dementia research by Muniz-Terrera et al.

DALL-E 2, an OpenAI model, generates images from text descriptions. It’s valuable in medical research and education where data is scarce. Importantly, it maintains patient privacy. Adams et al. explored its radiology capabilities, finding promise in generating x-ray images with anatomical proportions similar to real ones. Fine-tuning with medical data could enhance its utility.

Synthetic data is also used in software testing. Developers and testers use ChatGPT and the API services of other GenAI tools to create synthetic test data that mimics patient data, both to test the efficacy of medical software systems and to check the consistency of medical data records against real-world patient data. This saves effort, time, and cost for healthcare providers across the globe.
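As a rough illustration of synthetic test data for medical software, the sketch below generates records and runs a consistency check over them. It makes several assumptions that are not from the tools named above: a seeded random generator stands in for a generative model, and the schema, value ranges, and the `make_record` and `validate` helpers are hypothetical. The consistency check is the part that would apply regardless of how the records were produced.

```python
import random
from datetime import date

# Hypothetical schema and value ranges, chosen only for illustration.
SEXES = ("F", "M")
DIAGNOSES = ("anemia", "asthma", "hypertension", "type 2 diabetes")


def make_record(rng: random.Random, record_id: int) -> dict:
    """Create one synthetic patient record with no link to a real person."""
    birth_year = rng.randint(1930, 2020)
    return {
        "id": f"SYN-{record_id:05d}",
        "sex": rng.choice(SEXES),
        "birth_date": date(birth_year, rng.randint(1, 12), rng.randint(1, 28)),
        "diagnosis": rng.choice(DIAGNOSES),
        "hemoglobin_g_dl": round(rng.uniform(7.0, 18.0), 1),
    }


def validate(record: dict, today: date) -> list[str]:
    """Return a list of consistency problems; an empty list means it passes."""
    problems = []
    if not record["id"].startswith("SYN-"):
        problems.append("id is not marked synthetic")
    if record["birth_date"] > today:
        problems.append("birth date is in the future")
    if not 3.0 <= record["hemoglobin_g_dl"] <= 25.0:
        problems.append("hemoglobin outside plausible range")
    return problems


rng = random.Random(42)  # fixed seed so test runs are reproducible
records = [make_record(rng, i) for i in range(100)]
today = date(2024, 7, 30)
failures = [r["id"] for r in records if validate(r, today)]
print(len(records), "records,", len(failures), "failed validation")
```

Prefixing every identifier with `SYN-` is a design choice that keeps synthetic rows distinguishable from real ones if test data ever leaks into a shared environment.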

G. Challenges

In the context of generative AI adoption in the healthcare industry, trust and validation play crucial roles. However, ChatGPT’s responses exhibit significant variability in quality and accuracy, making it challenging to determine when to rely on its answers. This unpredictability poses a barrier to widespread adoption, especially for users who lack the expertise to assess response completeness and accuracy.

ChatGPT has been observed to generate fictional content, a phenomenon known as “hallucinations”. Techniques like Retrieval Augmented Generation (RAG) can mitigate this issue. Additionally, generative AI may exhibit bias based on its training data and may not perform consistently across different languages.
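The retrieval step of Retrieval Augmented Generation can be sketched in a few lines. The example below is a simplified illustration, not a production design: the three-sentence `DOCUMENTS` knowledge base and all function names are hypothetical, bag-of-words cosine similarity stands in for the embedding-based search that real systems typically use, and the final prompt is only printed rather than sent to a model.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base; a real system would index vetted clinical sources.
DOCUMENTS = [
    "Iron deficiency anemia commonly presents with fatigue and pallor.",
    "Asthma presents with wheezing, cough, and episodic shortness of breath.",
    "Hypertension is often asymptomatic and found on routine screening.",
]


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words term counts."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question: str, k: int = 1) -> list[str]:
    """Return the k documents most similar to the question."""
    q = tokenize(question)
    ranked = sorted(DOCUMENTS, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_grounded_prompt(question: str) -> str:
    """Put retrieved passages ahead of the question and restrict the answer to them."""
    context = "\n".join(f"- {d}" for d in retrieve(question))
    return (
        "Answer using only the context below. If the context does not "
        "contain the answer, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


question = "What causes fatigue and pallor?"
print(build_grounded_prompt(question))
```

Grounding the prompt in retrieved passages gives the model source text to draw on and gives the reader something to check the answer against. It reduces the risk of fabricated content but does not remove it.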

H. Clinical Challenges

The challenge is exacerbated by the dynamic nature of medical and clinical knowledge, necessitating an adaptable form of generative AI that can be consistently trained and updated. Additionally, the rapid advancements in large language models and generative AI pose difficulties in their clinical assessment, regulation, and certification.

In the realm of generative AI, models like OpenAI’s ChatGPT, Meta’s Llama 1 and 2, and Google’s Bard (now Gemini) offer diverse capabilities. However, limitations persist. For example, Google Bard (now Gemini) struggles with specific clinical cases owing to its constraints. While newer versions tend to improve efficacy, improvement is not guaranteed. Looking ahead, dedicated medically trained large language models will evolve rapidly. Yet evaluating and certifying AI in medicine remains time-consuming, so a model risks becoming obsolete before it is certified. Regulatory bodies must adapt to address LLMs’ unique challenges.

I. Privacy Apprehensions

In April 2023, Italy initially restricted access to ChatGPT due to privacy worries related to data collection and storage for model training. However, access was later reinstated in Italy after ChatGPT introduced a feature allowing users to disable chat history, giving them control over which conversations contribute to model training.

Despite the widespread use of AI models, there remains a lack of transparency regarding their training data and the code used for their development. Unauthorized access to data sources, including potentially sensitive information, has raised legal concerns and led to recent litigation in the USA. Researchers are advocating for AI models to adhere to privacy laws, including the ‘right to be forgotten’ and the ability to unlearn information about individuals or situations.

J. Copyright Concerns

The recent lawsuit involving unauthorized access without consent highlights copyright concerns related to the data used for training AI models. Additionally, there are unresolved questions about intellectual property ownership for content generated by these models, such as new radiology images produced by DALL-E 2 in response to user prompts. Determining copyright ownership and liability for potential harm becomes more intricate when AI-generated content is based on copyrighted materials. Interestingly, Microsoft introduced a new section on AI services to its overall Services agreement effective September 30, 2023, in which it expanded the definition of “Your Content” to include “content that is generated by your use of our AI services”.

K. Probable Solutions

Emerging technologies, including generative AI, face unresolved issues related to trust, safety, privacy, and ownership. While definitive solutions are lacking, these challenges are not insurmountable. Over time, as technology evolves and legal frameworks develop, these issues will be addressed.

The world’s first comprehensive AI law, the EU AI Act, was adopted by the European Parliament in March 2024, with a grace period of 24-36 months before its main requirements become binding. The Act mandates transparency for generative AI, including the publication of summaries for copyrighted training data. Simultaneously, healthcare regulators, like the UK’s MHRA, are adapting frameworks to address AI as a medical device. However, ongoing reapproval for medical AI algorithms, which learn from new data post-approval, remains an unresolved process.

Developers can create custom applications using APIs and plugins from major generative AI providers like OpenAI and Google. For instance, Petal/Paladin Max, Inc.'s SaaS tool, GPT-trainer, enables users to build and deploy ChatGPT assistants trained on their data without coding.

Generative AI shows promise for the Internet of Medical Things (IoMT). It can aid in designing edge-based medical devices and enhance user experience by adapting to patients and clinicians. Additionally, it generates synthetic data for testing machine learning algorithms without using real patient data. In IoMT security, generative AI can auto-generate mitigation responses to threats.

Conclusion

In our review, we explored generative AI applications in the healthcare industry, addressing concerns like trust, safety, privacy, and copyright. As legal frameworks evolve, we anticipate gradual resolution of these issues. We agree with Lee, Goldberg, and Kohane that generative AI will play a crucial role in healthcare, adapting to unique medical contexts. In the near future, models specifically trained on quality medical texts will be of great help to healthcare professionals across various specialties and to their patients. Rather than AI replacing humans (clinicians), we see it as “clinicians using AI” replacing “clinicians who do not use AI” in the upcoming years.

References

[1] Aydın, Ö.; Karaarslan, E. OpenAI ChatGPT Generated Literature Review: Digital Twin in Healthcare; SSRN 4308687; SSRN: Rochester, NY, USA, 2022; Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4308687

[2] Zhang, P.; Kamel Boulos, M.N. Generative AI in Medicine and Healthcare: Promises, Opportunities and Challenges. Future Internet 2023, 15, 286. https://doi.org/10.3390/fi15090286

[3] Eysenbach, G. The role of ChatGPT, generative language models, and artificial intelligence in medical education: A conversation with ChatGPT and a call for papers. JMIR Med. Educ. 2023, 9, e46885.

[4] Xue, V.W.; Lei, P.; Cho, W.C. The potential impact of ChatGPT in clinical and translational medicine. Clin. Transl. Med. 2023, 13, e1216.

[5] Shokrollahi, Y.; Yarmohammadtoosky, S.; Nikahd, M.M.; Dong, P.; Li, X.; Gu, L. A Comprehensive Review of Generative AI in Healthcare.

[6] https://www.precedenceresearch.com/generative-ai-in-healthcare-market

[7] Spataro, J. Introducing Microsoft 365 Copilot—Your Copilot for Work. Official Microsoft Blog. March 2023. Available online: https://news.microsoft.com/reinventing-productivity/

[8] Rahaman, M.S.; Ahsan, M.M.; Anjum, N.; Rahman, M.M.; Rahman, M.N. The AI Race Is on! Google’s Bard and OpenAI’s ChatGPT Head to Head: An Opinion Article; SSRN 4351785; SSRN: Rochester, NY, USA, 2023; Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4351785

[9] Glass Health. Glass AI. Available online: https://glass.health/ai

[10] Regard. Torrance Memorial Medical Center Reduces Physician Burnout, Increases Annual Revenue by $2 Million with the Help of Regard Case Study (September 2022). Available online: https://withregard.com/case-studies/tmmc-reduces-burnout

[11] Sharma, S. F.A.S.T.—Meta AI’s Segment Anything for Medical Imaging. RedBrick AI, 10 April 2023. Available online: https://blog.redbrickai.com/blog-posts/fast-meta-sam-for-medical-imaging

[12] Hippocratic AI. Benchmarks. Available online: https://www.hippocraticai.com/benchmarks

[13] Gridspace. Explore Ways to Build a Better Customer Experience with Conversational AI. Available online: https://resources.gridspace.com/

[14] Syntegra. Data-Driven Innovation through Advanced AI. Available online: https://www.syntegra.io/technology

[15] Muniz-Terrera, G.; Mendelevitch, O.; Barnes, R.; Lesh, M.D. Virtual cohorts and synthetic data in dementia: An illustration of their potential to advance research. Front. Artif. Intell. 2021, 4, 613956.

[16] OpenAI. Models. Available online: https://platform.openai.com/docs/models/overview

[17] Adams, L.C.; Busch, F.; Truhn, D.; Makowski, M.R.; Aerts, H.J.W.L.; Bressem, K.K. What Does DALL-E 2 Know About Radiology? J. Med. Internet Res. 2023, 25, e43110.

[18] UK Medicines & Healthcare products Regulatory Agency. Software and Artificial Intelligence (AI) as a Medical Device (Guidance, Updated 26 July 2023). Available online: https://www.gov.uk/government/publications/software-and-artificial-intelligence-ai-as-a-medical-device/software-and-artificial-intelligence-ai-as-a-medical-device

[19] https://www.europarl.europa.eu/news/en/press-room/20240308IPR19015/artificial-intelligence-act-meps-adopt-landmark-law

[20] BBC News. ChatGPT Accessible Again in Italy (28 April 2023). Available online: https://www.bbc.co.uk/news/technology-65431914

[21] Microsoft Corporation. Summary of Changes to the Microsoft Services Agreement—30 September 2023. Available online: https://www.microsoft.com/en-us/servicesagreement/upcoming-updates

[22] Bhattacharya, B. 5G and IoMT: Moving Towards Modernization of Healthcare. International Journal of Engineering Research & Technology (IJERT), Vol. 11, Issue 10, October 2022.

[23] Petal/Paladin Max, Inc. GPT-Trainer. Available online: https://gpt-trainer.com/

[24] Srivastava, J.; Routray, S.; Ahmad, S.; Waris, M.M. Internet of Medical Things (IoMT)-Based Smart Healthcare System: Trends and Progress. Comput. Intell. Neurosci. 2022, 2022, 7218113.

[25] Lee, P.; Goldberg, C.; Kohane, I. The AI Revolution in Medicine: GPT-4 and Beyond, 1st ed.; Pearson: London, UK, 2023; ISBN-10: 0138200130; ISBN-13: 978-0138200138; Available online: https://www.amazon.com/AI-Revolution-Medicine-GPT-4-Beyond/dp/0138200130/

Copyright

Copyright © 2024 Biswajit Bhattacharya, Chiranjeev Dutta, Saugat Kumar Behura. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET63815

Publish Date : 2024-07-30

ISSN : 2321-9653

Publisher Name : IJRASET
