Ijraset Journal For Research in Applied Science and Engineering Technology


Gesture Controlled Robot Car

Authors: Mantesh Mhetre, Netra Mohekar, Rashmit Mhatre, Prof. Minal Barhate, Pranav Modhave, Aakanksha Mishra, Minerva Senapati

DOI Link: https://doi.org/10.22214/ijraset.2023.56184


Abstract

A noteworthy achievement in automotive technology is the creation of an automatic hand gesture-controlled robot that can operate and control a variety of car functions. By letting drivers keep their hands on the wheel and their eyes on the road, this technology improves driver comfort and safety: it reduces distractions, increases focus on driving, and lowers the chance of accidents. It is especially useful in situations where quick access to particular functions is necessary. The technology also improves accessibility for people with physical limitations, enabling them to drive independently. However, a successful implementation requires precise and dependable gesture recognition, and user education is required so that drivers understand and correctly perform the movements the system detects. The automatic hand gesture-controlled car system shows promise for enhancing convenience, safety, and accessibility, but further research and development are needed to improve its accuracy, reliability, and user-friendliness.


I. INTRODUCTION

Automatic hand gesture-controlled robots are robotic systems that are operated and controlled through hand gestures and movements. They are designed to recognize and interpret specific hand gestures made by a human operator and then perform the corresponding actions or tasks. Their development is an important advancement in human-robot interaction: by using hand gestures as a means of control, these robots offer a more intuitive and natural way for humans to communicate and interact with machines. The technology has potential applications in many industries, including healthcare, manufacturing, and entertainment.

The operation of these robots typically involves a combination of hardware and software components. The hardware usually includes sensors, cameras, and other devices that capture hand movements and gestures. These sensors detect the position, orientation, and motion of the hand, enabling the robot to infer the intended commands.

Software algorithms process the captured data and translate it into meaningful instructions for the robot. Machine learning and computer vision techniques are often used to train the system to recognize and classify different hand gestures accurately. The software analyzes the input from the sensors and maps it to predefined commands or actions that the robot can execute.

Once the hand gestures are interpreted and translated into commands, the robot can perform a wide range of tasks within its capabilities, such as navigation, object manipulation, grasping, and picking and placing items, depending on its specific design and functionality.

Automatic hand gesture-controlled robots have applications across many domains. In healthcare, they can assist surgeons during operations, support rehabilitation exercises, and help with patient care. In manufacturing, they can enhance productivity by letting operators control machines and perform tasks without physical contact. In entertainment, they can be used in interactive games and virtual reality experiences.

Overall, automatic hand gesture-controlled robots offer an innovative way to interact with and control robotic systems. As the technology advances, we can expect more sophisticated robots that understand and respond to a wider range of hand gestures, further expanding their applications and benefits across industries.
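The mapping step described above, from a recognized gesture to one of a set of predefined commands, can be sketched as a lookup table guarded by a confidence threshold. This is a minimal illustration, not the authors' implementation: the gesture labels, the command names, the `choose_command` helper, and the 0.8 threshold are all hypothetical, and the classifier that produces the confidence scores is assumed to exist elsewhere.

```python
# Hypothetical gesture labels mapped to hypothetical robot commands.
GESTURE_TO_COMMAND = {
    "palm_forward": "FORWARD",
    "palm_back": "BACKWARD",
    "swipe_left": "LEFT",
    "swipe_right": "RIGHT",
    "fist": "STOP",
}


def choose_command(scores: dict[str, float], threshold: float = 0.8) -> str:
    """Pick the command for the most likely gesture.

    `scores` maps gesture labels to classifier confidences in [0, 1].
    If there are no scores, the best score is below `threshold`, or the
    label is unknown, return "STOP" so an uncertain recognition never
    moves the robot.
    """
    if not scores:
        return "STOP"
    label, confidence = max(scores.items(), key=lambda item: item[1])
    if confidence < threshold:
        return "STOP"
    return GESTURE_TO_COMMAND.get(label, "STOP")


print(choose_command({"palm_forward": 0.93, "fist": 0.05}))      # FORWARD
print(choose_command({"swipe_left": 0.55, "swipe_right": 0.45}))  # STOP
```

Defaulting to "STOP" on low confidence is one simple design choice; a real system might instead keep the previous command or ask the user to repeat the gesture.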

II. LITERATURE REVIEW

1. Hand gesture recognition, a promising feature for smart homes and the IoT, has advanced substantially. It uses sensors such as cameras and depth sensors (for example, the Microsoft Kinect) for real-time, accurate gesture recognition. Applications range from controlling appliances to assisting people with disabilities. Overcoming technical challenges such as accuracy and latency remains a priority, with machine learning methods such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs) playing a crucial role. User acceptance is generally positive, though preferences vary. Low-cost prototypes built with open-source software have made the technology more accessible. Future directions include improving accuracy, reducing costs, and integrating gesture control with voice control.

This technology could change how people interact with their homes, driven by evolving technology and user demand for intuitive smart home interfaces [2].

2. Gourob et al. [3] present a vision-based hand gesture recognition system for controlling a robotic hand. Within the rapidly evolving field of human-robot interaction, the study explores integrating vision technology for gesture recognition, with the potential to improve remote control and human-machine interaction. Although specific findings are not provided here, the work aligns with the growing interest in automation and Industry 4.0, and it adds to the discussion of innovative control methods for robotics by showing the potential of vision-based gestures for controlling robotic systems.

3. Rautaray and Agrawal [4] explore interaction with virtual games through hand gesture recognition. The study reflects the growing interest in novel human-computer interaction methods and, although specific findings are not detailed here, contributes to the discussion on enhancing user experiences in virtual environments. It underscores the potential of hand gesture recognition as a way to engage with virtual games, a concept that has continued to evolve and gain significance in gaming and interactive technology.

4. Shin et al. [5] use inertial measurement units (IMUs) and electromyography (EMG) sensors to control mobile robots through gesture recognition. This research offers a distinct perspective on gesture-based control by combining IMU and EMG technologies. Although specific findings are not detailed here, it contributes to the growing discourse on innovative human-robot interaction. Fusing IMU and EMG sensors for mobile robot control highlights the potential of gesture recognition in real-world robotics applications and holds promise for advanced, hands-free robot control systems.

III. METHODOLOGY

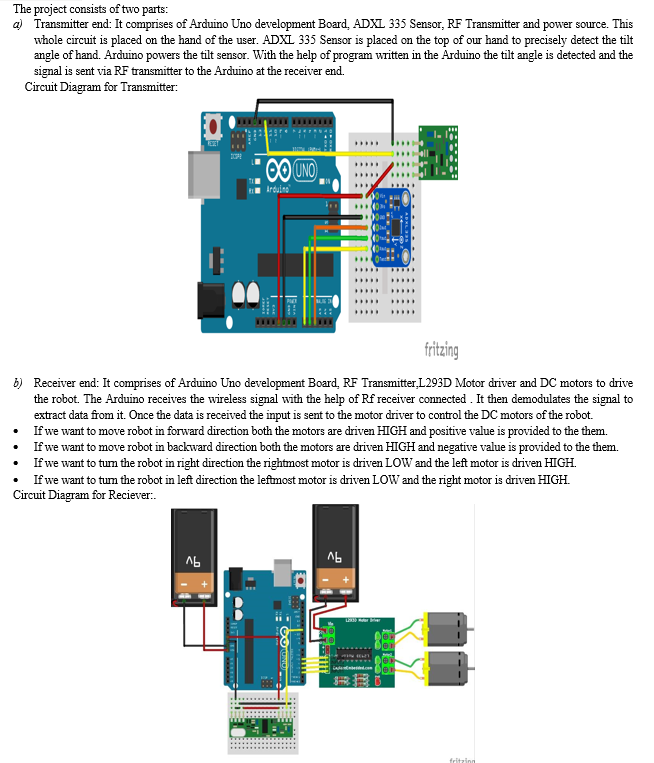

In this automatic hand gesture-controlled robot project, the main emphasis is on RF communication.

Radio-frequency (RF) signals carry data between the transmitter end, which senses the hand gesture, and the receiver end on the robot.
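The paper does not specify the packet format carried over the RF link. As an illustration only, the sketch below assumes a hypothetical three-byte frame (a start byte, a command code, and an XOR checksum) so that the receiver can reject frames corrupted by noise. The start byte, the command codes, and the function names are assumptions; the two functions model what the transmitter-side and receiver-side Arduinos would do before handing bytes to, and after reading bytes from, the RF modules.

```python
from typing import Optional

START_BYTE = 0xAA  # hypothetical frame marker

# Hypothetical command codes shared by transmitter and receiver.
COMMANDS = {"STOP": 0x00, "FORWARD": 0x01, "BACKWARD": 0x02, "LEFT": 0x03, "RIGHT": 0x04}
CODES = {code: name for name, code in COMMANDS.items()}


def encode_frame(command: str) -> bytes:
    """Transmitter side: build [start byte, command code, checksum]."""
    code = COMMANDS[command]
    checksum = START_BYTE ^ code  # simple XOR checksum over the first two bytes
    return bytes([START_BYTE, code, checksum])


def decode_frame(frame: bytes) -> Optional[str]:
    """Receiver side: return the command name, or None if the frame is invalid."""
    if len(frame) != 3 or frame[0] != START_BYTE:
        return None
    code, checksum = frame[1], frame[2]
    if checksum != (START_BYTE ^ code) or code not in CODES:
        return None
    return CODES[code]


frame = encode_frame("LEFT")
print(frame.hex())          # aa03a9
print(decode_frame(frame))  # LEFT
```

A one-byte XOR checksum only catches simple corruption; many RF libraries add their own framing and error checking, in which case an application-level frame like this may be unnecessary.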

The components used are:

1. ADXL335 Sensor: The ADXL335 is a small, low-power, low-cost three-axis accelerometer, used here to detect the tilt of the transmitter unit.

The sensor module has five pins:

a. VCC: power input, supplied with 5 V from the Arduino Uno. (The ADXL335 chip itself operates from 1.8 V to 3.6 V, so a 5 V supply assumes a breakout module with an on-board voltage regulator.)

b. X: outputs an analog voltage proportional to the acceleration along the X axis; when the hand is held still, this reflects the component of gravity along X, from which the tilt is computed.

c. Y: outputs an analog voltage proportional to the acceleration along the Y axis.

d. Z: outputs an analog voltage proportional to the acceleration along the Z axis.

e. GND: ground, connected to a GND pin of the Arduino.

2. RF Module: This is used for wireless communication between the transmitter end and the receiver end. The module carries data from one Arduino to another using radio signals.

a. RF Transmitter: The transmitter module receives input signals from a microcontroller or other digital source, encodes the digital information onto a radio frequency signal, and transmits it to the receiver module.

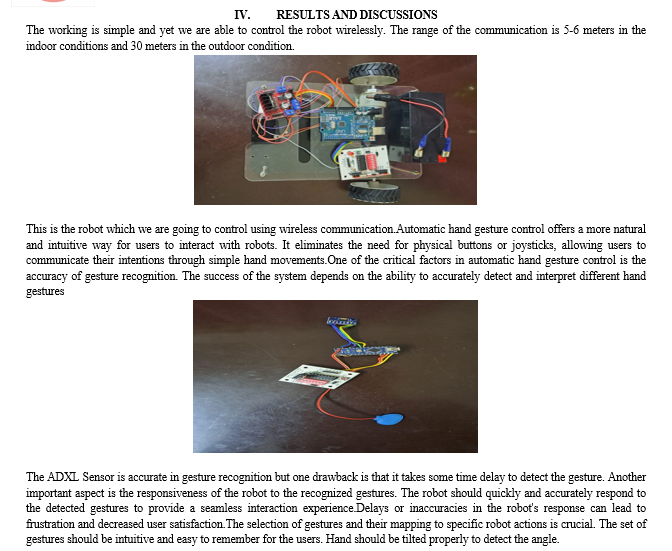

b. RF Receiver: The receiver module has an antenna that picks up the modulated radio frequency signal and demodulates it to recover the transmitted data.

3. L293D Motor Driver: The L293D is a popular motor driver integrated circuit (IC) with four half-H drivers, which can be paired to drive two DC motors in both directions or to drive a stepper motor. It is connected to the robot's two motors and, based on the commands received over the RF link, drives the motors so that the robot moves accordingly.
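Because the ADXL335's X, Y, and Z pins deliver voltages rather than angles, the transmitter's Arduino has to convert its `analogRead()` values into acceleration and then into tilt. The sketch below shows that arithmetic in Python under stated assumptions: a module that runs the ADXL335 at 3.3 V, nominal zero-g and sensitivity values scaled from the datasheet's ratiometric figures, and an Arduino Uno 10-bit ADC with a 5 V reference. The constants and function names are illustrative and uncalibrated; pitch and roll use the standard `atan2` tilt formulas for a sensor at rest.

```python
import math

# Assumed, uncalibrated values (not from the paper): ADXL335 module running at
# 3.3 V, read by an Arduino Uno's 10-bit ADC with a 5 V reference.
ADC_MAX = 1023
ADC_REF_V = 5.0
ZERO_G_V = 1.65      # nominal output at 0 g (about half of the 3.3 V supply)
SENS_V_PER_G = 0.33  # nominal sensitivity (ratiometric, about 0.1 x supply)


def raw_to_g(raw: int) -> float:
    """Convert one analogRead() value (0..1023) to acceleration in g."""
    volts = raw * ADC_REF_V / ADC_MAX
    return (volts - ZERO_G_V) / SENS_V_PER_G


def tilt_degrees(raw_x: int, raw_y: int, raw_z: int) -> tuple[float, float]:
    """Return (pitch, roll) in degrees, assuming only gravity acts on the sensor."""
    ax, ay, az = raw_to_g(raw_x), raw_to_g(raw_y), raw_to_g(raw_z)
    pitch = math.degrees(math.atan2(ax, math.hypot(ay, az)))
    roll = math.degrees(math.atan2(ay, math.hypot(ax, az)))
    return pitch, roll


pitch, roll = tilt_degrees(338, 338, 405)  # board lying flat
print(round(pitch, 1), round(roll, 1))
```

With the board lying flat, readings near (338, 338, 405) give pitch and roll close to 0°. Real modules vary from the nominal values, so the zero-g offset and sensitivity are usually measured per axis before thresholds are chosen.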

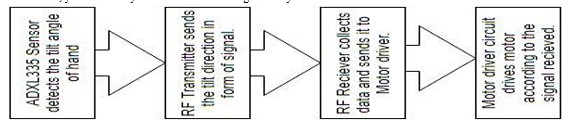

The flowchart above illustrates the working of the system.
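Since the flowchart is only available as an image, its exact decision thresholds are not reproduced here. A plausible version of the decision path, from hand tilt to command on the transmitter and from command to L293D input levels on the receiver, can be sketched as follows. The ±20° threshold, the sign conventions (positive pitch meaning the hand tilts forward), the pivot-turn choice, and the pin assignment (IN1/IN2 for the left motor, IN3/IN4 for the right, both enable pins held HIGH) are all assumptions, not values from the paper.

```python
# Assumed threshold and sign conventions; the paper's flowchart may differ.
TILT_THRESHOLD_DEG = 20.0


def tilt_to_command(pitch: float, roll: float) -> str:
    """Transmitter side: turn hand tilt (degrees) into one of five commands.

    Pitch is checked before roll, so a strong forward/back tilt wins.
    """
    if pitch > TILT_THRESHOLD_DEG:
        return "FORWARD"
    if pitch < -TILT_THRESHOLD_DEG:
        return "BACKWARD"
    if roll > TILT_THRESHOLD_DEG:
        return "RIGHT"
    if roll < -TILT_THRESHOLD_DEG:
        return "LEFT"
    return "STOP"


# Receiver side: logic levels for (IN1, IN2, IN3, IN4) on the L293D.
# (1, 0) spins a motor one way, (0, 1) the other way, and equal inputs stop it;
# which way counts as "forward" depends on how the motors are wired.
MOTOR_PINS = {
    "FORWARD": (1, 0, 1, 0),
    "BACKWARD": (0, 1, 0, 1),
    "LEFT": (0, 1, 1, 0),   # pivot turn: left motor back, right motor forward
    "RIGHT": (1, 0, 0, 1),  # pivot turn: left motor forward, right motor back
    "STOP": (0, 0, 0, 0),
}


def command_to_pins(command: str) -> tuple[int, int, int, int]:
    """Unknown commands fall back to STOP as a safe default."""
    return MOTOR_PINS.get(command, MOTOR_PINS["STOP"])


print(command_to_pins(tilt_to_command(35.0, 5.0)))  # (1, 0, 1, 0)
```

On the Arduino, each tuple entry would be written to the corresponding pin with `digitalWrite()`; a dead band around 0° (here, anything within ±20°) keeps the robot stopped while the hand is roughly level.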

V. FUTURE SCOPE

The future scope of automatic hand gesture-controlled cars is broad, with potential advancements in gesture recognition, expanded functionality, customizable gestures, multi-modal interaction, integration with smart home devices and the IoT, collaboration with autonomous driving systems, and integration with augmented reality. These developments could lead to a more intuitive, personalized, and connected driving experience. More accurate and reliable gesture recognition would improve performance. Customizable gestures would cater to individual preferences. Integration with smart home and IoT devices would offer seamless control. Collaboration with autonomous driving systems would facilitate communication with passengers. Augmented reality integration would provide immersive interfaces. Together, these advancements could enhance safety, convenience, and overall user satisfaction.

Conclusion

The development of automatic hand gesture-controlled cars represents a significant advancement in automotive technology, offering improved driver convenience and safety. By allowing drivers to operate various functions through hand gestures, this innovation eliminates the need for physical controls or manual input, reducing distractions and the risk of accidents. Drivers can keep their hands on the steering wheel and their eyes on the road, enhancing safety and making it easier to access specific functions quickly. This technology also enhances accessibility for individuals with physical disabilities, opening up new possibilities for them to enjoy the independence of driving. The accuracy and reliability of hand gesture recognition systems are crucial, requiring high-quality sensors and sophisticated algorithms to correctly interpret and respond to gestures. Proper user training and familiarization are essential, ensuring drivers understand the recognized hand gestures and can perform them correctly. Clear instructions and user-friendly interfaces help minimize confusion and ensure smooth interactions. Fail-safe mechanisms should be implemented to handle system failures or misinterpreted gestures, ensuring driver safety and control. Automatic hand gesture-controlled cars offer increased convenience, safety, and accessibility, but further research and refinement are necessary for optimal performance. Continued development is needed to enhance the accuracy, reliability, and user-friendliness of these systems in future vehicles.
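One way to realize the fail-safe behaviour described above on the receiver is a small filter that combines a debounce with a link-loss watchdog: the robot only switches command after the same command arrives several times in a row, and it stops if no valid frame has arrived recently. This is a sketch under assumptions; the `FailSafeReceiver` class, the three-frame confirmation count, and the 500 ms timeout are hypothetical choices rather than values from the paper.

```python
class FailSafeReceiver:
    """Hypothetical receiver-side filter: debounce commands, stop on link loss."""

    def __init__(self, confirm_count: int = 3, timeout_ms: int = 500):
        self.confirm_count = confirm_count  # identical frames needed to switch
        self.timeout_ms = timeout_ms        # silence allowed before stopping
        self.active = "STOP"                # command currently driving the motors
        self.candidate = None               # command waiting for confirmation
        self.candidate_hits = 0
        self.last_frame_ms = None           # time of the last valid frame

    def on_frame(self, command: str, now_ms: int) -> None:
        """Call once for every valid decoded frame."""
        self.last_frame_ms = now_ms
        if command == self.candidate:
            self.candidate_hits += 1
        else:
            self.candidate, self.candidate_hits = command, 1
        if self.candidate_hits >= self.confirm_count:
            self.active = command

    def current(self, now_ms: int) -> str:
        """Command to apply now; STOP if the link has been silent too long."""
        if self.last_frame_ms is None or now_ms - self.last_frame_ms > self.timeout_ms:
            return "STOP"
        return self.active


rx = FailSafeReceiver()
for t in (0, 50, 100):
    rx.on_frame("FORWARD", t)
rx.on_frame("LEFT", 200)  # a single stray frame does not change the command
print(rx.current(250))    # FORWARD
print(rx.current(800))    # STOP (no frame for more than 500 ms)
```

The trade-off is responsiveness: a higher confirmation count rejects more misread gestures but delays every intentional change of direction.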

References

[1] Xia, Zanwu, et al. "Vision-based hand gesture recognition for human-robot collaboration: a survey." 2019 5th International Conference on Control, Automation and Robotics (ICCAR). IEEE, 2019.

[2] Verdadero, Marvin S., Celeste O. Martinez-Ojeda, and Jennifer C. Dela Cruz. "Hand gesture recognition system as an alternative interface for remote controlled home appliances." 2018 IEEE 10th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment and Management (HNICEM). IEEE, 2018.

[3] Gourob, Jobair Hossain, Sourav Raxit, and Afnan Hasan. "A robotic hand: controlled with vision based hand gesture recognition system." 2021 International Conference on Automation, Control and Mechatronics for Industry 4.0 (ACMI). IEEE, 2021.

[4] Rautaray, Siddharth S., and Anupam Agrawal. "Interaction with virtual game through hand gesture recognition." 2011 International Conference on Multimedia, Signal Processing and Communication Technologies. IEEE, 2011.

[5] Shin, Seong-Og, Donghan Kim, and Yong-Ho Seo. "Controlling mobile robot using IMU and EMG sensor-based gesture recognition." 2014 Ninth International Conference on Broadband and Wireless Computing, Communication and Applications. IEEE, 2014.

[6] Jain, M., et al. "Object detection and gesture control of four-wheel mobile robot." 2019 International Conference on Communication and Electronics Systems (ICCES). IEEE, 2019.

[7] Muhsin Al Ramadan, S., et al. "Industrial Robot Manipulation using Hand Gesture." 2023 2nd International Conference on Advancements in Electrical, Electronics, Communication, Computing and Automation (ICAECA). IEEE, 2023.

[8] Frigola, Manel, Josep Fernandez, and Joan Aranda. "Visual human machine interface by gestures." 2003 IEEE International Conference on Robotics and Automation (Cat. No. 03CH37422). Vol. 1. IEEE, 2003.

Copyright

Copyright © 2023 Mantesh Mhetre, Netra Mohekar, Rashmit Mhatre, Prof. Minal Barhate, Pranav Modhave, Aakanksha Mishra, Minerva Senapati. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET56184

Publish Date : 2023-10-17

ISSN : 2321-9653

Publisher Name : IJRASET
