Ijraset Journal For Research in Applied Science and Engineering Technology

Gesture Controlled Virtual Artboard

Authors: Mr. Deven Gupta, Ms. Anamika Zagade, Ms. Mansi Gawand, Ms. Saloni Mhatre, Prof. J.W. Bakal

DOI Link: https://doi.org/10.22214/ijraset.2024.58680

Abstract

Hand gesture recognition, a technology that interprets hand movements as commands, has opened new possibilities for artificial intelligence applications. The Gesture Controlled Virtual Artboard is an interactive digital artboard driven by such gestures. It can lower barriers in education by giving students a fun and creative way to learn, and it offers an alternative to traditional teaching methods. Physically challenged or elderly people often find it difficult to identify and press the exact key on a keyboard, and existing systems allow free-hand drawing in only three colors. Overcoming these limitations is the goal of the proposed system. The system relies on motion sensing: a Python application collects real-time data through a webcam and uses the OpenCV module and the MediaPipe library to track and interpret hand gestures. Users move their fingers in mid-air and, depending on the finger gesture, draw shapes or free-hand strokes in various colors, or erase. The project also enables users to conduct PowerPoint presentations using gestures.

I. INTRODUCTION

Writing plays a pivotal role in communication, and its evolution is a remarkable journey. It began with prehistoric humans making simple markings on cave walls and progressed to keyboards, digital pens, and most recently touchless writing in the era of artificial intelligence. A hand gesture is a non-verbal form of communication that involves the intentional movement or positioning of one's hands to convey a message or express an emotion. Gestures range from simple movements, such as waving or pointing, to more complex and culturally specific signals. In technology, hand gestures are also used for interaction, enabling users to control devices or interfaces through specific hand movements. Using such gestures, we created a prototype that recognizes hand gestures and performs tasks such as free-style drawing, shape drawing, color selection, thickness adjustment, and gesture-controlled PowerPoint presentation.

This system is built in the Python programming language and incorporates modules such as OpenCV, NumPy, and MediaPipe to enhance human-computer interaction. OpenCV (Open Source Computer Vision) is a library that provides tools and functions for computer vision and image processing tasks. The software tracks the user's hand gestures in real time and displays the output on the artboard with minimal delay.

During the pandemic, the importance of e-learning increased sharply, and the Gesture Controlled Virtual Artboard offers a convenient and engaging way to teach online. In online classes, teachers find it difficult to draw on screen or explain concepts with a mouse and keyboard, which is error-prone. This system removes the need for such hardware: teachers move their fingers in the air and write on the board with ease. In offline mode the project also supports a dustless classroom, because calcium carbonate chalk is no longer needed. Chalk dust can cause respiratory and related problems such as allergies, sinus irritation, and itchy eyes. Plastic-based boards and markers also have an adverse effect on the environment in a time of increasing global warming. Specially abled children and elderly people often struggle with interactive communication; gesture-controlled education can improve their ability to communicate, since the system requires no special expertise and can be used by anyone regardless of age. The system is also convenient for PowerPoint presentations, reducing the workspace and the burden of extra hardware such as a remote presenter. Because it replaces these devices, it brings users closer to their workspace. The Gesture Controlled Virtual Artboard therefore provides a fun, interactive, and modern teaching method.

II. LITERATURE SURVEY

This study aims to understand the image processing capabilities of OpenCV. The webcam first captures video frame by frame; the user then selects a color from the header while the system tracks the fingertips. Finally, the user can draw, erase, and clear the board. This system does not provide different brush sizes or a wider choice of colors. [1]

In this system, the user must wear a bead on the fingers whose color matches the one initialized in the program. The user has to enable the web camera so that the coordinates assigned to each fingertip can be detected. Four colors are displayed on the screen with their names. The user writes simply by waving the fingers, and everything is shown on screen. The screen can be wiped with the "clear all" option. If the bead color does not match the one initialized in the program, detection may be disturbed. [2]

This system uses a program written in Python with a graphical interface created using Tkinter. The interface presents information about a virtual canvas and allows users to choose colors. The program uses the OpenCV library to capture video, which is then converted into a specific color range. As the live video appears, buttons for selecting colors and clearing the screen become visible. To "write in the air," users press the spacebar to let the program detect an object. Once an object is identified, the program outlines it, and users can see the result on the screen. Pressing the 'esc' key exits the program. This system does not include a specific frame for different shapes. [3]

In this system, the user paints using the fingertips. OpenCV handles the computer vision and painting on the canvas, while MediaPipe handles hand tracking and gestures. The user can draw on the screen when the index finger is up and can select a color from the color palette. When all fingers are up, the position on the screen can be changed. The screen can be cleared with the clear option. This system does not provide brushes or shapes. [4]

In this project, the MediaPipe library is used for hand detection because of its accuracy and functionality. MediaPipe tracks changes in the position of the index finger. Both the middle finger and the index finger are used for color selection: the user selects a color by hovering over it. Drawings are created by placing individual points at short time intervals, which together give the impression of a continuous line. The output is displayed on a white canvas. This project does not offer different shapes, and the user cannot adjust the thickness of the strokes. [5]

III. METHODOLOGY

- Setup: Acquire a camera or use an existing one to capture the user's hand gestures. Install and configure the necessary software, including the MediaPipe library.

- Detect & Extract Key Hand Landmarks: Use the hand tracking module from the MediaPipe library to identify and locate the user's hand in the camera feed. Extract the key landmarks on the hand, such as fingertips and palm position.

- Define Gestures: Implement a gesture recognition algorithm using the detected hand landmarks and define specific gestures for actions such as drawing, erasing, and navigating. Leverage the pre-trained models provided by MediaPipe for accurate and efficient recognition.

- Assigning Tasks: Include functionality for clearing the canvas, saving work, and other relevant actions through additional predefined gestures. PowerPoint presentations can also be controlled through gestures.

- Canvas Interaction: The user performs actions through hand gestures. Recognized gestures are mapped to corresponding actions on the virtual artboard, such as drawing lines or erasing content. Implement real-time updates to the virtual artboard based on the user's hand movements, and develop a user-friendly interface that displays the virtual canvas through which the user interacts with the system.

- Testing: Conduct extensive testing to ensure accurate and responsive gesture recognition.
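The canvas interaction step can be illustrated with a small, dependency-free sketch. It is not the authors' implementation: the `Canvas` class and its method names are hypothetical, the board is a plain list of lists rather than an OpenCV image, and Bresenham's line algorithm is swapped in here as one common way to join two successive fingertip positions.

```python
# Illustrative only: Canvas, draw_line, erase and clear are hypothetical names.
class Canvas:
    """A tiny in-memory artboard; 0 means blank, any other value is a colour id."""

    def __init__(self, width, height):
        self.width = width
        self.height = height
        self.pixels = [[0] * width for _ in range(height)]

    def _set(self, x, y, value):
        # Silently ignore points that fall outside the board.
        if 0 <= x < self.width and 0 <= y < self.height:
            self.pixels[y][x] = value

    def draw_line(self, start, end, colour=1):
        """Join two fingertip positions using Bresenham's line algorithm."""
        x0, y0 = start
        x1, y1 = end
        dx, dy = abs(x1 - x0), -abs(y1 - y0)
        sx = 1 if x0 < x1 else -1
        sy = 1 if y0 < y1 else -1
        err = dx + dy
        while True:
            self._set(x0, y0, colour)
            if x0 == x1 and y0 == y1:
                break
            e2 = 2 * err
            if e2 >= dy:
                err += dy
                x0 += sx
            if e2 <= dx:
                err += dx
                y0 += sy

    def erase(self, centre, radius=1):
        """Blank a square patch of side 2 * radius + 1 around the fingertip."""
        cx, cy = centre
        for y in range(cy - radius, cy + radius + 1):
            for x in range(cx - radius, cx + radius + 1):
                self._set(x, y, 0)

    def clear(self):
        """Reset the whole board to blank."""
        self.pixels = [[0] * self.width for _ in range(self.height)]
```

In a real-time loop, `draw_line` would be called once per frame with the previous and current fingertip positions, so that fast movements still leave a continuous stroke.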

Hand gesture recognition works in two main phases: palm detection and hand landmark detection.

a. Palm Detection: The primary goal of palm detection is to identify the presence and location of a hand in the input. For this, the module uses a machine learning model trained to recognize the general structure and appearance of a human hand. It scans the input frames and identifies areas that likely contain a hand based on learned patterns. Once a hand is detected, the system provides the bounding box or region around the detected palm. This information serves as the foundation for further hand gesture analysis.

b. Hand Landmarks: Hand landmark detection identifies specific points on the detected hand, allowing detailed tracking of its pose and movements. After palm detection, the module further analyzes the hand by identifying key landmarks, such as the tips of the fingers, the base of the hand, and points along the contours of the palm.

The system outputs the 2D coordinates of these hand landmarks, which describe the hand's spatial configuration and enable precise tracking and interpretation of hand gestures. Given a close-up image of a hand, MediaPipe can locate 21 specific points on it. The image below displays the 21 points representing different locations on a hand:
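As a rough illustration of how the 21 landmarks can be turned into a gesture, the sketch below derives a "which fingers are raised" list from plain `(x, y)` pairs. The landmark indices follow MediaPipe's published hand model (fingertips at 4, 8, 12, 16, 20). The function name `fingers_up`, the `thumb_extends_left` flag, and the simple tip-above-joint rule are assumptions made for this example; the rule presumes an upright hand in image coordinates, where y grows downward.

```python
# Landmark indices from MediaPipe's 21-point hand model.
TIP_IDS = (4, 8, 12, 16, 20)     # thumb, index, middle, ring, little fingertips
JOINT_IDS = (3, 6, 10, 14, 18)   # thumb IP joint, then the PIP joint of each finger


def fingers_up(landmarks, thumb_extends_left=True):
    """Return five 0/1 flags (thumb to little finger) from 21 (x, y) pairs.

    Assumes an upright hand and image coordinates (smaller y is higher up).
    thumb_extends_left is a hypothetical switch: the correct side depends on
    which hand is shown and on whether the frame has been mirrored.
    """
    if len(landmarks) != 21:
        raise ValueError("expected 21 (x, y) landmarks")

    flags = []

    # The thumb folds sideways, so compare x of the tip and the IP joint.
    tip_x = landmarks[TIP_IDS[0]][0]
    joint_x = landmarks[JOINT_IDS[0]][0]
    if thumb_extends_left:
        flags.append(1 if tip_x < joint_x else 0)
    else:
        flags.append(1 if tip_x > joint_x else 0)

    # The other fingers count as raised when the tip is above the PIP joint.
    for tip, joint in zip(TIP_IDS[1:], JOINT_IDS[1:]):
        flags.append(1 if landmarks[tip][1] < landmarks[joint][1] else 0)

    return flags
```

A list such as `[0, 1, 0, 0, 0]` then stands for "index finger only", which is a convenient key for looking up an action.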

IV. PROPOSED SYSTEM

The proposed Gesture Controlled Virtual ArtBoard is a significant enhancement over the existing system, introducing a more efficient and intuitive user interface. The limitations of the existing system are evident in its restricted creative functionality. Users are constrained to a limited color palette, typically only 3-4 colors for drawing. Additionally, the system imposes a fixed thickness for drawing shapes, with no way to adjust line widths to user preference.

Furthermore, the absence of a save option prevents users from preserving their work for future reference. Addressing these gaps, the proposed Gesture Controlled Virtual ArtBoard improves the user experience, offering a more versatile and feature-rich platform for creative expression and presentation.

On starting the system, users are taken directly to the ArtBoard interface, where they can draw through hand gestures. The implementation uses the OpenCV Python library to initialize the camera and the MediaPipe library for hand gesture detection.

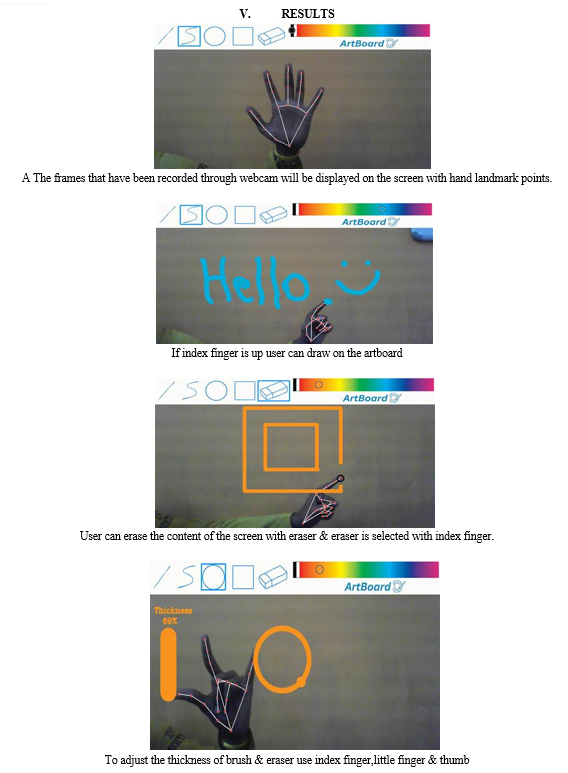

The core functionality revolves around capturing video frames from the webcam and converting them from BGR to RGB, the channel order MediaPipe expects, before hand detection. This lets users work with a virtual paint program in which each frame is analyzed to identify the hand's gesture. By analyzing the fingertip IDs and the corresponding coordinates obtained through MediaPipe, the system determines the finger positions and performs the action that the gesture commands.
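The capture-and-convert step described above might look roughly like the following sketch. It uses OpenCV's `VideoCapture` and `cvtColor` together with MediaPipe's legacy `mp.solutions.hands` API. The mirroring of the frame, the 0.7 confidence threshold, the Esc key to quit, the window title, and the helper name `to_pixel` are all assumptions for illustration, not details taken from the paper. The third-party imports sit inside the loop function so that the coordinate helper can be used on its own.

```python
def to_pixel(norm_x, norm_y, frame_width, frame_height):
    """Convert MediaPipe's normalized [0, 1] coordinates to clamped pixel positions."""
    x = min(max(int(norm_x * frame_width), 0), frame_width - 1)
    y = min(max(int(norm_y * frame_height), 0), frame_height - 1)
    return x, y


def run_camera_loop(camera_index=0):
    """Show the webcam feed with a marker on the index fingertip (landmark 8)."""
    # Imported here so to_pixel() works without a webcam or these packages.
    import cv2
    import mediapipe as mp

    hands = mp.solutions.hands.Hands(max_num_hands=1, min_detection_confidence=0.7)
    cap = cv2.VideoCapture(camera_index)
    try:
        while cap.isOpened():
            ok, frame = cap.read()
            if not ok:
                break
            frame = cv2.flip(frame, 1)                    # mirror view (assumption)
            rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)  # OpenCV delivers BGR
            results = hands.process(rgb)
            if results.multi_hand_landmarks:
                hand = results.multi_hand_landmarks[0]
                height, width = frame.shape[:2]
                tip = hand.landmark[8]                    # index fingertip
                x, y = to_pixel(tip.x, tip.y, width, height)
                cv2.circle(frame, (x, y), 8, (0, 255, 0), cv2.FILLED)
            cv2.imshow("Artboard preview", frame)
            if cv2.waitKey(1) & 0xFF == 27:               # Esc quits (assumption)
                break
    finally:
        cap.release()
        hands.close()
        cv2.destroyAllWindows()
```

Calling `run_camera_loop()` requires a connected webcam and the `opencv-python` and `mediapipe` packages.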

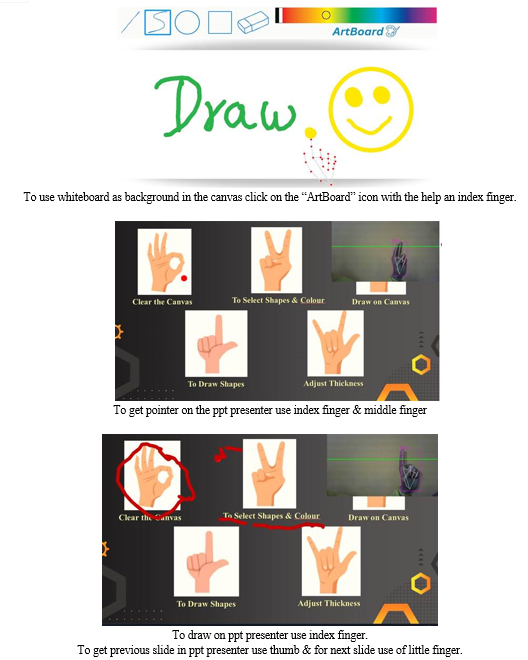

The same module also drives a PowerPoint presenter feature, enabling users to navigate and present slides using hand gestures. The system interprets the finger configuration and executes the corresponding function. This adds a practical dimension to the system beyond the virtual art space.
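One practical detail of gesture-driven slide navigation is that a gesture held for a second spans many video frames and should move only one slide. The sketch below shows one possible way to handle this with a frame-based cooldown. The `SlideNavigator` class and the cooldown idea are assumptions, not the authors' design; the thumb and little-finger gestures follow the testing table in this paper. How the slide change is sent to PowerPoint is left out.

```python
PREVIOUS_GESTURE = (1, 0, 0, 0, 0)   # thumb only, as in the testing table
NEXT_GESTURE = (0, 0, 0, 0, 1)       # little finger only, as in the testing table


class SlideNavigator:
    """Track the current slide index; hypothetical helper for illustration."""

    def __init__(self, slide_count, cooldown_frames=15):
        self.slide_count = slide_count
        self.cooldown_frames = cooldown_frames
        self.index = 0
        self._wait = 0   # frames left before another gesture is accepted

    def update(self, fingers):
        """Feed one frame's (thumb, index, middle, ring, little) flags."""
        if self._wait > 0:
            # Still cooling down from the last slide change: ignore this frame.
            self._wait -= 1
            return self.index

        fingers = tuple(fingers)
        if fingers == PREVIOUS_GESTURE and self.index > 0:
            self.index -= 1
            self._wait = self.cooldown_frames
        elif fingers == NEXT_GESTURE and self.index < self.slide_count - 1:
            self.index += 1
            self._wait = self.cooldown_frames
        return self.index
```

The index is clamped to the deck, so a "next" gesture on the last slide or a "previous" gesture on the first one is simply ignored.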

A. Libraries Used

1. MediaPipe

MediaPipe is a versatile computer vision library developed by Google that supports the development of real-time applications involving perceptual computing and machine learning. It offers a modular framework for tasks such as hand tracking, face detection, pose estimation, and object detection. One notable feature is its ability to process and interpret video streams or image sequences efficiently, making it suitable for applications ranging from virtual interfaces to augmented reality. MediaPipe simplifies the integration of advanced computer vision capabilities into diverse projects, giving developers a toolset for creating interactive and intelligent applications.

2. OpenCV

OpenCV (Open Source Computer Vision Library) is a comprehensive computer vision and image processing library that provides a robust set of tools for developing applications in computer vision, machine learning, and artificial intelligence.

The library covers a wide range of functionality, including image and video manipulation, feature extraction, object detection, facial recognition, and machine learning algorithms. OpenCV supports multiple platforms, making it suitable for desktop, mobile, and embedded systems. Its extensive set of pre-built functions and algorithms simplifies complex tasks such as edge detection, image stitching, and camera calibration.

3. NumPy

NumPy (Numerical Python) is a core Python library for numerical computing. It supports large arrays and matrices and provides high-level functions for working with them, which simplifies tasks such as linear algebra, statistics, and general mathematics. Its efficient array operations and broadcasting speed up numerical calculations. Many other scientific libraries in Python build on NumPy, which shows its foundational role in the Python ecosystem.

B. Testing

|

SR NO |

TASKS |

INPUT |

EXPECTED VALUE |

ACTUAL VALUE |

RESULT |

|

1. |

To select Tools/ Pointer on ppt |

Gesture Recognition by camera |

Tools are selected/point on ppt using index & middle finger |

Tools are selected/point on ppt using index & middle finger |

PASS |

|

2. |

Free Hand Drawing |

Gesture Recognition by camera |

Using index finger draw on Artboard |

Using index finger draw on Artboard |

PASS |

|

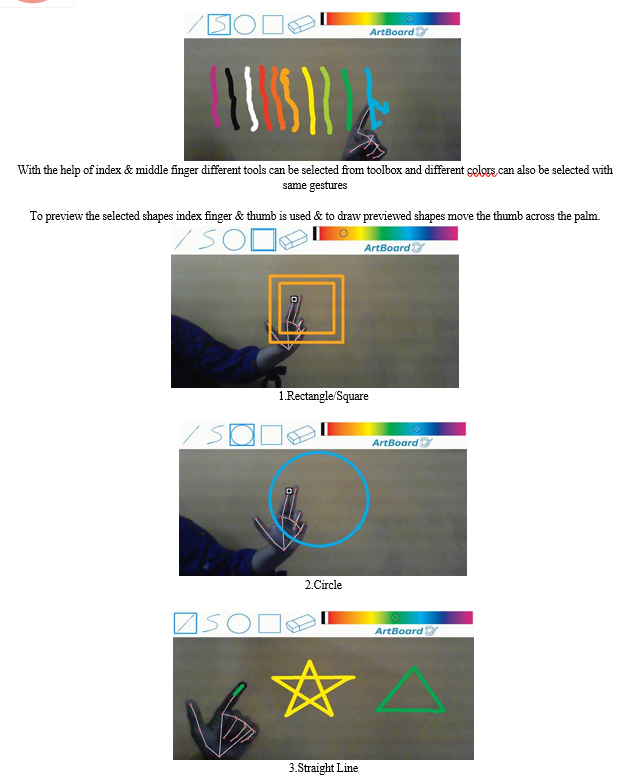

3. |

To Select Color |

Gesture Recognition by camera |

Colors from color bar are selected using index & middle finger |

Colors from color bar are selected using index & middle finger |

PASS |

|

4. |

Adjust Thickness |

Gesture Recognition by camera |

Size of brush & eraser changes using thumb,index & little finger |

Size of brush & eraser changes using thumb,index & little finger |

PASS |

|

5. |

To draw shapes & straight line |

Gesture Recognition by camera |

Drawing shapes like circle ,square, rectangle, straight line using thumb & index finger |

Drawing shapes like circle ,square, rectangle, straight line using thumb & index finger |

PASS |

|

6. |

Clear All |

Gesture Recognition by camera |

Clearing the entire screen using little, ring & middle finger |

Clearing the entire screen using little, ring & middle finger |

PASS |

|

7. |

To Erase |

Gesture Recognition by camera |

Erasing unwanted part on Artboard using index finger |

Erasing unwanted part on Artboard using index finger |

PASS |

|

8. |

Previous Slide |

Gesture Recognition by camera |

Navigate to previous slide on ppt using thumb |

Navigate to previous slide on ppt using thumb |

PASS |

|

9. |

Next Slide |

Gesture Recognition by camera |

Navigate to next slide on ppt using little finger |

Navigate to next slide on ppt using little finger |

PASS |

|

10. |

To Save work |

Pressing key on keyboard |

Work saved in folder using ‘s’ key |

Work saved in folder using ‘s’ key |

PASS |
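The finger combinations in the testing table lend themselves to a simple lookup from finger state to action. The sketch below is one way to express that. The action names, the `action_for` helper, and the assumption that every finger not mentioned in a row is folded are illustrative choices, not details given in the paper. Because the table lists the index finger for both drawing and erasing, the sketch returns a single `draw_or_erase` action and leaves the choice to whichever tool is currently selected.

```python
# Finger order: (thumb, index, middle, ring, little); 1 = raised, 0 = folded.
# Combinations follow the testing table; action names are hypothetical.
GESTURE_ACTIONS = {
    (0, 1, 1, 0, 0): "select",            # index + middle: tool, colour, or pointer
    (0, 1, 0, 0, 0): "draw_or_erase",     # index only: depends on selected tool
    (1, 1, 0, 0, 1): "adjust_thickness",  # thumb + index + little
    (1, 1, 0, 0, 0): "draw_shape",        # thumb + index
    (0, 0, 1, 1, 1): "clear_all",         # middle + ring + little
    (1, 0, 0, 0, 0): "previous_slide",    # thumb only
    (0, 0, 0, 0, 1): "next_slide",        # little finger only
}


def action_for(fingers, default="idle"):
    """Look up the action for one frame's finger flags; unknown poses do nothing."""
    return GESTURE_ACTIONS.get(tuple(fingers), default)
```

Keeping the mapping in a dictionary makes it easy to add or reassign gestures without touching the recognition code.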

Conclusion

In this research project, we developed a Gesture Controlled Virtual Artboard that lets users draw, erase, and conduct PowerPoint presentations through mid-air hand gestures. The implementation uses the MediaPipe library for hand gesture detection, which removes the need for traditional image processing techniques and allows real-time tracking of hand gestures. Users interact with a virtual canvas through intuitive hand movements, drawing and erasing without physical tools, and navigate through a PowerPoint presentation by making specific gestures in the air. In our tests, every gesture produced the expected action. One notable advantage of this project is its efficiency in screen management: users can clear the entire virtual canvas with a simple hand gesture and save their work with a single key press. Beyond its practical applications, this work introduces an engaging teaching approach that promotes creativity and convenience in education.

References

[1] Ramachandra H.V., Balaraju G, Deepika K, Navya M.S, "Virtual Air Canvas Using OpenCV and Mediapipe" in 2022 International Conference on Futuristic Technologies (INCOFT).

[2] B Anand Kumar, T Vinod, M Srinivas Rao, "Interaction through Computer Vision Air Canvas" in 2022 International Conference on Advancements in Smart, Secure and Intelligent Computing (ASSIC).

[3] Palak Rai, Reeya Gupta, Vinicia Dsouza, Dipti Jadhav, "Virtual canvas for interactive learning using OpenCV" in 2022 IEEE 3rd Global Conference for Advancement in Technology (GCAT).

[4] Nenavath Rahul, A Snigdha, K. Jeffery Moses, S. Vishal Simha, "Painting with Hand Gestures using MediaPipe" in 2022 International Journal of Innovative Science and Research Technology.

[5] Shaurya Gulati, Ashish Kumar Rastogi, Mayank Virmani, Rahul Jana, Raghav Pradhan, Chetan Gupta, "Paint / Writing Application through WebCam using MediaPipe and OpenCV" in 2022 2nd International Conference on Innovative Practices in Technology and Management (ICIPTM).

[6] Koushik Roy, Md. Akiful Hoque Akif, "Real Time Hand Gesture Based User Friendly Human Computer Interaction System" in 2022 3rd Int. Conf. on Innovations in Science, Engineering and Technology (ICISET), 26-27 February 2022.

[7] K Sai Sumanth Reddy, Abhishek R, Abhinandan Heggde, Lakshmi Prashanth Reddy, "Virtual Air Canvas Application using OpenCV and Numpy in Python" in IJARIIE Vol-8 Issue-4 2022.

[8] Dr. B. Esther Sunanda, M. Bhargavi, M. Tulasi Sree, M.R.S. Ananya, N. Kavya, "AIR CANVAS USING OPENCV, MEDIAPIPE" in International Research Journal of Modernization in Engineering Technology and Science, Volume:04/Issue:05/May-2022.

[9] Kavana KM, Suma NR, "RECOGNIZATION OF HAND GESTURES USING MEDIAPIPE HANDS" in International Research Journal of Modernization in Engineering Technology and Science, Volume:04/Issue:06/June-2022.

[10] I. J. Tupal and M. Cabatuan, "Vision-Based Hand Tracking System Development for Non-Face-to-Face Interaction," 2021 IEEE 13th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment, and Management (HNICEM), Manila, Philippines, 2021.

[11] Cheng Ming, Yan Yunbing, "Perception-Free Calibration of Eye Opening and Closing Threshold for Driver Fatigue Monitoring" in the National Natural Science Foundation of China under Grant 51975428 and Grant 52002298.

[12] Y. Quiñonez, C. Lizarraga and R. Aguayo, "Machine Learning solutions with MediaPipe," 2022 11th International Conference On Software Process Improvement (CIMPS), Acapulco, Guerrero, Mexico, 2022.

[13] Subhangi Adhikary, Anjan Kumar Talukdar, Kandarpa Kumar Sarma, "A vision-based system for recognition of words used in indian sign language using mediapipe" in 2021 Sixth International Conference on Image Information Processing (ICIIP).

[14] Rafiqul Zaman Khan and Noor Ibraheem, "Hand Gesture Recognition: A Literature Review", August 2012, International Journal of Artificial Intelligence & Applications.

[15] Alper Yilmaz, Omar Javed, Mubarak Shah, "Object Tracking: A Survey", ACM Computer Survey, Vol. 38, Issue 4, Article 13.

[16] Jeong-Seop Han, Choong-Iyeol Lee, Young-Hwa Youn, Sung-Jun Kim, "A Study on Real-time Hand Gesture Recognition Technology by Machine Learning-based MediaPipe" in Journal of System and Management Sciences, 2022.

Copyright

Copyright © 2024 Mr. Deven Gupta, Ms. Anamika Zagade, Ms. Mansi Gawand, Ms. Saloni Mhatre, Prof. J.W. Bakal. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET58680

Publish Date : 2024-02-29

ISSN : 2321-9653

Publisher Name : IJRASET
