Ijraset Journal For Research in Applied Science and Engineering Technology

GestureFusion: A Gesture-based Interaction System

Authors: Vedant Kathe, Prit Patel, Omkar Shinde, Avishkar Wagh

DOI Link: https://doi.org/10.22214/ijraset.2024.60046

Abstract

In an era of increasingly sophisticated human-computer interaction, the ability to comprehend and interpret hand gestures has become a pivotal element in bridging the gap between humans and machines. This project, titled "GestureFusion: A Gesture-based Interaction System," seeks to contribute to this rapidly evolving field by developing a comprehensive system for recognizing and categorizing hand gestures through the application of computer vision techniques. The primary objective of this project is to create a versatile and extensible hand gesture recognition system that can be employed in various domains such as virtual reality, robotics, sign language interpretation, and human-computer interface design. To achieve this, the project employs state-of-the-art computer vision algorithms and deep learning techniques. Key components of this project include data collection and annotation, model training and optimization, and the development of a user-friendly application programming interface (API) for integration into diverse applications. The project will also address challenges related to real-time gesture recognition, gesture classification, and hand pose estimation. Furthermore, the hand gestures library will be designed with a focus on scalability and flexibility, enabling developers to extend its capabilities and tailor it to specific applications. A rich dataset of annotated hand gestures will be made available as part of the project, fostering research and development in this domain.

I. INTRODUCTION

In today's digital age, human-computer interaction (HCI) plays a pivotal role in shaping our daily lives, enabling seamless communication and interaction with digital devices. Traditional input methods such as keyboards and mice have long been the standard for interacting with computers, but advancements in technology have paved the way for more intuitive and natural interaction modalities. Among these, gesture-based interaction has emerged as a promising avenue, offering users the ability to control devices using hand movements and gestures. The GestureFusion project seeks to leverage the potential of gesture-based interaction to redefine how users interact with their computers.

Gestures are an important form of non-verbal communication between humans, and conveying information through hand gestures can enable more natural interaction with computing devices. Vision-based approaches to hand gesture recognition use computer vision and image processing techniques rather than data gloves or other sensors. This allows for more seamless interaction without additional hardware devices and a more natural user experience. The main challenges with vision-based gesture recognition are making the systems invariant to factors such as background, lighting, and differences between users, and achieving real-time performance. Prior research has developed systems that allow users to interact with computers using hand gestures captured by a webcam. Traditional input devices such as the mouse and keyboard allow interaction but do not fully exploit the possibilities in terms of ease of use. The primary objective of this project is to develop a comprehensive hand gesture recognition system that can be seamlessly integrated into various domains, including virtual reality, robotics, sign language interpretation, and human-computer interfaces.

The proposed system uses only a single webcam to capture and track hand movements for computer interactions such as zooming, panning, and scrolling. This approach aims to minimize the use of traditional hardware while providing greater comfort, control, and accuracy to the user compared with other input methods. By using only a webcam, the proposed system has the advantages of lower cost and the ability to recognize gestures without additional wearable devices. By harnessing the built-in webcams present in laptops and desktops, GestureFusion aims to provide users with a novel and intuitive means of controlling their devices through hand gestures. This approach not only enhances accessibility for individuals with diverse technical backgrounds and physical abilities but also offers a more natural and immersive computing experience.
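
To make the idea of webcam-driven zooming concrete, the following is a minimal sketch of how a pinch gesture could be turned into a zoom command. It assumes that an upstream hand tracker already supplies thumb-tip and index-tip positions as normalized (x, y) image coordinates; the function names and the `dead_zone` threshold are hypothetical and are not taken from the GestureFusion implementation.

```python
import math


def pinch_distance(thumb_tip, index_tip):
    """Euclidean distance between two (x, y) points in normalized image coordinates."""
    return math.hypot(thumb_tip[0] - index_tip[0], thumb_tip[1] - index_tip[1])


def zoom_step(prev_dist, curr_dist, dead_zone=0.02):
    """Classify the change in pinch distance between two frames.

    Returns "zoom_in" when the fingers move apart, "zoom_out" when they move
    together, and None when the change is smaller than dead_zone (an assumed
    threshold that suppresses jitter from small tracking errors).
    """
    delta = curr_dist - prev_dist
    if abs(delta) < dead_zone:
        return None
    return "zoom_in" if delta > 0 else "zoom_out"
```

Panning and scrolling could follow the same pattern, using the frame-to-frame displacement of a single landmark instead of the distance between two.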

II. LITERATURE SURVEY

Gesture-based interaction has emerged as a promising paradigm in human-computer interaction (HCI), offering intuitive and natural ways for users to interact with digital devices. The literature survey conducted for the GestureFusion project explores existing research efforts and advancements in gesture recognition systems, focusing on key themes such as dynamic hand gesture recognition, human-computer interaction, and applications in smart environments and robotics.

The study by Hakim et al. (2019) proposes a unique gesture-based system tailored for smart TV-like environments. By combining various applications such as movie recommendations, social media updates, and tourism information, the system utilizes a deep learning architecture comprising a three-dimensional Convolutional Neural Network (3DCNN) and Long Short-Term Memory (LSTM) model. Additionally, a Finite State Machine (FSM) context-aware model is employed to control class decision results. Achieving an impressive 97.8% accuracy rate on eight selected gestures, the system demonstrates significant improvements in real-time recognition, highlighting the efficacy of deep learning approaches in dynamic gesture recognition tasks.
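
The role of a context-aware finite state machine can be illustrated with a small sketch: a predicted gesture is accepted only if it is a valid transition from the current application state. The states and gesture names below are invented for illustration and are not those used by Hakim et al.

```python
class ContextFSM:
    """Accept a predicted gesture only if it is valid in the current state."""

    def __init__(self, transitions, start):
        # transitions maps state -> {gesture: next_state}
        self.transitions = transitions
        self.state = start

    def step(self, gesture):
        """Return the gesture if accepted (and advance the state), else None."""
        allowed = self.transitions.get(self.state, {})
        if gesture not in allowed:
            return None  # rejected; the state is left unchanged
        self.state = allowed[gesture]
        return gesture


# Hypothetical two-screen application: a menu and a media player.
TRANSITIONS = {
    "menu": {"swipe_left": "menu", "select": "player"},
    "player": {"play_pause": "player", "back": "menu"},
}
```

With this table, a "play_pause" prediction made while the menu is showing is discarded, which is one way a classifier error can be prevented from triggering an action that makes no sense in context.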

In a similar vein, Haria et al. (2017) present a marker-less hand gesture recognition system designed for human-computer interaction. Their system efficiently tracks both static and dynamic gestures, translating detected gestures into actions such as opening websites and launching applications. Notably, the system achieves intuitive HCI with minimal hardware requirements, underscoring the importance of accessibility and usability in gesture-based interaction systems.

Furthermore, Vijaya et al. (2023) explore the application of hand gestures in controlling computer systems, particularly in the context of robotics and the Internet of Things (IoT). Their research combines Python and Arduino for laptop/computer gesture control, utilizing ultrasonic sensors to determine hand position and control media players. By leveraging the PyAutoGUI module in Python, the researchers demonstrate the feasibility of computer operations through hand gestures. This work signifies the growing interest in developing hands-free computing solutions, paving the way for innovative applications in various domains.
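
One common way to connect recognized gestures to computer operations is a dispatch table from gesture labels to actions. The sketch below uses a stand-in backend that records the intended operation in a list, so it runs without a display; the comments indicate the PyAutoGUI calls that could replace each stand-in. The gesture names and the mapping are assumptions made for illustration, not the mapping used by Vijaya et al.

```python
from typing import Callable, Dict, List


def build_dispatcher(actions: Dict[str, Callable[[], None]]) -> Callable[[str], bool]:
    """Return a function that runs the action bound to a gesture label."""

    def dispatch(gesture: str) -> bool:
        action = actions.get(gesture)
        if action is None:
            return False  # unknown gesture: do nothing
        action()
        return True

    return dispatch


# Stand-in backend: each action appends a description instead of sending input.
log: List[str] = []
actions = {
    "swipe_up": lambda: log.append("scroll +3"),         # e.g. pyautogui.scroll(3)
    "swipe_down": lambda: log.append("scroll -3"),       # e.g. pyautogui.scroll(-3)
    "open_palm": lambda: log.append("press playpause"),  # e.g. pyautogui.press("playpause")
}
dispatch = build_dispatcher(actions)
```

Keeping the mapping in a plain dictionary means the gesture vocabulary can be changed without touching the recognition code.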

Drawing insights from these studies, GestureFusion aims to advance the state of the art in gesture-based interaction by combining the strengths of deep learning, marker-less tracking, and user-centric design principles. By leveraging dynamic hand gesture recognition techniques, the project seeks to enhance user experience, accessibility, and adaptability in interacting with digital devices. Additionally, GestureFusion aspires to extend beyond traditional HCI domains, exploring applications in smart environments, robotics, and IoT, thereby contributing to the advancement of gesture-based interaction technologies in diverse contexts.

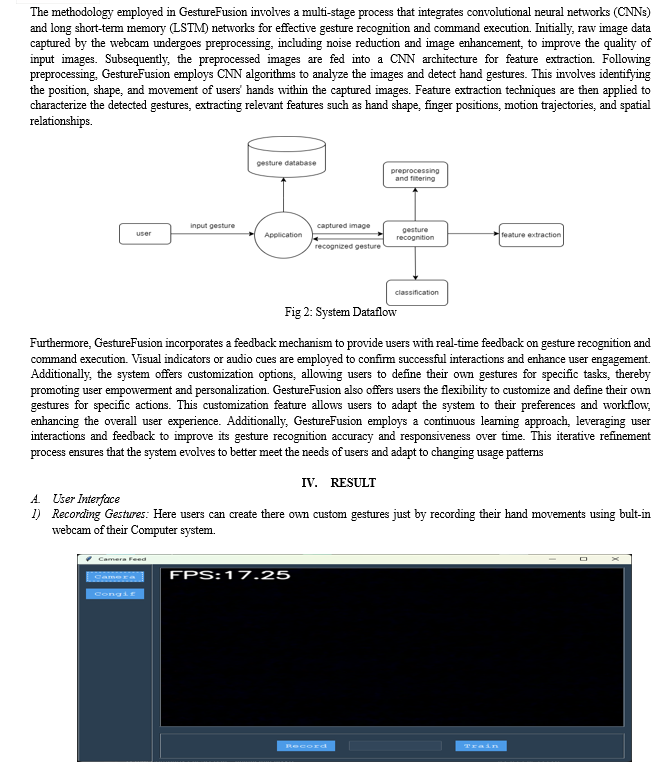

III. METHODOLOGY

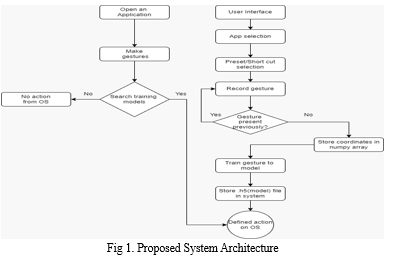

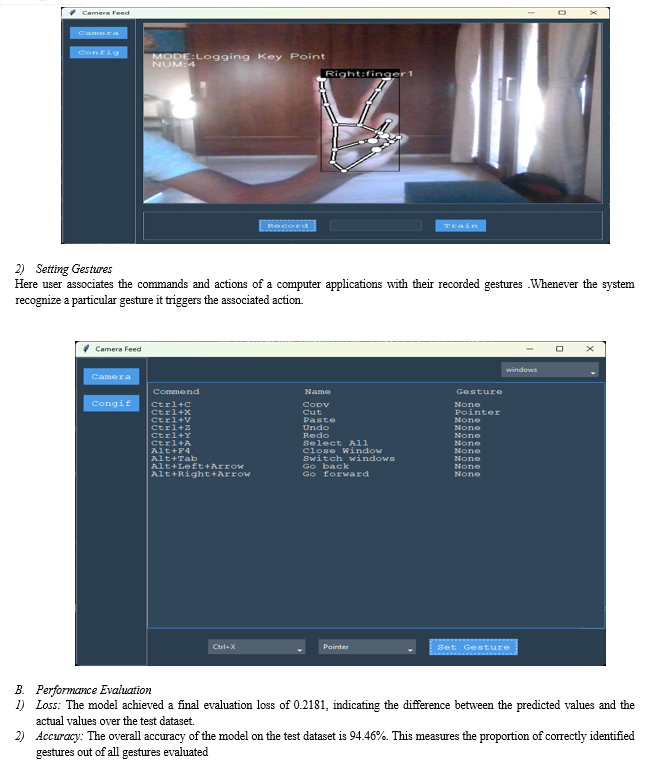

The proposed system, GestureFusion, aims to revolutionize human-computer interaction by introducing a novel approach that utilizes hand gestures for controlling laptops and desktops. Leveraging the built-in webcams commonly found in modern computing devices, GestureFusion captures real-time images of users' hand movements. These images serve as input data for a sophisticated computer vision and machine learning-based system, enabling the recognition and interpretation of hand gestures. GestureFusion offers users an intuitive and natural means of interacting with their computers, providing a more accessible, personalized, and adaptable computing experience.
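
Recognizing dynamic gestures from a live stream usually requires grouping frames into short clips before classification. The sketch below shows one way to do this with a sliding window; the clip length and stride are assumed values, since the paper does not state them, and `frames` can be any iterable of frame objects.

```python
from collections import deque


def sliding_clips(frames, clip_len=16, stride=8):
    """Yield lists of clip_len consecutive frames, advancing by stride frames.

    clip_len and stride are illustrative defaults, not values from the paper.
    """
    buffer = deque(maxlen=clip_len)  # always holds the most recent frames
    since_last = 0                   # frames seen since the last emitted clip
    for frame in frames:
        buffer.append(frame)
        since_last += 1
        if len(buffer) == clip_len and since_last >= stride:
            yield list(buffer)
            since_last = 0
```

A smaller stride gives the recognizer more frequent updates at the cost of classifying heavily overlapping clips.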

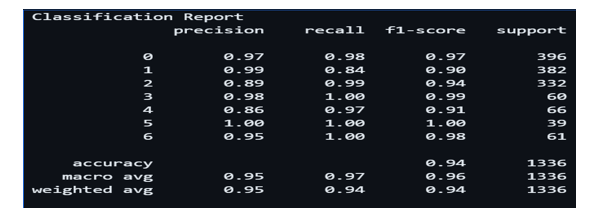

V. DISCUSSION

The GestureFusion project introduces a novel approach to human-computer interaction, aiming to revolutionize the way users control laptops and desktops through hand gestures. This discussion delves into several key aspects of the project paper, including its significance, contributions, challenges, future directions, and potential impact.

A. Significance

GestureFusion addresses the limitations of traditional input devices by providing a more intuitive and accessible means of computer interaction. By leveraging computer vision techniques and machine learning algorithms, the system recognizes hand gestures captured via built-in webcams, enabling users to perform various tasks with natural hand movements. This innovative approach has the potential to enhance user experience, particularly for individuals with physical disabilities or diverse technical backgrounds.

B. Contribution

The GestureFusion project makes several notable contributions to the field of human-computer interaction. Firstly, it introduces a hybrid architecture combining convolutional neural networks (CNNs) and long short-term memory (LSTM) networks for robust gesture recognition and command execution. This hybrid approach captures both spatial and temporal dependencies in hand movements, improving the accuracy and reliability of gesture classification. Additionally, GestureFusion prioritizes user empowerment and personalization by offering customization options, allowing users to define custom gestures tailored to their preferences and tasks.
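
A generic CNN-LSTM classifier of this kind can be written as follows. This is a standard textbook formulation given for orientation, not the exact GestureFusion architecture, whose layer configuration the paper does not specify. The symbols are assumptions introduced here: $x_t$ is the frame at time $t$, $T$ is the clip length, $f_t$ is the per-frame feature vector, $(h_t, c_t)$ are the LSTM hidden and cell states, and $W$, $b$ are the parameters of the output layer.

```latex
\begin{align}
f_t &= \mathrm{CNN}_{\theta}(x_t), & t &= 1, \dots, T \\
(h_t, c_t) &= \mathrm{LSTM}_{\phi}(f_t, h_{t-1}, c_{t-1}), & h_0 &= c_0 = 0 \\
p &= \mathrm{softmax}(W h_T + b), & \hat{y} &= \arg\max_k \, p_k
\end{align}
```

The CNN extracts spatial features from each frame independently, and the LSTM aggregates them over time, so the final hidden state $h_T$ summarizes the whole gesture before classification.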

C. Challenges

Despite its promising potential, GestureFusion faces several challenges that warrant consideration. One significant challenge is ensuring robustness and reliability in real-world environments with varying lighting conditions, background clutter, and user variability. Additionally, addressing privacy concerns and ensuring data security are crucial considerations, particularly when capturing and processing user images through built-in webcams. Furthermore, the system's effectiveness may be influenced by cultural differences in gesture interpretation, necessitating careful consideration of user diversity and inclusivity.
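
One simple mitigation for unstable per-frame predictions under changing lighting or background is to smooth the classifier output over time. The sketch below combines a confidence threshold with a majority vote over recent frames; the window size and thresholds are assumed values, and this technique is offered as an illustration rather than as part of the described system.

```python
from collections import Counter, deque


class MajorityVoteSmoother:
    """Report a gesture only when it wins enough votes in a recent window."""

    def __init__(self, window=5, min_votes=3, min_confidence=0.6):
        self.history = deque(maxlen=window)
        self.min_votes = min_votes
        self.min_confidence = min_confidence

    def update(self, label, confidence):
        """Add one per-frame prediction and return the smoothed label or None."""
        # Low-confidence predictions are stored as None so they cannot win the vote.
        self.history.append(label if confidence >= self.min_confidence else None)
        votes = Counter(item for item in self.history if item is not None)
        if not votes:
            return None
        best, count = votes.most_common(1)[0]
        return best if count >= self.min_votes else None
```

The trade-off is latency: a gesture must be seen in several frames before it is reported, so the window should be kept short enough to preserve the feeling of real-time control.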

D. Future Direction

Looking ahead, GestureFusion opens avenues for future research and development in several areas. Firstly, advancements in computer vision and machine learning techniques may lead to further improvements in gesture recognition accuracy and efficiency. Additionally, exploring the integration of additional sensors, such as depth cameras or infrared sensors, could enhance the system's capabilities in capturing fine-grained hand movements and gestures. Furthermore, expanding GestureFusion's applications beyond traditional computing environments to domains such as augmented reality, healthcare, and education presents exciting opportunities for innovation and impact.

E. Potential Impact

The potential impact of GestureFusion extends beyond the realm of human-computer interaction, with implications for accessibility, productivity, and user engagement. By providing a more natural and intuitive means of interaction, GestureFusion has the potential to empower users, enhance inclusivity, and improve overall user experience. Moreover, its applications in diverse domains, such as assistive technology, gaming, and virtual reality, have the potential to transform how individuals interact with technology and engage with digital content.

VI. ACKNOWLEDGEMENT

We express our heartfelt gratitude to all those who contributed to the realization of the "GestureFusion: A Gesture-based Interaction System" project. This initiative would not have been possible without the dedication, expertise, and collaborative efforts of our guide, Dr. S. P. Jadhav. This project stands as a testament to the collective effort and dedication of everyone involved.

Conclusion

The GestureFusion system represents a significant advancement in the field of human-computer interaction, offering a novel and intuitive approach to controlling laptops and desktops through hand gestures. By leveraging computer vision techniques and machine learning algorithms, GestureFusion enables real-time gesture recognition and command execution, providing users with a seamless and accessible means of interacting with their computers. Through the integration of convolutional neural networks (CNNs) and long short-term memory (LSTM) networks, the system achieves robust gesture recognition, capturing both spatial and temporal dependencies in hand movements. This hybrid architecture allows GestureFusion to accurately classify a diverse range of gestures and perform corresponding actions with high precision. Furthermore, GestureFusion prioritizes user empowerment and personalization by offering customization options for defining custom gestures tailored to individual preferences and tasks. This adaptability enhances user engagement and satisfaction, promoting a more enjoyable and personalized computing experience. Additionally, the incorporation of real-time feedback mechanisms ensures transparent communication between the system and the user, providing confirmation of gesture recognition and command execution.

References

[1] Hakim, N. L., Shih, T. K., Kasthuri Arachchi, S. P., Aditya, W., Chen, Y. C., & Lin, C. Y. (2019). Dynamic hand gesture recognition using 3DCNN and LSTM with FSM context-aware model. Sensors, 19(24), 5429.

[2] Haria, A., Subramanian, A., Asokkumar, N., Poddar, S., & Nayak, J. S. (2017). Hand gesture recognition for human computer interaction. Procedia Computer Science, 115, 367-374.

[3] Vijaya, V., Harsha, Puvvala, Murukutla, Sricharan, Eswar, Kurra, & Kuma, Nannapaneni. Hand Gesture Controlling System.

[4] Qi, X., Liu, C., Sun, M., Li, L., Fan, C., & Yu, X. (2023). Diverse 3D hand gesture prediction from body dynamics by bilateral hand disentanglement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 4616-4626).

[5] Chavan, S. M., & Raveendran, S. (2015, November). Hand gesture movement tracking system for human computer interaction. International Research Journal of Engineering and Technology (IRJET), 2(8), 1536-1542.

[6] Núñez, J. C., Cabido, R., Pantrigo, J. J., Montemayor, A. S., & Vélez, J. F. (2018, April). Convolutional neural networks and long short-term memory for skeleton-based human activity and hand gesture recognition. Elsevier, 76, 80-81.

Copyright

Copyright © 2024 Vedant Kathe, Prit Patel, Omkar Shinde, Avishkar Wagh. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET60046

Publish Date : 2024-04-09

ISSN : 2321-9653

Publisher Name : IJRASET
