Ijraset Journal For Research in Applied Science and Engineering Technology

Hand Gesture Controlled Presentation Using Computer Vision

Authors: Ram Krishna Singh, Anshika Mishra, Anshika Johari, Harsh Mittal

DOI Link: https://doi.org/10.22214/ijraset.2024.62780

Abstract

Making presentations is essential in many areas of life. Whether you are a student, worker, business owner, or employee of an organization, you have probably given presentations at some point. Presentations can feel tedious because the presenter has to use a keyboard or another specialized device to move through and change the slides. Our goal is to let users control the slide display with hand gestures. The use of gestures in human-computer interaction has increased sharply in recent years, and this system uses hand movements to control several PowerPoint functions. It uses machine learning, through several Python modules, to map and identify hand motions, including motions with small variations. Depending on how they are used, hand gestures can be static or dynamic, and hand gesture recognition systems have different advantages and disadvantages depending on the platforms they run on [13]. Several factors, such as the slides themselves, the keys needed to change them, and the audience's composure, make it increasingly difficult to deliver an ideal presentation. A hand gesture-based intelligent presentation system provides an easy way to manipulate or update the slides: instead of repeatedly breaking off to use the keyboard, the presenter can explore and control the slide show with hand gestures. The method uses machine learning to recognize different hand gestures for a wide range of tasks, and this recognition approach provides a communication bridge between humans and systems.

I. INTRODUCTION

In today's digital world, presenting is a compelling and effective way for presenters to persuade an audience and disseminate information. New forms of human-computer interaction, such as augmented reality (AR) and hand gesture control, are now being developed. By superimposing virtual elements on the real world, augmented reality transforms digital experiences and establishes a new paradigm for fluid interaction, and hand gestures give users a simple and natural way to interact with such augmented environments [18].

The other approach is hand gesture control. Its basic aim is to identify static hand gestures in images, or frames, from an input video stream captured under consistent lighting and against an uncomplicated background [15]. Slides are conventionally controlled with a laser pointer, keyboard, mouse, or similar device; the drawback is that operating these devices requires prior familiarity with them [1]. Humans and computers will need to interact in new ways, through interfaces that are both user-friendly and easy to use.

Methods Employing Data Gloves: This method uses sensors (either optical or physical) fastened to a glove to determine the position of the hand and convert finger movements into electrical signals. It requires the user to wear a glove with a bundle of cables connected to a computer, which reduces the ease and naturalness of the interaction [10].

A. Vision-Based Methodologies

Machine vision techniques are founded on the way people interpret information about their surroundings. Creating a vision-based interface for everyday use is difficult, but such interfaces can be built for controlled settings [10].

In the past few years, gesture recognition has become useful for controlling software such as media players, robots, and games [1]. The human-computer interface (HCI) is the combination of hardware and software that enables communication between a person and a computer or machine. Typically, control components and switches with touch displays are used. A touchless user interface driven by speech or gesture input offers a simpler way of communicating. This project focuses on gesture input because speech input is already widely used across industries [2]. In this design, hand gestures are used to feed input into our programs through Python libraries.

Replacing traditional mouse and keyboard control with these hand motions significantly improves the presentation experience. Gesturing is a form of non-verbal, non-vocal communication [4]. As we move our hands in different directions, the webcam captures images and the system analyzes them to identify the kind of motion our hands are producing [3]. After the camera records a hand gesture, the machine recognizes it and carries out the corresponding operation. To do this, it first separates the foreground (the hand) from the background of the captured image [12].
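The paper does not say how the foreground is separated from the background. The sketch below assumes the simplest option, background subtraction against a reference frame of the empty scene: pixels whose intensity differs from the reference by more than a threshold are marked as foreground. Frames are plain nested lists of grayscale values standing in for the NumPy arrays OpenCV returns, and `THRESHOLD`, `foreground_mask`, and `foreground_fraction` are illustrative assumptions. With OpenCV, the same step would typically use `cv2.absdiff` followed by `cv2.threshold`.

```python
# Minimal sketch of background subtraction on grayscale frames.
# Frames are lists of rows of ints in 0..255; THRESHOLD is an assumed value.
THRESHOLD = 30  # intensity change that counts as "foreground"


def foreground_mask(background, frame, threshold=THRESHOLD):
    """Return a 0/1 mask marking pixels that differ from the background frame."""
    if len(background) != len(frame) or any(
        len(b_row) != len(f_row) for b_row, f_row in zip(background, frame)
    ):
        raise ValueError("background and frame must have the same shape")
    return [
        [1 if abs(f - b) > threshold else 0 for b, f in zip(b_row, f_row)]
        for b_row, f_row in zip(background, frame)
    ]


def foreground_fraction(mask):
    """Fraction of pixels classified as foreground (0.0 for an empty mask)."""
    total = sum(len(row) for row in mask)
    return sum(map(sum, mask)) / total if total else 0.0


if __name__ == "__main__":
    background = [[10, 10, 10], [10, 10, 10]]
    frame = [[10, 200, 10], [12, 180, 11]]  # a bright "hand" in the middle column
    mask = foreground_mask(background, frame)
    print(mask)                       # [[0, 1, 0], [0, 1, 0]]
    print(foreground_fraction(mask))  # 0.3333333333333333
```

A fixed reference frame stops working when the lighting changes, which is why consistent lighting matters for this kind of segmentation.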

The system was built mainly in Python, together with the NumPy, MediaPipe, OpenCV, and cvzone libraries. This approach aims to improve the usefulness and effectiveness of presentations [4]. Using hand gestures during presentations increases audience attention, fosters engagement, and improves interactivity. The study in [7] also highlights the importance of smooth integration with popular presentation applications and advances interaction technology. This paper introduces a system that uses Python libraries such as OpenCV and MediaPipe to control presentations with hand gestures. Related human-computer interaction work includes a virtual mouse based on real-time, image-based hand tracking and fingertip recognition [16].

B. Why Gestures? [14]

Robotics, gaming, emotion detection, and communication for the deaf are just a few of the many uses of gesture recognition systems. These applications are controlled by a variety of gestures, including hand and face movements. There are two kinds of hand gestures: static and dynamic.

C. Static Hand Gesture [14]

A static hand gesture represents a single hand position or configuration. Hand gestures are classified as static when their direction and position remain constant for a certain amount of time.

D. Dynamic Hand Gesture [14]

A dynamic hand gesture changes over time. Dynamic hand gestures are hand movements, such as waving a hand to say "goodbye."
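The static/dynamic distinction can be made concrete by checking how far the hand drifts over a window of frames. In the hypothetical sketch below, a track of normalized (x, y) hand positions (for example, the wrist landmark reported by MediaPipe) counts as static when every position stays within `MAX_DRIFT` of the first one over at least `MIN_FRAMES` frames. Both thresholds and the function name `classify_motion` are assumptions, and only position is checked, not hand orientation.

```python
import math

# Illustrative thresholds (assumptions): positions are normalized (x, y)
# coordinates in [0, 1], one per video frame.
MAX_DRIFT = 0.03   # largest movement still treated as "held still"
MIN_FRAMES = 10    # how long the hand must be observed before deciding


def classify_motion(positions, max_drift=MAX_DRIFT, min_frames=MIN_FRAMES):
    """Label a track of hand positions as "static", "dynamic", or "unknown"."""
    if len(positions) < min_frames:
        return "unknown"  # too few frames to tell
    x0, y0 = positions[0]
    # Largest distance from the starting position anywhere in the track.
    drift = max(math.hypot(x - x0, y - y0) for x, y in positions)
    return "static" if drift <= max_drift else "dynamic"


if __name__ == "__main__":
    held = [(0.5, 0.5)] * 12                              # hand held still
    wave = [(0.3 + 0.05 * i, 0.5) for i in range(12)]     # hand sweeping sideways
    print(classify_motion(held), classify_motion(wave))   # static dynamic
```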

Our system primarily uses static gestures.

II. EXISTING SYSTEM

Manual presentation systems typically rely on keyboards or clickers to move through slide decks: presenters press the buttons and keys of these input devices to change slides. This manual method, while popular, has a few drawbacks. Presenters have limited mobility, and using external devices can cause interruptions or distract from the main point of the presentation. The lack of natural, intuitive interaction techniques may also reduce audience engagement. A different approach that offers better mobility, interactivity, and user experience is therefore required [9].

III. PROPOSED SYSTEM

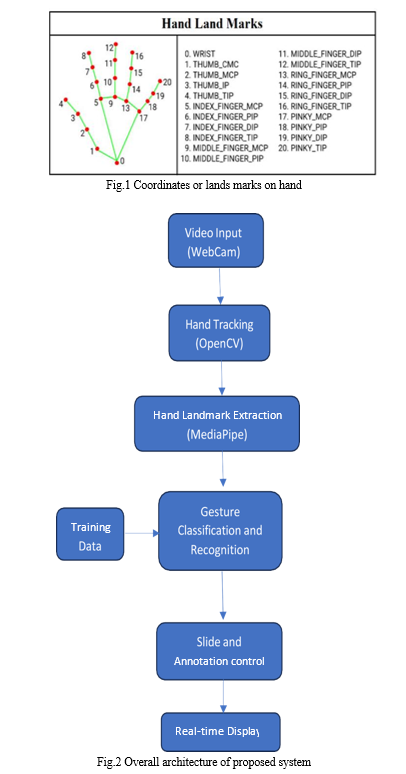

The proposed system detects and tracks hand movements in real time using computer vision techniques built on the OpenCV and MediaPipe libraries. Real-time hand gesture detection means recognizing hand movements accurately and with minimal delay. Real-time and non-real-time hand gesture systems differ in processing speed, image processing methods, acceptable delay in delivering results, and recognition algorithms [13].

The system is designed to identify and interpret a variety of natural hand gestures. One gesture it recognizes, by examining the positions and movements of the fingers, is swiping left or right to navigate between slides: a swipe to the right advances to the next slide, and a swipe to the left returns to the previous slide. Presenters can also use the system to move a pointer across the screen. Using the hand-tracking data, the system detects the tip of the index finger and maps its location to screen coordinates. This lets presenters point virtually with a finger and draw attention to particular points on the slides. By enabling seamless communication between the presenter and the presentation software, the system improves both the delivery and the audience's experience [9].
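The fingertip-to-screen mapping and the swipe test described above can be sketched as two small functions. This is an illustration under stated assumptions, not the authors' code. Coordinates are MediaPipe-style normalized values in [0, 1] from a horizontally mirrored frame (for example after `cv2.flip(frame, 1)`), so x grows toward the presenter's right. Only the central region `ACTIVE` of the camera view is mapped onto an assumed 1920x1080 screen, so the presenter can reach the screen edges without leaving the frame. A swipe is any horizontal travel of at least `SWIPE_MIN_DX` across a short track of fingertip positions. All names and values are hypothetical.

```python
# Assumptions (not from the paper): normalized coordinates from a mirrored
# frame, a 1920x1080 screen, and illustrative thresholds.
SCREEN_W, SCREEN_H = 1920, 1080
ACTIVE = (0.2, 0.8)   # central part of the camera view mapped onto the screen
SWIPE_MIN_DX = 0.25   # minimum horizontal travel that counts as a swipe


def _interp(value, lo, hi, out_lo, out_hi):
    """Linearly map value from [lo, hi] to [out_lo, out_hi], clamping at the ends."""
    t = (value - lo) / (hi - lo)
    t = min(max(t, 0.0), 1.0)
    return out_lo + t * (out_hi - out_lo)


def to_screen(tip_x, tip_y, active=ACTIVE, width=SCREEN_W, height=SCREEN_H):
    """Map a normalized index-fingertip position to integer pixel coordinates."""
    lo, hi = active
    x = _interp(tip_x, lo, hi, 0, width - 1)
    y = _interp(tip_y, lo, hi, 0, height - 1)
    return round(x), round(y)


def detect_swipe(xs, min_dx=SWIPE_MIN_DX):
    """Return "next", "previous", or None for a short track of fingertip x values."""
    if len(xs) < 2:
        return None
    dx = xs[-1] - xs[0]
    if dx >= min_dx:
        return "next"      # moved right: advance
    if dx <= -min_dx:
        return "previous"  # moved left: go back
    return None


if __name__ == "__main__":
    print(to_screen(0.2, 0.2), to_screen(0.8, 0.8))  # (0, 0) (1919, 1079)
    print(detect_swipe([0.3, 0.45, 0.6]))            # next
```

Mapping a sub-region rather than the whole frame is a design choice: fingertip positions near the camera's edges are often lost by the tracker, so stretching the central area keeps the full screen reachable.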

IV. LITERATURE SURVEY

Puja Chavan et al. (2023) [1]: The idea of this paper is to let users manipulate a slideshow with hand gestures. The authors note the sharp recent increase in the use of gestures in human-computer interaction and use hand movements to control various PowerPoint functions.

Seema Sharma (2023) [2]: Gesture recognition has gained wide recognition because technological advances offer new, practical, and fast ways for humans and computers to interact.

Although various current systems have good functionality, users have not responded very positively to them, mainly because of their complicated algorithms and low accuracy rates. To overcome these problems and differentiate itself from other gesture recognition systems, a new system is proposed that offers a touchless user interface for managing multimedia files and applications, such as music and video players and document viewers. It is intended as a helpful tool for people with disabilities who cannot operate conventional input devices, and for anyone who prefers this more natural way of controlling a system.

Chetana D. Patil et al. (2023) [3]: Because of its social nature, gesture recognition has featured in recent trends in machine development. Gestures are a kind of nonverbal communication through which humans and computers can interact. Hand gesture detection is widely used in artificial intelligence to enhance functionality and interaction.

Bhairavi Pustode et al. (2023) [4]: This paper presents an intelligent hand gesture-based presentation control system implemented with computer vision and Python. The authors demonstrate the system's performance and efficacy through a range of experiments and argue that it can enhance the overall presentation experience and make presentations more dynamic and interesting.

Wa Thai Nhu Phuong et al. (2023) [5]: Many students struggle with body language during presentations. This study examined the challenges Tay Do University students face with body language when presenting. The participants were forty sophomore English majors, and a questionnaire was used to collect data. The findings indicated that the participants struggled to use body language (facial expressions, eye contact, and posture) when giving a presentation, although few of them had trouble using hand gestures. The researchers concluded with recommendations for improving students' use of body language in presentations.

M. Bhargavi et al. (2023) [6]: In recent years, natural interaction techniques have been the main focus of research in Human-Computer Interaction (HCI). Real-time hand gesture recognition applications have been deployed in various contexts where humans interact with computers. A camera is required for hand motion detection, and using a web camera to create a virtual HCI device is the main method of interaction.

Hope Orovwode et al. (2023) [7]: The proposed system, which combines an LSTM model with a linear classifier, shows great promise for accurate, real-time hand gesture recognition. Combining effective classification with temporal analysis offers a holistic approach to gesture identification, so the system has potential for a variety of uses that improve user experience and interaction, such as interactive presentations and virtual reality interfaces. With additional study and optimization, its accuracy and practicality in real-world situations can be improved further.

Sruthi S et al. (2023) [9]: A presentation system controlled by hand gestures is a remarkable advance in human-computer interaction. Thanks to accurate gesture recognition, seamless functionality, and intuitive controls, presenters can navigate slides, interact with content, and increase audience engagement with ease. This innovation could transform conventional presentation techniques, giving both viewers and presenters a more engaging and dynamic experience.

Srinivasulu M et al. (2022) [10]: Gestures are among the many interactions that fall under the umbrella of human-computer interaction (HCI), and HCI gesture recognition researchers are particularly interested in nonverbal communication gestures. A system can interpret human gestures and convey the corresponding information to make a device function.

This important area of HCI research focuses on the interface between users and devices. The aim of gesture recognition is to capture specific motions that a device such as a camera can subsequently identify, and there are many situations in which hand gestures can be used for communication.

Anwer Mustafa Hilal et al. (2022) [11]: Sign language recognition is considered one of the most effective ways to help people with disabilities communicate with others, since it lets them use sign language seamlessly to convey the necessary information. With the latest advances in computer vision and image processing techniques, their signs can be recognized reliably.

V. METHODOLOGY

The project code was written in Python using the OpenCV and NumPy packages. The required libraries are imported first, and the input and output processing is then performed with them. The webcam records video input, and the system processes each frame to identify and follow the presenter's hand.

The Hand Tracking Module, based on the MediaPipe Hands solution, recognizes hand landmarks and gathers the data needed for gesture recognition. By examining finger positions and motions, the system recognizes the active gesture and executes the related action [9].
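The finger-state check can be sketched from the 21 landmarks that MediaPipe Hands reports per hand (index 0 is the wrist; 4, 8, 12, 16, and 20 are the thumb, index, middle, ring, and little fingertips). The rules below are assumed heuristics, not the paper's. A finger counts as extended when its tip is farther from the wrist than its middle (PIP) joint is, which does not depend on how the hand is rotated. The thumb counts as extended when its tip is farther from the base of the little finger than its IP joint is. cvzone's `HandDetector` provides a `fingersUp` method that returns a similar five-element list, though its exact rules may differ; `fingers_up` here is a hypothetical stand-in that takes plain (x, y) pairs.

```python
import math

# MediaPipe Hands landmark indices (21 landmarks per hand).
WRIST = 0
THUMB_IP, THUMB_TIP = 3, 4
PINKY_MCP = 17                  # base of the little finger
FINGER_TIPS = (8, 12, 16, 20)   # index, middle, ring, little fingertips
FINGER_PIPS = (6, 10, 14, 18)   # middle (PIP) joint of the same fingers


def fingers_up(landmarks):
    """Return [thumb, index, middle, ring, little] as 1 (extended) or 0 (folded).

    landmarks: sequence of 21 (x, y) pairs in MediaPipe's landmark order.
    """
    if len(landmarks) != 21:
        raise ValueError("expected 21 hand landmarks")

    def dist(a, b):
        (ax, ay), (bx, by) = landmarks[a], landmarks[b]
        return math.hypot(ax - bx, ay - by)

    # Heuristic: an extended thumb points away from the little finger's base.
    states = [1 if dist(THUMB_TIP, PINKY_MCP) > dist(THUMB_IP, PINKY_MCP) else 0]
    # Heuristic: an extended finger's tip lies farther from the wrist than its PIP joint.
    for tip, pip in zip(FINGER_TIPS, FINGER_PIPS):
        states.append(1 if dist(tip, WRIST) > dist(pip, WRIST) else 0)
    return states
```

In a live loop, the (x, y) pairs would come from the `x` and `y` fields of each landmark in MediaPipe's per-hand results.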

The system follows a systematic flow to enable seamless interaction with slides:

- Hand Detection: The system captures live video from a webcam using OpenCV and examines each frame to recognize and locate the presenter's hand.

- Background and Illumination Considerations: Adequate lighting is essential to reduce errors in hand segmentation, and the background should be free of skin-colored elements, which would reduce segmentation accuracy [17].

- Hand Tracking: Once the hand has been detected, MediaPipe tracks its movements and extracts hand landmarks. These landmarks represent particular points on the hand, such as joints and fingertips, which are used to recognize gestures.

- Gesture Recognition: By examining the positions and movements of the hand landmarks, the system identifies predefined gestures, each of which corresponds to a particular function in the presentation software. The following gestures are supported:

- Gesture 1: Whole hand pointing up to go to the next slide.

- Gesture 2: Thumbs up to go to the previous slide.

- Gesture 3: Index and middle fingers together to hold the pointer.

- Gesture 4: Index finger to draw on the slide.

- Gesture 5: Middle three fingers to erase/undo the previous drawing.
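One way to turn finger states into the gestures listed above is a lookup table keyed by (thumb, index, middle, ring, little), combined with a short hold requirement so that a gesture must stay constant for several frames, in line with the definition of a static gesture. The finger patterns, the default hold of 5 frames, and the names `classify_gesture` and `StableGesture` are assumptions. In particular, the list above gives "whole hand pointing up" for the next slide while the Slide Navigation description names the little finger; the sketch assumes the little-finger pattern, and the table entry is easy to change.

```python
from collections import deque

# Hypothetical mapping from finger states (thumb, index, middle, ring, little),
# where 1 means extended, to gesture names.
GESTURES = {
    (0, 0, 0, 0, 1): "next_slide",      # assumed: little finger only
    (1, 0, 0, 0, 0): "previous_slide",  # thumbs up
    (0, 1, 1, 0, 0): "pointer",         # index and middle fingers together
    (0, 1, 0, 0, 0): "draw",            # index finger
    (0, 1, 1, 1, 0): "erase",           # middle three fingers
}


def classify_gesture(finger_states):
    """Return the gesture name for five finger states, or None if unmapped."""
    return GESTURES.get(tuple(finger_states))


class StableGesture:
    """Report a gesture only after it has been identical for `frames` frames."""

    def __init__(self, frames=5):  # assumed hold length
        self.history = deque(maxlen=frames)

    def update(self, finger_states):
        """Add one frame's finger states; return the held gesture or None."""
        self.history.append(classify_gesture(finger_states))
        full = len(self.history) == self.history.maxlen
        if full and len(set(self.history)) == 1:
            return self.history[0]  # still None if the pattern is unmapped
        return None
```

Requiring a short hold trades a little latency for fewer accidental triggers when the hand passes through one finger pattern on its way to another.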

During the presentation, the system recognizes the presenter's gestures and executes the corresponding actions by analyzing the presenter's hand position and movement.
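Because a held gesture appears in many consecutive frames, executing an action on every frame would skip through several slides at once. The hypothetical `SlideNavigator` below tracks only the current slide index and ignores navigation gestures for an assumed `cooldown` number of frames after each change. The gesture labels `"next_slide"` and `"previous_slide"` are assumed names, and how the index is then passed to the presentation software is not specified in the paper, so it is left out.

```python
class SlideNavigator:
    """Hypothetical controller that turns gesture names into slide changes.

    After each slide change, navigation gestures are ignored for `cooldown`
    frames so that one held gesture moves by exactly one slide.
    """

    def __init__(self, num_slides, cooldown=15):  # cooldown is an assumed value
        if num_slides < 1:
            raise ValueError("need at least one slide")
        self.num_slides = num_slides
        self.cooldown = cooldown
        self.index = 0
        self._wait = 0  # frames left before navigation is accepted again

    def on_frame(self, gesture):
        """Process one frame's gesture name and return the current slide index."""
        if self._wait > 0:
            self._wait -= 1
            return self.index
        if gesture == "next_slide" and self.index < self.num_slides - 1:
            self.index += 1
            self._wait = self.cooldown
        elif gesture == "previous_slide" and self.index > 0:
            self.index -= 1
            self._wait = self.cooldown
        return self.index
```

At about 30 frames per second, a cooldown of 15 frames corresponds to roughly half a second between slide changes.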

a. Slide Navigation: The system recognizes the Thumb Finger gesture, which lets presenters return to the previous slide, and the Little Finger gesture, which lets them move smoothly to the next slide.

b. Pointer Control: By raising the index and middle fingers together, presenters can control a virtual pointer. This gesture lets them draw the audience's attention to particular sections of the slide and emphasize important points, giving the presentation an interactive component.

c. Drawing and Annotations: The Index Finger gesture lets presenters draw on the slide. By moving their finger, they can highlight particular elements, add real-time annotations, and underline crucial details. This makes it possible to communicate the content effectively and add visual enhancements on the fly.

d. Erasing: Presenters can use the Middle Three Fingers gesture to erase or edit earlier annotations. Presenters can easily remove individual annotations or clear the entire slide with this gesture, which activates the erasing function and guarantees a professional and orderly presentation.

e. Interaction during Presentation: The presentation software is then informed of the gestures that have been identified and the corresponding actions. Popular presentation tools are integrated with the system to facilitate the smooth execution of desired actions, including highlighting content, navigating between slides, annotating content, and erasing or undoing annotations.
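The drawing and erasing behavior in items c and d can be sketched as a per-slide stroke store. In this hypothetical `AnnotationLayer`, consecutive frames with the drawing gesture extend one stroke and any other gesture ends it. Entering the erase gesture removes only the most recent stroke, so holding it does not wipe the whole slide, and `clear` removes every stroke on a slide. The gesture labels `"draw"` and `"erase"` are assumed names, not taken from the paper.

```python
from collections import defaultdict


class AnnotationLayer:
    """Hypothetical per-slide store of freehand strokes (lists of screen points)."""

    def __init__(self):
        self._strokes = defaultdict(list)  # slide index -> list of strokes
        self._last = None                  # (slide, gesture) from the previous frame

    def update(self, slide, gesture, point=None):
        """Process one frame's gesture name and fingertip screen point."""
        strokes = self._strokes[slide]
        if gesture == "draw":
            # Start a new stroke unless this frame continues drawing on the same slide.
            if self._last != (slide, "draw") or not strokes:
                strokes.append([])
            strokes[-1].append(point)
        elif gesture == "erase" and self._last != (slide, "erase") and strokes:
            strokes.pop()  # undo the most recent stroke, once per erase gesture
        self._last = (slide, gesture)

    def clear(self, slide):
        """Remove every stroke on one slide."""
        self._strokes[slide].clear()

    def strokes(self, slide):
        """Return a copy of the strokes drawn on one slide."""
        return [list(stroke) for stroke in self._strokes[slide]]
```

Storing strokes per slide means annotations reappear when the presenter navigates back to a slide; clearing them on navigation instead would be an equally valid choice.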

VI. RESULTS

The hand gesture-controlled presentation system built with MediaPipe and OpenCV demonstrated accurate gesture recognition. Its smooth navigation, dynamic pointer control, quick annotations, and simple erasing let presenters manage their slides easily. By tracking hand movements precisely in real time, the system provides a seamless presentation experience and raises audience engagement. This implementation shows the potential of gesture recognition and computer vision to transform human-computer interaction and open up new possibilities for interactive, engaging presentations.

Conclusion

In this paper, we presented an intelligent hand gesture-based presentation control system implemented with computer vision and Python. The system makes presenting easy and convenient by letting the presenter manipulate slides with basic hand gestures. We demonstrated the system's performance and effectiveness through a range of experiments. In our opinion, the proposed system can enhance the overall presentation experience and make talks more dynamic and interesting. In the future, the system could be extended with voice control alongside gesture control, making it even easier to use and increasing audience engagement further.

References

[1] Puja Chavan, Vedant Pawar, Tejas Pawar, Varun Pawar, Samiksha Pokale, Bhairavi Pustode, "Smart Presentation System Using Hand Gestures," Department of Multidisciplinary, Vishwakarma Institute of Technology, Pune, Maharashtra, India, 2023.

[2] Seema Sharma, "Video controlling using hand gestures using automation techniques in ML pipelines," Department of Information Technology, Manipal University Jaipur, and Department of CSE, JECRC University, Jaipur, India.

[3] Chetana D. Patil, Amrita Sonare, Aliasgar Husain, Aniket Jha, Ajay Phirke, "Controlled Hand Gestures using Python and OpenCV," Department of Computer Engineering, Dhole Patil College of Engineering, Pune.

[4] Bhairavi Pustode, Vedant Pawar, Varun Pawar, Tejas Pawar, Samiksha Pokale, "Smart Presentation System Using Hand Gestures."

[5] Wa Thai Nhu Phuong, Phan Vinh Khang, "Using Body Language in Giving Presentations," Tay Do University.

[6] G. Reethika, P. Anuhya, M. Bhargavi, "Slide Presentation By Hand Gesture Recognition Using Machine Learning," JNTU, ECE, Sreenidhi Institute of Science and Technology, Hyderabad, Telangana, India.

[7] Hope Orovwode, John Amanesi Abubakar, Onuora Chidera Gaius, Ademola Abdullkareem, "The Use of Hand Gestures as a Tool for Presentation," Department of Electrical and Information Engineering, Covenant University, Ota, Ogun State, Nigeria, and Department of Computer Science and Engineering, University of Bologna, Bologna, Italy.

[8] Brendan Bentley, Kylie Walters, Gregory C. R. Yates, School of Education, University of Adelaide, and School of Education, University of South Australia, Adelaide, Australia.

[9] Sruthi S, Swetha S, "Hand Gesture Controlled Presentation using OpenCV and MediaPipe," Departments of Computer Science and Engineering and of Electronics and Instrumentation Engineering, R.M.K Engineering College, Kavaraipettai, Tamil Nadu, India.

[10] Devivara Prasad G, Srinivasulu M, "Hand Gesture Presentation by Using Machine Learning," Department of Master of Computer Applications, UBDT College of Engineering, Davangere, Karnataka, India.

[11] Fadwa Alrowais, Radwa Marzouk, Fahd N. Al-Wesabi, Anwer Mustafa Hilal, "Hand Gesture Recognition for Disabled People Using Bayesian Optimization with Transfer Learning," Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia; Cairo University, Giza, Egypt; King Khalid University, Saudi Arabia; Prince Sattam bin Abdulaziz University, AlKharj, Saudi Arabia.

[12] Salonee Powar, Shweta Kadam, Sonali Malage, Priyanka Shingane, Department of Information Technology, RAIT, D.Y. Patil Deemed to be University, Nerul, 2022.

[13] Fahmid Al Farid, Noramiza Hashim, Wan Noor Shahida Mohd Isa, Junaidi Abdullah, Md Roman Bhuiyan, Jia Uddin, Mohammad Ahsanul Haque, Mohd Nizam Husen, 2022.

[14] Muhammad Idrees, Ashfaq Ahmad, Muhammad Arif Butt, Hafiz Muhammad Danish, Department of Data Science, University of the Punjab, Lahore, Pakistan; Department of Computer Science, College of Computer Science and Information Technology, Jazan University, Saudi Arabia; Department of Computer Science, University of Lahore, Lahore, Pakistan, 2021.

[15] Nalluri Veda Kumar, Vidyadhar, Electronics & Communication Engineering and Electrical & Electronics Engineering, Gandhi Engineering College (GEC), Bhubaneswar, India, 2021.

[16] Bhor Rutika, Chaskar Shweta, Date Shraddha, Auti M. A., Department of Computer Engineering, Jaihind College of Engineering, Pune, India, 2023.

[17] Swati Bhisikar, Shreya Sawant, Tanvi Sawant, Sayali Narale, Hemant Kasturiwale, Department of Electronics and Telecommunication, JSPM's Rajarshi Shahu College of Engineering, Tathawade, Pune, India, and Department of Electronics and Computer Science Engineering, Thakur College of Engineering and Technology, Mumbai, India, 2023.

[18] Gurunathan T, Sathiya A, Srinivasan S R, Kannan A, S. Karthick, Department of Artificial Intelligence and Data Science, Sri Sairam Institute of Technology, Chennai, Tamil Nadu, India, and Anna University, Chennai, India, 2024.

Copyright

Copyright © 2024 Ram Krishna Singh, Anshika Mishra, Anshika Johari, Harsh Mittal. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET62780

Publish Date : 2024-05-26

ISSN : 2321-9653

Publisher Name : IJRASET
