Ijraset Journal For Research in Applied Science and Engineering Technology

Hand Gesture Recognition Using AI/ML

Authors: Tejasvi Jawalkar, Sejal Sandeep Khalate, Shweta Anil Medhe, Kshitija Shashikant Palaskar

DOI Link: https://doi.org/10.22214/ijraset.2024.58165

Abstract

Every day, we encounter many people who are deaf, mute or blind, and they face difficulties in interacting with others. Previously developed techniques were all sensor-based and did not offer a general solution. This article explains a new technology for virtual conversation without sensors. An image processing technique called Histogram of Oriented Gradients (HOG), together with an artificial neural network (ANN), has been used to train the system. A web camera captures images of different gestures, which are used as input to MATLAB. The software recognizes the image and identifies the corresponding speech output, which is then processed by the speech replay suite. Sign language is composed of continuous gestures; therefore, in addition to the spatial domain, sign language recognition also needs to capture motion information across multiple consecutive video frames. This paper explains two-way communication between deaf, mute and hearing people, which means the proposed system is capable of converting sign language to text and voice.

I. INTRODUCTION

Sign language is commonly used by people who cannot hear, as well as by those who can hear but cannot speak. Sign language is made up of diverse gestures formed by varied hand shapes, movements, and hand or body orientations, as well as facial expressions. Deaf people use these gestures to express their thoughts [3]. However, the use of these gestures is largely confined to the deaf and mute community, because hearing individuals rarely learn sign language. This creates a significant communication barrier between deaf and mute persons and hearing people. Deaf persons typically seek the assistance of sign language interpreters to translate their thoughts for hearing people and vice versa. However, this arrangement is highly expensive, and an interpreter cannot be available throughout a deaf person's entire life [5].

Indian Sign Language (ISL) is used by India's deaf community. It includes both word-level gestures and fingerspelling. Fingerspelling is a method of forming words using letter-by-letter coding. Letter-by-letter signing can be used to represent words for which no signs exist or words whose signs the signer does not know, or to emphasize or clarify a specific phrase. As a result, detecting fingerspelling is critical in sign language recognition. Fingerspelling in Indian Sign Language comprises both static and dynamic gestures formed by two hands with arbitrarily intricate shapes [4]. This research describes a method for automatically recognizing static gestures of the Indian Sign Language alphabet and numbers. The 26 letters of the English alphabet and the numbers 0-9 are among the signs considered for recognition [3].

Multimedia technology has expanded dramatically over the last 10 years, significantly affecting how people all over the world study and connect with one another. Multimedia technologies are being used in education to enable many individuals to acquire the training they require over the Internet. It is critical to consider each individual's unique needs while developing instructional materials and technologies; people with hearing impairments, for example, typically have such needs.

With the rapid development of artificial intelligence and related technologies, gesture recognition, as a major human-computer interaction method, has gradually become an active research topic. As a special kind of gesture, sign language is also the main communication method for people with speech and hearing disabilities [4]. It carries a wealth of information and is highly expressive. Because people without such disabilities generally do not know sign language, research on sign language recognition based on computer vision can not only facilitate communication between the two groups but is also significant for the development of human-computer interaction.

Sign language is the everyday language of deaf and mute people. It is their most comfortable and natural means of communication, and it is also the main tool that special education schools use to teach and convey ideas [5]. Sign language is a natural language that conveys meaning through the shape, position and movement of the hands together with facial expressions. Like other natural languages, sign language has a standardized grammar and a complete vocabulary system.

However, there are very few people with normal hearing who are proficient in sign language, and in many countries, the theoretical research on translation of sign language is still in its infancy.

Sign language recognition methods are easily affected by human movement, changes in gesture scale, small gesture areas, complex backgrounds, illumination and so on. Moreover, some sign language recognition methods require the gesture area as input [1]. Therefore, robust hand localization is an important preprocessing step in sign language recognition. Compared with basic gestures, gestures in sign language are characterized by complex hand shapes, motion blur, low resolution of the small target area, mutual occlusion of hands and face, and overlapping of the left and right hands. Besides the influence of complex backgrounds and lighting, sign language recognition requires a large number of image sequences, and all of these pose great challenges to the accuracy and stability of hand localization. Because sign language is composed of continuous gestures, recognition must capture motion information across multiple consecutive video frames in addition to the spatial domain. At the same time, how to build an efficient and suitable sign language recognition model remains an active research topic.
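One simple way to obtain motion information across consecutive frames is frame differencing: comparing neighbouring grayscale frames pixel by pixel and counting the pixels that changed. The sketch below is an illustrative assumption, not the method used in this paper; the function names, the threshold of 25 and the toy frames are hypothetical, and frames are plain nested lists rather than camera images.

```python
from typing import List

Frame = List[List[int]]  # grayscale frame: rows of 0-255 intensities


def motion_mask(prev: Frame, curr: Frame, threshold: int = 25) -> List[List[int]]:
    """Mark pixels whose intensity changed by more than `threshold` between two frames."""
    return [
        [1 if abs(c - p) > threshold else 0 for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev, curr)
    ]


def motion_energy(frames: List[Frame], threshold: int = 25) -> List[int]:
    """Count the changed pixels for each pair of consecutive frames."""
    return [
        sum(map(sum, motion_mask(a, b, threshold)))
        for a, b in zip(frames, frames[1:])
    ]


# Three tiny 2x3 frames: a bright "hand" pixel moves one column right per frame.
frames = [
    [[0, 0, 0], [200, 0, 0]],
    [[0, 0, 0], [0, 200, 0]],
    [[0, 0, 0], [0, 0, 200]],
]
print(motion_energy(frames))  # [2, 2]
```

Each moving pixel is counted twice per frame pair (where it left and where it arrived), which is why the toy sequence yields 2 changed pixels per step.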

II. LITERATURE REVIEW

Myasoedova M.A., Myasoedova Z.P., “Multimedia Technologies to Teach Sign Language in a Written Form” [1]: In this paper, the authors investigate the features of signs in sign languages and the related problem of how to describe these features linguistically. They present a brief overview of existing sign notations; these notations make it possible to record all sign elements as a sequence of characters using alphabetic, digital and various graphic elements. Furthermore, they confirm that the sign writing system they chose for recording Russian Sign Language has symbols and rules that allow the spatiotemporal form of signs to be specified compactly and precisely.

Dilek Kayahan, Tunga Güngör, “A Hybrid Translation System from Turkish Spoken Language to Turkish Sign Language” [2]: Sign language is the primary tool of communication for deaf and mute people. It employs hand gestures, facial expressions and body movements to express a word or a phrase. Like spoken languages, sign languages vary between regions and cultures. Hybrid translation systems combine the advantages of rule-based and statistical machine translation.

Ilya Makarov, Nikolay Veldyaykin, Maxim Chertkov, “Russian Sign Language Dactyl Recognition” [3]: In this study, the authors propose a novel model based on deep convolutional neural networks and compare several real-time sign language dactyl recognition systems. These systems can recognize the Russian alphabet presented as static signs in the Russian Sign Language used by the deaf community. With this method, Russian words can be recognized from the successive gestures for each letter. The authors evaluate their approach on Russian Sign Language (RSL), for which they collected a dataset and evaluated dactyl (fingerspelling) recognition.

Sandrine Tornay, Marzieh Razavi, “Towards Multilingual Sign Language Recognition” [4]: Sign language recognition involves modelling multichannel information such as hand shapes and hand movements. It also requires sufficient sign-language-specific data, which is a challenge because sign languages are inherently under-resourced. It has been shown in the literature that hand shape information can be estimated by pooling resources from several sign languages, but no comparable capability existed for modelling hand movement. In this paper, the authors develop a multilingual sign language approach in which hand movement is also modelled, by deriving hand movement subunits from data independent of the target sign language. They validate the proposed approach through studies on Swiss German Sign Language, German Sign Language and Turkish Sign Language, and demonstrate that sign language recognition systems can be developed efficiently using multilingual sign language resources.

MD. Sanzidul Islam, Sadia Sultana Sharmin Mousumi, “The First Complete Multipurpose Open Access Dataset of Isolated Characters for Bangla Sign Language” [5]: This paper presents a multipurpose, open-access dataset of isolated characters for Bangla Sign Language.

“Deaf Mute Communication Interpreter: A Review”: This article covers the main approaches to communication translation systems for deaf people. The communication methods used by deaf people fall into two broad categories: wearable communication devices and online learning systems. Wearable communication devices include glove-based systems, keyboard methods and touch-screen methods.

All three of these methods make use of various sensors, accelerometers, suitable microcontrollers, text-to-speech modules, keyboards and touch screens.

III. PROPOSED SYSTEM

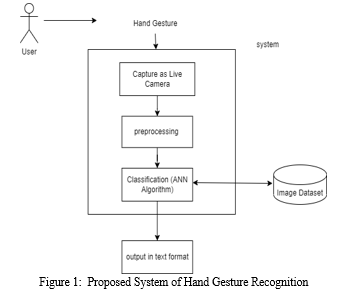

First, the dataset is collected and preprocessed; the ANN algorithm is then trained on the processed data, and the trained model detects the output. At run time, the camera provides the input image and the system detects the corresponding gesture [2].
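The four stages (collect, preprocess, train, detect) can be sketched end to end as below. This is a minimal sketch under stated assumptions: a multi-class perceptron (a single-layer ANN) stands in for the paper's ANN, the "images" are hypothetical four-pixel grayscale vectors, and camera capture is replaced by a hard-coded test image. All function names are illustrative.

```python
from typing import Dict, List, Sequence, Tuple

Vector = List[float]


def preprocess(raw: Sequence[int]) -> Vector:
    """Scale 0-255 pixel values to [0, 1] and append a constant 1.0 input for the bias."""
    return [v / 255.0 for v in raw] + [1.0]


def predict(weights: Dict[str, Vector], x: Vector) -> str:
    """Detect: return the class whose weight vector scores x highest."""
    return max(weights, key=lambda label: sum(w * xi for w, xi in zip(weights[label], x)))


def train(samples: List[Tuple[Vector, str]], epochs: int = 20) -> Dict[str, Vector]:
    """Train a multi-class perceptron: one weight vector per class, updated on mistakes."""
    labels = sorted({label for _, label in samples})
    weights = {label: [0.0] * len(samples[0][0]) for label in labels}
    for _ in range(epochs):
        for x, label in samples:
            guess = predict(weights, x)
            if guess != label:
                # Pull the correct class towards x and push the wrong guess away from it.
                weights[label] = [w + xi for w, xi in zip(weights[label], x)]
                weights[guess] = [w - xi for w, xi in zip(weights[guess], x)]
    return weights


# 1. Collect: hypothetical 4-pixel gesture images with their labels.
raw_dataset = [([255, 255, 0, 0], "A"), ([0, 0, 255, 255], "B"),
               ([250, 240, 10, 0], "A"), ([5, 0, 245, 250], "B")]
# 2. Preprocess.
dataset = [(preprocess(img), label) for img, label in raw_dataset]
# 3. Train.
model = train(dataset)
# 4. Detect on a new frame (a camera image in the real system).
print(predict(model, preprocess([240, 250, 20, 5])))  # A
```

A perceptron was chosen only because it is the smallest trainable ANN; a multilayer network trained by backpropagation would slot into the same `train`/`predict` interface.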

Sign language recognition is an emerging research area within gesture recognition, and research on it has been carried out around the world for many sign languages. The basic stage of a sign language recognition system is accurate hand segmentation. This paper used Otsu's segmentation technique to create an improved vision-based sign language recognition system. Approximately 466 million people worldwide have hearing loss, of whom about 70 million are deaf and 34 million are children.
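Otsu's technique chooses the grey-level threshold that maximizes the between-class variance of the resulting background and foreground classes. A minimal pure-Python sketch follows; it assumes 8-bit grayscale values given as a flat list, and the function names and toy pixel data are illustrative rather than taken from the cited work.

```python
from typing import List, Sequence


def otsu_threshold(pixels: Sequence[int], levels: int = 256) -> int:
    """Return the threshold t maximising between-class variance.

    Pixels <= t are background, pixels > t are foreground. Values must lie in [0, levels).
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_score = 0, -1.0
    w_bg = 0    # pixel count of the background class
    sum_bg = 0  # intensity sum of the background class
    for t in range(levels):
        w_bg += hist[t]
        sum_bg += t * hist[t]
        w_fg = total - w_bg
        if w_bg == 0:
            continue
        if w_fg == 0:
            break
        mean_bg = sum_bg / w_bg
        mean_fg = (sum_all - sum_bg) / w_fg
        # Between-class variance, up to the constant factor 1 / total**2.
        score = w_bg * w_fg * (mean_bg - mean_fg) ** 2
        if score > best_score:
            best_t, best_score = t, score
    return best_t


def binarize(pixels: Sequence[int], t: int) -> List[int]:
    """1 marks foreground (e.g. a bright hand on a dark background)."""
    return [1 if p > t else 0 for p in pixels]


pixels = [10] * 50 + [200] * 50  # dark background, bright hand
t = otsu_threshold(pixels)
print(t, sum(binarize(pixels, t)))  # 10 50
```

Any threshold between the two grey levels separates this toy image equally well; the strict `>` comparison keeps the first such value.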

Deaf people have very little or no ability to hear, and they use sign language to communicate. People in different parts of the world use different sign languages, which are far fewer in number than spoken languages [3]. Our goal is to create a static-gesture recognizer, a multi-class classifier that predicts the gestures of static sign language. In the proposed work, the hand is located in the raw image, and this region of the image is passed to the static-gesture recognizer (the multi-class classifier). The dataset is built first, and a multi-class classifier is then built using the scikit-learn library [3].
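Passing only the hand region to the classifier can be as simple as taking the bounding box of a binary hand mask (for example, one produced by thresholding) and cropping the image to it. The sketch below assumes the mask and image are same-sized nested lists; the function names and data are hypothetical.

```python
from typing import List, Optional, Tuple

Grid = List[List[int]]


def bounding_box(mask: Grid) -> Optional[Tuple[int, int, int, int]]:
    """Return (top, left, bottom, right) of the non-zero mask pixels, inclusive, or None."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)] if mask else []
    if not rows or not cols:
        return None
    return rows[0], cols[0], rows[-1], cols[-1]


def crop(image: Grid, box: Tuple[int, int, int, int]) -> Grid:
    """Cut the image down to the given inclusive bounding box."""
    top, left, bottom, right = box
    return [row[left:right + 1] for row in image[top:bottom + 1]]


mask = [[0, 0, 0, 0],
        [0, 1, 1, 0],
        [0, 1, 0, 0],
        [0, 0, 0, 0]]
image = [[10, 11, 12, 13],
         [20, 21, 22, 23],
         [30, 31, 32, 33],
         [40, 41, 42, 43]]
print(crop(image, bounding_box(mask)))  # [[21, 22], [31, 32]]
```

In practice the crop would usually also be resized to a fixed size so that every sample gives the classifier a feature vector of the same length.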

Artificial neural networks are most easily described as computational models of biological systems, used to perform a certain set of tasks such as clustering, classification and pattern recognition [2]. An artificial neural network is a biologically inspired network of artificial neurons configured to carry out a predetermined set of tasks.

Artificial neural networks (ANNs) have become well known and are a topic of wide interest; they are used, for example, in chatbots and in text classification. The brain analogy should not be taken too literally, since unless one is a neuroscientist it adds little to understanding how ANNs work. Software for simulating the synapses and neurons of animal brains also continues to evolve, and such neural simulation software has existed for decades.

Nature is an inspiration to people. For example, airplanes were developed by taking inspiration from birds. Similarly, artificial neural networks (ANNs) were inspired by the neurons of the brain. This approach has addressed challenging machine learning problems such as image classification, recommendation, and speech and language interpretation.

The ANN method is a machine learning algorithm inspired by biological neural networks, and ANNs are the core of deep learning. The first ANN was proposed in 1944, but ANNs have become increasingly popular only in recent years.

Let’s take a look at why this method has become so popular in recent years. As you know, with the rise of the internet and social media, the amount of data generated has increased, and big data has become a buzzword. Big data has made it easier to train ANNs.

Whereas traditional machine learning algorithms rarely make effective use of big data, artificial neural networks perform well on it. Another reason for the popularity of these algorithms is the increase in computing power provided by GPUs, originally developed for the gaming industry, which made training large ANNs practical. The development of powerful architectures such as CNNs, RNNs and Transformers has also contributed.

IV. METHODOLOGY

This section presents basic and comprehensive concepts of ANNs, suitable for both beginners and professionals [2].

The term "artificial neural networks" refers to a branch of artificial intelligence inspired by biology, specifically by the brain [1]. Artificial neural networks are computational networks modelled on the biological neural networks that make up the human brain. Just as the neurons of a real brain are interconnected, artificial neural networks contain neurons that are interconnected across the different layers of the network. These neurons are called nodes.

Deaf people communicate using hand signs, so hearing people have trouble understanding them. Therefore, systems are needed that recognize these signs and convey the information to the general public.

People with hearing loss often have trouble communicating with the general public. They struggle to convey their thoughts and ideas to people who have very little or no knowledge of sign language.

• As a result, members of the hearing-impaired community may lose interest in everyday activities, avoid contact with hearing people and become isolated.

• Researchers have developed many sign language recognition systems to overcome this situation, but accurate and effective sign recognition is still needed. The systems proposed by previous researchers are based on transforming the input into an equivalent representation.

• These systems limit the number of action verbs that can be processed in a particular language.

• The aim of the study is to develop a sign language recognition system for English sounds.

• The proposed system should be easy to use for people with hearing loss.

A multilayer perceptron consists of an input layer, a hidden layer and an output layer; as shown in the figure above, there is one hidden layer. If there is more than one hidden layer, the network is called a deep neural network, and this is where deep learning comes into play. The development of modern AI architectures has made deep learning popular.
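A forward pass through a multilayer perceptron with a single hidden layer can be sketched as follows. The layer sizes, the weights and the choice of tanh (hidden) and sigmoid (output) activations are illustrative assumptions, not values from this paper.

```python
import math
from typing import Callable, List

Matrix = List[List[float]]
Vector = List[float]


def dense(x: Vector, weights: Matrix, biases: Vector,
          activation: Callable[[float], float]) -> Vector:
    """One fully connected layer: activation(W x + b), one weight row per output neuron."""
    return [activation(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, biases)]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def mlp_forward(x: Vector, w_hidden: Matrix, b_hidden: Vector,
                w_out: Matrix, b_out: Vector) -> Vector:
    """Input layer -> one hidden layer -> output layer."""
    hidden = dense(x, w_hidden, b_hidden, math.tanh)
    return dense(hidden, w_out, b_out, sigmoid)


# Hypothetical weights: 2 inputs, 2 hidden neurons, 1 output neuron.
w_hidden = [[1.0, -1.0], [0.5, 0.5]]
b_hidden = [0.0, 0.0]
w_out = [[1.0, 1.0]]
b_out = [0.0]
print(mlp_forward([0.0, 0.0], w_hidden, b_hidden, w_out, b_out))  # [0.5]
```

Adding more hidden layers means chaining more `dense` calls, which is exactly the step from a shallow network to a deep one.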

In short, inputs pass through the neurons and predictions are made. But how can the network's predictions be improved? This is where the backpropagation algorithm comes in: it propagates the prediction error backward through the network and adjusts the weights to reduce that error.
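For the smallest possible network, a single sigmoid neuron trained with binary cross-entropy loss, the backward pass reduces to one application of the chain rule. The sketch below trains such a neuron on the logical AND function with plain gradient descent; the learning rate, epoch count and task are illustrative choices, and a multi-layer network would repeat the same error propagation layer by layer.

```python
import math
from typing import List, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(data: List[Tuple[List[float], float]], lr: float = 0.5,
                 epochs: int = 2000) -> Tuple[List[float], float]:
    """Gradient descent for one sigmoid neuron with binary cross-entropy loss.

    For this loss the chain rule gives dL/dz = y_hat - y, so the error is passed
    back to each weight as (y_hat - y) * x_i and to the bias as (y_hat - y).
    """
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            y_hat = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)  # forward pass
            error = y_hat - y                                          # backward pass: dL/dz
            w = [wi - lr * error * xi for wi, xi in zip(w, x)]
            b -= lr * error
    return w, b


AND = [([0.0, 0.0], 0.0), ([0.0, 1.0], 0.0), ([1.0, 0.0], 0.0), ([1.0, 1.0], 1.0)]
w, b = train_neuron(AND)
preds = [round(sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)) for x, _ in AND]
print(preds)  # [0, 0, 0, 1]
```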

The literature on artificial neural networks covers every aspect of these networks [2], including adaptive resonance theory and Kohonen's self-organizing maps.

V. APPROACHES

How do artificial neural networks work?

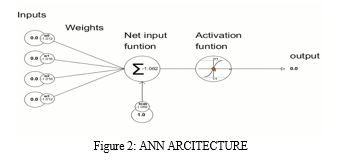

An artificial neural network can be represented as a weighted directed graph in which the artificial neurons form the nodes [1]. The connections from neuron outputs to neuron inputs are the directed edges, each of which carries a weight. The network receives the input signal from an external source as a pattern or an image, represented as a vector. These inputs are denoted mathematically as x(n) for each of the n inputs [2].

Each input is then multiplied by its corresponding weight (the weights are the details the network uses to solve a particular problem). In general, a weight represents the strength of the interconnection between neurons in the artificial neural network. All weighted inputs are then summed inside the computing unit [4].

If the weighted sum equals zero, a bias is added to make the output non-zero or otherwise to scale the system's response. The bias can be treated as an additional input fixed at 1 with its own weight. The sum of the weighted inputs can take arbitrarily large values [5], so to keep the response within the desired range, a fixed maximum value is used as a benchmark and the weighted sum is passed through the activation function.

The activation function specifies the transfer function used to obtain the desired output. There are many kinds of activation functions, but they fall broadly into linear and nonlinear functions. Commonly used activation functions include the binary (step), linear and hyperbolic tangent (tanh) sigmoidal functions.
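The computation described above (weighted sum, bias, activation) can be sketched for a single artificial neuron as follows. The inputs, weights and bias are arbitrary illustrative numbers; the weights are chosen so that the weighted sum is exactly zero, which shows how adding a bias moves the linear and tanh outputs away from zero.

```python
import math
from typing import Callable, Sequence


def binary_step(z: float) -> float:
    """Binary activation: 1 if z >= 0, else 0."""
    return 1.0 if z >= 0 else 0.0


def linear(z: float) -> float:
    """Linear (identity) activation."""
    return z


def neuron(x: Sequence[float], w: Sequence[float], bias: float,
           activation: Callable[[float], float]) -> float:
    """Sum the inputs x(1)..x(n) times their weights, add the bias, apply the activation."""
    z = sum(xi * wi for xi, wi in zip(x, w)) + bias
    return activation(z)


x = [1.0, 0.5]
w = [0.4, -0.8]  # weighted sum = 0.4 - 0.4 = 0.0
for name, f in [("binary", binary_step), ("linear", linear), ("tanh", math.tanh)]:
    print(name, neuron(x, w, 0.0, f), neuron(x, w, 0.5, f))
```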

Conclusion

This paper presents a neural-network-based method for automatically recognizing fingerspelling in Indian Sign Language. Features are extracted from the sign images. Skin-colour-based segmentation is used to separate the hand region from the image. In this work, a new feature based on the distance transformation of the image is proposed. The feed-forward neural network that recognizes the signs is trained with the features extracted from the sign images. The method is implemented entirely with digital image processing techniques, so the user does not need any special hardware device to capture hand features. The proposed method has low computational complexity and very high accuracy compared with existing methods.
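The two image-processing steps named above can be sketched as follows. The skin test is a widely used fixed-threshold RGB heuristic from the face-detection literature, assumed here for illustration because the paper does not state its exact rule, and the distance transform is a plain two-pass city-block version. How the paper turns the distance map into features is not specified, so no feature extraction is shown; all names and data are hypothetical.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255


def is_skin(p: Pixel) -> bool:
    """Fixed-threshold RGB skin rule (an assumed heuristic for uniform daylight)."""
    r, g, b = p
    return (r > 95 and g > 40 and b > 20 and max(p) - min(p) > 15
            and abs(r - g) > 15 and r > g and r > b)


def skin_mask(image: List[List[Pixel]]) -> List[List[int]]:
    """1 where the pixel passes the skin rule, else 0."""
    return [[1 if is_skin(p) else 0 for p in row] for row in image]


def distance_transform(mask: List[List[int]]) -> List[List[int]]:
    """City-block distance from each foreground (1) pixel to the nearest background (0) pixel.

    Two raster passes; pixels outside the image are ignored.
    """
    h, w = len(mask), len(mask[0])
    inf = h + w  # larger than any city-block distance inside the image
    d = [[inf if mask[i][j] else 0 for j in range(w)] for i in range(h)]
    for i in range(h):  # forward pass: top and left neighbours
        for j in range(w):
            if i > 0:
                d[i][j] = min(d[i][j], d[i - 1][j] + 1)
            if j > 0:
                d[i][j] = min(d[i][j], d[i][j - 1] + 1)
    for i in reversed(range(h)):  # backward pass: bottom and right neighbours
        for j in reversed(range(w)):
            if i < h - 1:
                d[i][j] = min(d[i][j], d[i + 1][j] + 1)
            if j < w - 1:
                d[i][j] = min(d[i][j], d[i][j + 1] + 1)
    return d


image = [[(220, 170, 140), (30, 30, 30)],
         [(200, 120, 90), (90, 160, 220)]]
print(skin_mask(image))  # [[1, 0], [1, 0]]

block = [[0] * 5] + [[0, 1, 1, 1, 0] for _ in range(3)] + [[0] * 5]
print(distance_transform(block)[2])  # [0, 1, 2, 1, 0]
```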

References

[1] Rini Akmeliawati, Melanie Po-Leen Ooi and Ye Chow Kuang, “Real-Time Malaysian Sign Language Translation Using Colour Segmentation and Neural Network,” in Proceedings of the IEEE Instrumentation and Measurement Technology Conference, Warsaw, Poland, 2006, pp. 1-6.

[2] Azadeh Kiani Sarkaleh, Fereshteh Poorahangaryan, Bahman Zan and Ali Karami, “A Neural Network Based System for Persian Sign Language Recognition,” in IEEE International Conference on Signal and Image Processing Applications, 2009.

[3] Incertis, J. Bermejo and E. Casanova, “Hand Gesture Recognition for Deaf People Interfacing,” in The 18th International Conference on Pattern Recognition, IEEE, 2006.

[4] R. Feris, M. Turk, R. Raskar, K. Tan and G. Ohashi, “Exploiting Depth Discontinuities for Vision-Based Fingerspelling Recognition,” in IEEE Workshop on Real-Time Vision for Human-Computer Interaction, 2004.

[5] T. Starner and A. Pentland, “Real-Time American Sign Language Recognition from Video Using Hidden Markov Models,” Technical Report No. 375, M.I.T. Media Laboratory Perceptual Computing Section, 1995.

[6] Stephan Liwicki and Mark Everingham, “Automatic Recognition of Fingerspelled Words in British Sign Language,” School of Computing, University of Leeds.

Copyright

Copyright © 2024 Tejasvi Jawalkar, Sejal Sandeep Khalate, Shweta Anil Medhe, Kshitija Shashikant Palaskar. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET58165

Publish Date : 2024-01-24

ISSN : 2321-9653

Publisher Name : IJRASET
