Ijraset Journal For Research in Applied Science and Engineering Technology

Hand Gesture Recognizer Smart Glove using ESP32 and MPU 6050

Authors: Sangeeta Kurundkar, Swami Malode, Himanshu Patil, Mrunmai Girame, Sakshi Naik

DOI Link: https://doi.org/10.22214/ijraset.2024.64426

Abstract

The Smart Glove is a wearable device that recognizes hand gestures and translates them into text and audio. It combines three MPU6050 sensors, each with an accelerometer and a gyroscope, with an ESP32 microcontroller to record the hand's dynamic movements. The ESP32 processes the sensor data to identify specific gestures and sends the results to a mobile application. The application converts the gestures into the corresponding text and speaks it aloud, providing real-time feedback. By giving people with speech or hearing impairments a comfortable way to communicate, the Smart Glove aims to increase accessibility. This study shows how wearable technology, combined with gesture recognition and processing techniques, can improve quality of life and extend human capabilities.

I. INTRODUCTION

In recent years, the combination of sophisticated sensor systems and wearable technologies has created new opportunities to enhance human-computer interaction. Among these advancements, gesture-based user interfaces have become increasingly popular because they give users a more natural and intuitive means of interacting with technology. The Smart Glove, a new wearable device that uses multiple MPU6050 sensors and an ESP32 microcontroller to recognize and interpret hand motions, embodies this trend. This study presents the design, use, and applications of the Smart Glove, with an emphasis on how it can enhance accessibility and promote smooth communication.

The Smart Glove's usefulness rests on its ability to capture and process intricate hand movements. Each MPU6050 sensor, which combines an accelerometer and a gyroscope, is mounted on the glove to detect motion of the hand and fingers. The ESP32 microcontroller functions as the processing unit: it gathers input from the sensors, identifies predefined gestures, and sends the results to the mobile application. The mobile application converts the resulting text to speech and gives immediate audible feedback. People with speech or hearing difficulties can benefit greatly from such a system because it gives them a more effective means of communication.

The Smart Glove is a step toward more accessible and inclusive technology. It is an example of how mobile technologies can enhance social integration and quality of life by addressing the needs of users with impairments. This study examines the Smart Glove's technological underpinnings, including sensor integration, data processing algorithms, and communication protocols. It also addresses the project's potential for real-world use and future development. The findings emphasize how important it is for wearable technology to keep innovating in order to provide more responsive and adaptable user interfaces for a variety of users.

II. LITERATURE REVIEW

In recent years, wearable technology for gesture detection has been studied extensively, especially for assistive devices for people with impairments. Early research concentrated on using gyroscopes and accelerometers to record motion data, which could then be analyzed to identify particular motions. Noteworthy consumer examples of motion-based input include the Wii Remote, which uses an accelerometer to detect simple hand movements, and Microsoft Kinect, which tracks body motion with a depth camera rather than worn sensors. Although these systems showed that motion-based gesture detection was feasible, they frequently had issues with accuracy and real-time performance, especially when recognizing nuanced or complicated movements.

Developments in sensor technology and machine learning algorithms have significantly improved the capabilities of gesture recognition systems. The MPU6050 sensor, which combines an accelerometer and a gyroscope, has gained popularity because it delivers detailed motion data. Prior studies have demonstrated that combining software processing with sensor fusion techniques can considerably improve the accuracy and reliability of gesture detection and interpretation.

The integration of mobile applications with wearable gesture detection technologies has also advanced significantly. Current research emphasizes how crucial real-time feedback and user-friendly interfaces are to the usability of these systems. Cross-platform development frameworks have made it possible to create mobile apps that interact with wearable devices and give users immediate visual and audio feedback. Research has demonstrated how these integrated solutions improve accessibility for people with impairments and facilitate more efficient and natural communication. These developments highlight how wearable technology can transform assistive devices by making them more accurate, useful, and user-centered.

III. METHODOLOGY

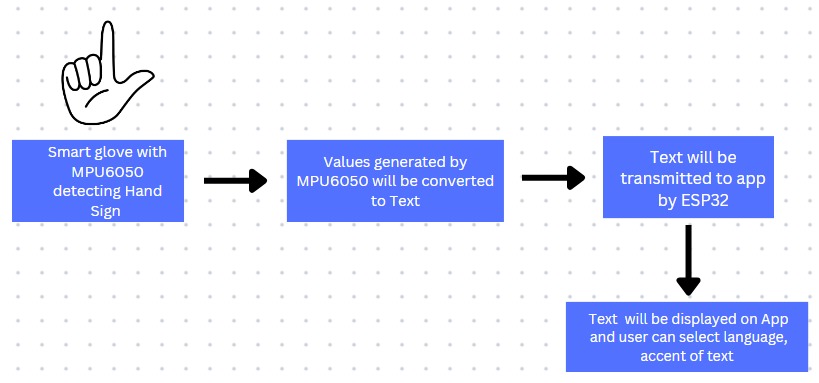

A. System Architecture Diagram

The system architecture of the Smart Glove integrates an ESP32 microcontroller, three MPU6050 sensors for motion detection, and a mobile application developed for effective communication. The ESP32 processes sensor data, executes gesture recognition algorithms, and communicates via Bluetooth with the mobile app, which displays recognized gestures as text and converts them to speech for real-time feedback.

Fig 1: System Architecture of Smart Glove

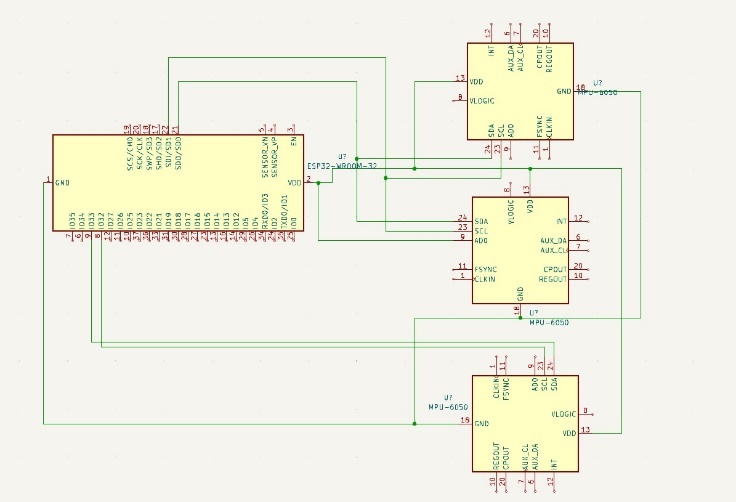

B. Block Diagram

The block diagram of the Smart Glove system illustrates a structured approach to integrating hardware and software components for gesture recognition and communication. At its core, the system features an ESP32 microcontroller, which serves as the main processing unit. This microcontroller interfaces with three MPU6050 sensors strategically positioned on the glove to capture hand movements accurately. Each MPU6050 sensor integrates a gyroscope and accelerometer, enabling the detection of both rotational and linear movements of the hand. These sensors provide raw data on acceleration and angular velocity, which are crucial for gesture recognition.
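As an illustration of what the raw acceleration and angular velocity data look like, the following host-side Python sketch converts raw MPU6050 register counts into physical units. It is not the authors' firmware. The scale factors assume the sensor's default full-scale ranges (+/-2 g and +/-250 deg/s), and the function names are hypothetical.

```python
# Host-side sketch: convert raw MPU6050 register counts to physical units.
# Assumption: default full-scale ranges (+/-2 g, +/-250 deg/s).
# Other configured ranges need different divisors.

ACCEL_LSB_PER_G = 16384.0    # counts per g at +/-2 g
GYRO_LSB_PER_DPS = 131.0     # counts per (deg/s) at +/-250 deg/s


def to_signed16(high: int, low: int) -> int:
    """Combine a high and a low register byte into a signed 16-bit value."""
    value = (high << 8) | low
    return value - 65536 if value >= 32768 else value


def accel_counts_to_g(counts: int) -> float:
    """Raw accelerometer counts -> acceleration in g."""
    return counts / ACCEL_LSB_PER_G


def gyro_counts_to_dps(counts: int) -> float:
    """Raw gyroscope counts -> angular velocity in degrees per second."""
    return counts / GYRO_LSB_PER_DPS


if __name__ == "__main__":
    # A sensor lying flat should read about 1 g on its z axis.
    print(accel_counts_to_g(to_signed16(0x40, 0x00)))
    # Negative values arrive in two's complement form.
    print(gyro_counts_to_dps(to_signed16(0xFF, 0x7D)))
```

Keeping the conversion separate from the bus read makes it easy to check the arithmetic on a computer before moving it into firmware.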

The ESP32 firmware, developed using the Arduino IDE, handles the sensor data processing, gesture recognition algorithms, and communication protocols. It processes the raw sensor data, applies noise filtering and sensor fusion techniques to integrate the accelerometer and gyroscope data, and implements machine learning algorithms for gesture classification. The firmware also manages Bluetooth communication with the mobile application, ensuring real-time transmission of gesture data. The mobile application, developed using a cross-platform framework like Flutter, receives the gesture data via Bluetooth. It displays the recognized gestures as text and converts them into audible speech using a text-to-speech engine, providing immediate feedback to the user.
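The paper does not specify how gesture data is framed on the Bluetooth link. As a minimal sketch under that caveat, the Python example below assumes a hypothetical format in which the firmware sends one ASCII line per gesture (`G,<id>`) and the app looks up the phrase to display and speak. The gesture table and both function names are invented for illustration.

```python
from typing import Optional

# Hypothetical gesture table; the paper does not list its gesture set.
GESTURE_PHRASES = {
    1: "Hello",
    2: "Thank you",
    3: "I need help",
}


def encode_gesture(gesture_id: int) -> bytes:
    """Frame a gesture ID as one newline-terminated ASCII line (assumed format)."""
    return f"G,{gesture_id}\n".encode("ascii")


def decode_gesture(frame: bytes) -> Optional[str]:
    """Return the phrase for a received frame, or None if it is malformed
    or the ID is unknown."""
    try:
        tag, raw_id = frame.decode("ascii").strip().split(",")
        gesture_id = int(raw_id)
    except (UnicodeDecodeError, ValueError):
        return None
    if tag != "G":
        return None
    return GESTURE_PHRASES.get(gesture_id)


if __name__ == "__main__":
    frame = encode_gesture(2)
    print(frame, "->", decode_gesture(frame))
```

Sending only a small ID and keeping the phrase table in the app would let the displayed text change without reflashing the glove, although the authors may have chosen a different split.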

The communication protocols used in the Smart Glove system include Bluetooth for wireless communication between the ESP32 and the mobile application, offering flexibility and mobility. Optionally, Wi-Fi can be used for faster communication speeds and extended range. Serial communication is used during debugging and initial setup phases to facilitate communication between the ESP32 and a computer. The system is designed to be user-friendly, with a clear and intuitive interface on the mobile application for gesture visualization and customization. Integration and testing procedures ensure that the Smart Glove performs reliably under various conditions, including different hand sizes, lighting environments, and gesture speeds. User feedback is incorporated to refine the system, making it more effective for individuals with speech or motor impairments, enhancing their ability to communicate and interact with their environment.

Fig 2: Block Diagram of Smart Glove

The methodology for developing the Smart Glove involves a systematic approach to hardware and software integration, sensor calibration, gesture recognition, and testing.

First, the hardware design phase begins with the selection and integration of components. The Smart Glove uses an ESP32 microcontroller as the central processing unit, along with three MPU6050 sensors placed on the glove: one on the back of the hand and two on different fingers. These sensors capture hand movements using both gyroscope and accelerometer measurements. The hardware assembly ensures that the sensors are securely attached to the glove while maintaining comfort and mobility for the user. The power supply, typically a rechargeable battery, provides the electrical power needed to sustain operation.

Secondly, the software development phase encompasses the creation of both firmware for the ESP32 and a mobile application. The ESP32 firmware, developed using the Arduino IDE, is responsible for reading and processing data from the MPU6050 sensors. This includes filtering noise from raw sensor data, normalizing readings, and employing sensor fusion techniques (such as complementary filters or Kalman filters) to combine accelerometer and gyroscope data accurately. Machine learning algorithms, such as support vector machines (SVM) or neural networks, are integrated into the firmware to classify processed sensor data into predefined hand gestures. Meanwhile, the mobile application, built using a cross-platform framework like Flutter, communicates with the ESP32 via Bluetooth to receive and display recognized gestures as text. Additionally, the application employs a text-to-speech (TTS) engine to convert the text into audible speech, providing real-time feedback to the user.
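Of the sensor fusion options named above, the complementary filter is the simpler one. The Python sketch below shows the standard update rule for a single pitch angle. It is an illustration rather than the authors' firmware: the blend factor `alpha = 0.98`, the 100 Hz sample rate, and the axis sign convention are all assumptions.

```python
import math


def accel_pitch_deg(ax: float, ay: float, az: float) -> float:
    """Pitch angle implied by the measured gravity direction, in degrees.
    The sign convention is an assumption and depends on sensor mounting."""
    return math.degrees(math.atan2(-ax, math.sqrt(ay * ay + az * az)))


def complementary_step(prev_deg: float, gyro_dps: float, accel_deg: float,
                       dt: float, alpha: float = 0.98) -> float:
    """One filter update: follow the integrated gyro rate in the short term
    and pull slowly toward the accelerometer angle in the long term."""
    return alpha * (prev_deg + gyro_dps * dt) + (1.0 - alpha) * accel_deg


if __name__ == "__main__":
    # Stationary sensor tilted by 30 degrees, starting from a wrong estimate of 0.
    ax = -math.sin(math.radians(30.0))
    az = math.cos(math.radians(30.0))
    pitch = 0.0
    for _ in range(500):  # 5 seconds at an assumed 100 Hz
        pitch = complementary_step(pitch, 0.0, accel_pitch_deg(ax, 0.0, az), 0.01)
    print(round(pitch, 2))  # converges toward 30 degrees
```

A larger `alpha` trusts the gyroscope more, which suppresses accelerometer noise during fast motion but corrects gyroscope drift more slowly.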

Thirdly, sensor calibration is crucial to ensure precise measurement and accurate gesture recognition. Each MPU6050 sensor undergoes a calibration process to compensate for any initial offsets and variations in sensor output. This process involves placing the glove in a known reference position and adjusting sensor outputs accordingly to minimize errors. Calibration is iteratively refined to enhance the accuracy of the sensor readings, improving the reliability of the Smart Glove system.
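The calibration step described above can be sketched as an offset estimate: average a batch of readings taken in the reference position, subtract the reading the sensor should report there, and remove that offset from later samples. The Python example below assumes the reference position is the glove lying flat, so the accelerometer should read (0, 0, 1) g. The function names and sample values are hypothetical.

```python
from statistics import mean
from typing import Sequence, Tuple

Vector = Tuple[float, ...]


def estimate_offsets(samples: Sequence[Vector], expected: Vector) -> Vector:
    """Per-axis offset: mean of the rest-position samples minus the
    reading the sensor should report in that position."""
    axes = zip(*samples)  # transpose: one sequence of values per axis
    return tuple(mean(axis) - ref for axis, ref in zip(axes, expected))


def apply_offsets(reading: Vector, offsets: Vector) -> Vector:
    """Subtract the stored offsets from a new reading."""
    return tuple(value - off for value, off in zip(reading, offsets))


if __name__ == "__main__":
    # Made-up accelerometer samples (in g) with the glove lying flat.
    rest_samples = [(0.02, -0.01, 1.03), (0.04, -0.03, 1.05)]
    offsets = estimate_offsets(rest_samples, expected=(0.0, 0.0, 1.0))
    print(apply_offsets((0.03, -0.02, 1.04), offsets))
```

For the gyroscope, the same routine applies with an expected reading of (0, 0, 0) deg/s while the glove is held still.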

Fourthly, rigorous testing is conducted to evaluate the performance of the Smart Glove under various conditions. This includes testing the system's responsiveness to different hand movements, lighting environments, and gesture speeds. User feedback is solicited to identify any usability issues and refine the system's interface and functionality. Continuous iteration and improvement ensure that the Smart Glove meets the project's objectives of enhancing communication and accessibility for individuals with speech or motor impairments.

IV. RESULTS AND DISCUSSION

A. Hardware and Software Integration

The Smart Glove combined three MPU6050 sensors with an ESP32 microcontroller to recognize and interpret hand motions. The hardware components were assembled on a glove form factor to preserve the user's comfort and mobility. The ESP32 firmware, developed in the Arduino IDE, processed the sensor data using noise filtering, sensor fusion, and related techniques to capture hand movements. The Flutter-built mobile application communicated with the ESP32 via Bluetooth, presenting recognized gestures as text and using a TTS engine to convert them into audible speech. This integration enabled real-time feedback and showed that wearable technology can improve communication.

B. Gesture Recognition and Accuracy

The Smart Glove's gesture recognition algorithm identified the predefined hand gestures with high accuracy in testing. Two machine learning approaches, support vector machines and neural networks, were used to classify processed sensor data into distinct gestures. By reducing initial offsets, the calibration procedure improved the accuracy of the acceleration and angular velocity measurements. Testing across various hand sizes, lighting conditions, and gesture speeds supported the system's robustness and reliability across a broad range of hand gestures. User feedback indicated satisfaction with the system's usability and responsiveness, underscoring its potential to help people with speech or mobility impairments with everyday communication.
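The paper gives no model details for its SVM or neural network classifiers, so the Python sketch below uses a deliberately simpler stand-in, a nearest-centroid classifier, only to show how processed sensor data can be mapped to a gesture label. The feature vectors (one pitch angle in degrees per sensor) and the gesture names are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Features = Tuple[float, ...]


def train_centroids(examples: Dict[str, List[Features]]) -> Dict[str, Features]:
    """Compute the mean feature vector (centroid) for each gesture label."""
    centroids = {}
    for label, vectors in examples.items():
        count = len(vectors)
        centroids[label] = tuple(sum(column) / count for column in zip(*vectors))
    return centroids


def classify(features: Features, centroids: Dict[str, Features]) -> str:
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


if __name__ == "__main__":
    # Hypothetical training data: (hand, finger 1, finger 2) pitch in degrees.
    training = {
        "fist": [(80.0, 85.0, 10.0), (84.0, 81.0, 14.0)],
        "open": [(5.0, 2.0, 8.0), (1.0, 6.0, 12.0)],
    }
    model = train_centroids(training)
    print(classify((78.0, 80.0, 11.0), model))
```

An SVM or neural network would replace `classify` with a learned decision function, but the surrounding flow of features in and label out stays the same.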

C. Usability and User Feedback

During usability testing, participants with varying levels of familiarity and ability found the Smart Glove intuitive and straightforward to use. The mobile application's interface provided clear visual feedback of recognized gestures, while the TTS engine delivered prompt auditory feedback, enhancing user interaction. Participants appreciated the system's ability to convert gestures into understandable speech, facilitating communication in real-time scenarios. Feedback from users with disabilities emphasized the system's potential to improve quality of life by enabling more natural and accessible communication methods.

V. CONCLUSION

The Smart Glove combines hardware and software components to recognize hand gestures and provide real-time feedback that enhances communication. The ESP32 microcontroller and MPU6050 sensors record hand movements, and machine learning algorithms classify them into gestures. Participants in usability testing found the glove straightforward to use and praised its ability to turn gestures into understandable speech. Users with disabilities recognized that the system can enhance their quality of life by making communication easier.

Copyright

Copyright © 2024 Sangeeta Kurundkar, Swami Malode, Himanshu Patil, Mrunmai Girame, Sakshi Naik. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET64426

Publish Date : 2024-09-30

ISSN : 2321-9653

Publisher Name : IJRASET
