Ijraset Journal For Research in Applied Science and Engineering Technology


Hand Gesture Vocaliser using IoT

Authors: Jai Ughade, Sanika Jadhav, Shanvi Jakkan, Siddharth Bhorge

DOI Link: https://doi.org/10.22214/ijraset.2024.61312


Abstract

This study proposes a hand gesture vocalizer system for people who are paralysed or deaf and cannot speak. Using Internet of Things (IoT) technology, the system recognises hand motions and converts them into voice, enabling effective communication between users. The system consists of a glove fitted with sensors that detect hand movements and a microcontroller that processes the sensor data. The data are then sent wirelessly to a computer or mobile device, where a machine learning algorithm interprets the user's gestures and converts them into audible speech. The proposed approach is expected to give people who cannot communicate verbally an efficient and natural means of doing so, improving their social participation and quality of life. User testing will be used to assess the accuracy and reliability of the system, and subsequent development will focus on improving its portability and usability.


I. INTRODUCTION

Communication is a vital aspect of human life, and being unable to communicate properly can cause serious problems in day-to-day living, especially for people who are paralysed or deaf and cannot speak. These people often have significant difficulty expressing their ideas and feelings and interacting with others. Such difficulties have motivated the development of assistive technologies, including the hand gesture vocalizer system, which offers a practical communication tool.

In this study, we propose a hand gesture vocalizer system, built with flex sensors and Internet of Things (IoT) technology, for people who are mute or paralysed and cannot speak. The system recognises hand gestures and translates them into audible speech, enabling people who cannot communicate verbally to do so. It comprises a glove with flex sensors that detect hand movements and a microcontroller that processes the sensor data. The data are then sent wirelessly to a computer or mobile device, where a machine learning algorithm interprets the user's gestures and converts them into audible speech.

The intelligent sign language interpretation system in [1] uses a wearable hand device that integrates motion, pressure, and flex sensors; its accuracy, initially 65.7%, rose to 98.2% once the pressure sensors were incorporated. The system in [2] aims to bridge communication barriers for deaf and mute individuals by recognising Indian Sign Language and translating it into English and Malayalam speech and text.

The system in [3] addresses the communication difficulties of mute people by converting sign language into audible speech using a Raspberry Pi and non-vision-based gesture detection. It also supports deaf and mute users by sending messages automatically in emergencies. The technique in [4] uses a wearable smart glove with an LED-LDR pair on each finger to detect finger motions and interpret them as sign language. The glove sends ASCII codes representing the gestures wirelessly over ZigBee, so that letters appear on a computer screen in real time together with audio output. Gutta et al. [5] combine decision trees with ensembles of RBF networks in a hybrid classification architecture for robust and flexible matching in face and hand gesture recognition. Their experiments report 93% accuracy on content-based image retrieval, 96% accuracy on forensic verification, and low false-negative and false-positive rates on hand gesture recognition.

The proposed system could greatly improve the lives of people who are mute or paralysed and unable to speak. It offers a straightforward and natural way for users to communicate, enabling them to express their ideas and emotions successfully.

II. LITERATURE REVIEW

Tendulkar and Deshpande [6] developed an IoT-based hand gesture vocalizer system for mute and paralysed people. It uses flex sensors to detect hand movements, recognises six of them, and translates each into a spoken command. Because it is inexpensive and simple to use, the system is suitable for remote locations. Choudhary et al. [7] presented an IoT-based hand gesture vocalizer for people with speech difficulties that uses flex sensors and an accelerometer to recognise eight hand motions and translate them into spoken commands. The system is reported to be highly accurate and reasonably priced.

Jayaram et al. [8] presented an IoT-based hand motion vocalizer for people with speech impairments. It uses flex sensors to detect four hand motions and translates them into spoken commands, and it is reported to be highly accurate, inexpensive, and user-friendly. Wijaya et al. [9] developed a hand gesture vocalizer system for deaf and mute individuals using machine learning and the Internet of Things. It uses flex sensors and an accelerometer to recognise ten hand movements and translate them into spoken commands, and it achieves a high, precise recognition rate.

Bhuiyan [10] presented a vision-based gesture recognition system for human-robot interaction that uses skin-colour segmentation in the HSV colour space and PCA-based pattern matching of facial movements. The AIBO-MIN robot is equipped with this technology, which enables human-robot communication through recognised gestures and promotes a mutually beneficial interaction between humans and robots. Mitra and Acharya [11] provide a thorough survey of gesture recognition that emphasises the importance of hand gestures and facial expressions in human-computer interface design. The survey examines methodologies such as particle filtering, hidden Markov models, and connectionist models, discusses open issues and directions for future research, and covers applications in sign language, virtual reality, and medical rehabilitation. Bhaskaran et al. [12] built a smart glove that converts sign language to speech. Flex sensors and an inertial measurement unit (IMU) integrated in the glove detect gestures, and a new state estimation technique tracks hand motion in three dimensions. The feasibility of the prototype was evaluated by converting Indian Sign Language into voice output.

Chouhan et al. [13] developed a low-cost wired interactive glove that connects to Octave or MATLAB and uses bend, Hall-effect, and accelerometer sensors to detect the orientation of the hand and fingers for accurate gesture recognition. The collected data are sent to a computer using automatic repeat request (ARQ) for error control. Kettebekov et al. [14] improve continuous gesture recognition by exploiting both the voluntary (co-articulation) and involuntary (physiological) components of prosodic synchronisation. Their computational framework integrates audio-visual information with hidden Markov models (HMMs) to exploit physiological constraints, analyses co-articulation with Bayesian networks of naïve classifiers, and is shown to be effective on a multimodal corpus of Weather Channel broadcasts. Aguiar et al. [15] provide a thorough analysis of the current and foreseeable uses of a smart glove that gives people a better tool for improving their communication abilities. This Morse-code-based technology, developed with input from experts and people with disabilities, is expected to evolve in the near future into a more advanced communication tool with good ergonomics and strong functionality.
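The ARQ step in [13] is described only at a high level, and the ARQ variant is not stated. As a generic illustration, not the method of [13], the sketch below shows stop-and-wait ARQ, the simplest variant: the sender transmits one frame, waits for an acknowledgement carrying the same 1-bit sequence number, and retransmits on loss, while the receiver uses that bit to discard duplicates caused by lost acknowledgements. `LossyLink` is a hypothetical simulated channel that drops frames and ACKs with a fixed probability.

```python
import random
from typing import List, Optional


class LossyLink:
    """Hypothetical simulated link plus receiver.

    Data frames and ACKs are each dropped with probability `loss`.
    """

    def __init__(self, loss: float, seed: int = 0) -> None:
        self.loss = loss
        self.rng = random.Random(seed)
        self.expected = 0               # sequence bit the receiver expects next
        self.received: List[str] = []   # payloads delivered to the application

    def transmit(self, seq: int, payload: str) -> Optional[int]:
        """Send one frame; return the ACKed sequence bit, or None on timeout."""
        if self.rng.random() < self.loss:
            return None                 # data frame lost
        if seq == self.expected:        # new frame: deliver it exactly once
            self.received.append(payload)
            self.expected ^= 1
        # Duplicates (caused by a lost ACK) are re-acknowledged but not delivered.
        if self.rng.random() < self.loss:
            return None                 # ACK lost
        return seq


def send_stop_and_wait(link: LossyLink, payloads: List[str], max_retries: int = 10) -> int:
    """Send payloads in order with stop-and-wait ARQ; return total transmissions."""
    seq = 0
    transmissions = 0
    for payload in payloads:
        for _ in range(max_retries + 1):
            transmissions += 1
            if link.transmit(seq, payload) == seq:
                break                   # ACK received: move on to the next frame
        else:
            raise TimeoutError(f"no ACK after {max_retries} retries")
        seq ^= 1                        # alternate the 1-bit sequence number
    return transmissions
```

For example, `send_stop_and_wait(LossyLink(loss=0.3, seed=1), ["a", "b", "c"], max_retries=50)` delivers the three payloads in order, each exactly once, at the cost of extra transmissions. Stop-and-wait is simple but idles while waiting for each ACK; sliding-window variants such as Go-Back-N trade that simplicity for throughput.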

In summary, IoT-based hand gesture vocalizer systems hold great promise for helping deaf, mute, and speech-impaired individuals. The systems reviewed are reported to be highly accurate, reasonably priced, and simple to operate. Further research is needed to improve recognition accuracy and to build more sophisticated systems that can identify a wider variety of hand gestures and translate them into speech.

III. METHODOLOGY

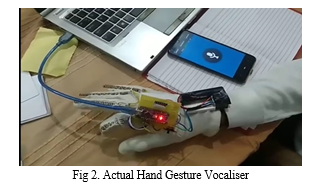

Our end-to-end procedure for building an IoT-based Hand Gesture Vocalizer starts by precisely defining the project's scope. Identifying the target users (people who are deaf, mute, or paralysed) lays the groundwork for a system that meets their particular communication requirements. The hardware and software requirements are then listed: key hardware components include the Arduino Uno R3, resistors, and flex sensors, and the required software includes the Arduino IDE.
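Pairing flex sensors with resistors suggests the common voltage-divider wiring, but the paper does not give the circuit or its values. Assuming the flex sensor sits between a 5 V supply and an analog pin, with a hypothetical 10 kΩ fixed resistor from the pin to ground, the divider output is `V_out = VCC * R_FIXED / (R_flex + R_FIXED)`, which rearranges to `R_flex = R_FIXED * (VCC / V_out - 1)`. The sketch below applies this to a raw reading from the Arduino Uno's 10-bit ADC (0 to 1023).

```python
ADC_MAX = 1023        # Arduino Uno analogRead() is 10-bit: 0..1023
VCC = 5.0             # assumed supply voltage in volts
R_FIXED = 10_000.0    # assumed fixed divider resistor in ohms (hypothetical value)


def adc_to_voltage(adc: int) -> float:
    """Convert a raw 10-bit ADC count into the divider output voltage."""
    if not 0 <= adc <= ADC_MAX:
        raise ValueError(f"ADC reading {adc} outside 0..{ADC_MAX}")
    return adc * VCC / ADC_MAX


def flex_resistance(adc: int) -> float:
    """Solve V_out = VCC * R_FIXED / (R_flex + R_FIXED) for R_flex.

    Assumes the flex sensor is between VCC and the analog pin and the
    fixed resistor is between the analog pin and ground.
    """
    v_out = adc_to_voltage(adc)
    if v_out == 0:
        raise ValueError("0 V at the pin: sensor open circuit or miswired")
    return R_FIXED * (VCC / v_out - 1.0)
```

With this wiring, bending the sensor raises its resistance and therefore lowers the ADC count; `flex_resistance(1023)` returns 0.0 because the full supply voltage then appears across the fixed resistor.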

The circuit design and prototyping stage comes before full implementation: a detailed schematic is drawn and the prototype is assembled on a breadboard, giving a visual representation of the system's architecture and operation. Sensor integration and calibration follow: the flex sensors are connected to the Arduino Uno, and a calibration procedure adjusts the sensor values for different hand motions.
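The calibration procedure is not spelled out. One common approach, assumed here, is per-finger min-max calibration: the user holds each finger straight and then fully bent, the mean raw reading for each pose is stored, and later readings are mapped linearly onto a scale from 0 (straight) to 1 (fully bent) and clamped. `FingerCalibration`, `calibrate`, and `normalise_hand` are hypothetical names.

```python
from dataclasses import dataclass
from statistics import fmean
from typing import List, Sequence


@dataclass
class FingerCalibration:
    flat: float   # mean raw ADC reading with the finger held straight
    bent: float   # mean raw ADC reading with the finger fully bent

    def normalise(self, raw: float) -> float:
        """Map a raw reading onto 0.0 (straight) .. 1.0 (fully bent), clamped."""
        span = self.bent - self.flat
        if span == 0:
            raise ValueError("straight and bent readings are identical; recalibrate")
        # Works whether the reading rises or falls as the finger bends.
        value = (raw - self.flat) / span
        return min(1.0, max(0.0, value))


def calibrate(flat_samples: Sequence[float], bent_samples: Sequence[float]) -> FingerCalibration:
    """Average the readings captured in the straight and fully bent poses."""
    return FingerCalibration(flat=fmean(flat_samples), bent=fmean(bent_samples))


def normalise_hand(cals: Sequence[FingerCalibration], raw: Sequence[float]) -> List[float]:
    """Normalise one raw reading per finger using that finger's calibration."""
    if len(cals) != len(raw):
        raise ValueError("need exactly one raw reading per calibrated finger")
    return [cal.normalise(r) for cal, r in zip(cals, raw)]
```

Calibrating per user and per finger absorbs differences in hand size and in individual sensors, so later stages can work with comparable 0 to 1 bend values instead of raw counts.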

In the data collection step, a set of gestures is defined and sensor readings are recorded while each gesture is performed, creating a dataset. The dataset is pre-processed to remove noise and standardise the data for consistency. Next, a machine learning model is built: a suitable method is selected, and the model is trained on the prepared dataset to recognise the different hand gestures. A voice synthesis module is then integrated to convert recognised gestures into audible speech. Once all parts are assembled, the Arduino and IoT integration step ensures that the Arduino Uno and IoT devices can communicate wirelessly over Bluetooth or Wi-Fi. System validation and testing comprise unit testing of individual components and integration testing to verify the system's overall accuracy in gesture recognition and voice generation.
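The paper does not name the noise filter, the standardisation method, or the learning algorithm. The sketch below assumes a trailing moving-average filter for noise removal, per-feature z-score standardisation, and a k-nearest-neighbour (k-NN) classifier over one feature vector per gesture (for example, five normalised finger bends). These are illustrative choices, not necessarily those used in the system, and the class names are hypothetical.

```python
import math
from collections import Counter
from statistics import fmean, pstdev
from typing import List, Sequence


def moving_average(samples: Sequence[float], window: int = 5) -> List[float]:
    """Smooth one sensor channel over time with a trailing moving average."""
    if window < 1:
        raise ValueError("window must be at least 1")
    smoothed = []
    for i in range(len(samples)):
        chunk = samples[max(0, i - window + 1): i + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


class Standardiser:
    """Per-feature z-score scaling, fitted on the training set only."""

    def fit(self, rows: Sequence[Sequence[float]]) -> "Standardiser":
        columns = list(zip(*rows))
        self.mean = [fmean(c) for c in columns]
        # A constant feature has zero spread; use 1.0 to avoid dividing by zero.
        self.std = [pstdev(c) or 1.0 for c in columns]
        return self

    def transform(self, row: Sequence[float]) -> List[float]:
        return [(x - m) / s for x, m, s in zip(row, self.mean, self.std)]


class KNNGestureClassifier:
    """k-nearest-neighbour vote over standardised feature vectors (assumed method)."""

    def __init__(self, k: int = 3) -> None:
        self.k = k

    def fit(self, rows: Sequence[Sequence[float]], labels: Sequence[str]) -> "KNNGestureClassifier":
        self.scaler = Standardiser().fit(rows)
        self.train = [self.scaler.transform(r) for r in rows]
        self.labels = list(labels)
        return self

    def predict(self, row: Sequence[float]) -> str:
        x = self.scaler.transform(row)
        # Rank training samples by Euclidean distance to the query.
        ranked = sorted(
            (math.dist(x, t), label) for t, label in zip(self.train, self.labels)
        )
        votes = Counter(label for _, label in ranked[: self.k])
        # most_common keeps first-seen order on ties, so the nearer label wins.
        return votes.most_common(1)[0][0]
```

In use, each sensor channel would be smoothed with `moving_average` before a feature vector is taken, and the scaler is fitted on training data only, so test readings are scaled with the same statistics. k-NN needs no training beyond storing the samples, which suits small, user-specific gesture sets.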

User testing with the target audience is then carried out to gather feedback and guide iterative development of the system.

The final steps are fully documenting the system, including usage guidelines, circuit designs, and code, and writing a research report that summarises the process, results, and possible future improvements. Future work should identify areas for improvement and add new features, such as improved portability or recognition of more gestures. Finally, sharing the research findings with academic and technical communities through publications or conferences supports the growth of assistive technologies.

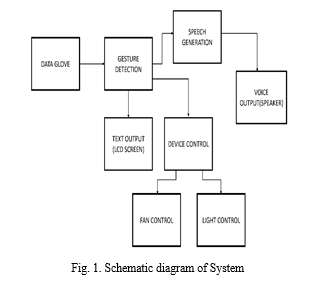

- Data Glove: A wearable device fitted with sensors that track the position and movement of the user's hand and fingers. It is the system's input device.

- Gesture Detection: This component recognises and interprets the gestures made by the user wearing the data glove. It is the processing unit that converts physical gestures into commands.

- Speech Generation: This module translates the interpreted gestures into spoken output, which can be used either for commands or for conversation.

- Voice Output (Speaker): The generated speech is played through a speaker, allowing the system to communicate with the user and with other people or systems.

- Text Output (LCD Screen): In addition to speech, the system can display text on an LCD screen, either as feedback to the user or to show which command was recognised.

- Device Control: A centralised control module that converts gestures into actions for operating different devices.

- Fan Control: This module controls a fan according to the user's gestures, for example switching it on or off or changing its speed.

- Light Control: Similarly, this module controls the lighting, for example switching the lights on or off or adjusting their brightness or colour, depending on what the connected lights support.
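The block diagram routes each recognised gesture either to speech and text output or to device control. A minimal dispatcher for that routing is sketched below. The gesture labels, phrases, 16-character LCD line width, and the `speak`/`show` callbacks (standing in for a text-to-speech engine and an LCD driver) are all hypothetical, and the fan and light are modelled as simple on/off toggles even though the text also mentions speed and brightness control.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

LCD_WIDTH = 16  # assumed 16x2 character LCD; longer text is truncated


@dataclass
class DeviceState:
    fan_on: bool = False
    light_on: bool = False


def default_phrases() -> Dict[str, str]:
    # Hypothetical gesture labels and the phrases they should produce.
    return {"HELLO": "Hello", "WATER": "I need water", "HELP": "Please help me"}


@dataclass
class GestureDispatcher:
    speak: Callable[[str], None]   # stand-in for a text-to-speech engine
    show: Callable[[str], None]    # stand-in for an LCD driver
    devices: DeviceState = field(default_factory=DeviceState)
    phrases: Dict[str, str] = field(default_factory=default_phrases)

    def _announce(self, text: str) -> None:
        """Send the same message to the speaker and the LCD."""
        self.speak(text)
        self.show(text[:LCD_WIDTH])

    def handle(self, gesture: str) -> None:
        """Route one recognised gesture to speech/text output or device control."""
        if gesture == "FAN_TOGGLE":
            self.devices.fan_on = not self.devices.fan_on
            self._announce("Fan on" if self.devices.fan_on else "Fan off")
        elif gesture == "LIGHT_TOGGLE":
            self.devices.light_on = not self.devices.light_on
            self._announce("Light on" if self.devices.light_on else "Light off")
        elif gesture in self.phrases:
            self._announce(self.phrases[gesture])
        else:
            self.show("Unknown gesture")   # feedback only; nothing is spoken
```

Keeping the speech and LCD back ends behind callbacks lets the same routing logic run against real hardware drivers or, as here, against plain Python lists during testing.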

IV. RESULTS AND DISCUSSION

The performance evaluation of the Hand Gesture Vocalizer system gave positive results in several key areas. First, the machine learning model identified a predetermined set of gestures with high accuracy. This accuracy was verified through extensive testing with a wide range of users, including people who are paralysed, deaf, or mute. This validation indicates that the system can correctly interpret a broad variety of hand gestures and meet the specific communication needs of its intended users.
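The evaluation reports a high accuracy rate without giving figures. The sketch below shows how overall accuracy, per-gesture recall, and a confusion count could be computed from logged test trials; `evaluate` is a hypothetical helper, and any labels fed to it in testing are made up and do not represent results from this study.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence, Tuple


def evaluate(
    true_labels: Sequence[Hashable], predicted: Sequence[Hashable]
) -> Tuple[float, Dict[Hashable, float], Counter]:
    """Return (overall accuracy, per-gesture recall, confusion counts).

    The confusion Counter is keyed by (true_label, predicted_label) pairs.
    """
    if len(true_labels) != len(predicted):
        raise ValueError("true and predicted label lists differ in length")
    if not true_labels:
        raise ValueError("no trials to evaluate")
    confusion = Counter(zip(true_labels, predicted))
    correct = sum(n for (t, p), n in confusion.items() if t == p)
    accuracy = correct / len(true_labels)
    # Recall per gesture: fraction of that gesture's trials recognised correctly.
    totals = Counter(true_labels)
    recall = {label: confusion[(label, label)] / n for label, n in totals.items()}
    return accuracy, recall, confusion
```

Reporting per-gesture recall alongside overall accuracy shows whether a few easily confused gestures are hidden behind a high average, and the confusion counts show which pairs are being mixed up.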

The speech synthesis module also converted the recognised gestures into clear, audible speech. User feedback and qualitative evaluations found the system's articulation of words and phrases to be satisfactory. Users, including those with speech impairments, reported that the system effectively conveyed the intended messages, indicating its potential as an intuitive and reliable communication tool.

The wireless communication link also proved reliable. Using wireless protocols common in IoT, such as Wi-Fi and Bluetooth, the system maintained consistent data transmission between the Arduino Uno and the connected PC or mobile device. Latency in real-time communication was low, giving a smooth and responsive user experience. This reliability makes the Hand Gesture Vocalizer more practical and an attractive assistive device for people who have difficulty communicating.
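The paper does not describe how sensor readings are packaged for the wireless link. A serial link such as Bluetooth SPP delivers a plain byte stream, so some framing is needed to separate readings and detect corruption. The sketch below assumes a hypothetical NMEA-style text frame: a `$`, five comma-separated ADC readings, a `*`, and a two-digit hexadecimal XOR checksum of the characters between `$` and `*`. The receiver rejects any frame that fails the checksum or range checks rather than passing bad readings to the classifier.

```python
from functools import reduce
from typing import List, Optional

ADC_MAX = 1023  # Arduino Uno analogRead() range is 0..1023


def xor_checksum(body: str) -> int:
    """XOR of all character codes in the frame body (NMEA-style)."""
    return reduce(lambda acc, ch: acc ^ ord(ch), body, 0)


def encode_frame(readings: List[int]) -> str:
    """Build a frame such as '$512,600,700,430,380*XX' followed by a newline."""
    body = ",".join(str(r) for r in readings)
    return f"${body}*{xor_checksum(body):02X}\n"


def parse_frame(line: str, n_channels: int = 5) -> Optional[List[int]]:
    """Return the readings in a valid frame, or None if malformed or corrupt."""
    line = line.strip()
    if not line.startswith("$") or "*" not in line:
        return None
    body, _, checksum = line[1:].rpartition("*")
    try:
        if int(checksum, 16) != xor_checksum(body):
            return None                      # corrupted in transit
        values = [int(v) for v in body.split(",")]
    except ValueError:
        return None                          # non-numeric checksum or field
    if len(values) != n_channels or not all(0 <= v <= ADC_MAX for v in values):
        return None
    return values
```

A one-byte XOR checksum catches any single-bit error but misses some multi-byte errors; a CRC would be stronger at the cost of a little more code on the microcontroller.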

Conclusion

In conclusion, the IoT-based hand gesture vocalizer system using flex sensors proposed in this paper is a significant step toward improving the quality of life of people who are unable to speak. The simplicity, effectiveness, and potential for future improvement of the proposed system make it a promising assistive technology. Further research and development could lead to a more refined and accurate hand gesture vocalizer, improving communication and social participation for individuals with speech impairments.

References

[1] B. G. Lee and S. M. Lee, "Smart wearable hand device for sign language interpretation system with sensor fusion," Apr. 2017.

[2] Jinsu Kunjumon and Rajesh Kannan Megalingam, "Hand Gesture Recognition System For Translating Indian Sign Language Into Text And Speech," IEEE International Conference on Intelligent and Advanced Systems, 2019, pp. 597-600.

[3] S. Vigneshwaran, M. Shifa Fathima, V. Vijay Sagar, and R. Sree Arshika, "Hand Gesture Recognition and Voice Conversion System for Dump People," IEEE International Conference on Intelligent and Advanced Systems, 2019.

[4] Nikhita Praveen, Naveen Karanth, and M. S. Megha, "Sign language interpreter using a smart glove," 2014 International Conference on Advances in Electronics, Computers and Communications, IEEE, 2014.

[5] Srinivas Gutta, Jeffrey Huang, Ibrahim F. Imam, and Harry Wechsler, "Face and Hand Gesture Recognition Using Hybrid Classifiers," ISBN: 0-8186-7713-9/96, pp. 164-169.

[6] S. V. Tendulkar and P. S. Deshpande, "IoT Based Hand Gesture Vocalizer for Dumb and Paralyzed People," 2019 IEEE International Conference on Innovative Research and Development (ICIRD), Kolkata, India, 2019.

[7] M. R. Choudhary, V. K. Singh, R. Singh, and A. B. Prasad, "IoT-based Hand Gesture Vocalizer System for Speech-Impaired People," 2021 IEEE International Conference on Innovations in Information, Embedded and Communication Systems (ICIIECS), Coimbatore, India, 2021.

[8] M. R. Jayaram, S. S. Manvi, and B. T. Basavaraju, "Hand Gesture Vocalizer for Speech-Impaired Persons using IoT," 2019 IEEE International Conference on System, Computation, Automation and Networking

[9] A. G. Wijaya, E. M. Nawangwulan, and N. P. Lestari, "Development of Hand Gesture Vocalizer System for Deaf-Dumb People using IoT and Machine Learning," 2021 8th International Conference on Electrical and Electronics Engineering (ICEEE), Surakarta, Indonesia, 2021.

[10] Md. Al-Amin Bhuiyan, "On Gesture Recognition for Human-Robot Symbiosis," The 15th IEEE International Symposium on Robot and Human

[11] Sushmita Mitra and Tinku Acharya, "Gesture Recognition: A Survey," IEEE Transactions on Systems, Man, and Cybernetics, Part C: Applications and Reviews, vol. 37, no. 3, May 2007, pp. 311-324.

[12] K. Abhijith Bhaskaran et al., "Smart gloves for hand gesture recognition: Sign language to speech conversion system," 2016 International Conference on Robotics and Automation for Humanitarian Applications (RAHA), IEEE, 2016.

[13] Tushar Chouhan et al., "Smart glove with gesture recognition ability for the hearing and speech impaired," 2014 IEEE Global Humanitarian Technology Conference - South Asia Satellite (GHTC-SAS), IEEE, 2014.

[14] Sanshzar Kettebekov, Mohammed Yeasin, and Rajeev Sharma, "Improving Continuous Gesture Recognition with Spoken Prosody," Proceedings of the 2003 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR'03), ISBN # 1063 6919/03, pp. 1-6.

[15] Santiago Aguiar et al., "Development of a smart glove as a communication tool for people with hearing impairment and speech disorders," 2016 IEEE Ecuador Technical Chapters Meeting (ETCM), IEEE, 2016.

[16] M. Mahesh, A. Jayaprakash, and M. Geetha, "Sign language translator for mobile platforms," 2017 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Sep. 2017, pp. 1176-1181.

[17] Y. Quan and P. Jinye, "Application of improved sign languages recognition and synthesis technology," 3rd IEEE Conference on Industrial Electronics and Applications (ICIEA 2008), June 2008, pp. 1629-1634.

[18] Dalal Abdulla et al., "Design and implementation of a sign-to-speech/text system for deaf and dumb people," 2016 5th International Conference on Electronic Devices, Systems and Applications (ICEDSA), IEEE, 2016.

[19] D. R. Akshay and K. Uma Rao, "Low cost smart glove for remote control by the physically challenged," 2013 IEEE Global Humanitarian Technology Conference: South Asia Satellite (GHTC-SAS), IEEE, 2013.

[20] M. S. Kasar, Anvita Deshmukh, Akshada Gavande, and Priyanka Ghadage, "Smart Speaking Glove Virtual tongue for Deaf and Dumb," International Journal of Advanced Research in Electrical, Electronics and Instrumentation Engineering, vol. 5, no. 3, p. 7, Mar. 2016.

[21] P. Vijayalakshmi and M. Aarthi, "Sign language to speech conversion," 2016 International Conference on Recent Trends in Information Technology (ICRTIT), Apr. 2016, pp. 1-6.

[22] N. Sriram and M. Nithiyanandham, "A hand gesture recognition based communication system for silent speakers," 2013 International Conference on Human Computer Interactions (ICHCI), Aug. 2013, pp. 1-5.

[23] S. Devi and S. Deb, "Low cost tangible glove for translating sign gestures to speech and text in Hindi language," 2017 3rd International Conference on Computational Intelligence Communication Technology (CICT), Feb. 2017, pp. 1-5.

[24] Seong-Whan Lee, "Automatic Gesture Recognition for Intelligent Human-Robot Interaction," Proceedings of the 7th International Conference on Automatic Face and Gesture Recognition (FGR'06), ISBN # 0-7695-2503-2/06.

[25] Cao Dong, Ming C. Leu, and Zhaozheng Yin, "American Sign Language Alphabet Recognition Using Microsoft Kinect," IEEE Conference on Computer Vision and Pattern Recognition Workshops, 2015.

Copyright

Copyright © 2024 Jai Ughade, Sanika Jadhav, Shanvi Jakkan, Siddharth Bhorge. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET61312

Publish Date : 2024-04-30

ISSN : 2321-9653

Publisher Name : IJRASET
