Ijraset Journal For Research in Applied Science and Engineering Technology

Harnessing Emotional Intelligence for Music Curation in Recommendation Systems

Authors: Dr. Sruthi, Anil Kumar, Gilla Sahithi, Thatikonda Surya Teja, Balla Tejasri

DOI Link: https://doi.org/10.22214/ijraset.2024.59856

Abstract

Choosing what to listen to from a very large music collection can be confusing. Mood-based approaches have been tried for music, food, and shopping, but there is no centrally managed system that covers all of them. The objective of our music recommendation system is to give each user recommendations fitted to them. Analysis of facial expressions is one way to assess, and help improve, the user's current emotional or mental state. Music and video are areas with considerable room for predictions based on user tastes and listening history. People often use facial expressions to convey more directly what they mean and the situation they are in. More than 60% of users agree that at some point it becomes difficult to decide which video to play because their library is so large. A system dedicated to recommending music can ease the decision of what to listen to and thereby lower the user's stress. The user does not need to spend time searching for suitable songs, because the application detects the user's mood and immediately recommends the song that best matches it. The user's picture is captured through the built-in camera, and a song from the user's playlist that matches the detected mood or emotion is then displayed on the screen.

Introduction

I. INTRODUCTION

The interaction between humans and music is an active field of scientific research. A growing number of studies show that music has a strong influence on the human brain and on mood, and research findings indicate that music can modulate arousal and mood states. The connection between musical tastes and personal traits is a particularly promising subject for research in this area, as the music that people listen to is closely related to their mood and emotional state.

Music engages the parts of the brain that are involved in feelings and mood regulation. In these areas, elements such as meter, timbre, rhythm, and pitch are processed, producing an emotional attachment to music. As a consequence, music has been found to be effective at making a person happier and more self-aware. Recently, automated emotion detection systems have advanced considerably, helped by improvements in digital signal processing tools and feature extraction techniques. These technologies have become a common element of human-machine interaction, entertainment, and security. As facial emotion recognition grows more popular, developers of music recommendation systems are increasingly interested in using it for personalization. By studying facial expressions, the system can infer a person's emotional state and recommend a playlist of songs that suits the mood. For example, if the user is sad, the system may point the user to cheerful music; it can also suggest healing or soothing melodies, according to the user's mood. The facial emotion recognition system has the potential to achieve a high degree of accuracy. However, acquiring and using facial data raises security and privacy concerns. Furthermore, the ability of the music recommendation algorithm to select the songs that best match the detected mood or emotion should be rigorously evaluated.

We built an emotion detection system using the Kaggle Facial Expression Recognition dataset. The detected emotion is then used to select a playlist containing music genres chosen to encourage good feelings. Our music player database offers a wide spectrum of tunes picked to lift the listener's mood and to give the user a memorable experience.

A dataset of songs was constructed for the music player. Music serves two primary purposes, as reported by participants: it can elevate their mood and increase their self-awareness. There is strong evidence linking musical tastes to emotions and personality traits. The brain regions responsible for emotions and mood are also involved in processing music's meter, timbre, rhythm, and pitch.

Emotion detection is now considered one of the most important techniques in a broad range of applications, including smart card applications, security, adaptive human-computer interfaces in multimedia environments, surveillance, picture database analysis, and criminal and video indexing applications. Automated recognition of emotions in multimedia content such as film or music is growing rapidly owing to advances in digital signal processing and efficient feature extraction algorithms. Such a system has many potential applications, including music entertainment and human-computer interaction systems.

The proposed method identifies a person's emotions. If the individual is experiencing negative feelings, a playlist containing the most appropriate musical genres is displayed to lift their spirits. If the feeling is positive, a playlist with various musical genres that reinforce pleasant feelings is shown.

II. LITERATURE REVIEW

A. Wearable Computing and its Application

Wearable computing, as an emerging field, excites consumers across many industries. Technology has become a close companion in everyday life, and wearables aim to fit into that role, with the potential to help doctors and ordinary citizens handle their daily tasks more smartly. Reliance on computers and other interfaces in this technological age has made their ubiquitous presence necessary, which opened the door to wearable devices that support professionals and enhance daily living. Broad deployment of wearable computers has been delayed by limitations such as display brightness, battery life, CPU power, network coverage, and form factor. Over the last ten years, however, a number of effective implementations and the continued miniaturization of computers have raised the prospect of practical applications. The study examines the applications of wearable computers, from early ideas for aviation maintenance and military use to contemporary production models that support personal entertainment, communication, and health monitoring.

B. Multimodal Emotion Recognition

This work introduces emoF-BVP, a multimodal database of recordings of actors performing different emotional expressions through the face, body gestures, speech, and physiological signals. The database includes audio and video clips of performers expressing 23 distinct emotions at three different intensities, together with skeletal and facial feature tracking and the related physiological data. The authors then present four deep belief network (DBN) models and demonstrate that they produce strong multimodal features for emotion classification in an unsupervised way. In a comparative evaluation, the DBN models outperform earlier methods. The authors further propose convolutional deep belief network (CDBN) models, which are better at recognizing subtle emotions, particularly in nearly neutral cases. The research indicates that multimodal emotion recognition systems work well and could be seen as a way to modernize computer vision systems designed for emotional analysis.

C. Toward Machine Emotional Intelligence

Combining emotional intelligence with machine intelligence leads to what is called emotional artificial intelligence (AI). Developments in AI, such as artificial neural networks, have made this integration possible. The work shows that patterns in four physiological signals are related to a person's emotional state. Data reliability suffers if the measured values are handled carelessly, because erroneous values may be obtained. Collecting a large amount of data makes richer analysis possible. The authors propose novel features and techniques to address day-to-day fluctuations in the signals and evaluate their efficacy against existing techniques. The resulting recognition accuracy was 81% on eight kinds of emotion, including neutral.

D. Emotion Recognition through Galvanic Skin Response

This work identifies emotions from galvanic skin response (GSR) sensor data using different feature extraction methods. Empirical mode decomposition, wavelet, and time-domain features were extracted from the GSR signals. The signals were then classified into valence and arousal categories with the k-nearest neighbor, decision tree, random forest, and support vector machine algorithms. The reported classification performance was 81.81% for arousal and 89.29% for valence.

The study thus provides a basis for applying machine learning to the physiological signals that accompany human emotions.
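The feature-then-classify approach summarized above can be illustrated with a small sketch. It is not the cited study's implementation: it covers only a few time-domain features and a plain k-nearest neighbor vote (empirical mode decomposition and wavelet features are omitted), and the function names and the idea of one fixed-length signal window per sample are assumptions made for illustration.

```python
import math
from collections import Counter


def time_domain_features(signal):
    """Return (mean, standard deviation, mean absolute first difference)."""
    n = len(signal)
    mean = sum(signal) / n
    std = math.sqrt(sum((x - mean) ** 2 for x in signal) / n)
    # Average size of the change between consecutive samples.
    mean_abs_diff = sum(abs(b - a) for a, b in zip(signal, signal[1:])) / (n - 1)
    return (mean, std, mean_abs_diff)


def knn_predict(train_features, train_labels, query, k=3):
    """Majority vote among the k training vectors nearest to query (Euclidean)."""
    ranked = sorted(
        zip(train_features, train_labels),
        key=lambda pair: math.dist(pair[0], query),
    )
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]
```

In use, each labeled signal window would be passed through `time_domain_features`, and the resulting vectors and their arousal or valence labels given to `knn_predict` together with the features of a new window.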

E. Autonomic Nervous System Activity Differentiates Among Emotions

Research shows that autonomic nervous system activity differs from one emotion to another. By having subjects construct facial expressions and relive emotional memories, distinct autonomic reactions could be evoked, which made it possible to distinguish positive from negative emotions and to distinguish among several negative emotions. This result calls into question theories of emotion that assume undifferentiated autonomic activity or that consider differences in autonomic activity unimportant. Knowledge of autonomic parameters helps uncover the complexity of emotions and their psychological roots.

III. SYSTEM REQUIREMENTS

A. Hardware Requirements

- Processor – Intel i3 or above

- Speed – 1.1 GHz

- RAM – 4 GB or above

- Hard Disk – 500 GB or above

B. Software Requirements

- Windows

- Python

- Visual Studio Code

- OpenCV

- TensorFlow

IV. METHODOLOGY

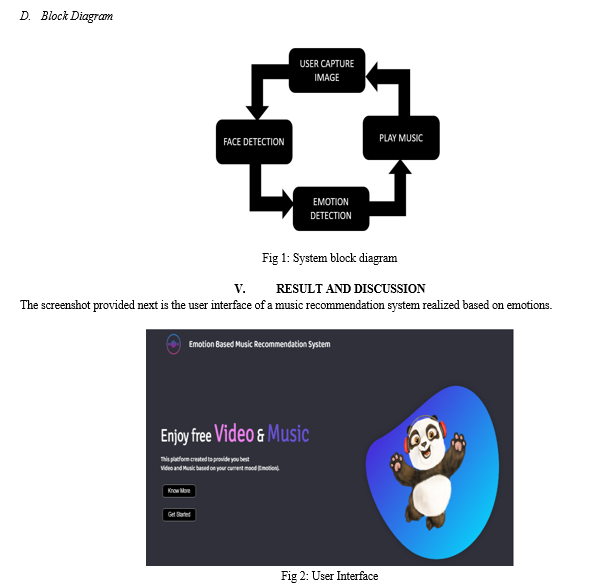

This study develops a music recommendation system based on the emotion captured from the user's facial expression.

A. Face Detection

Face detection is performed with OpenCV, a widely used computer vision library. OpenCV functions are used to locate the face in both still images and live video streams. Public libraries now provide algorithms that detect faces quickly, almost in real time. Here, OpenCV's Haar cascade classifier, a fast and reliable detection method, is used to isolate the face region.
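The detection step can be sketched as follows. This is a minimal illustration rather than the authors' code: it assumes the `opencv-python` package and the `haarcascade_frontalface_default.xml` model bundled with it, and the function names, the `scaleFactor` and `minNeighbors` values, and the choice to keep only the largest face are hypothetical.

```python
def detect_faces(frame_bgr):
    """Return (x, y, w, h) boxes for faces found in a BGR image array."""
    # Imported here so that largest_face() below works without OpenCV installed.
    import cv2

    # Frontal-face Haar cascade that ships with the opencv-python package.
    cascade_path = cv2.data.haarcascades + "haarcascade_frontalface_default.xml"
    detector = cv2.CascadeClassifier(cascade_path)

    # Haar cascades operate on single-channel (grayscale) images.
    gray = cv2.cvtColor(frame_bgr, cv2.COLOR_BGR2GRAY)

    # scaleFactor and minNeighbors are illustrative values, not from the paper.
    boxes = detector.detectMultiScale(gray, scaleFactor=1.1, minNeighbors=5)
    return [tuple(int(v) for v in box) for box in boxes]


def largest_face(boxes):
    """Pick the box with the largest area, or None when no face was found."""
    if not boxes:
        return None
    return max(boxes, key=lambda box: box[2] * box[3])
```

For a live stream, frames read from `cv2.VideoCapture` could be passed to `detect_faces`, and the region selected by `largest_face` cropped and handed to the emotion classifier.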

B. Emotion Recognition

Emotion recognition uses a convolutional neural network (CNN) to detect emotional expressions in faces. A CNN model with attention is trained to classify facial expressions into the emotions happy, sad, angry, and neutral. The CNN extracts features from the facial images automatically and maps them to emotion classes. During training, labeled images are used so that the model learns the link between facial features and emotions.
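A classifier of this kind might look like the sketch below, written with the Keras API in TensorFlow. The layer sizes, the 48×48 grayscale input shape, the integer label encoding, and the function names are assumptions made for illustration, and the attention mechanism mentioned in the text is not included, so this should be read as a plain CNN baseline rather than the model used in the paper.

```python
EMOTIONS = ["happy", "sad", "angry", "neutral"]  # the four classes named in the text


def build_emotion_cnn(input_shape=(48, 48, 1), num_classes=len(EMOTIONS)):
    """Build a small CNN classifier; layer sizes are illustrative assumptions."""
    # Imported here so that decode_emotion() below works without TensorFlow.
    import tensorflow as tf

    layers = tf.keras.layers
    model = tf.keras.Sequential([
        layers.Input(shape=input_shape),          # grayscale face crop
        layers.Conv2D(32, 3, activation="relu"),  # local edge and texture filters
        layers.MaxPooling2D(),
        layers.Conv2D(64, 3, activation="relu"),
        layers.MaxPooling2D(),
        layers.Flatten(),
        layers.Dense(64, activation="relu"),
        layers.Dropout(0.5),                      # regularization during training
        layers.Dense(num_classes, activation="softmax"),
    ])
    # Integer labels (0..3) are assumed, hence the sparse categorical loss.
    model.compile(optimizer="adam",
                  loss="sparse_categorical_crossentropy",
                  metrics=["accuracy"])
    return model


def decode_emotion(probabilities):
    """Map one softmax output vector to the label with the highest score."""
    best_index = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return EMOTIONS[best_index]
```

Training would call `model.fit` on labeled face crops, and at inference time one row of `model.predict` output would be passed to `decode_emotion` to obtain the label used for recommendation.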

C. Music Recommendation

Music recommendation uses the moods and expressions detected from the user's face. The song dataset used is recent and provides emotional attributes for each song. Songs are selected to correspond with the detected emotion, so the user gets a tailor-made listening experience. The suggested songs are played through an external resource (YouTube), where the required songs can be retrieved in a short time.
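One simple way to realize this mapping is a lookup from emotion label to candidate songs, followed by a YouTube search link for each pick. The sketch below uses only the Python standard library; the catalog entries are placeholders, and the names `PLAYLISTS`, `recommend_songs`, and `youtube_search_url` are hypothetical rather than taken from the paper.

```python
import random
from urllib.parse import urlencode

# Hypothetical catalog: emotion label -> song search strings (placeholders).
PLAYLISTS = {
    "happy": ["placeholder upbeat song 1", "placeholder upbeat song 2"],
    "sad": ["placeholder cheerful song 1", "placeholder soothing song 1"],
    "angry": ["placeholder calming song 1", "placeholder calming song 2"],
    "neutral": ["placeholder easy listening song 1"],
}


def recommend_songs(emotion, count=2, rng=None):
    """Return up to `count` songs for the emotion, falling back to neutral."""
    rng = rng or random.Random()
    songs = PLAYLISTS.get(emotion, PLAYLISTS["neutral"])
    return rng.sample(songs, k=min(count, len(songs)))


def youtube_search_url(song):
    """Build a YouTube search-results URL for one song string."""
    return "https://www.youtube.com/results?" + urlencode({"search_query": song})
```

Passing the resulting URL to `webbrowser.open` from the standard library would open the search results in the default browser. Keeping the catalog as a plain dictionary makes it easy to swap in a real song dataset with emotion attributes.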

Conclusion

This music project is built on the user's emotions, which are inferred from photos captured in real time. Music can help the user regulate mood and relieve stress; improving the interaction between the user and the music system is therefore the objective of the project. The current work points to a variety of possible directions for emotion-aware music recommendation by AI systems in the near future. To recognize emotions correctly and customize the music accordingly, the current version relies on a face detection system that reads the user's facial expressions.

References

[1] Sauth Jhanjhariya, Sumita Pal, and Shobhna Verma, “Wearable Computing and Its Application,” International Journal of Computing Science, 4, pp. 5700–5704, 2016.

[2] K. Popat and P. Sharma, “Wearable computer applications: A scene differently,” Int. J. Eng. and Innova. Tech., vol. 3, no. 1, 2015.

[3] Melville and Sindhwani, “Recommender systems,” in Encyc. of Mach. Learn., Springer, 2012, pp. 829–838.

[4] N. Sebi, I. Cohen, T. S. Huang et al., “Multimodal Emotion Recognition,” Handbook of Pattern Recognition and Computer Vision, 4:387–419, 2006.

[5] R. W. Picard, E. Vyas, and J. Healey, “Toward machine emotional intelligence: Analysis of the affective physiological state,” IEEE Trans. Pattern Anal. Mach. Intel., 23(10), 1175–1191, 2002.

[6] D. Ahata, Y. Yazan, and M. Kamana, “Emotion recognition with galvanic skin response: Performance analysis of machine learning algorithms and the feature extraction techniques,” IU J. of Elect. & Elect. Eng., vol. 17, no. 1, pp. 3129–3136, 2018.

[7] P. Ekman, R. W. Levenson, and W. V. Friesen, “The autonomic nervous system activity differentiates between emotions,” Am. Assoc. for Adv. of Science, 1983.

[8] I.-h. Shinn, C. W. Chae, C. Lee, S. Y. Lee, J. S. Lee, Yoon, and H. C. Kim, “Automatic stress-relieving music suggestion system based on photoplethysmography-derived heart rate variability analysis,” IEEE Int. Conf. on Eng. in Med. and Soc., IEEE, 2015, pp. 6402–6405.

[9] S. Nirja, R. F. Dickerson, Q. Li, P. Asare, J. A. Stankovic, D. Hong, B. Zhang, X. Jiang, G. Shen, and F. Zhao, “Musical heart: A great way of listening to music,” in Proc. of ACM Conf. on Emb. Netw. Sens. Sys., ACM, 2013, pp. 43–56.

[10] H. Liu, J. Hu, and M. Rutenberg, “Music playlist recommendation depends on user heartbeat and music preferences,” Int. Conf. on Comp. Tech. and Dev., vol. 1, IEEE, 2008, pp. 545–549.

[11] F. Isinkaye, Y. Folajimi, and B. Ojo Koh, “Recommendation systems: Principles, methods and evaluation,” Egypt. Inf. J., vol. 16, no. 3, pp. 261–273, 2014.

[12] A. Nakasone, H. Preminger, and M. Ishizuka, “Emotion recognition from electromyography and skin conductance,” Proc. of Int. Work. on Biosignal Interp., 2005, pp. 219–222.

[13] K. Yoon, J. Lee, and M. U. Kim, “The music recommendation bot via emotional triggering low-level features,” IEEE Trans. Consum. Electron., vol. 58, no. 2, pp. 612–618, May 2013.

[14] R. L. Rosa, D. Z. Rodriguez, and G. Bressan, “A music recommendation system that takes user's sentiments gathered from social media,” IEEE Trans. Consum. Electron., vol. 61, no. 3, pp. 359–367, Aug 2016.

[15] P. Sruthi and P. L. Singamsetty, “Enhance the efficiency in multi packet routing for neighbor discovery,” Journal of Advanced Research in Dynamical and Control Systems, 2017, 9(Special issue 14), pp. 2117–2130.

[16] V. Vidhya, A. Kiran, T. Bhaskar, and S. Boddupalli, “Machine Learning-based Reduction of Food Remains and Delivery of Food to the Needy,” Proceedings of the 2023 2nd International Conference on Augmented Intelligence and Sustainable Systems, ICAISS 2023, 2023, pp. 878–882.

[17] J. Sasi Bhanu, D. B. K. Kamesh, B. Durga Bhavani, and G. Saidulu, “An Architecture on Drome Agriculture IoT Using Machine Learning,” Cognitive Science and Technology, 2023, Part F1493, pp. 635–641.

[18] Y. Ambica and N. Subhash Chandra, “MRI brain segmentation using correlation based on adaptively regularised kernel-based fuzzy C-means clustering,” Int. J. Advanced Intelligence Paradigms, vol. 19, no. 2, 2021.

Copyright

Copyright © 2024 Dr. Sruthi, Anil Kumar, Gilla Sahithi, Thatikonda Surya Teja, Balla Tejasri. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET59856

Publish Date : 2024-04-05

ISSN : 2321-9653

Publisher Name : IJRASET
