Ijraset Journal For Research in Applied Science and Engineering Technology

Human Activity Recognition System with Smartphones Using Machine Learning

Authors: Rushasri Tholeti, Harsha Tholeti

DOI Link: https://doi.org/10.22214/ijraset.2024.58437

Abstract

Human activity recognition (HAR) is a crucial task in the field of physical activity monitoring. It involves identifying a person's activities and movements using sensors. The accuracy of HAR systems plays a significant role in enhancing physical health, preventing accidents and injuries, and improving security systems. One of the most common approaches to HAR is to use smartphones as sensors. However, smartphones have limited processing power and battery life, which can affect the performance of HAR systems. To address these challenges, we apply different machine learning models and compare their performance. We begin by selecting a standard dataset, the HAR dataset from the Machine Learning Repository. We then apply several machine learning models: Logistic Regression (LR), Decision Tree (DT), Support Vector Machine (SVM), Random Forest (RF), and Artificial Neural Network (ANN). To compare these models, we train and test each one on the HAR dataset and select the best set of parameters for each using grid search. Our results show that the SVM performed best (average accuracy 96.33%), significantly outperforming the other models. The statistical significance of these results can be confirmed with statistical significance tests. The superior performance of the SVM model in recognizing human activities highlights the potential of machine learning models to advance the field of HAR. However, further research is needed to address the challenges of limited processing power and battery life in smartphones and to explore other potential applications of HAR systems.

I. INTRODUCTION

Human activity monitoring has become a vital area of research in the health care domain. The rising popularity of smart wearable devices with embedded sensors, such as smartwatches, has made it easy to collect high-quality data effectively. This area of research is intriguing because it finds applications across a wide range of domains. Interesting applications include monitoring the physical activity and health condition of the geriatric population, predicting the motion of a robot using sensors, and developing systems that help elderly people walk. The primary objective of this project is to build an innovative and robust system that monitors human activity and classifies the posture of a user into one of four classes (Sitting, Walking, Standing, and Laying down) using a smartwatch. The problem is challenging because there is no clear analytical way to relate sensor data to specific actions in general. It is also technically challenging because of the large volume of sensor data collected (e.g. tens or hundreds of observations per second) and because predictive models have classically relied on handcrafted features and heuristics derived from this data. More recently, deep learning methods have been achieving success on human activity recognition (HAR) problems given their ability to automatically learn higher-order features. Human activities have been commonly used to define human behavioural patterns.
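To make the idea of handcrafted features concrete, the following minimal sketch cuts a sensor stream into fixed-size windows and computes a per-axis mean and standard deviation for each window. The window size, step, sample values, and function names are illustrative assumptions, not the feature set used in this paper.

```python
import math

def sliding_windows(samples, window_size, step):
    """Yield consecutive windows of `window_size` samples, advancing by `step`."""
    for start in range(0, len(samples) - window_size + 1, step):
        yield samples[start:start + window_size]

def window_features(window):
    """Return [mean_x, std_x, mean_y, std_y, mean_z, std_z] for one window."""
    features = []
    for axis in zip(*window):  # transpose: one tuple of values per axis
        mean = sum(axis) / len(axis)
        variance = sum((v - mean) ** 2 for v in axis) / len(axis)
        features.extend([mean, math.sqrt(variance)])
    return features

# Hypothetical accelerometer stream: 8 samples of (x, y, z) in units of g.
stream = [(0.0, 0.0, 1.0), (0.1, 0.0, 1.0), (0.0, 0.1, 0.9), (0.1, 0.1, 1.1),
          (0.2, 0.0, 1.0), (0.0, 0.0, 1.0), (0.1, 0.2, 0.9), (0.0, 0.1, 1.1)]

# Windows of 4 samples with 50% overlap give one feature row per window.
feature_rows = [window_features(w)
                for w in sliding_windows(stream, window_size=4, step=2)]
```

Each feature row can then be passed to a classical classifier; deep learning methods instead learn comparable features directly from the raw windows.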

The availability of sensors in mobile platforms has enabled the development of a variety of practical applications for several areas of knowledge such as:

- Health: through fall detection systems, elderly monitoring, and disease prevention.

- Internet of Things and Smart Cities: through solutions used to recognize and monitor domestic activities and electrical energy saving.

- Security: through individual activity monitoring solutions, crowd anomaly detection, and object tracking.

- Transportation: through solutions related to vehicle and pedestrian navigation.

For this reason, the development of solutions that recognize human activities (HAR) through computational technologies and methods has been explored in recent years.

II. OBJECTIVE AND STATEMENT

Our objective is to create a machine learning model for real-time human activity recognition that improves upon existing supervised classification models and offers a more accurate and efficient solution that can operate on smartphones. This project involves analysing a dataset of smartphone sensor values and meteorological data using machine learning techniques.

Understanding human behaviour through regular activity tracking can be challenging, and we aim to minimize the risk factors associated with human activity prediction. By employing various algorithms and methodologies based on our smartphone sensor dataset, we aim to predict human activities with greater accuracy.

Identifying human activities, such as walking, running, or sitting, can provide valuable insights into a person's level of physical activity. Lack of activity can lead to health issues such as obesity, and our model can potentially help address these concerns. Additionally, our model can be used for security purposes and transportation planning.

Our project aims to contribute to the development of innovative applications in this field and advance the current state of human activity recognition research. By building an accurate and efficient model, we hope to make significant progress towards achieving our goals.

III. LITERATURE SURVEY

1. Human Detection in Surveillance Videos and Its Applications - A Review

Authors: Sarvesh Vishwakarma and Anupam Agrawal, May 2011

This study highlights the significance of accurately detecting human beings in visual surveillance systems for various application areas, including abnormal event detection, gait characterization, congestion analysis, person identification, gender classification, and fall detection for the elderly. The detection process involves detecting a moving object using background subtraction, optical flow, or spatiotemporal filtering techniques. Subsequently, a moving object can be classified as a human being using shape-based, texture-based, or motion-based features.

2. A Review of Human Activity Recognition Methods

Authors: Michalis Vrigkas, Christophoros Nikou, and Ioannis A Kakadiaris, November 16, 2016

Recognizing human activities from video sequences or still images is a challenging task due to issues such as background clutter, partial occlusion, changes in scale, viewpoint, lighting, and appearance. Many applications, including video surveillance systems, human-computer interaction, and robotics for human behaviour characterization, require a multiple activity recognition system. This work offers a comprehensive review of recent and state-of-the-art research advances in human activity classification.

3. A Survey on Activity Recognition and Behaviour Understanding in Video Surveillance

Authors: Sarvesh Vishwakarma and Anupam Agrawal, October 2012

This paper provides an exhaustive survey of activity recognition in video surveillance. It begins with a description of simple and complex human activities and various applications. Activity recognition has numerous applications, ranging from visual surveillance to content-based retrieval and human-computer interaction. The paper's organization covers all aspects of the general framework of human activity recognition. Additionally, it summarizes and categorizes recent research progress under a general framework.

IV. LIMITATIONS

This article provides a comprehensive review of the state-of-the-art human activity recognition models that are built using deep learning layers.

With the rapid advancements in technology and the increasing availability of large-scale datasets, deep learning-based models have gained significant attention in recent years. This article showcases various architectures, including CNN, LSTM, and hybrid-based approaches, along with their experimental results, setup specifics, and limitations.

Convolutional Neural Networks (CNNs) have been widely used in human activity recognition tasks due to their ability to extract spatial features from raw data. The article discusses the technical details of several CNN-based models, such as AlexNet, VGG, and ResNet, and their performance in recognizing human activities. Moreover, the article highlights the limitations of CNN-based models, such as the inability to capture long-term dependencies and the need for large-scale datasets.

Long Short-Term Memory (LSTM) networks have been used to overcome the limitations of CNN-based models by capturing long-term dependencies in sequential data. The article discusses the technical details of several LSTM-based models, such as Vanilla LSTM, GRU, and Bi-LSTM, and their performance in recognizing human activities. The article also highlights the limitations of LSTM-based models, such as the vanishing and exploding gradient problems, and the need for large-scale datasets.

To overcome the limitations of CNN and LSTM-based models, hybrid-based approaches have been proposed. The article discusses the technical details of several hybrid-based models, such as CNN-LSTM, CNN-BiLSTM, and CNN-GRU, and their performance in recognizing human activities. The article highlights the advantages of hybrid-based models, such as their ability to extract both spatial and temporal features, and their robustness to noise and variations in data.

The article also provides a detailed analysis of the experimental results, setup specifics, and limitations of the models. The analysis includes the performance metrics, such as accuracy, precision, recall, and F1-score, and the experimental setup, such as the datasets used, the evaluation metrics, and the training parameters. Moreover, the article highlights the limitations of the models, such as the need for large-scale datasets, the computational complexity, and the generalizability to real-world scenarios.
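As a reminder of how these metrics relate to one another, here is a minimal sketch that computes overall accuracy and per-class precision, recall, and F1-score from true and predicted labels. The function name and the toy labels are hypothetical and do not come from the reviewed experiments.

```python
def per_class_metrics(y_true, y_pred):
    """Return overall accuracy and {label: (precision, recall, f1)}."""
    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    report = {}
    for label in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == label and p == label for t, p in pairs)  # true positives
        fp = sum(t != label and p == label for t, p in pairs)  # false positives
        fn = sum(t == label and p != label for t, p in pairs)  # false negatives
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        report[label] = (precision, recall, f1)
    return accuracy, report

# Toy labels: one "sit" sample is misclassified as "stand".
y_true = ["sit", "sit", "stand", "stand", "walk", "walk"]
y_pred = ["sit", "stand", "stand", "stand", "walk", "walk"]
accuracy, report = per_class_metrics(y_true, y_pred)
```

In this toy case the single confusion lowers recall for "sit" and precision for "stand", which is why per-class metrics are reported alongside accuracy when activities are easily confused.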

As deep learning techniques continue to advance, the strong results reported in recent years suggest that these limitations are becoming less significant for real-world performance and application. Even so, the article emphasizes the need for further research to address the limitations and improve the models' generalizability to real-world scenarios.

V. IMPLEMENTATION

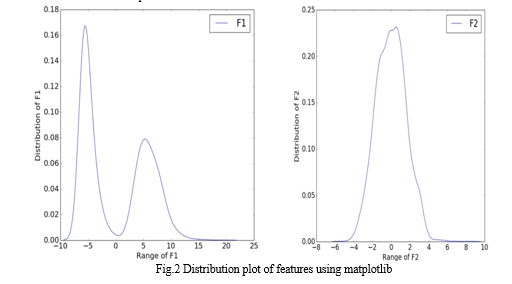

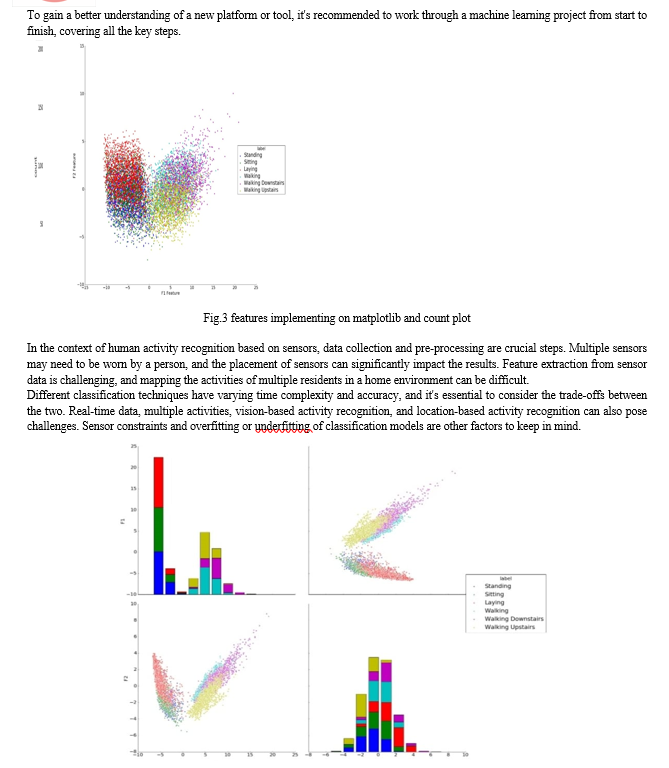

When working on a machine learning project using your own datasets, there are several well-defined steps to follow:

- Define Problem: Clearly outline the problem and its objectives.

- Prepare Data: Collect and pre-process the data to ensure its quality and suitability for the problem.

- Evaluate Algorithms: Experiment with different machine learning algorithms and choose the best one based on the problem's requirements.

- Improve Results: Optimize the chosen algorithm's performance through hyperparameter tuning and other techniques.

- Present Results: Summarize and present the results in a clear and concise manner.
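The "Evaluate Algorithms" and "Improve Results" steps above can be sketched as a small grid search with cross-validation. To stay runnable with the standard library alone, the sketch uses a tiny k-nearest-neighbour classifier and synthetic two-feature data as stand-ins; it is not the SVM pipeline or the HAR dataset used in this paper, and all names and values are assumptions.

```python
import random
from collections import Counter

def knn_predict(train_x, train_y, point, k):
    """Classify `point` by majority vote among its k nearest training rows."""
    by_distance = sorted(
        range(len(train_x)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(train_x[i], point)),
    )
    votes = Counter(train_y[i] for i in by_distance[:k])
    return votes.most_common(1)[0][0]

def cross_val_accuracy(x, y, k, folds=5):
    """Mean accuracy of k-NN over `folds` interleaved folds."""
    scores = []
    for fold in range(folds):
        test_idx = [i for i in range(len(x)) if i % folds == fold]
        train_idx = [i for i in range(len(x)) if i % folds != fold]
        train_x = [x[i] for i in train_idx]
        train_y = [y[i] for i in train_idx]
        hits = sum(knn_predict(train_x, train_y, x[i], k) == y[i]
                   for i in test_idx)
        scores.append(hits / len(test_idx))
    return sum(scores) / folds

def grid_search(x, y, k_values):
    """Return (best_k, best_score); ties go to the earliest candidate."""
    results = {k: cross_val_accuracy(x, y, k) for k in k_values}
    best_k = max(results, key=results.get)
    return best_k, results[best_k]

# Synthetic data standing in for extracted sensor features (assumption).
rng = random.Random(0)
x, y = [], []
for label, centre in (("sitting", (0.0, 1.0)), ("walking", (1.0, 0.0))):
    for _ in range(30):
        x.append((centre[0] + rng.gauss(0, 0.2), centre[1] + rng.gauss(0, 0.2)))
        y.append(label)

best_k, best_score = grid_search(x, y, k_values=[1, 3, 5, 7])
```

The same loop structure applies to any model and parameter grid: every candidate setting is scored on held-out folds, and the best-scoring setting is kept for the final evaluation.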

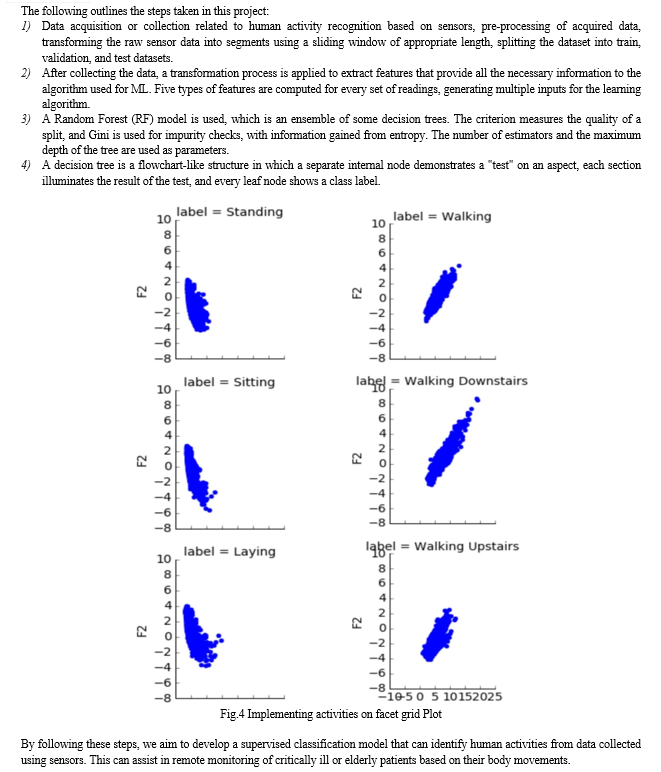

4) Standing: Standing involves staying still, similar to sitting, but there are subtle differences in the sensor data. Specifically, the position of the axes on the graphs changes when switching between sitting and standing. This interchange between the Y and Z axes can serve as a useful indicator for distinguishing between the two activities, despite their similarities.

The testing data revealed distinct patterns for each activity, allowing for accurate classification. While individual characteristics may cause slight variations, the overall shape and pattern of the movement remain consistent, enabling the classifier to distinguish between different activities with high accuracy.
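The Y and Z axis interchange described for sitting and standing can be illustrated with a small check of which axis carries most of the static (gravity) acceleration in a window. This is only a sketch: the sample values are hypothetical, and which axis corresponds to which posture depends on how the device is worn, which is not specified here.

```python
def dominant_gravity_axis(window):
    """Return 'x', 'y' or 'z': the axis whose mean acceleration is largest in magnitude."""
    magnitudes = [abs(sum(axis) / len(axis)) for axis in zip(*window)]
    return "xyz"[magnitudes.index(max(magnitudes))]

# Hypothetical static windows of (x, y, z) accelerometer samples in units of g.
window_a = [(0.02, 0.98, 0.10), (0.01, 0.97, 0.12), (0.03, 0.99, 0.11)]
window_b = [(0.02, 0.15, 0.97), (0.01, 0.14, 0.98), (0.00, 0.16, 0.96)]

axis_a = dominant_gravity_axis(window_a)  # gravity mostly along Y
axis_b = dominant_gravity_axis(window_b)  # gravity mostly along Z
```

A classifier trained on per-axis means can pick up this difference without an explicit rule; the function above only makes the underlying signal visible.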

Conclusion

In conclusion, this research underscores the value of employing smartphones and machine learning for recognizing human activities. The results demonstrate the potential for continued progress in this area and open up possibilities for creating novel applications. Our analysis progressed from data cleaning and processing, through handling missing values and exploratory analysis, to building and evaluating models. The highest accuracy score on the public test set will be determined. This application can assist in identifying human activities based on smartphone sensor data. Moving forward, we aim to investigate the performance of alternative machine learning algorithms. From this experiment, we have learned that even basic machine learning algorithms can yield impressive results with proper parameter tuning. Statistical testing can be used to establish the significance of the results.
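The paper does not name a specific significance test. As one possible choice, the sketch below applies an exact McNemar test, which compares two classifiers evaluated on the same test samples by looking only at the samples where exactly one of them is correct. The function name and the toy predictions are assumptions for illustration.

```python
from math import comb

def mcnemar_exact(y_true, pred_a, pred_b):
    """Two-sided exact McNemar p-value for two classifiers on the same test set."""
    triples = list(zip(y_true, pred_a, pred_b))
    a_only = sum(a == t and b != t for t, a, b in triples)  # only A correct
    b_only = sum(a != t and b == t for t, a, b in triples)  # only B correct
    n = a_only + b_only
    if n == 0:
        return 1.0  # the classifiers never disagree in correctness
    # Binomial tail probability under the null hypothesis p = 0.5.
    tail = sum(comb(n, i) for i in range(min(a_only, b_only) + 1)) / 2 ** n
    return min(1.0, 2 * tail)

# Toy example: model A is always right, model B is wrong on 6 of 10 samples.
y_true = ["walk"] * 10
pred_a = ["walk"] * 10
pred_b = ["sit"] * 6 + ["walk"] * 4
p_value = mcnemar_exact(y_true, pred_a, pred_b)
```

A small p-value indicates that the difference in errors between the two models is unlikely to be due to chance alone; with several models, a correction for multiple comparisons would also be needed.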

Copyright

Copyright © 2024 Rushasri Tholeti, Harsha Tholeti. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET58437

Publish Date : 2024-02-14

ISSN : 2321-9653

Publisher Name : IJRASET
