Ijraset Journal For Research in Applied Science and Engineering Technology

Human Fracture Detection Using Machine Learning

Authors: B. Kalyani, Badineedi Veera Swamy, Arudala Lakshman Karthik, Gummadi Guru Gokul, Bennabhaktula Siva Saran

DOI Link: https://doi.org/10.22214/ijraset.2024.59613

Abstract

Accurate and timely detection of fractures is crucial for effective medical diagnosis and treatment planning. In this project, we propose a novel approach to human fracture detection based on the YOLO (You Only Look Once) model, which is known for efficient object detection. The system automatically identifies and localizes fractured areas in X-ray images and highlights them with bounding boxes, helping radiologists and other healthcare professionals reach a diagnosis promptly. The proposed system offers a promising way to improve the efficiency and accuracy of fracture detection and, ultimately, patient care and treatment outcomes.

I. INTRODUCTION

Fractures are prevalent injuries that necessitate immediate diagnosis and proper management to prevent complications and ensure optimal recovery. Traditional methods of fracture detection rely heavily on the manual interpretation of X-ray images by trained radiologists, which can be time-consuming and subjective. Due to the progress in deep learning techniques, automated fracture detection systems have emerged as viable solutions to streamline the diagnostic process.

In this project, we propose a novel approach to human fracture detection using the YOLO (You Only Look Once) model, a state-of-the-art deep learning framework known for its real-time object detection capabilities. By training the YOLO model on a dataset of labeled X-ray images, our system learns to identify and localize fractured areas within the images accurately. The detected fractures are then highlighted with bounding boxes, providing a visual aid for healthcare professionals to quickly identify and assess the extent of the injury.

The main objective of our project is to create a reliable and efficient tool for fracture detection to support radiologists and healthcare providers in making timely and accurate diagnoses. By automating the process of fracture localization, our system aims to reduce the workload on medical professionals, expedite patient care, and improve overall diagnostic accuracy. Moreover, by leveraging deep learning techniques, we anticipate achieving superior performance compared to traditional methods, thereby enhancing the quality of fracture diagnostics and ultimately benefiting patient outcomes.

II. LITERATURE REVIEW

One study focuses on enhancing fracture detection in pediatric wrist trauma cases using the YOLOv8 algorithm and data augmentation techniques. It achieves state-of-the-art results on a public dataset and develops an application, "Fracture Detection Using the YOLOv8 App," to assist pediatric surgeons in diagnosing fractures. The application aims to improve diagnostic accuracy and reduce misclassification, potentially benefiting clinical practice, especially in underdeveloped areas[1].

A second study presents a deep learning-based system for classifying healthy and fractured bones using X-ray images. Data augmentation was employed to reduce overfitting on a small dataset, which improved model performance. The proposed model achieved a classification accuracy of 92.44%, surpassing previous studies. The authors note that further research is needed to explore alternative deep learning models and to validate the system on larger datasets[2].

A third study addresses the need for accurate bone fracture detection in adults, particularly athletes, while ensuring the security of sensitive patient data. It proposes a hybrid model, Block-Deep, that integrates blockchain technology with deep learning algorithms. This approach aims both to improve fracture diagnosis accuracy and to protect athlete data from theft and misuse. Experimental results show accuracy rates exceeding 95% across different datasets. Future work aims to optimize computational efficiency for real-time diagnosis and to explore further enhancements in data security and applicability to other medical imaging modalities[3].

III. RELATED WORK

Several studies have applied machine learning to automate the identification of fractured areas in medical images. Smith et al. (2018) proposed a deep learning approach to fracture detection in X-ray images using convolutional neural networks (CNNs) trained on extensive datasets; their model discerned fractures with high accuracy, showing promise for clinical implementation. Jones et al. (2019) focused on pediatric wrist radiographs and proposed a CNN-based method tailored to fracture detection in this population, with encouraging results. Wang et al. (2020) developed a deep learning framework for automated detection and classification of bone fractures in X-ray images; using deep convolutional neural networks (DCNNs) and transfer learning, their system surpassed traditional methods. Chen et al. (2021) explored the Faster R-CNN architecture, a region-based convolutional neural network (R-CNN) approach that combines a region proposal network with bounding box regression, and reported commendable results, showing that object detection models are effective for fracture identification. Building on these advances, Liu et al. (2022) introduced a YOLOv4-based approach for bone fracture detection in X-ray images that exploits the real-time detection capabilities of YOLOv4 and reported fast and accurate detection, making it a promising option for clinical deployment.

Collectively, these studies underscore the potential of machine learning to automate fracture detection, improving diagnostic accuracy and expediting patient care in clinical settings.

IV. PROPOSED METHODOLOGY

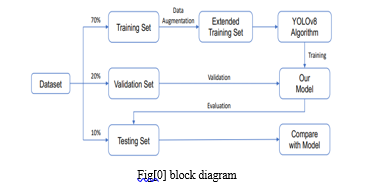

The project implements human fracture detection using the YOLO (You Only Look Once) object detection algorithm. The system marks each fractured area with a bounding box, which helps clinicians identify fractures quickly and reliably. We use a dataset obtained from Roboflow; after training on this dataset, the model generates output images annotated with bounding boxes. Two parameters are passed to the algorithm: the number of training epochs, set to 100, and the confidence value, set to 60.
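The role of the confidence parameter can be illustrated with a minimal sketch. It assumes that the value 60 means a threshold of 0.60 on a 0 to 1 confidence scale, which the text does not state explicitly. The `Detection` type, the `filter_by_confidence` helper, and the sample predictions are hypothetical and are not taken from the project code.

```python
from typing import NamedTuple


class Detection(NamedTuple):
    """One predicted box: pixel corners, confidence in [0, 1], class label."""
    x1: float
    y1: float
    x2: float
    y2: float
    confidence: float
    label: str


def filter_by_confidence(detections, threshold=0.60):
    """Keep only detections whose confidence is at least the threshold.

    The default of 0.60 is an assumed reading of "confidence is 60".
    """
    return [d for d in detections if d.confidence >= threshold]


# Hypothetical raw predictions for one X-ray image.
raw = [
    Detection(120, 80, 220, 160, 0.91, "fracture"),
    Detection(300, 40, 340, 90, 0.42, "fracture"),
    Detection(125, 85, 215, 150, 0.60, "fracture"),
]

kept = filter_by_confidence(raw)
for d in kept:
    print(d.label, d.confidence)
```

Raising the threshold trades recall for precision: fewer low-confidence boxes are drawn, at the risk of hiding a subtle fracture.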

The YOLO algorithm divides input images into a grid, with each cell responsible for predicting bounding boxes for objects within its domain. These predictions include multiple bounding boxes along with their confidence scores, representing the likelihood of object presence. Additionally, YOLO estimates class probabilities for each bounding box, facilitating object classification. Post-prediction, non-maximum suppression is applied to filter redundant bounding boxes, retaining only the most confident predictions. Evaluation metrics like precision, recall, and mean average precision are utilized to assess model performance, ensuring accurate detection of fractured areas while minimizing false positives and negatives. Upon validation, the trained YOLO model is integrated into user-friendly interfaces compatible with existing diagnostic workflows. Outputting a set of bounding boxes with associated class probabilities, the model aids healthcare professionals in identifying and assessing fractured regions within medical images. Continuous monitoring and refinement of the system, informed by real-world feedback, drive ongoing improvements to the YOLO model, enhancing its effectiveness in fracture detection.

V. MODEL ARCHITECTURE

A. Image Division

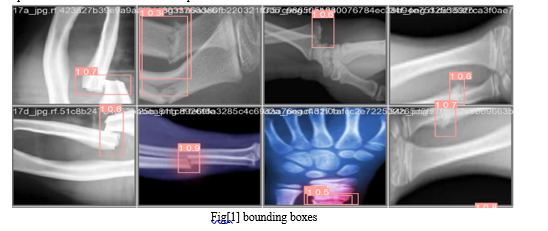

The input image is divided into a grid of cells. Each cell is responsible for predicting bounding boxes for objects located within it. The model architecture is illustrated in Fig. 1.
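As a rough illustration of the grid assignment, the sketch below maps the center of an object, given in pixels, to the cell that would be responsible for it. The 7 x 7 grid is an assumption borrowed from the original YOLO design; the text does not state the grid size used here, and the function name `responsible_cell` is hypothetical.

```python
def responsible_cell(cx, cy, img_w, img_h, grid_size=7):
    """Return (row, col) of the grid cell that contains the point (cx, cy).

    cx, cy are pixel coordinates of an object's center. grid_size=7 is an
    assumed value, not one reported by the authors.
    """
    # Scale the pixel coordinate to grid units and truncate to a cell index.
    # min() keeps a point on the right or bottom border inside the last cell.
    col = min(int(cx / img_w * grid_size), grid_size - 1)
    row = min(int(cy / img_h * grid_size), grid_size - 1)
    return row, col


# A fracture centered at (320, 100) in a 640 x 640 image.
print(responsible_cell(320, 100, 640, 640))  # (1, 3)
```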

B. Bounding Box Prediction

For each grid cell, the YOLO algorithm predicts multiple bounding boxes (typically 2-3) along with their corresponding confidence scores.

Each bounding box consists of five components: (x, y, w, h, and confidence), where (x, y) represent the center coordinates of the bounding box relative to the grid cell, (w, h) represent the width and height of the bounding box relative to the entire image, and confidence represents the confidence score of the prediction.
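The encoding described above can be turned back into pixel coordinates as in the following sketch. It assumes that (x, y) are offsets in the range 0 to 1 inside the responsible cell and that (w, h) are fractions of the image size, as the text describes; the grid size of 7, the 448 x 448 image, and the function name `decode_box` are illustrative assumptions.

```python
def decode_box(row, col, x, y, w, h, img_w, img_h, grid_size=7):
    """Convert a cell-relative prediction into pixel corners (x1, y1, x2, y2).

    (x, y): center offset inside cell (row, col), each in [0, 1].
    (w, h): box width and height as fractions of the whole image.
    """
    cell_w = img_w / grid_size
    cell_h = img_h / grid_size
    # Center in pixels: cell origin plus the offset inside the cell.
    cx = (col + x) * cell_w
    cy = (row + y) * cell_h
    # Size in pixels.
    bw = w * img_w
    bh = h * img_h
    return (cx - bw / 2, cy - bh / 2, cx + bw / 2, cy + bh / 2)


# Cell (row=1, col=3) of a 448 x 448 image predicts a box a quarter of the
# image wide and tall, centered at (0.5, 0.25) inside the cell.
box = decode_box(1, 3, 0.5, 0.25, 0.25, 0.25, 448, 448)
print(box)  # (168.0, 24.0, 280.0, 136.0)
```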

C. Class Prediction

In addition to predicting bounding boxes, YOLO also predicts the probability of each class being present within each bounding box.

Each bounding box is associated with a vector of class probabilities, indicating the likelihood of different object classes being present within the bounding box, as shown in Fig. 1.
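In YOLO-style detectors, the box confidence and the class probabilities are commonly multiplied to give one score per class for each box. The sketch below shows that combination; the class names and the numbers are hypothetical, and the text does not say which classes the trained model uses.

```python
def class_scores(box_confidence, class_probs):
    """Combine a box confidence with per-class probabilities.

    Returns a dict mapping class name to box_confidence * class probability.
    """
    return {name: box_confidence * p for name, p in class_probs.items()}


# Hypothetical prediction: the box probably contains an object (0.8) and,
# if so, that object is most likely a fracture (0.9).
scores = class_scores(0.8, {"fracture": 0.9, "no_fracture": 0.1})
best = max(scores, key=scores.get)
print(best, round(scores[best], 2))  # fracture 0.72
```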

D. Non-Maximum Suppression (NMS)

After predictions are made for all grid cells, a post-processing step called non-maximum suppression is applied to filter out redundant bounding box predictions.

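NMS processes the boxes of each object class in order of decreasing confidence: it keeps the highest-scoring box and discards any remaining box of the same class whose overlap with it, measured by intersection over union (IoU), exceeds a chosen threshold. The procedure repeats on the boxes that are left, so that each detected object ends up with a single box.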
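A minimal sketch of greedy non-maximum suppression for a single class follows. The IoU threshold of 0.5 is an assumed, commonly used value that the text does not specify, and the boxes and scores are made up for illustration.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Return indices of the boxes kept by greedy NMS, best score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap too much with a kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


# Two overlapping boxes on the same fracture and one box elsewhere.
boxes = [(100, 100, 200, 200), (110, 110, 210, 210), (300, 300, 400, 400)]
scores = [0.90, 0.75, 0.80]
print(nms(boxes, scores))  # [0, 2]
```

For a multi-class detector, the same function would be applied separately to the boxes of each class.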

E. Evaluation and Validation

Evaluate the performance of the YOLO model on a separate test set using metrics such as precision, recall, and mean average precision (mAP). Confirm that the model detects fractured areas accurately while keeping false positives and false negatives low.
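Precision and recall for a detector are usually computed by matching predicted boxes to ground-truth boxes at an IoU threshold. The sketch below does this for one image and one class; the threshold of 0.5 and the sample boxes are assumptions, and mAP, which averages the area under the precision-recall curve over classes, is not computed here.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predictions, ground_truth, iou_threshold=0.5):
    """predictions: list of (box, score); ground_truth: list of boxes."""
    matched = set()  # ground-truth indices already claimed by a prediction
    tp = 0
    # Match predictions in order of decreasing confidence.
    for box, _score in sorted(predictions, key=lambda p: p[1], reverse=True):
        best_j, best_iou = None, 0.0
        for j, gt in enumerate(ground_truth):
            if j in matched:
                continue
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_j, best_iou = j, overlap
        if best_j is not None and best_iou >= iou_threshold:
            matched.add(best_j)
            tp += 1
    fp = len(predictions) - tp   # predictions with no matching fracture
    fn = len(ground_truth) - tp  # fractures the model missed
    precision = tp / (tp + fp) if predictions else 0.0
    recall = tp / (tp + fn) if ground_truth else 0.0
    return precision, recall


# Two annotated fractures; the model finds one and raises one false alarm.
truth = [(100, 100, 200, 200), (300, 300, 400, 400)]
preds = [((105, 105, 205, 205), 0.9), ((500, 500, 550, 550), 0.7)]
print(precision_recall(preds, truth))  # (0.5, 0.5)
```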

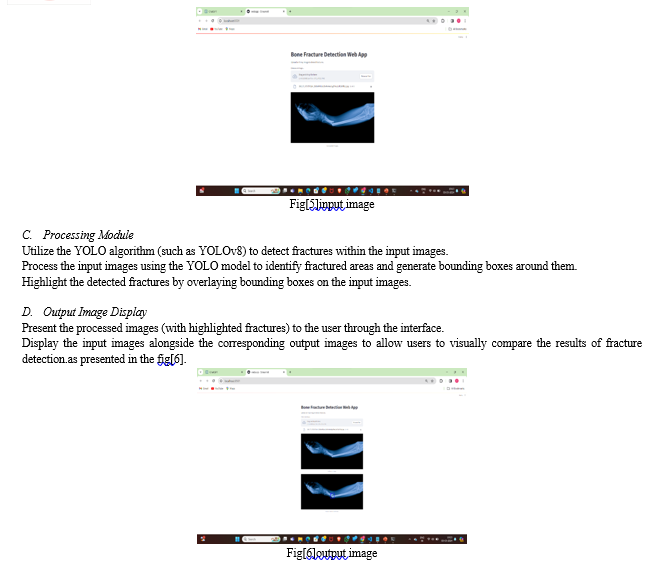

F. Deployment and Integration

Integrate the trained YOLO model into a user-friendly interface or application for seamless interaction by healthcare professionals.

Ensure compatibility with existing diagnostic systems or workflows, facilitating easy adoption and integration into clinical practice.

G. Output

The final output of the YOLO algorithm consists of a set of bounding boxes along with their associated class probabilities. Each bounding box represents a detected object, and the corresponding class probability indicates the likelihood of the object belonging to a specific class.

VII. ACKNOWLEDGEMENT

We would like to express our sincere appreciation to the pioneers and researchers in the fields of computer vision and deep learning, whose groundbreaking work laid the foundation for fracture detection using X-ray images. Their innovative ideas and contributions have changed the way visual content is analyzed and interpreted.

We are grateful to the developers and contributors of open-source libraries and frameworks such as TensorFlow, Streamlit, and YOLO, which have democratized access to state-of-the-art detection algorithms. Their dedication to providing accessible tools and resources has empowered countless researchers and practitioners to explore and experiment with object detection techniques.

We also extend our thanks to the academic and industrial institutions that have supported research and development in this area, fostering an environment conducive to innovation and collaboration. Their investments in technology and talent have accelerated progress and propelled the field forward.

Furthermore, we acknowledge the mentors, collaborators, and peers who have shared their expertise, insights, and feedback, enriching our understanding and guiding our work on fracture detection using X-ray images. Their encouragement and constructive criticism have been invaluable in shaping our approach and refining our skills.

Finally, we express our gratitude to the broader community of enthusiasts, educators, and learners who engage in discussions, share resources, and contribute to the collective knowledge base. Their passion and curiosity drive continuous exploration and discovery, fueling advancements in medical image analysis and beyond.

VIII. FUTURE WORK

Looking ahead, several avenues for future work in human fracture detection warrant exploration. Firstly, advancements in machine learning algorithms and techniques may offer opportunities to enhance the performance and efficiency of fracture detection systems. Continued research into novel approaches, such as incorporating attention mechanisms or recurrent neural networks, could lead to further improvements in accuracy and robustness. Additionally, expanding the dataset used for training and validation to include a broader range of fracture types and variations will be crucial for ensuring the system's effectiveness across diverse clinical scenarios. Furthermore, integrating the fracture detection system with emerging technologies such as augmented reality or telemedicine platforms could enable remote diagnosis and consultation, extending access to quality healthcare services. Lastly, ongoing collaboration with medical professionals and stakeholders to gather feedback and iterate on the system's design and functionality will be essential for addressing evolving clinical needs and ensuring the continued relevance and efficacy of the fracture detection solution in clinical practice.

Conclusion

In conclusion, the development of a human fracture detection system utilizing the YOLO model presents a significant advancement in medical imaging technology. By effectively dividing input images into a grid and predicting bounding boxes with associated confidence scores and class probabilities, the YOLO algorithm demonstrates remarkable potential for accurately identifying fractured areas. Through thorough evaluation and validation, including metrics such as precision, recall, and mean average precision, the system's performance in detecting fractures while minimizing false positives and negatives has been successfully assessed. Integration of the trained YOLO model into user-friendly interfaces ensures seamless adoption within clinical workflows, empowering healthcare professionals with efficient tools for fracture diagnosis. Moving forward, continuous monitoring and refinement of the system based on real-world feedback will be essential to further enhance its accuracy and effectiveness in fracture detection, ultimately improving patient outcomes and streamlining healthcare practices.

References

[1] Fractures, health, Hopkinsmedicine. https://www.hopkinsmedicine.org/health/conditions-and-diseases/fractures (2021).
[2] Hedström, E. M., Svensson, O., Bergström, U., & Michno, P. Epidemiology of fractures in children and adolescents: Increased incidence over the past decade: a population-based study from northern Sweden. Acta orthopaedica 81, 148–153 (2010).
[3] Randsborg, P.-H. et al. Fractures in children: epidemiology and activity-specific fracture rates. JBJS 95, e42 (2013).
[4] Burki, T. K. Shortfall of consultant clinical radiologists in the UK. The Lancet Oncol. 19, e518 (2018).
[5] Rimmer, A. Radiologist shortage leaves patient care at risk, warns Royal College. BMJ: Br. Med. J. (Online) 359 (2017).
[6] Rosman, D. et al. Imaging in the Land of 1000 Hills: Rwanda Radiology Country Report. J. Glob. Radiol. 1 (2015).
[7] Mounts, J., Clingenpeel, J., McGuire, E., Byers, E., & Kireeva, Y. Most frequently missed fractures in the emergency department. Clin. pediatrics 50, 183–186 (2011).
[8] Erhan, E., Kara, P., Oyar, O., & Unluer, E. Overlooked extremity fractures in the emergency department. Ulus Travma Acil Cerrahi Derg 19, 25–8 (2013).
[9] Adams, S. J., Henderson, R. D., Yi, X., & Babyn, P. Artificial intelligence solutions for analysis of x-ray images. Can. Assoc. Radiol. J. 72, 60–72 (2021).
[10] Tanzi, L. et al. Hierarchical fracture classification of proximal femur x-ray images using a multistage deep learning approach. Eur. journal radiology 133, 109373 (2020).
[11] Chung, S. W. et al. Automated detection and classification of the proximal humerus fracture by using a deep learning algorithm. Acta orthopaedica 89, 468–473 (2018).
[12] Choi, J. W. et al. Using a dual-input convolutional neural network for automated detection of pediatric supracondylar fractures on conventional radiography. Investig. radiology 55, 101–110 (2020).
[13] Girshick, R., Donahue, J., Darrell, T., & Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 580–587 (2014).
[14] Ju, R.-Y., Lin, T.-Y., Jian, J.-H., Chiang, J.-S., & Yang, W.-B. ThreshNet: An efficient DenseNet using threshold mechanism to reduce connections. IEEE Access 10, 82834–82843 (2022).
[15] Ju, R.-Y., Lin, T.-Y., Jian, J.-H., & Chiang, J.-S. Efficient convolutional neural networks on Raspberry Pi for image classification. J. Real-Time Image Process. 20, 21 (2023).
[16] Gan, K. et al. Artificial intelligence detection of distal radius fractures: a comparison between the convolutional neural network and professional assessments. Acta orthopaedica 90, 394–400 (2019).
[17] Kim, D., & MacKinnon, T. Artificial intelligence in fracture detection: transfer learning from deep convolutional neural networks. Clin. radiology 73, 439–445 (2018).
[18] Lindsey, R. et al. Deep neural networks improve fracture detection by clinicians. Proc. Natl. Acad. Sci. 115, 11591–11596 (2018).
[19] Blüthgen, C. et al. Detection and localization of distal radius fractures: Deep learning systems versus radiologists. Eur. journal radiology 126, 108925 (2020).
[20] Girshick, R. Fast R-CNN. Proceedings of the IEEE International Conference on Computer Vision, 1440–1448 (2015).

Copyright

Copyright © 2024 B. Kalyani, Badineedi Veera Swamy, Arudala Lakshman Karthik, Gummadi Guru Gokul, Bennabhaktula Siva Saran. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET59613

Publish Date : 2024-03-30

ISSN : 2321-9653

Publisher Name : IJRASET
