Ijraset Journal For Research in Applied Science and Engineering Technology

Human Motion Recognition with Smartphone Data

Authors: Akshara. G, MS Sheena Mohammed

DOI Link: https://doi.org/10.22214/ijraset.2024.64279

Abstract

Human Motion (Activity) Recognition (HAR) is significant because it provides insight into human activity patterns and behaviors, enabling the development of personalized and context-aware healthcare solutions. Using smartphones in HAR projects facilitates the collection of real-world data over extended periods, leading to more ecologically valid and reliable activity recognition. This project proposes a HAR system that leverages Random Forests and Neural Networks to enhance accuracy in wearable and mobile technology. Utilizing the UHAR dataset from Kaggle, which includes sensor data for six activities (walking, walking upstairs, walking downstairs, sitting, standing, and laying), the system can accurately classify these activities. The benefits of this project include promoting health and fitness, assessing fall risks, encouraging ergonomic improvements, and analyzing sleep quality, thereby providing insight into various aspects of health and well-being. Smartphone-based HAR with neural networks and the random forest algorithm can be valuable in healthcare applications such as monitoring patient activity levels, rehabilitation programs, and fall detection in elderly care.

I. INTRODUCTION

Human Activity Recognition (HAR) is an expanding field that uses sensors and machine learning to identify human activities. The rise of smartphones, equipped with sensors like accelerometers, gyroscopes, and GPS, has made HAR a promising area of research. These sensors collect data that can be analyzed to classify different activities. HAR has applications in healthcare, fitness, and entertainment. It can monitor chronic disease patients, track physical activities, and provide personalized feedback and coaching. Smartphones are ideal for HAR because they are widely available, have multiple sensors, and possess strong processing capabilities. However, HAR using smartphones faces challenges such as sensor variability, the need for large labeled datasets, and privacy concerns. Recent advances in wireless, wearable, and smartphone technologies have enhanced healthcare applications by enabling the collection and analysis of patient data. This continuous monitoring improves outcomes, especially for disabled and elderly patients, and has led to new intelligent healthcare applications. The development of HAR systems focuses on data quality and energy efficiency, using hardware-friendly computation techniques. Various machine learning algorithms, including decision trees, random forests, SVMs, and neural networks, have been used for HAR. Deep learning algorithms, such as CNNs and RNNs, have shown high accuracy in recognizing activities. Overall, HAR using smartphones is a growing field with significant applications. While deep learning has improved the accuracy of HAR systems, challenges such as sensor variability, the need for labeled data, and privacy must be addressed to advance this technology.[1][2]

II. METHODOLOGY

The Human Activity Recognition (HAR) project is designed to create a detailed system that tracks and identifies a variety of daily activities. Our goal is to develop a reliable and precise system that offers users valuable insights into their physical activities, location-based movements, and overall health metrics. This system is composed of several key modules, each focusing on different aspects of activity recognition and user support.

A. Module 1: Activity Recognition

This module focuses on identifying physical activities such as cycling, running, walking, and standing. Users start the process by tapping the app icon or start button, which activates the phone's sensors, such as the accelerometer and gyroscope. These sensors continuously monitor the user's movements. The collected data is then analyzed using machine learning algorithms to classify the activities being performed. This module is the core of the HAR system, providing real-time activity monitoring.[5]
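The step between raw sensor readings and a classifier is usually a windowing and feature-extraction stage. The paper does not specify this stage, so the following is only a minimal sketch under assumed choices: the window size, the step, the three summary features, and the sample readings are all hypothetical.

```python
import math
from statistics import mean, pstdev

def sliding_windows(samples, size, step):
    """Yield consecutive windows of `size` samples, advancing by `step`."""
    for start in range(0, len(samples) - size + 1, step):
        yield samples[start:start + size]

def window_features(window):
    """Summarise one window of (x, y, z) accelerometer readings."""
    magnitudes = [math.sqrt(x * x + y * y + z * z) for x, y, z in window]
    return {
        "mean_mag": mean(magnitudes),
        "std_mag": pstdev(magnitudes),
        "max_mag": max(magnitudes),
    }

# Hypothetical readings in m/s^2: a phone at rest, then a more vigorous movement.
readings = [(0.0, 0.0, 9.8)] * 4 + [(3.0, 4.0, 12.0)] * 4
features = [window_features(w) for w in sliding_windows(readings, size=4, step=4)]
```

Each dictionary in `features` would then be one input row for a classifier such as a Random Forest or a neural network.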

B. Module 2: Location-Based Activity Recognition

Building on the basic activity recognition, this module integrates GPS sensors to track the user’s location and correlate it with their physical activities.

By combining movement data with location information, the system can provide context-aware activity recognition. For instance, it can differentiate between running in a park and walking in a shopping mall. This module improves the precision of activity recognition and is especially useful for features like fall detection.

C. Module 3: Fall Recognition

The fall recognition module is designed to detect falls and trigger emergency responses. It uses data from accelerometers, gyroscopes, and GPS to monitor the user's movements continuously. If a fall is detected, the system immediately raises an alarm. It then closely observes the user's subsequent activities; if no movement is detected after the fall, an emergency message is sent to a pre-designated guardian, providing details of the incident and the user's location. This module is crucial for ensuring the safety of elderly or disabled users who are more prone to falls.
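The decision flow described above (detect an impact, then watch for movement before alerting a guardian) can be sketched as a simple rule. This is an illustrative threshold heuristic, not the detection method used in the paper; every threshold, the function names, and the three outcome labels are assumptions.

```python
import math

GRAVITY = 9.81            # m/s^2
IMPACT_THRESHOLD = 25.0   # assumed magnitude that suggests an impact
STILL_TOLERANCE = 1.0     # assumed max deviation from gravity while lying still
STILL_SAMPLES = 5         # assumed number of still samples that triggers an alert

def magnitude(sample):
    """Euclidean norm of one (x, y, z) accelerometer sample."""
    x, y, z = sample
    return math.sqrt(x * x + y * y + z * z)

def classify_fall(samples):
    """Return 'no_fall', 'fall_recovered', or 'fall_emergency'.

    Only the first impact is examined. If fewer than STILL_SAMPLES readings
    follow the impact, the result is 'fall_recovered' (no emergency is raised
    on incomplete evidence).
    """
    for i, sample in enumerate(samples):
        if magnitude(sample) >= IMPACT_THRESHOLD:
            after = samples[i + 1:i + 1 + STILL_SAMPLES]
            still = len(after) == STILL_SAMPLES and all(
                abs(magnitude(s) - GRAVITY) <= STILL_TOLERANCE for s in after
            )
            return "fall_emergency" if still else "fall_recovered"
    return "no_fall"
```

In a full system, a `fall_emergency` result would be the point at which the message with the incident details and GPS location is sent to the guardian.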

D. Module 4: Physical Activity Chart

This module offers users a visual summary of their daily physical activities. The app compiles data on the types and durations of activities performed throughout the day, presenting it in an easy-to-read chart format. Users can review their activity patterns, set goals, and track their progress over time. This feature encourages a healthy lifestyle by providing clear feedback on physical activity levels.
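As a rough illustration of how such a summary could be compiled, the sketch below totals the time spent per activity from a sequence of per-window predictions and renders a plain-text bar chart. The function names, the fixed window length, and the text rendering are assumptions made for this example; the app's actual charting is not described in the paper.

```python
from collections import defaultdict

def summarize_durations(predictions, window_seconds):
    """Total seconds per activity, given one predicted label per window."""
    totals = defaultdict(float)
    for label in predictions:
        totals[label] += window_seconds
    return dict(totals)

def text_chart(totals, width=20):
    """Render one bar per activity, scaled to the longest duration."""
    longest = max(totals.values())
    lines = []
    for label, seconds in sorted(totals.items(), key=lambda kv: -kv[1]):
        bar = "#" * round(width * seconds / longest)
        lines.append(f"{label:<10}{bar} {seconds / 60:.1f} min")
    return "\n".join(lines)

# Hypothetical day fragment: four one-minute windows.
totals = summarize_durations(["walking", "walking", "sitting", "walking"], 60)
chart = text_chart(totals)
```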

E. Module 5: Calorie Burnt

The calorie burnt module calculates the number of calories burned during various activities. Using data from activity recognition and user-specific information like weight and age, the app estimates calories burned for each activity. It also provides daily and weekly summaries to help users understand their energy expenditure. Additionally, the app offers personalized recommendations on the number of calories to burn to achieve fitness goals, promoting a balanced and healthy lifestyle.
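The paper does not give its calorie formula. One common approximation, shown here purely as an assumption, multiplies a metabolic equivalent (MET) value by body weight and duration: kcal ≈ MET × weight (kg) × time (h). The MET values below are illustrative placeholders, and this simple form uses weight only, not age.

```python
# Illustrative MET values (assumed for this sketch, not taken from the paper).
MET = {
    "sitting": 1.3,
    "standing": 1.8,
    "walking": 3.5,
    "cycling": 7.5,
    "running": 9.8,
}

def calories_burned(activity, weight_kg, minutes):
    """Estimate kcal as MET * weight in kg * duration in hours."""
    return MET[activity] * weight_kg * (minutes / 60.0)

def daily_summary(log, weight_kg):
    """Sum the estimate over a list of (activity, minutes) entries."""
    return sum(calories_burned(activity, weight_kg, minutes) for activity, minutes in log)

# Hypothetical log for a 70 kg user.
total_kcal = daily_summary([("walking", 60), ("running", 30)], weight_kg=70)
```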

F. Module 6: Medical Reminder

This module helps users manage their medication schedules. Users can input their prescriptions, including medication names, dosages, and timings, into the app. The system then generates reminders to ensure that users take their medications at the right time. This feature is particularly useful for individuals with chronic conditions who need regular medication, aiding in better adherence and improved health outcomes.

G. Module 7: Distance Traveled

This module tracks the distance users travel by running, cycling, or walking. Using GPS data and activity recognition algorithms, the app calculates the total distance covered for each activity type. Users can view their daily, weekly, and monthly distance metrics, allowing them to monitor their physical activity levels and set distance-based goals. This feature supports users in maintaining an active lifestyle and achieving their fitness objectives.
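One way to combine GPS fixes with recognized activities is to sum the great-circle distance between consecutive fixes and attribute each segment to the activity recognized at its start. The sketch below uses the haversine formula; the track format, the function names, and the attribution rule are assumptions for illustration.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres

def haversine_m(p1, p2):
    """Great-circle distance in metres between two (lat, lon) points in degrees."""
    lat1, lon1 = map(math.radians, p1)
    lat2, lon2 = map(math.radians, p2)
    dlat, dlon = lat2 - lat1, lon2 - lon1
    a = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

def distance_by_activity(track):
    """Sum segment lengths per activity.

    `track` is a time-ordered list of (lat, lon, activity) fixes; each segment
    is credited to the activity label of its starting fix.
    """
    totals = {}
    for (lat1, lon1, activity), (lat2, lon2, _) in zip(track, track[1:]):
        totals[activity] = totals.get(activity, 0.0) + haversine_m((lat1, lon1), (lat2, lon2))
    return totals
```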

H. Implementation and Integration

The implementation of these modules will be done sequentially, with each module undergoing rigorous testing and validation before being integrated into the overall system. The system leverages advanced sensors and machine learning algorithms to ensure accurate and reliable activity recognition. Data privacy and security are prioritized, and the system will incorporate robust encryption and data handling protocols to protect user information.

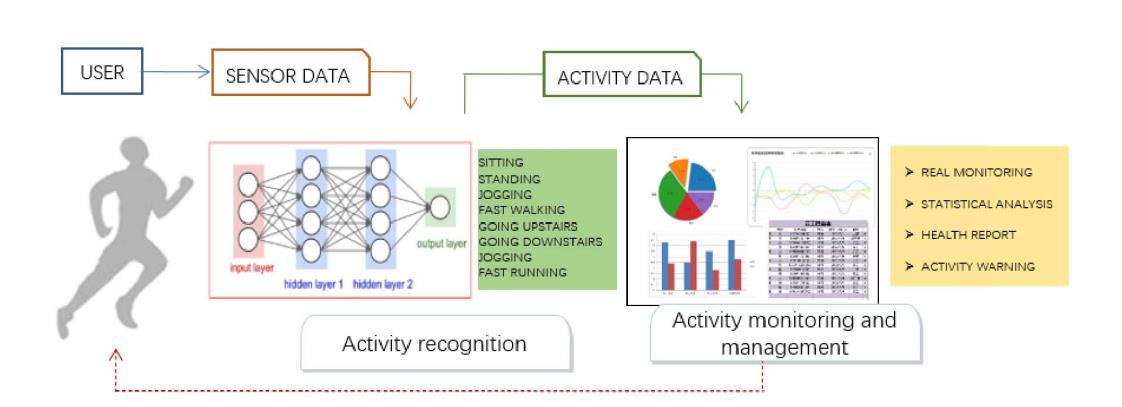

Fig: 2.1 Proposed methodology of Human Activity Recognition

The system architecture consists of three main stages: sensor data collection from the user, activity recognition using a neural network, and activity monitoring and management. The sensor data is processed to classify activities such as sitting and running, which are then used for real-time monitoring, statistical analysis, health reporting, and activity warnings.

III. RESULT

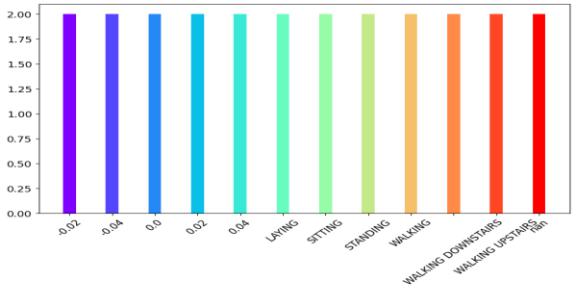

A. Activity Frequency in the Dataset

The data collected showed a balanced distribution of various activities such as laying, sitting, standing, walking, walking downstairs, and walking upstairs. Each activity was equally represented, ensuring that our machine learning models were not biased towards any specific activity.
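A balance check of this kind can be reproduced on any label column with a few lines of code. The sketch below is a generic check under assumed names and an assumed tolerance; the label list is synthetic and is not the dataset used in this study.

```python
from collections import Counter

def class_shares(labels):
    """Fraction of samples belonging to each class."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()}

def is_balanced(labels, tolerance=0.05):
    """True if every class share is within `tolerance` of a uniform share."""
    shares = class_shares(labels)
    expected = 1 / len(shares)
    return all(abs(share - expected) <= tolerance for share in shares.values())

# Synthetic labels using the six activity names from the text.
activities = ["laying", "sitting", "standing", "walking",
              "walking downstairs", "walking upstairs"]
labels = activities * 50
balanced = is_balanced(labels)
```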

Fig: 3.1 Activity Frequency in the Dataset

A bar chart titled "Activity" visualizes the number of samples recorded for each activity, including walking, standing, sitting, and laying.

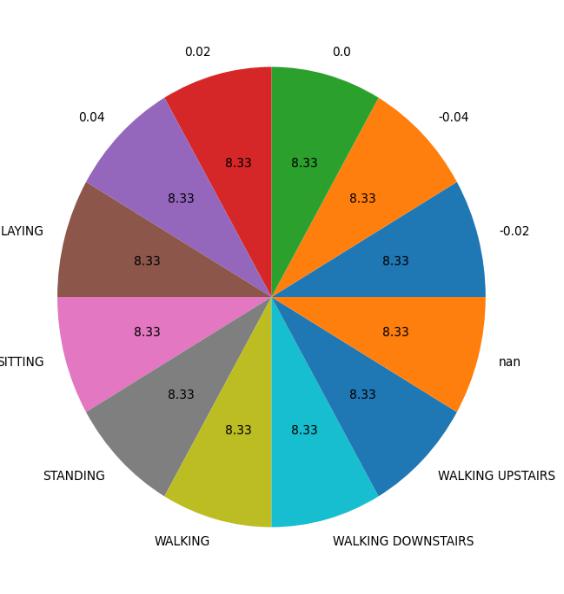

B. Activity Distribution

A pie chart of the dataset distribution confirmed that each activity type constituted 8.33% of the total data. This equal representation is crucial for developing accurate and reliable activity recognition models.

Fig: 3.2 Activity Distribution in the Dataset

This pie chart illustrates a balanced distribution, with each activity receiving an equal portion.

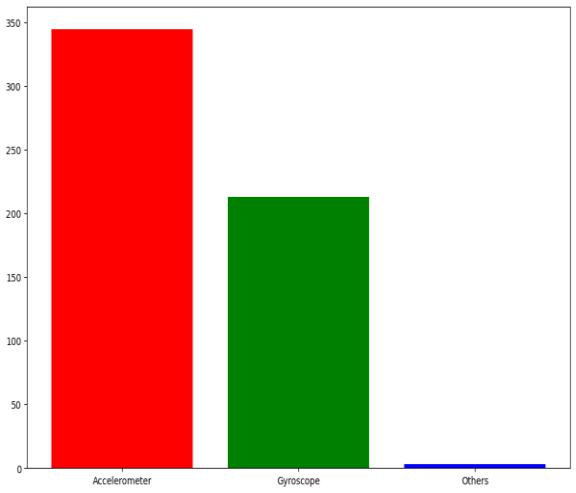

C. Sensor Accuracy

We compared the accuracy of different sensors used in the study. The accelerometer emerged as the most accurate, followed by the gyroscope. Other sensors contributed minimally to the overall accuracy, underscoring the importance of the accelerometer and gyroscope in recognizing human activities.

Fig: 3.3 Accuracy of Sensors

The bar graph shows the accuracy of sensors such as the accelerometer, the gyroscope, and others.

D. Sensor Data Over Time

A graph illustrating sensor data over time for multiple subjects revealed distinct patterns for each individual. These unique trends highlight the potential for personalizing activity recognition systems based on individual characteristics.

Fig: 3.4 Accuracy graphs of sensors

The Graph shows the Accuracy of sensors such as Accelerometer, Gyroscope and others.

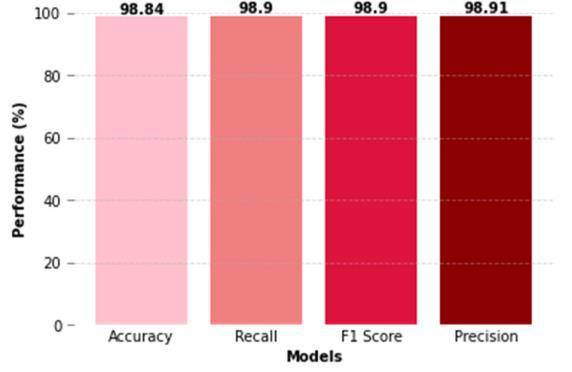

E. Evaluation Metrics

We evaluated the performance of our models using metrics such as accuracy, recall, F1 score, and precision. Our models demonstrated strong performance across all metrics, indicating their effectiveness in accurately identifying different activities from the sensor data.
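For reference, the four metrics can be computed directly from true and predicted labels. The sketch below uses macro averaging over classes, which is an assumption since the paper does not state its averaging scheme; the function name and the sample labels are hypothetical.

```python
def evaluate(y_true, y_pred):
    """Accuracy plus macro-averaged precision, recall, and F1 score."""
    pairs = list(zip(y_true, y_pred))
    labels = sorted(set(y_true) | set(y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)

    precisions, recalls, f1s = [], [], []
    for label in labels:
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)

    n = len(labels)
    return {
        "accuracy": accuracy,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }

# Hypothetical predictions: one sitting window is mistaken for walking.
scores = evaluate(["sitting", "sitting", "walking", "walking"],
                  ["sitting", "walking", "walking", "walking"])
```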

Fig: 3.5 Evaluation metrics: accuracy, recall, F1 score, and precision values

A line graph shows the values of the four evaluation metrics (accuracy, recall, F1 score, and precision) on a scale of 0 to 100.

IV. DISCUSSION

The combination of Artificial Neural Networks (ANN), Neural Networks, and Random Forest (RF) classifiers has shown great promise in Human Activity Recognition (HAR) using Inertial Measurement Unit (IMU) data. ANNs and Neural Networks effectively manage the high dimensionality and complexity of sensor data by automatically learning hierarchical representations, reducing the need for manual feature engineering. This enhances the overall performance of HAR systems by allowing these networks to extract meaningful patterns from raw data.

Random Forest classifiers complement ANNs and Neural Networks by handling noisy and incomplete data through averaging the predictions of multiple decision trees, which smooths out inconsistencies and improves classification accuracy. This synergy leverages the strengths of all methods: ANNs and Neural Networks for complex feature extraction and RFs for robustness against data noise.
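The averaging step can be illustrated in isolation. The sketch below shows only how per-tree class probabilities are combined, not how the trees are trained; the function name and the probability values are hypothetical.

```python
def forest_predict(tree_probabilities):
    """Average per-tree class probabilities and pick the most likely class.

    `tree_probabilities` is a list with one {class: probability} dict per tree;
    all dicts are assumed to share the same classes.
    """
    n_trees = len(tree_probabilities)
    classes = tree_probabilities[0].keys()
    averaged = {
        c: sum(tree[c] for tree in tree_probabilities) / n_trees for c in classes
    }
    return max(averaged, key=averaged.get), averaged

# Hypothetical outputs of three trees for one sensor window.
votes = [
    {"walking": 0.9, "standing": 0.1},
    {"walking": 0.7, "standing": 0.3},
    {"walking": 0.2, "standing": 0.8},  # one tree misled by a noisy window
]
label, averaged = forest_predict(votes)
```

Here a single tree that disagrees because of noise is outweighed by the other two, which is the smoothing effect described above.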

Additionally, RFs provide clear interpretability, helping to identify the most important features for different activities. This balanced approach enhances both the accuracy and transparency of HAR systems.[1][8]

Conclusion

The ability to accurately recognize and classify human activities is a crucial aspect of various healthcare applications, including monitoring patient activity levels, rehabilitation programs, and fall detection for elderly care. Human Activity Recognition (HAR) has emerged as a promising technology to address these needs. By leveraging machine learning algorithms such as Random Forest and Neural Networks, HAR can provide accurate and reliable activity recognition, enabling healthcare professionals to make informed decisions and improve patient outcomes. In addition to its applications in healthcare, HAR has the potential to revolutionize other industries as well. For instance, in the field of sports, HAR can be used to analyze athlete performance, detect patterns, and provide personalized training programs. In the context of smart homes and buildings, HAR can be used to optimize energy consumption, improve security, and enhance the overall living experience. With the increasing availability of wearable devices and the proliferation of IoT sensors, the potential use cases for HAR are vast and varied. As the technology continues to evolve, we can expect to see HAR becoming an integral part of our daily lives, providing valuable insights and enabling us to make informed decisions in real-time.[4][7]

References

[1] F. Zhou, R. Wang, H. Su and S. Xu, "A Human Activity Recognition Model Based on Wearable Sensor," 2022 9th International Conference on Digital Home (ICDH), Guangzhou, China, 2022, pp. 169-174, doi: 10.1109/ICDH57206.2022.00033.

[2] E. Bulbul, A. Cetin and I. A. Dogru, "Human Activity Recognition Using Smartphones," 2018 2nd International Symposium on Multidisciplinary Studies and Innovative Technologies (ISMSIT), Ankara, Turkey, 2018, pp. 1-6, doi: 10.1109/ISMSIT.2018.8567275.

[3] V. Radhika, C. R. Prasad and A. Chakradhar, "Smartphone-Based Human Activities Recognition System using Random Forest Algorithm," 2022 International Conference for Advancement in Technology (ICONAT), Goa, India, 2022, pp. 1-4, doi: 10.1109/ICONAT53423.2022.9726006.

[4] D. N. Tran and D. D. Phan, "Human Activities Recognition in Android Smartphone Using Support Vector Machine," 2016 7th International Conference on Intelligent Systems, Modelling and Simulation (ISMS), Bangkok, Thailand, 2016, pp. 64-68, doi: 10.1109/ISMS.2016.51.

[5] N. G. Nia, A. Amiri, A. Nasab, E. Kaplanoglu and Y. Liang, "The Power of ANN-Random Forest Algorithm in Human Activities Recognition Using IMU Data," 2023 IEEE EMBS International Conference on Biomedical and Health Informatics (BHI), Pittsburgh, PA, USA, 2023, pp. 1-7, doi: 10.1109/BHI58575.2023.10313507.

[6] I. Stolovas, S. Suárez, D. Pereyra, F. De Izaguirre and V. Cabrera, "Human activity recognition using machine learning techniques in a low-resource embedded system," 2021 IEEE URUCON, Montevideo, Uruguay, 2021, pp. 263-267, doi: 10.1109/URUCON53396.2021.9647236.

[7] R. Moola and A. Hossain, "Human Activity Recognition using Deep Learning," 2022 URSI Regional Conference on Radio Science (URSI-RCRS), Indore, India, 2022, pp. 1-4, doi: 10.23919/URSI-RCRS56822.2022.10118525.

[8] E. Bulbul, A. Cetin and I. A. Dogru, "Human Activity Recognition Using Smartphones," 2018 2nd International Symposium on Multidisciplinary Studies and Innovative Technologies (ISMSIT), Ankara, Turkey, 2018, pp. 1-6, doi: 10.1109/ISMSIT.2018.8567275.

Copyright

Copyright © 2024 Akshara. G, MS Sheena Mohammed. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET64279

Publish Date : 2024-09-19

ISSN : 2321-9653

Publisher Name : IJRASET
