Ijraset Journal For Research in Applied Science and Engineering Technology

Image Classification and Object Following Functions for Mobile Robots

Authors: Lakshya Jain, Upendra Bhanushali, Rajashree Daryapurkar

DOI Link: https://doi.org/10.22214/ijraset.2022.47142

Abstract

This paper describes the design and implementation of a deployable, multi-modal system built using deep learning and computer vision. The system was developed mainly with transfer learning, GoogleNet, and MATLAB, and was made portable and deployable using a Raspberry Pi with a Pi Cam. The neural networks were trained on image datasets and reach validation accuracies of 92.61% for animal identification and 98% for fire detection. The system provides fire detection, face and intruder detection, object identification, animal identification, and a follow-me feature, all meant to be deployed on a mobile robot such as an autonomous rover, drone, glider, or aquatic robot.

I. INTRODUCTION

Forest environments are an essential ecosystem for most animal species around the world. The world's forests are central to several major developmental and environmental issues such as climate change, sustainability, and biodiversity. In the past few centuries, around forty-five percent of the earth's original forest cover has been lost to urbanization, deforestation, degradation, and fragmentation. Malicious activities such as hunting and poaching of endangered species harm biodiversity and hence compromise the health of the forest. For the sustainability of forests and countries, it is necessary to monitor and track the health of forest areas and to ensure that it is adequately maintained. Global forest assessments provide information on development, changes, or progress in forests and forestry, which is required for decision-making at the national and international level. Collecting and compiling information about our forest areas is therefore essential. Because the tools available for monitoring forests are limited, there is a need for innovation.

The main aim of our project is to design a system equipped with computer vision and a deep neural network that can identify fires, objects, and animals, and that can be deployed on an unmanned or manned vehicle. This system will be highly beneficial for the surveillance and health maintenance of remote forest areas, as it lets us remotely monitor parts of the forest that we cannot access using traditional methods. It also relieves humans of the risks and responsibility of going on expeditions into the forest themselves, and it allows us to keep track of forest fires and animal populations and to identify malicious activities in forest areas. Forests are an essential asset to our existence, which makes their preservation extremely important. Surveillance provides crucial forest data, such as human interference and early forest fire alerts, but multiple-camera systems can be costly. A surveillance rover provides a real-time feed to our ground station (a laptop), which can be recorded or post-processed, and it is more robust and reliable than a fixed CCTV camera, which can leave blind spots. It makes autonomous decisions when an obstacle is encountered, identifies animals, and detects fire so that it can alert us about forest fires.

When visiting a dark location or going on an expedition, it is helpful to have someone carry your things for you, for example holding a torch or capturing a video feed while you focus on your activities. This is where an object following function plays its part. Using robots for mundane tasks such as providing assistance makes those tasks more reliable and precise. The basic idea behind a computer-vision-enabled robot is to make it identify an object with a particular pattern by training it on images captured by its camera, and to make it react in real time to what it sees through the camera.

Robots capable of following particular objects, living things, and humans are being actively researched for their potential to carry out routine tasks such as carrying loads, collaborating with humans, and monitoring certain activities. Recent innovations in sensors and computer vision have helped us create more intuitive robots that can live alongside humans, using their perception for movement estimation and obstacle avoidance. Object- and people-following robots can be used as shopping carts in supermarkets, as tour guides, and for carrying loads in industrial automation, hospitals, and other public places. In our case, the following feature has been deployed on a forest surveillance rover that can be used in forest-related activities. This is achieved by processing the camera feed received from the rover on our laptop, using MATLAB's Computer Vision Toolbox and the MATLAB Support Package for Raspberry Pi.

II. LITERATURE REVIEW

Sahu et al. [1] have discussed how the hardware setup of an autonomous vehicle is developed. OpenCV, a popular computer vision library, is used to control the robot. Images captured by a Raspberry Pi camera are processed by OpenCV and converted into digital signals that trigger the next action.

An algorithm based on color detection has been developed for navigating the vehicle in various directions. High-level algorithms such as 3D scene mapping, object recognition, and object tracking make the decisions required by the application. The Raspberry Pi camera captures images of the desired trajectory and of symbols used for making decisions. The images are processed to produce the different commands for the direction and movement of the vehicle.

Different symbols such as stop, left turn, and right turn are used as input. The authors verify the efficacy of the proposed algorithm experimentally.

Wu and Zhang [2] have worked on three crucial problems of forest fire detection: real-time detection, early fire detection, and false detection. To achieve real-time fire detection, they implemented several object detection algorithms: Faster R-CNN, SSD, and YOLOv3. SSD is reported to have the highest accuracy, 99.8% for fire and 68.4% for smoke detection. SSD describes the detected fire region by four values: Xmin, Ymin, Xmax, and Ymax.

These values are the coordinates of the top-left and lower-right corners of the bounding box, from which the area of the region is calculated.

The detected region is compared across two frames taken an interval apart; if the area has grown, the region is treated as fire. The paper compares the performance of SSD and YOLO and reports that SSD performs better in terms of both detection accuracy and frame rate. The limitation is that these methods need a fast GPU; an NVIDIA 1070 was used.
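The area test described for [2] can be illustrated with a short sketch. This is not code from that paper: it is written in Python for illustration, and the names `Box`, `box_area`, and `region_is_growing`, as well as the `min_growth` ratio of 1.1, are assumptions. The area follows from the corner coordinates as (Xmax - Xmin) × (Ymax - Ymin).

```python
from typing import NamedTuple


class Box(NamedTuple):
    """Axis-aligned detection box in pixel coordinates."""
    xmin: float
    ymin: float
    xmax: float
    ymax: float


def box_area(box: Box) -> float:
    """Area from the top-left and lower-right corners; 0 for degenerate boxes."""
    width = max(0.0, box.xmax - box.xmin)
    height = max(0.0, box.ymax - box.ymin)
    return width * height


def region_is_growing(earlier: Box, later: Box, min_growth: float = 1.1) -> bool:
    """True if the later box is at least `min_growth` times larger.

    The growth ratio is an assumed, adjustable threshold.
    """
    earlier_area = box_area(earlier)
    if earlier_area == 0:
        return box_area(later) > 0
    return box_area(later) / earlier_area >= min_growth
```

A static red or orange object would keep roughly the same box area between frames and fail this test, which is how the comparison helps reduce false detections.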

Kumari and Sanjay [3] have worked on a robot car that can conduct surveillance operations in remote places, which may be harmful and difficult for humans to reach.

The authors have designed an Android application, built with MIT application creator, that sends navigation instructions to the robot through Bluetooth or Wi-Fi. A smartphone is attached to the robot, along with an ESP8266 NodeMCU module, allowing the user to control the robot and providing a sound and video feed to the ground station. An L298N H-bridge motor driver interfaces the Wi-Fi module with the motors. The audio and video feed is acquired from the attached phone via the IP Webcam mobile app. They have also used the YOLO algorithm for object detection, which identifies several objects.

S. Liawatimena et al. [4] aim to solve the problem of maintaining organized marine and fishery statistics, as required by law in Indonesia.

To solve the problem, they have developed a MATLAB and transfer-learning-based fish classification model trained on a dataset of fish images. The network, called FishNet, is a modification of the pre-trained network AlexNet. The FishNet dataset has nearly fifteen thousand images, about five thousand for each of three fish categories: Coryphaena hippurus, Euthynnus affinis, and Katsuwonus pelamis.

The dataset is split 70:30, with 70% used for training and 30% for validation, and MATLAB is used for training. The paper covers the concept of convolutional neural networks and how a pre-trained network can be used to build a new network with high accuracy and less processing. Building on AlexNet, FishNet achieves a validation accuracy of 99.63%.
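A 70:30 split of this kind can be sketched as follows. This is an illustration in Python, not the MATLAB code used in [4]; the function name `split_per_class`, the per-class shuffling, and the fixed random seed are assumptions, since the paper summary above only states the ratio.

```python
import random
from typing import Dict, List, Sequence, Tuple

LabelledItem = Tuple[str, str]  # (image path, class label)


def split_per_class(
    items_by_class: Dict[str, Sequence[str]],
    train_fraction: float = 0.7,
    seed: int = 0,
) -> Tuple[List[LabelledItem], List[LabelledItem]]:
    """Shuffle each class separately, then split it into training and validation parts."""
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train: List[LabelledItem] = []
    val: List[LabelledItem] = []
    for label, items in items_by_class.items():
        shuffled = list(items)
        rng.shuffle(shuffled)
        cut = round(len(shuffled) * train_fraction)
        train += [(path, label) for path in shuffled[:cut]]
        val += [(path, label) for path in shuffled[cut:]]
    return train, val
```

Splitting within each class keeps the class proportions the same in both parts, which matters when the validation accuracy is later compared across categories.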

F. Yuesheng et al. [5] have worked on an optimized GoogleNet and on how it performs compared to other convolutional neural networks.

First, they use GoogleNet to classify tomatoes, pomegranates, oranges, lemons, apples, and colored peppers. To address the low training speed of the GoogleNet network, they adjusted the structure of the Inception modules and reduced the number of convolutional kernels in the model.

These changes increased the model's accuracy by 2%. They then compared the optimized GoogleNet to other CNNs such as ResNet, AlexNet, VGG, and DenseNet.

The accuracy rose to 98.82% and the speed to 33.68 images per second.

We also explored various papers related to computer vision using the Pi Cam, deep neural networks, and their various implementations on systems similar to ours.

We then took a deep dive into MATLAB with the Deep Learning Toolbox, the Raspberry Pi Toolbox, and transfer learning to better understand the methods and concepts we wanted to implement in our project.

III. METHODOLOGY

A. Block Diagram of our System

The following is a block diagram for our proposed solution for fire, object, and animal identification features on a mobile robot.

B. Raspberry Pi Setup for MATLAB Deep Learning Implementation

To start working with the Raspberry Pi and all the functions and programs involved, we opted to go for a 'headless setup' of the Raspberry Pi to be able to use it with our computer. Since we are working with MATLAB, we had to use a modified version of Raspbian OS with Deep Neural Network capabilities. This OS and the MATLAB Support Package for Raspberry Pi are needed for using MATLAB with Raspberry Pi.

C. Face Detection

The first feature we implemented using the Raspberry Pi-MATLAB system was face detection and recognition using the Computer Vision Toolbox in MATLAB. In our system, we access the Raspberry Pi's camera view over Wi-Fi using the Raspberry Pi Toolbox and Webcam toolbox in MATLAB.

D. Object Detection Algorithm:

- Next, we implemented an object detection function in MATLAB using the pre-trained GoogleNet neural network.

- Our system is capable of identifying and labeling 1000+ different objects, and this network has been modified and trained by us for our purposes.

- This feature gives our robot a wide range of functionalities and makes it smart and robust. MATLAB takes input video at 30 frames per second and gives us an output with the name of the object in the frame and its probability.

- This method works very well, especially when only one object is in the frame. With multiple objects in the frame, the robot can get confused, as it is programmed to identify one object at a time.
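The step from network output to "name and probability" can be sketched as below. This is an illustrative Python sketch rather than the MATLAB implementation; the function names `softmax` and `top_prediction` and the idea that the network exposes one raw score per class are assumptions.

```python
import math
from typing import List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_prediction(scores: Sequence[float], labels: Sequence[str]) -> Tuple[str, float]:
    """Return the single most likely label and its probability for one frame."""
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]
```

Because only the top label is reported per frame, a frame containing several objects still yields one name, which is consistent with the single-object limitation noted in the list above.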

E. Animal Identification Algorithm:

Using Transfer Learning and a modified version of GoogleNet as our primary neural network, we have trained a deep neural network to identify and classify 45 different species of animals. This new network is called ‘animalnet’.

- Approximately 500 images of each animal were used for training by transfer learning.

- The network was then tested on about 50 images of each animal.

The Results for the Implementations are as follows:

a. The animal identification feature runs using image processing and a pre-trained deep neural network in MATLAB on our ground station, alongside the fire detection feature.

b. We have achieved a validation accuracy of 92.61% for animal identification.

c. We have also deployed the network to take input from the Raspberry Pi Camera, and it gave us highly accurate results.
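For reference, a validation accuracy such as the one reported above is the fraction of validation images whose predicted label matches the true label. A minimal Python sketch follows; the function name `accuracy_report` and the per-class breakdown are illustrative assumptions and not part of our MATLAB code.

```python
from collections import Counter
from typing import Dict, Sequence, Tuple


def accuracy_report(
    true_labels: Sequence[str], predicted_labels: Sequence[str]
) -> Tuple[float, Dict[str, float]]:
    """Return overall accuracy and the accuracy for each true class."""
    if not true_labels or len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must be non-empty and of equal length")
    totals = Counter(true_labels)
    correct = Counter(t for t, p in zip(true_labels, predicted_labels) if t == p)
    overall = sum(correct.values()) / len(true_labels)
    per_class = {label: correct[label] / count for label, count in totals.items()}
    return overall, per_class
```

The per-class figures make it easier to see which species are confused with one another, something a single overall number hides.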

F. Fire Detection Algorithm

Using Transfer Learning with GoogleNet as our base network, we also designed a neural network to detect and identify a fire in the image data stream from the PiCam. The new network was named ‘firenet’.

- The Fire Detection feature will take inputs from the image processing part, as well as a DHT11 sensor.

- In case there is a fire detected in the image and the robot also detects high temperature in the area, the robot will alert the user immediately.

- Once a fire is detected, we can use the robot to monitor the extent of the fire and to send an emergency response signal.
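The two-condition alert described in the list above can be sketched as follows. This is an illustrative Python sketch, not our MATLAB code; the names `FireAlertConfig` and `should_alert` and both threshold values are assumptions chosen only to make the example concrete.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FireAlertConfig:
    """Thresholds for raising an alert; both values are assumed placeholders."""
    min_fire_probability: float = 0.9  # confidence required from the image classifier
    min_temperature_c: float = 45.0    # temperature reading required from the sensor


def should_alert(
    fire_probability: float,
    temperature_c: float,
    config: FireAlertConfig = FireAlertConfig(),
) -> bool:
    """Alert only when the image classifier and the temperature sensor agree."""
    return (
        fire_probability >= config.min_fire_probability
        and temperature_c >= config.min_temperature_c
    )
```

Requiring both signals trades some sensitivity for fewer false alarms: a red sunset in the image or a warm afternoon on its own would not trigger the alert.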

The Observations from the Fire Detection Algorithm Implementation were:

a. The validation accuracy for fire detection is 98%.

b. We have also deployed the network to take input from the Raspberry Pi Camera, and it gave us highly accurate results.

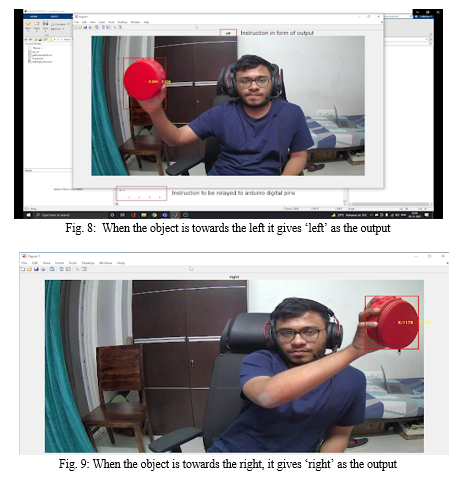

G. Follow Me Feature Implementation

An image-detection-based Follow-Me feature for a rover can be very useful for forest expeditions and videography, and for tasks such as carrying heavy loads with warehouse robots.

- For the implementation of this feature, we used the computer vision toolbox for MATLAB.

- For this function, we use a red-coloured object because it is not a common colour in the forest environment and will not confuse the robot.

- First, our algorithm converts each incoming image into a binary image in which only the red regions are kept.

- Then, we filter these red regions so that we can isolate the ones that could be our red object.

- Our MATLAB Script uses Image Processing to filter out and select Red Objects that have an area of over 5000 pixels (adjustable) and marks them with a bounding box.

- Depending on the position of the bounding box from the centre of the frame and the area of the bounding box, the robot decides whether to turn left or right, go forward, backward or stop.

- The Raspberry Pi then communicates the instructions to the Arduino which drives the motors accordingly.

- If the object is not visible, the robot will execute a right turn till the object gets back in frame.
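The steps above can be sketched end to end in a few lines. The sketch below is in Python rather than MATLAB and makes several simplifying assumptions that are not from our implementation: the redness rule in `is_red`, a single bounding box around all red pixels instead of per-object filtering, the `far_area` and `near_area` limits, the `dead_band` width, and the command names. Only the 5000-pixel minimum area and the right turn when the object is lost come from the description above.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]      # (red, green, blue), each 0-255
Frame = List[List[Pixel]]         # rows of pixels
BBox = Tuple[int, int, int, int]  # (xmin, ymin, xmax, ymax), inclusive


def is_red(pixel: Pixel, margin: int = 80) -> bool:
    """Assumed rule: red must exceed both other channels by `margin`."""
    r, g, b = pixel
    return r - max(g, b) >= margin


def red_bounding_box(frame: Frame) -> Optional[BBox]:
    """Bounding box around all red pixels, or None if there are none."""
    xs, ys = [], []
    for y, row in enumerate(frame):
        for x, pixel in enumerate(row):
            if is_red(pixel):
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return min(xs), min(ys), max(xs), max(ys)


def bbox_area(box: BBox) -> int:
    xmin, ymin, xmax, ymax = box
    return (xmax - xmin + 1) * (ymax - ymin + 1)


def follow_command(
    box: Optional[BBox],
    frame_width: int,
    min_area: int = 5000,     # minimum area from the text (adjustable)
    far_area: int = 15000,    # assumed: below this the target is too far away
    near_area: int = 30000,   # assumed: above this the target is too close
    dead_band: float = 0.15,  # assumed: tolerated horizontal offset, as a fraction of width
) -> str:
    """Map the target's position and size to one motion command."""
    if box is None:
        return "search_right"  # target lost: turn right until it reappears
    area = bbox_area(box)
    if area < min_area:
        return "search_right"  # too small to be the target
    centre_x = (box[0] + box[2]) / 2
    offset = (centre_x - frame_width / 2) / frame_width
    if offset < -dead_band:
        return "left"
    if offset > dead_band:
        return "right"
    if area < far_area:
        return "forward"
    if area > near_area:
        return "backward"
    return "stop"
```

In this sketch steering takes priority over distance: the robot first centres the target and only then moves forward or backward, which keeps the target from drifting out of the frame while the robot is moving.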

H. MATLAB App GUI

- For changing between different image identification modes and object following mode, we have created a GUI based Application using MATLAB.

- Using this Application, we can switch between all the functions seamlessly and with ease.

Conclusion

1) Thus, we have created a small-scale project wherein we have made a deployable system for face detection, fire detection, animal and object identification.

2) This system illustrates a low-cost, intelligent and portable solution which can be deployed on any rover, drone or aquatic robot easily.

3) We have created a solution involving mobile robots with surveillance cameras that can perform various kinds of vision-related tasks, enabling us to use it in various systems and backgrounds.

4) We have thus created a highly sophisticated system in a simple, usable form with an impressively accurate output.

5) The validation accuracy for ‘animalnet’ is 92.61%, while that of ‘firenet’ is 98%.

6) The implementation of Follow-Me Function for a mobile rover was successful and we are able to track and follow a red object.

7) We have created a GitHub Repository with our entire source code, MATLAB code as well as datasets. https://github.com/flux04/forest_surveillance_robot

References

[1] B. K. Sahu, B. Kumar Sahu, J. Choudhury and A. Nag, "Development of Hardware Setup of an Autonomous Robotic Vehicle Based on Computer Vision Using Raspberry Pi," 2019 Innovations in Power and Advanced Computing Technologies (i-PACT), 2019, pp. 1-5, doi: 10.1109/i-PACT44901.2019.8960011.

[2] Wu and L. Zhang, "Using Popular Object Detection Methods for Real Time Forest Fire Detection," 2018 11th International Symposium on Computational Intelligence and Design (ISCID), 2018, pp. 280-284, doi: 10.1109/ISCID.2018.00070.

[3] R. Kumari and P. S. Sanjay, "Smart Surveillance Robot using Object Detection," 2020 International Conference on Communication and Signal Processing (ICCSP), 2020, pp. 0962-0965, doi: 10.1109/ICCSP48568.2020.9182125.

[4] S. Liawatimena et al., "A Fish Classification on Images using Transfer Learning and Matlab," 2018 Indonesian Association for Pattern Recognition International Conference (INAPR), 2018, pp. 108-112, doi: 10.1109/INAPR.2018.8627007.

[5] F. Yuesheng et al., "Circular Fruit and Vegetable Classification Based on Optimized GoogleNet," in IEEE Access, vol. 9, pp. 113599-113611, 2021, doi: 10.1109/ACCESS.2021.3105112.

[6] Budiharto, Widodo. "Intelligent surveillance robot with obstacle avoidance capabilities using neural network." Computational Intelligence and Neuroscience 2015 (2015).

[7] A. A. Shah, Z. A. Zaidi, B. S. Chowdhry and J. Daudpoto, "Real time face detection/monitor using raspberry pi and MATLAB," 2016 IEEE 10th International Conference on Application of Information and Communication Technologies (AICT), 2016, pp. 1-4, doi: 10.1109/ICAICT.2016.7991743.

[8] E. Elbasi, "Reliable abnormal event detection from IoT surveillance systems," 2020 7th International Conference on Internet of Things: Systems, Management and Security (IOTSMS), 2020, pp. 1-5, doi: 10.1109/IOTSMS52051.2020.9340162.

[9] R. Huang, J. Pedoeem and C. Chen, "YOLO-LITE: A Real-Time Object Detection Algorithm Optimized for Non-GPU Computers," 2018 IEEE International Conference on Big Data (Big Data), 2018, pp. 2503-2510, doi: 10.1109/BigData.2018.8621865.

[10] Sakali, Nagaraju, and G. Nagendra. "Design and implementation of Web Surveillance Robot for Video Monitoring and Motion Detection." International Journal of Engineering Science and Computing (2017): 4298-4301.

Copyright

Copyright © 2022 Lakshya Jain, Upendra Bhanushali, Rajashree Daryapurkar. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET47142

Publish Date : 2022-10-20

ISSN : 2321-9653

Publisher Name : IJRASET
