IJRASET Journal for Research in Applied Science and Engineering Technology

Implementation of Smart Vehicle Assistant using Computer Vision and Deep Learning

Authors: Vedant Nukte, Prof. Pooja Hajare, Sahil Waghmare, Sushil Kannake, Ishan Wade

DOI Link: https://doi.org/10.22214/ijraset.2024.61263

Abstract

Autonomous cars are a popular research topic in the field of intelligent transportation systems because they have the potential to greatly reduce traffic congestion and improve travel efficiency. Scene classification is a key technology for autonomous cars because it forms the basis of these vehicles' decision-making. In recent years, deep learning-based methods have shown promise in solving the scene classification problem. However, some difficulties in scene classification still need further research, such as how to handle similarities and differences within the same category. To tackle these problems, this research proposes an improved deep network-based scene classification method. The experimental results show that the proposed method achieves higher accuracy than state-of-the-art methods.

I. INTRODUCTION

Road safety is a major concern in our era of continuously developing technology. One response to this concern is the advent of automated smart assistants in cars, which hold great promise for enhancing driver safety and navigation. By creating a comprehensive, fully automated smart assistant for cars, the "Design and Implementation of an Automated Smart Assistant for Enhanced Vehicle Safety" project seeks to advance this trend. The proposed system integrates lane detection, blind spot monitoring, speed limit detection, traffic signal recognition, road sign interpretation, and obstacle identification. By giving the driver comprehensive awareness of their surroundings, these features encourage safer, more informed driving decisions. By integrating deep learning, computer vision, and sensor fusion technologies, the project aims to improve how drivers interact with transportation systems by offering an upgraded toolset for vehicular safety. Beyond improving vehicle safety, the solution contributes to the broader move towards autonomous vehicles by offering a base of dependable and robust detection features. This paper describes the design, challenges, and results of this effort towards a safer driving environment.

II. LITERATURE SURVEY

An Improved Deep Network-Based Method for Autonomous Vehicle Scene Classification

Ni et al. [1] observe that autonomous cars are a popular research topic in intelligent transportation systems because of their potential to greatly reduce traffic congestion and improve travel efficiency, and that scene classification is a key technology because it forms the basis of these vehicles' decision-making. Although deep learning-based methods have shown promise for scene classification, open difficulties remain, such as how to handle similarities and differences within the same category. To tackle these problems, they propose an improved deep network-based scene classification method [1].

A. A Smart Outdoor Parking System Powered by IoT

IoT is a vast field in which research and applications are still ongoing. In urban areas, where open parking spots can be hard to find, traditional parking systems are outdated and inefficient, which can lead to heavy traffic, minor collisions, and accidents in open-air parking areas. Although numerous smart parking systems have been reported in the literature, most locations are equipped only with organised indoor parking systems, and most visitor destinations in smart cities still rely on manual parking systems.

B. Detection of Lane and Speed Breaker for Autonomous Vehicle using Machine Learning Algorithm

Automated, self-driving transportation requires technology that relies on vehicle cameras to recognise lanes and speed breakers [2].

C. RGB Camera Failures and Their Effects in Autonomous Driving Applications

The RGB camera is one of the most important sensors in autonomous driving applications, so a malfunctioning vehicle camera can jeopardise the safety of an autonomous vehicle [4].

D. Reinforcement Learning Framework for Video-Frame-Based Autonomous Cars

The use of reinforcement learning in developing autonomous vehicles remains a largely untapped research area. Aviz is intended to prevent accidents caused by human error, enabling autonomous vehicles to drive safely and conveniently.

As noted in [1], further research is still needed on some difficulties in scene classification, such as how to handle similarities and differences within the same category. To overcome these problems, [1] proposes an improved deep network-based scene classification method. It uses an improved Faster Region-based Convolutional Neural Network (Faster R-CNN) to extract the features of objects that are representative of the scene, producing local features. The Faster R-CNN is enhanced with a new residual attention block that highlights local semantics relevant to driving conditions. A modified version of the Inception module is used to extract global features, together with a combined Leaky ReLU and ELU activation function.
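The text does not specify how [1] combines Leaky ReLU and ELU. As a minimal sketch under that uncertainty, the snippet below defines both activations and blends them with a fixed weight. The blending rule, the function name `mixed_activation`, and the `weight`, `slope`, and `alpha` defaults are assumptions for illustration, not the method of [1].

```python
import math


def leaky_relu(x: float, slope: float = 0.01) -> float:
    """Leaky ReLU: identity for x > 0, a small linear slope otherwise."""
    return x if x > 0 else slope * x


def elu(x: float, alpha: float = 1.0) -> float:
    """ELU: identity for x > 0, alpha * (e^x - 1) otherwise (saturates at -alpha)."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def mixed_activation(x: float, weight: float = 0.5,
                     slope: float = 0.01, alpha: float = 1.0) -> float:
    """Hypothetical blend: weight * LeakyReLU + (1 - weight) * ELU."""
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    return weight * leaky_relu(x, slope) + (1.0 - weight) * elu(x, alpha)


for value in (-2.0, -0.5, 0.0, 1.5):
    print(f"{value:5.1f} -> {mixed_activation(value):.4f}")
```

For positive inputs both functions are the identity, so any such blend only changes the negative side, where it mixes the linear leak of Leaky ReLU with the saturating curve of ELU.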

Kasper-Eulaers et al. [3] note that, for haulage companies as well as traffic and road administrations, properly planning rest periods around the availability of parking spaces at rest areas is a major challenge. To enable real-time prediction of parking-space occupancy, they present a case study of how You Only Look Once (YOLO) v5 can be used to detect heavy goods vehicles at rest areas during winter. They chose thermal network cameras because the polar night and snowy conditions of winter typically make image recognition difficult.

Because these images typically contain many overlapping and cut-off vehicles, transfer learning was applied to YOLOv5 to investigate whether the front cabin and the rear are suitable features for recognising heavy goods vehicles. The results show that the trained model can reliably detect the front cabin of heavy goods vehicles, whereas detecting the rear is more difficult, especially when it is far from the camera [3].

Another work presents a deep learning-based object detection method for detecting distant objects in an image. It addresses a common problem in Advanced Driver Assistance Systems (ADAS) applications: detecting small, distant objects with the same confidence as large, nearby ones, as seen from a vehicle's front-facing camera. The study introduces Concentrate Net, an efficient multi-scale object detection network that concentrates on the distant region around the vanishing point and can detect the vanishing point itself [5].

First, the object detection model runs inference on the full image, producing detections with a large receptive field and predicting the region likely to contain the vanishing point, or at least the farthest area of the frame. The image is then cropped around the vanishing-point region and passed through the object detection model a second time to detect distant objects. Finally, the results of the two inference passes are merged using a specific non-maximum suppression (NMS) approach.
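The two-pass merge described above can be sketched as follows. The specific NMS variant used by Concentrate Net is not described here, so this sketch substitutes standard greedy NMS; it also assumes the cropped region is resized by a uniform factor `scale` before the second pass. The names `merge_two_pass` and `to_full_frame` and the `(x1, y1, x2, y2)` box format are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels
Detection = Tuple[Box, float]            # (box, confidence score)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(dets: List[Detection], iou_threshold: float = 0.5) -> List[Detection]:
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping a kept one."""
    kept: List[Detection] = []
    for box, score in sorted(dets, key=lambda d: d[1], reverse=True):
        if all(iou(box, other) < iou_threshold for other, _ in kept):
            kept.append((box, score))
    return kept


def to_full_frame(dets: List[Detection], crop_origin: Tuple[float, float],
                  scale: float) -> List[Detection]:
    """Map boxes from the resized crop back into full-frame pixel coordinates."""
    ox, oy = crop_origin
    return [((ox + x1 / scale, oy + y1 / scale, ox + x2 / scale, oy + y2 / scale), s)
            for (x1, y1, x2, y2), s in dets]


def merge_two_pass(full_dets: List[Detection], crop_dets: List[Detection],
                   crop_origin: Tuple[float, float], scale: float,
                   iou_threshold: float = 0.5) -> List[Detection]:
    """Combine first-pass (full frame) and second-pass (vanishing-point crop) detections."""
    candidates = list(full_dets) + to_full_frame(crop_dets, crop_origin, scale)
    return nms(candidates, iou_threshold)


full = [((100, 100, 200, 200), 0.9), ((600, 300, 610, 310), 0.3)]
crop = [((160, 160, 200, 200), 0.8)]  # same distant object, seen in a 4x upscaled crop
print(merge_two_pass(full, crop, crop_origin=(560, 260), scale=4.0))
```

In this example the distant object is found weakly in the first pass and confidently in the crop; after mapping back, both boxes coincide, and NMS keeps only the higher-scoring second-pass detection.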

The proposed network architecture can be applied to most object detection models; to examine its feasibility, it was implemented on several state-of-the-art object detectors. Concentrate Net models achieve significant accuracy and recall improvements while using a lower input resolution and lower model complexity than other models that use a higher input resolution [8].

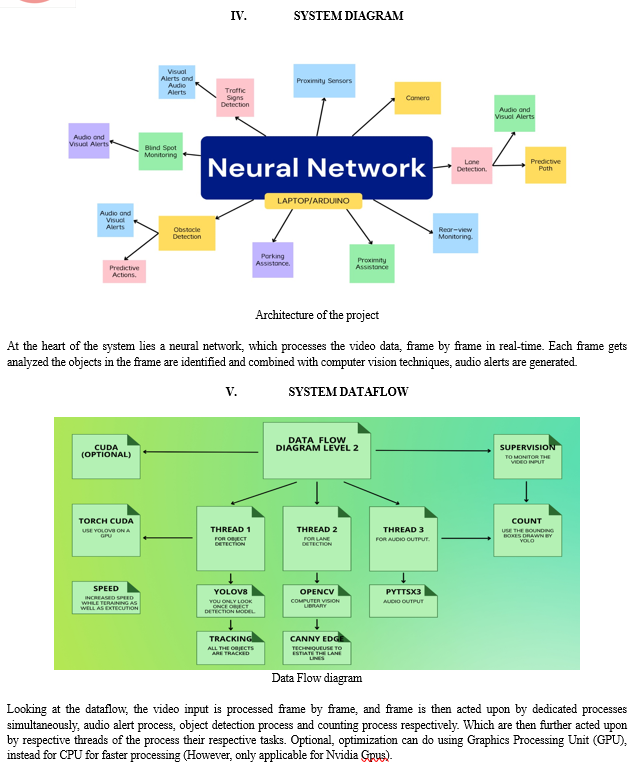

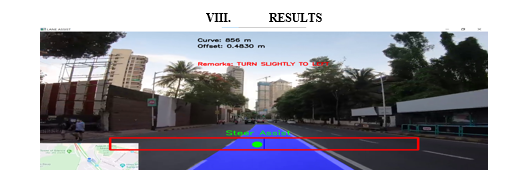

III. PROPOSED WORK

The proposed system uses computer vision and object detection techniques to address various driving challenges. Because it needs only a camera, a screen, and audio output, it can be adapted to any vehicle with few modifications. The application is fully autonomous and requires no user input. The neural network at the core of the system is always in control of the application, and features are enabled according to the vehicle mode to optimise performance. The user interface is deliberately minimal and is not meant for interaction: it serves only as the visual output from which the audio output is generated. The application is designed to be visually and conceptually minimal.
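The mode-dependent feature switching described above could be organised as a simple lookup from vehicle mode to enabled detectors. In this sketch the modes (`CITY`, `HIGHWAY`, `PARKING`), the feature names, the mapping between them, and the `process_frame` function are all hypothetical; the paper does not list its modes.

```python
from enum import Enum
from typing import Any, Callable, Dict, FrozenSet


class VehicleMode(Enum):
    """Hypothetical driving modes; the paper does not name its modes."""
    CITY = "city"
    HIGHWAY = "highway"
    PARKING = "parking"


# Hypothetical mapping from each mode to the features worth running in it.
FEATURES_BY_MODE: Dict[VehicleMode, FrozenSet[str]] = {
    VehicleMode.CITY: frozenset({"lane_detection", "object_detection",
                                 "traffic_signal", "road_sign", "speed_breaker"}),
    VehicleMode.HIGHWAY: frozenset({"lane_detection", "object_detection",
                                    "blind_spot", "road_sign"}),
    VehicleMode.PARKING: frozenset({"object_detection"}),
}

Detector = Callable[[Any], Any]


def process_frame(frame: Any, mode: VehicleMode,
                  detectors: Dict[str, Detector]) -> Dict[str, Any]:
    """Run only the detectors enabled for the current mode and collect their outputs."""
    enabled = FEATURES_BY_MODE[mode]
    return {name: detect(frame) for name, detect in detectors.items() if name in enabled}
```

Skipping detectors that are irrelevant in the current mode is one way to save computation on modest hardware, in line with the stated goal of enabling features per mode for performance.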

VI. OBJECTIVES

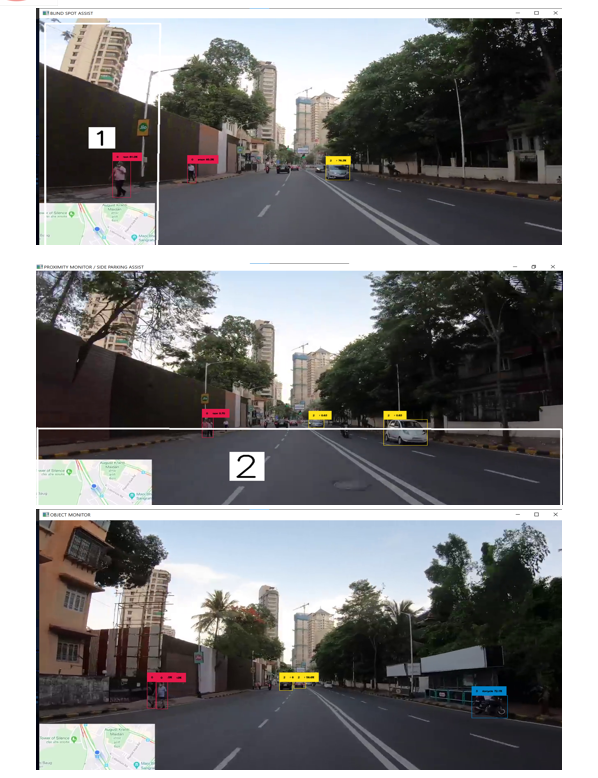

- Improvement of Road Safety: To design a smart assistant that enhances road safety by leveraging artificial intelligence and computer vision technology to detect lanes, blind spots, speed breakers, traffic signals, road signs, and potential obstacles.

- Real-Time Lane Detection: To enable real-time detection of lane markings that will help the driver stay within the correct lane, reducing risks associated with straying into the wrong lane.

- Blind Spot Detection: To create a reliable blind spot detection system that alerts the driver of potential hazards they may not be able to see in their mirrors, improving the safety of lane changes and turns.

- Speed Breaker Identification: To automatically identify and alert the driver of upcoming speed breakers, ensuring a smooth and safe ride while preventing potential vehicle damage.

- Traffic Signal Recognition: To design an intelligent system that accurately recognizes traffic signals in real-time, aiding drivers in making safer and more informed decisions on the road.

- Road Sign Detection: To incorporate a feature that detects and interprets various road signs, providing real-time information to the driver and facilitating adherence to traffic rules and regulations.

- Obstacle Identification: To develop a mechanism that effectively identifies any potential obstacles, such as pedestrians or other vehicles, preventing accidents and ensuring safe navigation.

VII. ALGORITHMS

A. Object Detection

The detection head predicts bounding boxes and class probabilities for each object in the image. It takes the feature map produced by the backbone as input and applies a series of convolutional and upsampling layers to increase the spatial resolution of the feature map. The final layer of the head outputs a 4-dimensional tensor; for each location in the feature map, its channel values hold the predicted bounding-box coordinates, the objectness score, and the class probabilities.
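To make the output layout concrete, the sketch below decodes one image's slice of such a 4-dimensional (batch, channels, height, width) output. It assumes the channel order x, y, w, h, objectness, then one probability per class, with activations already applied, and uses the common YOLO-style confidence of objectness multiplied by the best class probability. Real YOLO versions differ in layout, anchors, and box parameterisation, so `decode_head` and this layout are illustrative assumptions.

```python
from typing import List, Tuple

# ((row, col), (x, y, w, h), class index, confidence)
Detection = Tuple[Tuple[int, int], Tuple[float, ...], int, float]


def decode_head(output: List[List[List[float]]], num_classes: int,
                conf_threshold: float = 0.5) -> List[Detection]:
    """Decode one image's head output laid out as [channel][row][col].

    Assumed channel order: x, y, w, h, objectness, then one probability per class.
    """
    channels = len(output)
    if channels != 5 + num_classes:
        raise ValueError("expected 5 + num_classes channels")
    height, width = len(output[0]), len(output[0][0])
    detections: List[Detection] = []
    for row in range(height):
        for col in range(width):
            vec = [output[c][row][col] for c in range(channels)]
            box, objectness, class_probs = vec[:4], vec[4], vec[5:]
            # Pick the most likely class at this feature-map location.
            cls = max(range(num_classes), key=class_probs.__getitem__)
            # Confidence = objectness x class probability.
            score = objectness * class_probs[cls]
            if score >= conf_threshold:
                detections.append(((row, col), tuple(box), cls, score))
    return detections
```

The detections returned here would normally go through non-maximum suppression next, since neighbouring locations often predict the same object.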

B. Lane Detection Algorithm

The Canny edge detection algorithm is a widely used technique for detecting edges in images. It is a multi-stage algorithm: it first applies a Gaussian blur to the image to reduce noise, then computes the gradient magnitude and direction at each pixel, thins the edges with non-maximum suppression, and finally applies two thresholds to the gradient magnitude, with hysteresis, to separate strong edges from weak edges and noise.
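The first two stages, Gaussian smoothing and Sobel gradient computation, can be sketched in plain Python on a small grayscale image stored as a list of rows. This is an illustrative sketch, not the project's implementation; in practice a library such as OpenCV provides optimised versions of these operations. The kernel size, sigma, and replicated image borders are assumptions.

```python
import math
from typing import List, Sequence, Tuple

Image = List[List[float]]

# Sobel kernels: SOBEL_X responds to left-to-right change, SOBEL_Y to top-to-bottom change.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def gaussian_kernel(size: int, sigma: float) -> Image:
    """Normalised 2-D Gaussian kernel; size must be odd."""
    if size % 2 == 0:
        raise ValueError("kernel size must be odd")
    half = size // 2
    kernel = [[math.exp(-(x * x + y * y) / (2.0 * sigma * sigma))
               for x in range(-half, half + 1)]
              for y in range(-half, half + 1)]
    total = sum(map(sum, kernel))
    return [[v / total for v in row] for row in kernel]


def filter2d(image: Sequence[Sequence[float]], kernel: Sequence[Sequence[float]]) -> Image:
    """Slide the kernel over the image (cross-correlation), replicating border pixels."""
    h, w, half = len(image), len(image[0]), len(kernel) // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in range(-half, half + 1):
                for dj in range(-half, half + 1):
                    y = min(max(i + di, 0), h - 1)
                    x = min(max(j + dj, 0), w - 1)
                    acc += image[y][x] * kernel[di + half][dj + half]
            out[i][j] = acc
    return out


def sobel_gradients(image: Sequence[Sequence[float]]) -> Tuple[Image, Image]:
    """Return per-pixel gradient magnitude sqrt(Gx^2 + Gy^2) and direction atan2(Gy, Gx)."""
    gx, gy = filter2d(image, SOBEL_X), filter2d(image, SOBEL_Y)
    h, w = len(image), len(image[0])
    magnitude = [[math.hypot(gx[i][j], gy[i][j]) for j in range(w)] for i in range(h)]
    direction = [[math.atan2(gy[i][j], gx[i][j]) for j in range(w)] for i in range(h)]
    return magnitude, direction


step = [[0, 0, 0, 10, 10, 10] for _ in range(5)]  # vertical step edge
blurred = filter2d(step, gaussian_kernel(3, 1.0))
magnitude, direction = sobel_gradients(blurred)
```

Because the Gaussian and Sobel kernels are small and fixed, cross-correlation is used here; for the symmetric Gaussian it is identical to convolution, and for Sobel it only fixes the sign convention of the gradient.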

The edge detection steps are as follows:

- Noise Reduction: The first step reduces noise in the image by applying a Gaussian blur, i.e. convolving the image with a Gaussian kernel. The size of the kernel (together with its standard deviation) controls the amount of smoothing.

- Gradient Calculation: The next step computes the gradient magnitude and direction at each pixel by applying the Sobel operator, a pair of 3×3 kernels that estimate the horizontal and vertical gradients at each pixel. The gradient magnitude is the square root of the sum of the squares of the horizontal and vertical gradients, and the gradient direction is the arctangent of the vertical gradient divided by the horizontal gradient.

- Non-Maximum Suppression: The gradient magnitude is then thinned by keeping only pixels whose magnitude is a local maximum along the gradient direction; all other pixels are set to zero, so that edges become about one pixel wide.

- Double Thresholding and Hysteresis: The final step applies two thresholds to the gradient magnitude. The low threshold identifies pixels that are likely to be part of an edge, and the high threshold identifies pixels that are definitely part of an edge. Pixels with gradient magnitudes below the low threshold are discarded, pixels between the low and high thresholds are marked as "weak edges", and pixels above the high threshold are marked as "strong edges". Strong edges are always kept, while weak edges are kept only if they are connected to a strong edge.
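The remaining Canny stages, non-maximum suppression followed by double thresholding with hysteresis, can be sketched as below. The inputs are a gradient magnitude grid and a direction grid in radians (as produced by `math.atan2`); the direction is quantised into four bins, border pixels are simply suppressed, and the `low` and `high` thresholds are parameters the caller must choose. These are simplifying assumptions for illustration.

```python
import math
from collections import deque
from typing import Deque, List, Tuple

Grid = List[List[float]]


def non_max_suppression(mag: Grid, ang: Grid) -> Grid:
    """Keep a pixel only if its magnitude is a local maximum along the gradient direction."""
    h, w = len(mag), len(mag[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(1, h - 1):                      # border pixels stay suppressed
        for j in range(1, w - 1):
            deg = math.degrees(ang[i][j]) % 180.0  # fold direction into [0, 180)
            if deg < 22.5 or deg >= 157.5:         # horizontal gradient: left/right
                n1, n2 = mag[i][j - 1], mag[i][j + 1]
            elif deg < 67.5:                       # main diagonal (rows grow downward)
                n1, n2 = mag[i - 1][j - 1], mag[i + 1][j + 1]
            elif deg < 112.5:                      # vertical gradient: up/down
                n1, n2 = mag[i - 1][j], mag[i + 1][j]
            else:                                  # anti-diagonal
                n1, n2 = mag[i - 1][j + 1], mag[i + 1][j - 1]
            if mag[i][j] >= n1 and mag[i][j] >= n2:
                out[i][j] = mag[i][j]
    return out


def hysteresis(thin: Grid, low: float, high: float) -> List[List[bool]]:
    """Double thresholding: keep strong pixels plus weak pixels connected to them."""
    h, w = len(thin), len(thin[0])
    edges = [[False] * w for _ in range(h)]
    queue: Deque[Tuple[int, int]] = deque()
    for i in range(h):
        for j in range(w):
            if thin[i][j] >= high:                 # strong edge pixel
                edges[i][j] = True
                queue.append((i, j))
    while queue:                                   # grow into 8-connected weak pixels
        i, j = queue.popleft()
        for di in (-1, 0, 1):
            for dj in (-1, 0, 1):
                y, x = i + di, j + dj
                if 0 <= y < h and 0 <= x < w and not edges[y][x] and thin[y][x] >= low:
                    edges[y][x] = True
                    queue.append((y, x))
    return edges
```

Pixels below `low` can never be reached by the growth step, so they are discarded, and an isolated weak pixel with no strong neighbour chain is dropped as noise.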

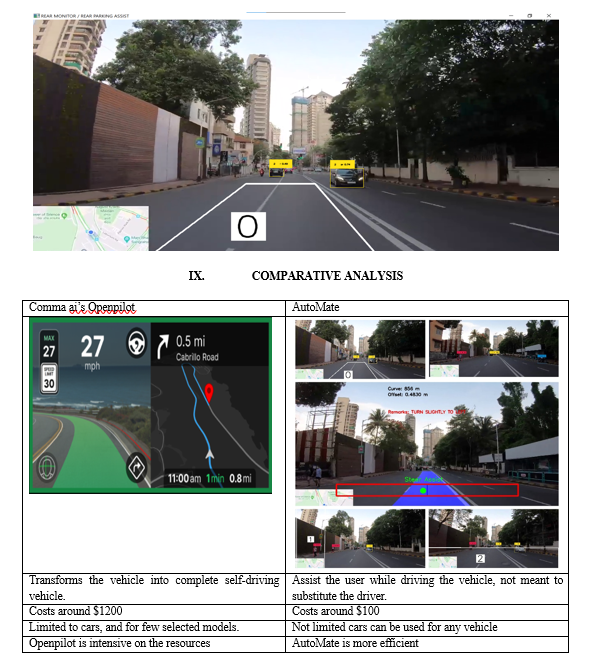

X. FUTURE SCOPE

As for future scope, the project can be developed into a complete kit or product, similar to comma.ai's openpilot. Such a kit would be fully autonomous and integrated with the vehicle's own systems, instead of relying on an external computing device. The accuracy and efficiency of the project can be improved with more advanced hardware, e.g. better cameras for video input. The underlying neural network could be trained for more epochs, or on specific objects, to obtain more accurate detections; however, accuracy plateaus with further training, so additional epochs alone are not recommended. There is also room for optimisation to make the project less resource-intensive.

Conclusion

In conclusion, this work presents the design and deployment of a smart car driving assistant that combines lane detection, object detection, traffic signal identification, road sign recognition, and parking assistance, marking a significant step in the advancement of automotive technology. This feature set not only makes driving safer for pedestrians and drivers alike, but also makes it more comfortable and efficient.

References

[1] J. Ni, K. Shen, Y. Chen, W. Cao, and S. X. Yang, “An improved deep network-based scene classification method for self-driving cars,” IEEE Trans. Instrum. Meas., vol. 71, 2022.

[2] R. K. Mohapatra, K. Shaswat, and S. Kedia, “Offline handwritten signature verification using CNN inspired by inception V1 architecture,” in Proc. 5th Int. Conf. Image Inf. Process. (ICIIP), Solan, India, Nov. 2019, pp. 263–267.

[3] M. Kasper-Eulaers, N. Hahn, S. Berger, T. Sebulonsen, Ø. Myrland, and P. E. Kummervold, “Short communication: Detecting heavy goods vehicles in rest areas in winter conditions using YOLOv5,” Algorithms, vol. 14, no. 4, p. 114, Mar. 2021.

[4] C. Wang et al., “Pulmonary image classification based on inceptionv3 transfer learning model,” IEEE Access, vol. 7, pp. 146533–146541, 2019.

[5] F. Yu et al., “BDD100K: A diverse driving dataset for heterogeneous multitask learning,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. (CVPR), Seattle, WA, USA, Jun. 2020, pp. 2633–2642.

[6] Y. Chen, W. Li, C. Sakaridis, D. Dai, and L. Van Gool, “Domain adaptive faster R-CNN for object detection in the wild,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit., Salt Lake City, UT, USA, Jun. 2018, pp. 3339–3348.

[7] C. Chen, Z. Zheng, X. Ding, Y. Huang, and Q. Dou, “Harmonizing transferability and discriminability for adapting object detectors,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit. (CVPR), Seattle, WA, USA, Jun. 2020, pp. 8866–8875.

[8] Y. Song, Z. Liu, J. Wang, R. Tang, G. Duan, and J. Tan, “Multiscale adversarial and weighted gradient domain adaptive network for data scarcity surface defect detection,” IEEE Trans. Instrum. Meas., vol. 70, pp. 1–10, 2021.

Copyright

Copyright © 2024 Vedant Nukte, Prof. Pooja Hajare, Sahil Waghmare, Sushil Kannake, Ishan Wade. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET61263

Publish Date : 2024-04-29

ISSN : 2321-9653

Publisher Name : IJRASET
