Ijraset Journal For Research in Applied Science and Engineering Technology

InsightStream: A Real-Time Perspective on Classroom Environment

Authors: Shubhi Srivastava, Abdul Manan, Akshith Krishnan, Arvinda H B, Aslam Asgar Khan

DOI Link: https://doi.org/10.22214/ijraset.2024.58970

Abstract

Traditionally, understanding the classroom environment has relied on subjective observations and post-hoc surveys. "Insight Stream" proposes a paradigm shift, offering a real-time, data-driven perspective through machine-learning-powered facial emotion detection. The project uses AI to analyse student facial expressions during class and capture the emotional undercurrents as they occur. By looking beyond spoken words, "Insight Stream" aims to:

- Quantify classroom engagement: Detect emotions such as boredom, confusion, and excitement to gauge real-time student engagement and adapt teaching methods accordingly.

- Identify hidden anxieties: Uncover subtle cues of anxiety or discomfort that may otherwise go unnoticed, allowing for proactive support and personalized interventions.

- Optimize teaching delivery: Track shifts in emotional response to different teaching styles and materials, enabling instructors to fine-tune their methods for maximal impact.

- Foster well-being: Monitor the overall emotional climate to ensure a positive and supportive learning environment, contributing to student well-being and academic success.

"Insight Stream" goes beyond observing the classroom: it offers a real-time window into how students are responding emotionally. The project holds considerable potential to transform teaching and learning by creating a dynamic, data-driven environment that caters to the holistic needs of every student.

Introduction

I. INTRODUCTION

In recent years, the integration of advanced technologies into educational institutions has changed the traditional paradigms of teaching and learning. One such groundbreaking innovation is the use of facial recognition technology to analyze and enhance the dynamics of class environments. This research paper explores the potential of facial recognition technology in educational settings, focusing on its application to scrutinize and optimize the dynamics within classrooms.

Educational institutions are dynamic ecosystems where the interplay of various factors shapes the learning experience. Traditional methods of assessing class dynamics often rely on subjective observations, surveys, and manual data collection, which may be time-consuming, prone to bias, and limited in scope. In response to these challenges, facial recognition technology emerges as a promising solution, offering an objective and efficient means to analyze student engagement, teacher effectiveness, and overall class dynamics.

This research project examines the multifaceted aspects of implementing facial recognition technology in educational institutions, with a particular focus on its impact on student participation, teacher-student interactions, and overall classroom atmosphere. By harnessing facial recognition, we aim to surface nuanced insights that traditional methods may overlook, providing educators and administrators with a more comprehensive understanding of the factors influencing the learning environment.

Furthermore, the integration of facial recognition technology raises ethical considerations and privacy concerns that necessitate careful examination. As we explore the potential benefits of this technology, it is crucial to establish ethical guidelines, ensuring that the implementation respects the rights and privacy of all stakeholders involved. This paper will delve into the ethical implications and propose a framework for responsible use, fostering a balanced approach that maximizes the advantages of facial recognition while safeguarding individual rights.

II. LITERATURE SURVEY

A. Technology

Technology Components

1. Facial Emotion Detection

a. Machine Learning Algorithms: The core of the system involves the application of machine learning algorithms for facial emotion detection. This likely includes deep learning techniques such as convolutional neural networks (CNNs) trained on facial expression datasets. These algorithms can recognize and classify emotions like happiness, sadness, surprise, boredom, and confusion based on facial features.

b. Real-Time Analysis: The emphasis on real-time sentiment analysis suggests that the machine learning models are optimized for quick and efficient processing. This is crucial for providing immediate feedback to educators and adapting teaching strategies dynamically during class sessions.

c. Accuracy and Precision: The technology aims for high accuracy and precision in emotion detection to ensure reliable insights into student engagement. The robustness of the facial emotion detection algorithms is crucial for the success of the project.
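As a rough illustration of the classification step described above, the following Python sketch turns per-face model outputs into emotion labels and a class-level distribution. The raw scores stand in for the output of a trained CNN, which is not shown. The label order in `EMOTIONS`, the function names, and the `min_confidence` cut-off are assumptions made for this example, not details of the system.

```python
import math
from collections import Counter

# Emotion labels named in the text; the ordering is an assumption.
EMOTIONS = ("happiness", "sadness", "surprise", "boredom", "confusion")


def softmax(scores):
    """Convert raw model scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(scores):
    """Return the most probable emotion label and its probability."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return EMOTIONS[best], probs[best]


def class_distribution(face_scores, min_confidence=0.5):
    """Share of each emotion among faces classified with enough confidence.

    Faces whose top probability falls below min_confidence (a hypothetical
    cut-off) are ignored rather than counted as a guess.
    """
    counts = Counter()
    for scores in face_scores:
        label, confidence = classify(scores)
        if confidence >= min_confidence:
            counts[label] += 1
    total = sum(counts.values())
    return {e: (counts[e] / total if total else 0.0) for e in EMOTIONS}
```

Discarding low-confidence faces is one possible way to keep uncertain predictions from distorting the class-level picture; a deployed system might instead weight each face by its probability.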

2. Hardware Development

a. Embedded Systems: The project involves the creation of dedicated hardware with an embedded facial emotion detection system. This could include specialized cameras or sensors designed to capture facial expressions in a classroom setting.

b. Processing Power: The hardware is likely equipped with sufficient processing power to run the machine learning algorithms locally, enabling real-time analysis without significant delays. This could involve the use of powerful processors or dedicated hardware accelerators.

c. Connectivity: The hardware is designed to seamlessly connect with other components of the system, such as the cloud server for data storage and analysis. Connectivity features may include Wi-Fi or Ethernet capabilities.
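The processing-power requirement can be made concrete with a small calculation. The sketch below, written under the assumption that the device cannot analyse every captured frame, picks how many frames to skip so that inference keeps pace with the camera. The function name and the example figures are hypothetical.

```python
def frame_stride(camera_fps, inference_ms):
    """Analyse every n-th frame so inference finishes before the next one is due.

    camera_fps:   frames captured per second (positive integer)
    inference_ms: time to analyse one frame, in whole milliseconds
    """
    if camera_fps <= 0 or inference_ms <= 0:
        raise ValueError("camera_fps and inference_ms must be positive")
    # Ceiling of camera_fps * inference_ms / 1000, using integer arithmetic.
    frames_per_inference = -(-camera_fps * inference_ms // 1000)
    return max(1, frames_per_inference)


def effective_rate(camera_fps, inference_ms):
    """Frames actually analysed per second at the chosen stride."""
    return camera_fps / frame_stride(camera_fps, inference_ms)
```

With an assumed 30 fps camera and 120 ms per inference, the stride is 4, so the device would analyse 7.5 frames per second. Facial expressions change slowly relative to the frame rate, which is why skipping frames may be an acceptable trade-off on modest hardware.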

3. Cloud Technology

a. Video Data Storage: Storing video data in the cloud allows for scalable and secure storage. This approach ensures that large amounts of video footage captured during class sessions can be efficiently managed, accessed remotely, and analysed over time.

b. Remote Accessibility: Cloud storage facilitates remote access to video data, enabling educators to review sessions, monitor emotional trends, and make informed decisions regardless of their physical location.

c. Scalability: Cloud technology provides scalability, allowing the system to handle an increasing volume of data as the project expands to multiple classrooms or institutions.
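To give a sense of the storage volumes involved, the following sketch estimates how much video data accumulates for a given bitrate and recording schedule. The bitrate, hours, and classroom counts are illustrative assumptions; the source does not specify them.

```python
def storage_gb(bitrate_mbps, hours_per_day, days=1, classrooms=1):
    """Estimate stored video volume in gigabytes (1 GB = 10**9 bytes).

    bitrate_mbps is the average video bitrate in megabits per second.
    """
    bytes_per_second = bitrate_mbps * 1_000_000 / 8
    seconds = hours_per_day * 3600 * days * classrooms
    return bytes_per_second * seconds / 1e9
```

Under these assumed figures, one classroom recording six hours a day at 2 Mbit/s produces about 5.4 GB per day, and ten classrooms over twenty teaching days produce about 1,080 GB. Storing only derived emotion data instead of raw video would reduce this volume considerably, at the cost of not being able to review sessions.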

4. User Interface

a. Intuitive Design: The user interface, likely accessible through a web portal or application, is designed to be intuitive for educators. It provides a user-friendly experience for accessing and interpreting real-time emotional data.

b. Visualization Tools: The interface may include visualization tools such as graphs, charts, or heatmaps to represent emotional trends over time. This helps educators quickly grasp insights from the data.

c. Alerts and Notifications: In cases where hidden anxieties or significant shifts in emotional responses are detected, the user interface may provide alerts or notifications to prompt timely intervention.

The successful execution of the "Insight Stream" project relies on the seamless integration of these technological components. The facial emotion detection system embedded in the hardware works in harmony with cloud technology and the user interface to create a holistic solution. The real-time nature of the analysis and the ability to store and analyse data in the cloud contribute to the project's effectiveness in providing actionable insights for educators, ultimately aiming to enhance the teaching and learning experience in classrooms.
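The alerts for significant shifts in emotional response, mentioned under the user interface, could be driven by a simple comparison against a recent baseline. The sketch below is one minimal way to do this, assuming the system already produces a per-interval score between 0 and 1 (for example, the share of students showing confusion). The `window` and `threshold` values are hypothetical tuning parameters.

```python
from collections import deque


def shift_alerts(scores, window=3, threshold=0.2):
    """Return the indices where a score departs sharply from its recent baseline.

    The baseline is the mean of the previous `window` scores; an alert is
    raised when the current score differs from it by more than `threshold`.
    """
    recent = deque(maxlen=window)  # holds only the last `window` scores
    alerts = []
    for index, score in enumerate(scores):
        if len(recent) == window:
            baseline = sum(recent) / window
            if abs(score - baseline) > threshold:
                alerts.append(index)
        recent.append(score)
    return alerts
```

Comparing against a moving baseline rather than a fixed level means the alert reacts to change within a session, so a class that is consistently subdued does not trigger continuous notifications.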

III. PAGE STYLE

A. Proposed System

- System Overview: The proposed system integrates facial recognition technology to analyse and optimize class dynamics in educational institutions. A user-friendly interface allows educators and administrators to access real-time insights into student engagement, teacher effectiveness, and overall class atmosphere.

- Components:

  - Facial Recognition Cameras: Deployed strategically in classrooms for optimal coverage. High-resolution cameras with infrared capabilities provide accurate facial feature detection in varying lighting conditions.

  - Real-time Analysis Dashboard: A user-friendly dashboard accessible to educators and administrators, with real-time analytics on student participation, emotions, and teacher-student interactions, and customizable reports and visualizations for easy interpretation of data.

  - Facial Recognition Algorithm: Uses a robust facial recognition algorithm trained on diverse datasets. It incorporates emotion recognition to assess student engagement and sentiment during classes, with adaptive learning to continuously improve accuracy based on feedback and evolving class dynamics.

  - Feedback and Notification System: Alerts educators and administrators to unusual patterns or events, and provides continuous feedback mechanisms for users to give input on system effectiveness.

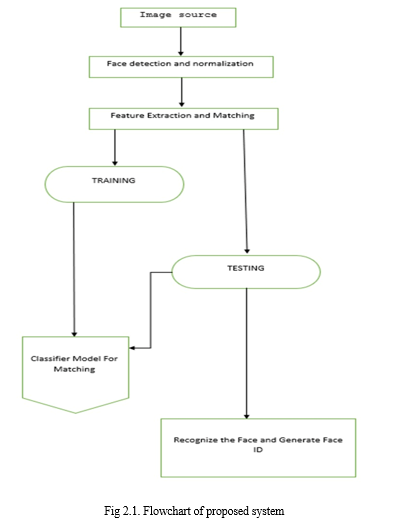
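The dashboard's reports on emotions over time imply some aggregation of individual detections. As a hedged sketch of that step, the Python function below groups timestamped emotion labels into per-minute counts that a chart could display. The input format (seconds since the start of class paired with a label) and the function name are assumptions for illustration.

```python
from collections import Counter, defaultdict


def per_minute_counts(observations):
    """Group (seconds_since_start, emotion_label) pairs into per-minute tallies.

    Returns a dict mapping minute index to a dict of label counts,
    ordered by minute.
    """
    buckets = defaultdict(Counter)
    for seconds, label in observations:
        buckets[int(seconds // 60)][label] += 1
    return {minute: dict(counts) for minute, counts in sorted(buckets.items())}
```

Aggregating to counts per interval also means the dashboard does not need to hold or display per-student records, which may help with the privacy concerns raised in the introduction.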

B. Methodology

- Describes the methodology employed for facial emotion detection.

- Discusses the choice of machine learning algorithms and techniques used for real-time analysis.

- Details any hardware specifications or considerations.

Conclusion

The "Insight Stream" project represents a transformative endeavor in education technology, using real-time facial emotion detection, hardware integration, and cloud-based analytics to improve the understanding of classroom dynamics.

1) Real-Time Insights: The integration of machine learning algorithms for facial emotion detection enables the system to provide immediate, data-driven insights into the emotional state of the classroom. Educators can receive real-time feedback on student engagement, allowing for adaptive teaching strategies.

- Accuracy of facial recognition: Facial expressions can be subjective and influenced by various factors. The system's reliance on machine learning algorithms necessitates acknowledging the potential for misinterpretation.

- Mitigating bias: The algorithms used in the system might exhibit bias based on the data they are trained on. This can lead to misinterpretation of certain facial expressions, particularly for students from diverse ethnic or cultural backgrounds.

- Teacher accountability: While the system provides real-time data, it is crucial for educators to exercise their own judgment and not rely solely on the technology's interpretations.

2) Adaptive Teaching Strategies: With the ability to quantify emotions and detect subtle cues, the system empowers educators to adapt their teaching styles dynamically. The project's success lies in its capacity to optimize instructional approaches based on the real-time emotional responses of students.

- Individualized learning: While the system might provide insights into the general emotional state of the class, it is vital to remember that students have individual learning styles and needs.

- Creative teaching methods: Effective teachers do not depend solely on student expressions to adapt their teaching. They can use various creative methods to gauge engagement, such as interactive activities, polls, or open-ended questioning.

- Student feedback: Direct student feedback through surveys or discussions can provide valuable insights into students' understanding of the material and their emotional experience in the classroom.

3) Proactive Support: Uncovering hidden anxieties and discomfort through subtle cues enables educators to offer proactive support and personalized interventions. This contributes significantly to a supportive learning environment that addresses students' emotional needs.

- The role of social-emotional learning: Equipping students with social-emotional learning skills can empower them to self-regulate their emotions and effectively communicate their needs to teachers.

- Building trust: Creating a safe and trusting classroom environment is essential. Students should feel comfortable approaching the teacher with their concerns without fear of judgment.

- Addressing external factors: It is crucial to recognize that factors outside of school can also affect students' emotional state. Teachers can connect with parents or school counsellors to provide a holistic support system.

4) Continuous Monitoring and Analysis: A continuous monitoring system facilitates long-term trend analysis, giving educators a comprehensive understanding of the emotional climate over multiple class sessions. This enhances the system's utility for ongoing improvement in teaching practices.

- Data privacy concerns: Ensuring the secure storage and responsible use of student data collected by the system is paramount.

- Data analysis limitations: Large datasets can be overwhelming. Educators require training to interpret the data effectively and identify meaningful trends.

- Focus on learning outcomes: While emotional analysis provides insights, the ultimate objective should be to assess student learning and adjust teaching methods to improve academic achievement.

5) Scalability and Integration: The design goals related to scalability and integration have been achieved, allowing the system to adapt to various educational settings and integrate with cloud technology. This scalability ensures that the project can evolve to meet the needs of diverse classrooms.

- Teacher workload: Integrating new technologies should not add to the burden of educators. The system's design should be user-friendly and require minimal additional workload for teachers.

- Standardization and regulations: As the technology is implemented in various educational settings, clear guidelines and regulations are necessary to ensure responsible data use and student privacy protection.

- Teacher autonomy: While the system offers potential benefits, it should not dictate teaching styles. Educators should retain autonomy in their approach and use the technology as a supplementary tool.

Copyright

Copyright © 2024 Shubhi Srivastava, Abdul Manan, Akshith Krishnan, Arvinda H B, Aslam Asgar Khan. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET58970

Publish Date : 2024-03-12

ISSN : 2321-9653

Publisher Name : IJRASET
