Ijraset Journal For Research in Applied Science and Engineering Technology

IoT Based Sign to Speech Converter System

Authors: Devyani Pravin Nagarale, Shweta Balaso Sangale, Ankita Jaykumar Rukade, Dhanashree Rupesh Wadd, Swati S. Halunde

DOI Link: https://doi.org/10.22214/ijraset.2024.64413

Abstract

This paper introduces a system for facilitating communication between the deaf and the non-signing community through a wearable device called the IoT-based Sign-to-Speech Converter System. The system consists of a glove that uses Internet of Things (IoT) technology to bridge the communication gap by translating sign language gestures into spoken language in real time. The core functionality is complemented by integration with an Android application, which improves user experience and accessibility. The proposed system uses sensors embedded in the glove to capture the hand movements and gestures commonly used in sign language. These data are transmitted wirelessly to a central processing unit, which interprets the gestures and generates the corresponding spoken-language output. The Android application serves as the user interface, allowing customization of settings and offering additional features such as text-based communication. The effectiveness and practicality of the proposed system have been demonstrated, opening up new avenues for inclusive communication and empowerment of the deaf community.

I. INTRODUCTION

The project aims to develop a solution that facilitates communication between deaf and non-signing individuals through a wearable device called the Sign-to-Speech Converter System (SSCS). Using IoT technology, the glove translates sign language gestures into spoken language in real time, and an accompanying Android application provides user interaction and customization options. The core functionality of the SSCS lies in capturing the hand movements and gestures commonly used in sign language through embedded sensors. These data are then processed by a central unit, which uses machine learning algorithms to interpret the gestures and generate the corresponding spoken-language output.

The Android application complements this system by providing a customizable interface for adjusting settings and language preferences and for accessing additional features such as text-based communication. Key features of the SSCS include high accuracy in gesture recognition and adaptability to various sign languages and regional variations. Its ergonomic design ensures comfort and usability, making it suitable for extended wear. Overall, the IoT-based Sign-to-Speech Converter System, a glove combined with an Android application, offers a promising way to bridge the communication gap between the deaf and hearing communities, promoting inclusivity and empowerment. Future developments may focus on refining the gesture recognition algorithms and expanding language support.

II. LITERATURE REVIEW

Kshitij Kadam, Sakshi Telange et al. The paper presents the concept of Smart Gloves for mute people. The gloves are designed to detect the user's hand gestures and translate them into audible signals. The system consists of a microcontroller, a wireless communication module, a flex sensor, and an audio device. The microcontroller receives data from the sensor and interprets the user's hand gestures. The wireless communication module transmits the interpreted data to the audio device, which produces the corresponding audible signals. The Smart Gloves offer a low-cost and user-friendly way for mute people to communicate. The efficacy of the gloves is evaluated through user studies, and the results indicate that the Smart Gloves provide an effective communication platform for mute people [1].

Sanjay S, Dineshkumar S et al. This paper describes a smart glove intended to bridge the gap between speech-impaired and hearing people in day-to-day life. Nearly 70 million people are deaf by birth, and 230 million people are hearing impaired or speechless because of conditions such as autism or stroke. To address this, the authors use flex sensors and an accelerometer to read the gesture, an Arduino UNO to process the input, and a web UI to display the text output [2].

Amal Babour, Hind Bitar et al. A prototype of a two-way communication glove, the Intelligent Glove (IG), was developed to facilitate communication between deaf-mute and non-deaf-mute people. IG consists of gloves, flex sensors, a screen with a built-in microphone, and a speaker. For communication from deaf-mute to non-deaf-mute people, the flex sensors sense the hand gestures and, through connected wires, transmit the hand movement signals to the microcontroller, where they are translated into words and sentences. The output is displayed on a small screen attached to the gloves and is also played as voice through the speaker attached to the gloves. Unit testing showed that IG performed as expected without errors. In addition, IG was tested with ten participants and was found to be both usable and accepted by the target users [3].

Ajinkya Mhatre, Sarang Joshi. Sign language is used by hearing- and speech-impaired people to communicate with others. In sign language, the person performs hand gestures to convey a message, but these gestures can be difficult for a non-signer to understand. The main aim of this project is to solve this problem by developing a smart wearable hand glove. The wearer performs hand gestures according to sign language, and the finger actions are converted into specific sounds that a hearing person can easily understand [4].

Kok Tong Lee, Pei Song Chee. A smart glove was fabricated using five resistive flex sensors, one attached to each finger. The flex sensors were characterized. A microcontroller received and processed the flex sensor data and transmitted it to a PC for machine learning and prediction. An SVM model was trained on a training data set of 160 samples, and the trained model recognized three objects of different shapes with an accuracy of 91.88%. The proposed AI-based smart glove showed high accuracy in object recognition and may provide a promising low-cost solution for the healthcare and robotics industries [5].

Khushbu Pal, Pradnya Padmukh. Most people see, listen, and then react to situations by speaking. Some people, however, cannot speak or hear; they try to respond through actions, but most of the time others cannot understand what they want to say. This application helps both groups communicate with each other. It consists of several parts; in the first part, hand gestures are detected by the sensors and the corresponding output is given [6].

Doan Ngoc Phuong, Nguyen Thi Phuong Thanh. Robot Operating System (ROS) is robot middleware. Although ROS is not an operating system, it provides services designed for a heterogeneous cluster of servers, such as controlling devices, deploying functions, transmitting messages, and managing devices. A set of ROS-based processes is represented as an architectural graph whose nodes can receive and combine sensor data, state, control, scheduling, and other messages. ROS is applied to the product to test and simulate the operation of the robot arm, thereby helping researchers improve, develop, and deploy robot applications smoothly [7].

III. SOLUTION PROCEDURE

First, as instructed, we formed a project group of four members. We were then asked to submit preferences for a project guide and to search for a project topic. We submitted our preferences, and the Department assigned Ms. S.S. Halunde as our project guide. Alongside this, we searched for a project topic and domain. Initially we shortlisted three topics: a Pick and Place Robot with Extra Features, a Hyacinth Cutting Machine, and a Sign to Speech Converter System. We presented these ideas to the PEC members, who told us to proceed with the third topic, "Sign to Speech Converter System." We then surveyed research papers and websites for background information and hardware requirements. Based on these requirements, we studied each point, decided on the hardware, and planned the subsequent work and the implementation scenario. During this study we changed the project title slightly to "IoT Based Sign to Speech Converter System." We presented 25% of the work to the project guide and PEC members and prepared a synopsis for this phase. They asked for the complete project plan and execution schedule and told us to study the hardware side of the project further.

We then started the 50% phase of the project. For the hardware, we decided to use a NodeMCU as the main processing unit, together with flex sensors, a Bluetooth module, and other related components, and we created a clear flow diagram and block diagram. On closer study, however, we found that the NodeMCU was not suitable because it did not offer the five analog input pins we needed, so we switched to the ESP32. To display the data, we decided to use a third-party application as a temporary measure. In this phase we studied all the hardware and presented 50% of the work.

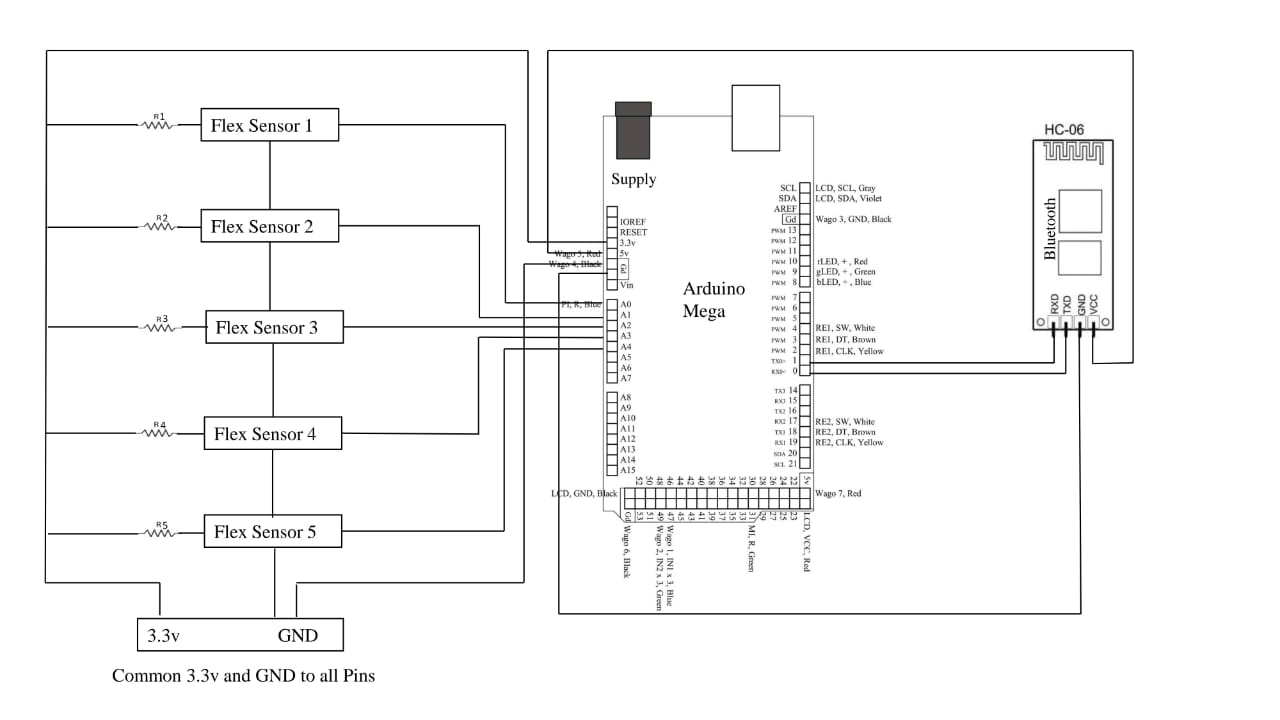

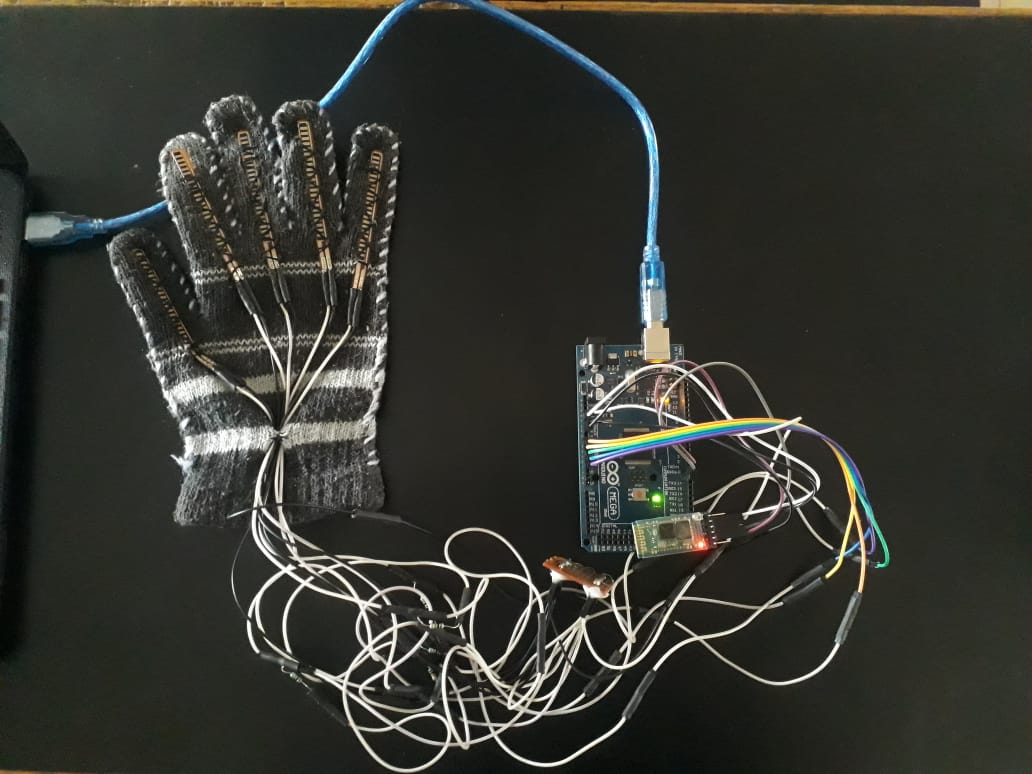

In the 75% phase we were expected to complete both the hardware and the software, so we began with the software design. We designed a simple Android application that receives data from the ESP32 directly over Bluetooth. We drew the circuit diagram and completed the hardware by making all the connections and mounting all the components. However, we found that the ESP32 could not supply 3.3 V to all five flex sensors, and as a result two sensors did not return correct data. We consulted our guide and an industry professional, Mr. Jaywardhan Bhosale, about this problem, and they suggested using an Arduino Mega instead. We redrew the circuit diagram for the Arduino Mega and rewired the entire circuit; after several attempts, the complete hardware worked. Under guidance, we wrote the Arduino code in embedded C and uploaded it to the Arduino Mega. With this, the project was functionally complete.
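The data path described above, from the microcontroller over Bluetooth to the Android application, can be illustrated with a small receiver-side sketch. It is not the authors' application code. It assumes, purely for illustration, that the board sends each recognised phrase as one newline-terminated ASCII line; the class name `LineAssembler` is hypothetical. Because a serial-style Bluetooth link delivers bytes in arbitrary chunks, the receiver has to buffer them until a full line has arrived.

```python
class LineAssembler:
    """Collect byte chunks from a serial-style stream and return complete lines.

    Assumption (not from the paper): each message is one ASCII line ending in "\n".
    """

    def __init__(self):
        self._buffer = bytearray()

    def feed(self, chunk):
        """Add a received chunk and return the list of text lines it completes."""
        self._buffer.extend(chunk)
        lines = []
        while True:
            index = self._buffer.find(b"\n")
            if index < 0:
                break  # no complete line yet; keep the partial data buffered
            raw = bytes(self._buffer[:index])
            del self._buffer[: index + 1]  # drop the line and its newline
            text = raw.decode("ascii", errors="replace").strip()
            if text:  # skip empty lines
                lines.append(text)
        return lines


assembler = LineAssembler()
for chunk in (b"Hel", b"lo\r\nI need", b" Water\n"):
    for phrase in assembler.feed(chunk):
        print(phrase)
```

Each printed phrase is what an application of this kind would hand to its text display or text-to-speech step.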

In the 100% phase, the remaining project deliverables were completed: the project poster, the paper publication, and the complete report with the majesty diary. We then presented the whole project and its output to the guide and PEC members.

IV. PROJECT FLOW

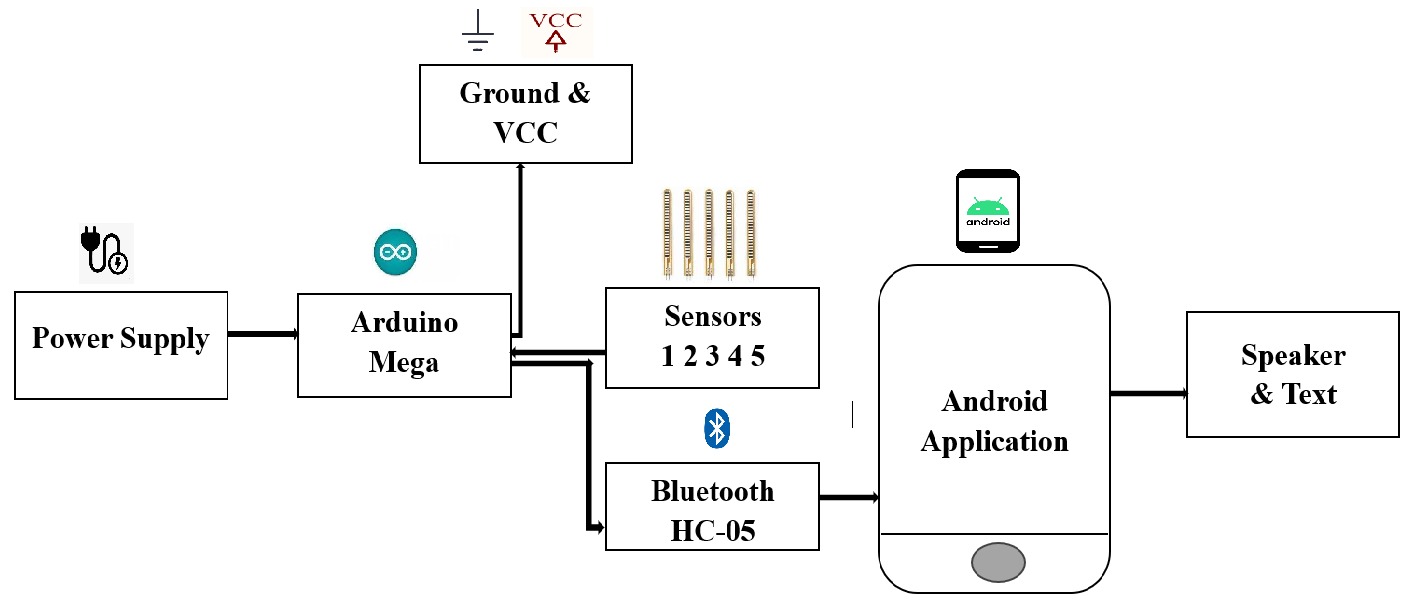

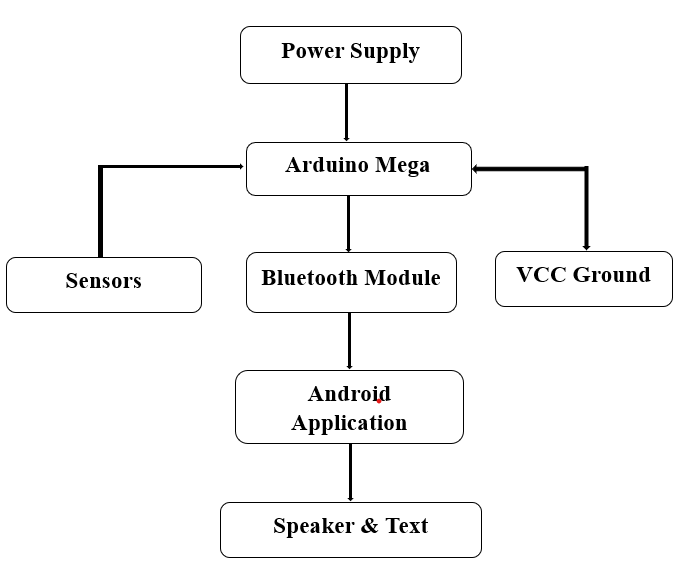

A. Architectural Design

B. Circuit Diagram

C. Flow Diagram

D. Sequence Diagram

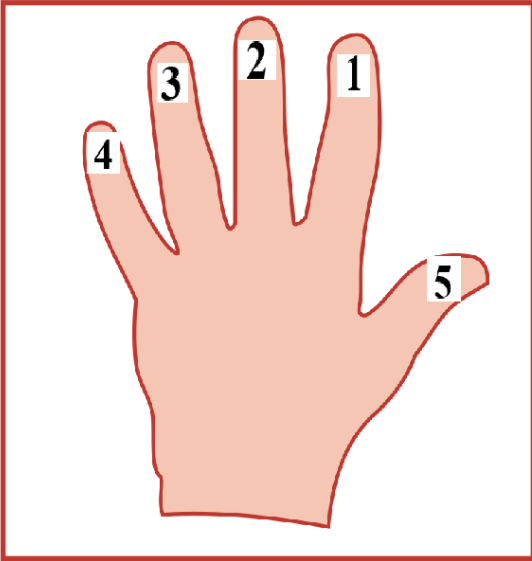

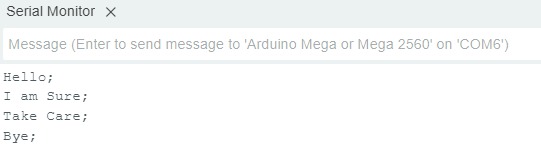

V. ANALYSIS TABLE

|

1 |

00000 |

Bye |

16 |

00011 |

Promise |

|

2 |

10000 |

Bless You/ Dog |

17 |

11100 |

Call |

|

3 |

01000 |

Take Care |

18 |

10011 |

Parents |

|

4 |

00100 |

Unmarried |

19 |

11001 |

Siblings |

|

5 |

00010 |

I am Sure |

20 |

10101 |

|

|

6 |

00001 |

Hello |

21 |

10110 |

Laughing |

|

7 |

11000 |

I am feeling cold |

22 |

01110 |

Right |

|

8 |

10100 |

I am Hungry |

23 |

01011 |

Slow as snell |

|

9 |

10010 |

I need Water |

24 |

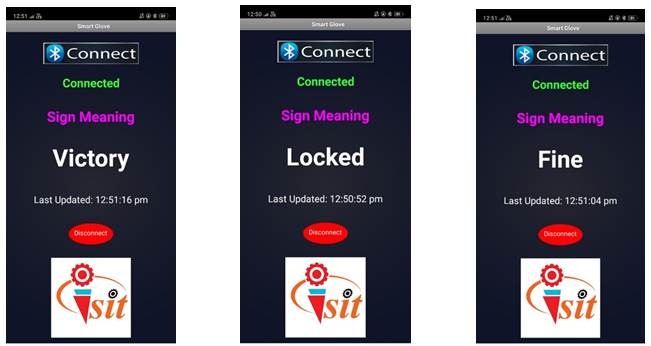

00111 |

Victory |

|

10 |

10001 |

Nice/ Beautiful |

25 |

11110 |

Best/ Good |

|

11 |

01100 |

Love |

26 |

01111 |

One/First/No |

|

12 |

01010 |

I don’t know |

27 |

10111 |

Four |

|

13 |

01001 |

Locked |

28 |

11011 |

Married |

|

14 |

00110 |

Shoot/Kill |

29 |

11101 |

I am in emergency |

|

15 |

00101 |

Family |

30 |

11111 |

Punch/ Yes |
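The analysis table can be read as a lookup from a five-character code to a phrase. The sketch below shows one way such a lookup might work on a host machine; it is not the Arduino firmware described in this paper. Several details are assumptions made only for illustration: that each character corresponds to one flex sensor, that "1" means a bent finger, and that a single threshold (`BEND_THRESHOLD`, a hypothetical value) separates bent from straight readings. A real glove would likely need per-sensor calibration.

```python
# Code-to-phrase table transcribed from the analysis table.
# Entry 20 (10101) has no phrase listed, so it is stored as an empty string.
GESTURES = {
    "00000": "Bye", "10000": "Bless You/ Dog", "01000": "Take Care",
    "00100": "Unmarried", "00010": "I am Sure", "00001": "Hello",
    "11000": "I am feeling cold", "10100": "I am Hungry",
    "10010": "I need Water", "10001": "Nice/ Beautiful", "01100": "Love",
    "01010": "I don't know", "01001": "Locked", "00110": "Shoot/Kill",
    "00101": "Family", "00011": "Promise", "11100": "Call",
    "10011": "Parents", "11001": "Siblings", "10101": "",
    "10110": "Laughing", "01110": "Right", "01011": "Slow as snail",
    "00111": "Victory", "11110": "Best/ Good", "01111": "One/First/No",
    "10111": "Four", "11011": "Married", "11101": "I am in emergency",
    "11111": "Punch/ Yes",
}

# Hypothetical ADC threshold: readings at or above it count as "bent" (1).
BEND_THRESHOLD = 600


def pattern_from_readings(readings, threshold=BEND_THRESHOLD):
    """Turn five raw flex-sensor readings into a 5-character code."""
    if len(readings) != 5:
        raise ValueError("expected one reading per finger (5 values)")
    return "".join("1" if value >= threshold else "0" for value in readings)


def phrase_for(pattern):
    """Return the phrase for a code, or None if no phrase is listed."""
    return GESTURES.get(pattern) or None


def unassigned_patterns():
    """List the 5-bit codes that do not appear in the table at all."""
    all_patterns = (format(i, "05b") for i in range(32))
    return [p for p in all_patterns if p not in GESTURES]


code = pattern_from_readings([700, 100, 650, 90, 80])
print(code, phrase_for(code))
print(unassigned_patterns())
```

Five binary positions give 2^5 = 32 possible codes. The table lists 30 of them, so `unassigned_patterns()` reports the two that are absent (01101 and 11010).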

VI. IMPLEMENTATION

A. Project Hardware Setup

B. Output on Serial Monitor of Arduino Software

C. Output Screen of Android Application

VII. FUTURE SCOPE

- Multi-Language Support: Expanding the system to support different spoken languages beyond the initial implementation, making it useful across diverse linguistic regions and cultures.

- Sign Language Variations: The system could be enhanced to recognize regional variations of sign languages (e.g., American Sign Language, British Sign Language, etc.), making it more inclusive and widely applicable.

- Speech-to-Sign: Future versions could enable bidirectional communication by incorporating a speech-to-sign function, translating spoken language back into sign language, aiding in communication for both deaf and non-deaf individuals.

- Cloud-Based Processing and Data Analytics: Incorporating cloud-based services to process and store data, which would enable real-time updates, improve the system’s learning curve, and offer analytics for tracking and improving user experience.

- Wearable Technology Enhancements: Improving the design and ergonomics of the glove to make it lighter, more durable, and energy-efficient. Integration of advanced sensor technologies could improve gesture accuracy and comfort.

- Integration with Other Platforms: The system could be integrated with other communication platforms like video conferencing apps, telemedicine systems, or even social media, enhancing accessibility in virtual spaces.
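As a rough illustration of the speech-to-sign idea in the list above, the code-to-phrase mapping can be inverted so that a recognised word or phrase points back to a hand code. This is only a sketch under stated assumptions: speech recognition is assumed to have already produced text, the table excerpt is limited to a few entries, and the function names are hypothetical.

```python
# Excerpt of the code-to-phrase table from the analysis section.
GESTURES = {
    "00001": "Hello",
    "10000": "Bless You/ Dog",
    "10010": "I need Water",
    "11111": "Punch/ Yes",
}


def build_reverse_index(gestures):
    """Map each individual phrase (lower-cased) back to its 5-character code.

    Entries such as "Bless You/ Dog" hold several meanings separated by "/",
    so each meaning gets its own key.
    """
    index = {}
    for pattern, label in gestures.items():
        for phrase in label.split("/"):
            key = phrase.strip().lower()
            if key:
                index[key] = pattern
    return index


def pattern_for_phrase(index, spoken_text):
    """Return the code for recognised text, or None if it is not in the table."""
    return index.get(spoken_text.strip().lower())


index = build_reverse_index(GESTURES)
print(pattern_for_phrase(index, "Yes"))
print(pattern_for_phrase(index, "goodbye"))
```

Exact matching is the simplest choice here; handling paraphrases or longer sentences would need a more capable language component.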

Conclusion

The IoT-based Sign-to-Speech Converter System offers an innovative solution for improving communication between the deaf and the non-signing community. By using a glove embedded with sensors to capture sign language gestures and converting them into spoken language through integration with an Android application, the system bridges the communication gap. The real-time translation, wireless data transmission, and customizable features make this system practical and accessible, providing an empowering tool for the deaf community and promoting more inclusive communication.

References

[1] A. Gayathri, Dr. A. Sasi Kumar, "Sign Language Recognition for Deaf and Dumb People Using Android Environment," International Journal of Current Engineering and Scientific Research (IJCESR), vol. 4, 2017.

[2] Dhawal L. Patel, Harshal S. Tapase, Praful A. Landge, Parmeshwar P. More, Prof. A.P. Bagade, "Smart Hand Gloves for Disable People," International Research Journal of Engineering and Technology (IRJET), Volume 05, Issue 04, Apr. 2018.

[3] Anwar Jarndal, Dalal Abdulla, Shahrazad Abdulla, Rameesa Manaf, "Design and Implementation of a Sign-to-Speech/Text System for Deaf and Dumb People," doi: 10.1109/ICEDSA.2016.7818467.

[4] Abhilasha C Chougule, Sanjeev S Sannakki, Vijay S Rajpurohit, "Smart Glove for Hearing-Impaired," International Journal of Innovative Technology and Exploring Engineering (IJITEE), ISSN: 2278-3075, Volume 8, Issue 6S4, April 2019.

[5] Sanjay S, Dineshkumar S, "Development of Smart Glove for Deaf, Mute People," 2021-2022.

[6] Kok Tong Lee, Pei Song Chee, "Artificial Intelligence driven Smart Glove for object recognition application," 2022.

[7] Laury Rodriguez, Zofia Przedworska, "Development and implementation of an AI embedded and ROS compatible smart Glove System in human Robot interaction," 2022.

[8] Kshitij Kadam, Sakshi Telange, "Helping Hand: A Glove For Mute People," 2022-2023.

[9] Amal Babour, Hind Bitar, "Intelligent Glove: An IT intervention for Deaf-Mute People," 2022-2023.

[10] Ajinkya Mhatre, Sarang Joshi, "Wearable Smart Glove for Speech and Hearing-Impaired People," 2023-2024.

[11] Prof. S.M. Jena, Pooja V. Gavhankar, "A Review Paper on Smart Hand Gloves for Aphasia People," April 2023.

Copyright

Copyright © 2024 Devyani Pravin Nagarale, Shweta Balaso Sangale, Ankita Jaykumar Rukade, Dhanashree Rupesh Wadd, Swati S. Halunde. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET64413

Publish Date : 2024-09-30

ISSN : 2321-9653

Publisher Name : IJRASET
