Ijraset Journal For Research in Applied Science and Engineering Technology

Literature Review of “Gesture Navigator: AI Based Virtual Mouse”

Authors: Ravi Kant, Divyansh Ruhela, Ashi Tyagi, Mayank Gupta, Kanak Singh, Ayush Tiwari

DOI Link: https://doi.org/10.22214/ijraset.2024.61942

Abstract

The mouse is one of the landmark inventions of Human-Computer Interaction (HCI) technology. Even a wireless or Bluetooth mouse is not fully device-free: it needs a battery for power and, in many cases, a dongle to connect to the PC. The proposed AI-based virtual mouse system overcomes this limitation by using a webcam or built-in camera to capture hand gestures and detect fingertips through computer vision. Hand detection relies on a deep learning model. Based on the recognized gestures, the user can move the cursor and perform left click, right click, and scrolling without a physical mouse. The system therefore aims to reduce the risk of transmitting communicable diseases such as COVID-19 by limiting contact with shared devices and removing the need for additional hardware to control the computer.

Introduction

I. INTRODUCTION

A. Description

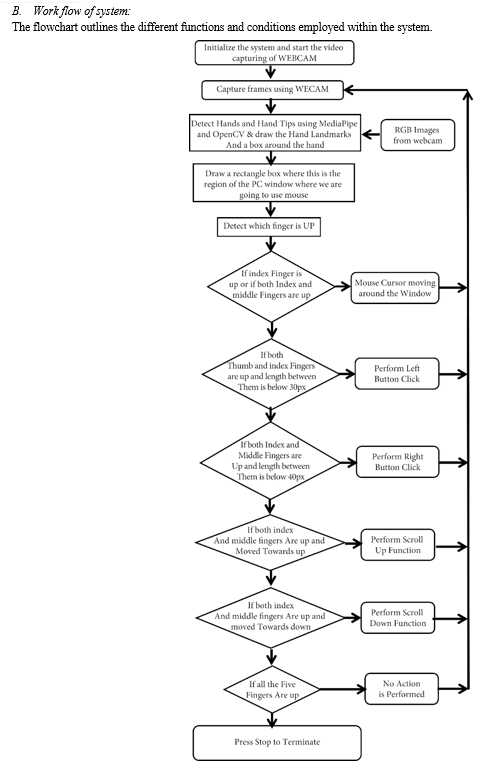

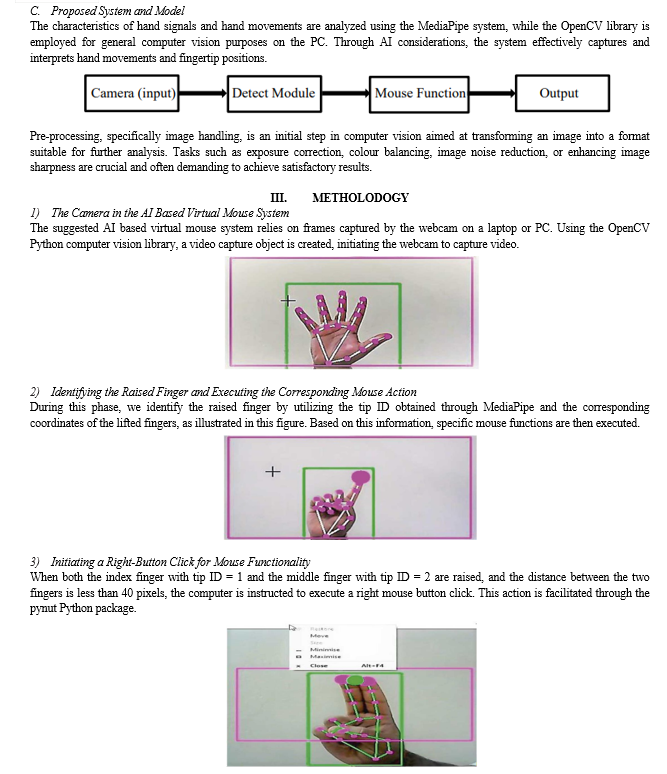

The proposed AI-based virtual mouse system addresses practical problems such as workspaces with no room for a physical mouse and users whose hand impairments prevent them from operating one. In addition, during the COVID-19 pandemic, touching shared devices is unsafe because it can spread the virus. The proposed system avoids these problems by using hand gesture and fingertip detection, through a webcam or built-in camera, to control the PC mouse functions. A wireless or Bluetooth mouse still requires the mouse itself, a dongle to connect to the PC, and a battery; in this paper, the user needs only a built-in camera or webcam and hand gestures to control the mouse. The camera captures frames, the system processes them to recognize hand and fingertip gestures, and the corresponding mouse function is then performed.
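To illustrate the final step of that pipeline, the sketch below maps a detected fingertip position to screen coordinates and smooths it to reduce jitter. It is a minimal sketch, not the reviewed system's implementation: the class name `CursorMapper`, the `margin` and `alpha` parameters, and the assumption that the detector reports the fingertip as normalized (0 to 1) coordinates in an already mirrored frame are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class CursorMapper:
    """Maps a normalized fingertip position to smoothed screen pixels."""
    screen_w: int
    screen_h: int
    margin: float = 0.1   # hypothetical: outer 10% of the frame is ignored
    alpha: float = 0.5    # hypothetical smoothing factor in (0, 1]
    _x: Optional[float] = None
    _y: Optional[float] = None

    def update(self, nx: float, ny: float) -> Tuple[int, int]:
        # Rescale the active region [margin, 1 - margin] to [0, 1] and clamp,
        # so the cursor can reach the screen edges without the hand
        # leaving the camera frame.
        span = 1.0 - 2.0 * self.margin
        tx = min(max((nx - self.margin) / span, 0.0), 1.0)
        ty = min(max((ny - self.margin) / span, 0.0), 1.0)
        target_x = tx * (self.screen_w - 1)
        target_y = ty * (self.screen_h - 1)
        if self._x is None or self._y is None:
            # First observation: jump straight to the target.
            self._x, self._y = target_x, target_y
        else:
            # Exponential smoothing: move a fraction alpha toward the target.
            self._x += self.alpha * (target_x - self._x)
            self._y += self.alpha * (target_y - self._y)
        return round(self._x), round(self._y)
```

A lower `alpha` gives a steadier but slower cursor; a higher value follows the hand more closely at the cost of visible jitter.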

B. Objective

The main objective of the proposed AI-based virtual mouse system is to provide an alternative to the traditional mouse. A web camera captures hand gestures and fingertip positions, and the system processes these frames to perform mouse functions such as left click, right click, and scrolling.

The main goals of the research work are:

- To develop a virtual cursor control system that uses hand gestures to perform operations such as left click, right click, and cursor movement.

- To develop a virtual assistant that lets users give commands and access functions using either voice or hand gestures.

- To provide a voice-to-text converter that interprets spoken input and converts it into text.
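The first goal can be sketched as a lookup from recognized finger states to mouse actions. This is an illustration under stated assumptions, not the paper's design: the gesture table, the names `Action`, `classify`, and `GestureDebouncer`, and the three-frame hold are all hypothetical, and the finger states are assumed to come from an upstream hand detector.

```python
from enum import Enum
from typing import Dict, Optional, Sequence, Tuple


class Action(Enum):
    NONE = "none"
    MOVE = "move"
    LEFT_CLICK = "left_click"
    RIGHT_CLICK = "right_click"
    SCROLL = "scroll"


# Hypothetical mapping; key order is (thumb, index, middle, ring, pinky).
GESTURE_TABLE: Dict[Tuple[bool, ...], Action] = {
    (False, True, False, False, False): Action.MOVE,
    (True, True, False, False, False): Action.LEFT_CLICK,
    (False, True, False, False, True): Action.RIGHT_CLICK,
    (False, True, True, False, False): Action.SCROLL,
}


def classify(fingers_up: Sequence[bool]) -> Action:
    """Return the action for a finger-state pattern, or NONE if unknown."""
    return GESTURE_TABLE.get(tuple(fingers_up), Action.NONE)


class GestureDebouncer:
    """Fires an action once, after it is seen on hold_frames frames in a row."""

    def __init__(self, hold_frames: int = 3) -> None:
        self.hold_frames = hold_frames
        self._last: Optional[Action] = None
        self._count = 0

    def feed(self, action: Action) -> Optional[Action]:
        if action == self._last:
            self._count += 1
        else:
            self._last, self._count = action, 1
        # Fire exactly once, on the frame that completes the hold.
        if self._count == self.hold_frames and action is not Action.NONE:
            return action
        return None
```

Debouncing is one simple way to keep a single misdetected frame from triggering an unwanted click.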

II. RELATED WORK

Researchers have tried several ways to build a virtual mouse. Some systems recognized hand gestures from gloves, while others tracked coloured tips on the fingers. However, these methods were not very accurate. Gloves could reduce recognition accuracy, and some users may not want to wear them. Poor detection of the colour tips also reduced accuracy.

An early system required users to wear a DataGlove, but it could not support some gesture controls. A 2010 study used motion history images for hand gesture recognition, but it struggled with complex gestures.
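For readers unfamiliar with motion history images, the sketch below shows the standard update rule: a pixel that moved in the current frame is set to a maximum value `tau`, and every other pixel decays by one per frame, so recent motion appears brighter than older motion. This is the textbook formulation, not necessarily the variant used in the 2010 study; the function name `update_mhi` and the default values for `tau` and `diff_threshold` are assumptions.

```python
from typing import List

Grid = List[List[int]]


def update_mhi(mhi: Grid, prev_frame: Grid, frame: Grid,
               tau: int = 5, diff_threshold: int = 30) -> Grid:
    """Return the next motion history image from two grayscale frames."""
    updated = []
    for h_row, p_row, f_row in zip(mhi, prev_frame, frame):
        updated.append([
            # Motion detected by frame differencing: reset to tau.
            # Otherwise decay toward zero.
            tau if abs(f - p) > diff_threshold else max(0, h - 1)
            for h, p, f in zip(h_row, p_row, f_row)
        ])
    return updated
```

Because the image records only where and how recently motion occurred, gestures that revisit the same pixels overwrite their own history, which is consistent with the difficulty with complex gestures noted above.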

In 2013, another study required stored frames for skin pixel detection and hand segmentation. A paper in 2016 introduced a system for cursor control using hand gestures with different bands for different functions, relying on colours.
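Skin pixel detection of the kind mentioned above is often done with a fixed colour rule. The sketch below uses one widely cited RGB heuristic for uniform daylight; it is an assumption chosen for illustration and not the method of the 2013 study. The function names `is_skin_rgb` and `skin_mask` are hypothetical.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]


def is_skin_rgb(r: int, g: int, b: int) -> bool:
    """Classify one RGB pixel with a fixed-threshold daylight skin rule."""
    return (
        r > 95 and g > 40 and b > 20
        and max(r, g, b) - min(r, g, b) > 15   # enough colour spread
        and abs(r - g) > 15                    # red clearly separated from green
        and r > g and r > b                    # red is the dominant channel
    )


def skin_mask(image: List[List[Pixel]]) -> List[List[bool]]:
    """Return a boolean mask marking pixels classified as skin."""
    return [[is_skin_rgb(*px) for px in row] for row in image]
```

Fixed thresholds like these are sensitive to lighting and do not generalize well across skin tones, which is one reason colour-based approaches have limited accuracy.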

In 2018, a model for a virtual mouse using hand gestures was proposed, but it only performed a few functions.

A recent approach involved tracking hand landmarks using colours and implementing a virtual mouse with an optical flow algorithm. The process included user initialization, cursor movement, and click detection.

Another technique detected relative head movements and converted them into mouse movements. A related approach replaced the traditional mouse with hand gestures captured by a webcam for motion detection. That system moved the pointer to follow the detected hand and controlled simple mouse functions without pressing buttons or moving a physical mouse.

A. Challenges

- Hand Detection: For smooth operation, the system must reliably identify and track hands and distinguish them from the surrounding environment. However, fabric objects in the scene can make hands harder to observe and differentiate. Variations in the appearance of hands and of non-hand objects, as well as factors such as temperature and surface differences, may also contribute to detection errors.

- Hand Tracking: Hand tracking is dependable at short distances, especially when the hand is clearly visible to a single camera. As virtual reality brings hand input into daily activities, hand tracking is expected to become more reliable. However, tracking and visualization become harder in conditions of limited visibility.

- Inclusivity and Involvement: Hand and gesture tracking raises challenges associated with atypical embodiment and inclusivity. A crucial step in hand tracking is separating skin from the surrounding area, which allows the hand's movements and strength to be defined and visualized. A further potential issue, not explicitly addressed, is variation in skin colour.

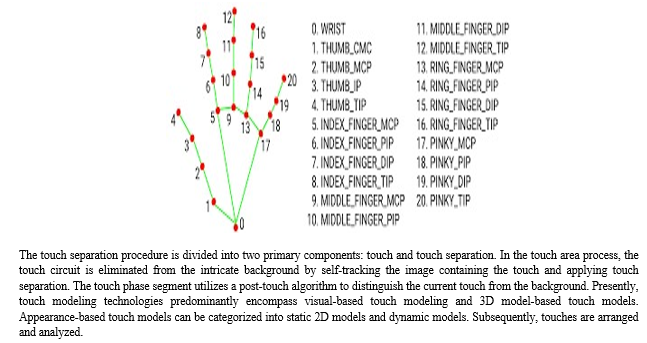

- Gesture Recognition Based on Computer Vision: Vision-based gesture recognition is currently the predominant recognition approach. Gesture images are captured by one or more cameras, and the acquired data is pre-processed, including noise removal and image enhancement. A segmentation algorithm then isolates the target gesture within the image. The gesture is distinguished from the background and interpreted through video processing and analysis, and the target gesture is finally identified by the recognition algorithm. Vision-based gesture recognition therefore comprises three key components: gesture detection, gesture analysis, and gesture recognition. The initial stage segments the input image to extract the gesture region.
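The pre-processing and segmentation stages described in the last item can be sketched on a small grayscale grid. This is a simplified illustration under stated assumptions, not a production pipeline: a 3x3 median filter stands in for noise removal, a fixed threshold stands in for the segmentation algorithm, and the function names and threshold value are hypothetical.

```python
from statistics import median
from typing import List, Optional, Tuple

Gray = List[List[int]]


def median_filter3(img: Gray) -> Gray:
    """Remove isolated noise pixels with a 3x3 median (borders left as-is)."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            window = [img[j][i]
                      for j in (y - 1, y, y + 1)
                      for i in (x - 1, x, x + 1)]
            out[y][x] = median(window)
    return out


def segment(img: Gray, threshold: int) -> Gray:
    """Binary mask: 1 where the pixel is brighter than the threshold."""
    return [[1 if v > threshold else 0 for v in row] for row in img]


def bounding_box(mask: Gray) -> Optional[Tuple[int, int, int, int]]:
    """Return (x_min, y_min, x_max, y_max) of the mask, or None if empty."""
    coords = [(x, y)
              for y, row in enumerate(mask)
              for x, v in enumerate(row) if v]
    if not coords:
        return None
    xs = [x for x, _ in coords]
    ys = [y for _, y in coords]
    return min(xs), min(ys), max(xs), max(ys)
```

In a full system the bounding box would be passed to the recognition stage, which classifies the gesture inside it.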

III. OVERVIEW

As technology advances, virtualization becomes increasingly prevalent. Speech recognition, for instance, plays a vital role in translating spoken language into text. This technology has the potential to replace traditional keyboards in the future. Similarly, eye tracking allows the control of the mouse pointer through eye movements, indicating a potential replacement for traditional mice.

Gestures, taking various forms such as hand images or pixel images, or any human-provided pose, can offer solutions that require less computational difficulty or power to operate devices. Companies are introducing different techniques to gather necessary information and data for recognizing hand gestures. Some models utilize special devices like data gloves and colour caps to create a comprehensive understanding of the gestures provided by users. These advancements signify a shift towards more intuitive and efficient human-computer interactions.

The proposed system offers the following advantages:

- Natural Interaction: Gesture control mimics natural hand movements, making it an intuitive way to interact with computers and devices.

- Touch-Free Operation: Gesture control eliminates the need for physical contact with input devices, which is useful where touch-free operation is essential, such as in medical settings and clean rooms, or for users with limited mobility.

- Creative and Artistic Applications: Gesture control is valuable in creative fields like digital art and design, as it enables more expressive and fluid input for tasks like drawing, sculpting, or 3D modeling.

- Novelty and Innovation: Gesture-controlled interfaces are often seen as cutting-edge and innovative, appealing to tech-savvy users and early adopters.

- Reduced Physical Fatigue: Gesture control can reduce physical fatigue associated with repetitive mouse movements and clicking, as it allows users to control the cursor and perform actions with minimal physical effort.

Conclusion

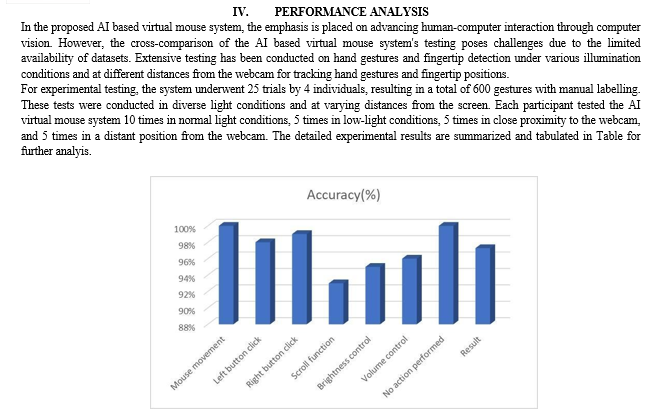

The primary objective of the AI virtual mouse system is to let users control the mouse pointer through hand gestures, eliminating the need to handle a physical device. The system captures and processes hand gestures and fingertip movements through a webcam or built-in camera. The proposed model is reported to achieve high accuracy, making it applicable in real-world scenarios such as reducing the spread of COVID-19 and removing the reliance on wearable devices. However, its limitations include a slight decrease in the accuracy of the right-click function and difficulty in clicking and dragging to select text. Future development will focus on addressing these issues through improved fingertip capture methods.

Gesture recognition facilitates effective interaction between humans and machines and plays a crucial role in developing alternative human-computer interaction methods. It allows for a more natural interface, with applications ranging from sign language recognition for deaf and speech-impaired users to robot control. Gesture recognition also finds applications in augmented reality, computer graphics, computer gaming, prosthetics, and biomedical instrumentation. The Digital Canvas, an extension of this system, is gaining popularity among artists: it enables the creation of 2D or 3D images using virtual mouse technology, where the hand serves as a brush and a virtual reality kit or monitor acts as the display. This technology can also aid patients without limb control and is used in modern gaming consoles for interactive games that track a person's motions as commands.

Future extensions of this work could focus on enhancing the system's ability to operate against complex backgrounds and under diverse lighting conditions. The goal is to create an effective user interface that covers all mouse functions. Research into advanced mathematical techniques for image processing and exploration of different hardware solutions could lead to more accurate hand detection. This project not only showcased various gesture operations but also highlighted the potential for simplifying user interactions with personal computers and hardware systems.


Copyright

Copyright © 2024 Ravi Kant, Divyansh Ruhela, Ashi Tyagi, Mayank Gupta, Kanak Singh, Ayush Tiwari. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET61942

Publish Date : 2024-05-11

ISSN : 2321-9653

Publisher Name : IJRASET
