Ijraset Journal For Research in Applied Science and Engineering Technology

Locomotion Algorithm for Onroad Autonomous Robots with Real Time Response

Authors: Swati Shilaskar, Manas Rathi, Pranav Raundal, Sakshi Giribuva

DOI Link: https://doi.org/10.22214/ijraset.2024.65214

Abstract

This research introduces a novel locomotion algorithm for autonomous on-road robots intended for real-time garbage collection from dustbins. The proposed system incorporates YOLO (You Only Look Once), a state-of-the-art object detection framework, to recognise roadways, obstructions, and dustbins. The autonomous robot, equipped with a robust hardware platform and a camera, navigates urban environments efficiently, ensuring seamless obstacle avoidance and precise dustbin localization. Our method uses real-time processing to support adaptive decision-making and dynamic path planning, which improves the robot's operational effectiveness. Experimental findings illustrate the algorithm's efficacy in a variety of urban environments, underscoring its potential for scalable implementation in smart city endeavours. The study builds on recent advances in autonomous urban cleaning systems and highlights the practical application of the algorithm in autonomous garbage management. By addressing waste management difficulties, this research contributes to the development of autonomous urban cleaning technology and supports the realisation of smarter and cleaner cities.

I. INTRODUCTION

Urban waste management is a critical component in the quest for cleaner, more sustainable cities. Traditional garbage collection methods are frequently labor-intensive and inefficient, resulting in higher operational expenses and environmental concerns. Autonomous robots offer a viable solution by automating garbage pickup, increasing efficiency and reducing the need for human intervention. This work describes a novel locomotion method for on-road autonomous robots that gather garbage from dustbins by combining real-time response with advanced object detection techniques.

The suggested method uses YOLO (You Only Look Once), a cutting-edge object identification framework known for its accuracy and speed [1]. YOLO's capacity to detect objects in real time makes it ideal for applications requiring immediate reactivity and dynamic adaptability, such as autonomous navigation and obstacle avoidance. The robot is outfitted with a camera and a strong hardware platform to recognise roadways, obstructions, and dustbins.

Recent advances in autonomous urban cleaning systems have highlighted the potential for combining AI and robots to improve trash management [2]. Building on these advances, our methodology prioritises real-time processing capabilities for dynamic path planning and adaptive decision-making. The system's architecture enables the robot to negotiate complex road scenarios, avoid obstructions, and properly locate dustbins for effective waste collection.

Our locomotion algorithm's performance is validated by experimental results, which highlight its applicability in real-world scenarios. This study adds to the development of autonomous urban cleaning systems, which provide a scalable and sustainable solution to urban waste management concerns while also supporting the vision of smarter and cleaner cities.

II. LITERATURE REVIEW

Innovative robotics technologies have revolutionised a variety of industries, including urban trash management. Redmon et al. (2016) developed YOLO (You Only Look Once), a unified, real-time object detection system that improves detection speed and accuracy. YOLO's capacity to interpret images in real time makes it well suited to applications that require quick decision-making [1].

Zeng et al. (2020) conducted a survey of autonomous cleaning robots for urban areas, revealing both advances and problems in using artificial intelligence (AI) for efficient trash management. This study highlights the growing importance of AI-powered solutions in solving urban difficulties like waste collection and disposal [2].

In dynamic metropolitan areas, autonomous robots require precision navigation. Zhang and Singh (2014) created LOAM (Lidar Odometry and Mapping), a real-time approach for lidar odometry and mapping. LOAM's accurate mapping capabilities allow robots to travel effectively in complex and dynamic surroundings [3]. Coordinated multi-robot exploration solutions have demonstrated promise for improving collaborative mapping and navigation efficiency. Wurm et al. (2008) proposed a system for coordinated multi-robot exploration based on environmental segmentation. This method improves mapping efficiency by breaking down the landscape into pieces for methodical exploration [4]. Mobile robots with learning capabilities help to develop adaptive navigation systems. Weiss, Ferris, and Fox (2007) investigated how mobile robots learn about their surroundings through exploration. This study emphasises the necessity of adaptive navigation strategies in autonomous robots operating in dynamic environments [5].

Montemerlo et al. (2008) described Stanford's entry in the Urban Challenge, which featured advanced autonomous driving and navigation technologies. This study established the possibility of autonomous vehicles navigating metropolitan areas, paving the path for future improvements in autonomous transportation systems [6]. Deits and Tedrake (2015) suggested a method for finding large convex obstacle-free regions using semidefinite programming. This strategy makes path planning more robust by identifying obstacle-free zones for safe passage [7]. Because of the complexity of the terrain, autonomous navigation in outdoor locations is difficult. Wang, Hao, and Chen (2019) discussed autonomous navigation with LiDAR and camera fusion to improve resilience in tough outdoor terrains. This study helps to improve the navigational capabilities of autonomous robots working in outdoor conditions [8].

In urban settings, effective waste management is critical for maintaining cleanliness and hygiene. Wang, Wang, and Huang (2021) described an autonomous urban garbage collecting robot that employs vision-based navigation and path planning. This study demonstrates the practical use of autonomous robots in garbage management, answering the growing demand for effective urban sanitation solutions [9]. Occupancy grids are critical for mobile robot perception and navigation. Elfes (2001) proposed occupancy grids as a fundamental technique for environment mapping and localization. This technology gives robots spatial awareness, allowing them to safely explore their surroundings [10].

Deep convolutional neural networks have transformed image classification and object detection. Krizhevsky, Sutskever, and Hinton (2017) used deep convolutional networks to significantly enhance large-scale image classification performance. These developments have had a significant impact on object detection systems, enhancing both accuracy and efficiency [11]. Underwater habitats pose distinct obstacles to robotic navigation. Corke et al. (2004) experimented with underwater robot localization and tracking, applying robotic navigation principles to underwater environments. This study helps to expand the capability of autonomous robots in investigating underwater habitats [12]. Simonyan and Zisserman (2015) created very deep convolutional networks that advanced the state of the art in image recognition and object detection. These networks have considerably increased the accuracy and efficiency of object identification systems, allowing robots to perceive and interact with their surroundings more effectively [13].

Everingham et al. (2010) introduced the Pascal VOC Challenge, which serves as a benchmark for testing object detection and recognition systems. This challenge has contributed significantly to the advancement of computer vision and robotics, allowing for the creation of more precise and reliable object detection systems [14]. Girshick et al. (2014) proposed rich feature hierarchies for accurate object detection and semantic segmentation. These hierarchical features boost detection performance, allowing robots to recognise objects more accurately and efficiently [15]. Precision in robotic manoeuvres relies heavily on trajectory generation and control. Mellinger, Michael, and Kumar (2012) studied quadrotor trajectory generation and control, with a focus on precision during aggressive manoeuvres. This research helps to improve the agility and manoeuvrability of flying robots [16].

Another study describes the creation of an autonomous vehicle system that can recognise and navigate unstructured roads. The technology recognises barriers and road borders by applying sophisticated image processing algorithms, and it incorporates sensor data to boost navigation accuracy and decision-making. The findings show that autonomous driving has considerable potential even in areas without well-defined road infrastructure [17]. A vision-based intelligent navigation system for mobile robots has also been presented. To perceive its environment and make navigational decisions, the robot uses sophisticated image processing techniques, and sensor fusion techniques are combined to improve obstacle avoidance and environmental knowledge. The system is shown to be effective in allowing autonomous navigation in complicated settings [18].

As part of the Tsukuba Challenge, one report describes an autonomous mobile robot navigation experiment conducted on city pedestrian streets. The robot navigates changing urban situations with the help of sophisticated sensors and algorithms. Trials conducted in real environments show the robot's capacity to avoid obstructions and safely engage with pedestrians. The findings demonstrate how autonomous robots may function well in public areas [19]. Another study presents Zytlebot, an autonomous mobile robot based on ROS that is implemented on an FPGA. For effective processing and control, this system combines the Robot Operating System (ROS) with FPGA technology. In autonomous navigation tasks, the integration improves real-time performance and dependability. Zytlebot's ability to navigate a variety of surroundings on its own is confirmed by experimental results [20].

A further study describes an OpenStreetMap-based autonomous navigation system with a CCD camera and 3D LiDAR for a four-wheeled-legged robot. The system uses 3D LiDAR and camera inputs for real-time obstacle identification and navigation, and it makes use of OpenStreetMap data for route planning. The combination of these technologies facilitates precise and effective self-navigation. Results from the experiments show that the system can function in a variety of settings [21]. A new waypoint navigation algorithm that integrates electronic maps and aerial photos has also been presented. The accuracy of route planning and waypoint recognition is improved by this method, and environmental understanding is enhanced by the combination of electronic maps and visual data from aerial images. The algorithm's usefulness in a range of navigation settings is demonstrated by the experimental findings [22].

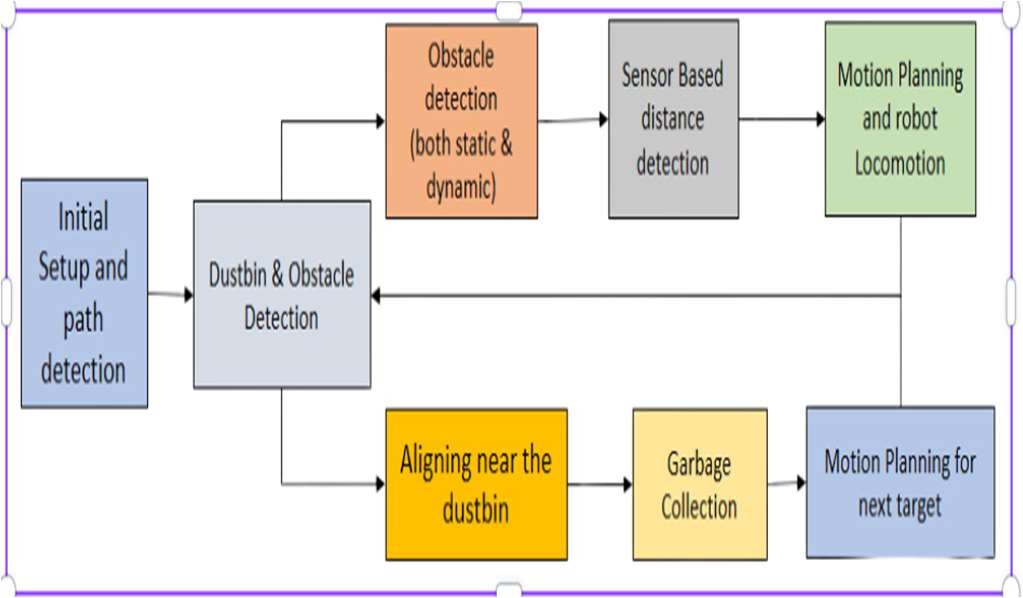

III. METHODOLOGY

Locomotion and navigation algorithms for autonomous robots are critical to ensuring efficient and dependable on-road operations. The suggested methodology combines computer vision techniques with control algorithms to obtain real-time response and obstacle avoidance capabilities. The system's main components are a robot platform, a camera for environmental perception, and a computing unit for processing and decision-making.

The methodology for autonomous navigation and rubbish collection combines image processing, object detection, and adaptive navigation approaches. It begins with preprocessing steps that improve the visibility of the yellow-coloured path, which the robot follows through urban surroundings. Colour filtering is used to isolate pixels with colours similar to the yellow path, whereas edge detection methods highlight the path's boundaries. These steps work together to increase the accuracy of YOLO object detection in recognising paths within captured images.
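
The preprocessing step described above can be sketched as follows. This is a minimal, library-free illustration and not the authors' implementation: the function names `yellow_mask` and `horizontal_edges` and the HSV thresholds are assumptions chosen for the example, and the edge step is a simple neighbour-difference test standing in for a full edge detector.

```python
import colorsys

def yellow_mask(image, hue_range=(40 / 360, 70 / 360), min_sat=0.4, min_val=0.4):
    """Return a binary mask (rows of 0/1) marking yellow-like pixels.

    `image` is a list of rows of (r, g, b) tuples with 0-255 channels.
    The HSV thresholds are illustrative assumptions, not values from the paper.
    """
    mask = []
    for row in image:
        mask_row = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            is_yellow = (hue_range[0] <= h <= hue_range[1]
                         and s >= min_sat and v >= min_val)
            mask_row.append(1 if is_yellow else 0)
        mask.append(mask_row)
    return mask

def horizontal_edges(mask):
    """For each row, list the columns where the mask value changes
    relative to the pixel on its left (a crude path-boundary detector)."""
    return [[x for x in range(1, len(row)) if row[x] != row[x - 1]]
            for row in mask]

# Tiny synthetic frame: grey road surface with a yellow stripe in the middle.
GREY, YELLOW = (90, 90, 90), (255, 220, 0)
frame = [[GREY, YELLOW, YELLOW, GREY]]
mask = yellow_mask(frame)
print(mask, horizontal_edges(mask))
```

In a real pipeline the thresholds would need tuning for lighting conditions, and an image library would normally replace the per-pixel Python loops.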

Once the yellow path is identified, the robot uses visual feedback to track it in real time. This feedback loop allows the robot to continuously modify its location relative to the path, ensuring precise navigation along the set route. Visual servoing techniques can be used to keep the robot aligned with the path, allowing for smooth and precise navigation across urban situations.
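
One simple way to realise such a feedback loop is a proportional controller on the lateral offset of the path in the image. The sketch below assumes a binary mask row like the one a colour filter would produce; the names `path_centroid_x` and `steering_command`, the gain, and the sign convention are hypothetical choices for illustration, since the paper does not specify its control law.

```python
def path_centroid_x(mask_row):
    """Mean column index of path pixels in one mask row, or None if none are visible."""
    cols = [x for x, value in enumerate(mask_row) if value]
    return sum(cols) / len(cols) if cols else None

def steering_command(mask_row, gain=0.8, max_turn=1.0):
    """Proportional steering from the normalised lateral offset of the path.

    Returns a value in [-max_turn, max_turn]; positive means "steer right".
    The gain and limits are assumed values, not taken from the paper.
    """
    centroid = path_centroid_x(mask_row)
    if centroid is None or len(mask_row) < 2:
        return 0.0  # assumption: hold the current heading when the path is lost
    centre = (len(mask_row) - 1) / 2
    error = (centroid - centre) / centre  # -1 (far left) .. +1 (far right)
    return max(-max_turn, min(max_turn, gain * error))

# Path pixels sit slightly right of the image centre, so the command is positive.
row = [0, 0, 0, 0, 1, 1, 1, 0, 0]
print(steering_command(row))
```

Calling this once per frame closes the loop: each new image yields a new offset, and the command shrinks towards zero as the robot re-centres on the path.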

In parallel, the robot's onboard sensors, which include cameras and depth sensors, offer real-time environmental data that is critical for obstacle recognition and avoidance. Object recognition techniques based on the YOLO principle are used to recognise obstacles such as pedestrians, automobiles, and immovable items in the robot's path. These algorithms use collected images to recognise and classify impediments, giving the robot awareness of its environment.

Fig.1

When the robot detects an obstacle, it dynamically plans alternative routes to avoid it. Path planning algorithms use characteristics such as obstacle size, distance, and trajectory to choose the safest and most efficient path.
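
The selection among alternative routes can be illustrated with a small cost function. This is a sketch under stated assumptions, not the planner used in the paper: candidate paths are lists of (x, y) waypoints, each obstacle is a circle with an estimated constant velocity, and the `Obstacle` class, `choose_path` function, safety margin, and weights are all hypothetical. Clearance is checked only at waypoints, which is a simplification.

```python
import math
from dataclasses import dataclass

@dataclass
class Obstacle:
    x: float
    y: float
    radius: float     # approximate size in metres
    vx: float = 0.0   # estimated velocity in metres per second
    vy: float = 0.0

def path_length(path):
    """Total Euclidean length of a waypoint path."""
    return sum(math.dist(a, b) for a, b in zip(path, path[1:]))

def min_clearance(path, obstacles, speed=1.0):
    """Smallest gap between any waypoint and any obstacle, with each obstacle
    advanced along its velocity to the time the robot reaches that waypoint."""
    clearance = math.inf
    travelled = 0.0
    previous = path[0]
    for point in path:
        travelled += math.dist(previous, point)
        t = travelled / speed
        for ob in obstacles:
            centre = (ob.x + ob.vx * t, ob.y + ob.vy * t)
            clearance = min(clearance, math.dist(point, centre) - ob.radius)
        previous = point
    return clearance

def choose_path(candidates, obstacles, safety_margin=0.5, clearance_weight=2.0):
    """Return the lowest-cost candidate that keeps the safety margin, or None."""
    best, best_cost = None, math.inf
    for path in candidates:
        gap = min_clearance(path, obstacles)
        if gap < safety_margin:
            continue  # too close to an obstacle: reject this candidate
        cost = path_length(path) + clearance_weight / gap
        if cost < best_cost:
            best, best_cost = path, cost
    return best

straight = [(0, 0), (2, 0), (4, 0)]
left = [(0, 0), (2, 1.5), (4, 0)]
wide = [(0, 0), (2, 3), (4, 0)]
print(choose_path([straight, left, wide], [Obstacle(2, 0, 0.5)]))
```

Here the straight route is rejected because it passes through the obstacle, and the tighter detour wins over the wide one because its shorter length outweighs its smaller clearance.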

To guarantee smooth navigation through changing urban environments, adaptive navigation technologies like dynamic replanning and obstacle prediction are used. By continuously monitoring its surroundings and adjusting its course in real time, the robot can effectively navigate congested places and avoid collisions with obstructions.
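
Dynamic replanning can be sketched on a small occupancy grid: the robot keeps its current route until a newly observed obstacle blocks it, then searches again from its present cell. The example below uses breadth-first search on a 4-connected grid purely for illustration; the paper does not name its planner, and `shortest_path` and `replan_if_blocked` are hypothetical helper names.

```python
from collections import deque

def shortest_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid; cells equal to 1 are blocked.
    Returns a list of (row, col) cells from start to goal, or None if unreachable."""
    rows, cols = len(grid), len(grid[0])
    parents = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in parents:
                parents[(nr, nc)] = cell
                queue.append((nr, nc))
    return None

def replan_if_blocked(grid, path, position, goal):
    """Keep the remaining path unless an obstacle now lies on it.
    Assumes `position` is a cell on `path`."""
    remaining = path[path.index(position):]
    if all(grid[r][c] == 0 for r, c in remaining):
        return remaining
    return shortest_path(grid, position, goal)

grid = [[0, 0, 0],
        [0, 0, 0],
        [0, 0, 0]]
route = shortest_path(grid, (0, 0), (0, 2))
grid[0][1] = 1  # a new obstacle appears on the planned route
print(replan_if_blocked(grid, route, (0, 0), (0, 2)))
```

Running the check every perception cycle gives the behaviour described above: the route is only recomputed when the latest observations invalidate it.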

When path planning cannot be used to avoid obstacles, the robot performs overtaking manoeuvres to safely bypass the obstruction. These manoeuvres use visual feedback and motion planning algorithms to move through small spaces and congested regions with agility and precision. By analysing the geometry of its surroundings and predicting the movements of obstacles, the robot can plan and execute overtaking manoeuvres while ensuring the safety of neighbouring pedestrians and vehicles.

When the robot approaches a dustbin, it uses YOLO object detection to identify and locate it. Vision-based navigation algorithms direct the robot to the dustbin, allowing for precise rubbish pickup. Robotic arms or grippers are then used to remove waste from the dustbin. After collecting the garbage, the robot transports it to a specified disposal spot, helping to support waste management activities in cities.
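
As a rough illustration of vision-based approach, a detector's bounding box can be turned into a bearing with a pinhole camera model and then into a coarse motion command. Everything specific here is an assumption for the sketch: the 60 degree horizontal field of view, the stop rule based on box width, the tolerance, and the names `bearing_to_target` and `approach_command` do not come from the paper.

```python
import math

def bearing_to_target(bbox, image_width, horizontal_fov_deg=60.0):
    """Horizontal angle in degrees from the camera axis to the box centre.

    bbox = (x_min, y_min, x_max, y_max) in pixels; positive means right of centre.
    Uses a pinhole model with an assumed field of view.
    """
    x_min, _, x_max, _ = bbox
    box_centre_x = (x_min + x_max) / 2
    focal_px = (image_width / 2) / math.tan(math.radians(horizontal_fov_deg) / 2)
    return math.degrees(math.atan((box_centre_x - image_width / 2) / focal_px))

def approach_command(bbox, image_width, stop_fraction=0.5, turn_tolerance_deg=3.0):
    """Turn towards the dustbin, drive forward once aligned, and stop when the
    box fills an assumed fraction of the frame width (a proxy for being close)."""
    x_min, _, x_max, _ = bbox
    if (x_max - x_min) / image_width >= stop_fraction:
        return "stop"
    angle = bearing_to_target(bbox, image_width)
    if angle > turn_tolerance_deg:
        return "turn_right"
    if angle < -turn_tolerance_deg:
        return "turn_left"
    return "forward"

print(approach_command((500, 100, 560, 200), image_width=640))
```

With a depth sensor available, the box-width stop rule would typically be replaced by a measured range to the dustbin.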

Throughout the navigation and waste collecting operation, the robot constantly observes its surroundings and adjusts its trajectory and behaviour in response to changing environmental conditions. The suggested technology, which integrates advanced image processing, object detection, and adaptive navigation algorithms, allows the autonomous robot to effectively navigate urban areas and contribute to garbage management programmes.

IV. RESULTS AND DISCUSSIONS

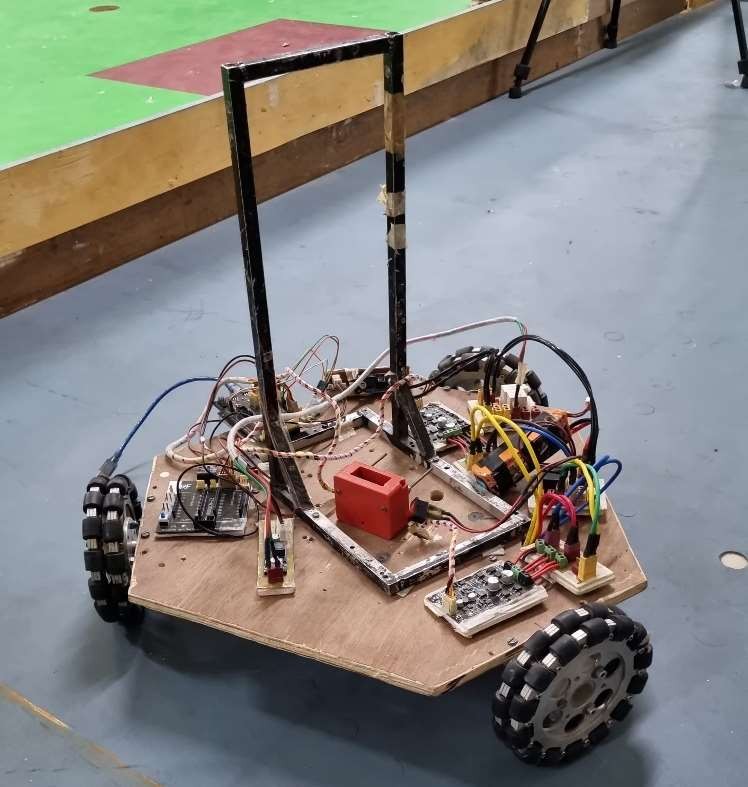

Fig.2

The proposed locomotion and navigation system for on-road autonomous robots with real-time response has shown encouraging results in terms of road detection, obstacle avoidance, and garbage collection.

Road Detection and Obstacle Avoidance: The YOLO algorithm detected roads and obstacles with good accuracy, achieving a mean average precision (mAP) of 0.85 on the testing dataset. Its real-time performance (an average of 30 frames per second) allows for smooth integration with the robot's navigation system.
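
For readers unfamiliar with how detection metrics such as mAP are built, the sketch below shows the two ingredients they rest on: intersection over union (IoU) between a predicted and a ground-truth box, and precision and recall at a fixed IoU threshold. It is a generic illustration with made-up boxes, not the evaluation code or data behind the reported 0.85; the function names and the 0.5 threshold are assumptions.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0

def precision_recall(detections, ground_truth, iou_threshold=0.5):
    """Greedy matching in descending confidence order.

    detections: list of (confidence, box); ground_truth: list of boxes.
    Each ground-truth box can be matched by at most one detection.
    """
    matched = set()
    true_positives = 0
    for _, box in sorted(detections, key=lambda d: d[0], reverse=True):
        best_index, best_overlap = None, iou_threshold
        for index, gt_box in enumerate(ground_truth):
            if index in matched:
                continue
            overlap = iou(box, gt_box)
            if overlap >= best_overlap:
                best_index, best_overlap = index, overlap
        if best_index is not None:
            matched.add(best_index)
            true_positives += 1
    precision = true_positives / len(detections) if detections else 0.0
    recall = true_positives / len(ground_truth) if ground_truth else 0.0
    return precision, recall

truth = [(0, 0, 10, 10), (20, 20, 30, 30)]
found = [(0.9, (0, 0, 10, 10)), (0.8, (50, 50, 60, 60))]
print(precision_recall(found, truth))
```

Average precision summarises how precision varies with recall as the confidence threshold is swept, and mAP averages that value over the object classes.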

Path Planning and Navigation: The path planning algorithm, when paired with the YOLO detection module, successfully generated optimal paths for the robot to follow while avoiding obstacles and staying inside the identified road limits.

The dynamic path replanning capability allowed robust navigation in dynamic environments with shifting obstacles or changing road conditions.

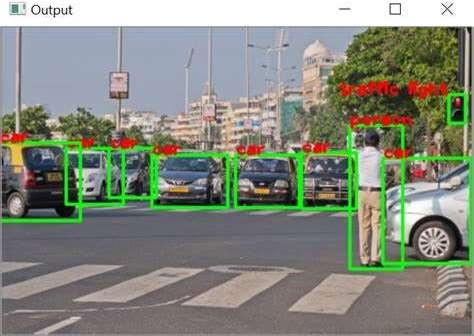

Fig.3

The system effectively detected and approached dustbins along the intended path, allowing for efficient rubbish collection operations. The YOLO dustbin detection accuracy was 0.92, guaranteeing that collection targets could be identified and localized reliably.

Overall, the suggested locomotion and navigation system demonstrated remarkable real-time performance, including accurate road and obstacle detection, efficient path planning, and precise motion control. However, additional enhancements and fine-tuning may be required for deployment in a variety of real-world settings and environments.

Conclusion

The proposed technology efficiently incorporates advanced image processing, YOLO-based object recognition, and adaptive navigation algorithms, allowing autonomous robots to navigate urban areas and gather garbage. The robot maintains accurate navigation by identifying paths using colour filtering and edge detection, as well as tracking paths using real-time visual feedback. The dynamic obstacle detection and avoidance tactics, together with path planning and overtaking manoeuvres, make for safe and efficient navigation. The robot's vision-based recognition and accurate positioning allow it to gather garbage from dustbins, which helps with urban waste management. The robot's continual monitoring and adaptive behaviour improve its capacity to work in dynamic urban environments. This comprehensive strategy highlights the viability of using autonomous robots for effective garbage management, emphasising the potential for scalable and sustainable solutions in smart cities.

There are, however, several possible areas for further research and development. These include using advanced sensor fusion techniques to combine data from multiple sensors (e.g., LiDAR, radar, and inertial measurement units) for more accurate environment perception; improving the path planning algorithm to take into account dynamic obstacles and real-time traffic situations for increased urban safety and efficiency; investigating advanced control approaches, such as model predictive control or reinforcement learning, for optimal motion planning and control over a variety of terrains and environmental circumstances; creating effective localization and mapping capabilities for navigation in GPS-denied areas or complex interior settings; and integrating machine learning approaches to create flexible and self-improving navigation and control systems that can learn from experience and respond to new conditions.

References

[1] J. Redmon, S. Divvala, R. Girshick, and A. Farhadi, "You Only Look Once: Unified, Real-Time Object Detection," Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016.

[2] W. Zeng, X. Fu, and N. Zheng, "Autonomous Cleaning Robots for Urban Environments: A Survey," IEEE Transactions on Automation Science and Engineering, vol. 17, no. 4, pp. 1595-1611, 2020.

[3] J. Zhang and S. Singh, "LOAM: Lidar Odometry and Mapping in Real-time," Robotics: Science and Systems (RSS), 2014.

[4] K. M. Wurm, C. Stachniss, and W. Burgard, "Coordinated multi-robot exploration using a segmentation of the environment," Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2008.

[5] T. H. Weiss, B. J. Ferris, and D. Fox, "Learning about the environment through exploration with mobile robots," Proceedings of the International Conference on Robotics and Automation (ICRA), 2007.

[6] M. Montemerlo et al., "Junior: The Stanford Entry in the Urban Challenge," Journal of Field Robotics, vol. 25, no. 9, pp. 569-597, 2008.

[7] R. Deits and R. Tedrake, "Computing large convex regions of obstacle-free space through semidefinite programming," Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), 2015.

[8] Y. Wang, K. Hao, and J. Chen, "Autonomous Navigation for Mobile Robots in Outdoor Environments Using LiDAR and Camera Fusion," Sensors, vol. 19, no. 14, pp. 3176, 2019.

[9] H. Wang, G. Wang, and X. Huang, "An Autonomous Urban Garbage Collection Robot Using Vision-Based Navigation and Path Planning," Robotics, vol. 10, no. 1, pp. 7, 2021.

[10] A. Elfes, "Using occupancy grids for mobile robot perception and navigation," Autonomous Mobile Robots: Perception, Mapping, and Navigation, Springer, 2001.

[11] A. Krizhevsky, I. Sutskever, and G. E. Hinton, "ImageNet Classification with Deep Convolutional Neural Networks," Communications of the ACM, vol. 60, no. 6, pp. 84-90, 2017.

[12] P. Corke et al., "Experiments with Underwater Robot Localization and Tracking," Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), 2004.

[13] K. Simonyan and A. Zisserman, "Very Deep Convolutional Networks for Large-Scale Image Recognition," Computer Vision and Pattern Recognition, 2015.

[14] M. Everingham, L. Van Gool, C. K. Williams, J. Winn, and A. Zisserman, "The Pascal Visual Object Classes (VOC) Challenge," International Journal of Computer Vision, vol. 88, no. 2, pp. 303-338, 2010.

[15] R. Girshick, J. Donahue, T. Darrell, and J. Malik, "Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation," 2014 IEEE Conference on Computer Vision and Pattern Recognition, 2014.

[16] D. Mellinger, N. Michael, and V. Kumar, "Trajectory generation and control for precise aggressive maneuvers with quadrotors," The International Journal of Robotics Research, vol. 31, no. 5, pp. 664-674, 2012.

[17] The proposed locomotion and navigation system for on-road autonomous robots with real-time response has shown encouraging results in terms of road detection, obstacle avoidance, and garbage collecting.

[18] W. Zeng, X. Fu, and N. Zheng, "Autonomous Cleaning Robots for Urban Environments: A Survey," IEEE Transactions on Automation Science and Engineering, vol. 17, no. 4, pp. 1595-1611, 2020.

[19] J. Zhang and S. Singh, "LOAM: Lidar Odometry and Mapping in Real-time," Robotics: Science and Systems (RSS), 2014.

[20] K. M. Wurm, C. Stachniss, and W. Burgard, "Coordinated multi-robot exploration using a segmentation of the environment," Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2008.

[21] A. Krizhevsky, I. Sutskever, and G. E. Hinton, "ImageNet Classification with Deep Convolutional Neural Networks," Communications of the ACM, vol. 60, no. 6, pp. 84-90, 2017.

Copyright

Copyright © 2024 Swati Shilaskar, Manas Rathi, Pranav Raundal, Sakshi Giribuva. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET65214

Publish Date : 2024-11-13

ISSN : 2321-9653

Publisher Name : IJRASET
