Ijraset Journal For Research in Applied Science and Engineering Technology

A Systematic Literature Review on The Role of LSTM Networks in Capturing Temporal Dependencies in Data Mining Algorithms

Authors: Kunal P. Raghuvanshi, Achal B. Pande, Nilesh S. Borchate, Rahul S. Ingle

DOI Link: https://doi.org/10.22214/ijraset.2024.64761

Abstract

Data mining plays a crucial role in extracting meaningful insights from large and complex data sets, with broad applications in sectors like finance, health care, and market analysis. Traditional techniques, such as classification, clustering, association rule mining, regression, and anomaly detection, are effective for analyzing structured data but struggle with sequential data due to the challenges of modeling temporal dependencies. Long Short-Term Memory (LSTM) networks, a specialized form of Recurrent Neural Networks (RNNs), provide a solution to these challenges. By incorporating memory cells and gating mechanisms, LSTMs effectively manage long-term dependencies and address issues like vanishing and exploding gradients. This paper reviews the impact of LSTM networks on data mining, analyzing over 60 key publications. By synthesizing concepts and recent advancements, the review underscores how LSTMs enhance the ability of data mining algorithms to capture and predict temporal patterns, reflecting current research trends and innovations.

I. INTRODUCTION

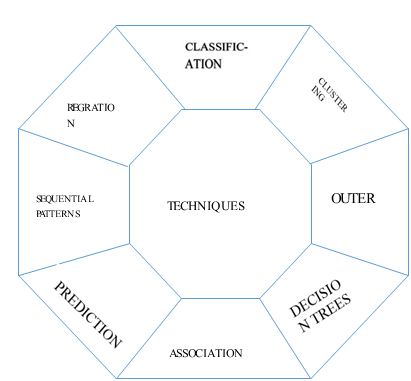

In today's data-centric landscape, data mining plays a vital role in uncovering valuable insights from large data sets, influencing sectors such as finance, health care, and market research. Conventional data mining techniques (including classification, clustering, association rule mining, regression analysis, and anomaly detection) have been widely used to explore structured data and identify hidden patterns.

Despite their usefulness, these methods face challenges when dealing with temporal data, where capturing and predicting time-based dependencies is essential for effective analysis. Recent advancements in Long Short-Term Memory (LSTM) networks have significantly enhanced their potential. Innovations such as attention mechanisms, Transformer architectures, and hybrid models that combine LSTM with other neural networks have emerged.

These advancements allow for better handling of complex temporal dependencies, improving the efficiency of time-series forecasting, natural language processing, and dynamic pattern recognition. These developments have opened the door to novel applications by improving LSTM's ability to manage intricate temporal relationships more effectively. Long Short-Term Memory (LSTM) networks, introduced by Hochreiter and Schmidhuber in 1997, were developed to overcome the limitations of traditional Recurrent Neural Networks (RNNs).

LSTMs are designed to capture long-term dependencies in sequential data by using a more advanced architecture. This architecture incorporates memory cells along with three types of gates: input, forget, and output gates. These gates regulate the flow of information, helping to address issues like vanishing and exploding gradients, and improving the network's ability to model sequences over time.

This paper presents a comprehensive review of more than 60 key publications on the use of LSTM networks in data mining. By combining conventional techniques with the latest developments, the review provides a detailed assessment of how LSTM networks improve data mining algorithms, particularly in capturing and predicting temporal dependencies. It highlights the advancements and trends in the field, demonstrating the significant impact of LSTM models on enhancing the accuracy and effectiveness of data mining processes.
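For reference, the gate mechanism described in this introduction is commonly written as the update equations below. This is the standard textbook formulation, not notation taken from the reviewed papers: the symbols $W$, $U$, and $b$ (input weights, recurrent weights, and biases), $\sigma$ (logistic sigmoid), and $\odot$ (element-wise product) are assumptions introduced here for illustration.

```latex
\begin{aligned}
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) && \text{(forget gate)} \\
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) && \text{(input gate)} \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) && \text{(output gate)} \\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c) && \text{(candidate memory)} \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t && \text{(memory cell update)} \\
h_t &= o_t \odot \tanh(c_t) && \text{(hidden state)}
\end{aligned}
```

The additive form of the memory cell update is what lets information, and gradients during training, pass across many time steps when the forget gate stays close to 1.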

Fig. 1. Overview of Traditional Data Mining Techniques

II. LITERATURE REVIEW

The reviewed studies show LSTM and hybrid models excelling in fields such as stock market prediction, suggestion mining, and network intrusion detection, outperforming traditional methods. Future improvements focus on optimizing these models with advanced techniques such as attention mechanisms and deep learning for better accuracy.

| Sr. No. | Author Name | Conclusion | Source | Year |
|---|---|---|---|---|
| 1 | Van-Dai Vuong | The paper presents a method for integrating Bayesian LSTMs with state-space models (SSMs) for improved time-series forecasting. | Elsevier [1] | 2024 |
| 2 | Ruixuan Zheng, Yanping Bao, L. Zhao, Lidong Xing | Significant differences in alloy yields based on raw material conditions highlight the inadequacy of single models for yield prediction. | Elsevier [2] | 2023 |
| 3 | Mobarak Abumohsen, Amani Yousef Owda, Majdi Owda, Ahmad Abumihsan | The hybrid model provides accurate solar power forecasts, optimizing resource utilization for power systems. | Elsevier [3] | 2024 |
| 4 | Mengmeng Li, Xiaomin Feng, Mariana Belgiu | The PST-LSTM model effectively extracts tobacco planting areas in smallholder farms using time-series SAR images. | Elsevier [4] | 2024 |
| 5 | U. B. Mahadevaswamy, Swathi P | The Bidirectional LSTM model shows higher accuracy in predicting user sentiment from text reviews. | ScienceDirect [5] | 2023 |
| 6 | Haitao Wang, Fangbing Li | The LSTM-GAT model effectively captures word order and syntactic information, improving text classification. | Taylor & Francis [6] | 2022 |
| 7 | Madhusmita Khuntia, D. Gupta | Combining GloVe with LSTM optimally classifies news headlines, though data quality and training can affect results. | ScienceDirect [7] | 2023 |
| 8 | Regina Ofori-Boateng, Magaly Aceves-Martins | Bi-LSTM with attention mechanisms improves abstract text classification for systematic literature reviews. | ScienceDirect [8] | 2023 |
| 9 | Arpita Maharatha, Ratnakar Das, Jibitesh Mishra, Soumya Ranjan Nayak, Srinivas Aluvala | The study finds that stacked BiLSTM outperforms vanilla LSTM and CNN-LSTM for temperature prediction. | ScienceDirect [9] | 2024 |
| 10 | Siji Rani S, Shilpa P, Aswin G Menon | The modified LSTM framework achieves high accuracy in drug recommendation based on patient symptoms. | ScienceDirect [10] | 2024 |
| 11 | Xin Hu, Keyi Li, Jingfu Li, Taotao Zhong, Weinong Wu, Xi Zhang, Wenjiang Feng | The DM-OGA-LSTM model improves electricity consumption forecasting by capturing time-related and industry-specific factors. | ScienceDirect [11] | 2021 |
| 12 | Bo Xu, Cui Li, Huipeng Li, Ruchun Ding | The BiD-LSTM model, optimized using FOA, effectively diagnoses open circuit faults in MMC systems. | ScienceDirect [12] | 2022 |
| 13 | Davi Guimaraes da Silva, Anderson Alvarenga de Moura Meneses | Bi-LSTM outperforms LSTM in predicting power consumption time series, though with longer training times. | Elsevier [13] | 2023 |
| 14 | Khursheed Aurangzeb, Syed Irtaza Haider, Musaed Alhussein | The Time2Vec-Bi-LSTM model shows strong accuracy in individual household energy consumption forecasting. | Elsevier [14] | 2024 |
| 15 | Md Maruf Hossain, Md Shahin Ali, Md Mahfuz Ahmed | Applies a hybrid CNN-LSTM model with explainable AI to cardiovascular disease identification. | Elsevier [15] | 2023 |
| 16 | Kun Gao, ZuoJin Zhou, YaHui Qin | Proposes a WTD-PS-LSTM model for gas concentration prediction, showing improved accuracy over traditional methods. WTD addresses EMD denoising limitations, but further optimization of WTD and PS is needed to enhance accuracy. | ScienceDirect [16] | 2024 |
| 17 | V. Shanmuganathan, A. Suresh | Markov-enhanced LSTM model outperforms KNN, LSTM, and RNN for anomaly detection in sensor time-series data, showing lower MAE, RMSE, MSE, and MAPE values. | KeAi [17] | 2024 |
| 18 | Samad Riaz, Amna Saghir, Muhammad Junaid Khan, Hassan Khan | Introduces the TransLSTM model for suggestion mining, outperforming CNN, RNN/LSTM, BERT, and Transformers with high F1 scores. Future work to address noisy data and domain adaptation. | Elsevier [18] | 2024 |
| 19 | Shumin Sun, Peng Yu, Jiawei Xing | Proposes a Transformer-LSTM model for wind power prediction, achieving higher accuracy by capturing long-term dependencies in time series data. Data preprocessing techniques. | [19] | 2024 |
| 20 | Junwei Shi, Shiqi Wang, Pengfei Qu, Jianli Shao | Presents the ICEEMDAN-SELSTM model for wind power forecasting, achieving 98.47% accuracy, addressing high-frequency data components, and improving stability and reliability. | Scientific Reports [20] | 2024 |
| 21 | Chiyin Wang, Yiming Liu | Develops an employee portrait model for diligence analysis and abnormal behavior prediction, using deep learning and GAN techniques, achieving 80.39% accuracy. | Scientific Reports [21] | 2024 |
| 22 | Abubakar Isah, Hyeju Shin, Seungmin Oh, Sangwon Oh, Ibrahim Aliyu | Explores Digital Twins and multivariate LSTM networks for capturing long-term dependencies and handling missing values in time series, outperforming six baseline models. | Electronics [22] | 2023 |
| 23 | Khan Md Hasib, Sami Azam, Asif Karim, Ahmed Al Marouf, F. M. Javed Mehedi Shamrat, Sidratul Montaha | Proposes the MCNN-LSTM model for text classification, handling imbalanced data using Tomek-Link, outperforming traditional algorithms in classification accuracy. | IEEE [23] | 2023 |
| 24 | Zeyu Yin, Jinsong Shao, Muhammad Jawad Hussain, Yajie Hao | Introduces the DPG-LSTM model for sentiment analysis, combining semantic and syntactic information for improved classification. Outperforms existing methods with high R, P, and F1 scores. | Applied Sciences [24] | 2022 |
| 25 | Ajit Mohan Pattanayak, Aleena Swetapadma, Biswajit Sahoo | This study compares various RNN models for stock market predictions, with single-layer LSTM showing the highest accuracy. Future work will explore hybrid models and advanced optimizations. | Taylor & Francis [25] | 2024 |
| 26 | Samad Riaz, Amna Saghir, Muhammad Junaid Khan, Hassan Khan, Hamid Saeed Khan, M. Jaleed Khan | Introduces the TransLSTM model, outperforming existing methods in suggestion mining tasks, achieving high F1 scores, with plans to explore new attention mechanisms in future work. | Elsevier [26] | 2024 |

III. PROPOSED WORK

The authors propose a hybrid architecture that merges the strengths of LSTM networks and Convolutional Neural Networks (CNNs), effectively addressing datasets that possess both spatial and temporal characteristics. This flexibility allows the approach to be applied across diverse data types and mining scenarios, highlighting its versatility in practical applications.

Algorithm Architecture

The proposed algorithm comprises three key components:

- Data Pre-processing Module: This module prepares the input data for analysis, ensuring it is clean, normalized, and formatted appropriately for subsequent processing.

- CNN-based Feature Extraction Module: In this phase, pre-processed data is fed into the CNN, which focuses on extracting spatial features.

- LSTM-based Temporal Analysis Module: Following spatial feature extraction, the LSTM network analyzes these features to capture temporal dependencies.
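The three modules above can be illustrated with a minimal, dependency-free Python sketch. It is not the authors' implementation, which the paper does not specify. The function and class names (`min_max_normalize`, `conv1d_relu`, `TinyLSTM`, `cnn_lstm_pipeline`), the min-max scaling, the hand-picked kernels, and the random untrained weights are all assumptions chosen only to show how data flows from pre-processing through convolutional feature extraction into an LSTM.

```python
import math
import random


def min_max_normalize(series):
    """Pre-processing (assumed choice): rescale a 1-D series to [0, 1]."""
    lo, hi = min(series), max(series)
    span = (hi - lo) or 1.0  # avoid division by zero on constant input
    return [(x - lo) / span for x in series]


def conv1d_relu(series, kernels):
    """CNN-style feature extraction: sliding dot product (as in CNN layers)
    with no padding, followed by ReLU.

    Returns one feature vector (one value per kernel) per window position.
    """
    width = len(kernels[0])
    features = []
    for start in range(len(series) - width + 1):
        window = series[start:start + width]
        features.append([
            max(0.0, sum(w * x for w, x in zip(kernel, window)))
            for kernel in kernels
        ])
    return features


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class TinyLSTM:
    """Single-layer LSTM with random, untrained weights (illustration only)."""

    GATES = ("forget", "input", "output", "candidate")

    def __init__(self, input_size, hidden_size, seed=0):
        rng = random.Random(seed)
        self.hidden_size = hidden_size
        cols = input_size + hidden_size
        # One weight matrix and bias per gate, applied to [x_t, h_{t-1}].
        self.weights = {
            g: [[rng.uniform(-0.5, 0.5) for _ in range(cols)]
                for _ in range(hidden_size)]
            for g in self.GATES
        }
        self.biases = {g: [0.0] * hidden_size for g in self.GATES}

    def _affine(self, gate, concat):
        return [
            sum(w * v for w, v in zip(row, concat)) + b
            for row, b in zip(self.weights[gate], self.biases[gate])
        ]

    def step(self, x, h, c):
        """Advance one time step; returns the new hidden and cell states."""
        concat = list(x) + list(h)
        f = [sigmoid(z) for z in self._affine("forget", concat)]
        i = [sigmoid(z) for z in self._affine("input", concat)]
        o = [sigmoid(z) for z in self._affine("output", concat)]
        g = [math.tanh(z) for z in self._affine("candidate", concat)]
        c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
        h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
        return h_new, c_new

    def run(self, sequence):
        """Process a sequence of feature vectors; return the last hidden state."""
        h = [0.0] * self.hidden_size
        c = [0.0] * self.hidden_size
        for x in sequence:
            h, c = self.step(x, h, c)
        return h


def cnn_lstm_pipeline(raw_series, kernels, hidden_size=4):
    """Chain the three modules: normalize -> convolve -> LSTM summary."""
    normalized = min_max_normalize(raw_series)
    features = conv1d_relu(normalized, kernels)
    lstm = TinyLSTM(input_size=len(kernels), hidden_size=hidden_size)
    return lstm.run(features)


if __name__ == "__main__":
    series = [12.0, 15.0, 14.0, 18.0, 21.0, 19.0, 24.0, 26.0]
    # Hypothetical kernels: a difference filter and a moving average.
    kernels = [[1.0, 0.0, -1.0], [1 / 3, 1 / 3, 1 / 3]]
    print(cnn_lstm_pipeline(series, kernels))
```

In practice the kernels and gate weights would be learned jointly by backpropagation in a deep learning framework, and a task-specific output layer would map the final hidden state to a prediction. The sketch fixes them only to keep the data flow visible.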

Conclusion

This study offered an in-depth analysis of Long Short-Term Memory (LSTM) networks and their vital contribution to data mining, particularly for handling sequential and temporal information. Our examination of over 60 significant publications highlights that LSTMs consistently outperform conventional approaches in capturing complex temporal relationships within datasets. LSTM networks, with their use of memory units and gate mechanisms, successfully overcome the limitations of traditional Recurrent Neural Networks (RNNs), particularly the issues associated with vanishing and exploding gradients. These architectural advantages significantly improve the model's capacity for stable and accurate sequence processing.

Moreover, the emergence of newer approaches, such as attention mechanisms and Transformer-based frameworks, alongside hybrid models, has further expanded the versatility of LSTM networks. These advances enable more accurate analysis of temporal data and broaden the potential applications, from time-series predictions to language understanding and pattern detection.

The integration of LSTM networks into modern data mining methodologies represents a notable advancement. Ongoing research should aim at refining the structure of LSTM models, improving their scalability, and exploring their adoption in novel areas to fully exploit their potential in processing intricate temporal datasets. Combining LSTM with other neural network architectures also holds promise for enhanced performance in real-world tasks, such as language processing and sentiment detection.

References

[1] Vuong, V.-D., Nguyen, L.-H., & Goulet, J.-A. (2024). Coupling LSTM neural networks and state-space models through analytically tractable inference. International Journal of Forecasting. https://doi.org/10.1016/j.ijforecast.2024.04.002

[2] Zheng, R., Bao, Y., Zhao, L., & Xing, L. (2023). Method to predict alloy yield based on multiple raw material conditions and a PSO-LSTM network. Journal of Materials Research and Technology, 27, 3310-3322. https://doi.org/10.1016/j.jmrt.2023.10.046

[3] Abumohsen, M., Owda, A. Y., Owda, M., & Abumihsan, A. (2024). Hybrid machine learning model combining of CNN-LSTM-RF for time series forecasting of Solar Power Generation. e-Prime - Advances in Electrical Engineering, Electronics and Energy, 9, 100636. https://doi.org/10.1016/j.prime.2024.100636

[4] Li, M., Feng, X., & Belgiu, M. (2024). Mapping tobacco planting areas in smallholder farmlands using Phenological-Spatial-Temporal LSTM from time-series Sentinel-1 SAR images. International Journal of Applied Earth Observation and Geoinformation, 129, 103826. https://doi.org/10.1016/j.jag.2024.103826

[5] Mahadevaswamy, U. B., & Swathi, P. (2023). Sentiment Analysis using Bidirectional LSTM Network. Procedia Computer Science, 218, 45-56. https://doi.org/10.1016/j.procs.2022.12.400

[6] Wang, H., & Li, F. (2022). A text classification method based on LSTM and graph attention network. Connection Science, 34(1), 2466-2480. https://doi.org/10.1080/09540091.2022.2128047

[7] Khuntia, M., & Gupta, D. (2023). Indian News Headlines Classification using Word Embedding Techniques and LSTM Model. Procedia Computer Science, 218, 899-907. https://doi.org/10.1016/j.procs.2023.01.070

[8] Ofori-Boateng, R., Aceves-Martins, M., Jayne, C., Wiratunga, N., & Moreno-Garcia, C. (2023). Evaluation of Attention-Based LSTM and Bi-LSTM Networks for Abstract Text Classification in Systematic Literature Review Automation. Procedia Computer Science, 222, 114-126. https://doi.org/10.1016/j.procs.2023.08.149

[9] Maharatha, A., Das, R., Mishra, J., Nayak, S. R., & Aluvala, S. (2024). Employing Sequence-to-Sequence Stacked LSTM Autoencoder Architecture to Forecast Indian Weather. Procedia Computer Science, 235, 2258-2268. https://doi.org/10.1016/j.procs.2024.04.214

[10] Siji Rani S, Shilpa P, & Aswin G Menon. (2024). Enhancing Drug Recommendations: A Modified LSTM Approach in Intelligent Deep Learning Systems. Procedia Computer Science, 233, 872-881.

[11] Hu, X., Li, K., Li, J., et al. (2022). Load forecasting model consisting of data mining based orthogonal greedy algorithm and long short-term memory network. Energy Reports, 8, 235-242. https://doi.org/10.1016/j.egyr.2022.02.110

[12] Xu, B., Li, C., Li, H., & Ding, R. (2022). Fault diagnosis and location of independent sub-module of three-phase MMC based on the optimal deep BiD-LSTM networks. Energy Reports, 8, 1193-1206. https://doi.org/10.1016/j.egyr.2022.07.122

[13] Da Silva, D. G., & Meneses, A. A. M. (2023). Comparing Long Short-Term Memory (LSTM) and bidirectional LSTM deep neural networks for power consumption prediction. Energy Reports, 10, 3315-3334. https://doi.org/10.1016/j.egyr.2023.09.175

[14] Aurangzeb, K., Haider, S. I., & Alhussein, M. (2024). Individual household load forecasting using bi-directional LSTM network with time-based embedding. Energy Reports, 11, 3963-3975. https://doi.org/10.1016/j.egyr.2024.03.028

[15] Hossain, M. M., Ali, M. S., Ahmed, M. M., Rakib, M. R. H., Kona, M. A., Afrin, S., Islam, M. K., Ahsan, M. M., Hasan Raj, S. M. R., & Rahman, M. H. (2023). Cardiovascular disease identification using a hybrid CNN-LSTM model with explainable AI. Informatics in Medicine Unlocked, 42, 101370. https://doi.org/10.1016/j.imu.2023.101370

[16] Gao, K., Zhou, Z. J., & Qin, Y. H. (2024). Gas concentration prediction by LSTM network combined with wavelet thresholding denoising and phase space reconstruction. Heliyon, 10, e28112. https://doi.org/10.1016/j.heliyon.2024.e28112

[17] Shanmuganathan, V., & Suresh, A. (2024). Markov enhanced ILSTM approach for effective anomaly detection for time series sensor data. International Journal of Intelligent Networks, 5, 154-160. https://doi.org/10.1016/j.ijin.2024.02.007

[18] Riaz, S., Saghir, A., Khan, M. J., Khan, H., Saeed Khan, H., & Jaleed Khan, M. (2024). TransLSTM: A hybrid LSTM-Transformer model for fine-grained suggestion mining. Natural Language Processing Journal, 8, 100089. https://doi.org/10.1016/j.nlp.2024.100089

[19] Sun, S., Yu, P., Xing, J., Cheng, Y., Yang, S., & Ai, Q. (2023). Short-term wind power prediction based on ICEEMDAN-SELSTM neural network model with classifying seasonal data. Energy Engineering, 120(12), 2762-2782. https://doi.org/10.32604/ee.2023.042635

[20] Shi, J., Wang, S., Qu, P., & Shao, J. (2024). Time series prediction model using LSTM-Transformer neural network for mine water inflow. Scientific Reports, 14, 18284. https://doi.org/10.1038/s41598-024-69418-z

[21] Wang, C., & Liu, Y. (2024). Analysis of employee diligence and mining of behavioral patterns based on portrait portrayal. Scientific Reports, 14, 11942. https://doi.org/10.1038/s41598-024-62239-0

[22] Isah, A., Shin, H., Oh, S., Aliyu, I., Um, T., & Kim, J. (2023). Digital Twins Temporal Dependencies-Based on Time Series Using Multivariate Long Short-Term Memory. Electronics, 12(19), 4187. https://doi.org/10.3390/electronics12194187

[23] Khan, M. H., Azam, S., Karim, A., Marouf, A. A., Shamrat, F. M. J. M., Montaha, S., Yeo, K. C., Jonkman, M., Alhajj, R., & Rokne, J. G. (2023). MCNN-LSTM: Combining CNN and LSTM to Classify Multi-Class Text in Imbalanced News Data. IEEE Access. https://doi.org/10.1109/ACCESS.2023.3309697

[24] Yin, Z., Shao, J., Hussain, M. J., Hao, Y., Chen, Y., Zhang, X., & Wang, L. (2023). DPG-LSTM: An Enhanced LSTM Framework for Sentiment Analysis in Social Media Text Based on Dependency Parsing and GCN. Applied Sciences, 13(1), 354. https://doi.org/10.3390/app13010354

[25] Pattanayak, A. M., Swetapadma, A., & Sahoo, B. (2024). Exploring different dynamics of recurrent neural network methods for stock market prediction: A comparative study. Applied Artificial Intelligence, 38(1), e2371706. https://doi.org/10.1080/08839514.2024.2371706

[26] Riaz, S., Saghir, A., Khan, M. J., Khan, H., Khan, H. S., & Khan, M. J. (2024). TransLSTM: A hybrid LSTM-Transformer model for fine-grained suggestion mining. Natural Language Processing Journal, 8, 100089. https://doi.org/10.1016/j.nlp.2024.100089

Copyright

Copyright © 2024 Kunal P. Raghuvanshi, Achal B. Pande, Nilesh S. Borchate, Rahul S. Ingle. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET64761

Publish Date : 2024-10-23

ISSN : 2321-9653

Publisher Name : IJRASET
