Ijraset Journal For Research in Applied Science and Engineering Technology


Mask and Social Distancing Detector for Enhanced Public Safety

Authors: Dr Benakappa S M, Aishwarya, Ananya S, Atiya Aymen, Divya S B

DOI Link: https://doi.org/10.22214/ijraset.2024.66060


Abstract

This paper addresses the enforcement of safety measures such as wearing face masks and maintaining physical distancing, which have become essential in many public places to protect public health and safety. Despite the significance of these measures, many individuals fail to comply, which calls for automated systems for monitoring and regulation. This work explores the use of state-of-the-art deep learning models to build an effective face mask and social distancing detection system. The study evaluates and compares various object detection frameworks to identify the most suitable models for these tasks. Convolutional Neural Network (CNN) architectures such as MobileNetV2 are analyzed alongside single-shot detectors and multiple variants of the YOLOv4 framework.


I. INTRODUCTION

In modern society, ensuring public safety and adherence to essential health and safety protocols is a growing concern, particularly in densely populated or high-traffic environments. Measures such as wearing masks and maintaining appropriate social distancing have proven effective in mitigating risks associated with airborne contaminants and infectious diseases [1]. However, monitoring these practices at scale remains a significant challenge, because manual observation and enforcement are time-consuming, inconsistent, and resource-intensive. To address these challenges, this paper proposes an automated system that integrates computer vision [2] and deep learning [3] techniques for real-time monitoring of mask compliance and social distancing. The system employs the YOLOv4 (You Only Look Once, version 4) [4] [5] object detection framework for fast and accurate detection of individuals, which enables estimation of interpersonal distances in dynamic settings. A Convolutional Neural Network (CNN) based on MobileNetV2 [6] is used for mask detection, offering a lightweight and efficient solution for classifying face mask usage.

II. LITERATURE REVIEW

Given the importance of mask and social distancing detection, this literature survey provides an overview of existing research and developments related to this work, highlighting key findings, methodologies, and gaps in the field. It establishes a foundation for the current study by reviewing relevant studies and identifying trends, challenges, and opportunities for future work.

[7] Mahmoud, M., et al. (2024)

The authors have surveyed existing techniques for detecting and recognizing masked faces. The study compared deep learning approaches for masked face detection, recognition, and unmasking. Methods such as convolutional neural networks (CNNs) and transfer learning were analyzed, focusing on datasets and challenges in maintaining accuracy under occlusion.

[8] Kumar, Rohit, et al. (2023)

This study integrated YOLOv4 and MobileNet architectures for face mask detection. The YOLOv4 model was used for object detection, while MobileNet enhanced computational efficiency. Training was performed using a labelled dataset of masked and unmasked faces. The system's accuracy and speed were validated on real-world data, achieving a balance between precision and real-time applicability.

[9] Li, Hongwei, et al. (2023)

A hybrid approach combining YOLOv4 for object detection and MobileNet for feature extraction was proposed to monitor social distancing. Distance estimation was achieved by projecting detected individuals into a top-down plane using perspective transformations. The model was trained on a custom dataset to adapt to crowded settings.

[10] Mostafa, S. A., et al. (2024)

This study employs the YOLO-based deep learning model integrated with drone surveillance for face mask detection in public spaces. The framework processes live video streams captured by drones, using YOLOv4 to detect individuals and classify face mask usage. The system is optimized for real-time accuracy, achieving reliable results across various public scenarios. Training and validation are conducted on datasets combining images of masked and unmasked faces to ensure robust performance.

[11] Saha, S., et al. (2023)

The authors utilized YOLOv4 for detecting people and masks in video streams. A distance measurement algorithm was implemented to identify social distancing violations, while mask compliance was assessed via bounding box classification. The system processed surveillance data from public areas to validate accuracy.

[12] Zhenfeng Shao, et al. (2023)

This research employed UAVs equipped with computer vision capabilities to monitor social distancing. The detection system used YOLOv4 for pedestrian tracking, combined with a spatial analysis module to calculate distances. Experiments were conducted in real-world outdoor environments for scalability assessment.

[13] Agarwal, K., et al. (2023)

A system leveraging YOLOv4 and MobileNet was developed for real-time detection of face masks and social distancing violations. The methodology involved preprocessing input data, detecting individuals and masks, and calculating inter-person distances. Validation was done on datasets from various public environments to ensure robustness.

[14] Li, X., et al. (2023)

The paper proposed a real-time framework using YOLOv4 and MobileNet for simultaneous detection of face masks and social distancing violations. Mask compliance was assessed using CNN classifiers, while a geometric algorithm measured distances between individuals. The model was tested on urban surveillance footage.

[15] Kumar, R., et al. (2023)

A dual-model approach was proposed using YOLOv4 for object detection and a feature-matching algorithm for mask classification. The system measured distances between detected individuals using a camera calibration model. Training and validation datasets included a mix of simulated and real-world scenarios.

The literature survey highlights advancements in mask and social distancing detection, focusing on deep learning techniques like YOLOv4 and MobileNet. These studies emphasize real-time accuracy, robustness in diverse environments, and applications such as drone-based surveillance and urban monitoring. Challenges include handling occlusion, perspective transformations, and maintaining efficiency. The findings provide a foundation for developing scalable systems to enhance public safety.

III. METHODOLOGY

The proposed system integrates MobileNet, YOLOv4, and an additional CNN into an efficient detection pipeline. MobileNet, fine-tuned on datasets of masked and unmasked faces, performs binary classification to determine mask usage [16]. YOLOv4 identifies individuals, and the system calculates pairwise distances between them, flagging pairs that violate predefined social distancing thresholds [17]. A CNN extends the system with additional analysis, such as evaluating mask quality or recognizing crowded environments. Data preprocessing, including augmentation and normalization, supports robust model performance. The models are evaluated with accuracy, precision, recall, F1-score, and mean average precision (mAP), with the aim of real-time, high-accuracy results suitable for diverse deployment environments.
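The classification metrics named above can be computed from the four confusion-matrix counts of a binary mask classifier. The following is a minimal sketch, assuming "Mask" is treated as the positive class; the counts in the usage note are hypothetical and are not results from this work. mAP for the YOLOv4 detector additionally requires IoU-based matching of predicted and ground-truth boxes and is not shown here.

```python
def classification_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall, and F1 for a binary classifier.

    tp/fp/fn/tn are true-positive, false-positive, false-negative, and
    true-negative counts, with "Mask" assumed to be the positive class.
    """
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0  # share of "Mask" predictions that are correct
    recall = tp / (tp + fn) if (tp + fn) else 0.0     # share of real masks that were found
    # F1 is the harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)) if (precision + recall) else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}
```

For example, with hypothetical counts tp=90, fp=10, fn=5, tn=95, the function returns an accuracy of 0.925, a precision of 0.9, a recall of about 0.947, and an F1-score of about 0.923.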

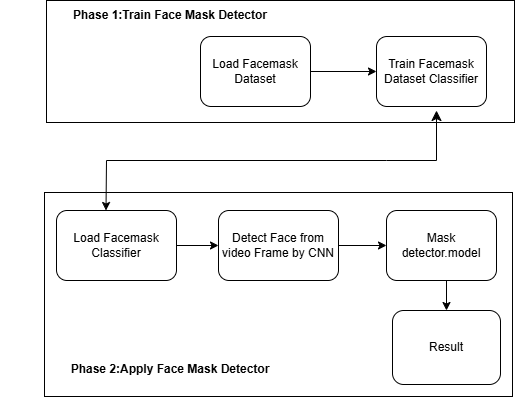

Fig. 3.1 System architecture of the face mask and social distancing detector

As shown in Fig. 3.1, the system architecture has two phases: Train Face Mask Detector and Apply Face Mask Detector. Phase 1 loads a face mask dataset, preprocesses it, and trains a CNN classifier to produce the Mask Detector Model. Phase 2 deploys the trained model, detects faces in video frames using a CNN, and classifies each detected face as wearing a mask or not, which enables real-time monitoring of mask compliance.

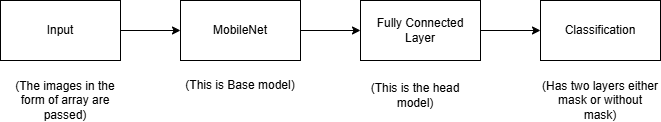

Fig. 3.2 Face Mask Identification Model

As shown in Fig. 3.2, input images are passed to the base model as numeric arrays, since the algorithm requires numeric data. The base model is the pre-trained MobileNet, and its output is fed as input to the head model, which is a fully connected layer. The final step is classification into two classes: mask and without mask. The resulting model detects the presence of a mask in the live feed.
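The base-model/head-model structure of Fig. 3.2 can be sketched in TensorFlow/Keras as follows. This is a minimal sketch under assumptions: the paper does not name its framework, and the 224x224 input size, the frozen base, the pooling + Dense(128) + Dropout(0.5) head, and the class order are illustrative choices rather than the authors' configuration. `weights=None` keeps the example offline; a pre-trained model as described above would instead be built from ImageNet weights.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers, models

IMG_SIZE = 224                          # assumed input resolution (MobileNetV2 default)
CLASS_NAMES = ("mask", "without_mask")  # assumed order of the two output classes


def build_mask_classifier(use_imagenet_weights: bool = False) -> tf.keras.Model:
    """MobileNetV2 base model followed by a small fully connected head."""
    inputs = layers.Input(shape=(IMG_SIZE, IMG_SIZE, 3))
    # Base model: MobileNetV2 without its ImageNet classification top.
    base = tf.keras.applications.MobileNetV2(
        include_top=False,
        weights="imagenet" if use_imagenet_weights else None,
        input_tensor=inputs,
    )
    base.trainable = False  # assumption: train only the head

    # Head model: pool the feature maps, then fully connected layers.
    x = layers.GlobalAveragePooling2D()(base.output)
    x = layers.Dense(128, activation="relu")(x)
    x = layers.Dropout(0.5)(x)
    outputs = layers.Dense(len(CLASS_NAMES), activation="softmax")(x)

    model = models.Model(inputs=inputs, outputs=outputs)
    model.compile(optimizer="adam", loss="categorical_crossentropy", metrics=["accuracy"])
    return model


def to_model_input(image_rgb) -> np.ndarray:
    """Turn one RGB image (already resized to IMG_SIZE) into a numeric batch array."""
    arr = np.array(image_rgb, dtype="float32")  # copy: preprocess_input may edit float arrays in place
    arr = tf.keras.applications.mobilenet_v2.preprocess_input(arr)  # scale pixels to [-1, 1]
    return np.expand_dims(arr, axis=0)  # add batch dimension -> (1, H, W, 3)
```

In Phase 1, `model.fit` would be called on batches prepared this way with one-hot labels; in Phase 2, `model.predict(to_model_input(face), verbose=0)` returns one probability per class for each detected face.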

(a) (b)

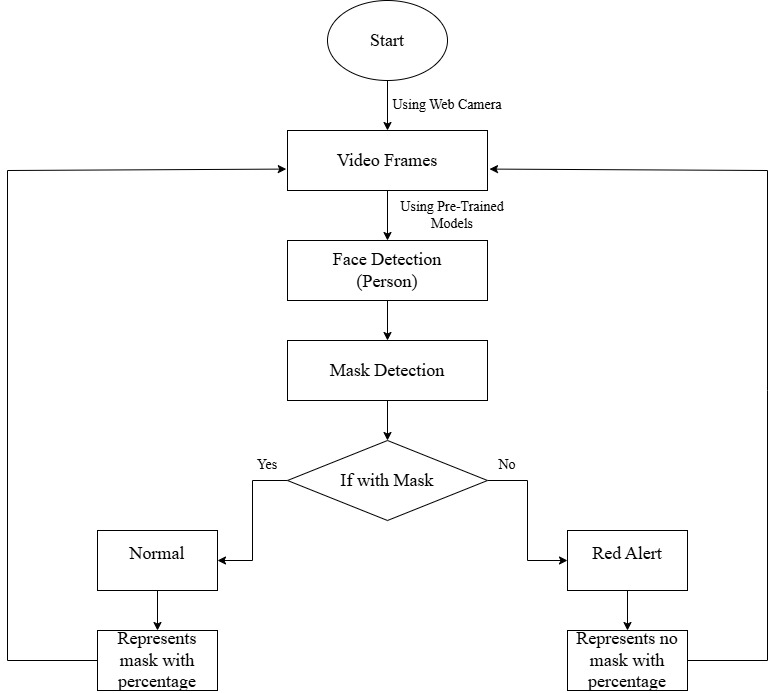

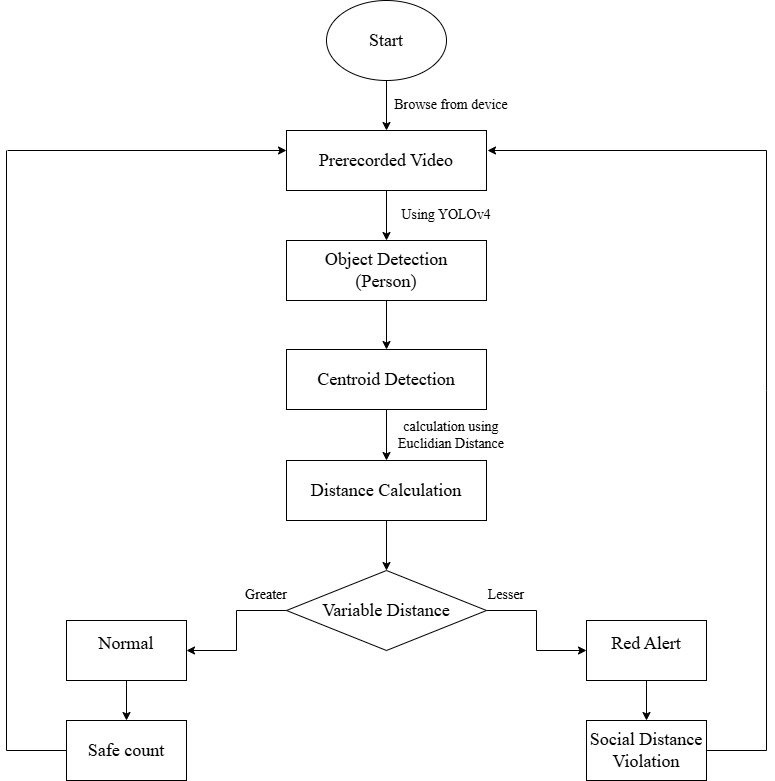

Fig. 3.3 Flowcharts of (a) the face mask detector and (b) the social distancing detector

Fig. 3.3 (a) and (b) show the flowcharts of the face mask detector and the social distancing detector, respectively. In (a), the procedure starts at the "Start" node, which initializes the system, followed by the Input Image stage, where the system receives an image or video frame. This input serves as the basis for detecting faces and identifying their mask status. In (b), the process begins with the Start node, which initializes the system and activates the video capture module. The Capture Video step collects footage from surveillance cameras, which serves as the input for further processing.

Parameters for social distancing (assumed): minimum distance = 50 px and maximum distance = 80 px. The centroid of the bounding rectangle around each detected person is used, and the Euclidean distance between the two centroids is

$$D[i,j] = \sqrt{(CX_2 - CX_1)^2 + (CY_2 - CY_1)^2}$$

A pair is considered safe, and shown with the normal label, if the distance between them is greater than 50 px; otherwise the red alert message is displayed.

Case i: D[i,j] less than the minimum distance (alert message). The centroid coordinates of the two detected persons are (CX1, CY1) = (292, 170) and (CX2, CY2) = (248, 172). Applying the Euclidean distance to these centroids gives

$$D[i,j] = \sqrt{(248 - 292)^2 + (172 - 170)^2} = \sqrt{1936 + 4} = \sqrt{1940} \approx 44.0454$$

Since 44.0454 < 50, the pair is unsafe and the red alert message is displayed.

Case ii: D[i,j] greater than the maximum distance. The centroid coordinates are (CX1, CY1) = (394, 144) and (CX2, CY2) = (248, 172).

$$D[i,j] = \sqrt{(248 - 394)^2 + (172 - 144)^2} = \sqrt{21316 + 784} = \sqrt{22100} \approx 148.66$$

Since 148.66 is greater than the specified maximum distance, no action is taken.

Case iii: D[i,j] between the minimum and maximum distances. The centroid coordinates of the detected persons are (CX1, CY1) = (248, 171) and (CX2, CY2) = (298, 172).

$$D[i,j] = \sqrt{(298 - 248)^2 + (172 - 171)^2} = \sqrt{2500 + 1} = \sqrt{2501} \approx 50.01$$

Since 50.01 is greater than the minimum distance but less than the maximum distance, the pair is considered safe.
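The centroid and three-case distance rule described above can be sketched in plain Python. This is a minimal sketch, assuming bounding boxes arrive as (x, y, w, h) with (x, y) the top-left corner; that box format and the function names are illustrative, not taken from the paper. A distance of exactly 50 px is treated as an alert, following the rule that only distances greater than 50 px are safe.

```python
import math

# Thresholds from the assumption stated above, in image pixels.
MIN_DISTANCE = 50.0
MAX_DISTANCE = 80.0


def centroid(box):
    """Centroid of a bounding box (x, y, w, h); the box format is an assumption."""
    x, y, w, h = box
    return (x + w / 2.0, y + h / 2.0)


def classify_pair(c1, c2, min_d=MIN_DISTANCE, max_d=MAX_DISTANCE):
    """Return (distance, label) for two centroids following Cases i to iii."""
    d = math.hypot(c2[0] - c1[0], c2[1] - c1[1])  # Euclidean distance D[i, j]
    if d <= min_d:
        return d, "alert"      # Case i: not more than the minimum, show the red alert
    if d > max_d:
        return d, "no action"  # Case ii: beyond the maximum distance
    return d, "safe"           # Case iii: between the two thresholds
```

Calling `classify_pair` on the centroid pairs from Cases i, ii, and iii returns the labels `alert`, `no action`, and `safe`, with distances of about 44.05, 148.66, and 50.01 px.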

IV. RESULTS AND DISCUSSION

The system uses computer vision to support public safety by detecting whether individuals are wearing masks and maintaining proper social distancing. Using the trained deep learning models, it identifies mask usage and measures distances between people in real time. The tool provides immediate feedback and supports compliance with health guidelines, contributing to safer public spaces. It is designed to be efficient and scalable and can be integrated into various surveillance setups for enhanced monitoring during health crises.

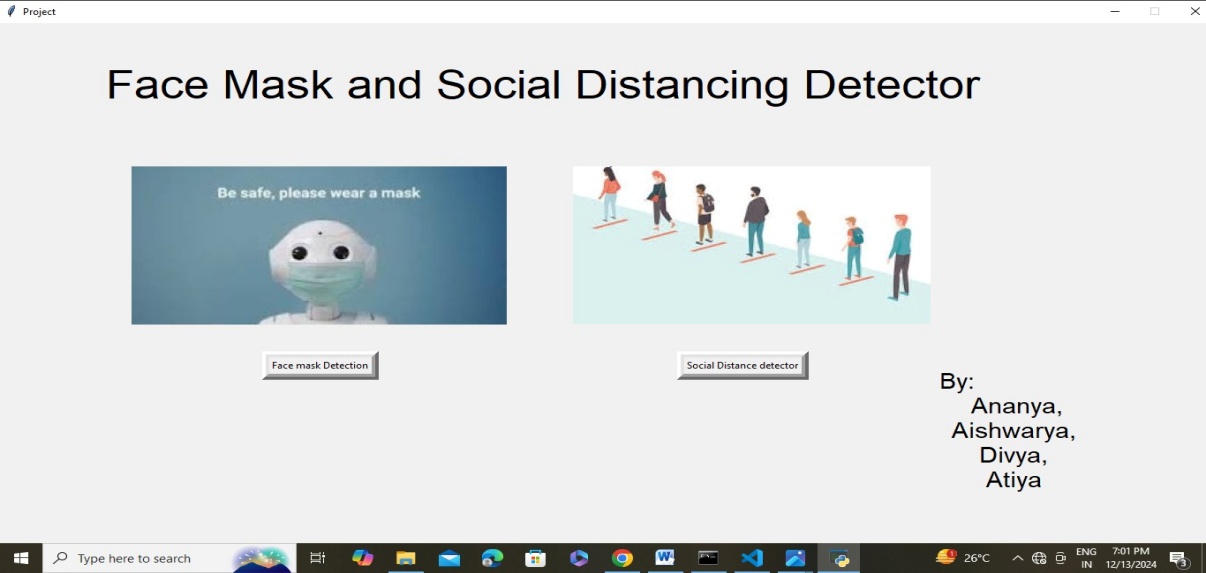

Fig. 4.1 GUI of the final application

Fig. 4.1 shows the interface, which features two buttons: one for face mask detection and one for social distance detection. The left section emphasizes mask wearing with a masked robot, while the right shows people maintaining safe distances, which simplifies user interaction for safety monitoring.

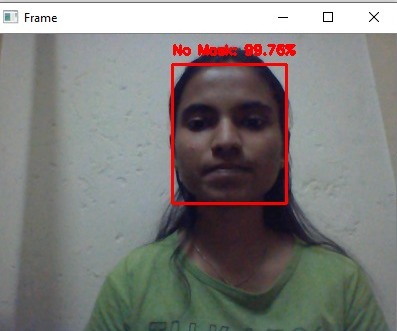

Fig. 4.2 Output of mask detection for NO MASK

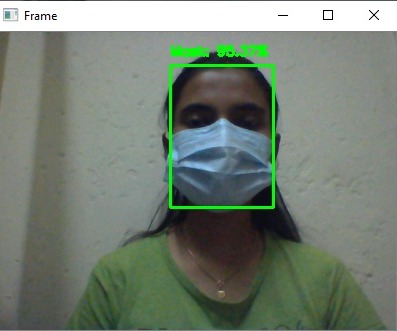

Fig. 4.3 Output of mask detection with MASK

Figs. 4.2 and 4.3 show outputs of the mask detection system, which classifies individuals as "Mask" or "No Mask" with confidence scores and encloses faces in green (Mask) or red (No Mask) bounding boxes. The system continuously analyzes the video frames using deep learning techniques to detect mask compliance.
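Turning the classifier's two-class output into the on-screen label and box color described above can be sketched as follows. This is a minimal sketch, assuming the softmax output is ordered as [Mask, No Mask] and that boxes are drawn with OpenCV, which uses BGR color order; the returned text and color could then be passed to OpenCV's `cv2.putText` and `cv2.rectangle`. The names `CLASS_NAMES`, `BOX_COLORS`, and `format_prediction` are hypothetical.

```python
# Assumed order of the two softmax outputs.
CLASS_NAMES = ("Mask", "No Mask")
# OpenCV colors are BGR: green for Mask, red for No Mask.
BOX_COLORS = {"Mask": (0, 255, 0), "No Mask": (0, 0, 255)}


def format_prediction(probs):
    """Map [p_mask, p_no_mask] to an overlay label and a BGR box color."""
    idx = max(range(len(probs)), key=lambda k: probs[k])  # index of the most likely class
    name = CLASS_NAMES[idx]
    return f"{name}: {probs[idx] * 100:.2f}%", BOX_COLORS[name]
```

For instance, `format_prediction([0.005, 0.995])` yields the label `No Mask: 99.50%` with a red box color, matching the style of the output shown in Fig. 4.4.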

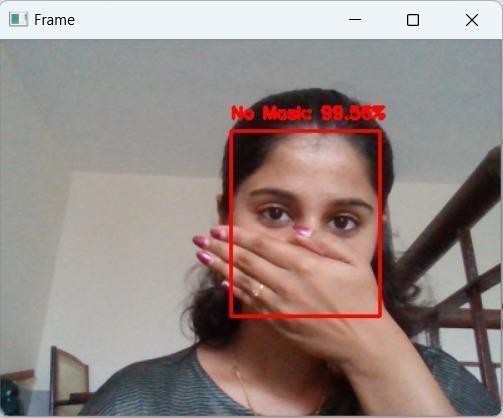

Fig. 4.4 A mask detection system identifies "No Mask: 99.50%" with a red box around the face

Fig. 4.4 shows the mask detection system using computer vision to determine whether a person is wearing a face mask. The system detects a face in the image and surrounds it with a red bounding box labelled "No Mask: 99.50%", indicating high confidence that the individual is not wearing a mask.

(a)

(b)

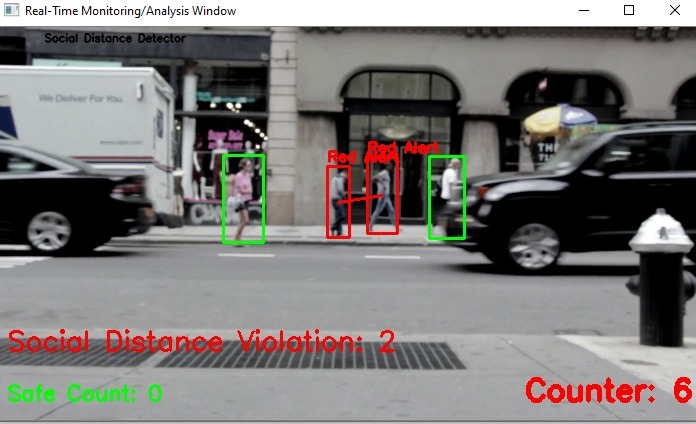

Fig. 4.5 Social distance detector button output

Fig. 4.5 shows the output of the social distance detector, which uses computer vision to detect individuals and monitor social distancing, marking compliant individuals in green and violators in red. It displays counts of total individuals, safe individuals, and violations, supporting public safety in high-density areas such as streets and malls.
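The on-screen counts described above can be derived from the detected person centroids with a pairwise distance check. This is a minimal sketch, assuming a person counts as a violator when they are within the 50 px minimum distance of at least one other person; the paper does not specify how its counts are defined, and `social_distance_summary` is a hypothetical name.

```python
import math
from itertools import combinations


def social_distance_summary(centroids, min_distance=50.0):
    """Count total, safe, and violating individuals and pick a color for each.

    A person is a violator if at least one other person is within
    min_distance pixels (assumed interpretation of the displayed counts).
    """
    violators = set()
    for i, j in combinations(range(len(centroids)), 2):  # every unordered pair once
        (x1, y1), (x2, y2) = centroids[i], centroids[j]
        if math.hypot(x2 - x1, y2 - y1) <= min_distance:
            violators.update((i, j))
    colors = ["red" if k in violators else "green" for k in range(len(centroids))]
    return {
        "total": len(centroids),
        "safe": len(centroids) - len(violators),
        "violations": len(violators),
        "colors": colors,
    }
```

With the centroids (292, 170), (248, 172), and (394, 144) from the worked cases, the first two people are about 44 px apart, so the summary reports 3 individuals, 1 safe, and 2 in violation.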

Conclusion

This paper demonstrates the integration of computer vision and deep learning technologies to address critical public health challenges. By using MobileNetV2 for lightweight, efficient mask detection and YOLOv4 for social distancing monitoring, the system offers a comprehensive solution for automating safety measures in various environments, including hospitals, transportation hubs, and educational institutions. Its ability to provide reliable and scalable detection of mask usage and social distancing violations underscores its potential to enforce safety guidelines, mitigate the spread of infectious diseases, and enhance public safety. This flexible and efficient framework addresses pandemic needs and also prepares for future health emergencies, showing the role machine learning can play in public health management.

References

[1] Alcázar, Claudia M. Escobedo, et al. "Review of Social Distancing and Face Mask of Coronavirus Spread." (2023).

[2] Prasad, Janvi, et al. "COVID vision: An integrated face mask detector and social distancing tracker." International Journal of Cognitive Computing in Engineering 3 (2022): 106-113.

[3] Kashyap, Gautam Siddharth, et al. "Detection of a facemask in real-time using deep learning methods: Prevention of Covid 19." arXiv preprint arXiv:2401.15675 (2024).

[4] Mokeddem, Mohammed Lakhdar, Mebarka Belahcene, and Salah Bourennane. "Real-time social distance monitoring and face mask detection based Social-Scaled-YOLOv4, DeepSORT and DSFD&MobileNetv2 for COVID-19." Multimedia Tools and Applications 83.10 (2024): 30613-30639.

[5] Ayaz, Muhammad, et al. "Enhancing Face Mask Detection in Public Places with Improved Yolov4 Model for Covid-19 Transmission Reduction."

[6] Narmada, D. "Face Mask and Social Distancing Detection in Marketable Places using Convolutional Neural Network Algorithm." 2024 International Conference on Inventive Computation Technologies (ICICT). IEEE, 2024.

[7] Mahmoud, Mohamed, Mahmoud SalahEldin Kasem, and Hyun-Soo Kang. "A Comprehensive Survey of Masked Faces: Recognition, Detection, and Unmasking." arXiv preprint arXiv:2405.05900 (2024).

[8] Kumar, Rohit, et al. "Face mask detection using deep learning with YOLOv4 and MobileNet." Springer Advances in Intelligent Systems and Computing (2023): 45-50.

[9] Li, Hongwei, et al. "A novel approach for social distancing detection in crowded areas using YOLOv4 and MobileNet." ScienceDirect AI Applications Journal 14.2 (2023): 223.

[10] Mostafa, Salama A., et al. "A YOLO-based deep learning model for Real-Time face mask detection via drone surveillance in public spaces." Information Sciences (2024): 120865.

[11] Saha, S., et al. "Social Distancing and Face Mask Detection using YOLOv4 and MobileNet." IEEE Xplore, 2023.

[12] Shao, Zhenfeng, Gui Cheng, Jiayi Ma, Zhongyuan Wang, Jiaming Wang, and Deren Li. "Real-time and Accurate UAV Pedestrian Detection for Social Distancing." Wuhan, Hubei, China, 430072, 2021.

[13] Agarwal, K., et al. "Real-time Safe Social Distance and Face Mask Detection using YOLOv4." IEEE Xplore, 2023.

[14] Li, X., et al. "Real-time Face Mask and Social Distancing Detection Using YOLOv4." Springer Link, 2023.

[15] Kumar, R., et al. "Real-time Social Distancing and Face Mask Detection with YOLOv4." IEEE Xplore, 2023.

[16] Guerrieri, Marco, and Giuseppe Parla. "Real-time social distance measurement and face mask detection in public transportation systems during the COVID-19 pandemic and post-pandemic Era: Theoretical approach and case study in Italy." Transportation Research Interdisciplinary Perspectives 16 (2022): 100693.

[17] Guerrieri, Marco, and Giuseppe Parla. Transportation Research Interdisciplinary Perspectives 16 (2022): 100693.

Copyright

Copyright © 2024 Dr Benakappa S M, Aishwarya, Ananya S, Atiya Aymen, Divya S B. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET66060

Publish Date : 2024-12-22

ISSN : 2321-9653

Publisher Name : IJRASET

