Ijraset Journal For Research in Applied Science and Engineering Technology

- Home / Ijraset

- On This Page

- Abstract

- Introduction

- Conclusion

- References

- Copyright

Master Face Generation Using Latent Variable Evolution in Face Recognition System

Authors: Sumathi. P, Aravindh N, Aravinth M, Paari S, Ashik Anto

DOI Link: https://doi.org/10.22214/ijraset.2024.61097

Certificate: View Certificate

Abstract

Face authentication is now widely used, especially on mobile devices, rather than authentication using a personal identification number or an unlock pattern, due to its convenience. It has thus become a tempting target for attackers using a presentation attack. Traditional presentation attacks use facial images or videos of the victim. Previous work has proven the existence of master faces, i.e., faces that match multiple enrolled templates in face recognition systems, and their existence extends the ability of presentation attacks. In this paper, we perform an extensive study on latent variable evolution (LVE), a method commonly used to generate master faces. We run an LVE algorithm for various scenarios and with more than one database and/or face recognition system to study the properties of the master faces and to understand in which conditions strong master faces could be generated. Moreover, through analysis, we hypothesize that master faces come from some dense areas in the embedding spaces of the face recognition systems. Last but not least, simulated presentation attacks using generated master faces generally preserve the false-matching ability of their original digital forms, thus demonstrating that the existence of master faces poses an actual threat.

Introduction

I. INTRODUCTION

A. Anomaly Detection

Challenges in detection include the protecting information and communications through the use of codes, so that only those for whom the information is intended can read and process it. There are two types of methods used for processing namely, Image processing, Data processing. The two general phases that all types of data have to undergo the information obtained from Security processing.

B. General Introduction

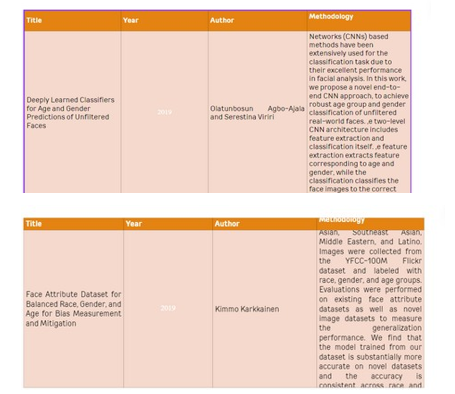

Facial analysis has gained much recognition in the computer vision community in the recent past. Human’s face contains features that determine identity, age, gender, emotions, and the ethnicity of people Among these features, age and gender classification can be especially helpful in several real-world electronic customer relationship management, biometrics, electronic vending machines, human-computer interaction, entertainment, cosmetology, and forensic art However, several issues in age and gender classification are still open problems. Age and gender predictions of unfiltered real-life faces are yet to meet the requirements of commercial and real-world applications in spite of the progress computer vision community keeps making see conventional hand- engineered methods relied on the differences in dimensions of facial features and face de scripters which do not have the ability to handle the varying degrees of variation observed in these challenging unconstrained imaging conditions .images in these categories have some variations in appearance, noise, pose, and lighting which may affect the ability of those manually designed computer vision methods to accurately classify the age and gender of the images. Recently, deep learning-based methods have shown encouraging performance in this field especially on the age and gender classification of unfiltered face images

II. EXISITING SYSTEM

Feature extraction in facial analysis involves isolating and extracting distinctive characteristics such as facial landmarks and texture patterns, which are indicative of age and gender. These features are then utilized in the classification process to categorize face images into appropriate age groups and genders.

The process encompasses image pre-processing techniques to enhance image quality, feature learning algorithms to discern meaningful patterns, and classification methods to assign labels based on learned features. By combining these stages effectively, the system can accurately identify age and gender attributes from facial images, facilitating various applications such as demographic analysis and personalized marketing. Additionally, feature extraction in facial analysis often involves the use of deep learning architectures, such as convolutional neural networks (CNNs), to automatically learn hierarchical representations of facial features directly from raw image data. Furthermore, ongoing research in facial analysis continues to explore innovative approaches, including the integration of contextual information and multi-modal data fusion, to further enhance the capabilities of age and gender prediction.

A. Objective And Scope

To effectively classify and predict data in facial analysis, several strategies can be employed to mitigate accuracy issues and enhance overall performance. These include optimizing feature extraction techniques to capture relevant age and gender-related attributes accurately. Additionally, employing advanced machine learning algorithms such as deep learning models can help in learning complex patterns from facial data, improving classification accuracy. Regularization techniques can be utilized to prevent overfitting and ensure generalizability of the model across diverse datasets. Furthermore, employing data augmentation methods can enrich the training data, leading to more robust models capable of handling variations in facial expressions, lighting conditions, and poses. Lastly, fine-tuning hyperparameters and model architectures through rigorous experimentation and validation can further refine the predictive capabilities of the system, ultimately enhancing its performance in accurately classifying and predicting age and gender from facial images.

Optimizing feature extraction, leveraging advanced machine learning algorithms, and employing regularization techniques are key strategies to enhance the accuracy and performance of facial analysis systems for classifying age and gender from images. Moreover, integrating domain knowledge and expertise into the feature extraction and model design process can enhance the interpretability and meaningfulness of the extracted features, thereby improving the system’s ability to capture subtle age and gender-related cues from facial images. Additionally, ongoing monitoring and updating of the model with new data can ensure its adaptability to evolving trends and variations in facial characteristics, thereby maintaining its relevance and effectiveness over time.

Documentation and Reporting: Document the development process, model architecture, and evaluation results in detail. Provide clear documentation on how to interpret the model's predictions and incorporate them into cybersecurity decision-making processes. Ethical Considerations: Ensure that the development and deployment of the predictive model adhere to ethical guidelines and respect user privacy rights. Implement safeguards to prevent misuse or unintended consequences of the system. Continuous Improvement: Foster a culture of continuous improvement by soliciting feedback from cybersecurity professionals, stakeholders, and end-users. Use this feedback to iteratively enhance the effectiveness and reliability of the predictive model over time.

III. SOFTWARE REQUIREMENTS

A. Software Requirements

- Operating System : Windows 7

- Language : Python

- IDE: Anaconda - Spyder

B. Hardware Requirements

- Hard Disk: 1000 GB

- Monitor: 15 VGA color

- Mouse: Microsoft.

- Keyboard: 110 keys enhanced

- RAM: 4GB

C. Software Description

Face Morphing Software: Tools designed to create morphed images by blending facial features from multiple source images, potentially allowing attackers to generate synthetic faces that can fool face recognition systems.

Deep fake Generation Platforms: Advanced software utilizing deep learning techniques to create highly realistic fake videos or images by swapping faces, enabling attackers to create convincing impersonations for bypassing face recognition systems.

Adversarial Example Generation Frameworks: Software frameworks capable of generating subtle perturbations to input images that are imperceptible to humans but can fool face recognition algorithms, thereby facilitating adversarial attacks.

Presentation Attack Simulation Software: Tools that simulate various types of presentation attacks, such as printed photos, masks, or 3D models, to assess the vulnerability of face recognition systems to different spoofing methods.

Face recognition systems are increasingly being deployed in various applications, from security to authentication. However, they are susceptible to attacks, particularly master face attacks, where adversaries attempt to fool the system by presenting synthetic or altered faces. This paper presents a generalized software description for master face attacks on face recognition systems. The software employs deep learning techniques, such as generative adversarial networks (GANs), to generate synthetic facial images that deceive the face recognition system. Additionally, the software incorporates optimization algorithms to fine-tune the generated faces for optimal deception. Security measures, including watermarking and encryption, are implemented to prevent misuse of the software. This software aims to raise awareness about the vulnerabilities of face recognition systems and the importance of robust security measures to mitigate potential risks. As face recognition systems become more prevalent in everyday life, understanding and addressing their vulnerabilities is crucial for ensuring user privacy and security.

Face recognition systems play a vital role in various domains, including security, access control, and biometric authentication. These systems analyze facial features to verify or identify individuals. However, they are vulnerable to attacks, particularly master face attacks, where adversaries attempt to deceive the system by presenting synthetic or altered faces.

Master face attacks pose a significant threat to the reliability and security of face recognition systems. Adversaries can exploit vulnerabilities in the system to gain unauthorized access or impersonate individuals. Therefore, it is crucial to develop countermeasures to mitigate the impact of such attacks.

In this paper, we present a generalized software description for master face attacks on face recognition systems. The software leverages advanced techniques, including deep learning and optimization algorithms, to generate synthetic facial images that deceive the recognition system. Additionally, security measures are implemented to prevent misuse of the software.

Master face attacks involve the generation or manipulation of facial images to deceive face recognition systems. Adversaries may use various techniques to create synthetic faces that resemble the target individual or alter existing faces to evade detection. Master face attacks involve the generation or manipulation of facial images to deceive face recognition systems. Adversaries may use various techniques to create synthetic faces that resemble the target individual or alter existing faces to evade detection.

Common Methods Used in Master Face Attacks Include:

- Image manipulation: Adversaries may alter facial features, such as the eyes, nose, or mouth, to create a synthetic face that resembles the target individual.

- Generative adversarial networks (GANs): GANs are deep learning models that consist of two neural networks, a generator and a discriminator, trained adversarial to generate realistic-looking images. Adversaries can use GANs to create synthetic faces that deceive the face recognition system.

- Optimization algorithms: Optimization algorithms can be employed to fine-tune the generated faces to maximize the likelihood of a successful attack. These algorithms adjust the facial features to enhance the resemblance

- The software architecture for master face attacks on face recognition systems consists of the following components:

- Data preprocessing module: This module preprocesses the input facial images to extract facial features and normalize them for further processing.

- Generative adversarial network (GAN) module: The GAN module generates synthetic facial images that deceive the face recognition system. It consists of a generator network that generates fake faces and a discriminator network that evaluates the authenticity of the generated faces.

- Optimization module: The optimization module fine-tunes the generated faces using optimization algorithms to maximize the likelihood of a successful attack. It adjusts the facial features to enhance the resemblance to the target individual while minimizing the risk of detection. The software is implemented using Python programming language and popular deep learning frameworks such as TensorFlow or PyTorch.

The following are the key implementation details for each module:

a. Data preprocessing module: This module utilizes image processing libraries such as OpenCV to preprocess the input facial images. It applies techniques such as face detection, alignment, and normalization to extract facial features and prepare them for further processing.

b. Generative adversarial network (GAN) module: The GAN module is implemented using deep learning frameworks such as TensorFlow or PyTorch. It consists of a generator network, typically a convolutional neural network (CNN), that generates fake faces, and a discriminator network, also a CNN, that evaluates the authenticity of the generated faces.

IV. SOFTWARE TESTING STRATEGIES

A. Validation Testing

The system's performance is rigorously evaluated under normal conditions using accuracy, FAR, FRR, and throughput metrics, while its ability to detect spoofing attempts is measured through SDR and FAR. Additionally, the system's resilience to adversarial attacks is tested by evaluating its accuracy and robustness against various techniques, ensuring its effectiveness in real-world scenarios.

B. User Acceptance Testing

User acceptance testing (UAT) for face recognition systems against master face attacks involves verifying end users' satisfaction and acceptance of the system's authentication reliability and security measures. This includes presenting users with diverse scenarios, collecting feedback from various demographics, and using surveys

C. Output Testing

Validate the system's alert generation and notification mechanisms in response to detected spoofing attempts or suspicious behavior. Verify that the system generates alerts or triggers alarms promptly when detecting potential security threats and effectively communicates relevant information to system administrators or security personnel.

Logging and Audit Trail Verification: Review the system's logging and audit trail records to ensure comprehensive coverage of authentication events, including legitimate user access attempts, spoofing detections, and system responses. Verify that the system accurately logs relevant details and timestamps for forensic analysis and incident response purposes.

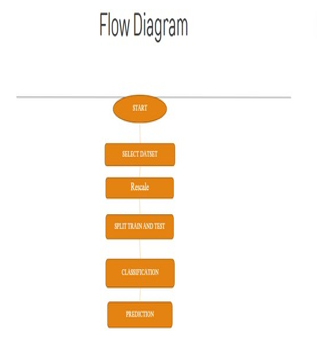

Flow Diagram:

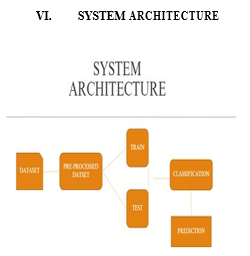

V. IMPLEMENTATIONS: MODULES

- Data Selection and Loading

- Data Preprocessing

- Rescaling

- Splitting Dataset into Train and Test Data

- Classification

- Prediction

- Result Generation

VII. FUTURE ENHANCEMENT

A. Enhancing Spoof Detection Algorithms

In the ever-evolving landscape of facial recognition technology, the detection of spoofing attempts, such as deepfake videos or high-quality masks, has become increasingly critical. To address this challenge, the development and integration of more sophisticated spoof detection algorithms are imperative. These algorithms leverage advanced computer vision and machine learning methods to identify and mitigate realistic spoofing attempts effectively.

Advanced computer vision techniques enable the system to analyze facial features, textures, and motion patterns in-depth, distinguishing between genuine faces and spoofing artifacts with greater accuracy. Machine learning algorithms play a crucial role in training the system to recognize subtle cues indicative of spoofing, continuously improving its detection capabilities over time.

By harnessing large datasets of both genuine and spoofed facial images, these algorithms can learn complex patterns and variations inherent in spoofing attempts. Deep learning architectures, such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs), are particularly effective in extracting meaningful features from facial data, enabling the system to discern between real and fake faces with high precision.

Furthermore, the integration of anomaly detection techniques enhances the system's ability to detect previously unseen spoofing methods.

By identifying deviations from normal facial characteristics or behaviors, these algorithms can flag suspicious activities indicative of spoofing attempts, thereby bolstering the system's overall security posture.

B. Multi-Modal Authentication

In addition to advancing spoof detection capabilities, implementing multi-modal biometric authentication systems.

By combining face recognition with other biometric modalities such as iris recognition, fingerprint scanning, or voice recognition, these systems create a multi-layered authentication framework that significantly enhances security and resilience. Each biometric modality captures unique physiological or behavioral characteristics, making it inherently resistant to spoofing attacks. By integrating multiple modalities, the system can leverage the strengths of each biometric trait while mitigating their individual weaknesses.

For example, while facial recognition excels in user convenience and non-intrusiveness, iris recognition offers unparalleled accuracy and resistance to spoofing. By requiring simultaneous verification of both facial and iris biometrics, the system can achieve a higher level of confidence in user authentication, reducing the risk of unauthorized access due to spoofing attempts.

Moreover, the fusion of biometric modalities enhances the system's adaptability to diverse environmental conditions and user preferences. In scenarios where facial recognition may be compromised due to poor lighting or occlusions, iris or fingerprint authentication can serve as reliable fallback options, ensuring seamless user authentication under various circumstances.

C. Continuous Learning and Adaptation

To stay ahead of evolving threats, facial recognition systems must incorporate mechanisms for continuous learning and adaptation. This entails leveraging feedback from real-world usage scenarios and adversarial testing to improve the system's robustness and accuracy over time.

By collecting data on user interactions and authentication outcomes in real-time, the system can dynamically adjust its algorithms and parameters to adapt to emerging patterns and trends.

Machine learning algorithms play a pivotal role in this process, as they can analyze vast amounts of data and identify subtle changes indicative of potential security threats or performance degradation. Adversarial testing, which involves deliberately subjecting the system to spoofing attempts and other security challenges, serves as a valuable source of feedback for system improvement. By simulating real-world attack scenarios, adversarial testing helps identify vulnerabilities and weaknesses in the system's defenses, prompting the development of countermeasures and mitigation strategies.

Conclusion

In this study, Recently, age, gender, and the regency of personal photos have become important information for several organizations and governments for business, identification, security and, usage. Also, this data collected from persons through the enterprise system, so the form validators were proposed to reduce the user data entry errors. In this paper, we attempt to propose a new solution to validate these data by predicting age and gender from a single person photo and comparing it with age, gender and tan detection. Then, after evaluation, we found it has good results in gender prediction, but it still suffers in age prediction. it has good results in Tan prediction Consume.

References

[1] R. Yamashita, M. Nishio, R. K. G. Do, and K. Togashi, ?Convolutional neural networks: an overview and application in radiology, ? Insights into Imaging, vol. 9, no. 4. Springer Verlag, pp. 611–629, Aug. 01, 2018, doi: 10.1007/s13244-018-0639-9. [2] Paul Viola and Michael J Jones. Robust real-time face detection. International journal of computer vision, 57(2):137– 154, 2004. [3] Savas, Burcu Kir, Sumeyya Ilkin, and Yasar Becerikli. \"The Realization of Face Detection and Fullness Detection in Medium by Using Haar Cascade Classifiers.\" 2016 24th Signal Processing and Communication Application Conference (SIU), 2016. doi:10.1109/siu.2016.7496215. [4] Rath, Subrat Kumar, and Siddharth Swarup Rautaray. \"A survey on face detection and recognition techniques in different application domain.\" International Journal of Modern Education and Computer Science 6.8 (2014): 34 [5] Hatem, Hiyam, Zou Beiji, and Raed Majeed. \"A survey of feature base methods for human face detection.\" International Journal of Control and Automation 8.5 (2015): 61-78 [6] E. Alajrami, H. Tabash, Y. Singer and M. Astal, \"On using AI- Based Human Identification in Improving Surveillance System Efficiency\", 2019 International Conference on Promising Electronic Technologies (ICPET), 2019. Available: 10.1109/icpet.2019.00024 [Accessed 4 June 2020]. [7] Bhattacharya, Jhilik, et al. \"Real-time DNN-based Face Identification for the Blind.\" International Conference on Applications in Electronics Pervading Industry, Environment and Society. Springer, Cham, 2017. [8] Qawaqneh, Zakariya, Arafat Abu Mallouh, and Buket D. Barkana. \"Deep Convolutional Neural Network for Age Estimation based on VGG-Face Model.\" arXiv preprint arXiv:1709.01664 (2017) [9] N. Chauhan, \"Predict Age and Gender using Convolutional Neural Network and openCV\", Medium, 2020. [Online]. Available: https://towardsdatascience.com/predict-age-and-gender- usingconvolutional-neural-network-and-opencv-fd90390e3ce6. [Accessed: 05- Jun- 2020. [10] Liao, Haibin, et al. \"Age estimation of face images based on CNN and divide-and-rule strategy.\" Mathematical Problems in Engineering, 2018 [11] Uricár, Michal, et al. \"Structured output svm prediction of apparent age, gender and smile from deep features.\" Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops. 2016. [12] O. Agbo-Ajala and S. Viriri, \"Face-Based Age and Gender Classification Using Deep Learning Model\", Image and Video Technology, pp. 125-137, 2020 [13] J. Bhattacharya, F. Guzzi, S. Marsi, S. Carrato, and G. Ramponi,?Real-time DNN-based on the classification.2021

Copyright

Copyright © 2024 Sumathi. P, Aravindh N, Aravinth M, Paari S, Ashik Anto. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Download Paper

Paper Id : IJRASET61097

Publish Date : 2024-04-27

ISSN : 2321-9653

Publisher Name : IJRASET

DOI Link : Click Here

Submit Paper Online

Submit Paper Online