Ijraset Journal For Research in Applied Science and Engineering Technology


Movie and Music Recommendation System based on Facial Expressions

Authors: Mukesh Patil, Harsha Bodhe

DOI Link: https://doi.org/10.22214/ijraset.2024.58355


Abstract

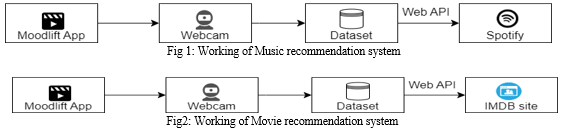

This paper presents a recommendation system that captures the user's facial expression and analyzes it with a Convolutional Neural Network (CNN). A CNN is a type of artificial neural network used primarily for image recognition and processing, as it learns to recognize patterns in images. The system uses the built-in webcam to capture the user's facial expression, which reduces cost compared with alternative methods. The main concern with existing recommendation systems is manual sorting, and the proposed model is intended to avoid it. ‘Movie and Music Recommendation System based on Facial Expressions’ provides a way to play music and movies automatically, without spending much time browsing. A common problem on third-party movie and music platforms is the sheer number of options: users get stuck deciding what to watch and spend much of their time browsing before settling on something specific. Computer vision is used to understand people in images and videos. This research project introduces a recommendation system that uses facial expressions in real time to customize movie and music suggestions through emotion analysis. The Haar Cascade algorithm and a CNN are used to detect facial features. Movie data is sourced from the IMDb site and songs from the Spotify API. The model is trained on FER 2013 sample images. It has an input layer, a convolutional layer, a dense layer and an output layer. After the emotions are detected, mood classification is performed (for songs: tone, valence, timbre; for movies: happy, surprise, sad, neutral, anger). The model is presented on a user-friendly website created using HTML and CSS, with Flask as the interface. This system yields better performance results.

Introduction

I. INTRODUCTION

Music and movies are known to influence human emotions, enhancing mood and reducing depression. The primary objective of this project is to develop a model that detects emotions from facial expressions. To achieve this, we need a database of songs and movies organized by mood and genre, which is used to recommend songs and movies based on the detected expression. The project also aims to provide an easy-to-use interface so that users can readily access the website. The website interface is created using HTML and CSS with Flask. A further objective is to evaluate and test the accuracy and effectiveness of the model in detecting emotions and retrieving matching content. Since the large number of music and movie options makes choosing confusing, this model is helpful for those who want results seamlessly. An easy-to-use interface is the most important part of delivering recommendations in real time. A Convolutional Neural Network is used to detect and analyze the emotions. It consists of an input layer, a convolutional layer, a dense layer and an output layer. The CNN extracts features from the image and determines the specific expression. To detect emotions accurately, we first detect faces within an image and then run the model to classify the expression. In terms of results, the major goal was to establish a framework that helps users find a choice that suits them. The project seeks to discover the correlation and similarity between different songs and to construct a recommendation framework that suggests new movies and songs.
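To illustrate how a convolutional layer extracts features from an image, the following is a minimal pure-Python sketch of a single filter sliding over a small grayscale grid. The function names, the toy image and the edge-detecting kernel are illustrative assumptions and are not taken from the project code; a real model would learn its kernels during training and would rely on a library such as TensorFlow.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide `kernel` over `image` with stride 1 and no padding.

    As in most deep learning libraries, this is technically a
    cross-correlation: the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[y + i][x + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]


def relu(feature_map: Matrix) -> Matrix:
    """Apply the ReLU activation element-wise (negative responses become 0)."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Toy 4x4 image with a dark left half and a bright right half (hypothetical data).
toy_image = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
# A 1x2 kernel that responds where brightness increases from left to right.
edge_kernel = [[-1.0, 1.0]]

feature_map = relu(conv2d_valid(toy_image, edge_kernel))
```

The resulting feature map is non-zero only in the column where the dark and bright halves meet, which is the sense in which a convolutional layer "extracts" a local pattern.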

II. LITERATURE REVIEW

Computer vision and machine learning techniques are integrated to compile a music dataset and play a playlist based on detected facial expressions. A Convolutional Neural Network and a Deep Neural Network are used to recognize human emotions and patterns. The webcam feed is processed using the streamlit-webrtc and streamlit packages. The MediaPipe, NumPy, Keras and OS module libraries are used to run the model. The advantages were better security and accurate face detection [1]. To reduce computational time and improve the efficiency of the recommendation system, a Convolutional Neural Network has been used to recognize human emotions. Pygame is used for the music recommendation system.

After detecting expressions from the webcam, the system proceeds to perform its recommendation task. The emotions that the system could detect were happy, sad, angry, neutral, or surprised. After determining the user’s emotion, the proposed system provided the user with a playlist of music matching the detected mood. The advantage was accurate reading of frames; the disadvantage was that the system required large amounts of data storage [2]. The music recommendation system based on facial emotion recognition aims to reduce the effort users spend creating and managing playlists. Tkinter and a Convolutional Neural Network are used to detect the emotion in the user's image and map it to a specific genre. Its stated merit was accurate recommendation, and its demerit was that processing took up most of the CPU's memory [3]. Another system is a hybrid network that combines a recurrent neural network (RNN) with a 3D convolutional neural network (C3D). Extensive experiments show that combining RNN and C3D can improve video-based emotion recognition [4]. Facial landmarks are extracted automatically while emotions are displayed. The landmarks of the face are taken into consideration, and salient patches are localized accordingly [5]. Salient features are extracted from the face to capture emotions, and computer vision plays a vital role in responding to the captured expressions [6]. A Convolutional Neural Network captures expressions to suggest music for an individual's specific expression, for example when the user feels exhausted, tired, happy or good. Pre-trained sequential networks are used to train on the datasets when emotions are being detected and extracted [6].

III. PROBLEM STATEMENT

To make a music and movie recommendation system seamless and effortless, driven by human facial expressions, operating without manual intervention and requiring only simple user interaction.

IV. PROPOSED SYSTEM

A. Proposed Statement

To design and implement a real time music and movie recommendation system which detects the facial expressions of the user using an inbuilt webcam, where the emotions are detected by Convolutional Neural Network.

B. Proposed Solution

First, the system captures the user's expression and extracts features from the image using a Convolutional Neural Network together with MediaPipe. The system is trained on the FER 2013 dataset, which enables it to identify emotions. The captured image is input to the CNN, which learns features directly. The features are analyzed to determine the user's current emotion. Each detected emotion is mapped by the model, named ‘MoodLift’, to corresponding music via the Spotify API and movies through the IMDb site automatically. The system aims for a better user experience through human-computer interaction.
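The mapping from a detected emotion to a recommendation can be sketched as a simple lookup. This is a minimal illustration under stated assumptions, not the MoodLift implementation: the music genres for happy, sad and neutral are those named in the Methodology section, while the movie genres, the `recommend` function and the fallback to neutral are hypothetical placeholders. A real deployment would query the Spotify API and IMDb instead of returning genre names.

```python
from typing import Dict

# Music genres for happy, sad and neutral follow the Methodology section.
# The paper names no music genre for angry or surprised, so they are omitted.
MUSIC_BY_EMOTION: Dict[str, str] = {
    "happy": "pop/EDM",
    "sad": "mellow",
    "neutral": "lo-fi",
}

# Hypothetical movie genres, chosen only to make the example concrete.
MOVIE_BY_EMOTION: Dict[str, str] = {
    "happy": "comedy",
    "sad": "drama",
    "angry": "action",
    "surprised": "thriller",
    "neutral": "documentary",
}

DEFAULT_EMOTION = "neutral"  # assumed fallback for unmapped emotions


def recommend(emotion: str) -> Dict[str, str]:
    """Return the music and movie genre associated with a detected emotion."""
    key = emotion.strip().lower()
    music = MUSIC_BY_EMOTION.get(key, MUSIC_BY_EMOTION[DEFAULT_EMOTION])
    movie = MOVIE_BY_EMOTION.get(key, MOVIE_BY_EMOTION[DEFAULT_EMOTION])
    return {"emotion": key, "music_genre": music, "movie_genre": movie}


suggestion = recommend("Happy")
```

Falling back to the neutral entry keeps the interface responsive when the classifier emits a label that has no curated playlist yet.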

V. SYSTEM REQUIREMENTS

A. Hardware Requirements

The following hardware is required:

- Processor – Intel Pentium 4 or equivalent

- RAM – Minimum of 4GB or higher

- HDD – 100GB or higher

- Architecture – 32-bit or 64-bit

- Mouse – Scroll or optical mouse

- Keyboard – Standard 110 keys keyboard

- Web camera

B. Software Requirements

- Operating System – Windows 10 or 11

- Programming Language – Python 3.10.9

- Microsoft C++ 14.0 Build Tools

- MediaPipe

- TensorFlow

VI. METHODOLOGY

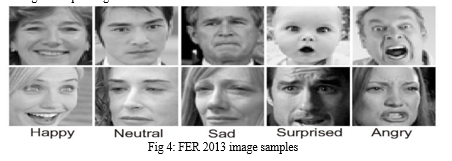

We built the recommendation system using a Kaggle dataset. The model is trained on FER 2013 sample images, which are 48x48 pixel grayscale images of faces. The training set consists of 24176 images and the testing set contains 6043 images. The FER-2013 dataset, which comprises around 35000 photos, was utilized for model training and validation. We created the emotion recognition model in Python and deployed it as a web application using Flask, on a website created with HTML and CSS. CV2, TensorFlow, NumPy, matplotlib, and other libraries were also used to develop the working model. The model is built using a transfer learning approach based on the MobileNet model. Based on the five emotions, a new dataset of movies and music was constructed from movie and song data that correlates with the various emotions. To create this model, we perform the following steps:
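As an illustration of how a 48x48 grayscale sample might be prepared before training, the sketch below parses one image and scales it to the range [0, 1]. It assumes the CSV distribution of FER-2013 on Kaggle, in which each image is stored as a string of 2304 space-separated pixel intensities; the function name and the scaling choice are assumptions, not the authors' pre-processing code.

```python
from typing import List

IMAGE_SIZE = 48  # FER-2013 faces are 48x48 grayscale images


def parse_fer_pixels(pixel_string: str, size: int = IMAGE_SIZE) -> List[List[float]]:
    """Convert a space-separated pixel string into a size x size grid in [0, 1]."""
    values = [int(v) for v in pixel_string.split()]
    if len(values) != size * size:
        raise ValueError(
            f"expected {size * size} pixel values, got {len(values)}"
        )
    # Row-major reshape, dividing by 255 so every intensity lies in [0, 1].
    return [
        [values[row * size + col] / 255.0 for col in range(size)]
        for row in range(size)
    ]


# Hypothetical all-white sample used only to exercise the function.
white_sample = " ".join(["255"] * (IMAGE_SIZE * IMAGE_SIZE))
grid = parse_fer_pixels(white_sample)
```

Scaling to [0, 1] is a common convention for neural network inputs; a pre-trained backbone such as MobileNet may expect a different input size and normalization, which would need an additional resizing step.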

- Data Collection

- Data pre-processing

- Data training

- Data testing

- Data evaluation

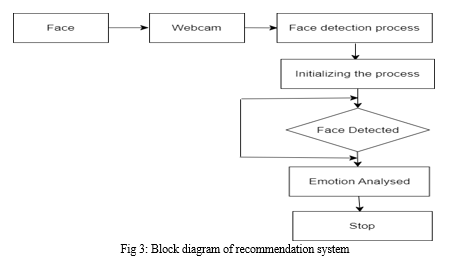

For data collection, we gathered datasets of movies and music that can be classified according to the emotions (happy, sad, angry, surprised, neutral). The next step is pre-processing, which turns the raw data into a clean dataset. The model is then trained on the expressions using FER 2013 image samples and tested over numerous epochs to improve its performance. Once trained, the model can detect these emotions. The detected emotion is then evaluated, and movies and music matching the identified expression are displayed. Face detection falls under computer vision, which can detect and recognize faces in images captured by a webcam. In this process, algorithms are trained to detect objects or faces, either in real time or in recorded videos and images. The OS module, a Python utility module that provides functions for interacting with the operating system, is included in the program. The Haar cascade frontal default model is used to detect faces and train the model.
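Haar cascade detectors are built from rectangular features that are evaluated quickly using an integral image. The sketch below shows that building block only, in pure Python and on a toy grid; the function names and the sample image are illustrative assumptions. In practice the pretrained cascade would be loaded through OpenCV's `cv2.CascadeClassifier` rather than implemented by hand.

```python
from typing import List

Grid = List[List[int]]


def integral_image(img: Grid) -> Grid:
    """Return a (h+1) x (w+1) table where ii[y][x] is the sum of img[:y][:x]."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii: Grid, top: int, left: int, height: int, width: int) -> int:
    """Sum of the pixels inside a rectangle, using four table lookups."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]


def two_rect_feature(ii: Grid, top: int, left: int, height: int, width: int) -> int:
    """Left half minus right half of a window: responds to vertical edges."""
    half = width // 2
    left_sum = rect_sum(ii, top, left, height, half)
    right_sum = rect_sum(ii, top, left + half, height, half)
    return left_sum - right_sum


# Toy 4x4 image: bright left half, dark right half (hypothetical data).
toy = [[1, 1, 0, 0] for _ in range(4)]
ii = integral_image(toy)
edge_response = two_rect_feature(ii, top=0, left=0, height=4, width=4)
```

Because every rectangle sum costs four lookups regardless of its size, a cascade can evaluate many such features per window, which is what makes this family of detectors suitable for real-time webcam input.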

After testing numerous Haar cascade models for different angles of the face, we came to the conclusion that the frontal default version of the model was not the most appropriate. The evaluation showed that the face should be facing the camera directly, there should be no noise in the image or on the face, and the face should not be tilted.

When a custom model was used with custom parameters, the measured accuracy was good, but the predictions were not reliable. The first model produced false positives for ‘neutral’, classifying happy faces as neutral. There were also a few false positives for ‘sad’. The VGG16 model reached 61% accuracy on the testing data and performed better than the custom parameters. However, the confusion matrix revealed weaknesses, as the model identified a few sad images as neutral. VGG16 was efficient at classifying very diverse expressions, but it could not distinguish subtle differences between emotions, and it also took up a lot of space. To overcome this issue, VGG16 was used with custom parameters, which gave better performance.

The Mood Classifier is used to classify music and movies according to the different emotions. The main aspect of music recommendation was to train the model on different features of a song, such as acoustics, timbre, tone and rhythm, to determine whether a song was happy, sad, neutral or energetic. We found playlists of songs by genre and mood on Spotify. For happy songs we used pop and EDM, for sad songs we used mellow, and for neutral songs we used lo-fi. More than 300 songs per genre were used to create a dataset of 1200 songs. For movie recommendation, we trained the model on FER 2013 dataset images to extract features and determine the genre depending on the five emotions.
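The kind of analysis described above, where a confusion matrix exposes sad faces being predicted as neutral, can be sketched as follows. The labels in the example are toy values invented for illustration and are not results from the paper; the function names are likewise assumptions.

```python
from collections import Counter
from typing import List, Sequence

EMOTIONS = ["happy", "sad", "angry", "surprised", "neutral"]


def confusion_matrix(
    true_labels: Sequence[str],
    predicted_labels: Sequence[str],
    labels: Sequence[str] = EMOTIONS,
) -> List[List[int]]:
    """Rows are true classes, columns are predicted classes."""
    counts = Counter(zip(true_labels, predicted_labels))
    return [[counts[(t, p)] for p in labels] for t in labels]


def accuracy(matrix: List[List[int]]) -> float:
    """Fraction of samples on the diagonal (correct predictions)."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total if total else 0.0


# Toy labels (not data from the paper): one sad face is predicted as neutral.
y_true = ["happy", "sad", "sad", "neutral"]
y_pred = ["happy", "neutral", "sad", "neutral"]

matrix = confusion_matrix(y_true, y_pred)
sad_as_neutral = matrix[EMOTIONS.index("sad")][EMOTIONS.index("neutral")]
overall = accuracy(matrix)
```

Reading the off-diagonal cell for the (sad, neutral) pair shows which confusion dominates, information that a single accuracy figure hides.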

VII. FUTURE WORK INSIGHTS

The recommendation system has considerable potential for further development and deployment. Facial recognition technology has long been employed as an effective method for understanding and interpreting human emotions. Future work may involve integrating the system with a larger dataset of movies and music, connecting that dataset to the Spotify API for music and IMDb for movies through the website, and creating web pages that display the datasets for the various genres. With ongoing study, the proposed system has the potential to enhance both human wellbeing and the music listening experience. To interlink the music dataset, a playlist will have to be created on Spotify for each specific genre. Training on FER 2013 image samples is to be carried out over many more epochs to improve performance. A further goal is to recognize emotions accurately despite noisy or blurred images. Computer vision and machine learning techniques are to be applied to connect facial emotion with recommendation. The approach is to use Deep Neural Networks (DNNs) to learn the most appropriate feature abstractions; DNNs have recently been successful in visual object recognition, human pose estimation, facial verification and many other tasks. VGG16 with custom parameters is to be used to make the model more efficient and to determine emotions more accurately when displaying results.

References

[1] S. Sunitha, V. Jyothi, P. Ramya, S. Priyanka, ‘Music Recommendation Based on Facial Expression by Using Deep Learning’, IRJMETS, 2023.

[2] Rahul Ravi, S. V. Yadhukrishna, Rajalakshmi, Prithviraj, ‘A Face Expression Recognition Using CNN & LBP’, IEEE, 2020.

[3] Madhuri Athavle, Deepali Mudale, Deepali Shrivastav, Megha Gupta, ‘Music Recommendation Based on Face Emotion Recognition’, JIEEE, 2021.

[4] Fan, Yin, et al., ‘Video-based emotion recognition using CNN-RNN and C3D hybrid networks’, Proceedings of the 18th ACM International Conference on Multimodal Interaction, ACM, 2016.

[5] S. L. Happy and A. Routray, ‘Facial expression recognition using features of salient facial patches’, IEEE, 2015.

[6] Madhuri Athavale, ‘Music Recommendation Based on Face Emotion Recognition’, JIEEE, 2021.

Copyright

Copyright © 2024 Mukesh Patil, Harsha Bodhe. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET58355

Publish Date : 2024-02-08

ISSN : 2321-9653

Publisher Name : IJRASET
