Ijraset Journal For Research in Applied Science and Engineering Technology


MRI Tumor Segmentation and Tumor Classification using Deep Learning

Authors: Dr. Supriya Dinesh, Poonam Balasaheb Hajare, Yashika Deepak Agarwal

DOI Link: https://doi.org/10.22214/ijraset.2024.60903


Abstract

Magnetic resonance imaging (MRI) is a non-invasive medical imaging technique that uses a magnetic field and computer-generated radio waves to produce three-dimensional, finely detailed anatomical images of the body's organs and tissues. It is frequently employed in the detection, diagnosis, and monitoring of diseases. Manually reading and classifying these images is a tedious task that requires dedicated professionals. The proposed work aims to ease this task by applying deep learning techniques to MRI tumor segmentation and classification, using VGG and CNN architectures for this purpose. This paper demonstrates the use of deep learning in MRI classification.


I. INTRODUCTION

MRI (magnetic resonance imaging) is a medical imaging method that uses powerful magnets and radio waves to produce finely detailed pictures of the inside of the body, and it is often employed to identify and track a range of medical conditions. The strong magnetic field aligns the hydrogen atoms in the body; when radio-frequency pulses are applied, these atoms emit signals that are used to construct the images.

Brain tumors are among the most dangerous types of tumor. Glioma, the most prevalent primary brain tumor, arises from the malignant transformation of glial cells in the brain and spinal cord. Patients with glioblastoma often have an average survival period of less than 14 months following diagnosis, and gliomas span many histological types and malignancy grades. Medical professionals have long used MRI, a popular non-invasive method that produces a multitude of distinct tissue contrasts across its imaging modalities, to identify brain malignancies. However, manually segmenting and analyzing structural MRI images of brain tumors is laborious and time-consuming, and it can currently only be done by qualified neuroradiologists. An automated and reliable brain tumor segmentation system would therefore greatly benefit brain tumor identification and therapy. It could also support the prompt detection and treatment of neurological conditions such as schizophrenia and Alzheimer's disease (AD). With an automated lesion segmentation approach, radiologists can obtain critical information about the volume, location, and shape of tumors (including enhancing tumor, tumor core, and whole tumor regions), making therapeutic decisions more relevant and successful. Segmenting tumors from normal adjacent tissue (NAT) is difficult because tumors vary in size, location, and shape, and because of the bias field, an undesired artifact resulting from imperfect image acquisition.

A. MRI Scan Evaluation And Details

Brain evaluation results from MRI scans may be normal or abnormal. The three main types of normal brain tissue seen in MRI are cerebrospinal fluid (CSF), white matter (WM), and gray matter (GM). In addition to these normal tissues, a scan of a brain with a tumor frequently shows edema, necrosis, and the tumor core. Necrosis refers to dead cells within the tumor core, whereas edema appears near the edges of the active tumor. Edema is swelling caused by fluid trapped around a tumor. It can be vasogenic, as in non-infiltrative extra-axial tumors such as meningiomas, or infiltrative, as in tumors such as gliomas that penetrate the brain's WM tracts. Moreover, these tissues frequently show similar intensity characteristics in structural MRI sequences, including FLAIR, T1-weighted (T1-w), and T2-weighted (T2-w) images. For example, the difficulty of distinguishing tumor-associated inflammation from the tumor itself has been noted. Alves et al. likewise showed how hard it is to distinguish between cancers based on signal intensities alone: they described two patients who were diagnosed with two distinct types of brain tumor even though their lesions had similar characteristics and were surrounded by large amounts of edema. Brain tumors are one of the main causes of death in both adults and children.

According to the American Brain Tumor Association (ABTA), about 612,000 people in the West receive a brain tumor diagnosis. Tumors, also called neoplasms, are abnormal masses of tissue that arise from aberrant cell growth. Brain tumors are classified along two main dimensions: origin (primary or metastatic) and growth behavior (malignant or benign). MRI can reveal very fine details within the body and is frequently used in the management of brain lesions and other malignancies. It makes it possible to detect abnormalities and delineate anatomical structures, it can identify changes in tissues or structures more accurately than computed tomography (CT), and it can also be used to measure tumor size. Because identifying brain tumors by hand is difficult, it is essential to automate their segmentation and classification using computer vision techniques. Clinical procedures enable the extraction of pertinent data and a comprehensive analysis of images, while computational tools help interpret the subtleties of medical imaging. Accurately assessing tumor morphology is essential to improving treatment outcomes. Despite significant efforts in this field, clinicians still delineate tumors manually, which hinders communication between researchers and medical professionals.

II. LITERATURE REVIEW

Many automatic classification systems have been proposed recently; they can be grouped according to their feature-learning and evaluation procedures. The CNN is one of the more recent deep learning approaches; it has proven reliable and is widely used in medical image analysis. Compared with traditional techniques, however, CNNs are less effective on small datasets, demand powerful GPUs, have high time complexity, and require large training datasets, all of which increase user costs. Choosing an appropriate deep learning setup can also be very challenging, because it requires knowledge of many parameters, training techniques, and network topologies. Sakshi Ahuja et al. [1] employed a superpixel approach for brain tumor segmentation and transfer learning for tumor identification. Their model was trained with the VGG-19 transfer learning model on data from the BraTS 2019 brain tumor segmentation challenge, and the superpixel method was used to segment tumors in LGG (low-grade glioma) and HGG (high-grade glioma) images. The resulting segmentations achieved an average Dice index of 0.934 with respect to the ground-truth data. Hajar Cherguif et al. [2] used U-Net for semantic segmentation of medical images, building a 2D convolutional segmentation network on the U-Net design. The proposed model, with 27 convolutional layers and four deconvolutional layers, was tested and evaluated on the BraTS 2017 dataset and achieved a Dice coefficient of 0.81. To obtain correct findings from MRI scans, Chirodip Lodh Choudhury et al. [3] combined deep neural networks with a convolutional neural network model: a proposed 3-layer CNN architecture was coupled to a fully connected neural network, attaining an accuracy of 96.05% and an F-score of 97.33.
Ahmad Habbie Thias et al. [4] applied semi-automatic segmentation with active contour models to T1-weighted MRI images to determine whether a brain tumor was likely present.

They analyzed the effectiveness of three methods: morphological geodesic active contour (MGAC), morphological active contour without edges, and snake active contour. According to their results, MGAC outperformed the other two.

Noreen et al. [5] addressed brain tumor diagnosis using a concatenation technique for deep learning models. The pre-trained Inception-v3 and DenseNet201 models were used to identify and classify brain tumors: features extracted by the pre-trained networks were concatenated, and the combined features were then passed to a classification stage.

III. METHODOLOGY

A. Algorithm

- Image Acquisition: MRI scans are performed to capture detailed images of the patient's body, specifically the area of interest where the tumor is located.

- Preprocessing: The acquired MRI images undergo preprocessing steps like noise reduction, image enhancement, and normalization to improve the quality and consistency of the images.

- Tumor Segmentation: Using advanced image processing algorithms, the tumor region is segmented and outlined in the MRI images. This involves identifying the boundaries and differentiating the tumor from the surrounding healthy tissues.

- Feature Extraction: Relevant features, such as shape, texture, and intensity characteristics, are extracted from the segmented tumor region. These features provide quantitative information about the tumor's properties.

- Tumor Classification: Machine learning or statistical models are trained using the extracted features to classify the tumor into different types or categories. This step helps in determining the tumor's malignancy, grade, or subtype.

- Diagnosis and Treatment Planning: The classified tumor information is analyzed by medical professionals to make accurate diagnoses and plan appropriate treatment strategies tailored to the patient's specific condition.
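The preprocessing and feature-extraction steps above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the authors' pipeline: z-score normalization stands in for the unspecified normalization step, and the helper names `normalize_zscore` and `tumor_features`, as well as the particular feature set (area, centroid, bounding box, extent, intensity statistics), are hypothetical.

```python
import numpy as np

def normalize_zscore(image, brain_mask=None):
    """Z-score normalize intensities (assumed normalization), using only brain pixels if a mask is given."""
    image = image.astype(float)
    region = image[brain_mask] if brain_mask is not None else image.ravel()
    std = region.std()
    return (image - region.mean()) / (std if std > 0 else 1.0)

def tumor_features(image, tumor_mask):
    """Hypothetical shape and intensity features of a binary tumor mask; None if the mask is empty."""
    ys, xs = np.nonzero(tumor_mask)
    if ys.size == 0:
        return None
    height = ys.max() - ys.min() + 1
    width = xs.max() - xs.min() + 1
    values = image[tumor_mask]
    return {
        "area_px": int(ys.size),                           # shape: size in pixels
        "centroid": (float(ys.mean()), float(xs.mean())),  # shape: location
        "bbox_hw": (int(height), int(width)),              # shape: bounding-box size
        "extent": ys.size / float(height * width),         # 1.0 for a filled rectangle
        "mean_intensity": float(values.mean()),            # intensity statistics
        "std_intensity": float(values.std()),              # crude texture proxy
    }

# Toy 8x8 "scan" with a bright 3x4 region standing in for a segmented tumor.
scan = np.zeros((8, 8))
scan[2:5, 3:7] = 4.0
mask = scan > 0
norm = normalize_zscore(scan)
feats = tumor_features(norm, mask)
print(feats)
```

In practice the mask would come from the segmentation step and the feature vector would feed the classification step; a deep model can instead learn its features directly from the image.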

B. Datasets and Evaluation Metrics

Training and assessing deep learning models for tumor segmentation and classification requires access to annotated MRI datasets. Research in this area has been made easier by a number of publicly accessible datasets, including the TCIA (The Cancer Imaging Archive) repository, the MICCAI (Medical Image Computing and Computer Assisted Intervention) Brain Tumor Segmentation Challenge datasets, and the BraTS (Brain Tumor Segmentation) dataset. Evaluation criteria including the area under the receiver operating characteristic (ROC) curve, sensitivity, specificity, and dice similarity coefficient (DSC) are frequently used to evaluate segmentation and classification performance.
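The evaluation metrics named above can be computed directly from binary masks and scores. The sketch below, assuming NumPy and boolean masks of equal shape, implements the Dice similarity coefficient, sensitivity, and specificity from pixel-level confusion counts, and ROC AUC through its rank interpretation (the probability that a randomly chosen positive scores higher than a randomly chosen negative). The function names are illustrative; an established implementation such as scikit-learn's `roc_auc_score` would normally be preferred.

```python
import numpy as np

def confusion_counts(pred, truth):
    """True/false positive/negative pixel counts for two binary masks of equal shape."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = int(np.sum(pred & truth))
    fp = int(np.sum(pred & ~truth))
    fn = int(np.sum(~pred & truth))
    tn = int(np.sum(~pred & ~truth))
    return tp, fp, fn, tn

def dice(pred, truth):
    """DSC = 2|P and T| / (|P| + |T|); defined here as 1.0 when both masks are empty."""
    tp, fp, fn, _ = confusion_counts(pred, truth)
    denom = 2 * tp + fp + fn
    return 1.0 if denom == 0 else 2 * tp / denom

def sensitivity(pred, truth):
    tp, _, fn, _ = confusion_counts(pred, truth)
    return tp / (tp + fn)   # fraction of tumor pixels that were detected

def specificity(pred, truth):
    _, fp, _, tn = confusion_counts(pred, truth)
    return tn / (tn + fp)   # fraction of healthy pixels correctly left out

def roc_auc(scores, labels):
    """AUC as P(score of a random positive > score of a random negative), ties counted as 1/2."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    pos, neg = scores[labels], scores[~labels]
    greater = np.sum(pos[:, None] > neg[None, :])
    ties = np.sum(pos[:, None] == neg[None, :])
    return float((greater + 0.5 * ties) / (pos.size * neg.size))

truth = np.array([[0, 1, 1], [0, 1, 0], [0, 0, 0]], dtype=bool)
pred = np.array([[0, 1, 0], [0, 1, 1], [0, 0, 0]], dtype=bool)
print(dice(pred, truth), sensitivity(pred, truth), specificity(pred, truth))
print(roc_auc([0.9, 0.8, 0.4, 0.3], [1, 0, 1, 0]))
```

For these toy masks there are 2 true positives, 1 false positive, 1 false negative, and 5 true negatives, so Dice = 4/6, sensitivity = 2/3, and specificity = 5/6; three of the four positive/negative score pairs in the AUC example are correctly ordered, giving 3/4.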

C. MRI Tumor Segmentation

Segmentation is the technique of dividing an image into regions of interest. In MRI tumor analysis, segmentation separates the tumor and its borders from the surrounding healthy tissue. Deep learning-based segmentation techniques have shown impressive results in this task. A number of designs have been proposed for MRI tumor segmentation, including convolutional neural networks (CNNs), recurrent neural networks (RNNs), and combinations of the two. To improve segmentation accuracy, these approaches make use of large annotated datasets together with multi-scale processing, attention mechanisms, and generative adversarial networks (GANs). U-Net is a well-known architecture for MRI tumor segmentation that has shown outstanding performance thanks to a design made specifically for medical image analysis. This work presents a focused evaluation of U-Net's use in MRI tumor detection, emphasizing its innovations, architecture, advantages, and drawbacks, and it also reviews several U-Net extensions and modifications and how they affect segmentation efficiency and accuracy. The U-Net design consists of a contracting path, which extracts features using a sequence of convolutional and pooling layers, and an expanding path, which performs segmentation by upsampling feature maps and concatenating them with feature maps from the contracting path. This symmetric design preserves contextual information while enabling accurate object localization. In addition, skip connections link corresponding levels of the contracting and expanding paths.
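The contracting path, expanding path, and skip connections described above can be illustrated with a deliberately tiny, untrained U-Net written in plain NumPy. This is a sketch under several simplifying assumptions, not the architecture evaluated in this work: it has a single resolution level, random He-style weights, nearest-neighbour upsampling in place of learned up-convolutions, and zero-padded ("same") convolutions so that skip features need no cropping. The names `TinyUNet`, `conv3x3`, `max_pool2x2`, and `upsample2x` are hypothetical; a practical model would be built and trained in a deep learning framework.

```python
import numpy as np

def conv3x3(x, weight, bias):
    """Zero-padded ('same') 3x3 convolution. x: (C_in, H, W); weight: (C_out, C_in, 3, 3)."""
    _, h, w = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((weight.shape[0], h, w))
    for i in range(3):
        for j in range(3):
            # Add the contribution of kernel tap (i, j), summed over all input channels.
            out += np.einsum("oc,chw->ohw", weight[:, :, i, j], padded[:, i:i + h, j:j + w])
    return out + bias[:, None, None]

def relu(x):
    return np.maximum(x, 0.0)

def max_pool2x2(x):
    """2x2 max pooling with stride 2 (H and W must be even): contracting-path downsampling."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))

def upsample2x(x):
    """Nearest-neighbour 2x upsampling, a stand-in for U-Net's learned up-convolution."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

def init_conv(rng, c_out, c_in, k=3):
    scale = np.sqrt(2.0 / (k * k * c_in))   # He-style initialisation
    return rng.standard_normal((c_out, c_in, k, k)) * scale, np.zeros(c_out)

class TinyUNet:
    """One-level U-Net: conv -> pool -> conv -> upsample -> concat skip -> conv -> 1x1 head."""

    def __init__(self, in_channels=1, base=4, seed=0):
        rng = np.random.default_rng(seed)
        self.enc = init_conv(rng, base, in_channels)
        self.bottleneck = init_conv(rng, 2 * base, base)
        self.dec = init_conv(rng, base, 2 * base + base)   # upsampled bottleneck + skip channels
        self.head_w = rng.standard_normal((1, base)) * np.sqrt(1.0 / base)  # 1x1 conv weights
        self.head_b = np.zeros(1)

    def forward(self, x):
        skip = relu(conv3x3(x, *self.enc))                             # (base, H, W)
        deep = relu(conv3x3(max_pool2x2(skip), *self.bottleneck))      # (2*base, H/2, W/2)
        merged = np.concatenate([upsample2x(deep), skip], axis=0)      # skip connection
        features = relu(conv3x3(merged, *self.dec))                    # (base, H, W)
        logits = np.einsum("oc,chw->ohw", self.head_w, features) + self.head_b[:, None, None]
        return 1.0 / (1.0 + np.exp(-logits))                           # per-pixel probability

mri_slice = np.random.default_rng(1).random((1, 16, 16))   # stand-in for a normalized MRI slice
probs = TinyUNet().forward(mri_slice)
mask = probs > 0.5                                          # untrained, so the mask is meaningless
print(probs.shape, mask.sum())
```

The point of the sketch is the data flow: the decoder sees both the coarse, context-rich bottleneck features and the full-resolution skip features, which is what lets U-Net localize boundaries precisely.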

D. MRI Tumor Classification

The goal of tumor classification is to assign segmented tumors to classes according to their features, such as grade, type, and malignancy. Deep learning methods have generally outperformed conventional machine learning methods in MRI tumor classification, which has led to their widespread use. These techniques usually feed characteristics extracted from the segmented tumors into deep neural networks that classify them. Architectures used for tumor classification include CNNs, recurrent neural networks (RNNs), and deep belief networks (DBNs), frequently combined with methods such as ensembling and transfer learning to increase classification accuracy.
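Ensembling, mentioned above, can be as simple as soft voting: averaging the class probabilities predicted by several classifiers and taking the most probable class. The NumPy sketch below assumes each model outputs raw logits; the two-class label set (benign versus malignant) and the logit values are made up for illustration and are not results from this work.

```python
import numpy as np

def softmax(logits):
    """Softmax over the last axis, shifted by the max for numerical stability."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

def soft_vote(per_model_logits):
    """per_model_logits: (n_models, n_samples, n_classes) -> (mean probabilities, predicted class)."""
    probs = softmax(np.asarray(per_model_logits, dtype=float)).mean(axis=0)
    return probs, probs.argmax(axis=1)

CLASSES = ("benign", "malignant")          # hypothetical label set
logits = [
    [[2.0, 0.0], [0.0, 1.0]],              # model A, two MRI cases
    [[1.0, 0.0], [0.0, 3.0]],              # model B
    [[0.0, 0.5], [0.2, 0.0]],              # model C disagrees with A and B on both cases
]
probs, labels = soft_vote(logits)
print([CLASSES[i] for i in labels])
```

Averaging probabilities rather than hard votes lets confident models count for more; here the two agreeing models outvote model C on both cases.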

E. VGG in CNN architecture

VGG is a popular CNN architecture known for its simple, uniform design and its effectiveness in image classification tasks.

In this work, the effectiveness of traditional CNN architectures and VGG for MRI tumor identification is compared. VGG is a deep architecture characterized by many convolutional layers, max-pooling layers, and fully connected layers for classification at the end. Small (3x3) convolutional filters are used throughout the network, which facilitates learning local information and hierarchical representations. VGG variants such as VGG16 and VGG19 (with 16 and 19 weight layers, respectively) differ in their number of layers and parameters, and deeper models can capture more intricate information.

CNN structure: conventional CNN designs for MRI tumor detection usually start with convolutional layers, followed by pooling layers for feature extraction and fully connected layers for classification. The number of layers, filter sizes, and activation functions can differ between these topologies. Although CNNs have demonstrated efficacy in a range of image processing applications, their performance may be influenced by factors such as dataset quality, network depth, and hyperparameter tuning.
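The trade-off between depth and parameter count in VGG16 and VGG19 can be made concrete by counting parameters from the layer configurations of the original VGG paper (configurations D and E): 3x3 convolutions with the listed channel widths, 2x2 max-pooling (marked "M"), and three fully connected layers on a 224x224 RGB input. The helper `vgg_param_count` is a hypothetical illustration; its defaults reproduce the ImageNet setting with 1000 classes, and `num_classes` could be set to the number of tumor categories for an MRI classifier.

```python
# Conv channel widths per layer; "M" marks a 2x2 max-pool (VGG paper configurations D and E).
VGG_CONFIGS = {
    "vgg16": [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
              512, 512, 512, "M", 512, 512, 512, "M"],
    "vgg19": [64, 64, "M", 128, 128, "M", 256, 256, 256, 256, "M",
              512, 512, 512, 512, "M", 512, 512, 512, 512, "M"],
}

def vgg_param_count(cfg, in_channels=3, input_size=224, fc_widths=(4096, 4096), num_classes=1000):
    """Return (trainable parameters, weight layers) for a VGG-style network."""
    params, channels, size, weight_layers = 0, in_channels, input_size, 0
    for layer in cfg:
        if layer == "M":
            size //= 2                                   # pooling halves the spatial size
        else:
            params += 3 * 3 * channels * layer + layer   # 3x3 kernel weights + biases
            channels = layer
            weight_layers += 1
    features = channels * size * size                    # flattened input to the classifier
    for width in (*fc_widths, num_classes):
        params += features * width + width               # dense weights + biases
        features = width
        weight_layers += 1
    return params, weight_layers

for name, cfg in VGG_CONFIGS.items():
    print(name, vgg_param_count(cfg))
```

With the defaults, VGG16 has 138,357,544 parameters across 16 weight layers and VGG19 has 143,667,240 across 19. Most of them sit in the first fully connected layer (7 x 7 x 512 inputs times 4096 outputs), so the three extra convolutional layers of VGG19 add depth at a relatively modest parameter cost.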

IV. RESULTS

Studies on MRI tumor segmentation and classification frequently conclude by emphasizing the potential and efficacy of deep learning in medical image analysis. Possible key themes include accuracy and precision, automation for efficiency, generalization across datasets, challenges and limitations, and therapeutic implications.

The purpose of this study is to provide a segmentation strategy that helps experts identify brain tumors and reduces the problem of imprecise diagnosis. The method segments and classifies brain tumors with a pixel accuracy of 98.8% and a classification accuracy of 98.93%. Given an MRI scan of a subject, it produces a corresponding tumor mask image.

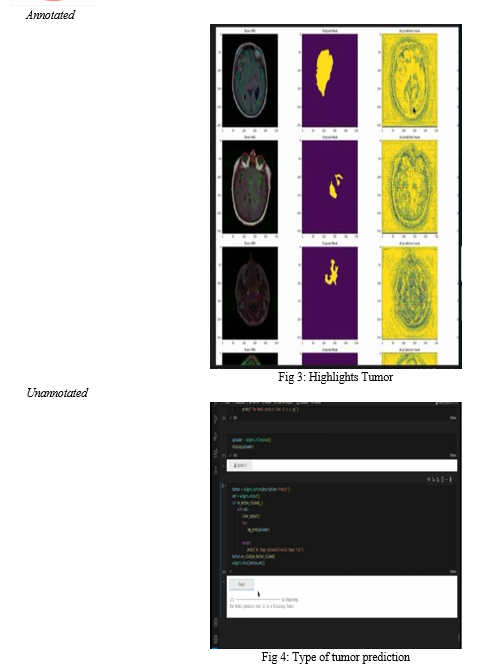

A. Annotated and Unannotated Approach

- Annotated Approach: In the annotated approach, tumor locations are marked in mask images that correspond to the MRI images. These annotated images are used to train deep learning algorithms to learn the correlation between tumor sites and visual attributes. The explicit supervision that annotated masks provide during model training makes accurate tumor detection possible.

- Unannotated Approach: In this method, tumors are identified directly from MRI images without the need for specific annotations. Deep learning models are trained using only the raw MRI scans, with no further annotations. The method relies on the model inferring tumor areas implicitly, without explicit supervision, and on its ability to learn discriminative features directly from the input images.
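The difference between the two approaches above shows up in what the training signal needs. In the sketch below, assuming NumPy and a synthetic image, the annotated case is represented by a pixel-wise binary cross-entropy loss, which cannot be computed without a mask, while the unannotated case is represented by k-means clustering of pixel intensities, which uses no labels at all. K-means is swapped in only as a simple, familiar unsupervised example; it is not the method used in this work, and treating the brightest cluster as the lesion is an assumption that holds only for sequences in which tumors appear hyperintense. The function names are hypothetical.

```python
import numpy as np

# Annotated approach: the mask supervises every pixel.
def bce_loss(probs, mask, eps=1e-7):
    """Mean pixel-wise binary cross-entropy between predicted tumor probabilities and a 0/1 mask."""
    p = np.clip(probs, eps, 1 - eps)        # avoid log(0)
    m = mask.astype(float)
    return float(-np.mean(m * np.log(p) + (1 - m) * np.log(1 - p)))

# Unannotated approach: no mask; pixels are grouped by intensity alone.
def kmeans_intensity(image, k=3, iters=50):
    values = image.ravel().astype(float)
    centroids = np.linspace(values.min(), values.max(), k)   # deterministic, evenly spaced start
    for _ in range(iters):
        labels = np.argmin(np.abs(values[:, None] - centroids[None, :]), axis=1)
        updated = np.array([values[labels == j].mean() if np.any(labels == j) else centroids[j]
                            for j in range(k)])
        converged = np.allclose(updated, centroids)
        centroids = updated
        if converged:
            break
    labels = np.argmin(np.abs(values[:, None] - centroids[None, :]), axis=1)
    # Assumption: the brightest cluster is the candidate lesion.
    return (labels == np.argmax(centroids)).reshape(image.shape), centroids

# Synthetic slice: background, "tissue", and a bright 6x6 "lesion", plus mild noise.
rng = np.random.default_rng(0)
scan = np.full((32, 32), 0.1)
scan[4:28, 4:28] = 0.5
scan[12:18, 14:20] = 0.9
scan += rng.normal(0.0, 0.02, scan.shape)
truth = np.zeros(scan.shape, dtype=bool)
truth[12:18, 14:20] = True

candidate, centroids = kmeans_intensity(scan)               # uses no annotations
loss_uninformed = bce_loss(np.full(scan.shape, 0.5), truth)  # requires the mask
print(candidate.sum(), round(loss_uninformed, 4))
```

On real scans, edema and other bright structures would also fall into the brightest cluster, which illustrates why unannotated methods usually need extra assumptions or post-processing to match supervised accuracy.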

In the comparison study, both annotated and unannotated deep learning models for MRI tumor identification are trained and evaluated. The annotated technique is trained on annotated MRI datasets, in which each MRI image is paired with a corresponding mask image, while the unannotated technique is trained on raw MRI images from unannotated datasets. The performance of both models is then evaluated. The choice between annotated and unannotated methodologies for MRI tumor identification depends on factors such as dataset availability, annotation cost, and available computational resources. Annotated approaches provide explicit supervision and may initially produce greater accuracy, whereas unannotated algorithms have the potential to simplify the tumor identification process and reduce reliance on manual annotations. Further research is needed to investigate hybrid techniques that use both annotated and unannotated data to improve tumor detection performance.

Conclusion

To sum up, deep learning methods for MRI tumor segmentation and classification have demonstrated significant potential to enhance the precision and effectiveness of tumor analysis in medical imaging. The code was implemented in Visual Studio Code using deep learning models. The availability of large annotated datasets, advances in deep learning architectures, and growing computing power are likely to enable further research in this area and improve the practical usefulness of automated MRI-based tumor detection and treatment planning.

References

[1] Sakshi Ahuja, B. K. Panigrahi, and Tapan Gandhi, “Transfer Learning Based Brain Tumor Detection and Segmentation using Superpixel Technique,” in International Conference on Contemporary Computing and Applications, IEEE, 2020.

[2] Hajar Cherguif, Jamal Riffi, Mohamed Adnane Mahrez, Ali Yahaouy, and Hamid Tairi, “Brain Tumor Segmentation Based on Deep Learning,” in International Conference on Intelligent Systems and Advanced Computing Sciences (ISACS), IEEE, 2019.

[3] Chirodip Lodh Choudhury, Chandrakanta Mahanty, Raghvendra Kumar, and Brojo Kishore Mishra, “Brain Tumor Detection and Classification using Convolutional Neural Network and Deep Neural Network,” in International Conference on Computer Science, Engineering and Applications (ICCSEA), IEEE, 2020.

[4] Ahmad Habbie Thias, Donny Danudirdjo, Abdullah Faqih Al Mubarok, Tati Erawati Rajab, and Astri Handayani, “Brain Tumor Semi-automatic Segmentation on MRI T1-weighted Images using Active Contour Models,” in International Conference on Mechatronics, Robotics and Systems Engineering (MoRSE), IEEE, 2019.

[5] Neelum Noreen, Sellapan Palaniappam, Abdul Qayyum, Iftikhar Ahmad, and Muhammad Imran, “Attention-Guided Version of 2D UNet for Automatic Brain Tumor Segmentation,” IEEE Access, vol. 8.

[6] Swati Jayade, D. T. Ingole, and Manik D. Ingole, “MRI Brain Tumor Classification using Hybrid Classifier,” in International Conference on Innovative Trends and Advances in Engineering and Technology, IEEE, 2019.

[7] Zheshu Jia and Deyun Chen, “Brain Tumor Detection and Classification of MRI images using Deep Neural Network,” IEEE Access, 2020.

[8] Akey Sungheetha and Rajesh Sharma, “GTIKF-Gabor-Transform Incorporated K-Means and Fuzzy C Means Clustering for Edge Detection in CT and MRI,” Journal of Soft Computing Paradigm (JSCP), vol. 2, no. 02, pp. 111-119, 2020.

[9] Parnian Afshar, Arash Mohammadi, and Konstantinos N. Plataniotis, “BayesCap: A Bayesian Approach to Brain Tumor Classification Using Capsule Networks,” IEEE Signal Processing Letters, vol. 27, 2020.

Copyright

Copyright © 2024 Dr. Supriya Dinesh, Poonam Balasaheb Hajare, Yashika Deepak Agarwal . This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET60903

Publish Date : 2024-04-24

ISSN : 2321-9653

Publisher Name : IJRASET

