Ijraset Journal For Research in Applied Science and Engineering Technology

Multiclass Medical X-ray Image Classification using Deep Learning with Explainable AI

Authors: M K Gagan Roshan, P Sai Deekshith, Ankit Kesar, Pramod H, Sonika Sharma D

DOI Link: https://doi.org/10.22214/ijraset.2022.44541

Abstract

COVID-19, the disease caused by the novel coronavirus SARS-CoV-2, created a colossal health crisis worldwide. SARS-CoV-2 belongs to the same family of coronaviruses as the viruses that cause Severe Acute Respiratory Syndrome (SARS) and Middle East Respiratory Syndrome (MERS). Among the main challenges of the current COVID-19 pandemic are the early detection of COVID-19 and the accurate separation of non-COVID-19 cases at the lowest cost and in the early stages of the disease. Since pneumonia is also a significant indication of COVID-19, it must be detected early as well. Another challenge is that it is very difficult to distinguish COVID-19 from pneumonia on a chest X-ray, because the visual indications of the two classes are quite similar. Applying deep learning to radiologic image processing can reduce false-positive and false-negative errors in detecting this disease and could offer a unique opportunity to provide fast, cheap and safe diagnostic services to patients. However, deep learning models are considered to be “black boxes”. According to the ethics of AI in radiology, “transparency, interpretability, and explainability are necessary to build patient and provider trust”. Pneumonia is one of the significant indications of COVID-19, and it opens holes in the lungs, giving them a “honeycomb-like structure”. Early screening of chest X-rays could therefore be the best and most efficient choice, because X-rays are inexpensive and easily available. Our model presents an approach using existing deep learning models that focuses on enhancing the preprocessing stage to obtain accurate and reliable results when classifying chest X-rays into three classes: COVID-19, Pneumonia and Normal. Our model also explains its predictions using interpretability techniques. This chapter also includes strategies for predicting future situations from an existing set of historical data.
Deep learning techniques have been used, and two solutions are discussed: one for predicting the chance of being infected and the other for forecasting the number of positive cases. Trials were run with different models, and the algorithm that predicted results with the best accuracy is covered in the chapter. The availability of various models for predicting infectious disease may make COVID-19 easier to detect.

I. INTRODUCTION

Coronavirus disease 2019 (COVID-19) spreads from person to person through the air and other routes. It is caused by the SARS-CoV-2 virus. Pneumonia is another respiratory disease, which affects the air sacs in one or both lungs.

Recent research shows that advanced artificial intelligence methods such as deep learning are efficient at detecting patterns such as those found in diseased tissue, and can help reduce misdiagnosis when the visual indicators of two classes are hard to tell apart.

The goal is to create a multiclass deep learning model (CNN) that can classify chest X-rays into COVID-19, pneumonia and normal. Our model proposes a new approach based on existing deep learning models that focuses on improving the preprocessing stage to obtain accurate and reliable results when classifying chest X-ray and CT-scan images into COVID-19, pneumonia and normal, by detecting the affected lung regions that indicate COVID-19 infection and leaving out the non-infected lung regions, which could otherwise lower accuracy. The biggest problem with respiratory disorders is that symptoms can take days or weeks to fully appear in a patient. This may delay the identification of COVID-19 or even pneumonia cases. As a result, COVID-19 spread quickly across the globe and has led to millions of deaths, because a person with COVID-19 may not know that they are a carrier and can spread the virus to others unknowingly. For early detection of disease, the medical field has tried to bring a powerful and positive influence to this realm.

Though traditional detection methods such as reverse transcription polymerase chain reaction (RT-PCR) on a nasopharyngeal swab have proven extremely successful, they take a long time to provide results and can also produce a high rate of false-negative results. As a result, deep learning and explainable AI are being investigated as a possible alternative. The coronavirus disease (COVID-19), which became a global pandemic, was identified in December 2019 in Wuhan, the capital of Hubei province in mainland China. Vaccines have since been introduced, but they are not fully effective in combating the coronavirus. COVID-19 spreads faster when people gather in large numbers. Thus, to control the spread of the disease, lockdowns/travel restrictions and frequent hand washing are recommended to prevent potential viral infections of both COVID-19 and pneumonia. High temperature and cough are the most common symptoms of infection. Other symptoms may occur, including dehydration, fatigue, loss of appetite, malaise, clammy skin or sweating. COVID-19 may progress to viral pneumonia, which has a 5.8% mortality risk, and can also lead to respiratory infections. The death rate of COVID-19 is equivalent to 5% of the death rate of the 1918 Spanish flu pandemic. As of May 31, 2022, the total number of people infected with COVID-19 worldwide was 52.9 crore (529 million), and the number of reported deaths was 62.9 lakh (6.29 million). Most of the cases were recorded in India, the USA, Spain, Italy, France, Germany, mainland China, the UK and Iran [2]. Saudi Arabia, with 7.67 lakh (767,000) cases, has the highest number of reported cases among all the Arab countries. Meanwhile, the number of reported cases in Jordan is 17 lakh (1.7 million), with 14,066 deaths. The number of reported cases in Australia is 72.4 lakh (7.24 million), with 8,470 deaths.
Since February 2020, mobile technology services, such as the Aarogya Setu app, have been introduced in India to curb the potential risk of infection. By comparing our findings with those of previous research, we show that our deep learning and explainable AI models outperform those based on structural design, and that our models attain state-of-the-art performance.

With explainable AI, we investigate the reasoning behind our model's COVID-19 detection and explain why a given result was obtained.

II. BACKGROUND

Convolutional Neural Networks (CNNs) are widely used for image classification because they have demonstrated high accuracy in image recognition and object detection.

We focused on CNNs because they provide a scalable approach to image classification and object recognition tasks, leveraging principles from linear algebra, specifically matrix multiplication, to identify patterns within an image.

A convolutional network has three main types of layer:

- Convolutional Layer: The convolutional layer is the core building block of a CNN, and it is where the majority of computation occurs. It requires a few components, which are input data, a filter, and a feature map.

- Pooling Layer: Pooling layers, also known as downsampling layers, perform dimensionality reduction, shrinking the spatial size of the feature maps and thereby the number of parameters and computations in the layers that follow.

- Fully-connected (FC) Layer: In the fully-connected layer, each node in the output layer connects directly to every node in the previous layer. This layer performs the classification based on the features extracted by the previous layers and their different filters.
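To make the three layer types concrete, here is a minimal NumPy sketch of one forward pass. It assumes a toy 8x8 single-channel image, a single 3x3 filter and random, untrained weights, and the function names are hypothetical; in the models used in this work the filters and fully-connected weights are learned, many filters are stacked per layer, and a deep learning framework performs these operations.

```python
import numpy as np

def conv2d(image, kernel):
    """Convolutional layer (one filter, 'valid' padding): slide the filter over
    the image and take a weighted sum at each position to build a feature map."""
    h, w = image.shape
    kh, kw = kernel.shape
    out = np.zeros((h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool(fmap, size=2):
    """Pooling layer: keep the maximum of each size x size window (downsampling)."""
    h, w = fmap.shape
    h2, w2 = h // size, w // size
    trimmed = fmap[:h2 * size, :w2 * size]
    return trimmed.reshape(h2, size, w2, size).max(axis=(1, 3))

def dense_softmax(features, weights, bias):
    """Fully-connected layer: every input feature connects to every output class,
    followed by softmax to turn the scores into class probabilities."""
    logits = features @ weights + bias
    exp = np.exp(logits - logits.max())
    return exp / exp.sum()

rng = np.random.default_rng(0)
image = rng.random((8, 8))                     # toy 8x8 single-channel image
kernel = rng.standard_normal((3, 3))           # one 3x3 filter (learned in practice)
fmap = np.maximum(conv2d(image, kernel), 0.0)  # 6x6 feature map after ReLU
pooled = max_pool(fmap)                        # 3x3 after 2x2 max pooling
flat = pooled.ravel()                          # 9 features
weights = rng.standard_normal((flat.size, 3))  # 3 outputs: COVID-19, Normal, Pneumonia
probs = dense_softmax(flat, weights, np.zeros(3))
```

Like most deep learning libraries, conv2d computes a cross-correlation (the filter is not flipped), which is what CNNs call convolution.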

To mitigate the small number of training samples, we used transfer learning, which transfers the knowledge learned by pretrained models to the model being trained.

The success of CNNs lies in their ability to capture hidden features of images through their numerous hidden layers.

Overall, we build our own neural network and also use different pretrained models such as ResNet, VGG and Xception, comparing their results to build an accurate model. The goal of this work is to find the best-performing deep model for predicting and forecasting COVID-19 and pneumonia. Readers will also get a glimpse of some deep learning fundamentals and of how deep learning may be used to predict and forecast COVID-19, which may additionally assist future healthcare automation tasks using deep learning and explainable AI. Given the potential of CT images as a complementary screening method for COVID-19 and pneumonia, and the challenges of interpreting CT for COVID-19 screening, extensive research has been done on how to detect COVID-19 using CT images. Deep learning is now widely used in all aspects of COVID-19 research aimed at controlling the ongoing outbreak; one reference gives an overview of recently developed systems based on deep learning techniques using different medical imaging modalities, including CT and X-ray.

One reference built a database of hundreds of CT scans of COVID-19-positive cases and developed a deep learning approach with high sample efficiency based on self-supervision and transfer learning. In addition, researchers have developed an artificial intelligence system able to diagnose COVID-19 and distinguish it from other common pneumonia as well as from normal cases. Furthermore, another reference created a library containing CT images of 1,345 pneumonia cases (including those with COVID-19), 130 clinical features (a series of indicators including biochemical and cellular analysis of blood and urine), as well as the clinical features of SARS-CoV-2, and predicted the severity of each affected patient's case.

Explainable Artificial Intelligence (Explainable AI) is a relatively new branch of AI that aims to overcome the weaknesses of black-box machine learning and deep learning models. It makes deep learning models more explainable while still maintaining a high level of predictive performance, so that medical professionals can be shown, using LIME or other explainable AI methods, how a particular patient has been affected and how the person can be treated.

A. ResNet

A residual neural network (ResNet), one of the models the authors used, is a type of artificial neural network. It is a gateless or open-gated variant of HighwayNet, the first working very deep feedforward neural network, with hundreds of layers, much deeper than previous neural networks. Skip connections, or shortcuts, are used to jump over some layers (HighwayNets may also learn the skip weights themselves through an additional weight matrix for their gates). Typical ResNet models are implemented with double- or triple-layer skips that contain nonlinearities (ReLU) and batch normalization in between. Models with several parallel skips are referred to as DenseNets. In the context of residual neural networks, a non-residual network may be described as a plain network.
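The skip connection can be sketched in a few lines of NumPy. This is a simplified illustration, not the ResNet implementation used in this work: dense weight matrices stand in for the convolutions, batch normalization is omitted, and the function name is hypothetical.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def residual_block(x, w1, w2):
    """y = ReLU(x + F(x)), where F(x) = W2 @ ReLU(W1 @ x) is the residual branch.

    Dense layers stand in for the convolutions of a real ResNet block,
    and batch normalization is omitted for brevity.
    """
    residual = w2 @ relu(w1 @ x)
    return relu(x + residual)          # the skip connection adds the input back

rng = np.random.default_rng(0)
x = rng.random(4)                      # non-negative toy input vector
zeros = np.zeros((4, 4))
identity_out = residual_block(x, zeros, zeros)   # zero residual branch
w1, w2 = rng.standard_normal((4, 4)), rng.standard_normal((4, 4))
out = residual_block(x, w1, w2)
```

Because the block only has to learn the residual F(x), driving its weights towards zero recovers an identity mapping (identity_out equals x here), which is the usual explanation for why very deep stacks of such blocks remain trainable.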

B. Xception

Xception is a deep convolutional neural network architecture based on depthwise separable convolutions. It was introduced by François Chollet of Google, Inc.

Xception stands for “extreme Inception”: it takes the principles of Inception to an extreme. In Inception, 1x1 convolutions are used to compress the original input, and different types of filters are then applied to each of the resulting input spaces. Xception reverses these steps: it first applies a filter to each depth map (channel) separately and then compresses the input space by applying a 1x1 convolution across the depth. This is almost identical to a depthwise separable convolution. There is one more difference between Inception and Xception: the presence or absence of a non-linearity after the first operation. In Inception, both operations are followed by a ReLU non-linearity, whereas Xception does not introduce a non-linearity between them.
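A depthwise separable convolution of the kind Xception builds on can be sketched in NumPy as follows, assuming "valid" padding, stride 1 and no biases; the function names are hypothetical. The parameter count at the end shows why the factorisation is attractive.

```python
import numpy as np

def depthwise_conv(x, kernels):
    """Apply one k x k filter to each input channel separately ('valid' padding).
    x: (H, W, C), kernels: (k, k, C) -> (H-k+1, W-k+1, C)"""
    h, w, c = x.shape
    k = kernels.shape[0]
    out = np.zeros((h - k + 1, w - k + 1, c))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            # multiply the patch by the per-channel kernels, sum over space only
            out[i, j] = (x[i:i + k, j:j + k, :] * kernels).sum(axis=(0, 1))
    return out

def pointwise_conv(x, weights):
    """1x1 convolution mixing channels: (H, W, C) @ (C, C_out) -> (H, W, C_out)"""
    return x @ weights

def separable_conv(x, depthwise_kernels, pointwise_weights):
    # Xception order: spatial filtering per channel, then 1x1 across depth,
    # with no non-linearity in between.
    return pointwise_conv(depthwise_conv(x, depthwise_kernels), pointwise_weights)

def param_counts(k, c_in, c_out):
    """Weights (no biases) of a standard vs a depthwise separable k x k layer."""
    standard = k * k * c_in * c_out
    separable = k * k * c_in + c_in * c_out
    return standard, separable

rng = np.random.default_rng(0)
x = rng.random((10, 10, 8))              # toy input: 10x10 spatial, 8 channels
dw = rng.standard_normal((3, 3, 8))      # one 3x3 spatial filter per channel
pw = rng.standard_normal((8, 16))        # 1x1 convolution: 8 -> 16 channels
y = separable_conv(x, dw, pw)            # shape (8, 8, 16)
standard, separable = param_counts(3, 256, 256)
```

For a 3x3 layer mapping 256 channels to 256 channels, param_counts gives 589,824 weights for a standard convolution versus 67,840 for the separable version.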

C. VGG

VGG is named after the Visual Geometry Group at the University of Oxford, whose researchers developed it. The network is divided into blocks, each consisting of 2D convolution layers followed by a max pooling layer. VGG is a convolutional neural network that achieved high accuracy and is one of the most popular image recognition architectures. It is deeper and more complex than AlexNet and improves on it. The model takes an input image of size 224x224.
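The block structure explains how a 224x224 input is reduced to the final feature map. The sketch below assumes the VGG16 configuration (13 convolutional layers in five blocks, each block ending in 2x2 max pooling); the paper does not state which VGG depth was used, and the names are hypothetical.

```python
# VGG16 convolutional configuration: (number of 3x3 conv layers, filters) per block
VGG16_BLOCKS = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]

def vgg_feature_shapes(size=224, channels=3, blocks=VGG16_BLOCKS):
    """Return the (H, W, C) shape of the input and of each block's output."""
    shapes = [(size, size, channels)]
    for _, filters in blocks:
        # 3x3 convolutions with 'same' padding keep the spatial size;
        # the 2x2 max pooling (stride 2) at the end of the block halves it
        size //= 2
        shapes.append((size, size, filters))
    return shapes

shapes = vgg_feature_shapes()
n_conv_layers = sum(n for n, _ in VGG16_BLOCKS)
```

vgg_feature_shapes() returns [(224, 224, 3), (112, 112, 64), (56, 56, 128), (28, 28, 256), (14, 14, 512), (7, 7, 512)], and the 7x7x512 output is what the fully-connected layers (or a replacement classification head) receive.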

D. LIME

Take a case where the model takes an image and predicts with 70% confidence that a patient has COVID-19 or pneumonia. Even though the model might have given the correct diagnosis, the doctor cannot give proper treatment without knowing the reason behind the prediction. This is where explainable AI comes into play: it helps explain a given AI model's decision-making process. Explainable AI is a newly established branch of AI with great potential. Some XAI techniques, including Grad-CAM and LIME, are explained in this research.

Given a model and a dataset, LIME follows these steps:

Sampling and obtaining a proper dataset: LIME provides locally faithful explanations in the vicinity of the instance being explained. For tabular data it generates, by default, 5000 samples of the feature vector following a normal distribution; for images, it instead switches superpixels of the image on and off. It then obtains the target variable for these samples using the prediction model whose decisions it is trying to explain. Finally, LIME fits a Ridge Regression model to the samples, weighted by their proximity to the instance being explained, using only the selected features.
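The sampling, querying and weighted-regression steps can be sketched in NumPy for an image. This is a simplified illustration rather than the lime package's implementation: it uses a rectangular grid instead of superpixel segmentation, hides segments by setting them to zero, measures proximity as the fraction of hidden segments with an assumed kernel width of 0.5, and explains a hypothetical toy model; all names are hypothetical.

```python
import numpy as np

def grid_segments(shape, n_rows, n_cols):
    """Assign each pixel to a rectangular segment (a stand-in for superpixels)."""
    h, w = shape
    rows = np.arange(h) * n_rows // h
    cols = np.arange(w) * n_cols // w
    return rows[:, None] * n_cols + cols[None, :]

def lime_image_sketch(image, predict_fn, segments, num_samples=1000,
                      kernel_width=0.5, alpha=1e-3, seed=0):
    """Return one importance weight per segment for predict_fn's output on image."""
    rng = np.random.default_rng(seed)
    n_seg = int(segments.max()) + 1
    # 1. Sample perturbations: each segment is randomly kept (1) or hidden (0).
    z = rng.integers(0, 2, size=(num_samples, n_seg))
    z[0] = 1                                    # first sample = original image
    # 2. Query the black-box model on every perturbed image.
    y = np.empty(num_samples)
    for k in range(num_samples):
        hidden = np.isin(segments, np.flatnonzero(z[k] == 0))
        y[k] = predict_fn(np.where(hidden, 0.0, image))
    # 3. Weight samples by proximity to the original (fraction of segments hidden).
    distance = 1.0 - z.mean(axis=1)
    weights = np.exp(-distance ** 2 / kernel_width ** 2)
    # 4. Fit a weighted ridge regression; its coefficients are the explanation.
    X = np.hstack([np.ones((num_samples, 1)), z])
    XtW = X.T * weights
    beta = np.linalg.solve(XtW @ X + alpha * np.eye(X.shape[1]), XtW @ y)
    return beta[1:]                             # drop the intercept

# Hypothetical toy "model": its score depends only on the top-left quarter.
image = np.ones((8, 8))
segments = grid_segments(image.shape, 2, 2)    # 4 segments, numbered row by row
importance = lime_image_sketch(image, lambda img: img[:4, :4].mean(), segments)
```

Here the toy model depends only on the top-left quarter, so the largest coefficient belongs to segment 0 and the others are close to zero; with a real classifier, the highest-weighted segments are the regions that would be highlighted for the doctor.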

E. Grad-CAM

Grad-CAM produces visual explanation heatmaps that help us understand how deep learning models make decisions.

Our model uses these visual explanations to help improve our datasets and models.

Gradient-weighted Class Activation Mapping (Grad-CAM) uses the gradients of any target concept (say ‘covid’ in a classification network, or a sequence of words in a captioning network) flowing into the final convolutional layer to produce a coarse localization map highlighting the regions of the image that are important for predicting that concept, here the affected regions.
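Given the feature maps A^k of the final convolutional layer and the gradients of the class score with respect to them, Grad-CAM weights each channel by its spatially averaged gradient, alpha_k, and computes ReLU(sum_k alpha_k A^k). The NumPy sketch below assumes these arrays are already available; in practice they would be obtained from the trained network through the framework's automatic differentiation. The function name and toy arrays are hypothetical.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Grad-CAM heatmap from the last convolutional layer.

    activations: feature maps A of shape (H, W, K)
    gradients:   d(class score)/dA, same shape
    """
    # Channel weights alpha_k: global-average-pool the gradients over space.
    alphas = gradients.mean(axis=(0, 1))
    # Weighted sum of the feature maps, then ReLU to keep positive evidence only.
    cam = np.maximum((activations * alphas).sum(axis=-1), 0.0)
    # Normalise to [0, 1] so it can be upsampled and overlaid on the X-ray.
    if cam.max() > 0:
        cam = cam / cam.max()
    return cam

# Toy example: channel 0 supports the class, channel 1 argues against it.
A = np.zeros((4, 4, 2))
A[0, 0, 0] = 2.0
A[3, 3, 1] = 5.0
G = np.zeros((4, 4, 2))
G[..., 0] = 1.0
G[..., 1] = -1.0
heatmap = grad_cam(A, G)
```

The resulting map has the resolution of the last convolutional layer (for example 7x7 for VGG16 at 224x224), so it is upsampled to the input size before being overlaid on the X-ray as a heatmap.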

III. RELATED WORK

To detect malaria, the authors of [1] used the Canny edge detection algorithm to extract the target features. Random Forest, Logistic Regression and Decision Tree classifiers were trained for classification, and an explanation technique called LIME was used to interpret the predictions. Random Forest outperformed the other models with a precision of 0.82, recall of 0.86 and F1 score of 0.84. The LIME explanation divided the predicted image into green (uninfected) and red (infected) areas, with a yellow boundary marking the pixels most important to the decision.

The authors of [2] propose LIME, a modular and extensible algorithm that explains the predictions of any classifier or regressor faithfully by approximating it locally with an interpretable model. They also propose SP-LIME, a method that uses submodular optimization to select a set of representative, non-redundant instances with explanations, giving users a global view of the model and addressing the “trusting the model” problem. In a comprehensive evaluation with simulated and human subjects, they measured the impact of explanations on trust and associated tasks. Their experiments demonstrated that explanations are useful for a variety of models in trust-related tasks in the text and image domains, with both expert and non-expert users: deciding between models, assessing trust, improving untrustworthy models, and getting insights into predictions.

The authors of [3] describe studies on the explainability of deep learning models in medical imaging. There has been significant progress in explaining the decisions of deep learning models, especially those used for medical diagnosis. Understanding the features responsible for a certain decision helps model designers iron out reliability concerns, and helps end users gain trust and make better judgments. Almost all of these methods target local explainability, i.e. explaining the decision for a single example, which is then extrapolated to a global level by averaging the highlighted features, especially when the images have the same spatial orientation. However, emerging methods such as concept vectors provide a more global view of the decisions for each class in terms of domain concepts.

The authors of [4] propose a new CNN model for detecting COVID-19 through multiclass classification. The model produced an average classification accuracy of 90.64% and an F1 score of 89.8% under 5-fold cross-validation on a multiclass dataset of COVID-19, viral pneumonia and normal X-ray images.

The authors of [5] use feature extraction with pretrained CNNs and fine-tuning, with different dataset combinations. ResNet50 performed excellently with both feature extraction and fine-tuning, and fine-tuning achieved better results than feature extraction.

The author in [6] proposes a parallel convolutional neural network (CNN) model for detecting COVID-19-infected patients from chest X-ray radiographs. The paper also evaluates how effective state-of-the-art CNNs proposed by the scientific community are at automatically diagnosing COVID-19 from thoracic X-rays.

In [7], an Artificial Intelligence model for Detection of COVID-19 (AIDCOV) is developed to classify chest radiography images as belonging to a person with COVID-19, another infection, or no pneumonia (i.e., normal). The hierarchical structure in AIDCOV captures dependencies among features and improves model performance, while an attention mechanism makes the model interpretable and transparent. The paper uses several publicly available datasets of both computed tomography (CT) and X-ray modalities. The interpretable model can distinguish subtle signs of infection within each radiography image.

The authors of [8] implement a machine learning model that uses transfer learning to detect COVID-19 automatically from chest X-ray images. The model is built on the VGG16 architecture with pretrained ImageNet weights. Compared with the VGG19, Inception-V3, Inception-ResNet, Xception, ResNet152-V2 and DenseNet201 models, VGG16 achieved the highest testing accuracy of 98% after 10 epochs, as well as high positive-class accuracy. Gradient-weighted class activation mapping (Grad-CAM) was also applied to detect the regions with the greatest impact on the model's classification decision.

The paper [9] presents a promising convolutional neural network (CNN) model with transfer learning for the accurate diagnosis of COVID-19, performing multi-class classification (COVID vs. normal (healthy) vs. pneumonia). Experiments were performed with 1,143 COVID-19, 1,341 normal and 1,345 pneumonia CXR images, and accuracy, precision, recall and F1 score were used to assess the system's efficacy. The purpose of the study is to compare the performance of each model when the images are resized to 256x256, 224x224, 128x128 and 64x64.

In [10], the authors investigated the use of artificial intelligence and data mining techniques to automate the diagnosis of COVID-19 from chest X-ray images, using support vector machines and Naive Bayes classification for prediction. The Naive Bayes algorithm achieved 89.6% accuracy with an F-measure of around 89.1%, while recall was lower, at around 80%. The results of CNN over the Keras and TensorFlow platforms, as reported, achieved 90-92% accuracy, 100% sensitivity and 80% specificity, which indicated that this model.

IV. EXPERIMENT METHODOLOGY

In the proposed work, multiple experiments were conducted to evaluate and compare the performance of the various classifiers.

A. Datasets Used

The experiment requires chest X-ray images of the COVID-19, normal and pneumonia classes. To make the model more robust and accurate, these images were collected from two different datasets, as follows:

- Covid-19-Radiography-Dataset: This dataset includes chest X-ray images of COVID-19, normal and pneumonia cases, with around 1089 COVID-19 images, 1231 normal images and 1345 pneumonia images.[a]

B. Experimental Procedure

This task, identifying COVID-19 from radiological images, is approached as a multiclass classification problem. The classifiers used in our method are CNNs, aided by a deep learning technique called transfer learning.

The CNN models were pretrained on the ImageNet dataset. Because the CXRs/CT scans used in this study vary in size, the input dimensions were fixed at 224x224, and all images must be resized to fit them.

1. Preprocessing used for chest X-rays

- The only preprocessing step was resizing the images of all three classes to 224x224x3.

- Data augmentation was applied through ImageDataGenerator with the following parameters:

  - rotation_range=15

  - width_shift_range=0.1

  - height_shift_range=0.1

  - zoom_range=0.2

  - shear_range=0.1

  - horizontal_flip=True
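The resizing step and two of the listed augmentations can be sketched in NumPy. This is an illustration under assumptions, not the Keras ImageDataGenerator implementation: it uses nearest-neighbour resizing, replicates the grayscale X-ray into three channels, scales pixels to [0, 1], and implements only width/height shifts (up to 10%, filled with zeros) and horizontal flips; rotation, zoom and shear are omitted. The helper names are hypothetical.

```python
import numpy as np

TARGET = 224  # input size used for the pretrained models in this study

def resize_nearest(img, size=TARGET):
    """Nearest-neighbour resize of a 2D image to size x size."""
    h, w = img.shape[:2]
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return img[rows[:, None], cols[None, :]]

def to_model_input(gray):
    """Grayscale uint8 X-ray of any size -> float32 array of shape 224x224x3."""
    resized = resize_nearest(gray).astype(np.float32) / 255.0
    return np.stack([resized] * 3, axis=-1)

def shift(img, dy, dx):
    """Translate an image by (dy, dx) pixels, filling uncovered pixels with 0."""
    out = np.zeros_like(img)
    h, w = img.shape[:2]
    src = img[max(0, -dy):h - max(0, dy), max(0, -dx):w - max(0, dx)]
    out[max(0, dy):max(0, dy) + src.shape[0], max(0, dx):max(0, dx) + src.shape[1]] = src
    return out

def augment(x, rng, width_shift=0.1, height_shift=0.1, flip_prob=0.5):
    """Random shift (fraction of height/width) and random horizontal flip."""
    h, w = x.shape[:2]
    dy = int(rng.integers(-int(height_shift * h), int(height_shift * h) + 1))
    dx = int(rng.integers(-int(width_shift * w), int(width_shift * w) + 1))
    x = shift(x, dy, dx)
    if rng.random() < flip_prob:
        x = x[:, ::-1]                  # horizontal flip
    return x

rng = np.random.default_rng(0)
gray = rng.integers(0, 256, size=(512, 480)).astype(np.uint8)  # stand-in X-ray
x = to_model_input(gray)
aug = augment(x, rng)
```

Augmentation is applied on the fly to training images only, so each epoch sees slightly different versions of the same X-rays.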

2. Performance metrics: the metrics used in this experiment are as follows:

a. Accuracy: Accuracy is one of the most intuitive performance metrics. It is defined as the ratio of correctly predicted samples to the total number of samples.

b. Recall: Recall measures the proportion of actual positive samples that the model correctly identifies, i.e. its ability to find true positives: Recall = TP / (TP + FN).

c. F1 Score: The F1 score is a very important metric because it considers both precision and recall. It is their harmonic mean, F1 = 2 × Precision × Recall / (Precision + Recall), where precision is the proportion of predicted positives that are truly positive, TP / (TP + FP).
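All three metrics can be computed from the confusion matrix, using the label encoding of this work (0 = COVID-19, 1 = normal, 2 = pneumonia). This NumPy sketch assumes macro averaging (an unweighted mean over the three classes); the paper does not state which averaging its reported recall and F1 scores use, and the labels below are made-up toy values. The function names are hypothetical.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, n_classes=3):
    """cm[t, p] counts samples of true class t predicted as class p."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1
    return cm

def metrics_from_cm(cm):
    """Accuracy plus macro-averaged recall and F1 from a confusion matrix."""
    tp = np.diag(cm).astype(float)
    predicted = cm.sum(axis=0)          # column sums: TP + FP per class
    actual = cm.sum(axis=1)             # row sums: TP + FN per class
    zeros = np.zeros_like(tp)
    precision = np.divide(tp, predicted, out=zeros.copy(), where=predicted > 0)
    recall = np.divide(tp, actual, out=zeros.copy(), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=zeros.copy(), where=denom > 0)
    accuracy = tp.sum() / cm.sum()
    return accuracy, recall.mean(), f1.mean()

y_true = [0, 0, 0, 1, 1, 1, 2, 2, 2]
y_pred = [0, 0, 1, 1, 1, 1, 2, 2, 0]
cm = confusion_matrix(y_true, y_pred)
accuracy, recall, f1 = metrics_from_cm(cm)
```

For this toy example the accuracy is 7/9 ≈ 0.778, the macro recall is also 7/9, and the macro F1 score is 244/315 ≈ 0.775.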

3. Training Model

After preprocessing, the dataset is split into training, validation and test data. We trained three CNN models on this data:

a. Xception model: The dataset was split into 2199 training images, 733 validation images and 733 test images belonging to three classes. The top (classification) layer of the pretrained Xception network was then replaced with a new output layer trained on the training dataset from our experiment, and the Xception model was built. For prediction, the training labels were encoded as array indices: COVID-19 images as 0, normal images as 1 and pneumonia images as 2. A confusion matrix was then used to describe the performance of the classification model on the test data, for which the true labels are known. The Xception model's predicted class is compared with the actual class of each image in the dataset. The accuracy, recall and F1 scores are discussed below.

b. VGG model: The same procedure as for the Xception model is followed, except that the parameters are not trained again; instead, the parameters trained for the Xception model are reused in the VGG model.

c. ResNet model: The same procedure as for the Xception model is followed, except that the parameters are not trained again; instead, the parameters trained for the Xception model are reused in the ResNet model. Along with its predictions, the ResNet model also reports statistics of the trained model.
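The split sizes of 2199/733/733 correspond to a 60/20/20 split of the 3,665 images (1089 + 1231 + 1345), and replacing the top layer amounts to training a new three-class softmax layer on features from the frozen pretrained network. The NumPy sketch below illustrates both under stated assumptions: the random shuffle and seed are assumptions (the paper does not say whether its split was random or stratified), and the features are synthetic clusters standing in for the output of a frozen Xception backbone, since running the real network would need a framework such as Keras and pretrained weights. All function names are hypothetical.

```python
import numpy as np

def split_indices(n, train_frac=0.6, val_frac=0.2, seed=42):
    """Shuffle n sample indices and cut them into train/validation/test parts."""
    idx = np.random.default_rng(seed).permutation(n)
    n_train = int(round(train_frac * n))
    n_val = int(round(val_frac * n))
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]

def softmax(logits):
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def train_head(features, labels, n_classes=3, lr=0.1, epochs=300):
    """Train only a new softmax output layer on features from a frozen backbone."""
    n, d = features.shape
    W, b = np.zeros((d, n_classes)), np.zeros(n_classes)
    onehot = np.eye(n_classes)[labels]
    for _ in range(epochs):
        # gradient of the mean cross-entropy with respect to the logits
        grad = (softmax(features @ W + b) - onehot) / n
        W -= lr * features.T @ grad
        b -= lr * grad.sum(axis=0)
    return W, b

train_idx, val_idx, test_idx = split_indices(1089 + 1231 + 1345)

# Synthetic stand-in for backbone features: one cluster per class
# (0 = COVID-19, 1 = normal, 2 = pneumonia), 100 samples each, 8 features.
rng = np.random.default_rng(0)
labels = np.repeat([0, 1, 2], 100)
features = 3.0 * np.eye(3, 8)[labels] + rng.standard_normal((300, 8))
W, b = train_head(features, labels)
train_acc = ((features @ W + b).argmax(axis=1) == labels).mean()
```

Only W and b are updated, which is the point of transfer learning with a frozen backbone: the pretrained layers supply the features and the small new layer adapts them to the three classes.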

4. Explanations for the predictions using LIME

After installing the libraries needed for LIME explanations, we used the Xception model and loaded the training data into the LIME explainer function.

LIME then explains the model's predictions by marking the regions that influenced the prediction in red and green. Doctors can then treat patients according to the regions most affected.

Grad-CAM, applied to the trained VGG and ResNet models, generates a heatmap of the most affected regions.

Through the user interface implemented by the team, users can upload any image and see which class it belongs to.

The explainable AI component then shows the affected regions using LIME and Grad-CAM.

V. RESULTS AND DISCUSSION

After carrying out numerous experiments, we obtained the results described below for each model.

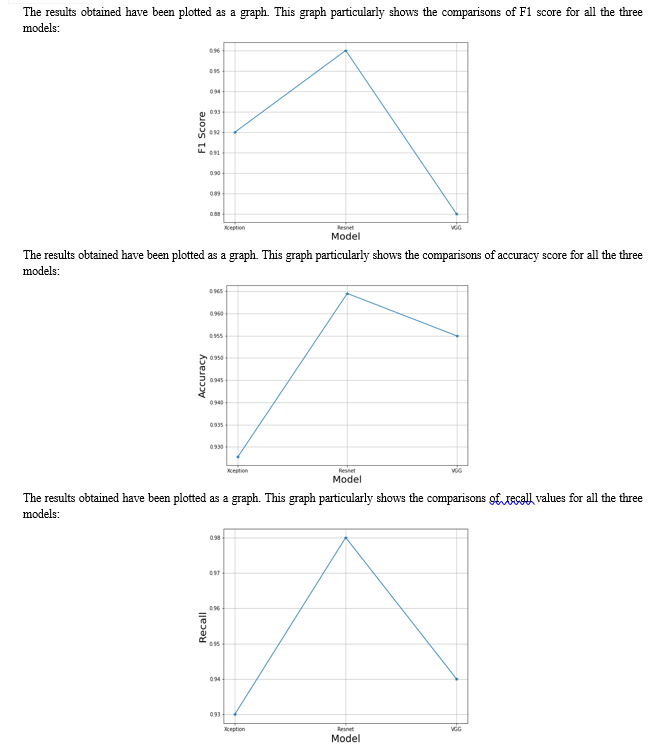

For chest X-ray images, the Xception model gave an accuracy of 92.7%, a recall of 93% and an F1 score of 92%. The ResNet model gave an accuracy of 96.4%, a recall of 98% and an F1 score of 96%. The VGG model gave an accuracy of 95.4%, a recall of 94% and an F1 score of 88%.

VI. CONCLUSION

In this study, we propose and develop an efficient system to classify a chest X-ray image into one of three categories: COVID-19, pneumonia or normal. The dataset was collected from Kaggle and various other sources. We mainly used pretrained deep learning models to perform the classification, as deep learning is efficient at detecting patterns such as those found in diseased tissue and can help reduce misdiagnosis when the visual indicators of two classes (COVID-19 and pneumonia) are hard to tell apart. Additionally, we have explained the predictions of the models, since deep learning models are generally considered to be “black boxes”. Explanations are especially important in healthcare, since it is a sensitive domain. We therefore explained the models' predictions using explainable AI frameworks such as LIME and Grad-CAM. In future, we aim to let doctors review patients' condition without being physically present, and we hope that further development of the classification and of our system can enable doctorless treatment of certain respiratory diseases. We will also explore other models that may provide better performance.

References

[1] Anik Khan and Nirman Kumar. 2020. “CIDMP: Completely Interpretable Detection of Malaria Parasite in Red Blood Cells using Lower-dimensional Feature Space”. In 2020 International Joint Conference on Neural Networks (IJCNN), conference paper, May 2020. DOI: 10.1109/IJCNN48605.2020.9206885. https://www.researchgate.net/publication/341685830

[2] Marco Tulio Ribeiro, Sameer Singh, and Carlos Guestrin. 2016. “Why Should I Trust You?”: Explaining the Predictions of Any Classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD '16).

[3] Amitojdeep Singh, Sourya Sengupta, and Vasudevan Lakshminarayanan. 2020. Explainable deep learning models in medical image analysis. arXiv:2005.13799v1.

[4] Faizan Ahmed, Syed Ahmad Chan Bukhari, and Fazel Keshtkar. 2020. A Deep Learning Approach for COVID-19 & Viral Pneumonia Screening with X-ray Images. Digit. Gov.: Res. Pract. 2, 2, Article 18 (December 2020), 12 pages. https://doi.org/10.1145/3431804

[5] Antonios Makris, Ioannis Kontopoulos, and Konstantinos Tserpes. 2020. COVID-19 detection from chest X-Ray images using Deep Learning and Convolutional Neural Networks. In 11th Hellenic Conference on Artificial Intelligence (SETN 2020), September 2-4, 2020, Athens, Greece. ACM, New York, NY, USA, 7 pages. https://doi.org/10.1145/3411408.3411416

[6] Mahir Dursun and Namrig.H.S.FEDAL. 2021. COVID-19 detection by X Ray images and Deep Learning. In 2021 6th International Conference on Mathematics and Artificial Intelligence (ICMAI 2021), March 19–21, 2021, Chengdu, China. ACM, New York, NY, USA, 8 pages. https://doi.org/10.1145/3460569.3460591

[7] Maryam Zokaeinikoo, Pooyan Kazemian, Prasenjit Mitra, and Soundar Kumara. 2021. AIDCOV: An Interpretable Artificial Intelligence Model for Detection of COVID-19 from Chest Radiography Images. ACM Trans. Manage. Inf. Syst. 12, 4, Article 29 (October 2021), 20 pages. https://doi.org/10.1145/3466690

[8] Amy Chen, Jonathan Jaegerman, Dunja Matic, Hassaan Inayatali, Nipon Charoenkitkarn, and Jonathan Chan. 2020. Detecting Covid-19 in Chest X-Rays using Transfer Learning with VGG16. In CSBio '20: Proceedings of the Eleventh International Conference on Computational Systems-Biology and Bioinformatics (CSBio2020), November 19–21, 2020, Bangkok, Thailand. ACM, New York, NY, USA, 4 pages. https://doi.org/10.1145/3429210.3429213

[9] Tyas Farrah Dhiba and Hsi-Chieh Lee. 2021. Finding an Efficient Image Size for Covid-19 Diagnosis using Chest X-Ray Images. In 2021 5th International Conference on Medical and Health Informatics (ICMHI 2021), May 14–16, 2021, Kyoto, Japan. ACM, New York, NY, USA, 4 pages. https://doi.org/10.1145/3472813.3473217

[10] Faten Kharbat, Tarik Elamsy, and Nuha Hamada. 2020. Diagnosing COVID-19 in X-ray Images Using HOG Image Feature and Artificial Intelligence Classifiers. In Proceedings of ACM BCB '20, September 21–24, 2020, Virtual Event, USA, 5 pages. https://doi.org/10.1145/3388440.3415987

Copyright

Copyright © 2022 M K Gagan Roshan, P Sai Deekshith, Ankit Kesar, Pramod H, Sonika Sharma D. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET44541

Publish Date : 2022-06-19

ISSN : 2321-9653

Publisher Name : IJRASET
