Ijraset Journal For Research in Applied Science and Engineering Technology

Multiscale Deep - CNN Based Target Prediction in Hyperspectral Image with Transfer Learning

Authors: Mahaiyo Ningshen, Mukesh Kumar Yadav, Tarun Nabiyal

DOI Link: https://doi.org/10.22214/ijraset.2024.58278

Abstract

Many landscapes throughout the world are not readily or directly accessible to humans, yet analyzing them to uncover factual information has become necessary for making important decisions when developing any new project. Geographical and landscape scenes can be adequately represented by hyperspectral images captured with remote sensors. The data in these images can be both vast and intricate to analyze, so consistent and adequate pre-processing is essential. In this work, we propose the use of deep learning and transfer learning for object prediction in hyperspectral data. Two main algorithms have been implemented in this research. The first is based on a Multi-Scale Deep CNN (Convolutional Neural Network), which takes hyperspectral data of varying sizes as input to detect pixels whose intensity spreads uniformly over many wavelengths or varies rapidly. Second, because hyperspectral image sources are not readily available, can be expensive, and may involve high analysis complexity, a Transfer Learning based algorithm is applied to the DCNN model. The evaluation showed superior accuracy, with F1 scores and recall values for the different objects ranging from 0.8 to 1.0. Further, we conducted a comparative study, pitting the proposed method against other state-of-the-art target prediction methodologies.

I. INTRODUCTION

Remote sensing technology has been an integral element of computer vision research over the years. Remote sensors can capture vast expanses of the Earth's geographical area, presenting opportunities for comprehensive analysis of the overall conditions in a given area. Hyperspectral images are composed of a multitude of narrow, contiguous bands spanning a wide range of the electromagnetic spectrum. Hence, the data include substantial spectral and spatial detail with which to characterize individual objects effectively. Hyperspectral data analysis can offer diverse facts about the landscape that are suitable for the decision-making process, and the information gathered through such research has been incorporated into numerous everyday applications [1]. Traditional methods such as RGB (Red, Green, Blue) image processing are not sufficient to process such data. Earth observation data contain an extensive number of entities, covering minerals, soils, vegetation, and the like, and manually processing and differentiating such objects is beyond the capacity of the human eye [2][3]. Therefore, an automated mechanism is required for effective processing, feature extraction, and object prediction.

Dimensionality reduction is one of the key phases in hyperspectral data analysis. In hyperspectral data, every pixel is recorded at numerous contiguous narrow wavelength bands, and a significant portion of these bands are highly correlated with each other [4]. Accounting for these overlapping features across many dimensions therefore increases the complexity of the analysis unnecessarily. The main goal of dimensionality reduction is to de-correlate these bands or features, aiding in the separation and processing of the valuable bands [5], as indicated in Figure 1. One of the most widely adopted techniques for dimensionality reduction is PCA (Principal Component Analysis), which uses the data variance to reduce the number of wavelength bands in the dataset. However, PCA struggles to utilize local features efficiently because of the low signal-to-noise ratios it encounters [6], which compromises the efficiency of hyperspectral data analysis and decreases performance. Techniques such as regression-based dimensionality reduction, Multidimensional Scaling (MDS), and Locality Projection Pursuit (PP) have also been in existence over the years [7][8].
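To make the PCA step concrete, the following is a minimal NumPy sketch, assuming the hyperspectral cube is stored as an array of shape (rows, cols, bands). The function name `pca_reduce` and the synthetic cube are illustrative assumptions, not the authors' pre-processing pipeline.

```python
import numpy as np

def pca_reduce(cube: np.ndarray, n_components: int) -> tuple[np.ndarray, np.ndarray]:
    """Project a (rows, cols, bands) cube onto its top principal components.

    Returns the reduced cube (rows, cols, n_components) and the fraction of
    total variance explained by each retained component.
    """
    rows, cols, bands = cube.shape
    pixels = cube.reshape(-1, bands).astype(np.float64)  # one spectrum per row (copy)
    pixels -= pixels.mean(axis=0)                         # center each band
    cov = np.cov(pixels, rowvar=False)                    # (bands, bands) band covariance
    eigvals, eigvecs = np.linalg.eigh(cov)                # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1][:n_components]      # largest-variance directions first
    projected = pixels @ eigvecs[:, order]
    explained = eigvals[order] / eigvals.sum()
    return projected.reshape(rows, cols, n_components), explained

# Synthetic cube: 50 highly correlated bands driven by 3 latent signals plus small noise.
rng = np.random.default_rng(0)
latent = rng.normal(size=(20, 20, 3))
mixing = rng.normal(size=(3, 50))
cube = latent @ mixing + 0.01 * rng.normal(size=(20, 20, 50))

reduced, explained = pca_reduce(cube, n_components=3)
print(reduced.shape)   # (20, 20, 3)
print(explained.sum()) # close to 1.0: three components capture nearly all variance
```

Because the synthetic bands are mixtures of only three latent signals, three components retain almost all of the variance; on a real scene the number of components would have to be chosen, for example from the explained-variance curve.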

Beyond dimensionality reduction, the primary focus of research lies in classification and prediction techniques [9], and researchers have devised numerous techniques in these domains over the years. In the early stages of development, methods such as the Constrained Signal Detector (CSD), Orthogonal Subspace Projection (OSP), and Adaptive Subspace Detector (ASD) were introduced [10]. With the continuous evolution of neural networks and deep learning, neural-network-based techniques have also become a prominent choice [11]; their learning and non-linear discrimination abilities allow them to produce highly efficient results. Among supervised techniques, CNNs stand out for their effectiveness and high-quality performance. Different variants of CNN have been developed over time, each with its own merits and drawbacks relative to the others [12]. In the fast-paced evolution of modern neural networks, more optimized models featuring deeper layers have been introduced for enhanced learning.
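As an illustration of the early subspace detectors mentioned above, the classic OSP detector can be sketched under the linear mixing model: each pixel is treated as a combination of a target signature `d` and undesired signatures collected as the columns of a matrix `U`, and pixels are projected onto the subspace orthogonal to `U` before being matched against `d`. The normalization (a pure target scores 1) and the synthetic signatures are assumptions of this sketch.

```python
import numpy as np

def osp_detector(pixels, target, undesired):
    """Orthogonal Subspace Projection score for each pixel.

    pixels:    (n_pixels, bands) spectra
    target:    (bands,) target signature d
    undesired: (bands, p) matrix U whose columns are undesired/background signatures
    """
    bands = target.shape[0]
    # Projector onto the orthogonal complement of span(U): P = I - U U^+
    p_perp = np.eye(bands) - undesired @ np.linalg.pinv(undesired)
    # d^T P r for every pixel, normalized so that a pure target pixel scores 1
    return (pixels @ p_perp @ target) / (target @ p_perp @ target)

rng = np.random.default_rng(1)
bands = 30
background = rng.uniform(0.1, 1.0, size=(bands, 2))  # two synthetic background signatures
target = rng.uniform(0.1, 1.0, size=bands)            # synthetic target signature
pixels = np.stack([
    0.6 * background[:, 0] + 0.4 * background[:, 1],  # background-only mixture
    0.3 * target + 0.7 * background[:, 0],            # 30% target abundance
])
scores = osp_detector(pixels, target, background)
print(scores)  # approximately [0.0, 0.3]
```

The projection removes the background contribution entirely, so the score recovers the target abundance; this only holds when the mixing really is linear, which is the limitation the later non-linear methods address.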

II. RELATED WORK

Target prediction is a binary classification technique: each pixel is labeled as either target or background. The prediction of target pixels relies on statistical information derived from the background pixels [13], and the performance of these methods is determined entirely by distance measurements. Srivastava et al. [14] introduced an efficient method for differentiating target pixels from background pixels by leveraging unsupervised transfer learning techniques.
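One common way to turn background statistics into a binary target/background decision is the spectral matched filter, sketched below under the assumption that a set of background spectra is available for estimating the mean and covariance. The detector choice, the threshold of 0.5, and the synthetic data are illustrative assumptions, not the method of [13] or [14].

```python
import numpy as np

def matched_filter_scores(pixels, target, background):
    """Spectral matched filter built from background mean and covariance.

    Scores are normalized so that the target signature scores 1
    and the background mean scores 0.
    """
    mu = background.mean(axis=0)
    cov = np.cov(background, rowvar=False)
    cov_inv = np.linalg.pinv(cov)          # pseudo-inverse guards against a singular covariance
    d = target - mu
    w = cov_inv @ d / (d @ cov_inv @ d)
    return (pixels - mu) @ w

rng = np.random.default_rng(2)
bands = 20
background = rng.normal(loc=0.5, scale=0.05, size=(500, bands))  # synthetic background spectra
target = np.linspace(0.2, 0.9, bands)                             # synthetic target signature
test_pixels = np.vstack([background[:5], target + rng.normal(scale=0.01, size=(5, bands))])

scores = matched_filter_scores(test_pixels, target, background)
is_target = scores > 0.5   # binary decision; threshold chosen for illustration only
print(is_target)
```

The distance being measured here is a Mahalanobis-weighted projection onto the target direction, so pixels that differ from the background in ways the background itself often varies are down-weighted.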

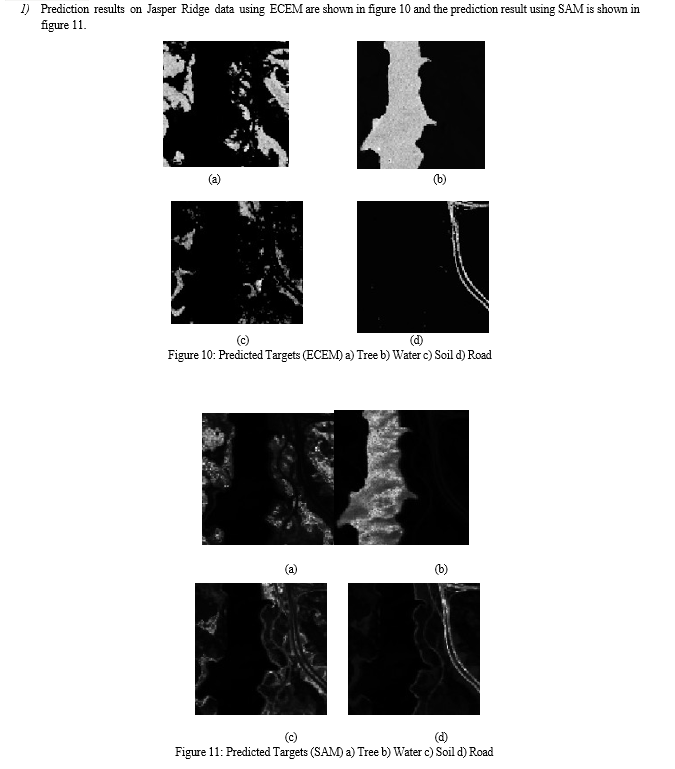

Farrand and Harsanyi's approach [15], which is among the early state-of-the-art prediction techniques, applies the Constrained Energy Minimization (CEM) technique to map the distribution of mine tailings. Other approaches, such as the Spectral Angle Mapper (SAM), compute the angle between the target spectrum vector and the reference spectrum vector to assess their similarity. Single-target SAM has been extended to multiple-target SAM to discriminate tree species based on the reflectance of each species' leaves [16]. Furthermore, Kwon and Nasrabadi [17] demonstrated the use of a kernel-based, non-linear form of the matched filter for predicting targets in hyperspectral data. In a comparative study, Tiwari et al. [18] evaluated SAM alongside four prediction algorithms: Spectral Correlation Method (SCM), Independent Component Analysis (ICA), Orthogonal Subspace Projection (OSP), and Constrained Energy Minimization (CEM). Real-world hyperspectral data are known to be highly non-linear, which can cause difficulties for the linear analysis methods mentioned above and potentially decrease their performance. An Ensemble-based Cascaded Constrained Energy Minimization (ECEM) technique was put forth by Zhao et al. [19] in 2019 to enhance the ability to discriminate non-linearity in the data and to improve the generalization of the detector. This detector extends the earlier CEM model by employing numerous learners for effective learning and prediction.
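The two classical detectors named above can each be sketched in a few lines of NumPy: SAM as the angle between spectra, and CEM as the filter w = R^-1 d / (d^T R^-1 d), which minimizes the average output energy subject to a unit response to the target signature d, where R is the sample correlation matrix of the scene. The synthetic scene and signatures below are assumptions for illustration only.

```python
import numpy as np

def spectral_angle(pixels, reference):
    """Spectral Angle Mapper: angle (radians) between each pixel and the reference spectrum."""
    cos = pixels @ reference / (np.linalg.norm(pixels, axis=1) * np.linalg.norm(reference))
    return np.arccos(np.clip(cos, -1.0, 1.0))  # clip guards against rounding just outside [-1, 1]

def cem_filter(pixels, target):
    """Constrained Energy Minimization: minimize w^T R w subject to w^T d = 1,
    where R is the (non-centered) sample correlation matrix of the scene."""
    corr = pixels.T @ pixels / pixels.shape[0]  # R = X^T X / N
    r_inv_d = np.linalg.solve(corr, target)
    w = r_inv_d / (target @ r_inv_d)
    return pixels @ w

rng = np.random.default_rng(3)
bands = 25
scene = rng.uniform(0.2, 0.8, size=(400, bands))  # synthetic scene spectra
target = rng.uniform(0.2, 0.8, size=bands)         # synthetic target signature
scene[:4] = target                                  # plant four pure target pixels

angles = spectral_angle(scene, target)
cem_out = cem_filter(scene, target)
print(angles[:4], cem_out[:4])  # angles near 0, CEM responses near 1 on the planted pixels
```

SAM ignores overall brightness, so a scaled copy of the target also has an angle of zero, whereas CEM's constraint fixes its response on a pure target pixel at exactly 1 while suppressing the rest of the scene.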

As computing technology has advanced in recent years, neural networks have become increasingly crucial in the exploration of hyperspectral imagery. Deep Learning (DL) has proven highly advantageous for analyzing data in the RGB spectrum, with numerous models applied successfully to achieve optimal performance. Hyperspectral images captured by airborne sensors and satellites, on the other hand, contain pixel values across many wavelength ranges, posing numerous challenges for comprehensive and accurate analysis. Nonetheless, deep learning has undergone continuous innovation and significant evolution since 2017 [20].

In the transition from traditional approaches to deep learning neural networks, a pivotal change is the adoption of a fully connected architecture in place of the conventional classifier [21]. Models such as Recurrent Neural Networks (RNNs) and Convolutional Neural Networks (CNNs) are mathematically structured in a way that better suits the data. In CNNs [22], layers perform feature extraction and generate suitable inputs for subsequent stages of analysis. The architecture began as a one-dimensional structure, referred to as 1D CNN, and then advanced into 2D and 3D structures. RNNs store information from previous steps and use it when processing subsequent data. RNNs have demonstrated an efficient capability for modeling both short-term and long-term dependencies within the spatial and spectral composition of the data, enabling accurate classification of hyperspectral imagery [23]. The utility of these models extends to semi-supervised and unsupervised learning scenarios.
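The difference between 1D, 2D, and 3D CNNs lies in which axes of the hyperspectral cube the kernel slides over. The sketch below is a simplification: a single filter with no bias, activation, or channel dimension, and `conv_valid` is a hypothetical helper written for this example, not part of any model described here.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def conv_valid(x, kernel):
    """'Valid' N-D cross-correlation (what a CNN layer computes), for any N."""
    windows = sliding_window_view(x, kernel.shape)          # out_shape + kernel.shape
    return np.tensordot(windows, kernel, axes=kernel.ndim)  # sum over the kernel axes

rng = np.random.default_rng(4)
cube = rng.random((9, 9, 40))       # synthetic (rows, cols, bands) patch

spectrum = cube[4, 4, :]            # 1D CNN input: one pixel's spectrum
band_image = cube[:, :, 10]         # 2D CNN input: one band (spatial only)

out_1d = conv_valid(spectrum, rng.random(7))           # slides along wavelength
out_2d = conv_valid(band_image, rng.random((3, 3)))    # slides over rows and columns
out_3d = conv_valid(cube, rng.random((3, 3, 7)))       # slides jointly over space and wavelength
print(out_1d.shape, out_2d.shape, out_3d.shape)        # (34,) (7, 7) (7, 7, 34)
```

A 3D kernel sees neighbouring pixels and neighbouring wavelengths at once, which is why 3D CNNs can capture spatial-spectral structure that a purely spectral 1D kernel misses.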

Barrera et al. [24] employed a 2D convolutional architecture to discern the internal characteristics of fruits and vegetables using hyperspectral data; it outperformed traditional classifiers such as SVM. In 2019, Freitas et al. explored a 3D deep CNN for predicting targets in maritime surveillance [25]. Typically, the availability of hyperspectral imagery is constrained, which can limit the effectiveness of classification or detection models, and obtaining hyperspectral data tailored to specific locations or requirements can be prohibitively expensive. To address these challenges, transfer learning is employed in deep neural networks to mitigate these limitations [26]. Although different hyperspectral datasets may be captured over different wavelength ranges, they share common spectral and spatial information. By leveraging this shared information, knowledge learned from one dataset can be applied efficiently to predict unknown elements in different data.
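The idea of reusing knowledge across datasets can be illustrated with a toy version of the common frozen-extractor pattern. In this sketch, which is an assumption and not the authors' model, a PCA projection learned on a large unlabeled "source" dataset stands in for pre-trained convolutional layers, and only a new logistic-regression head is trained on a small labeled "target" dataset. The helper `make_data` and all numbers are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(5)
bands, n_feat = 60, 4
basis = rng.normal(size=(n_feat, bands))  # hypothetical spectral structure shared by both datasets

def make_data(n, target_fraction):
    """Synthetic spectra; 'target' pixels get a strong extra component along basis[0]."""
    latent = rng.normal(size=(n, n_feat))
    labels = rng.random(n) < target_fraction
    latent[labels, 0] += 6.0
    spectra = latent @ basis + 0.05 * rng.normal(size=(n, bands))
    return spectra, labels.astype(float)

# 1) "Pre-train" a feature extractor on a large, unlabeled source dataset.
#    A PCA projection stands in for the frozen convolutional layers.
x_src, _ = make_data(5000, target_fraction=0.0)
_, _, vt = np.linalg.svd(x_src - x_src.mean(axis=0), full_matrices=False)
frozen = vt[:n_feat].T  # (bands, n_feat); never updated on the target data

# 2) Train only a new logistic-regression head on a small labeled target dataset.
x_tgt, y_tgt = make_data(40, target_fraction=0.3)
f_tgt = x_tgt @ frozen
mu, sd = f_tgt.mean(axis=0), f_tgt.std(axis=0)
f_tgt = (f_tgt - mu) / sd  # standardize features for stable gradient descent
w, b = np.zeros(n_feat), 0.0
for _ in range(500):
    p = 1.0 / (1.0 + np.exp(-(f_tgt @ w + b)))      # sigmoid probabilities
    w -= 0.5 * f_tgt.T @ (p - y_tgt) / len(y_tgt)   # gradient of mean cross-entropy
    b -= 0.5 * np.mean(p - y_tgt)

# 3) Evaluate on held-out target pixels, reusing the frozen extractor and scaling.
x_test, y_test = make_data(1000, target_fraction=0.3)
pred = ((x_test @ frozen - mu) / sd) @ w + b > 0
accuracy = np.mean(pred == y_test.astype(bool))
print(accuracy)
```

Only `w` and `b` (five numbers) are fitted on the 40 target pixels; the extractor is reused unchanged, which is what lets a small labeled set suffice in this toy setting.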

This approach not only facilitates effective prediction but also significantly reduces the computational complexity of the learning model. This study introduces models and algorithms aimed at mitigating these challenges: the proposed methods are target detection techniques based on deep neural networks, namely a multiscale deep CNN combined with transfer learning.

III. PROPOSED TARGET PREDICTION MODEL

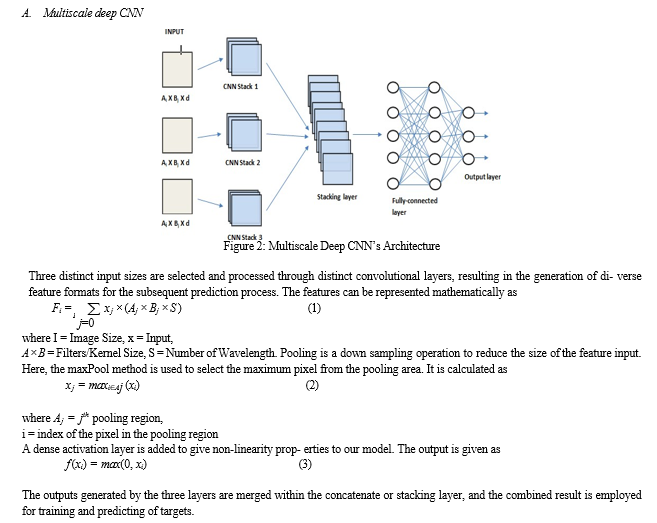

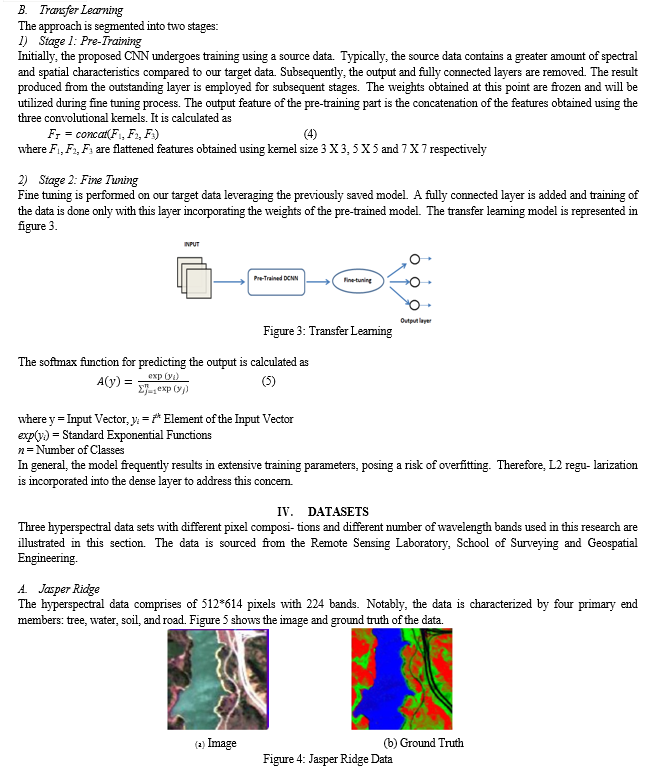

While previous CNN models have been designed to accept inputs of a fixed size, the proposed deep CNN approach has been specifically developed to accommodate variable input sizes. This is achieved by configuring several CNN stacks, each tailored to a different input size but sharing common parameters, which allows them to operate concurrently. The outputs of the different CNN stacks are merged through a single layer, and predictions are then made on the consolidated output. This parallel model with varied input dimensions is beneficial for extracting very low-level features compared with other CNN architectures.

Subsequently, transfer learning is applied to the CNN, enhancing its ability to leverage learned features for improved performance. In transfer learning, the weights and trained parameters of a pre-existing model are reused to train and predict on a different dataset, which significantly reduces the computational overhead of constructing a neural network model from vast amounts of data. Transfer learning also increases the effective volume of data samples available for training.
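The multiscale idea can be sketched as follows. This is a hedged illustration, not the authors' architecture: it assumes square patches of sizes 3, 5, and 7 centred on the pixel of interest, one set of 3D kernels shared by every scale, a ReLU, and global average pooling so that each scale yields a vector of the same length before concatenation. The kernel shapes, the pooling choice, and the output layer `w_out` are all hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def conv3d_valid(patch, kernels):
    """Valid 3-D cross-correlation of a (h, w, bands) patch with (n_filters, kh, kw, kb) kernels."""
    windows = sliding_window_view(patch, kernels.shape[1:])             # (oh, ow, ob, kh, kw, kb)
    return np.tensordot(windows, kernels, axes=([3, 4, 5], [1, 2, 3]))  # (oh, ow, ob, n_filters)

def multiscale_features(cube, row, col, scales, kernels):
    """Apply one shared-weight conv stack to patches of several sizes centred on (row, col)."""
    pooled = []
    for size in scales:
        half = size // 2
        patch = cube[row - half: row + half + 1, col - half: col + half + 1, :]
        fmap = np.maximum(conv3d_valid(patch, kernels), 0.0)  # ReLU
        pooled.append(fmap.mean(axis=(0, 1, 2)))              # global average pool -> (n_filters,)
    return np.concatenate(pooled)                              # merge layer across scales

rng = np.random.default_rng(6)
cube = rng.random((32, 32, 30))                # synthetic hyperspectral cube
kernels = 0.1 * rng.normal(size=(8, 3, 3, 5))  # hypothetical shared filters (8 of them)
feat = multiscale_features(cube, row=16, col=16, scales=(3, 5, 7), kernels=kernels)

# Hypothetical dense output layer on the merged features -> target probability.
w_out = rng.normal(size=feat.shape[0])
prob = 1.0 / (1.0 + np.exp(-(feat @ w_out)))
print(feat.shape, prob)  # (24,) and a probability between 0 and 1
```

Pooling is what makes the variable input sizes compatible: a 3x3 and a 7x7 patch produce feature maps of different sizes, but both reduce to one value per filter before the merge.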

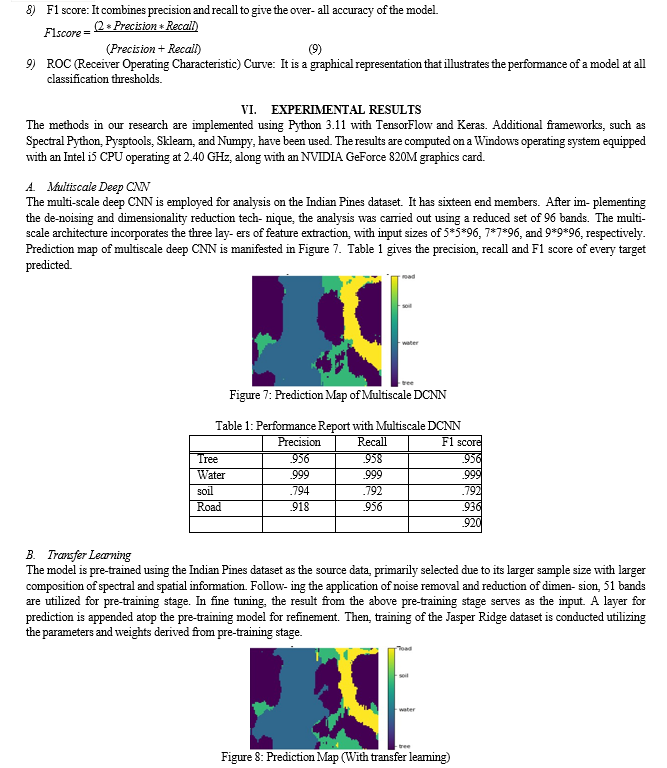

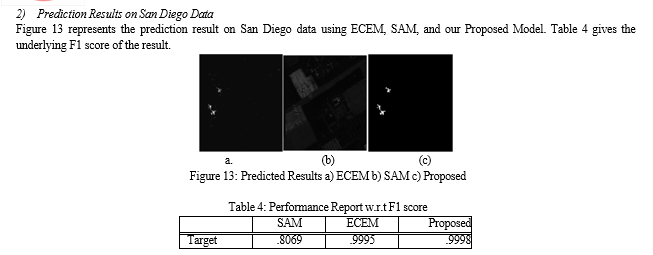

In both datasets, the overall performance improved with the proposed method. In Jasper Ridge, performance was largely uniform across all targets except soil. This can mostly be attributed to the original data, in which the radiance of the soil pixels was inherently low and resembled that of other targets. Nevertheless, adopting the proposed model significantly enhanced the accuracy. For the San Diego data, ECEM and the proposed method exhibit similar performance; as previous results show, ECEM attains very high accuracy but focuses predominantly on detecting single targets. Considering both computational effort and performance, the proposed model demonstrates a distinct advantage in detecting objects within hyperspectral data.
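The comparisons in this work are expressed through per-target precision, recall, and F1 scores. For reference, a minimal sketch of the standard definitions applied to binary pixel predictions is shown below; the ten labels are hypothetical toy data, not results from the paper.

```python
import numpy as np

def precision_recall_f1(y_true, y_pred):
    """Binary precision, recall and F1 for one target class (1 = target pixel)."""
    y_true = np.asarray(y_true, dtype=bool)
    y_pred = np.asarray(y_pred, dtype=bool)
    tp = np.sum(y_true & y_pred)    # target pixels correctly detected
    fp = np.sum(~y_true & y_pred)   # background pixels flagged as target
    fn = np.sum(y_true & ~y_pred)   # target pixels missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return float(precision), float(recall), float(f1)

# Hypothetical ground truth and predictions for ten pixels.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
print(precision_recall_f1(y_true, y_pred))  # (0.75, 0.75, 0.75)
```

Because target pixels are usually rare, these scores are more informative than plain accuracy: a detector that labels every pixel as background can reach high accuracy while its recall is zero.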

Conclusion

The complexity of hyperspectral data, with its multiple layers of information, presents a significant challenge during processing and the extraction of specific information. In this study, objects were predicted using hyperspectral data of the Earth's landscape. Applying a multiscale deep CNN classifier to hyperspectral data proved more efficient than existing CNN-based algorithms. Furthermore, a transfer learning model was integrated into the multiscale deep CNN. The post-transfer-learning performance was evaluated by comparing precision, recall, and F1 score with those of the original multiscale deep CNN, and the results indicated an overall improvement in accuracy. Moreover, transfer learning contributed to a noteworthy reduction in computational complexity. The results of the proposed work were further compared with those of other recent target prediction methods. In subsequent studies, the robustness of pre-processing and dimensionality reduction could be enhanced by determining the object type in individual bands from their radiance values, and kernel-based methods may also prove beneficial. Additionally, the transfer learning model could be configured to extract insights from multiple data sources by incorporating different datasets, thereby enriching feature diversity.

References

[1] A. Ozdemir and K. Polat, "Deep learning applications for hyperspectral imaging: A systematic review," Journal of the Institute of Electronics and Computer, vol. 2, no. 1, pp. 39–56, 2020.

[2] P. C. Pandey, K. Manevski, P. K. Srivastava, and G. P. Petropoulos, "The use of hyperspectral earth observation data for land use/cover classification: Present status, challenges and future outlook," Hyperspectral Remote Sensing of Vegetation, 1st ed.; Thenkabail, P., Ed, pp. 147–173, 2018.

[3] A. Vali, S. Comai, and M. Matteucci, "Deep learning for land use and land cover classification based on hyperspectral and multispectral earth observation data: A review," Remote Sensing, vol. 12, no. 15, p. 2495, 2020.

[4] T. Adão, J. Hruška, L. Pádua, et al., "Hyperspectral imaging: A review on UAV-based sensors, data processing and applications for agriculture and forestry," Remote Sensing, vol. 9, no. 11, p. 1110, 2017.

[5] J. Khodr and R. Younes, "Dimensionality reduction on hyperspectral images: A comparative review based on artificial datas," in 2011 4th International Congress on Image and Signal Processing, IEEE, vol. 4, 2011, pp. 1875–1883.

[6] P. Deepa and K. Thilagavathi, "Feature extraction of hyperspectral image using principal component analysis and folded-principal component analysis," in 2015 2nd International Conference on Electronics and Communication Systems (ICECS), IEEE, 2015, pp. 656–660.

[7] V. Laparra, J. Malo, and G. Camps-Valls, "Dimensionality reduction via regression in hyperspectral imagery," IEEE Journal of Selected Topics in Signal Processing, vol. 9, no. 6, pp. 1026–1036, 2015.

[8] M. Belkin and P. Niyogi, "Laplacian eigenmaps and spectral techniques for embedding and clustering," Advances in Neural Information Processing Systems, vol. 14, 2001.

[9] C.-I. Chang and S.-S. Chiang, "Anomaly detection and classification for hyperspectral imagery," IEEE Transactions on Geoscience and Remote Sensing, vol. 40, no. 6, pp. 1314–1325, 2002.

[10] C.-I. Chang, "Orthogonal subspace projection (OSP) revisited: A comprehensive study and analysis," IEEE Transactions on Geoscience and Remote Sensing, vol. 43, no. 3, pp. 502–518, 2005.

[11] S. Hartling, V. Sagan, and M. Maimaitijiang, "Urban tree species classification using UAV-based multi-sensor data fusion and machine learning," GIScience & Remote Sensing, vol. 58, no. 8, pp. 1250–1275, 2021.

[12] D. Bhatt, C. Patel, H. Talsania, et al., "CNN variants for computer vision: History, architecture, application, challenges and future scope," Electronics, vol. 10, no. 20, p. 2470, 2021.

[13] D. Manolakis, E. Truslow, M. Pieper, T. Cooley, and M. Brueggeman, "Detection algorithms in hyperspectral imaging systems: An overview of practical algorithms," IEEE Signal Processing Magazine, vol. 31, no. 1, pp. 24–33, 2013.

[14] N. Srivastava, E. Mansimov, and R. Salakhudinov, "Unsupervised learning of video representations using LSTMs," in International Conference on Machine Learning, PMLR, 2015, pp. 843–852.

[15] W. H. Farrand and J. C. Harsanyi, "Mapping the distribution of mine tailings in the Coeur d'Alene River Valley, Idaho, through the use of a constrained energy minimization technique," Remote Sensing of Environment, vol. 59, no. 1, pp. 64–76, 1997.

[16] R. H. Yuhas, A. F. Goetz, and J. W. Boardman, "Discrimination among semi-arid landscape endmembers using the spectral angle mapper (SAM) algorithm," in JPL, Summaries of the Third Annual JPL Airborne Geoscience Workshop. Volume 1: AVIRIS Workshop, 1992.

[17] H. Kwon and N. M. Nasrabadi, "Kernel spectral matched filter for hyperspectral imagery," International Journal of Computer Vision, vol. 71, pp. 127–141, 2007.

[18] K. C. Tiwari, M. K. Arora, D. Singh, and D. Yadav, "Military target detection using spectrally modeled algorithms and independent component analysis," Optical Engineering, vol. 52, no. 2, p. 026402, 2013.

[19] R. Zhao, Z. Shi, Z. Zou, and Z. Zhang, "Ensemble-based cascaded constrained energy minimization for hyperspectral target detection," Remote Sensing, vol. 11, no. 11, p. 1310, 2019.

[20] P. Rajpurkar, J. Irvin, K. Zhu, et al., "CheXNet: Radiologist-level pneumonia detection on chest x-rays with deep learning," arXiv preprint arXiv:1711.05225, 2017.

[21] J. Xiao, H. Ye, X. He, H. Zhang, F. Wu, and T.-S. Chua, "Attentional factorization machines: Learning the weight of feature interactions via attention networks," arXiv preprint arXiv:1708.04617, 2017.

[22] T.-H. Hsieh and J.-F. Kiang, "Comparison of CNN algorithms on hyperspectral image classification in agricultural lands," Sensors, vol. 20, no. 6, p. 1734, 2020.

[23] N. Audebert, B. Le Saux, and S. Lefèvre, "Deep learning for classification of hyperspectral data: A comparative review," IEEE Geoscience and Remote Sensing Magazine, vol. 7, no. 2, pp. 159–173, 2019.

[24] J. Barrera, A. Echavarría, C. Madrigal, and J. Herrera-Ramirez, "Classification of hyperspectral images of the interior of fruits and vegetables using a 2D convolutional neuronal network," in Journal of Physics: Conference Series, IOP Publishing, vol. 1547, 2020, p. 012014.

[25] S. Freitas, H. Silva, J. M. Almeida, and E. Silva, "Convolutional neural network target detection in hyperspectral imaging for maritime surveillance," International Journal of Advanced Robotic Systems, vol. 16, no. 3, p. 1729881419842991, 2019.

[26] Y. Liu, L. Gao, C. Xiao, Y. Qu, K. Zheng, and A. Marinoni, "Hyperspectral image classification based on a shuffled group convolutional neural network with transfer learning," Remote Sensing, vol. 12, no. 11, p. 1780, 2020.

Copyright

Copyright © 2024 Mahaiyo Ningshen, Mukesh Kumar Yadav, Tarun Nabiyal. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET58278

Publish Date : 2024-02-02

ISSN : 2321-9653

Publisher Name : IJRASET
