Ijraset Journal For Research in Applied Science and Engineering Technology

Neural Style Transfer

Authors: Dhawade Sarika, Gawali Akanksha, Jathar Pallavi, Khamkar Snehal, Bhosale Swati S.

DOI Link: https://doi.org/10.22214/ijraset.2024.61748

Abstract

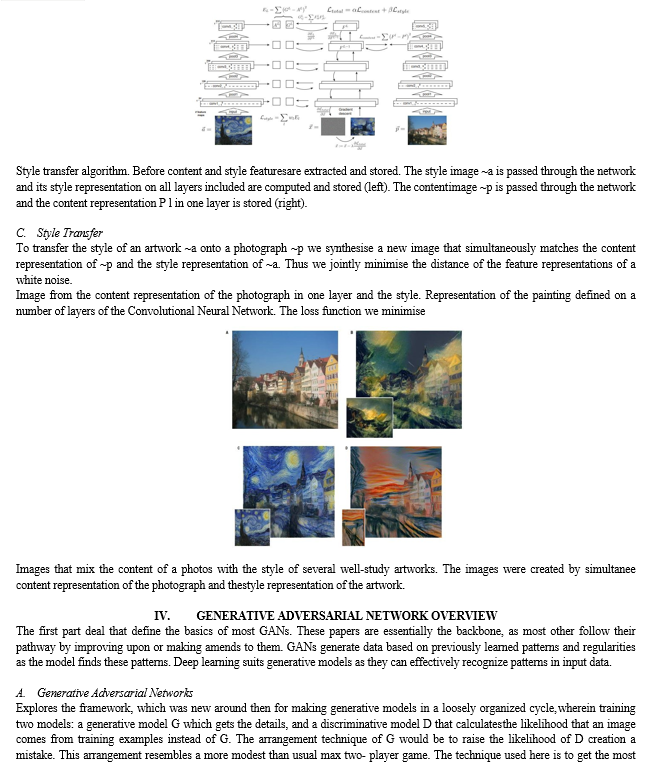

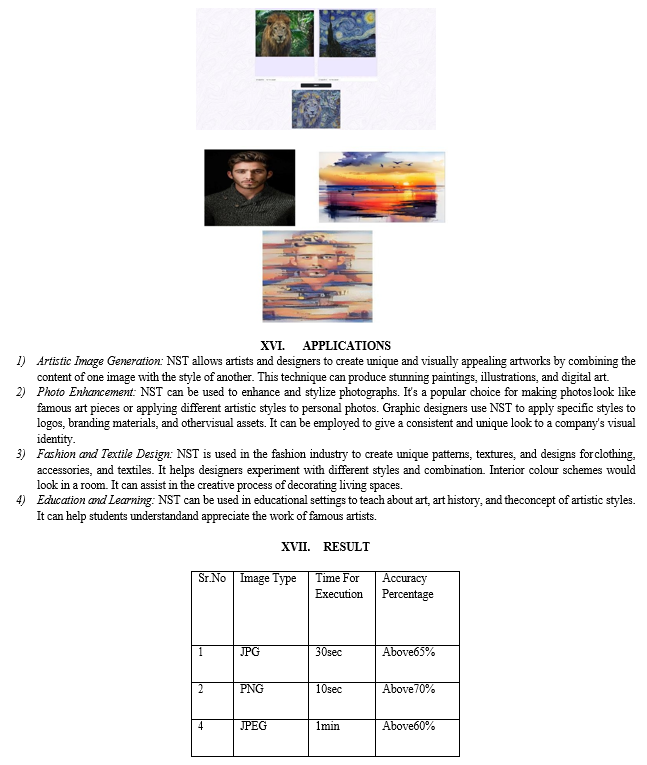

Neural Style Transfer (NST) is a model for changing the overall appearance of images. With NST we can create multiple new images from multiple content images and multiple style images; the model can change the internal structure and environment of an image, and in some images it can transform or replace the style. NST is a technique from machine learning, deep learning, and artificial intelligence that combines the features of a content image and a style image. The content image supplies the content to be preserved, and the style image supplies the style to be applied. The technique combines the content of one image with the style of another, creating visually captivating artworks that bridge the gap between human creativity and computational processes. We introduce the neural network architecture that enables the extraction of content and style features from images.

Introduction

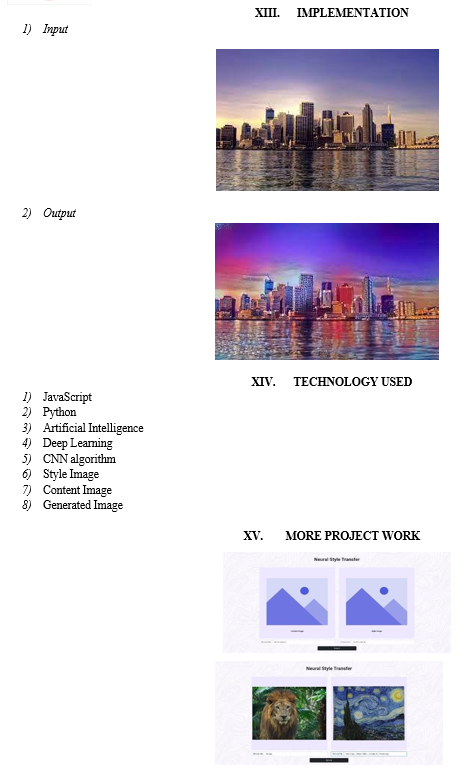

I. INTRODUCTION

Transferring the style from one image onto another can be considered a problem of texture transfer. In texture transfer, the goal is to synthesise a texture from a source image while constraining the texture synthesis in order to preserve the semantic content of a target image. For texture synthesis there exists a large range of powerful non-parametric algorithms that can synthesise photorealistic natural textures by resampling the pixels of a given source texture. Most previous texture transfer algorithms rely on these non-parametric methods for texture synthesis while using different ways to preserve the structure of the target image. For instance, one approach introduces a correspondence map that includes features of the target image, such as image intensity, to constrain the texture synthesis procedure. Another uses image analogies to transfer the texture from an already stylised image onto a target image. A third focuses on transferring the high-frequency texture information while preserving the coarse scale of the target image, and a later extension enhances this algorithm by additionally informing the texture transfer with edge orientation information. Although these algorithms achieve remarkable results, they all suffer from the same fundamental limitation: they use only low-level image features of the target image to inform the texture transfer. Ideally, however, a style transfer algorithm should be able to extract the semantic image content from the target image (e.g. the objects and the general scenery) and then inform a texture transfer procedure to render the semantic content of the target image in the style of the source image. Therefore, a fundamental prerequisite is to find image representations that independently model variations in the semantic image content and the style in which it is presented.
Image style migration technology is used more and more widely, usually to migrate the artistic style of one picture to another while ensuring that the content characteristics of the image do not change significantly. With the rise of deep learning, image style migration achieved with convolutional neural network models has attracted wide attention. Style migration has been an important research direction for the past two decades. Before the emergence of neural networks, style migration was a field of non-photorealistic rendering; neural-network-based texture synthesis then provided new ideas for it. Although style migration produces pleasing visual effects, there are still a few problems to be solved. How to improve the efficiency of the algorithm while preserving the quality of the stylized images is a direction that needs further study, particularly for real-time use. Here we provide an overview of developments in NST over the past few years.

II. LITERATURE SURVEY

In [1], the authors note that progress in electron microscopy-based high-resolution connectomics is limited by data analysis throughput. They present SegEM, a toolset for efficient semi-automated analysis of large-scale, fully stained 3D-EM datasets for the reconstruction of neuronal circuits. By combining skeleton reconstructions of neurons with automated volume segmentations, SegEM allows the reconstruction of neuronal circuits at a work-hour consumption rate about 100-fold lower than manual analysis and about 10-fold lower than existing segmentation tools.

In [2], the authors observe that the primate visual system achieves remarkable visual object recognition performance even in brief presentations, and under changes to object exemplar, geometric transformations, and background variation (a.k.a. core visual object recognition). This remarkable performance is mediated by the representation formed in inferior temporal (IT) cortex. In parallel, recent advances in machine learning have led to ever higher performing models of object recognition using artificial deep neural networks (DNNs).

In [8], the authors note that methods have recently been proposed that perform texture synthesis and style transfer by using convolutional neural networks (e.g. Gatys et al. [2015, 2016]). These methods are exciting because they can in some cases create results with state-of-the-art quality. However, the paper shows that these methods also have limitations in texture quality, stability, requisite parameter tuning, and lack of user controls. It presents a multiscale synthesis pipeline based on convolutional neural networks that ameliorates these issues.

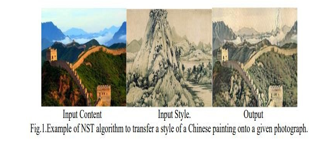

III. DEEP IMAGE REPRESENTATION

The results presented below were generated on the basis of the VGG network, which was trained to perform object recognition and localisation and is described extensively in the original work. We normalized the network by scaling the weights such that the mean activation of each convolutional filter over images and positions is equal to one. Such re-scaling can be done for the VGG network without changing its output, because it contains only rectifying linear activation functions and no normalization or pooling over feature maps. We do not use any of the fully connected layers.
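The rescaling described above can be illustrated on a toy network. The following is a minimal sketch under stated assumptions, not the procedure used for VGG: it uses a single dense ReLU layer followed by a linear layer in place of convolutions, random weights in place of trained ones, and the names `normalize_layer`, `w1`, `b1`, and `w2` are hypothetical. Each column of `x` plays the role of one image position.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def normalize_layer(w1, b1, w2, inputs):
    """Rescale one ReLU layer so each filter's mean activation is 1.

    w1, b1: weights (filters x inputs) and biases of the layer to normalize.
    w2:     weights of the following layer (outputs x filters).
    inputs: sample batch (inputs x samples) used to estimate the means.
    Assumes every filter is active on at least one sample (mean > 0).
    """
    mean_act = relu(w1 @ inputs + b1[:, None]).mean(axis=1)  # per-filter mean
    # ReLU is positively homogeneous: relu(z / m) == relu(z) / m for m > 0,
    # so dividing a filter by its mean divides its activation by that mean.
    w1_n = w1 / mean_act[:, None]
    b1_n = b1 / mean_act
    # Compensate in the next layer so the network output stays the same.
    w2_n = w2 * mean_act[None, :]
    return w1_n, b1_n, w2_n

rng = np.random.default_rng(0)
x = rng.normal(size=(8, 256))          # toy data: 8 inputs, 256 samples
w1, b1 = rng.normal(size=(4, 8)), rng.normal(size=4)
w2 = rng.normal(size=(3, 4))

w1_n, b1_n, w2_n = normalize_layer(w1, b1, w2, x)
hidden_n = relu(w1_n @ x + b1_n[:, None])
out_before = w2 @ relu(w1 @ x + b1[:, None])
out_after = w2_n @ hidden_n
```

In this sketch the per-filter means of `hidden_n` equal one while `out_after` matches `out_before`, which is the property the text relies on. A normalization layer between the two layers would break the compensation step, which is why the absence of such layers matters.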

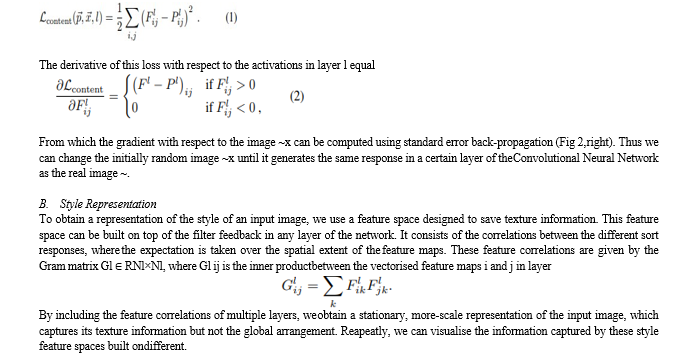

A. Content Representation

Generally, each layer in the network defines a non-linear filter bank whose complexity increases with the position of the layer in the network. Hence a given input image $\vec{x}$ is encoded in each layer of the Convolutional Neural Network by the filter responses to that image. We then define the squared-error loss between the feature representations of the original image and the generated image.
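For reference, the squared-error content loss can be written out explicitly. The form below follows the formulation of Gatys et al. [6], which this section appears to follow; the symbols $\vec{p}$, $F^{l}$, and $P^{l}$ come from that formulation and are not defined in the text above, so treat them as assumptions.

```latex
\mathcal{L}_{\text{content}}(\vec{p}, \vec{x}, l)
  = \frac{1}{2} \sum_{i,j} \left( F^{l}_{ij} - P^{l}_{ij} \right)^{2}
```

Here $\vec{p}$ is the original image, $\vec{x}$ is the generated image, and $F^{l}_{ij}$ and $P^{l}_{ij}$ are the activations of the $i$-th filter at position $j$ in layer $l$ for the generated and original image respectively.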

X. ALGORITHMS

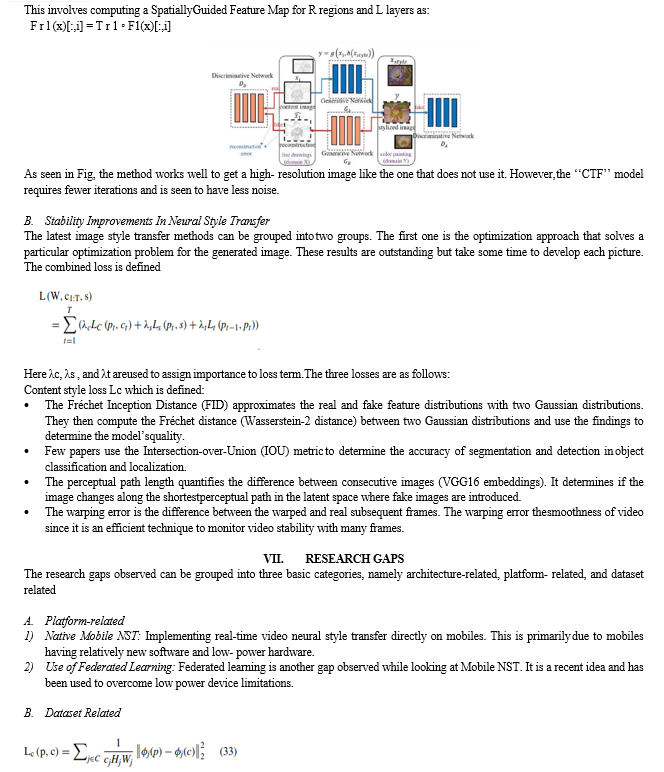

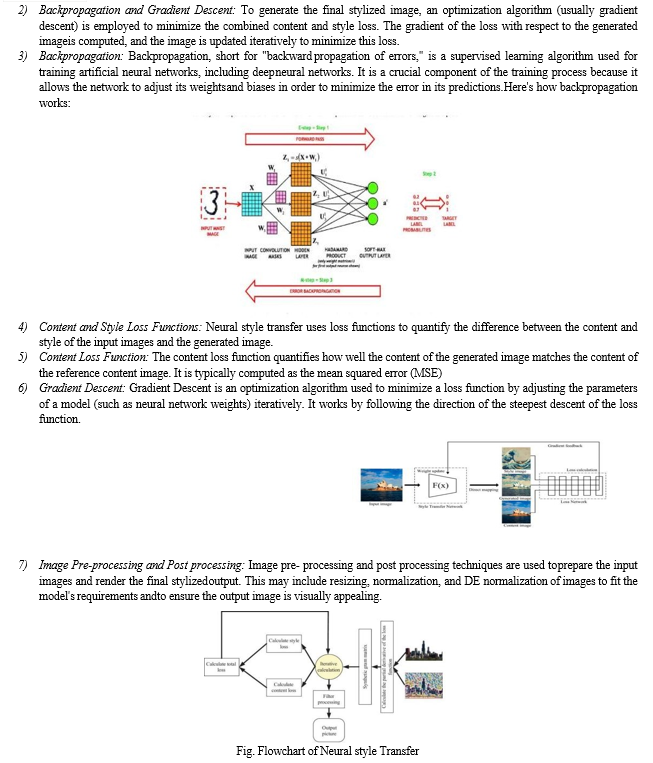

The key algorithms and techniques used in a typical NST pipeline are:

- Pre-trained CNNs: Convolutional Neural Networks, such as VGG-16 or VGG-19, are often used as the backbone for NST. These networks are pre-trained on large image datasets (e.g., ImageNet) and serve as feature extractors for both content and style information.

- Content Loss Function: The content loss is calculated by measuring the difference between the feature representations of the generated image and the content image. It is typically based on the mean squared error (MSE) between the feature maps.

- Style Loss Function: The style loss is computed by comparing the statistics of feature maps from the generated image and the style image. This involves calculating the Gram matrix and evaluating the mean squared error between the Gram matrices of feature maps from the style and generated images.

- Total Variation Regularization: To promote spatial coherence and reduce artifacts in the generated image, total variation (TV) regularization is often used. TV regularization penalizes rapid changes in pixel values.

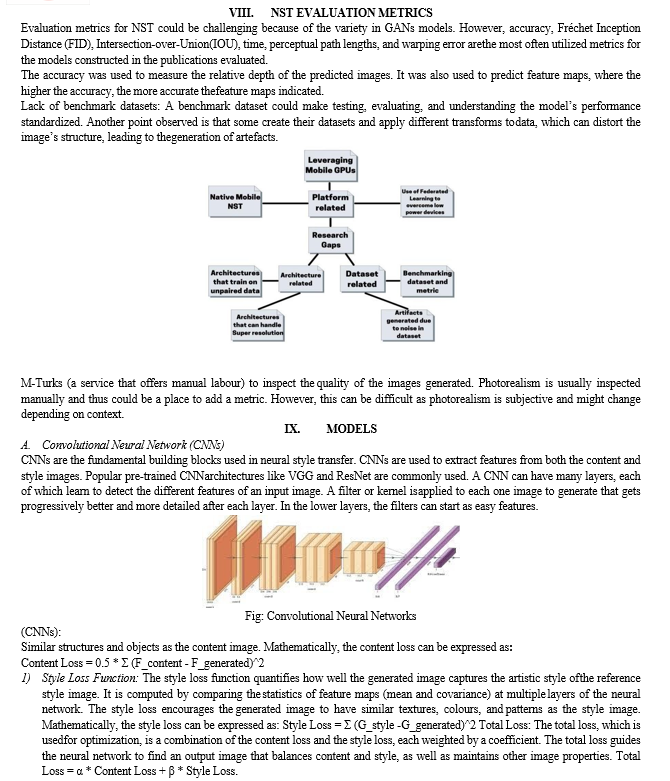

- Optimization Algorithm: A gradient-based optimizer, such as gradient descent, Adam, or the quasi-Newton method L-BFGS (Limited-memory Broyden-Fletcher-Goldfarb-Shanno), is employed to iteratively adjust the pixel values of the generated image to minimize the combined content and style loss.

- Hyperparameters: Tuning hyperparameters, including the weights for content and style losses, the learning rate, and the number of iterations, is essential to achieving the desired balance between content and style.

- Initialization: Initialization of the generated image, also known as the starting point, can influence the final result. Common initializations include using the content image, random noise, or a combination of both.

- Multi-Style Transfer: NST can be extended to incorporate multiple style references, allowing the creation of images that combine the styles of multiple artists or artworks.
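The loss terms and the initialization listed above can be sketched in a few lines of NumPy. This is an illustrative sketch, not the implementation used in this paper: feature extraction with a pre-trained CNN is assumed to happen elsewhere, so random arrays stand in for feature maps, a single layer is used for both losses, and the function names, the Gram normalization, and the default weights are all assumptions.

```python
import numpy as np

def content_loss(gen_feats, content_feats):
    """Mean squared error between two feature maps of shape (C, H, W)."""
    return np.mean((gen_feats - content_feats) ** 2)

def gram_matrix(feats):
    """Channel-by-channel correlations of a (C, H, W) feature map."""
    c, h, w = feats.shape
    flat = feats.reshape(c, h * w)
    return flat @ flat.T / (h * w)  # dividing by H*W is one common choice

def style_loss(gen_feats, style_feats):
    """Mean squared error between the Gram matrices of two feature maps."""
    return np.mean((gram_matrix(gen_feats) - gram_matrix(style_feats)) ** 2)

def total_variation(image):
    """Sum of absolute differences between neighbouring pixels, (H, W, 3)."""
    dh = np.abs(image[1:, :, :] - image[:-1, :, :]).sum()
    dw = np.abs(image[:, 1:, :] - image[:, :-1, :]).sum()
    return dh + dw

def total_loss(gen_img, gen_feats, content_feats, style_feats,
               content_weight=1.0, style_weight=1e3, tv_weight=1e-4):
    """Weighted sum of the three terms; the weights are hyperparameters."""
    return (content_weight * content_loss(gen_feats, content_feats)
            + style_weight * style_loss(gen_feats, style_feats)
            + tv_weight * total_variation(gen_img))

def init_image(content_img, noise_ratio=0.0, rng=None):
    """Start from the content image, uniform noise, or a blend of both."""
    if rng is None:
        rng = np.random.default_rng()
    noise = rng.uniform(0.0, 1.0, size=content_img.shape)
    return noise_ratio * noise + (1.0 - noise_ratio) * content_img

# Toy demo with random stand-ins for images and CNN feature maps.
rng = np.random.default_rng(0)
content_img = rng.uniform(size=(16, 16, 3))
start = init_image(content_img, noise_ratio=0.5, rng=rng)
content_feats = rng.normal(size=(4, 8, 8))
style_feats = rng.normal(size=(4, 6, 6))   # style image may differ in size
gen_feats = rng.normal(size=(4, 8, 8))
loss = total_loss(start, gen_feats, content_feats, style_feats)
```

Because the Gram matrix sums over all spatial positions, the style loss ignores where a texture appears and can compare feature maps of different spatial size, while the content loss compares activations position by position. In a full pipeline an optimizer would repeatedly update the pixels of the generated image to reduce `loss`, recomputing the features at each step.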

XI. TOOLS

- TensorFlow: TensorFlow is a widely used open-source deep learning framework developed by Google. It provides a high-level API for building neural networks, making it popular for implementing NST.

- PyTorch: PyTorch is a multiplatform deep learning framework developed by Facebook's AI Research lab. It is known for its flexibility and dynamic computation graph, which makes it a popular choice for many research projects, including NST.

- Keras: Keras is an open-source high-level neural networks API that runs on top of other deep learning frameworks such as TensorFlow and, historically, Theano. It simplifies the implementation of neural networks, making it accessible for NST projects.

- Fast Neural Style Transfer (NST) Tools: Various prebuilt NST tools and implementations are available, which allow users to apply NST without extensive coding. Examples include Fast Neural Style Transfer in TensorFlow, Artistic Style Transfer with Keras, and more.

- Adobe Photoshop and Other Image Editing Software: Traditional image editing software such as Adobe Photoshop can be used to adjust the appearance of images.

- Jupyter Notebooks: Jupyter notebooks are often used for experimenting with NST and visualizing the results. They provide an interactive and visually rich environment for exploring the code and its effects.

- Online NST Services: There are various online platforms and services that offer NST as a service. Users can upload their content and style images, and the service will generate the stylized image. These platforms often use their own implementations of NST.

XII. ADVANTAGES

- Artistic Creativity: NST allows artists and designers to create unique, artistic images by blending the content of one image with the style of another.

- Automation: NST automates the process of applying a specific artistic style to an image, saving time and effort compared to manual artistic rendering. It can generate stylized images relatively quickly, making it suitable for real-time applications such as photo and video filtering.

- Educational Tool: It can be used in educational contexts to help students understand the relationship between content and style in visual art. NST is a subject of ongoing research, and it provides an avenue for experimenting with deep learning and image processing techniques.

Conclusion

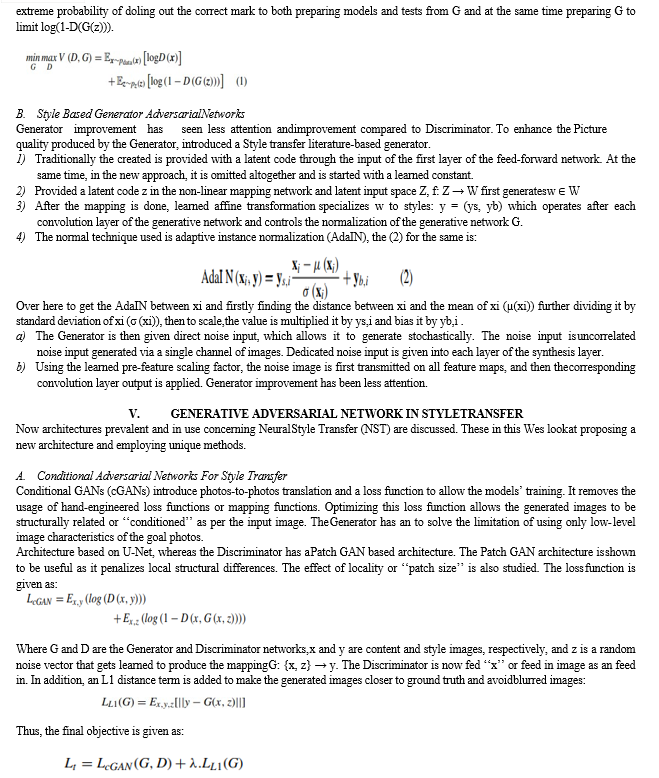

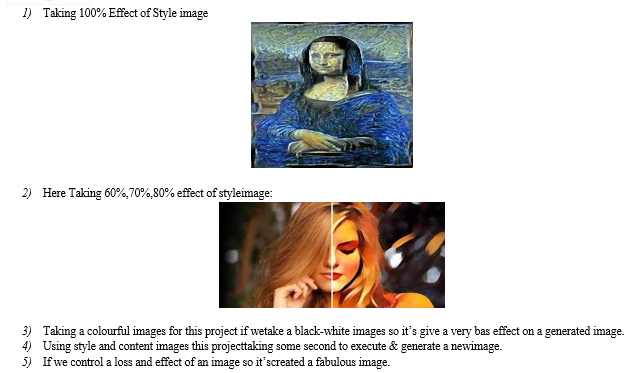

We conclude that neural style transfer (NST) is a powerful and versatile technique that offers numerous advantages for a wide range of creative and practical applications. It combines the content of one image with the artistic style of another, enabling the generation of unique and visually appealing artwork. Whether you're an artist, a designer, a researcher, or a hobbyist, NST can be a valuable tool to enhance your projects. GAN-based improvements were also considered, including how spatial, colour, and scale control can allow better image generation, and how NST can be applied on mobile devices in real time using GANs. In short, image style migration based on deep learning not only promotes the development of the computer field but also receives wide attention in other fields, so the development of NST has important research significance and broad application scenarios.

References

[1] M. Berning, K. M. Boergens, and M. Helmstaedter. SegEM: Efficient Image Analysis for High-Resolution Connectomics. Neuron, 87(6):1193–1206, Sept. 2015.

[2] C. F. Cadieu, H. Hong, D. L. K. Yamins, N. Pinto, D. Ardila, E. A. Solomon, N. J. Majaj, and J. J. DiCarlo. Deep Neural Networks Rival the Representation of Primate IT Cortex for Core Visual Object Recognition. PLoS Comput Biol, 10(12):e1003963, Dec. 2014.

[3] L.-C. Chen, G. Papandreou, I. Kokkinos, K. Murphy, and A. L. Yuille. Semantic Image Segmentation with Deep Convolutional Nets and Fully Connected CRFs. arXiv:1412.7062 [cs], Dec. 2014.

[4] M. Cimpoi, S. Maji, and A. Vedaldi. Deep filter banks for texture recognition and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 3828–3836, 2015.

[5] J. Donahue, Y. Jia, O. Vinyals, J. Hoffman, N. Zhang, E. Tzeng, and T. Darrell. DeCAF: A Deep Convolutional Activation Feature for Generic Visual Recognition. arXiv:1310.1531 [cs], Oct. 2013.

[6] Gatys, Leon, et al. "A Neural Algorithm of Artistic Style." Journal of Vision 16.12 (2016). doi:10.1167/16.12.326. Mordvintsev, Alexander, Christopher Olah, and Mike Tyka. "Inceptionism: Going deeper into neural networks." (2015).

[7] Berger, Guillaume, and Roland Memisevic. "Incorporating long-range consistency in cnn-based texture generation." arXiv preprint arXiv:1606.01286 (2016).

[8] Risser, Eric, Pierre Wilmot, and Connelly Barnes. "Stable and controllable neural texture synthesis and style transfer using histogram losses." arXiv preprint arXiv:1701.08893 (2017).

[9] Castillo, Carlos, et al. "Son of zorn's lemma: Targeted style transfer using instance-aware semantic segmentation." 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2017.

[10] Li, Yanghao, et al. "Demystifying neural style transfer." arXiv preprint arXiv:1701.01036 (2017).

[11] Li, Chuan, and Michael Wand. "Combining markov random fields and convolutional neural networks for image synthesis." Proceedings of the IEEE conference on computer vision and pattern recognition. 2016.

[12] Johnson, Justin, Alexandre Alahi, and Li Fei-Fei. "Perceptual losses for real-time style transfer and super-resolution." European conference on computer vision. Springer, Cham, 2016.

[13] Ulyanov, Dmitry, et al. "Texture networks: Feed-forward synthesis of textures and stylized images." ICML. Vol. 1. No. 2. 2016.

[14] Li, Chuan, and Michael Wand. "Precomputed real-time texture synthesis with markovian generative adversarial networks." European conference on computer vision. Springer, Cham, 2016.

[15] A. Krizhevsky, I. Sutskever, and G. E. Hinton. Imagenet classification with deep convolutional neural networks. In Advances in neural information processing systems, pages 1097–1105, 2012.

[16] M. Kümmerer, L. Theis, and M. Bethge. Deep Gaze I: Boosting Saliency Prediction with Feature Maps Trained on ImageNet. In ICLR Workshop, 2015.

[17] J. Long, E. Shelhamer, and T. Darrell. Fully Convolutional Networks for Semantic Segmentation. pages 3431–3440, 2015.

[18] Xueyuan Liu. Research on Neural Style Transfer. J. Phys.: Conf. Ser. 2079, 012029, 2021.

[19] Akhil Singh. Neural Style Transfer: A Critical Review. 2021.

Copyright

Copyright © 2024 Dhawade Sarika, Gawali Akanksha, Jathar Pallavi, Khamkar Snehal, Bhosale Swati S. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET61748

Publish Date : 2024-05-07

ISSN : 2321-9653

Publisher Name : IJRASET
