Ijraset Journal For Research in Applied Science and Engineering Technology

Nutri-Check-A Food Image Recognition and Calorie Counter Website

Authors: Vaishali S. Rajput, Aadi Wavhal, Aanya Jain, Aaron R. Abreo, Aary V. Lomte, Aasama A. Ramteke, Aashana Sonarkar

DOI Link: https://doi.org/10.22214/ijraset.2024.60856

Abstract

Nutri-Check is a web-based platform for dietary tracking and calorie counting. This report gives an overview of the Nutri-Check platform, detailing its key features, its functionality, and the underlying technology behind its food recognition and calorie counting capabilities. The primary goal of Nutri-Check is to simplify the monitoring of dietary intake by letting users upload images of their meals. Leveraging state-of-the-art image recognition algorithms, Nutri-Check identifies food items within seconds, eliminating the need for manual entry of nutritional information. Nutri-Check employs deep learning and computer vision algorithms to identify and categorize food items in uploaded images. Users receive instant feedback on the caloric content of their meals, which supports better-informed dietary decisions. The platform has the potential to improve individuals’ health and wellness by promoting conscious and informed food choices. An integrated image recognition AI tool performs the image recognition and calorie counting. In conclusion, this paper presents the capabilities of Nutri-Check as a food image recognition and calorie counting website and its potential to contribute to ongoing efforts to promote healthier eating habits and to support individuals in achieving their nutritional goals.

I. INTRODUCTION

The proposed project aims to help users keep a record of their daily nutritional intake and estimate the volume of food in their diet. It is a service that detects nutritional information from images. It can help users monitor their food intake, track their calories, and receive feedback. It can also be used for medical purposes, such as nutrition estimation for various medical conditions or disorders. It would benefit health enthusiasts, sportspersons, medical patients, and anyone seeking a healthy lifestyle.

The literature review includes research that explores the challenges and applications of automatic food intake monitoring through Computer Vision, focusing on tasks like classification, recognition, and segmentation. A systematic review of several papers led to a final selection of fewer papers on automatic food intake monitoring systems and a few on related Computer Vision tasks.

Through our project, the importance of healthy eating habits is emphasized, addressing issues like obesity and proposing a web-based application that uses deep learning to estimate food attributes and nutritional values. The role of AI in dietary studies is brought to light, highlighting the association between poor lifestyle choices, chronic diseases, and the potential of technology for improved dietary monitoring.

The review paper discusses the challenges in understanding food from digital media and its crucial role in human health. Computer Vision specialists have developed methods for automatic food intake monitoring, focusing on tasks like classification, recognition, and segmentation.

The systematic review covered 434 papers, narrowing down to 23 papers on automatic food intake monitoring systems and 46 papers on Computer Vision tasks related to food image analysis. The importance of food for health is emphasized, addressing the rising obesity rates. The proposed web-based application aims to estimate food attributes using deep learning for image classification and nutritional analysis. The papers highlight the use of artificial intelligence, particularly deep learning, in food image recognition and nutrition analysis. Challenges and limitations are discussed, with suggestions for future research to improve food recognition systems. Additionally, the survey discusses the association between dietary-related problems like obesity and chronic diseases and the role of mHealth apps in addressing these issues through automatic dietary monitoring systems based on machine learning methods. The survey concludes with a discussion of research gaps and open issues in the field.

II. LITERATURE REVIEW

1. 2nd International Conference on Computer Science and Computational Intelligence 2017, ICCSCI 2017, 13-14 October 2017, Bali, Indonesia

“Machine Learning and SIFT Approach for Indonesian Food Image Recognition”

Stanley Giovany, Andre Putra, Agus S Hariawan, Lili A Wulandhari.

Computer Science Department, School of Computer Science, Bina Nusantara University

Jl. KH. Syahdan No. 9, Kemanggisan, Jakarta Barat, Jakarta, Indonesia.

Abstract:

Currently, image become one of the most popular research area, computer learn to recognize many kinds of object based on image. This research are developed since it becomes common habit to take a picture in any activities, for instances before having a meal. Capturing image of meal allows to extract information which contain in food for health reference. The challenge in food recognition that these objects have various shape and appearances, especially Indonesian food, which may have different character for same type of food based on origin of foods. This research proposes a technique in food recognition, especially Indonesian food, using SIFT and machine learning techniques. K-Dimensional Tree (K-D Tree) and Backpropagation Neural network (BPNN) are chosen as machine learning techniques to recognize three types of Indonesian food namely Bakso, Ayam bakar and Sate. Experimental results shows BPNN has higher accuracy compare to K-D Tree which is 51% and 44% for BPNN and K-D Tree respectively.

© 2017 The Authors. Published by Elsevier B.V.

Peer-review under responsibility of the scientific committee of the 2nd International Conference on Computer Science and Computational Intelligence 2017.

Keywords: SIFT, BPNN, Food Image, Indonesian Food Image

2. “Applying Image-Based Food-Recognition Systems on Dietary Assessment: A Systematic Review”

Kalliopi V Dalakleidi, Marina Papadelli, Ioannis Kapolos, and Konstantinos Papadimitriou

Department of Food Science and Technology, University of the Peloponnese, Kalamata, Greece; and Laboratory of Food Quality Control and Hygiene,

Department of Food Science and Human Nutrition, Agricultural University of Athens, Athens, Greece.

Abstract:

Dietary assessment can be crucial for the overall well-being of humans and, at least in some instances, for the prevention and management of chronic, life-threatening diseases. Recall and manual record-keeping methods for food-intake monitoring are available, but often inaccurate when applied for a long period of time. On the other hand, automatic record-keeping approaches that adopt mobile cameras and computer vision methods seem to simplify the process and can improve current human-centric diet-monitoring methods. Here we present an extended critical literature overview of image-based food-recognition systems (IBFRS) combining a camera of the user’s mobile device with computer vision methods and publicly available food datasets (PAFDs). In brief, such systems consist of several phases, such as the segmentation of the food items on the plate, the classification of the food items in a specific food category, and the estimation phase of volume, calories, or nutrients of each food item. A total of 159 studies were screened in this systematic review of IBFRS. A detailed overview of the methods adopted in each of the 78 included studies of this systematic review of IBFRS is provided along with their performance on PAFDs. Studies that included IBFRS without presenting their performance in at least 1 of the above-mentioned phases were excluded. Among the included studies, 45 (58%) studies adopted deep learning methods and especially convolutional neural networks (CNNs) in at least 1 phase of the IBFRS with input PAFDs. Among the implemented techniques, CNNs outperform all other approaches on the PAFDs with a large volume of data, since the richness of these datasets provides adequate training resources for such algorithms. We also present evidence for the benefits of application of IBFRS in professional dietetic practice. Furthermore, challenges related to the IBFRS presented here are also thoroughly discussed along with future directions. AdvNutr 2022;13:2590–2619.

3. “A Comprehensive Survey of Image-Based Food Recognition and Volume Estimation Methods for Dietary Assessment”

Ghalib Tahir and Chu Kiong Loo.

Abstract: Dietary studies showed that dietary-related problem such as obesity is associated with other chronic diseases like hypertension, irregular blood sugar levels, and increased risk of heart attacks. The primary cause of these problems is poor lifestyle choices and unhealthy dietary habits, which are manageable using interactive mHealth apps. However, traditional dietary monitoring systems using manual food logging suffer from imprecision, underreporting, time consumption, and low adherence.

Recent dietary monitoring systems tackle these challenges by automatic assessment of dietary intake through machine learning methods. This survey discusses the most performing methodologies that have been developed so far for automatic food recognition and volume estimation. First, we will present the rationale of visual-based methods for food recognition. The core of the paper is the presentation, discussion and evaluation of these methods on popular food image databases. Following that, we discussed the mobile applications that are implementing these methods. The survey ends with a discussion of research gaps and open issues in this area.

Keywords: Food recognition, Feature extraction, Automatic-diet monitoring, Image analysis, Volume estimation, Interactive segmentation, Food datasets.

4. “A review on food recognition technology for health applications”

Dario Allegra,Sebastiano Battiato,Alessandro Ortis,Salvatore Urso,

Riccardo Polosa.

Department of Mathematics and Computer Science; Center of Excellence for the Acceleration of

Harm Reduction (CoEHAR), University of Catania, Catania, Italy.

Abstract: Food understanding from digital media has become a challenge with important applications in many different domains. On the other hand, food is a crucial part of human life since the health is strictly affected by diet. The impact of food in people life led Computer Vision specialists to develop new methods for automatic food intake monitoring and food logging. In this review paper we provide an overview about automatic food intake monitoring, by focusing on technical aspects and Computer Vision works which solve the main involved tasks (i.e., classification, recognitions, segmentation, etc.). Specifically, we conducted a systematic review on main scientific databases, including interdisciplinary databases (i.e., Scopus) as well as academic databases in the field of computer science that focus on topics related to image understanding (i.e., recognition, analysis, retrieval). The search queries were based on the following key words: “food recognition”, “food classification”, “food portion estimation”, “food logging” and “food image dataset”. A total of 434 papers have been retrieved. We excluded 329 works in the first screening and performed a new check for the remaining 105 papers. Then, we manually added 5 recent relevant studies. Our final selection includes 23 papers that present systems for automatic food intake monitoring, as well as 46 papers which addressed Computer Vision tasks related food images analysis which we consider essential for a comprehensive overview about this research topic. A discussion that highlights the limitations of this research field is reported in conclusions.

5. “Web Based Nutrition And Diet Assistance Using Machine Learning”

Mrs.M.Buvaneswari,M.E1, S.Aswath2, P.Karthik3, M.Mohammed Raushan4

1Assistant Professor, Dept. of Computer Science and Engineering, Paavai Engineering College, Tamil Nadu, India

2Student, Dept. of Computer Science and Engineering, Paavai Engineering College, Tamil Nadu, India

3 Student, Dept. of Computer Science and Engineering, Paavai Engineering College, Tamil Nadu, India

4 Student, Dept. of Computer Science and Engineering, Paavai Engineering College, Tamil Nadu, India

Abstract - The importance of food for living had been discussed in several medical conferences. Consumers now have more opportunities to learn more about nutrition patterns, understand their daily eating habits, and maintain a balanced diet. Due to the ignorance of healthy food habits, obesity rates are increasing at tremendous speed, and this leads more risks to people’s health. People should control their daily calorie intake by eating healthier foods, which is basic thing to avoid obesity. Although food packaging comes with nutrition (and calorie) labels, it’s still not very efficient and convenient for people to refer to. App-based nutrient systems, which analyze real-time images of food and its nutritional content, can be very handy. It improves the dietary habits and helps in maintaining a healthy lifestyle. This project aims at developing web based application that estimates food attributes such as ingredients and nutritional value by classifying the input image of food. This method uses deep learning model (CNN) to identify food accurately and uses the Food API's to give the nutritional value of the identified food.

Key Words: Diet Assistance, Machine Learning, Diet Recommender System, Food Recognition, Image processing, Feature Extraction

6. “Deep Learning for Food Image Recognition and Nutrition Analysis Towards Chronic Diseases Monitoring: A Systematic Review”

Merieme Mansouri · Samia Benabdellah Chaouni · Said Jai Andaloussi · Ouail Ouchetto

Received: 24 June 2022 / Accepted: 27 May 2023 © The Author(s), under exclusive licence to Springer Nature Singapore Pte Ltd 2023

Abstract: The management of daily food intake aids to preserve a healthy body, minimize the risk of many diseases, and monitor chronic diseases, such as diabetes and heart problems. To ensure a healthy food intake, artificial intelligence has been widely used for food image recognition and nutrition analysis. Several approaches have been generated using a powerful type of machine learning: deep learning. In this paper, a systematic review is presented for the application of deep learning in food image recognition and nutrition analysis. A methodology of systematic research has been adopted resulting in three main fields: food image classification, food image segmentation and volume estimation of food items providing nutritional information. “57” original articles were selected and synthesized based on the use case of the approach, the employed model, the used data set, the experiment process and finally the main results. In addition, articles of public and private food data sets are presented. It is noted that among the literature review, several deep learning-based studies have shown great results and outperform the conventional methods. However, certain challenges and limitations are presented. Hence, some research directions are proposed to apply in the future to improve the food recognition systems for dietary assessment.

Keywords: Deep learning · Food image recognition · Size estimation · Food monitoring · Dietary assessment.

III. METHODOLOGY/EXPERIMENTAL

A. Basic Flow Of Methodology

- Design a user-friendly, attractive website, using HTML for the page structure, CSS for styling, and JavaScript for interactive behavior.

- Link the pages of the user interface (such as Sign-up and Login) during development.

- Use backend software with a database to store the data.

- Host the website on a web server or cloud platform to make it accessible on the internet.

- Embed or connect to an AI tool.

- Test the website to ensure it works correctly and handles various scenarios and user interactions.

- Define Objectives: Clearly define the objectives of the website. Understand the target audience and the specific features required for food image recognition.

- Select AI Tool or API: Choose a suitable AI tool or API for image recognition.[1]

- Design:

  - Wireframing: Create wireframes for the website's pages, including the login, sign-up, and about pages. Plan the layout, navigation, and placement of key elements.

  - UI/UX Design: Design the user interface keeping in mind a user-friendly experience. Use a clean and intuitive design for better engagement.

- Backend Development:

  - Choose a Backend Framework: Select a backend framework.

  - Select a Database: Choose a database system (e.g., MySQL, MongoDB) to store user data, preferences, and any other relevant information.

- Frontend Development:

  - Choose a Frontend Framework: Select a frontend framework like React, Angular, or Vue.js for building interactive and dynamic user interfaces.

  - Implement UI Components: Develop the login, sign-up, and about pages. Ensure responsiveness for various screen sizes.

- Connect Frontend and Backend:

  - API Integration: Connect the frontend and backend to enable communication between different components.

  - Handle Image Uploads: Implement functionality to allow users to upload food images for recognition.

- Integrate AI Tool or API:

  - Integrate Image Recognition: Incorporate the selected AI tool or API for food image recognition. Adjust the backend to send images to the AI service and process the results.

- Testing:

  - Unit Testing: Perform unit tests for each component to ensure individual functionalities work as expected.

  - Integration Testing: Test the entire system to ensure seamless integration between frontend, backend, and the AI tool.

- Deployment:

  - Choose Hosting Service: Select a hosting service and deploy both frontend and backend.

  - Domain Registration: Register a domain name and configure it to point to the deployed website.
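The image upload and recognition steps above can be sketched as follows. This is a minimal illustration and not the authors' implementation: the paper does not name the AI tool or describe its response format, so the accepted MIME types, the 5 MB size limit, the item shape `{ label, confidence, caloriesPer100g, grams }`, and the function names `validateUpload` and `summarizeMeal` are all assumptions made for the example.

```javascript
// Hypothetical limits; the paper does not specify accepted formats or sizes.
const ALLOWED_TYPES = ["image/jpeg", "image/png", "image/webp"];
const MAX_BYTES = 5 * 1024 * 1024; // 5 MB

// Check an uploaded file's metadata before forwarding it to the AI service.
// `file` only needs `type` and `size`, like a browser File object.
function validateUpload(file) {
  if (!file || typeof file.size !== "number") {
    return { ok: false, reason: "no file provided" };
  }
  if (!ALLOWED_TYPES.includes(file.type)) {
    return { ok: false, reason: "unsupported image type" };
  }
  if (file.size === 0 || file.size > MAX_BYTES) {
    return { ok: false, reason: "file is empty or too large" };
  }
  return { ok: true, reason: null };
}

// Turn an assumed recognition response into per-item and total calories.
// Items below `minConfidence` are dropped rather than reported.
function summarizeMeal(items, minConfidence = 0.5) {
  const kept = items
    .filter((item) => item.confidence >= minConfidence)
    .map((item) => ({
      label: item.label,
      calories: Math.round((item.caloriesPer100g * item.grams) / 100),
    }));
  const totalCalories = kept.reduce((sum, item) => sum + item.calories, 0);
  return { items: kept, totalCalories };
}
```

Validating on metadata first avoids sending unusable files to the recognition service, and filtering by confidence keeps uncertain detections out of the calorie total. Both thresholds would need tuning against whichever AI tool is actually used.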

B. Actual Methodology/ Process

1. The HTML document serves as the foundation of the website, incorporating styles from Bootstrap, custom styles, and various JavaScript functionalities to create an interactive and visually appealing web experience focused on nutrition-related information.

a. The <head> section contains meta-information about the document, such as character set, viewport settings, keywords, and descriptions. Various external stylesheets and fonts are linked in the head section.

b. A favicon (site icon) is specified using the <link> tag in the head section. The favicon is a small image that appears in the browser tab.[2]

c. Google Web Fonts are linked to the document to use custom fonts. Two icon font stylesheets are linked for using Font Awesome and Bootstrap icons.

d. Several external libraries are linked to provide additional styles and functionalities. These include animations, carousel styles, and a date/time picker.

e. The Bootstrap framework is included, and a customized stylesheet (css/bootstrap.min.css) is linked, indicating the use of Bootstrap for styling.

f. A custom stylesheet (css/style.css) is linked to provide additional styling specific to the website.

g. The body of the document contains sections for the different parts of the website: a navigation bar (<nav>) with links to the website's pages; a hero section with a background image and a call-to-action button; a Service section with information about nutritional components (carbohydrates, fats, protein, vitamins); an About Us section with images and a description of the website's mission and purpose; and a footer with links to company-related pages.

h. Several JavaScript libraries are included at the end of the document. These libraries provide functionality for animations, scrolling effects, carousel interactions, and date/time pickers.

i. A back-to-top button is included, which is a common UI element to scroll the page back to the top.

j. The document includes script tags at the end to link JavaScript files for various functionalities like animations, scrolling, counters, carousel, and date/time picker.

2. The provided CSS code is a stylesheet that defines the styling and layout for various elements on a website.

a. CSS Variables: Custom CSS variables (defined using :root) are used to store color values (--primary, --light, --dark). These variables can be reused throughout the stylesheet, providing a centralized way to manage colors.

b. Font Styles: Custom font styles are defined for specific elements. For example, the class .ff-secondary sets the font family to 'Pacifico' and various classes like .fw-medium and .fw-semi-bold set specific font weights.

c. Styles for a back-to-top button with the class .back-to-top are defined. This button is positioned fixed at the bottom right and is initially hidden (display: none). It becomes visible as the user scrolls down the page.

d. Styles for a spinner with the ID #spinner are defined. The spinner is initially hidden and becomes visible during transitions.

e. General styles for buttons are defined, including font family, weight, and text transformation. Specific styles for primary and secondary buttons are also provided.

f. Styles for the navigation bar (.navbar) and navigation links are defined. The color, padding, and other properties change based on user interactions, such as scrolling.

g. Styles are defined for a hero header section with a background image and a rotating image animation, and the breadcrumb items have customized styles as well. Section titles use before and after pseudo-elements to add decorative lines on both sides of the title. Service items have a box-shadow effect and hover styles.

h. Styles are defined for a video section, including a play button with animations and a modal for displaying the video; for team items, including box-shadow and hover effects; for a testimonial carousel, including dot navigation and individual testimonial items; and for social buttons, links, and copyright information in the footer. The footer menu and links have specific styles, including hover effects.[1]

i. Media Queries: Media queries are used to make the styles responsive based on the screen size, adjusting navbar and other element styles for smaller screens.

3. JavaScript code enhances the functionality and user experience of a website by incorporating features such as animations, sticky navigation, dropdowns, a back-to-top button, a modal video player, and a testimonials carousel. It leverages the jQuery library for DOM manipulation and various third-party libraries for specific functionalities.

a. A function named spinner is defined to hide a spinner element with the ID #spinner after a short delay (1 millisecond). The spinner is presumably used to indicate that content is being loaded.

b. The script initializes the Wow.js library, which is a library for triggering animations on elements as they become visible during scrolling.

c. The script adds the classes sticky-top and shadow-sm to the navbar (.navbar) when the user scrolls down the page, making the navbar sticky. The classes are removed when the user scrolls back up.

d. For screens larger than 992 pixels, the script enables a dropdown menu to appear on mouse hover. It adds or removes the show class to achieve this effect.

e. The script shows or hides a "Back to Top" button based on the user's scroll position. When clicked, it smoothly scrolls the page back to the top.

f. The script calls the counterUp function on elements with the attribute data-toggle="counter-up" to animate counting up with a specified delay.

g. The script handles modal functionality for playing a video. When a button with the class .btn-play is clicked, it sets the video source. The modal is displayed with the video autoplaying, and it stops when the modal is closed.

h. The script initializes a testimonials carousel using the Owl Carousel library. It provides settings for autoplay, smart speed, centering items, margin, loop, and responsiveness for different screen sizes.

i. The script is wrapped in an IIFE to create a private scope for the variables and functions, preventing pollution of the global namespace.

j. jQuery is used throughout the script to select and manipulate DOM elements.

k. The code enhances the website's user experience by adding interactive elements such as a sticky navbar, dropdowns, a back-to-top button, and animated counters.
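The scroll, hover, and counter behaviors listed above can be sketched as small decision functions. This is an illustrative sketch under stated assumptions, not the site's actual script: the 992-pixel breakpoint and the class names sticky-top and shadow-sm come from the description above, while the scroll offsets (45 and 300 pixels) and all function names are hypothetical. The paper's script uses jQuery; the wiring here uses standard DOM APIs only so that the example has no dependencies.

```javascript
// Offsets are illustrative assumptions; only the 992 px breakpoint is from the text.
const STICKY_OFFSET_PX = 45; // hypothetical scroll offset for the sticky navbar
const BACK_TO_TOP_OFFSET_PX = 300; // hypothetical offset for showing the button
const HOVER_BREAKPOINT_PX = 992;

// Classes the navbar should carry at a given scroll position.
function navbarClasses(scrollTop) {
  return scrollTop > STICKY_OFFSET_PX ? ["sticky-top", "shadow-sm"] : [];
}

// Whether the back-to-top button should be visible.
function showBackToTop(scrollTop) {
  return scrollTop > BACK_TO_TOP_OFFSET_PX;
}

// Dropdowns open on hover only on screens wider than the breakpoint.
function dropdownOpensOnHover(viewportWidth) {
  return viewportWidth > HOVER_BREAKPOINT_PX;
}

// Intermediate values shown by a count-up animation from 0 to `target`.
function counterFrames(target, steps) {
  const frames = [];
  for (let i = 1; i <= steps; i++) {
    frames.push(Math.round((target * i) / steps));
  }
  return frames;
}

// Browser wiring: runs only where a DOM exists.
if (typeof window !== "undefined" && typeof document !== "undefined") {
  window.addEventListener("scroll", () => {
    const navbar = document.querySelector(".navbar");
    if (!navbar) return;
    navbar.classList.remove("sticky-top", "shadow-sm");
    navbar.classList.add(...navbarClasses(window.scrollY));
  });
}
```

Keeping the decisions in pure functions separates the thresholds from the DOM code, so they can be unit tested without a browser.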

4. An SCSS (Sass) stylesheet customizes the Bootstrap framework to suit the specific design requirements of the website. It provides a way to modify the default styles provided by Bootstrap and create a unique visual identity for the site.[3]

a. Variable Declarations: Custom color variables like $primary, $light, and $dark are defined for primary, light, and dark colors, respectively. Font-related variables like $font-family-base, $headings-font-family, $body-color, $headings-color, etc., are declared. Other variables like $border-radius, $link-decoration, and $enable-negative-margins are set.

b. Custom Styling: The variables declared at the beginning are used to override or customize Bootstrap's default styles. For example, $body-bg is used to set the background color of the body, $body-color is used to set the text color, and $headings-color is used to set the color of headings. Custom font families, weights, and other styling choices are applied to the overall design. Responsive font sizes are enabled with $enable-responsive-font-sizes.

c. Usage in HTML: In the HTML documents of the website, this custom stylesheet is linked after the Bootstrap stylesheet, ensuring that the custom styles override the default Bootstrap styles.

d. The custom stylesheet allows the developer to tailor the visual appearance of the website by adjusting color schemes, font choices, and other design elements. By using Sass, the code is more maintainable and organized, thanks to features like variables and the ability to nest styles.

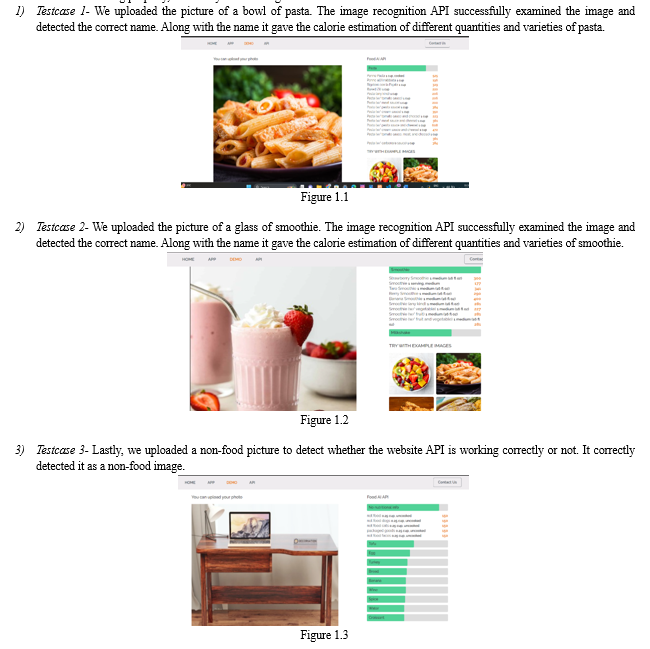

IV. RESULTS AND DISCUSSIONS

With this website, we can identify a food item and report the calories a person takes in by consuming it. We tested the website with various food items to check its accuracy. Having confirmed that the website and the embedded AI tool work properly, we present our results here.

In conclusion, the testing of our food image recognition and calorie counter website has demonstrated promising results. The accuracy and efficiency of the image recognition feature showcase its potential to revolutionize the way users track their dietary intake.
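One way such testing could be quantified is sketched below. The paper reports no numeric results, so this example adds none: the function names `topOneAccuracy` and `meanAbsoluteCalorieError` and the test-case fields (`expected`, `predicted`, `referenceKcal`, `estimatedKcal`) are hypothetical, and any data passed in would have to come from real labelled test images.

```javascript
// Share of test images whose predicted label matches the expected label.
// Comparison ignores case and surrounding whitespace.
function topOneAccuracy(cases) {
  if (cases.length === 0) return 0;
  const correct = cases.filter(
    (c) => c.predicted.trim().toLowerCase() === c.expected.trim().toLowerCase()
  ).length;
  return correct / cases.length;
}

// Mean absolute difference between reference and estimated calories (kcal).
function meanAbsoluteCalorieError(cases) {
  if (cases.length === 0) return 0;
  const total = cases.reduce(
    (sum, c) => sum + Math.abs(c.estimatedKcal - c.referenceKcal),
    0
  );
  return total / cases.length;
}
```

Reporting both figures would separate recognition quality (was the right food identified?) from estimation quality (how far off was the calorie value?).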

V. ACKNOWLEDGMENT

First and foremost, I extend my heartfelt thanks to my project supervisor Mrs Vaishali Rajput, whose expertise and valuable insights played a pivotal role in shaping the project. Their guidance provided me with a clear direction and helped me overcome challenges during the development process. I am grateful for the encouragement and support from my fellow classmates and friends. Their constructive feedback and collaborative spirit created a positive environment that fuelled my motivation to excel in this project. I would like to acknowledge the wealth of knowledge and resources provided by the academic staff and the library, which greatly contributed to the research and implementation phases of the website.

Conclusion

In conclusion, the development of this nutrition-focused website marks a significant milestone. The primary objective was to create a platform that not only provides users with comprehensive information about the nutritional value of various foods but also offers a personalized experience through a secure login and signup system. Through diligent research and coding efforts, the website now serves as a valuable resource for individuals seeking to make informed dietary choices. Users can easily access detailed nutritional information, including essential nutrients, calories, and other relevant data for a wide range of foods. The intuitive user interface makes it easy for users to navigate and find the information they need. The inclusion of a login and signup page adds an extra layer of functionality [4], allowing users to create personalized profiles. This feature opens up possibilities for tracking and analyzing their dietary habits over time, promoting a more tailored and goal-oriented approach to nutrition.

As a computer science student, this project has provided hands-on experience in various aspects of web development, including front-end design, back-end functionality, and database management. The implementation of secure user authentication demonstrates a commitment to user privacy and data protection.

In summary, this nutrition-focused website not only meets its core objective of providing valuable nutritional information but also showcases my growing skills and understanding in the field of computer science. Moving forward, there is ample room for further enhancements and feature additions, ensuring the continued evolution of this project and its contribution to the intersection of technology and healthy living.

References

[1] Stanley Giovany, Andre Putra, Agus S Hariawan, Lili A Wulandhari. “Machine Learning and SIFT Approach for Indonesian Food Image Recognition”. 2nd International Conference on Computer Science and Computational Intelligence 2017, ICCSCI 2017, 13-14 October 2017, Bali, Indonesia.

[2] Kalliopi V Dalakleidi, Marina Papadelli, Ioannis Kapolos, and Konstantinos Papadimitriou. “Applying Image-Based Food-Recognition Systems on Dietary Assessment: A Systematic Review”.

[3] Ghalib Tahir and Chu Kiong Loo. “A Comprehensive Survey of Image-Based Food Recognition and Volume Estimation Methods for Dietary Assessment”.

[4] Dario Allegra, Sebastiano Battiato, Alessandro Ortis, Salvatore Urso, Riccardo Polosa. “A review on food recognition technology for health applications”.

[5] M. Buvaneswari, S. Aswath, P. Karthik, M. Mohammed Raushan. “Web Based Nutrition And Diet Assistance Using Machine Learning”.

[6] Merieme Mansouri, Samia Benabdellah Chaouni, Said Jai Andaloussi, Ouail Ouchetto. “Deep Learning for Food Image Recognition and Nutrition Analysis Towards Chronic Diseases Monitoring: A Systematic Review”.

Copyright

Copyright © 2024 Vaishali S. Rajput, Aadi Wavhal, Aanya Jain, Aaron R. Abreo, Aary V. Lomte, Aasama A. Ramteke, Aashana Sonarkar. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET60856

Publish Date : 2024-04-23

ISSN : 2321-9653

Publisher Name : IJRASET
