Ijraset Journal For Research in Applied Science and Engineering Technology

Off-Line and Online Handwritten Character Recognition Using RNN-GRU Algorithm

Authors: Dr. S. Kanmani, B. Sujitha, K. Subalakshmi, S. Umamaheswari, Karimreddy Punya Sai Teja Reddy

DOI Link: https://doi.org/10.22214/ijraset.2023.50184

Abstract

Recognizing handwritten characters is a difficult task in pattern recognition and computer vision. It requires a process that enables computers to identify handwritten or printed characters, such as letters and numbers, and convert them into a digital format that the computer can use. Currently, an RNN-CNN hybrid algorithm is employed to predict handwritten text in images with an accuracy of 91.5%. However, the existing system can only recognize text character by character and word by word. The proposed system aims to address this limitation by enabling line-by-line recognition and converting handwritten text to digital text through OCR. To achieve this, the system uses the GRU algorithm to predict the next letter in incomplete words. Furthermore, the IAM dataset, consisting of 135,000 annotated sentences, is used to detect and correct spelling errors in the text.

I. INTRODUCTION

Computer software that recognizes and interprets handwritten input from a variety of sources, including paper documents, photographs, touch screens, and other media, is called handwriting recognition (HWR). This technology presents one of the most complex challenges in pattern recognition and computer vision. HWR enables computers to recognize and understand handwritten characters and words entered by the user. This is achieved by converting the handwritten input into a computer-readable format, typically Unicode text. The input device typically comprises a stylus and a touch-sensitive screen. This conversion is a significant advantage of electronic storage over physical storage.

Optical Character Recognition (OCR) is the mainstream practice for handwriting recognition. It involves scanning a handwritten document and converting it into a plain text document. OCR can also work from a photograph of handwritten text. However, handwriting varies so widely that it is difficult to provide sufficient examples of how each character may appear. Furthermore, some characters look quite similar, which makes accurate recognition by a computer challenging. Handwriting can be classified into manuscript form and cursive form. Manuscript handwriting, which consists of individual block letters, is easier for computers to recognize. Cursive handwriting, by contrast, requires recognition tools to identify each character correctly even though the characters are often joined. Accurately recognizing each character in cursive handwriting therefore remains a challenging task for handwriting recognition tools.

II. LITERATURE SURVEY

[2] The purpose of this research is to improve the accuracy of handwritten text recognition (HTR) systems using deep learning algorithms. Existing research methods for HTR have some limitations, so the researchers collected data, extracted features, and trained a deep learning model using a 2D LSTM approach to address these limitations. They also employed a word recognition strategy to enhance accuracy. The resulting approach was integrated into an OCR system, and its performance was compared with another approach on the IAM handwritten dataset.

The study found that the 2D LSTM-based approach outperformed the other approach. However, the experiments were limited to the IAM dataset, and more research is needed to evaluate whether the findings generalize to other datasets. Moreover, the paper does not provide a comprehensive comparison with other state-of-the-art methods for HTR.

[9] Writing-box-free handwriting recognition was proposed by H. Oda et al. for searching on-line handwritten text. The paper presents a novel approach that searches for a specific keyword in a lattice of candidate character segmentations, so that no writing box is required. To improve accuracy and reduce noise in the search results, the method takes into account the recognition accuracy and the keyword's length. The paper also briefly surveys related work on on-line handwritten text recognition and search. In the experiments conducted to evaluate the method, it achieved a recall of 89.4%, a precision of 90.2%, and an F-measure of 0.912 when searching for three-character keywords, demonstrating its effectiveness in reducing noise and improving search accuracy. However, the paper lacks a comprehensive analysis of the method's performance and a comparison with existing methods. It also does not discuss potential limitations or drawbacks of the approach.

[11] This paper addresses the detection and recognition of handwritten text lines on answer sheets using only a small amount of labeled data. It describes a novel approach to detecting and recognizing handwritten text lines in scanned answer sheet images, focusing on the challenges of automatically locating and recognizing handwritten text in the education industry. To improve recognition accuracy, the authors use a dataset synthesis method that locates written text in scanned answer sheet images, together with an advanced handwritten text recognizer based on CRNN. The study examines the depth of the network and each of the MLC modules, showing that both contribute to handwritten text-line recognition. Experimental results show that the proposed method achieves better detection and recognition accuracy than existing methods. However, the dataset synthesis method may not generalize to other types of handwritten text or other languages, and the text-line recognition method may not perform well on highly cursive or illegible handwriting.

[3] The authors describe several difficulties in recognizing offline (non-digital) handwritten Quranic Arabic text, including distinct writing styles, the use of diacritics, overlaps, and ligatures. Their paper examines the recognition system for Quranic handwritten text and details the associated challenges, such as the similar appearance of certain letters. The paper also distinguishes between offline and online text recognition systems, noting that online recognition achieves a higher recognition rate than offline recognition because of the temporal nature of writing: characters can be distinguished by the order in which their strokes are made. The paper reports no specific experimental findings, since it is an overview of the challenges and the recognition system, but it examines the unique attributes of the Arabic language and the issues related to offline Quranic handwritten text, with examples. It does not propose any new technique for recognizing handwritten Quranic text, nor does it provide a detailed analysis or evaluation of a specific recognition system. The paper's limitations are not explicitly stated.

[17] This paper introduces the Adversarial Feature Enhancing Network (AFEN) for fast offline recognition of handwritten paragraphs. AFEN consists of five main components: a common feature extractor, branch search, RoIRotate for feature extraction, an adversarial feature learning network, and text recognition. Experiments on two popular datasets, IAM and Rimes, suggest that AFEN is effective compared with previous methods. However, the paper has some limitations. For example, AFEN is evaluated on only two datasets and not on other, unseen datasets. The paper also gives no detailed information about the computational complexity of the method, which may be problematic for real-time applications. Finally, it does not compare AFEN with state-of-the-art deep learning models for handwriting recognition, which would help to place its performance in context.

III. RELATED WORK

The existing study introduces a technique for handwritten character recognition based on hybrid feature extraction and optimal feature subset selection. The approach employs a Genetic Algorithm together with an adaptive Multi Layer Perceptron classifier. The study extracts seven distinct feature sets based on moment, distance, geometrical, and local features. Finally, the classifier is tested on a separate dataset of unseen characters to assess its performance. One limitation is that the approach is not examined thoroughly for computational complexity and no accuracy rate is reported, which can be a significant concern for real-time applications or large-scale datasets. Additionally, the existing system is not compared against other state-of-the-art techniques for handwritten character recognition, which makes it difficult to evaluate its relative performance.

IV. PROPOSED WORK

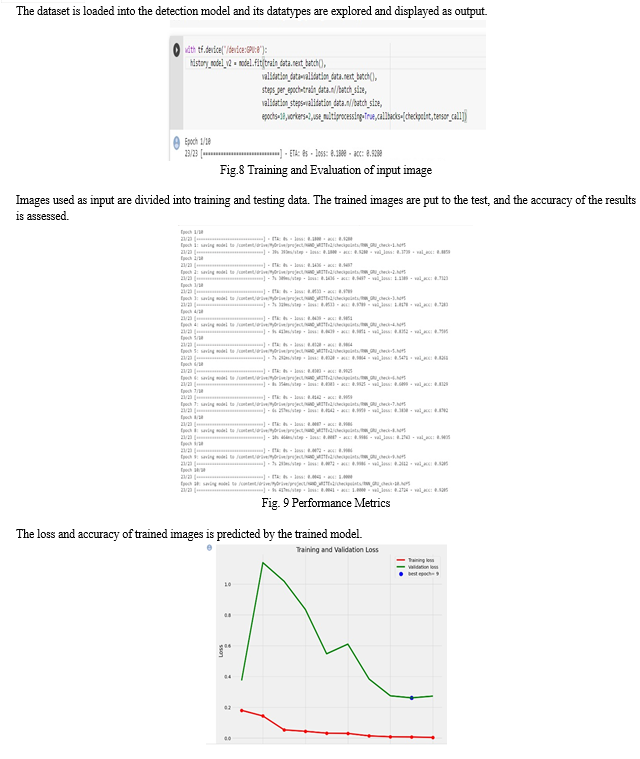

The proposed system offers a novel solution to the classification problem. The approach involves modified data splitting and loading mechanisms. The data is partitioned into training, testing, and validation sets with their corresponding annotations. The loaded data undergoes preprocessing and is then configured for loading into the proposed system. The system employs RNN and GRU algorithms for image segmentation and letter prediction, respectively. This enables the segmentation of paragraphs, words, and letters, which can then be converted to digital text through OCR.
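The partitioning step described above can be sketched as follows. This is a minimal illustration, not the authors' loading mechanism: the 80/10/10 ratio, the fixed seed, the function name split_dataset, and the file names are all assumptions made for the example.

```python
import random


def split_dataset(samples, train_frac=0.8, val_frac=0.1, seed=0):
    """Shuffle (image_path, annotation) pairs and split them three ways.

    The fractions are illustrative assumptions; the paper does not state them.
    """
    items = list(samples)
    random.Random(seed).shuffle(items)  # fixed seed keeps the split reproducible
    n_train = int(len(items) * train_frac)
    n_val = int(len(items) * val_frac)
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]      # whatever remains is the test set
    return train, val, test


# Hypothetical samples: each image path is paired with its annotation.
samples = [(f"line_{i:03d}.png", f"text {i}") for i in range(100)]
train_set, val_set, test_set = split_dataset(samples)
```

Keeping each annotation paired with its image before shuffling ensures that the labels stay aligned with the images in all three subsets.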

A. Dataset Preparation

We selected a dataset that is extensively used in handwriting recognition: the IAM Handwriting Database. The collection includes 13,353 images of handwritten text lines produced by 657 writers. The database, which is categorised as a modern collection, has 1,539 handwritten pages containing 115,320 words in total. It consists of unconstrained handwritten text forms, scanned at 300 dpi and stored as PNG images with 256 gray levels. The forms are sorted into directories such that all the forms in a directory were written by the same person. The dataset is labeled at the sentence, line, and word levels, and the words were extracted from the scanned pages using an automatic segmentation scheme whose output was then manually verified. The IAM Handwriting Database is an essential dataset for handwriting recognition because of its substantial size, its variety of handwriting styles, and its labeling at the sentence, line, and word levels. It has been used to train and test different machine learning models for handwriting recognition and is expected to remain a significant resource for future research in this area.

B. Detailed Design Diagram of the Proposed Work

V. ALGORITHM USED

A. GRU Algorithm For Prediction Of Next Character

The GRU (Gated Recurrent Unit) algorithm is used for predicting the next character in a sequence. GRU networks are similar to LSTM networks, but they differ in a few key ways. In contrast to LSTM, GRU uses the hidden state rather than a separate cell state to carry information. GRU networks also have only two gates (the Reset Gate and the Update Gate), which makes them faster than LSTM networks. Furthermore, GRU requires fewer tensor operations, which further increases its speed. GRUs use gating mechanisms to selectively update and forget information, allowing them to capture long-term dependencies in sequential data.

The Update Gate is a crucial component of the GRU. It plays the combined role of the Forget Gate and the Input Gate of an LSTM: the Input Gate chooses what new information to add to the current hidden state, while the Forget Gate chooses which information from the previous hidden state to discard. In essence, the Update Gate controls how much of the prior hidden state is carried over to the current time step. The Reset Gate regulates the flow of information by determining how much past information is used when the candidate activation is computed. In particular, it resets past information that is no longer relevant to the current time step. Together, the gates help keep gradients stable during training, which supports the stability and accuracy of the model's predictions.

Fig.5 Gated Recurrent Unit (GRU) Algorithm

In the context of next character prediction, a GRU can be trained on a sequence of characters, with the goal of predicting the next character in the sequence given the previous ones. The model is trained to minimize the cross-entropy loss between the predicted and the actual next characters. Once trained, the model can be used to generate new text by iteratively predicting the next character and feeding it back into the model.
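The training objective and the feedback loop described above can be illustrated with a short sketch. The function toy_predict_next is a hypothetical stand-in for a trained GRU (it simply favours the letters of the word "hello"); the names cross_entropy and generate are likewise assumptions made for this example, and greedy selection of the most likely character is only one possible decoding choice.

```python
import math


def cross_entropy(probs, target_char):
    """Loss for one step: negative log-probability of the true next character."""
    return -math.log(probs[target_char])


def generate(predict_next, seed_text, n_chars):
    """Greedy decoding: pick the most likely character and feed it back."""
    text = seed_text
    for _ in range(n_chars):
        probs = predict_next(text)          # dict: character -> probability
        text += max(probs, key=probs.get)   # append the most likely character
    return text


def toy_predict_next(text):
    """Hypothetical stand-in for a trained GRU; it spells out 'hello'."""
    target = "hello"[len(text) % 5]
    return {ch: (0.7 if ch == target else 0.1) for ch in "helo"}


completed = generate(toy_predict_next, "he", 3)
loss = cross_entropy(toy_predict_next("he"), "l")
```

A lower loss means the model assigned a higher probability to the character that actually follows, which is what training drives towards.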

# Inputs:

x: Input at current time step

hid_prev: Hidden state from previous time step

Wght_z, U_z, b_z: Weight matrices and bias vector for update gate

Wght_r, U_r, b_r: Weight matrices and bias vector for reset gate

Wght_h, U_h, b_h: Weight matrices and bias vector for candidate activation

# Outputs:

hid_next: Hidden state for current time step

# Algorithm:

# Compute update gate

z = sigmoid(Wght_z * x + U_z * hid_prev + b_z)

# Compute reset gate

r = sigmoid(Wght_r * x + U_r * hid_prev + b_r)

# Compute candidate activation

h_candidate = tanh(Wght_h * x + U_h * (r * hid_prev) + b_h)

# Compute new hidden state

hid_next = (1 - z) * hid_prev + z * h_candidate

In this pseudocode, x is the input at the current time step, hid_prev is the hidden state from the previous time step, and hid_next is the hidden state for the current time step. The weight matrices (Wght_z, U_z, Wght_r, U_r, Wght_h, and U_h) and bias vectors (b_z, b_r, and b_h) are learned parameters that control the flow of information through the GRU cell. The products of a weight matrix with x or hid_prev are matrix-vector products, whereas r * hid_prev and the two products in the final line are element-wise. The sigmoid and tanh functions apply non-linear transformations to the inputs and hidden states.
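The pseudocode above can be turned into a runnable NumPy sketch. The equations follow the pseudocode line by line; the parameter packing, the helper init_params, the random initialisation, and the toy one-hot inputs are assumptions added only so that the example executes.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def gru_cell(x, hid_prev, params):
    """One GRU step, mirroring the pseudocode (@ is a matrix-vector product)."""
    Wz, Uz, bz, Wr, Ur, br, Wh, Uh, bh = params
    z = sigmoid(Wz @ x + Uz @ hid_prev + bz)                   # update gate
    r = sigmoid(Wr @ x + Ur @ hid_prev + br)                   # reset gate
    h_candidate = np.tanh(Wh @ x + Uh @ (r * hid_prev) + bh)   # candidate
    return (1.0 - z) * hid_prev + z * h_candidate              # new hidden state


def init_params(n_in, n_hidden, seed=0):
    """Small random weights; an illustrative initialisation, not the paper's."""
    rng = np.random.default_rng(seed)
    params = []
    for _ in range(3):  # update gate, reset gate, candidate activation
        params += [rng.normal(0.0, 0.1, (n_hidden, n_in)),
                   rng.normal(0.0, 0.1, (n_hidden, n_hidden)),
                   np.zeros(n_hidden)]
    return params


params = init_params(n_in=4, n_hidden=3)
h = np.zeros(3)
for x in np.eye(4):  # four one-hot vectors as a toy input sequence
    h = gru_cell(x, h, params)
```

Because the new hidden state is a gated blend of the previous state and a tanh output, every component of h stays strictly between -1 and 1 when the initial state is zero.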

B. Recurrent Neural Network (RNN)

Recurrent Neural Networks (RNNs) are a type of neural network that can be used for handwritten character recognition. RNNs are designed to process sequential data, where each input in the sequence depends on the previous inputs in the sequence. Handwritten characters can be represented as a sequence of pixel values, making them a good fit for RNNs. To use an RNN for handwritten character recognition, the first step is to preprocess the input images. This typically involves converting the images to grayscale and scaling them to a fixed size. The resulting images can then be treated as a sequence of pixel values and fed into the RNN. During training, the RNN learns to predict the correct label for each image by processing the image as a sequence of pixel values and adjusting its internal state at each time step. The network can be trained using backpropagation and a variety of optimization algorithms, such as stochastic gradient descent or Adam optimization.
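The preprocessing described above (grayscale conversion, scaling to a fixed size, and treating the result as a sequence) might look as follows. This is a sketch under stated assumptions: the target size of 32 by 128, the nearest-neighbour resize, the luminance weights, and the choice of one pixel column per time step are illustrative and are not specified in the paper.

```python
import numpy as np


def image_to_sequence(rgb, height=32, width=128):
    """Convert an RGB image array to a (time_steps, features) sequence."""
    # Grayscale via standard luminance weights (an assumed choice).
    gray = rgb[..., :3].astype(np.float64) @ np.array([0.299, 0.587, 0.114])
    # Nearest-neighbour resize to a fixed size using index sampling.
    rows = np.arange(height) * gray.shape[0] // height
    cols = np.arange(width) * gray.shape[1] // width
    resized = gray[rows][:, cols]
    # Scale to [0, 1] and transpose: each pixel column becomes one time step.
    return (resized / 255.0).T


blank_page = np.full((64, 256, 3), 255, dtype=np.uint8)  # all-white test image
sequence = image_to_sequence(blank_page)
```

Each row of the returned array can then be fed to the recurrent network as the input x for one time step.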

One popular type of RNN for handwritten character recognition is the Gated Recurrent Unit. Once trained, the RNN can recognize handwritten characters: an image of a character is fed in, and the output of the network is decoded to determine the predicted label. This procedure has numerous applications, such as automated handwriting recognition, optical character recognition, and the recognition of handwritten digits in cheque processing. Overall, RNNs are a powerful tool for processing sequential data, which makes them a suitable choice for handwritten character recognition tasks where the input is a sequence of pixels in an image.

VI. MODULES USED

The proposed system consists of the following modules: Image Acquisition, Pre-processing, Image Segmentation, Feature Extraction, Model Generation (Post-processing), and Image Classification and Recognition.

[1] Image acquisition is the initial phase of the recognition process. It refers to retrieving an image from a source, which depends on the type of character recognition method used. Both offline and online character recognition methods take their input in the form of an image. For handwritten or printed documents, the characters are written on a sheet of paper and then scanned to obtain an image; this conversion of a document into an electronic format is called digitization. Depending on the field of work, the initial setup and long-term maintenance required to capture images are crucial factors in image acquisition.

[2] Pre-processing is an essential step in preparing data for later analysis. It applies several techniques to the input image, such as noise reduction, normalization, and smoothing. Image pre-processing operates at the lowest level of image abstraction; these operations do not increase the information in the image and may decrease it. The purpose of pre-processing is to improve the image data by suppressing unwanted distortions and enhancing the visual attributes that matter for the subsequent processing and analysis, so that the image can be analyzed more accurately.
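As an illustration of the smoothing and normalization mentioned above, the following sketch applies a 3x3 mean filter and then min-max normalization. The specific filter, the edge padding, and the function name preprocess are assumptions for this example; the paper does not state which techniques were used.

```python
import numpy as np


def preprocess(gray):
    """Smooth a grayscale image with a 3x3 mean filter, then scale to [0, 1]."""
    img = gray.astype(np.float64)
    padded = np.pad(img, 1, mode="edge")  # repeat border pixels at the edges
    h, w = img.shape
    # Average the nine shifted copies of the image (3x3 neighbourhood mean).
    smoothed = sum(padded[dy:dy + h, dx:dx + w]
                   for dy in range(3) for dx in range(3)) / 9.0
    lo, hi = smoothed.min(), smoothed.max()
    if hi == lo:                          # constant image: nothing to normalize
        return np.zeros_like(smoothed)
    return (smoothed - lo) / (hi - lo)    # min-max normalization


noisy = np.zeros((5, 5))
noisy[2, 2] = 90.0                        # a single bright speck
cleaned = preprocess(noisy)
```

The mean filter spreads the isolated speck over its neighbourhood, which shows why such operations can reduce, but never add, image information.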

[3] Segmentation is a crucial step in any recognition method, as it separates lines, words, or characters from a handwritten document. Image segmentation partitions a digital image into subgroups called image segments, which simplifies the image and allows each segment to be processed or analyzed separately. Technically speaking, segmentation entails labelling pixels in order to distinguish between objects, persons, or other significant elements in the image. Image segmentation is frequently used for object detection: the object detector operates on a bounding box that has already been defined by the segmentation algorithm, which improves accuracy and reduces inference time. Image segmentation is a key component of computer vision methods and technologies.
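To make the idea of separating lines concrete, the sketch below uses a horizontal projection profile, a classical technique chosen here only for illustration; it is not the RNN-based segmentation of the proposed system. It assumes a binarized image in which 1 marks ink and 0 marks background, and the function name segment_lines is hypothetical.

```python
def segment_lines(binary):
    """Return (start_row, end_row) pairs, end exclusive, one per text line."""
    lines, start = [], None
    for y, row in enumerate(binary):
        has_ink = any(row)
        if has_ink and start is None:
            start = y                      # first inked row of a new line
        elif not has_ink and start is not None:
            lines.append((start, y))       # a blank row closes the line
            start = None
    if start is not None:                  # line that runs to the last row
        lines.append((start, len(binary)))
    return lines


page = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [1, 1, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 1, 1],
]
line_spans = segment_lines(page)
```

The same scan applied to the columns of a single line would give candidate word or character boundaries, although joined cursive strokes defeat this simple approach.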

[4] Feature extraction is the process of obtaining important data or information from an image, independent of its format. This stage is crucial for extracting the most relevant information from the text image, which aids character recognition. Selecting a stable and representative set of features is essential in designing a pattern recognition system. Manual feature extraction includes identifying and characterizing the features that are pertinent to a particular problem and devising a method for extracting them. Deciding which features are likely to be valuable often requires a solid understanding of the context or domain.

[5] The post-processing stage outputs the recognized characters as structured text, which is achieved using OCR. However, recognition errors are common in the system's results because of character classification and segmentation problems. To correct these errors, character recognition (CR) systems employ contextual post-processing techniques. The statistical approach is commonly used for this purpose because of its advantages in computation time and memory use. In addition, the preprocessed features are used to train the GRU algorithm. During training, the algorithm learns to recognize patterns in the input data and to predict the corresponding output label. The trained model is then validated on a separate validation set to evaluate its performance. Finally, the model is tested on a test set to evaluate its accuracy in recognizing new handwritten characters.
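A simplified form of contextual post-processing is to replace each recognized word with its closest match in a lexicon. The sketch below uses difflib.get_close_matches from the Python standard library as a stand-in for the statistical approach mentioned above; the lexicon, the 0.8 similarity cutoff, and the function names are assumptions made for this example.

```python
import difflib


def correct_word(word, lexicon, cutoff=0.8):
    """Return the closest lexicon entry, or the word itself if none is close."""
    matches = difflib.get_close_matches(word, lexicon, n=1, cutoff=cutoff)
    return matches[0] if matches else word


def correct_line(line, lexicon, cutoff=0.8):
    """Apply word-level correction to every word of a recognized text line."""
    return " ".join(correct_word(w, lexicon, cutoff) for w in line.split())


lexicon = ["handwritten", "text", "recognition", "line"]  # hypothetical lexicon
fixed = correct_line("handwriten text recogniton", lexicon)
```

Words with no sufficiently similar lexicon entry are left unchanged, so names and rare words are not forced onto the wrong entry.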

[6] The classification stage is the decision-making component of the recognition system, responsible for identifying each pattern and assigning it to the appropriate character class. Before the system can recognize handwritten input images, it is trained on many input images so that it can recognize handwritten characters in different styles.

In the time complexity of GRU, N refers to the number of input features, D refers to the number of neurons in the hidden layer, and T refers to the length of the input sequence (number of timesteps).

Based on these evaluation parameters, we can see that GRU models have lower time complexity compared to CNN and SVM models, but higher time complexity compared to MLP models. In terms of computational time, GRU models generally have shorter training and inference times compared to Random Forest models.
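The role of N, D, and T can be made concrete by counting parameters and multiplications directly from the GRU pseudocode in Section V-A. This is a rough count derived from that pseudocode, not a figure reported in the paper; it ignores element-wise operations and activation functions, and the function name gru_cost is hypothetical. The result grows as T * D * (N + D).

```python
def gru_cost(n_features, n_hidden, n_steps):
    """Count learned parameters and multiplications for a single-layer GRU.

    n_features is N, n_hidden is D, n_steps is T.
    """
    # Each of the three blocks (update, reset, candidate) has W (D x N),
    # U (D x D) and a bias vector of length D.
    per_block = n_hidden * n_features + n_hidden * n_hidden + n_hidden
    n_params = 3 * per_block
    # Matrix-vector products dominate the work at each time step.
    mults_per_step = 3 * (n_hidden * n_features + n_hidden * n_hidden)
    return n_params, mults_per_step * n_steps


n_params, n_mults = gru_cost(n_features=4, n_hidden=3, n_steps=10)
```

Doubling the sequence length T doubles the multiplication count, whereas doubling the hidden size D roughly quadruples the recurrent term.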

Overall, GRU models outperform other algorithms in Handwritten Character Recognition in terms of F1 Score, accuracy, and Word Error Rate.
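Word Error Rate, one of the metrics named above, is commonly computed as the word-level edit distance between the reference and the recognized text, divided by the number of reference words. The sketch below follows that common definition; the paper does not give its exact formula, so the details here are assumptions.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    prev = list(range(len(hyp) + 1))       # distances for an empty reference
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(prev[j] + 1,               # deletion
                            curr[j - 1] + 1,           # insertion
                            prev[j - 1] + (r != h)))   # substitution or match
        prev = curr
    return prev[-1] / len(ref)


wer = word_error_rate("the quick brown fox", "the quack brown")
```

In this example one word is substituted and one is missing, so two errors over four reference words give a rate of 0.5.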

Conclusion

Handwritten character recognition has grown in popularity in recent years, particularly for creating paperless environments through the digitization and processing of existing paper documents. To this end, a number of methods for reading handwriting have been put forward. Among these, the RNN with a Gated Recurrent Unit network in a sequence-to-sequence approach has become a popular neural network architecture for character recognition. To assess its efficacy, we experimented with this approach on the IAM dataset and compared the results with those of other models. Our findings show that the proposed model achieved the highest letter accuracy and word accuracy, making it the best-performing model for recognizing handwritten characters. In conclusion, the use of RNNs with GRUs in a sequence-to-sequence approach has the potential to improve the precision and effectiveness of automatic text recognition systems.

References

[1] Agrawal, P., Chaudhary, D., Madaan, V. et al. Automated bank cheque verification using image processing and deep learning methods. Multimed Tools Appl 80, 5319–5350, 2021.

[2] A Nikitha, Geetha J, D.S. Jayalakshmi. Handwritten Recognition Using Deep Learning, M. S. Ramaiah Institute of Technology, 12 Nov 2020.

[3] Arshad Iqbal, Aasim Zafar et al. Offline Handwritten Quranic Text Recognition: A Research Perspective, Aligarh Muslim University, 01 Feb 2021.

[4] Ashlin Deepa, R.N., Rajeswara Rao, R. A novel nearest interest point classifier for offline Tamil handwritten character recognition. Pattern Anal Applic 23, 199–212, 2020.

[5] Chatterjee, A., Ghosal, S.K. & Sarkar, R. LSB based steganography with OCR: an intelligent amalgamation. Multimed Tools Appl 79, 11747–11765, 2020.

[6] Dey, R., Balabantaray, R.C. & Mohanty, S. Sliding window based off-line handwritten text recognition using edit distance. Multimed Tools Appl 81, 22761–22788, 2022.

[7] Drobac, S., Lindén, K. Optical character recognition with neural networks and postcorrection with finite state methods. IJDAR 23, 279–295, 2020.

[8] Geetha, R., Thilagam, T. & Padmavathy, T. Effective offline handwritten text recognition model based on a sequence-to-sequence approach with CNN–RNN networks. Neural Comput & Applic 33, 10923–10934, 2021.

[9] H. Oda, Akihito Kitadai, M. Onuma et al. A search method for on-line handwritten text employing writing-box-free handwriting recognition, University of Tokyo, 26 Oct 2020.

[10] Kiran, P., Parameshachari, B.D., Yashwanth, J. et al. Offline Signature Recognition Using Image Processing Techniques and Back Propagation Neuron Network System. SN COMPUT. SCI. 2, 196, 2021.

[11] Kunnan Wu, Huiyuan Fu, Wensheng Li et al. Handwritten Text-line Detection and Recognition in Answer Sheet Composition with Few Labeled Data, Beijing University of Posts and Telecommunications, 16 Oct 2020.

[12] Lincy, R.B., Gayathri, R. Optimally configured convolutional neural network for Tamil Handwritten Character Recognition by improved lion optimization model. Multimed Tools Appl 80, 5917–5943, 2021.

[13] Mahmud, H., Islam, R. & Hasan, M.K. On-air English Capital Alphabet (ECA) recognition using depth information. Vis Comput 38, 1015–1025, 2022.

[14] Raj, M.A.R., Abirami, S. Junction Point Elimination based Tamil Handwritten Character Recognition: An Experimental Analysis. J. Syst. Sci. Syst. Eng. 29, 100–123, 2020.

[15] Sinwar, D., Dhaka, V.S., Pradhan, N. et al. Offline script recognition from handwritten and printed multilingual documents: a survey. IJDAR 24, 97–121, 2021.

[16] Wu, JW., Yin, F., Zhang, YM. et al. Handwritten Mathematical Expression Recognition via Paired Adversarial Learning. Int J Comput Vis 128, 2386–2401, 2020.

[17] Yaoxiong Huang, Zecheng Xie, Lianwen Jin et al. Adversarial Feature Enhancing Network for End-to-End Handwritten Paragraph Recognition, South China University of Technology, 01 Sep 2020.

[18] Yichao Wu, Fei Yin, Zhuo Chen et al. Handwritten Chinese Text Recognition Using Separable Multi-Dimensional Recurrent Neural Network, Chinese Academy of Sciences, 01 Nov 2020.

[19] https://paperswithcode.com/dataset/iam

[20] https://www.kaggle.com/datasets/naderabdalghani/iam-handwritten-forms-dataset

Copyright

Copyright © 2023 Dr. S. Kanmani, B. Sujitha, K. Subalakshmi, S. Umamaheswari, Karimreddy Punya Sai Teja Reddy. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET50184

Publish Date : 2023-04-07

ISSN : 2321-9653

Publisher Name : IJRASET
