Ijraset Journal For Research in Applied Science and Engineering Technology

- Home / Ijraset

- On This Page

- Abstract

- Introduction

- Conclusion

- References

- Copyright

Online Network Protection Firmware for Malware Identification Utilizing Transformer Learning

Authors: Geetha M, Ramkumar R, Sarankumar C, Velmurugan K, Boopathi N

DOI Link: https://doi.org/10.22214/ijraset.2024.58280

Certificate: View Certificate

Abstract

Malware ID expects a critical part in network security with the expansion in malware improvement. What more, kinds of progress in cutting edge assaults. Noxious programming applications, or malware, are the principal wellspring of different security issues. For different reasons, including the taking of state of the art developments and insightful properties, regulative exhibitions of retaliation, and the modification of sensitive information, to give some examples, these pernicious applications plan to perform unapproved exercises on the host machines to assist their makers. More valuable assistance systems are required because of the quick expansion of noxious programming on the web and their self changing skills, as in polymorphic and remarkable malware. This task proposes to support the MalFree Sandbox with stacked bidirectional long transient memory (Stacked BiLSTM) and generative prepared transformer based (GPT2) critical learning language models for recognizing pernicious code isolated. The proposed computations, specifically the bidirectional long transient memory (BiLSTM) model and the generative prepared transformer 2 (GPT-2) method, employ gathering rules derived from Minimal Executable (PE) Records static examination results to identify harmful code pieces. To comprehend malwares through MalFree Sandbox, care should be taken to sandbox the malwares in a climate that considers an encapsulation and exhaustive evaluation while in addition keeping on propelling spread from being gifted.

Introduction

I. INTRODUCTION

Malware (destructive composing PC programs) is a massive gamble to PC designs, cells, and affiliations all around the planet. Malware can incur harm, including taking delicate information, holding onto structures, and upsetting essential associations. There is a growing demand for improved methods because traditional mark-based malware discovery frameworks are unable to distinguish between new and advanced malware variants. Malware code pieces, as a rule, to overlook a framework then again contraption's security strategies by executing themselves on the design. Aggressors could exploit blemishes in PC structures to take delicate information, spy on the compromised system, or assume command over the structure. Malware is often referred to as malicious "documents," but malicious code typically only affects a small portion of a document rather than the entire document. Model development for malware affirmation, as a rule, with consolidate, not completely firmly established by one or the other static or dynamic evaluations, and every so often crossbreed assessment. The solid evaluation adopts a gander at the strategy to acting of PE (Supportive Executable) reports upon execution, while the static assessment processes the substance of the PE records without execution. The DL alludes to setting up a potentially complicated learning system tended to by a solitary model, a Profound Cerebrum Association (DNN), and is the finished learning approach. The organization tends to the whole objective structure via mechanizing feature extraction totally without preprocessing. Utilizing the open-source disassembler objdump, we separate gathering codes in this task. This contraption makes movements as documents or sentences. Those information are then utilized for model turn of events, considering that the get-together code gives accurate data to acquiring fundamental coding plans. For this, we utilize the disassembler yield as information Worldwide Journal of Investigation Dispersion and Studies, Vol 4, no 6, pp 2686-2692 June 2023 2687 information to foster a language model helped with word implanting in this way to dealing with conventional language. Then, by using this language model, we plan to see whether an executable record is harmful or harmless. Basically, we endeavor outrageous area on bunch directions' executable archives. Computer-based intelligence, a subset of man-made intellectual prowess, includes significant learning. It connects with us to kill data from the layers present in its planning. It is utilized in Picture Attestation, Intimidation Disclosure, News Appraisal, Stock Evaluation, Self-driving vehicles, and Clinical advantages like unsafe advancement picture appraisal, and so on. By offering more information into the affiliation, the layers get organized well as a rule.

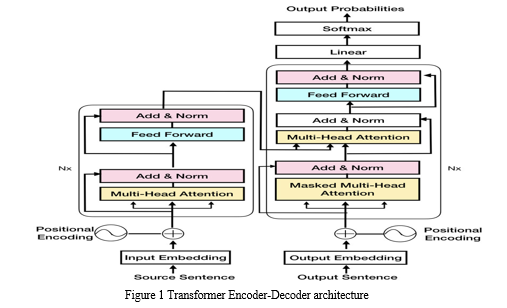

II. TRANSFORMER

Thus, to tackle the constraints of both RNN and encoder-decoder models, the creators of Transformers [8] have proposed an answer. They depend on the seq2seq encoder-decoder by supplanting RNN with consideration mechanisms.2APREPRINT - Mama RC H 8, 2021The consideration instrument permits the Transformers to have an extremely long haul memory. A Transformer model has the ability to "attend" or "focus" on all of the tokens that have previously been produced. The consideration system permits the decoder to go back over the whole sentence and specifically separate the data it needs during interpreting. Consideration gives the decoder access to every one of the secret conditions of the encoder. We can't just give the decoder a whole sequence; instead, we need to give it some kind of synthesis vector because the decoder still needs to make a single prediction for the next word. So it asks the decoder to pick which stowed away states to utilize and which to overlook by weighting the secret states. The decoder then receives a weighted amount of secret states to use to foresee the following word. In this part, we de?ne the setting of the Transformer-based approach by introducing the vast majority of the methodologies related to the designs of the methodologies. The Transformer in NLP is another design that targets tackling sequence to grouping assignments while effectively overseeing long-range conditions. The Transformer has been proposed in [8]. A Transformer is a design that evades repeat and depends altogether on a consideration component to draw global dependencies among info and result. Before Transformers, predominant succession transduction models were based on complex repetitive or convolutional brain networks that incorporate an encoder and a decoder. Transformers likewise utilize an encoder and a decoder, yet the disposal of repeat for consideration mechanisms allows for a lot more prominent parallelization than strategies like RNNs and CNNs. The change is unquestionably a significant advancement over the RNN-based seq2seq models. However, it has its own restrictions. Consideration can only be paid to message strings of ?xed length. The text should be partitioned into various portions or pieces before being introduced into the framework as information and this causes setting fracture. For instance, BERT [4], another linguistic presentation model from Google computer based intelligence, utilizes pre-preparing and ?ne-tuning to make cutting edge models for a wide range of undertakings. These undertakings incorporate inquiry responding to frameworks, opinion investigation, and phonetic induction. Transfer learning has been utilized in NLP utilizing pre trained language models that have extraordinary structures.

A. Proposed Framework

The proposed framework, MalFree, is an association prosperity on the web firmware that uses progressed huge learning strategies, unequivocally stacked bidirectional long passing memory (Stacked BiLSTM) and generative coordinated transformer based (GPT-2) language models, to see and thwart malware assaults. A stacked bidirectional long transient memory (Stacked BiLSTM) and generative prepared transformer based (GPT-2) significant learning language model for detecting noxious code are explicitly proposed in the estimates.

Developed language models by removing headings from the. Text sections of both harmful and harmless Adaptable Executable (PE) records. To learn and break down the models, the BiLSTM model cycles a gathering of data parts across time. In contrast, the GPT-2 model, which is based on transformers, lets multiple information components interact simultaneously by displaying long conditions between input grouping components with equal arrangement handling. The accompanying step is to isolate equivalent credits, like syntactic and semantic characteristics, from the Global Diary of Exploration Distribution and Audits, Vol. 4, No. 6, pp. 2686-2692 June 2023 2690 social event rules by utilizing the point of view of NLP showing by DL. This models were intended to truly learn and eliminate the elements and properties of low level enrolling create and sort out the restriction of reports.

III. STACKED BILSTM

The Stacked BiLSTM part of MalFree is answerable for breaking down the way of behaving of the organization traffic to recognize designs that are characteristic of malware action. The model is prepared on an enormous dataset of malware tests to figure out how to perceive normal malware ways of behaving like order and-control correspondence, information exfiltration, and organization surveillance. 3.2 GPT-2 The GPT-2 part of MalFree is liable for producing alarms and notices in light of the result of the Stacked BiLSTM model. At the point when the model recognizes dubious conduct in the organization traffic, it sends a caution to the GPT-2 model, which creates a characteristic language ready message that can be shown to the client or shipped off a security tasks focus (SOC). The framework works continuously and is intended to be profoundly versatile and adaptable, working across various stages and conditions. It utilizes a mix of directed and unaided learning ways to deal with distinguish both known and obscure malware dangers. The proposed framework functions as follows:

- Data Arrangement: MalFree gathers information from different sources, including document frameworks, network traffic, and design logs.

- Feature Expulsion: MalFree disposes of material parts from the gathered information utilizing procedures like static and dynamic assessment.

- Stacked BiLSTM: MalFree utilizes a Stacked BiLSTM mind relationship to isolate the disposed of parts and receive malware assaults. Because it is based on a large dataset of known malware tests, the Stacked BiLSTM model is able to precisely identify new and previously unknown malware threats. GPT-2: MalFree besides utilizes a GPT-2 language model to convey customary language portrayals of recognized malware chances, making limitless mind boggling for online protection specialists to comprehend and answer these dangers.

- Avoidance and Reaction: By preventing dubious records and association traffic, MalFree actively prevents malware attacks. It likewise produces admonitions and notification to make online security specialists aware of possible risks, permitting them to act rapidly to forestall further mischief.

IV. SYSTEM TESTING

Testing is a fundamental piece of the improvement joint effort for any association security firmware, including MalFree. Complete testing system is fundamental to guarantee that MalFree is productive in seeing and ruining malware assaults. By planning these different testing techniques, any issues or deficiencies can be seen and would in general before game-plan, guaranteeing that MalFree gives strong and solid association security assurance.

A. Test and Result

Investigate ID: MF-TC-001

Dissect Depiction: Test the comfort and execution of MalFree's Stacked BiLSTM and GPT-2 models for seeing and upsetting malware.

B. Test Steps

- Ship off MalFree and confirm that it is running and utilitarian.

- Begin a replicated malware assault by running a known malware report on a test framework.

- Make sure that MalFree's Stacked BiLSTM and GPT-2 models see the malware thinking about its engraving, direct, and different parts.

- Affirm that MalFree produces a caution or notice to display the presence of the malware.

- Check that MalFree makes the suitable move to forestall the malware from working, for example, detaching the pernicious record or forestalling organization traffic.

- Make sure that MalFree logs the divulgence and assumption for the malware, including the information and eventual outcome of the Stacked BiLSTM and GPT-2models.

- Measure the time taken by MalFree's Stacked BiLSTM and GPT-2 models to perceive and frustrate the malware.

V. EXPLORATORY RESULT

- MalFree's farewell is effective and useful.

Overall Journal of Investigation Appropriation and Reviews, Vol 4, no 6, pp 2686-2692 June 2023 2691

2. The imitated malware assault is started effectively.

3. MalFree's Stacked BiLSTM and GPT-2 models recognize the malware thinking about its engraving, lead, and different parts.

4. MalFree produces an admonition or notice to show the malware's presence.

5. MalFree makes a fitting move to foil the malware from executing.

6. MalFree logs the region and equilibrium of the malware, including the information and result of the Stacked BiLSTM and GPT-2 models.

7. MalFree's Stacked BiLSTM and GPT-2 models see and impede the malware inside the average time interval.

4.3 Experiments The accompanying experiments were raced to survey MalFree's presentation:

a. Trial 1: Malware ID Portrayal: MalFree is gone after for its ability to recognize malware persistently.

Result: MalFree had the choice to recognize the malevolent executable record and hinder its execution, in like manner thwarting any wickedness to the structure.

b. Contextual analysis 2: Deceiving Positive Recognizable proof Portrayal: MalFree is gone after for its ability to perceive malicious and non-malicious records.

Result: MalFree recognized no bogus up-sides and had the option to recognize malignant and non-malevolent documents.

c. Third Experiment: Resource Use Portrayal: MalFree is tested for how it uses assets.

Result: MalFree caused no huge effect on the framework execution and had the option to work proficiently even while various resource intensive applications were being executed.

Conclusion

The MalFree network security firmware for malware conspicuous confirmation and assumption utilizing transformer learning with stacked bidirectional long transient memory (Stacked BiLSTM) and generative pre prepared transformer-based (GPT-2) models was endeavored and viewed as altogether persuading, exact, and fit. MalFree had the decision to recognize malware progressively, see noxious and non-hurtful records, and capacity proficiently without inviting on any enormous effect on framework execution. Taking into account the results of the tests, MalFree is enthusiastically proposed for use in any framework requiring basic level association security attestation.

References

[1] Caviglione, L.; Choras, M.; Crown, I.; Janicki, A.; Mazurczyk, W.; Pawlicki, M.; Wasielewska, K. Tight Weapons contest: Outline of Current Malware Dangers and Patterns in Their Discovery. 9, 5371–5396, IEEE Access 2021. [ CrossRef] [2] Morgan, S. Cybercrime Harms $6 Trillion by 2021. 2017. Accessible on the web: (accessed on July 15, 2021) https://cybersecurityventures.com/hackerpocalypsecybercrimereport-2016/ [3] Cannarile, A.; Dentamaro, V.; Galantucci, S.; Iannacone, A.; Impedovo, D.; Pirlo, G. Looking at Profound Learning andShallow Learning Methods for Programming interface Calls Malware Expectation: A Review. Appl. Sci. 2022, 12, 1645. [ CrossRef] [4] Villalba, L.J.G.; Orozco, A.L.S.; Vivar, A.L.; Vega, E.A.A.; Kim, T.- H. Emancipate product Programmed Information Obtaining Apparatus. IEEE Access 2018, [5] Anusha Damodaran, Fabio Di Troia, Corrado Aaron Visaggio, Thomas H Austin, and Imprint Stamp. A comparisonof static, dynamic, and half breed investigation for malware identification. [6] Ya Pan, Xiuting Ge, Chunrong Fang, and Yong Fan, \"Journal of Computer Virology and Hacking Techniques,\" 13(1), 2017. A precise writing survey of android malware detectionusing static investigation. IEEE Access, 8:116363-116379, 2020.8 APREPRINT - Mama RC H 8, 2021 [7] Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. Bert: Pre-preparing of profound bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805, 2018. [8] Zhilin Yang, Zihang Dai, Yiming Yang, Jaime Carbonell, Russ R Salakhutdinov, and Quoc V Le. Xlnet: Summed up autoregressive pretraining for language getting it. In Advances in brain data processingsystems, pages 5753-5763, 2019. [9] Yi Tay, Mostafa Dehghani, Dara Bahri, and Donald Metzler. Ef?cient transformers: An overview. arXiv preprintarXiv:2009.06732, 2020. [10] Salman Khan, Muzammal Naseer, Munawar Hayat, Syed Waqas Zamir, Fahad Shahbaz Khan, and Mubarak Shah. Transformers in vision: An overview. Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, ukasz Kaiser, and Illia Polosukhin are the authors of the arXiv preprint that was published in 2021. [11] Consideration is all you really want. In Advances in brain data handling frameworks, pages5998-6008, 2017

Copyright

Copyright © 2024 Geetha M, Ramkumar R, Sarankumar C, Velmurugan K, Boopathi N . This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Download Paper

Paper Id : IJRASET58280

Publish Date : 2024-02-02

ISSN : 2321-9653

Publisher Name : IJRASET

DOI Link : Click Here

Submit Paper Online

Submit Paper Online