Ijraset Journal For Research in Applied Science and Engineering Technology

Pattern Recognition in Embedded Systems for Event Occurrences

Authors: Abhishek Urunkar, Sudhanshu Khapre, Mayuri Kasabe

DOI Link: https://doi.org/10.22214/ijraset.2024.58523

Abstract

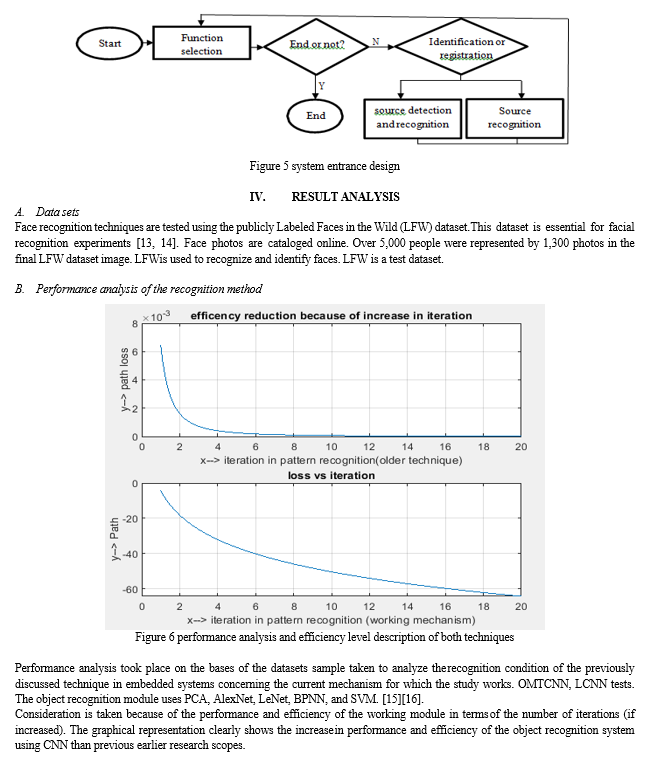

Because pattern recognition (PR) typically involves interaction with humans and other complex real-world processes, embedded systems are ideal candidates for hosting it. A typical PR application, often considered the more perceptual branch of AI, responds to external events that the system detects through physical sensors or input devices by activating actuators or displaying relevant information. To explore embedded recognition systems and apply deep learning to face detection, two deep face detection methods based on Convolutional Neural Networks (CNNs) are presented, and the embedded face recognition system is analyzed with the deep learning algorithm applied to both face detection and face identification. The training accuracy of OMTCNN is 85.14%, higher than that of the unimproved algorithm. Higher recognition accuracy and the computation acceleration module together improve face detection and identification performance on the embedded system, showing that embedded deep learning recognition is useful.

I. INTRODUCTION

A. Embedded System

An embedded system is a computer system, with a processor, memory, and input/output (I/O) devices, that is integrated into a larger mechanical or electronic system [1][2]. It is embedded as part of a complete device that often includes electrical or electronic hardware and mechanical components. Because embedded systems often control the physical operation of a machine, they frequently require real-time computing. Embedded systems control many devices in common use today [3]. In 2009, 98% of microprocessors were used in embedded systems.

Modern embedded systems are typically based on microcontrollers, which are microprocessors with integrated memory and peripheral interfaces. Ordinary microprocessors, which use separate external chips for memory and peripheral interface circuitry, are also common, particularly in more complex systems.

Because an embedded system is dedicated to specific tasks, design engineers can optimize it to reduce the size and cost of the product while increasing its reliability and performance. Some embedded systems are also mass-produced, which lets manufacturers benefit from economies of scale.

Embedded systems range in complexity from low-complexity designs built around a single microcontroller chip to systems with multiple units, peripherals, and networks, housed in equipment racks or distributed across large geographical areas and connected by long-distance communication lines.

B. Pattern Recognition in Embedded Systems

Pattern recognition is a mature field, and algorithms for recognizing patterns in data have been studied extensively. However, many embedded system designers lack expertise in pattern recognition, which makes it difficult for them to integrate these algorithms into their designs. This study focuses on embedded systems and presents a framework for pattern recognition developed specifically for them. Through an interactive environment, a tutorial, and reference code, the framework enables developers to design a k-nearest neighbors (k-NN) classifier, a robust model in pattern recognition. To demonstrate the framework's usefulness, sixty-six students took part in an experiment comparing the quality of their code and their development speed with and without the framework. The results show that the framework notably improves the overall development experience.
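To make the k-NN idea concrete, here is a minimal sketch of a k-nearest neighbors classifier in Python. It is not the framework's reference code, which the text does not reproduce; the function name `knn_predict`, the use of squared Euclidean distance (which skips the square root, a common saving on small processors), and the toy data are all assumptions for illustration.

```python
from collections import Counter
from typing import Hashable, Sequence


def knn_predict(train_x: Sequence[Sequence[float]],
                train_y: Sequence[Hashable],
                query: Sequence[float],
                k: int = 3) -> Hashable:
    """Classify `query` by majority vote among its k nearest training samples."""
    if not 0 < k <= len(train_x):
        raise ValueError("k must be between 1 and the number of training samples")
    # Squared Euclidean distance: same ordering as Euclidean, no sqrt needed.
    distances = []
    for sample, label in zip(train_x, train_y):
        d = sum((a - b) ** 2 for a, b in zip(sample, query))
        distances.append((d, label))
    distances.sort(key=lambda pair: pair[0])
    # Count labels of the k closest samples; Counter.most_common breaks ties
    # in favour of the label seen first, i.e. the nearer one.
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    xs = [(0, 0), (0, 1), (1, 0), (5, 5), (5, 6), (6, 5)]
    ys = ["idle", "idle", "idle", "active", "active", "active"]
    print(knn_predict(xs, ys, (1, 1)))  # nearest three samples are all "idle"
```

On a microcontroller the same loop would typically be written in C over fixed-size arrays; the logic is unchanged.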

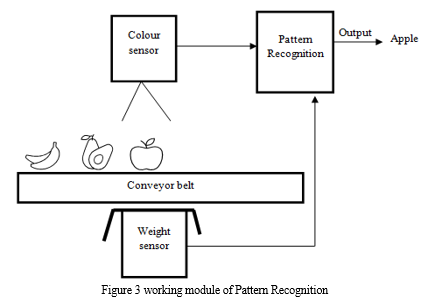

C. Working on pattern recognition

Figure 2 shows the overlap between embedded systems and mature domains. In many applications, embedded systems overlap with control systems, signal processing, and pattern recognition (Figure 1). In such overlapping applications, developers may need to consult specialists from different fields to produce a product [2, 3]. Many embedded system developers lack the funds, access, or experience to engage these specialists. Control systems and signal processing experts have therefore developed reusable frameworks that solve specific embedded system development issues.

Such frameworks are popular with embedded system developers: reusable PID controllers and FIR filters, for example, let developers build embedded systems without specialist knowledge of control or signal processing.
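As an illustration of the kind of reusable building blocks meant here, the sketch below implements a discrete PID controller and a direct-form FIR filter in Python. The class names, the fixed sample period `dt`, and the example coefficients are assumptions; production embedded code would usually be C with fixed-point arithmetic, anti-windup limits, and output clamping, all omitted here.

```python
from collections import deque
from typing import Iterable, Optional


class PID:
    """Discrete PID controller with a fixed sample period dt (seconds)."""

    def __init__(self, kp: float, ki: float, kd: float, dt: float) -> None:
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0
        self.prev_error: Optional[float] = None

    def update(self, setpoint: float, measurement: float) -> float:
        error = setpoint - measurement
        self.integral += error * self.dt  # rectangular integration
        # No derivative on the first call, since there is no previous error yet.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


class FIRFilter:
    """Direct-form FIR filter: y[n] = sum_k h[k] * x[n - k]."""

    def __init__(self, coeffs: Iterable[float]) -> None:
        self.coeffs = list(coeffs)
        # Delay line holding the most recent inputs, newest first.
        self.delay = deque([0.0] * len(self.coeffs), maxlen=len(self.coeffs))

    def step(self, sample: float) -> float:
        self.delay.appendleft(sample)  # the oldest sample falls off the right end
        return sum(h * x for h, x in zip(self.coeffs, self.delay))


if __name__ == "__main__":
    moving_average = FIRFilter([0.25] * 4)  # 4-tap moving average
    print([moving_average.step(4.0) for _ in range(5)])  # ramps up to 4.0
    pid = PID(kp=2.0, ki=1.0, kd=0.0, dt=0.5)
    print(pid.update(setpoint=10.0, measurement=7.0))
```

Both blocks keep only a few numbers of state, which is what makes them easy to reuse across embedded projects.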

II. REVIEW OF LITERATURE

J. Wu et al. (2020) [3] proposed progressive tandem learning for deep spiking neural networks (SNNs), in which the layers are trained progressively, in tandem with the ANN-to-SNN conversion process. The study examines the similarities and differences between ANNs and SNNs, using the spike count to approximate the analog activation of neurons. A layer-wise learning method with a flexible training scheduler fine-tunes the network weights, and progressive tandem training accommodates constraints on weight precision and fan-in connections. The trained SNNs perform well on large-scale classification and regression tasks such as object recognition, image reconstruction, and speech separation, paving the way for wireless and embedded devices.
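The correspondence between ANN activations and SNN spike counts that this line of work relies on can be seen with a single integrate-and-fire neuron. The sketch below is not Wu et al.'s progressive tandem learning; it assumes a constant input current, a firing threshold of 1, and reset by subtraction, and shows that the spike count over T time steps approximates a clipped ReLU of the input, which is what allows a trained ANN layer to be mapped onto spiking neurons.

```python
def if_spike_count(x: float, timesteps: int, threshold: float = 1.0) -> int:
    """Count spikes of an integrate-and-fire neuron driven by constant input x."""
    membrane = 0.0
    spikes = 0
    for _ in range(timesteps):
        membrane += x  # integrate the input current
        # At most one spike per step, so the firing rate saturates at 1.
        if membrane >= threshold:
            spikes += 1
            membrane -= threshold  # reset by subtraction keeps the residual charge
    return spikes


def clipped_relu(x: float, threshold: float = 1.0) -> float:
    """ANN activation that the firing rate spikes / timesteps approximates."""
    return min(max(x / threshold, 0.0), 1.0)


if __name__ == "__main__":
    T = 100
    for x in (-0.4, 0.25, 0.5, 0.73, 1.6):
        rate = if_spike_count(x, T) / T
        print(f"x={x:5.2f}  spike rate={rate:.2f}  clipped ReLU={clipped_relu(x):.2f}")
```

The approximation error shrinks roughly as 1/T, so more time steps give a closer match at the cost of latency.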

L. M. Dang et al. (2020) [4] surveyed Human Activity Recognition (HAR), which uses information gathered from vision and embedded sensors to build context-aware applications for the Internet of Things and healthcare. Although HAR has been the subject of many evaluations and surveys, the authors argue that the underlying issues have been overlooked, and they review the current state of HAR research. The survey compares the benefits and drawbacks of HAR methods based on sensor data and on camera data. It covers the subtasks of data collection, preprocessing, feature engineering, and training, and also discusses deep learning for HAR.
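The data preparation and feature engineering steps listed above can be illustrated with a small sketch: a tri-axial accelerometer stream is split into overlapping windows, and simple statistics of the acceleration magnitude are computed per window as classifier inputs. The window length, step, and chosen features are assumptions for illustration, not taken from the survey.

```python
import math
from statistics import mean, pstdev
from typing import Dict, List, Sequence, Tuple

Sample = Tuple[float, float, float]  # (ax, ay, az), e.g. in g


def sliding_windows(samples: Sequence[Sample], size: int, step: int) -> List[Sequence[Sample]]:
    """Split a stream into fixed-size windows; consecutive windows overlap by size - step."""
    return [samples[i:i + size] for i in range(0, len(samples) - size + 1, step)]


def window_features(window: Sequence[Sample]) -> Dict[str, float]:
    """Orientation-independent statistics of the acceleration magnitude."""
    magnitudes = [math.sqrt(ax * ax + ay * ay + az * az) for ax, ay, az in window]
    return {
        "mean_mag": mean(magnitudes),
        "std_mag": pstdev(magnitudes),
        "max_mag": max(magnitudes),
    }


if __name__ == "__main__":
    still = [(0.0, 0.0, 1.0)] * 8  # device at rest: gravity only
    walking = [(0.0, 0.0, 1.0), (0.3, 0.4, 1.2)] * 4  # crude periodic motion
    for name, stream in (("still", still), ("walking", walking)):
        feats = [window_features(w) for w in sliding_windows(stream, size=4, step=2)]
        print(name, feats[0])
```

Each feature dictionary would become one training row for a classifier; deep learning approaches instead feed the raw windows to the network.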

J. Alvarez-Montoya et al. (2020) [5] noted that the accuracy of strain measurement with fiber optic sensors (FOS) has greatly improved. Although FOS have been used for in-flight Structural Health Monitoring (SHM), automatic damage detection had not been achieved across all flight phases or for all types of damage. Twenty Fiber Bragg Gratings (FBGs) were installed in the front spar of a UAV composite wing, together with a wireless remote sensing subsystem and a miniaturized data acquisition subsystem for the strain signals. Of the flights monitored with this health and usage monitoring system (HUMS), six were made with the wing in pristine condition, while the other ten simulated damage of varying severity. Detecting damage from the strain field proved practical in flight, with machine learning used to classify and rank the damage.

M. Knapik et al. (2019) [6] proposed a novel method for detecting driver fatigue from thermal images using yawn detection. The method extends active driver assistance systems so that they warn of drowsiness based on continuous observation. Because it relies on thermal imaging, it works day and night without distracting the driver. Face alignment begins with eye-corner detection, and the proposed thermal yawning model then detects yawns. An annotated image database was created for quantitative evaluation, and experiments both in the laboratory and in real cars showed the method's effectiveness.

P.-Y. Hao et al. (2019) [7] proposed real-time event embedding for point-of-interest (POI) recommendation. The technique learns latent representations of POIs from geo-tagged posts, using CNN-based text mining. A multimodal embedding model that covers location, time, and text monitors POI posts and extracts event or burst information. Combining matrix factorization with real-time POI embedding yields a more comprehensive technique. The technique was evaluated on geo-tagged tweets from New York City, and taking current events into account improved POI recommendation.
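Matrix factorization, the component that Hao et al. combine with their real-time event embeddings, can be sketched in a few lines. The example below is plain matrix factorization trained with stochastic gradient descent on (user, POI, score) triples; it does not include the paper's CNN text mining or multimodal event embedding, and the latent dimension, learning rate, regularization, and toy data are assumptions.

```python
import random
from typing import List, Sequence, Tuple

Triple = Tuple[int, int, float]  # (user index, POI index, observed score)


def predict(P: List[List[float]], Q: List[List[float]], u: int, i: int) -> float:
    """Predicted score of POI i for user u: dot product of their latent factors."""
    return sum(p * q for p, q in zip(P[u], Q[i]))


def factorize(ratings: Sequence[Triple], n_users: int, n_pois: int, k: int = 2,
              lr: float = 0.05, reg: float = 0.01, epochs: int = 500,
              seed: int = 0) -> Tuple[List[List[float]], List[List[float]]]:
    """Learn user factors P and POI factors Q so that score ~= P[u] . Q[i]."""
    rng = random.Random(seed)
    P = [[rng.gauss(0.0, 0.1) for _ in range(k)] for _ in range(n_users)]
    Q = [[rng.gauss(0.0, 0.1) for _ in range(k)] for _ in range(n_pois)]
    for _ in range(epochs):
        for u, i, r in ratings:
            err = r - predict(P, Q, u, i)
            for f in range(k):
                pu, qi = P[u][f], Q[i][f]
                # SGD step on squared error with L2 regularisation.
                P[u][f] += lr * (err * qi - reg * pu)
                Q[i][f] += lr * (err * pu - reg * qi)
    return P, Q


if __name__ == "__main__":
    data = [(0, 0, 5.0), (0, 1, 3.0), (1, 0, 4.0), (1, 2, 1.0), (2, 1, 2.0), (2, 2, 5.0)]
    P, Q = factorize(data, n_users=3, n_pois=3)
    # Score an unvisited POI (index 2) for user 0 to rank recommendations.
    print(round(predict(P, Q, 0, 2), 2))
```

In the paper's setting, the latent POI factors would additionally be informed by event embeddings learned from recent posts.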

E. Lygouras et al. (2019) [8] presented real-time human detection on an autonomous rescue Unmanned Aerial Vehicle (UAV). The embedded system uses deep learning to recognize open-water swimmers, which lets the UAV provide accurate, unsupervised assistance and extends the capabilities of first responders. The system combines computer vision techniques for precise human detection with the release of rescue apparatus. The hardware configuration and system performance are also discussed.

S. Wang et al. (2019) [9] proposed PMU-embedded convolutional neural networks for real-time grid monitoring with adaptive SEA selection, designed to give accurate measurements regardless of input noise. The main approach is to create featured scalograms and feed them into a convolutional neural network (CNN) that classifies events. The results show that the proposed system classifies power grid events accurately, paving the way for real-time, grid-scale situational awareness.
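The scalogram step can be sketched with a direct continuous wavelet transform (CWT) using a complex Morlet wavelet. This is an assumption: the paper's exact "featured scalogram" construction and the CNN that consumes it are not specified here, so the sketch only shows how a sampled grid-like signal becomes a time-frequency image, computed naively for clarity rather than speed. The function names, the Morlet parameter `w0 = 6`, and the test tone are hypothetical.

```python
import cmath
import math
from typing import List, Sequence


def morlet(t: float, w0: float = 6.0) -> complex:
    """Complex Morlet mother wavelet (without the small admissibility correction)."""
    return math.pi ** -0.25 * cmath.exp(1j * w0 * t) * math.exp(-t * t / 2.0)


def scale_for_frequency(freq_hz: float, w0: float = 6.0) -> float:
    """Approximate Morlet scale (in seconds) whose centre frequency is freq_hz."""
    return w0 / (2.0 * math.pi * freq_hz)


def scalogram(signal: Sequence[float], scales: Sequence[float], fs: float,
              w0: float = 6.0) -> List[List[float]]:
    """|CWT| magnitudes: one row per scale, one column per sample (direct sum)."""
    n = len(signal)
    rows = []
    for s in scales:
        half = int(4 * s * fs)  # Gaussian envelope is negligible beyond |t| = 4s
        row = []
        for b in range(n):
            acc = 0j
            for k in range(max(0, b - half), min(n, b + half + 1)):
                acc += signal[k] * morlet((k - b) / (fs * s), w0).conjugate()
            # Discrete approximation of (1/sqrt(s)) * integral x(t) psi*((t-b)/s) dt
            row.append(abs(acc) / (fs * math.sqrt(s)))
        rows.append(row)
    return rows


if __name__ == "__main__":
    fs = 1000.0
    x = [math.sin(2 * math.pi * 50.0 * n / fs) for n in range(200)]  # 50 Hz tone
    freqs = [25.0, 50.0, 100.0]
    image = scalogram(x, [scale_for_frequency(f) for f in freqs], fs)
    print(dict(zip(freqs, (round(row[100], 3) for row in image))))
```

The resulting 2-D array (scales by time) is the kind of image a CNN classifier would consume; a practical system would use an FFT-based CWT from a wavelet library instead of this triple loop.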

III. RESEARCH METHODOLOGY

A. Background Study

A CNN, used for image recognition and processing, is designed to handle pixel data. CNNs are a type of Artificial Intelligence (AI) model for image processing that uses deep learning to perform both generative and descriptive tasks, and they are widely used in machine vision applications such as image and video recognition, as well as in decision support systems and Natural Language Processing (NLP). When processing images, the detector first generates lower-resolution copies of the original image.

Shrinking the images in this way accelerates detection. After the image pyramid is generated, the image data are fed into the neural network model. First, a fully convolutional network (FCN), Pnet, filters the images and outputs face probabilities and candidate bounding boxes; Pnet's candidates that overlap heavily are then removed. The remaining candidate windows are rescaled and passed to Rnet for refinement. Finally, Onet receives the resized image patches, adjusts the predictions, and applies non-maximum suppression to produce the final output.
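Non-maximum suppression (NMS), which removes highly overlapping candidate boxes after each stage of the cascade, can be sketched as a greedy procedure over intersection-over-union (IoU). The box format (x1, y1, x2, y2), the 0.5 threshold, and the function names are assumptions; MTCNN implementations differ in details such as the +1 pixel area convention and the overlap measure used.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), x2 > x1, y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float], threshold: float = 0.5) -> List[int]:
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping it too much, repeat."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # A box survives only if it does not overlap any already-kept box too much.
        if all(iou(boxes[i], boxes[j]) <= threshold for j in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    candidates = [(10, 10, 60, 60), (12, 12, 62, 62), (100, 40, 150, 90)]
    probs = [0.95, 0.90, 0.80]
    print(nms(candidates, probs))  # the second box duplicates the first
```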

B. Problem Formation

Combining OMTCNN with LCNN can improve multi-core face recognition. According to the study, OMTCNN achieves higher training accuracy than MTCNN, and tests of the integrated face recognition system were successful. Face detection and identification on the embedded system improved significantly because of the gains in recognition accuracy and computation acceleration, which shows the value of deep face recognition [15].

C. MTCNN optimization mechanism

Multi-Task Cascaded Convolutional Neural Networks (MTCNN) detect faces and key points.

MTCNN uses cascaded deep neural networks to detect faces. Making decisions stage by stage filters out non-face regions early in the recognition process, so the later, more expensive network computations are spent on fewer candidates and raise prediction accuracy. This enables real-time face detection [8].

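MTCNN's face detection method resembles the Viola-Jones (V-J) cascade. It consists of three cascaded DNNs: Pnet, Rnet, and Onet. The three networks use a consistent loss function, which has three sub-parts corresponding to MTCNN's three outputs: face classification, bounding-box regression, and facial landmark localization.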
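For reference, the three-part loss in the original MTCNN formulation (Zhang et al., 2016) can be written as follows. The paper does not state whether OMTCNN changes these terms or their weights, so the weights given below are the original ones and are an assumption here. $p_i$ is the predicted face probability for sample $i$, $y_i^{det} \in \{0,1\}$ its label, $\hat{y}$ and $y$ the predicted and ground-truth box offsets or landmark coordinates, and $\beta_i^j \in \{0,1\}$ indicates whether loss $j$ applies to sample $i$ (for example, non-face samples contribute only the classification loss).

```latex
L_i^{det} = -\left( y_i^{det} \log p_i + \left(1 - y_i^{det}\right) \log\left(1 - p_i\right) \right)

L_i^{box} = \left\lVert \hat{y}_i^{box} - y_i^{box} \right\rVert_2^2, \qquad
L_i^{landmark} = \left\lVert \hat{y}_i^{landmark} - y_i^{landmark} \right\rVert_2^2

\min \sum_{i=1}^{N} \sum_{j \in \{det,\, box,\, landmark\}} \alpha_j \, \beta_i^j \, L_i^j
```

In the original work, $\alpha_{det} = 1$, $\alpha_{box} = 0.5$, and $\alpha_{landmark} = 0.5$ for Pnet and Rnet, while Onet raises $\alpha_{landmark}$ to 1 to emphasize landmark accuracy.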

The embedded facial recognition system uses an optimized MTCNN algorithm as its face detection module. Because the network has a fixed receptive field size, the input image must be processed as an image pyramid so that faces of different sizes can be detected. Generating more pyramid levels from the full-size image allows more faces to be detected, but at a higher computational cost. The optimized algorithm therefore sets the lower limit of detectable face size to 80 pixels and downscales the image to reduce the input size.
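The pyramid scales can be computed as in common MTCNN implementations: the image is first scaled by 12 / (minimum face size), because Pnet's input window is 12 × 12 pixels, and is then repeatedly shrunk by a constant factor until its shorter side falls below 12 pixels. The factor 0.709 (about 1/√2) is the value used by widely circulated MTCNN code and is an assumption here, as is the 640 × 480 frame size; the 80-pixel minimum face size is taken from the text.

```python
from typing import List, Tuple


def pyramid_scales(width: int, height: int, min_face: int = 80,
                   factor: float = 0.709, net_input: int = 12) -> List[float]:
    """Scale factors for an MTCNN-style image pyramid.

    At the first scale a face of `min_face` pixels maps onto the 12x12 Pnet
    window; each further level shrinks the image by `factor` so larger faces fit.
    """
    scale = net_input / min_face
    shorter = min(width, height) * scale
    scales = []
    while shorter >= net_input:  # stop once the image is smaller than the window
        scales.append(scale)
        scale *= factor
        shorter *= factor
    return scales


def pyramid_sizes(width: int, height: int, **kwargs) -> List[Tuple[int, int]]:
    """(width, height) of every pyramid level, rounded to the nearest pixel."""
    return [(round(width * s), round(height * s))
            for s in pyramid_scales(width, height, **kwargs)]


if __name__ == "__main__":
    for min_face in (20, 80):
        levels = pyramid_sizes(640, 480, min_face=min_face)
        print(f"min face {min_face}px: {len(levels)} levels, first {levels[0]}")
```

For a 640 × 480 frame, raising the minimum face size from 20 to 80 pixels cuts the pyramid from 10 levels to 6 and makes each level 16 times smaller in area, which is where the speed-up comes from.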

C. Lightweight Pattern layout theory using CNN

DCNNs can recognize faces because they represent each face as a feature vector; recognition then measures the distance between two such vectors [10, 11]. DCNNs perform exceptionally well in facial recognition, but a complex DCNN is hard to embed in a resource-constrained system, so a lightweight CNN (LCNN) is used instead. Important LCNN techniques include the residual module, compression-excitation (C-E), and feature pooling (MFGP). In the residual module R, a shortcut bypasses the convolution, and the input and the output of the convolutional layers are summed; this jumper connection and summation help keep the gradient from exploding or vanishing. C-E weights the LCNN's features: each feature map (channel) is averaged, compressing the channel data into a vector, and this vector passes through a two-layer feedforward neural network with an S-shaped (sigmoid) output that yields the channel weights. MFGP quickly compresses the output of the LCNN model, and using C-E together with MFGP improves feature map selection.
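Reading "compression-excitation (C-E)" as the squeeze-and-excitation operation, the channel weighting described above and the residual shortcut can be sketched on plain nested lists. The weights `w1` and `w2`, the reduction to a single hidden unit in the example, and all function names are hypothetical; a real LCNN would learn these weights and apply them to convolutional feature maps, and MFGP is not shown.

```python
import math
from typing import List

FeatureMap = List[List[List[float]]]  # [channel][row][column]


def global_average_pool(fmap: FeatureMap) -> List[float]:
    """Squeeze: average each channel's H x W map into one number."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in fmap]


def matvec(w: List[List[float]], v: List[float]) -> List[float]:
    """Fully connected layer without bias: w @ v."""
    return [sum(a * b for a, b in zip(row, v)) for row in w]


def squeeze_excitation(fmap: FeatureMap, w1: List[List[float]],
                       w2: List[List[float]]) -> FeatureMap:
    """Reweight channels with a two-layer network (ReLU, then sigmoid) on pooled features."""
    z = global_average_pool(fmap)
    hidden = [max(0.0, a) for a in matvec(w1, z)]  # C -> C/r, ReLU
    weights = [1.0 / (1.0 + math.exp(-a)) for a in matvec(w2, hidden)]  # C/r -> C, sigmoid
    return [[[x * weights[c] for x in row] for row in ch] for c, ch in enumerate(fmap)]


def residual_add(x: FeatureMap, fx: FeatureMap) -> FeatureMap:
    """Shortcut connection: output = F(x) + x, element-wise."""
    return [[[a + b for a, b in zip(ra, rb)] for ra, rb in zip(ca, cb)]
            for ca, cb in zip(x, fx)]


if __name__ == "__main__":
    x = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 0.0], [0.0, 1.0]]]  # 2 channels, 2 x 2
    w1 = [[0.5, 0.5]]       # 2 channels -> 1 hidden unit
    w2 = [[2.0], [-2.0]]    # 1 hidden unit -> 2 channel weights
    print(residual_add(x, squeeze_excitation(x, w1, w2)))
```

Because the sigmoid outputs lie between 0 and 1, the block can only attenuate channels, letting the network emphasize the informative ones relative to the rest.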

D. Construction Of Multi-Core Embedded Pattern Recognition System

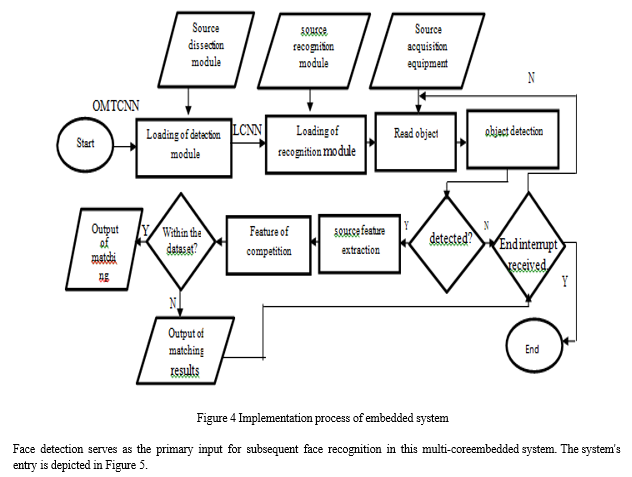

Face detection and recognition are computationally intensive [11, 12], so two embedded platforms are combined to accelerate facial recognition. To preserve platform integrity and module independence, socket connections transport data between the platforms. OMTCNN performs face detection. The TX2 and NCS were chosen for their cost, power consumption, and other factors, and the algorithms are arranged to make fuller use of the available cores.
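The paper states only that socket connections carry data between the two platforms. A common way to do this over a stream socket is length-prefixed framing, sketched below in Python; the 4-byte big-endian length header, the JSON message layout, and the function names are assumptions. `socket.socketpair()` stands in for the real network connection between the detection and recognition platforms so the example runs on one machine.

```python
import json
import socket
import struct

HEADER = struct.Struct("!I")  # 4-byte unsigned length, network byte order


def send_msg(sock: socket.socket, payload: bytes) -> None:
    """Send one framed message: length header followed by the payload."""
    sock.sendall(HEADER.pack(len(payload)) + payload)


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes; a stream socket may return fewer per recv() call."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed the connection mid-message")
        buf.extend(chunk)
    return bytes(buf)


def recv_msg(sock: socket.socket) -> bytes:
    """Receive one framed message sent by send_msg."""
    (length,) = HEADER.unpack(recv_exact(sock, HEADER.size))
    return recv_exact(sock, length)


if __name__ == "__main__":
    detector_side, recognizer_side = socket.socketpair()
    # Hypothetical message: one detected face box plus its confidence.
    msg = {"frame": 17, "box": [120, 64, 216, 160], "score": 0.97}
    send_msg(detector_side, json.dumps(msg).encode("utf-8"))
    print(json.loads(recv_msg(recognizer_side).decode("utf-8")))
    detector_side.close()
    recognizer_side.close()
```

Framing matters because a stream socket delivers bytes, not messages; without the length header, the receiver could read half of one face record, or two records at once.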

Figure 4 shows how the face detection and identification algorithms are integrated into the multi-core embedded system; the algorithms may also be placed elsewhere in the embedded system. The system supports two main functions: registering users and recognizing registered users. After this stage, face recognition software is installed on the computer, which processes an image captured by the device under investigation. The detector decides whether a face is present, and the recognition module extracts the features used to identify it; together, the two components determine whether a registered face is present, and the result may be positive or negative. To make this function easier for users to understand, it can be compared to a database: the facial features of registered users are stored, and each new face is looked up against them.
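The database analogy can be made concrete: registration stores a normalized feature vector per user, and recognition compares a new face's feature vector with every stored one, reporting the best match only if its cosine similarity clears a threshold. The class name, the 0.5 threshold, and the three-dimensional toy vectors are assumptions; real LCNN embeddings have far more dimensions, and the threshold would have to be tuned on validation data.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple


def _normalize(v: Sequence[float]) -> List[float]:
    """Scale a feature vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        raise ValueError("feature vector must be non-zero")
    return [x / norm for x in v]


class FaceDatabase:
    """Toy registry of face embeddings matched by cosine similarity."""

    def __init__(self, threshold: float = 0.5) -> None:
        self.threshold = threshold
        self.entries: Dict[str, List[float]] = {}

    def register(self, name: str, embedding: Sequence[float]) -> None:
        # Store unit vectors so a dot product equals cosine similarity.
        self.entries[name] = _normalize(embedding)

    def identify(self, embedding: Sequence[float]) -> Tuple[Optional[str], float]:
        """Return (name, similarity) of the best match, or (None, similarity) if below threshold."""
        query = _normalize(embedding)
        best_name, best_sim = None, -1.0
        for name, ref in self.entries.items():
            sim = sum(a * b for a, b in zip(query, ref))
            if sim > best_sim:
                best_name, best_sim = name, sim
        if best_name is not None and best_sim >= self.threshold:
            return best_name, best_sim
        return None, best_sim


if __name__ == "__main__":
    db = FaceDatabase(threshold=0.5)
    db.register("user_a", [0.9, 0.1, 0.2])
    db.register("user_b", [0.1, 0.8, 0.3])
    print(db.identify([0.85, 0.15, 0.25]))  # close to user_a
    print(db.identify([0.0, 0.1, -1.0]))    # unknown face, reported as None
```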

Conclusion

Facial features can be identified with the proposed deep neural network techniques. On LFW face verification, OMTCNN achieves higher precision and accuracy than previously studied methods, and facial recognition with LCNN performs exceptionally well. Multi-core recognition is fast and reliable, and these findings can be used to construct responsive recognition systems.

Pattern recognition benefits neural network systems, radar processing, speech recognition, text classification, image processing, and computer vision. It also finds application in fields as diverse as biometrics, bioinformatics, big data analysis, and data science. This paper emphasizes the fundamentals: its subject is the techniques for recognizing patterns in data using machine learning. We use machine learning every day, often without realizing it. There are two main types of machine learning, supervised and unsupervised. In supervised learning, the training data contain the inputs together with the expected outputs, so the accuracy of the model's predictions can be measured; after training, the model is applied to new data. The effectiveness of unsupervised algorithms is comparatively low.

A. Future Scope

Pattern recognition is still an emerging field of study, so there is ample opportunity for new insights and a wide range of potential applications that hold great promise for improving human life in the near future. More developments that make use of pattern recognition are still to come. Robotics is at the forefront of many emerging technologies, and pattern recognition can improve productivity there and advance the training of humanoid robots and other AI systems.

References

[1] Barr, Michael. "Embedded systems glossary." Neutrino Technical Library (2007).

[2] Heath, Steve. "Embedded Systems Design." EDN Series for Design Engineers (2003).

[3] Wu, Jibin, Chenglin Xu, Daquan Zhou, Haizhou Li, and Kay Chen Tan. "Progressive tandem learning for pattern recognition with deep spiking neural networks." arXiv preprint arXiv:2007.01204 (2020).

[4] Dang, L. Minh, Kyungbok Min, Hanxiang Wang, Md Jalil Piran, Cheol Hee Lee, and Hyeonjoon Moon. "Sensor-based and vision-based human activity recognition: A comprehensive survey." Pattern Recognition 108 (2020): 107561.

[5] Alvarez-Montoya, Joham, Alejandro Carvajal-Castrillón, and Julián Sierra-Pérez. "In-flight and wireless damage detection in a UAV composite wing using fiber optic sensors and strain field pattern recognition." Mechanical Systems and Signal Processing 136 (2020): 106526.

[6] Knapik, Mateusz, and Bogusław Cyganek. "Driver's fatigue recognition based on yawn detection in thermal images." Neurocomputing 338 (2019): 274-292.

[7] Hao, Pei-Yi, Weng-Hang Cheang, and Jung-Hsien Chiang. "Real-time event embedding for POI recommendation." Neurocomputing 349 (2019): 1-11.

[8] Lygouras, Eleftherios, Nicholas Santavas, Anastasios Taitzoglou, Konstantinos Tarchanidis, Athanasios Mitropoulos, and Antonios Gasteratos. "Unsupervised human detection with an embedded vision system on a fully autonomous UAV for search and rescue operations." Sensors 19, no. 16 (2019): 3542.

[9] Wang, Shiyuan, Payman Dehghanian, and Li Li. "Power grid online surveillance through PMU-embedded convolutional neural networks." IEEE Transactions on Industry Applications 56, no. 2 (2019): 1146-1155.

[10] Chi, Jianning, Shuang Zhang, Xiaosheng Yu, Chengdong Wu, and Yang Jiang. "A novel pulmonary nodule detection model based on multi-step cascaded networks." Sensors 20, no. 15 (2020): 4301.

[11] Maji, Partha, and Robert Mullins. "On the reduction of the computational complexity of deep convolutional neural networks." Entropy 20, no. 4 (2018): 305.

[12] Eghbalian, Sajjad, and Hassan Ghassemian. "Multispectral image fusion by deep convolutional neural network and new spectral loss function." International Journal of Remote Sensing 39, no. 12 (2018): 3983-4002.

[13] Iqbal, Mansoor, M. Shujah Islam Sameem, Nuzhat Naqvi, Shamsa Kanwal, and Zhongfu Ye. "A deep learning approach for face recognition based on angularly discriminative features." Pattern Recognition Letters 128 (2019): 414-419.

[14] Oh, Seon Ho, Geon-Woo Kim, and Kyung-Soo Lim. "Compact deep learned feature-based face recognition for Visual Internet of Things." The Journal of Supercomputing 74, no. 12 (2018): 6729-6741.

[15] Vaswani, Namrata, Thierry Bouwmans, Sajid Javed, and Praneeth Narayanamurthy. "Robust subspace learning: Robust PCA, robust subspace tracking, and robust subspace recovery." IEEE Signal Processing Magazine 35, no. 4 (2018): 32-55.

[16] Ghulanavar, Rohit, Kiran Kumar Dama, and A. Jagadeesh. "Diagnosis of faulty gears by modified AlexNet and improved grasshopper optimization algorithm (IGOA)." Journal of Mechanical Science and Technology 34, no. 10 (2020): 4173-4182.

[17] Lv, Xue, Mingxia Su, and Zekun Wang. "Application of face recognition method under deep learning algorithm in embedded systems." Microprocessors and Microsystems (2021): 104034.

Copyright

Copyright © 2024 Abhishek Urunkar, Sudhanshu Khapre, Mayuri Kasabe. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET58523

Publish Date : 2024-02-20

ISSN : 2321-9653

Publisher Name : IJRASET
