Ijraset Journal For Research in Applied Science and Engineering Technology


Performance Improvement of Rice Leaf Disease Prediction Model using Enhanced Super-Resolution Generative Adversarial Networks

Authors: Umesh S, Vimala Mathew, Praveen Kumar T, Poornima Thangam I, Amal Krishna U R, Prasoon Kumar KG, Nandakumar R

DOI Link: https://doi.org/10.22214/ijraset.2024.64242


Abstract

This paper proposes a method to improve the performance of a deep learning model for rice leaf disease prediction. The primary goal is to give farmers precise information about crop disease so that they can apply pesticides judiciously, in line with their crop's needs. The proposed method enhances the input images with an Enhanced Super-Resolution Generative Adversarial Network (ESRGAN). Experiments were carried out on a rice leaf disease dataset of 16,000 images in four groups: three groups of 4,000 images each show three different diseases, and the remaining 4,000 images show healthy leaves. The best model reached an accuracy of 99.68%. Each individual disease is identified well, and the other evaluation metrics are also good: the average precision is 99.67%, the average recall is 99.68% and the average F1-score is 99.67%. Conclusion: the accuracy obtained is higher than that of the existing models compared in this paper.


I. INTRODUCTION

Rice is the staple food for the majority of the world's population and a main contributor to the agricultural economy of many countries. India stands second in rice production after China. Rice production is severely affected by various biotic stresses, which include plant pathogens and insect pests. The diseases that cause severe economic damage to the crop include bacterial leaf blight (Xanthomonas oryzae pv. oryzae), brown spot (Helminthosporium oryzae) and leaf smut (Entyloma oryzae) [1]. Without timely detection and intervention, these diseases can cause significant yield loss, sometimes resulting in complete crop failure and huge losses to farmers. Traditional methods of disease identification require the help of plant pathologists and extension workers. This help is not always available to farmers, who sometimes follow the wrong management practices and fail to control the diseases. Because identifying a specific disease is difficult, farmers may use excessive or wrong chemicals to safeguard their crops, which leads to residue problems and health hazards for consumers. Hence correct diagnosis and early detection of the disease are crucial for minimizing crop damage [2].

Deep learning, driven by Artificial Intelligence, is advancing rapidly and has made a significant breakthrough in automated disease classification and detection based on image recognition, enabling smart management of economically important diseases in various crops [3]. For rice leaf disease prediction, machine learning and deep learning models are used to identify and categorize diseases from images. These models learn the characteristic features of each disease, such as the shape, size, color and texture of lesions. Once a model is trained, it can predict the disease in a new rice leaf image with a high degree of accuracy [2]. Image processing, which has become an invaluable tool for analyzing vast amounts of data in diverse industries including agriculture, is another rapidly expanding area of research [3]. The proposed work combines image processing with Artificial Intelligence to improve the accuracy of rice leaf disease prediction. The image enhancement serves as a pre-processing step that reduces the noise present in the dataset.

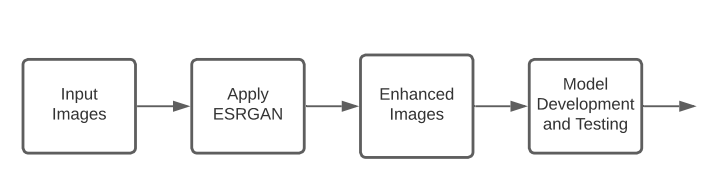

Figure 1: Block diagram showing image enhancement and model development

Figure 1 shows the two sets of rice leaf images, before and after enhancement with ESRGAN, that are used for model development.

II. LITERATURE SURVEY

A wide array of machine learning and deep learning models is available for classifying healthy and diseased paddy plants; the real challenge is to develop a method that scores higher on the performance evaluation metrics.

Qing Yao et al. [4] developed an SVM-based model for identifying rice diseases by applying image processing techniques. Features such as texture and shape were extracted from the segmented disease spots, and three important rice leaf diseases were identified based on their shape and colour. The SVM achieved an accuracy of 97.2%. In 2019, Jahid Hasan et al. [5] proposed a model that combines an SVM classifier with a deep CNN (DCNN). The model was trained for nine rice diseases using a dataset of 1080 images; features extracted by the DCNN were used to train the SVM classifier, which achieved an accuracy of 97.5%. In the work by Al-Amin et al. [6], a CNN model was trained on 900 pictures of diseased and healthy leaves. Using 10-fold cross-validation, it identified four common rice diseases with 97.40% accuracy. Ramesh and Vydeki [7] developed a recognition and classification system based on the Jaya algorithm for identifying paddy leaf diseases and reported a high level of accuracy. In the work by Kaur et al. [8], features were extracted from a dataset of 5368 images with the pre-trained SqueezeNet model. A neural network classifier then achieved 96.5% accuracy in classifying rice leaf diseases with 10-fold cross-validation and 98.3% with 20-fold cross-validation, and the comparison with basic machine learning classifiers gave satisfactory results.

Bhattacharya [9] suggested another model for automatically classifying five types of diseases, based on DenseNet 201. Weights from the pre-trained network were used instead of random initial weights, and the layers were then trained again on the given dataset. This model achieved an accuracy of 97.04% on the training set and 95.44% on the validation set. Mavaddat et al. [10] used two transfer learning methods. The first uses the output of a pre-trained CNN-based model in conjunction with a classifier. The second keeps the lower layers fixed, fine-tunes the weights in the upper layers of the pre-trained network and then adds an appropriate classifier to the model. VGG16 with two fine-tuned layers showed 100% accuracy and F1-score. In the method by Venu et al. [11], an intelligent edge detector was used to find the severity of the affected region. It was evaluated on a set of 1766 pictures of healthy and diseased paddy leaves obtained from open repositories. This CNN achieved an accuracy of nearly 96.7%. The same data was tested with MobileNetV2, which achieved an accuracy of 89%. Singh et al. [12] developed a CNN framework, implemented in Python, that involves pre-processing, segmentation and finally classification of rice leaf images. The results in terms of precision, accuracy and recall showed good progress.

Another CNN was proposed by Modak et al. [13]; it achieved 75% accuracy in identifying various rice leaf diseases. In the work by Aggarwal et al. [14], a dataset of 551 images covering three types of paddy leaf disease was used, namely blast (192 images), brown spot (BS, 200 images) and bacterial leaf blight (BLB, 192 images), and the InceptionResNetV2 model achieved 88% accuracy. The comparison also showed that pre-trained models produce better results for identifying paddy leaf diseases. Sharma et al. [15] carried out the classification in two phases: first a binary classification of healthy and rice leaf blight (RLB) infected crops, and then a multi-class classification of RLB disease severity. In this way, 94.33% accuracy in binary classification and 95.3% accuracy in multi-class severity classification were attained. Based on the Mandalay dataset of images, Ul Haq et al. [16] proposed a CNN model for four rice leaf diseases; its output was compared with that of ResNet and ResNet50, and better results were obtained. In the work by Bhartiya et al. [17], after extracting the features of rice leaf disease images, a Quadratic SVM classifier reached an accuracy of 81.8% and an AUC of 0.92. Shape features were also used to differentiate between types of rice diseases. Here, some of the reduction in accuracy is due to the absence of noise reduction while pre-processing the dataset.

III. MATERIAL AND METHODS

A. Dataset Used

The dataset comprises 16,000 images of rice leaves distributed across four groups. Three of these groups consist of 4,000 images each and show three distinct diseases, while the remaining 4,000 images show healthy rice leaves. The images are of size 64x64. The dataset details, including disease names, are given in Table 1 below.

| Disease | Number of images |
|---|---|
| Bacterial leaf blight | 4000 |
| Brown spot | 4000 |
| Leaf smut | 4000 |
| Healthy | 4000 |

Table 1: Rice leaf dataset image distribution

1) Sample Images

B. Data Pre-processing

Because deep learning models require all input images to be the same size, all images were resized to a consistent dimension of 64x64. To accelerate learning and improve performance, the pixel intensity values were normalized to the range 0 to 1 by dividing them by 255. The dataset of 16,000 images was split into training and testing sets in the ratio 80:20.
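The three pre-processing steps described above (resize, normalize, split) can be sketched in plain Python. This is only an illustration under stated assumptions: the nearest-neighbour resize, the helper names, the random seed and the synthetic grayscale images are all hypothetical, since the paper does not say which library or interpolation method was used.

```python
import random

def resize_nearest(image, size=64):
    """Resize a 2-D grid of pixels to size x size by nearest-neighbour sampling."""
    height, width = len(image), len(image[0])
    return [
        [image[r * height // size][c * width // size] for c in range(size)]
        for r in range(size)
    ]

def normalize(image):
    """Scale 8-bit pixel intensities from [0, 255] to [0, 1]."""
    return [[pixel / 255 for pixel in row] for row in image]

def train_test_split(samples, train_fraction=0.8, seed=0):
    """Shuffle the samples reproducibly, then split them 80:20 by default."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]

# Hypothetical stand-in data: 5 synthetic 128x128 grayscale images per class.
labels = ["bacterial leaf blight", "brown spot", "leaf smut", "healthy"]
rng = random.Random(42)
dataset = []
for label in labels:
    for _ in range(5):
        raw = [[rng.randint(0, 255) for _ in range(128)] for _ in range(128)]
        dataset.append((normalize(resize_nearest(raw)), label))

train_set, test_set = train_test_split(dataset)
print(len(train_set), len(test_set))
```

With the 16,000 images of the actual dataset, the same 80:20 ratio gives 12,800 training images and 3,200 test images.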

C. Deep Learning Model Development

Multiple deep learning models, including pre-trained models, were built on the dataset and their performance was assessed in order to identify the best model. The models tried are listed below.

- Basic CNN model: a basic CNN with two hidden layers of 32 and 64 neurons, respectively.

- VGG16

- VGG19

- AlexNet

- MobileNetV2

- ResNet50

- InceptionV3

1) VGG16 And VGG19

The Visual Geometry Group (VGG) at the University of Oxford developed VGG16 and VGG19, which are known for their uniform architecture of 16 and 19 layers, respectively. These networks predominantly use 3x3 convolutional layers and max-pooling layers. Despite their straightforward design, VGG models have performed very well in numerous visual recognition tasks.

2) AlexNet

AlexNet, one of the pioneering deep convolutional neural networks, was designed by Alex Krizhevsky, Ilya Sutskever and Geoffrey Hinton and won the 2012 ImageNet Large Scale Visual Recognition Challenge (ILSVRC). This victory significantly propelled the use of deep learning in computer vision. Comprising five convolutional layers followed by max-pooling layers and fully connected layers, it stands as a landmark in the evolution of neural networks for image recognition.

3) MobileNetV2

MobileNetV2, crafted by Google, is tailored for mobile and embedded vision tasks. Prioritizing speed and efficiency without sacrificing accuracy, it employs depthwise separable convolutions and inverted residuals to streamline computations while upholding strong performance.
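To make the saving from depthwise separable convolutions concrete, the following sketch counts the weights of a standard convolution and of its depthwise separable replacement. The layer sizes (3x3 kernel, 32 input channels, 64 output channels) are illustrative assumptions, not values taken from this paper, and biases are ignored.

```python
def standard_conv_params(kernel, in_channels, out_channels):
    """Weights in a standard kernel x kernel convolution (biases ignored)."""
    return kernel * kernel * in_channels * out_channels

def depthwise_separable_params(kernel, in_channels, out_channels):
    """Weights in a depthwise kernel x kernel convolution plus a 1x1 pointwise convolution."""
    depthwise = kernel * kernel * in_channels   # one kernel x kernel filter per input channel
    pointwise = in_channels * out_channels      # 1x1 convolution that mixes the channels
    return depthwise + pointwise

# Illustrative layer sizes, not taken from the paper.
k, c_in, c_out = 3, 32, 64
standard = standard_conv_params(k, c_in, c_out)
separable = depthwise_separable_params(k, c_in, c_out)
print(standard, separable, round(standard / separable, 2))  # 18432 2336 7.89
```

For this example layer, the separable form needs roughly one eighth of the weights, which is the kind of reduction that makes the architecture attractive for mobile and embedded devices.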

4) InceptionV3

InceptionV3, part of Google's Inception family of architectures, enhances computational efficiency and performance. It achieves this by employing modules that use different kernel sizes (1x1, 3x3, 5x5) to capture features at diverse scales while keeping computational cost low.

5) ResNet50

ResNet50 is from the ResNet (Residual Network) family by Microsoft Research. It's famed for its residual connections, enabling training of exceptionally deep networks by addressing the vanishing gradient issue. These networks include shortcut connections in each block, helping gradients flow better during training by skipping one or more layers.

D. Image Enhancement

To improve the performance of the models, image enhancement was applied in addition to fine-tuning the models.

The image enhancement method used is the Enhanced Super-Resolution Generative Adversarial Network (ESRGAN), which builds on the following concepts.

1) Super Resolution Image

Super resolution is the process of increasing the resolution of a comparatively low-resolution image with minimal loss of image content. Techniques such as multi-exposure image noise reduction, single-frame deblurring and sub-pixel image localization are used for this task.

2) Generative Adversarial Network

A GAN is built on two networks, a generator and a discriminator. The generator produces images, and the discriminator judges whether each generated image is a good candidate, that is, whether it can pass for a real image.
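The generator and discriminator roles are often summarized by the minimax objective of the original GAN formulation, shown below as a reference sketch. The symbols are notation introduced here and do not appear in the paper: $G$ is the generator, $D$ the discriminator, $x$ a real image drawn from the data distribution $p_{\text{data}}$, and $z$ the generator input drawn from $p_{z}$.

```latex
\min_{G}\,\max_{D}\;
\mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
+ \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator is trained to raise this value by scoring real images high and generated images low, while the generator is trained to lower it by producing images the discriminator accepts.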

3) SRGAN

While the generator and discriminator are trained as in the standard GAN architecture, SRGAN additionally relies on a perceptual (content) loss function to achieve its results. The weights are therefore adjusted not only by the adversarial loss but also by the content loss [18].
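As a sketch of how the two loss terms combine, the perceptual loss in the SRGAN formulation of [18] is a weighted sum of a content loss and an adversarial loss. The notation follows that formulation rather than this paper: $I^{LR}$ is a low-resolution input, $G$ the generator, $D$ the discriminator, and $N$ the number of training samples.

```latex
l^{SR} = l^{SR}_{X} + 10^{-3}\, l^{SR}_{Gen},
\qquad
l^{SR}_{Gen} = \sum_{n=1}^{N} -\log D\big(G(I^{LR}_{n})\big)
```

Here $l^{SR}_{X}$ is the content loss, computed either pixel-wise or on feature maps of a pre-trained VGG network, and $l^{SR}_{Gen}$ is the adversarial loss that rewards the generator when the discriminator accepts its output.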

4) ESRGAN

The ESRGAN model consistently delivers superior perceptual quality compared to previous super-resolution methods [19].

ESRGAN is an improved version of SRGAN. It addresses some of the limitations and issues present in the original SRGAN model, offering better performance and visual quality.

Both SRGAN and ESRGAN are based on the Generative Adversarial Network (GAN) architecture. They use a generator to upscale low resolution images and a discriminator to distinguish between the generated high resolution images and real high-resolution images. The generator in ESRGAN is more advanced and incorporates some architectural improvements over SRGAN.

Like SRGAN, ESRGAN trains its generator with a perceptual loss that combines a content (feature) loss with an adversarial loss. ESRGAN refines the feature loss so that it captures high-frequency details more effectively and improves image quality. ESRGAN often benefits from larger and more diverse training datasets, which help it produce better super-resolved images. It aims to reduce artifacts such as checkerboard patterns and typically produces sharper and more realistic results than SRGAN. In short, ESRGAN is an evolution of SRGAN with improvements in architecture, loss functions, training data and image quality, although the field of image super-resolution continues to evolve and newer models and techniques may be available.

All images in the dataset were enhanced by applying ESRGAN. The CNN and all six pre-trained models were then developed again using the enhanced images. The process is shown as a block diagram in Figure 1. The training and testing curves for the loss and accuracy of the models developed after image enhancement are shown in Figure 3.

E. Experimental Setup

- The models were developed using Python programming in a Linux environment.

- Models were created using both raw and processed images.

- The models were trained using a system with NVIDIA Quadro RTX 5000 GPU, Intel Gold 5220 processor, and 132GB memory.

- The performance results were generated and plotted using visualization methods in Python.

IV. RESULTS AND DISCUSSION

This section presents the results of applying the ESRGAN model, comparing the images before and after enhancement. Our primary objective was to evaluate the model's ability to enhance image quality and increase clarity.

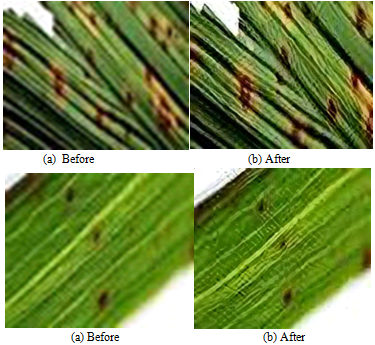

Figure 2 shows rice leaf images in two states: (a) before ESRGAN processing and (b) after ESRGAN processing.

Figure 2: Rice leaf images before and after applying ESRGAN

The images, before applying ESRGAN, frequently exhibit issues like low resolution, pixelation, and a deficiency of detail. With the implementation of ESRGAN, we have noted a significant improvement in image quality. The post-enhancement images demonstrate enhanced clarity, finer details, and decreased pixelation. This improvement is notably conspicuous in regions featuring intricate textures and delicate patterns, such as those present on rice leaves.

We performed a visual comparison between the original images and those improved by ESRGAN. The enhancement was clearly visible through heightened sharpness, refined textures, and overall image quality. This illustrates the model's capacity to recover or enhance subtle details that were previously indistinct or missing.

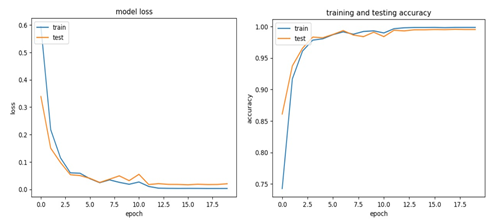

Figure 3: Accuracy and loss plots of the model during the training and testing phases

Figure 3 illustrates the accuracy and loss of the model during the training and testing phases.

| Sl No | Model | Accuracy % before enhancement | Accuracy % after enhancement |
|---|---|---|---|
| 1 | VGG16 | 99.30 | 99.30 |
| 2 | VGG19 | 93.77 | 93.85 |
| 3 | AlexNet | 95.23 | 99.10 |
| 4 | MobileNetV2 | 98.05 | 98.65 |
| 5 | ResNet50 | 97.98 | 93.93 |
| 6 | InceptionV3 | 82.95 | 83.70 |
| 7 | CNN | 99.55 | 99.68 |

Table 2: Performance comparison of the models developed before and after enhancement

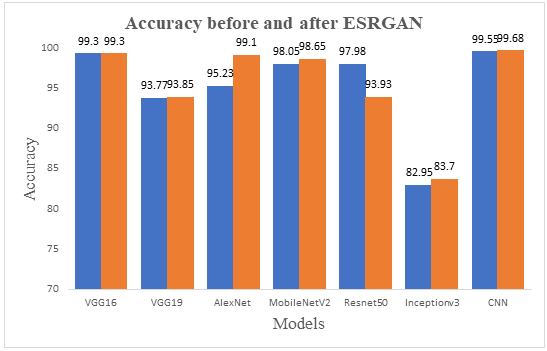

Table 2 compares the accuracy of each model before and after image enhancement with ESRGAN.

Figure 4 shows the same comparison as a bar graph. ESRGAN affects the models differently: AlexNet improves markedly (95.23% to 99.10%), most other models change by less than one percentage point, VGG16 is unchanged, and ResNet50 drops from 97.98% to 93.93%.
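The per-model effect of enhancement, and the average change across the seven models, can be recomputed directly from the accuracies in Table 2. The short script below is a sketch for checking that arithmetic; only the variable names are introduced here, the numbers are copied from the table.

```python
# Accuracy (%) before and after ESRGAN enhancement, copied from Table 2.
accuracy = {
    "VGG16": (99.30, 99.30),
    "VGG19": (93.77, 93.85),
    "AlexNet": (95.23, 99.10),
    "MobileNetV2": (98.05, 98.65),
    "ResNet50": (97.98, 93.93),
    "InceptionV3": (82.95, 83.70),
    "CNN": (99.55, 99.68),
}

# Change in percentage points for each model (after minus before).
change = {name: round(after - before, 2) for name, (before, after) in accuracy.items()}
mean_change = round(sum(change.values()) / len(change), 2)

for name, delta in change.items():
    print(f"{name:12s} {delta:+.2f}")
print(f"{'mean change':12s} {mean_change:+.2f}")
```

The mean change comes to 0.20 percentage points, with the large gain for AlexNet (+3.87) almost cancelled by the loss for ResNet50 (-4.05).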

| Disease name | Precision | Recall | F1-score |
|---|---|---|---|
| Healthy | 1.00 | 1.00 | 1.00 |
| Bacterial leaf blight | 1.00 | 1.00 | 1.00 |
| Brown spot | 1.00 | 0.99 | 0.99 |
| Leaf smut | 0.99 | 1.00 | 0.99 |
| Average (%) | 99.67 | 99.68 | 99.67 |

Table 3: Performance evaluation metrics for individual diseases

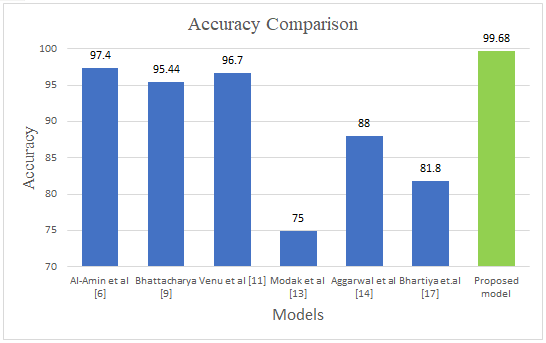

Rice leaf disease detection is a classification task. In addition to accuracy, various other evaluation metrics were computed, and the results are given in Table 3. The performance of other existing models is listed in Table 4 and shown as a bar plot in Figure 5.
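For readers who want to see how per-class precision, recall and F1-score are derived, the sketch below computes them from a confusion matrix. The matrix is hypothetical: it assumes 800 test images per class (20% of 4,000) and invents the misclassification counts purely for illustration, so it is not the authors' actual confusion matrix.

```python
classes = ["healthy", "bacterial leaf blight", "brown spot", "leaf smut"]

# Hypothetical confusion matrix: rows are true classes, columns are predicted classes.
confusion = [
    [800, 0, 0, 0],
    [0, 800, 0, 0],
    [0, 0, 791, 9],
    [0, 0, 1, 799],
]

def per_class_metrics(matrix, index):
    """Return (precision, recall, F1) for one class; assumes the class is predicted at least once."""
    true_positive = matrix[index][index]
    predicted_total = sum(row[index] for row in matrix)  # column sum
    actual_total = sum(matrix[index])                    # row sum
    precision = true_positive / predicted_total
    recall = true_positive / actual_total
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1

metrics = {name: per_class_metrics(confusion, i) for i, name in enumerate(classes)}
accuracy = sum(confusion[i][i] for i in range(len(classes))) / sum(map(sum, confusion))
# Macro averages: unweighted mean of each metric over the four classes.
macro = [sum(m[j] for m in metrics.values()) / len(classes) for j in range(3)]

for name, (p, r, f) in metrics.items():
    print(f"{name:22s} precision={p:.2f} recall={r:.2f} f1={f:.2f}")
print(f"accuracy={accuracy:.4f}")
```

Precision is the share of images predicted as a class that truly belong to it, recall is the share of images of a class that are found, and the F1-score is their harmonic mean.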

| Sl No | Model | Accuracy (%) | Method used |
|---|---|---|---|
| 1 | Qing Yao et al. [4] | 97.2 | SVM |
| 2 | Jahid Hasan et al. [5] | 97.5 | Deep CNN + SVM |
| 3 | Al-Amin et al. [6] | 97.40 | CNN |
| 4 | Bhattacharya [9] | 95.44 | DenseNet 201 |
| 5 | Venu et al. [11] | 96.7 | CNN |
| 6 | Modak et al. [13] | 75 | CNN |
| 7 | Aggarwal et al. [14] | 88 | InceptionResNetV2 |
| 8 | Bhartiya et al. [17] | 81.8 | Quadratic SVM |
| 9 | ESRGAN-based model (proposed) | 99.68 | CNN |

Table 4: Model Performance Comparison with existing works

Figure 5: Accuracy comparison of the proposed model with existing results

Conclusion

Rice leaf disease prediction models were developed and evaluated. The contribution of our work is twofold. First, very good performance is achieved using the CNN and the pre-trained models. Second, an average accuracy improvement of 0.20 percentage points is achieved by using ESRGAN as the image enhancement method. The highest accuracy obtained is 99.68%, which is higher than the accuracies of the existing works listed in Table 4. Integrating technologies such as Convolutional Neural Networks (CNNs) could make real-time disease monitoring systems possible. Such algorithms not only improve our understanding of plant diseases but also enable swift identification of, and response to, disease outbreaks. In the years to come, the scope of this work could be broadened to cover a wider range of leaf diseases, from rare and unusual ones to emerging ones. Such an expanded disease identification system could improve crop production practices and thereby strengthen the nation's economy. This work can be taken further through user-friendly mobile applications.

References

[1] Kaur, K. Guleria, and N. K. Trivedi, “Rice Leaf Disease Detection: A Review,” Proc. IEEE Int. Conf. Signal Process. Control, vol. 2021-Octob, pp. 418–422, 2021, doi: 10.1109/ISPCC53510.2021.9609473.

[2] G. K. V. L. Udayananda, C. Shyalika, and P. P. N. V. Kumara, “Rice plant disease diagnosing using machine learning techniques: a comprehensive review,” SN Appl. Sci., vol. 4, no. 11, p. 311, Nov. 2022, doi: 10.1007/s42452-022-05194-7.

[3] T. Gayathri Devi and P. Neelamegam, “Image processing based rice plant leaves diseases in Thanjavur, Tamilnadu,” Cluster Comput., vol. 22, no. S6, pp. 13415–13428, Nov. 2019, doi: 10.1007/s10586-018-1949-x.

[4] Q. Yao, Z. Guan, Y. Zhou, J. Tang, Y. Hu, and B. Yang, “Application of support vector machine for detecting rice diseases using shape and color texture features,” 2009 Int. Conf. Eng. Comput. ICEC 2009, pp. 79–83, 2009, doi: 10.1109/ICEC.2009.73.

[5] M. J. Hasan, S. Mahbub, M. S. Alom, and M. Abu Nasim, “Rice Disease Identification and Classification by Integrating Support Vector Machine with Deep Convolutional Neural Network,” 1st Int. Conf. Adv. Sci. Eng. Robot. Technol. 2019, ICASERT 2019, vol. 2019, no. Icasert, pp. 1–6, 2019, doi: 10.1109/ICASERT.2019.8934568.

[6] M. Al-Amin, D. Z. Karim, and T. A. Bushra, “Prediction of rice disease from leaves using deep convolution neural network towards a digital agricultural system,” 2019 22nd Int. Conf. Comput. Inf. Technol. ICCIT 2019, no. December, pp. 1–5, 2019, doi: 10.1109/ICCIT48885.2019.9038229.

[7] S. Ramesh and D. Vydeki, “Recognition and classification of paddy leaf diseases using Optimized Deep Neural network with Jaya algorithm,” Inf. Process. Agric., vol. 7, no. 2, pp. 249–260, Jun. 2020, doi: 10.1016/j.inpa.2019.09.002.

[8] A. Kaur, K. Guleria, and N. Kumar Trivedi, “A Deep Learning based Model for Rice Leaf Disease Detection,” 2022 10th Int. Conf. Reliab. Infocom Technol. Optim. (Trends Futur. Dir.), ICRITO 2022, pp. 1–5, 2022, doi: 10.1109/ICRITO56286.2022.9964487.

[9] A. Bhattacharya, “A Novel Deep Learning Based Model for Classification of Rice Leaf Diseases,” Proc. 2021 Swedish Work. Data Sci. SweDS 2021, pp. 1–6, 2021, doi: 10.1109/SweDS53855.2021.9638278.

[10] M. Mavaddat, M. Naderan, and S. E. Alavi, “Classification of Rice Leaf Diseases Using CNN-Based Pre-Trained Models and Transfer Learning,” Proc. 2023 6th Int. Conf. Pattern Recognit. Image Anal. IPRIA 2023, no. February, pp. 1–6, 2023, doi: 10.1109/IPRIA59240.2023.10147178.

[11] S. Venu, T. L. Surekha, P. Vasavi, and P. V. Kumar, “Deep Learning based Leaf Disease Detection using Convolutional Neural Network,” 2023 5th Int. Conf. Inven. Res. Comput. Appl., pp. 01–05, 2023, doi: 10.1109/icirca57980.2023.10220942.

[12] G. Singh and R. Singh, “Deep Learning-based Rice Leaf Disease Diagnosis using Convolutional Neural Networks,” Int. Conf. Sustain. Comput. Smart Syst. ICSCSS 2023 - Proc., no. Icscss, pp. 107–111, 2023, doi: 10.1109/ICSCSS57650.2023.10169402.

[13] A. Modak, A. Padamwar, S. Pokale, R. Raut, A. Devkar, and S. Patil, “Disease Detection in Rice leaves using Convolutional Neural Network,” Int. Conf. Appl. Intell. Sustain. Comput. ICAISC 2023, pp. 1–6, 2023, doi: 10.1109/ICAISC58445.2023.10200216.

[14] M. Aggarwal, V. Khullar, and N. Goyal, “Exploring Classification of Rice Leaf Diseases using Machine Learning and Deep Learning,” Proc. 2023 3rd Int. Conf. Innov. Pract. Technol. Manag. ICIPTM 2023, no. Iciptm, 2023, doi: 10.1109/ICIPTM57143.2023.10117854.

[15] R. Sharma, V. Kukreja, R. K. Kaushal, A. Bansal, and A. Kaur, “Rice Leaf blight Disease detection using multi-classification deep learning model,” 2022 10th Int. Conf. Reliab. Infocom Technol. Optim. (Trends Futur. Dir.), ICRITO 2022, pp. 1–5, 2022, doi: 10.1109/ICRITO56286.2022.9964644.

[16] U. U. Haq, M. Ramzan, M. Bilal, S. Khaliq, and A. Rafiq, “Rice Plants Diseases Detection and Classification Using Deep Learning Models,” no. July, 2023.

[17] V. P. Bhartiya, R. R. Janghel, and Y. K. Rathore, “Rice Leaf Disease Prediction Using Machine Learning,” ICPC2T 2022 - 2nd Int. Conf. Power, Control Comput. Technol. Proc., no. c, 2022, doi: 10.1109/ICPC2T53885.2022.9776692.

[18] C. Ledig et al., “Photo-realistic single image super-resolution using a generative adversarial network,” Proc. - 30th IEEE Conf. Comput. Vis. Pattern Recognition, CVPR 2017, vol. 2017-January, pp. 105–114, 2017, doi: 10.1109/CVPR.2017.19.

[19] X. Wang, K. Yu, S. Wu, J. Gu, Y. Liu, C. Dong, Y. Qiao, and C. Change Loy, “ESRGAN: Enhanced super-resolution generative adversarial networks,” in Proceedings of the European Conference on Computer Vision (ECCV) Workshops, 2018.

Copyright

Copyright © 2024 Umesh S, Vimala Mathew, Praveen Kumar T, Poornima Thangam I, Amal Krishna U R, Prasoon Kumar KG, Nandakumar R. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET64242

Publish Date : 2024-09-15

ISSN : 2321-9653

Publisher Name : IJRASET
