Ijraset Journal For Research in Applied Science and Engineering Technology

A Research on Machine Learning Methods in a Healthcare Management Music System for Recommendations

Authors: Shikha Chaudhary

DOI Link: https://doi.org/10.22214/ijraset.2024.58150

Abstract

Music is one of the most effective treatments for illness and accelerates patients' healing. It stimulates the patient's emotional thinking, helping them to overcome their illness and become psychologically well. This study examines the various kinds of machine learning techniques and how they are applied to music recommendation for healthcare management. Both supervised and unsupervised machine learning approaches, as well as their subcategories, are covered. According to studies, music therapy enhances people's quality of life while gradually lessening the negative impacts of illness on the body. A strong recommendation system combined with music therapy is a powerful tool for treating people's emotional and psychological problems. One crucial metric used to assess the efficacy of music recommendation algorithms is classification accuracy. This survey reviews how machine learning algorithms are used to make music recommendations for patient care management.

I. INTRODUCTION

Music is often described as a universal language that can communicate feelings to people everywhere [1]. In addition to sharpening our minds and keeping us psychologically fit, music aids in maintaining mental and attentional control [1]. Experimental evidence has demonstrated that music helps us feel less stressed and restores a normal heart rate and respiration rate [1]. Research has shown that music therapy can help patients sleep better and experience less stress and despair. By using music stimuli that raise gamma waves in the brain, music recommendation systems improve psychiatric symptoms and memory function in patients. It is therefore possible to analyse the emotional impact of music by measuring physiological indicators on the skin [2]. An intelligent background music system has been designed and built using a novel combination of IoT and deep learning, a subset of machine learning. This system demonstrated outstanding results in data processing for communication systems [3].

Research in the areas of music emotion recognition (MER) and music information retrieval (MIR) is constantly changing how people find and listen to music. Combining data from MIR and MER has become more commonplace, incorporating annotations and various musical components with machine learning or data mining methods [4]. In addition, Human Activity Recognition makes use of inertial sensors to power a suggestion system that aids in the understanding of medical healthcare systems and human behaviour [5]. Predicting suitable music for healthcare is difficult because the right suggestion differs from person to person depending on the patient's state of mind. This paper analyses the different works on music recommendation systems and serves as a resource for future researchers studying them. The survey's findings cover machine learning-based music recommendation systems for healthcare management.

The structure of this study is as follows: Section 2 presents the taxonomy of the music recommendation system. Section 3 presents the literature on music recommendation systems. Section 4 presents the comparative analysis. Section 5 presents the conclusion of the survey.

II. BACKGROUND

People might experience a wide range of emotions when listening to music. In order to increase the classification accuracy of the music recommendation system, this study makes use of machine learning techniques, which are helpful in successfully extracting features [1]. The process of recommending music to patients based on their emotions involves gathering data, which is referred to as a dataset.

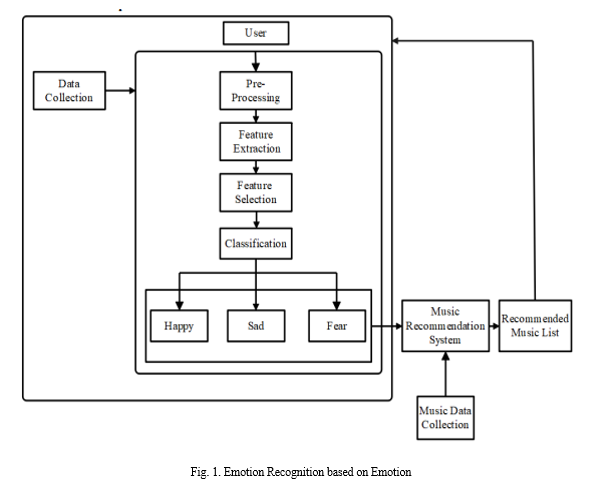

The dataset is passed to the preprocessing step to remove noise and unused whitespace and to improve the effectiveness of the music recommendation algorithm. Figure 1 shows how emotions are recognised based on patient mood [6]. After preprocessing, feature extraction is carried out to derive the patient's emotions from music genres such as melodic and classical. Next, the data is passed to feature selection in order to improve the classification results and reduce the space and time complexity of the model. A classification step then detects emotions including fear, anger, sadness, and happiness. A range of classification methods has been used to improve the accuracy and recognition rate of the resulting music recommendation system.
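The four stages described above (preprocessing, feature extraction, feature selection, classification) can be sketched as a small pipeline. This is only an illustrative sketch: the signal values, the three features, the zero-variance selection rule, and the nearest-centroid classifier are all assumptions made for the example and are not taken from the surveyed papers.

```python
from statistics import mean, pvariance

# Hypothetical raw records: (signal samples, emotion label). All values are made up.
RAW = [
    ([0.9, 1.1, 1.0, None], " happy "),
    ([0.2, 0.1, 0.3, 0.2], "sad"),
    ([1.0, 1.2, 0.8, 1.0], "happy"),
    ([0.1, 0.3, 0.2, 0.2], " sad"),
]

def preprocess(records):
    """Drop missing samples and strip stray whitespace from the labels."""
    return [([x for x in signal if x is not None], label.strip())
            for signal, label in records]

def extract_features(signal):
    """Turn a raw signal into a fixed-length vector: mean, range, and a constant."""
    return [mean(signal), max(signal) - min(signal), 1.0]

def select_features(vectors):
    """Return the indices of feature columns whose variance is non-zero."""
    columns = list(zip(*vectors))
    return [i for i, column in enumerate(columns) if pvariance(column) > 0]

def train_centroids(vectors, labels):
    """Nearest-centroid classifier: store one mean vector per emotion."""
    centroids = {}
    for label in set(labels):
        rows = [v for v, l in zip(vectors, labels) if l == label]
        centroids[label] = [mean(column) for column in zip(*rows)]
    return centroids

def classify(vector, centroids):
    """Return the emotion whose centroid is closest to the vector."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(centroids, key=lambda label: squared_distance(vector, centroids[label]))

clean = preprocess(RAW)
labels = [label for _, label in clean]
features = [extract_features(signal) for signal, _ in clean]
keep = select_features(features)              # the constant third feature is dropped
reduced = [[v[i] for i in keep] for v in features]
centroids = train_centroids(reduced, labels)

new_signal = [0.95, 1.05, 1.0]                # hypothetical unseen reading
new_vector = [extract_features(new_signal)[i] for i in keep]
predicted = classify(new_vector, centroids)
print(keep, predicted)
```

In a real system each stage would be replaced by the method chosen in the corresponding paper; the point here is only the order in which the stages feed one another.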

A. Taxonomy of the Music Recommendation System

Individuals receive music recommendations according to their preferences and state of mind. Most music recommendations fall into five genres: rock, electronica, jazz, world music, and classical. The type of music recommended to the user is determined from the user's prior playlist. There are two types of music recommendation systems: content-based and collaborative-based. Research on recommender systems in the music business has used machine learning techniques to produce representations of songs or artists (embeddings or latent factors) from the audio material or from textual metadata, such as artist biographies or user-generated tags. Once the patient's emotion has been determined, the data collection process begins to serve the suggested music. The suggested musical selections depend on the patient's state of mind. Machine learning algorithms are employed to carry out the entire procedure.

These latent item factors are then employed in content-based systems, such as nearest neighbor recommendations, integrated into matrix factorization techniques, or used to create hybrid systems, which most often combine content- and collaborative-based approaches [7]. These applications can be made directly or indirectly. Figure 2 shows the categorization of the music recommendation system.
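A nearest-neighbour recommendation over latent item factors can be sketched as follows. The song names and three-dimensional factor vectors are hypothetical, and cosine similarity is assumed as the closeness measure; the systems surveyed in [7] learn such factors from audio or metadata rather than hard-coding them.

```python
import math

# Hypothetical latent factors for four songs (made-up values).
SONG_FACTORS = {
    "calm_piano": [0.9, 0.1, 0.0],
    "soft_strings": [0.8, 0.2, 0.1],
    "fast_rock": [0.1, 0.9, 0.3],
    "club_electronica": [0.0, 0.8, 0.6],
}

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def recommend(seed, k=2):
    """Return the k songs whose factors are most similar to the seed song."""
    seed_vector = SONG_FACTORS[seed]
    scored = [(cosine(seed_vector, vector), name)
              for name, vector in SONG_FACTORS.items() if name != seed]
    scored.sort(reverse=True)
    return [name for _, name in scored[:k]]

print(recommend("calm_piano", k=1))
```

A hybrid system would combine these content-based scores with collaborative signals, for example by using the same factors inside a matrix factorization model.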

B. Taxonomy based on Machine Learning Techniques

Machine learning (ML) is one of the main subfields of artificial intelligence (AI); it allows software programmes to forecast results more accurately and make decisions based on the input they are given. A music recommendation system can be built on ML. In order to improve patients' physical and emotional health, music recommendation systems have been created using a variety of machine learning approaches [8]. A machine learning model can estimate the emotional state score that a human listener would probably assign to newly input musical pieces. Machine learning may significantly facilitate and speed up the process of identifying which musical features yield observable benefits for particular applications. Recently, a variety of machine learning algorithms have been used to suggest music for improved healthcare [1][6][11].

III. LITERATURE SURVEY

ML includes both supervised and unsupervised learning, which are covered in the sections that follow.

A. Supervised Learning

Supervised learning models are trained on labelled datasets [5].

There are two categories for supervised learning: regression and classification.

- Classification: This study uses classification to classify the patient's emotion by dividing the data into classes according to the assigned values of the item.

- Regression: The continuous values are forecasted using regression. Creating the optimal curve or best-fit line between the data is the primary objective of regression in supervised learning.
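The best-fit line mentioned in the regression item can be computed with ordinary least squares. The sketch below uses made-up data points that lie exactly on y = 2x + 1; the function name `fit_line` is hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least squares for a single input: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y divided by the variance of x gives the slope.
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    s_xx = sum((x - mean_x) ** 2 for x in xs)
    slope = s_xy / s_xx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Made-up observations lying on the line y = 2x + 1.
slope, intercept = fit_line([1, 2, 3, 4], [3, 5, 7, 9])
print(slope, intercept)
```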

The following is a detailed look at the machine learning models:

1. Support Vector Machine

The Support Vector Machine (SVM) is a supervised learning method used to categorise emotions such as happiness, sadness, and anger. In [3], the SVM solves a convex optimisation problem and reduces overfitting. The music recommendation system receives the emotions identified by the SVM. Every piece of music passed on is then played in accordance with that classification.
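As a rough illustration of the convex problem an SVM solves, the sketch below trains a linear soft-margin SVM by sub-gradient descent on the regularised hinge loss. The training points, the learning rate, the regularisation strength, and the emotion-to-playlist mapping are all assumptions for the example; the solver and kernel used in [3] are not specified in this survey.

```python
# Hypothetical 2-D features (e.g. two scaled physiological readings).
# Label +1 stands for "happy" and -1 for "sad".
TRAIN = [((2.0, 2.0), 1), ((3.0, 3.0), 1), ((-2.0, -2.0), -1), ((-3.0, -3.0), -1)]

def train_linear_svm(samples, lr=0.1, reg=0.01, epochs=100):
    """Minimise reg/2 * ||w||^2 + hinge loss with per-sample sub-gradient steps."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for x, y in samples:
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            if margin < 1:
                # Point is inside the margin: step along the hinge sub-gradient.
                w = [wi - lr * (reg * wi - y * xi) for wi, xi in zip(w, x)]
                b += lr * y
            else:
                # Point is safely classified: only the regulariser shrinks w.
                w = [wi - lr * reg * wi for wi in w]
    return w, b

def predict(w, b, x):
    """Return +1 or -1 depending on which side of the hyperplane x falls."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1

# Hypothetical mapping from the detected emotion to what is played next.
PLAYLIST = {1: "upbeat playlist", -1: "soothing playlist"}

w, b = train_linear_svm(TRAIN)
print(PLAYLIST[predict(w, b, (2.5, 2.0))])
```

The regularisation term is what limits overfitting here: it penalises large weights, which keeps the separating margin wide.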

2. Random Forest

Random Forest (RF) is a supervised ensemble classifier used for emotion identification. In [9–12], a hybrid model that combined the Wavelet Packet Transform (WPT) with Random Forest performed better for emotion recognition. Using the hybrid RF classifier with WPT also reduces the emotion recognition model's average training and testing time. As a result, music can be recommended quickly based on the identified emotions.

3. K-Nearest Neighbour

[8] uses the K-Nearest Neighbour (KNN) classifier for emotion classification because its accuracy is highest when the dataset is small. Additionally, the KNN classifier is reported to identify emotions considerably more accurately even when emotions overlap.

The classification system was tested on detecting the emotions of individuals with disabilities. The testing phase included both healthy individuals and those with intellectual disabilities. Humans find it challenging to distinguish between mild emotional states. KNN performs poorly for music recommendation when the dataset is large.
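A minimal KNN classifier makes the dataset-size trade-off visible: every prediction compares the query against all stored samples, which is cheap for a small dataset and slow for a large one. The feature values and emotion labels below are made up for illustration.

```python
import math
from collections import Counter

# Hypothetical labelled samples: (feature vector, emotion). Values are made up.
TRAIN = [
    ((0.1, 0.2), "calm"), ((0.2, 0.1), "calm"), ((0.3, 0.3), "calm"),
    ((0.9, 0.8), "stressed"), ((0.8, 0.9), "stressed"), ((0.7, 0.7), "stressed"),
]

def knn_predict(train, query, k=3):
    """Majority vote among the k training samples closest to the query."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

print(knn_predict(TRAIN, (0.25, 0.2)))
```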

4. Neural Network

[13] uses an Artificial Neural Network (ANN) to predict a song based on a patient's stress level and to change songs based on continuous patient monitoring. Furthermore, the ANN carries out comprehensive music analytics, including length and genre, to determine the effects of the music played on the patient's physiological parameters. Understanding the patient's feelings in this way makes it easier to recommend music.

5. Logistic Regression

In [6], the Logistic Regression (LR) classifier is trained and tested on labelled data to categorise the patient's emotions, such as happiness, sadness, and anger. It estimates the probability of an event or emotion by passing a weighted sum of the input features through the non-linear logistic (sigmoid) function, and assigns the emotion accordingly. LR is therefore helpful in suggesting appropriate music for patients.
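The probability estimate at the core of logistic regression can be sketched in a few lines. The weights, bias, and feature values are hypothetical; in practice they would be learned from training data such as that used in [6].

```python
import math

def sigmoid(z):
    """Logistic function: maps any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(features, weights, bias):
    """Probability of the positive class for one sample."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(z)

# Hypothetical learned parameters and one hypothetical feature vector.
WEIGHTS = [1.5, -2.0]
BIAS = 0.0
p_happy = predict_proba([2.0, 0.5], WEIGHTS, BIAS)   # z = 1.5*2.0 - 2.0*0.5 = 2.0
emotion = "happy" if p_happy >= 0.5 else "sad"
print(round(p_happy, 4), emotion)
```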

6. Ensemble

To classify emotions from EEG signal data, [12, 14] employ the Rotation Forest Ensemble (RFE) with a number of classification algorithms, including SVM, KNN, RF, DT, and ANN. The RFE performs exceptionally well in this regard.

Three methods are used: RFE classifier for classification, Tunable Q Wavelet Transform (TQWT) for feature extraction, and Multiscale Principal Component Analysis (MSPCA) for noise removal.

7. Decision Tree

A Decision Tree (DT) is a powerful tool for classification and prediction that uses conditions on the features to separate classes. [6] shows that, when the number of samples is chosen appropriately, the DT's accuracy and precision are comparable to those of the other classifiers compared, while its recall and F1 score are higher. The DT is one of the classifiers frequently used to categorise patients' emotions and suggest music that would improve their health.
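The four measures named above (accuracy, precision, recall, and F1 score) can be computed directly from true and predicted labels. The label lists below are made up; the function name `classification_metrics` is hypothetical.

```python
def classification_metrics(y_true, y_pred, positive):
    """Return (accuracy, precision, recall, f1) for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, precision, recall, f1

# Made-up ground truth and classifier output for six patients.
Y_TRUE = ["happy", "happy", "sad", "sad", "happy", "sad"]
Y_PRED = ["happy", "sad", "sad", "happy", "happy", "sad"]
accuracy, precision, recall, f1 = classification_metrics(Y_TRUE, Y_PRED, "happy")
print(accuracy, precision, recall, f1)
```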

B. Unsupervised Learning

Clustering is a form of unsupervised learning, which uses machine learning techniques to analyse unlabelled datasets [11, 15]. Clustering is the process of grouping emotions according to their similarity, so that an emotion in one group is more similar to the others in that group than to those in other groups.

1. K-Means Clustering

The K-means clustering algorithm is an unsupervised learning technique. In [15], data on diabetic disease are classified using K-means clustering by grouping the data into k clusters. K-means computes k centroids and assigns each point to its nearest cluster centroid. The "Classification Via Clustering" meta classifier makes it possible to convert clustering into classification; it was used to find the minimum error in class label assignment. The appropriate value of k changes dynamically as human emotions vary. Therefore, applying K-means clustering to music recommendation is intricate.
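The assign-then-update loop of K-means, followed by a simple classification-via-clustering step, can be sketched as below. The points and class names are made up, the first k points are assumed as initial centroids, and mapping each cluster to its majority class is one plausible reading of the meta classifier rather than the exact procedure of [15].

```python
import math
from collections import Counter

def kmeans(points, k, iterations=10):
    """Lloyd's algorithm, using the first k points as initial centroids."""
    centroids = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c]))
                  for p in points]
        # Update step: each centroid moves to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids

def cluster_to_class(labels, classes):
    """Map each cluster index to the most common known class inside it."""
    mapping = {}
    for c in set(labels):
        votes = Counter(cls for label, cls in zip(labels, classes) if label == c)
        mapping[c] = votes.most_common(1)[0][0]
    return mapping

# Made-up 2-D samples and their known classes (the last one is deliberately odd).
POINTS = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.5), (8.0, 8.0), (9.0, 9.5), (8.5, 9.0)]
CLASSES = ["calm", "calm", "calm", "agitated", "agitated", "calm"]

labels, centroids = kmeans(POINTS, k=2)
mapping = cluster_to_class(labels, CLASSES)
errors = sum(mapping[label] != cls for label, cls in zip(labels, CLASSES))
print(labels, mapping, errors)
```

The error count gives the class-assignment error that the meta classifier tries to minimise; choosing k remains the difficult part when the number of emotional states is not known in advance.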

2. Gaussian Mixture Model

For identifying the various emotions from EEG data, the Gaussian Mixture Model (GMM) is favoured over the Hidden Markov Model (HMM), Vector Quantization (VQ), and Bayesian Network (BN). Brain signals are classified into different emotions using the Generalised Mixture Distribution Model (GMDM) in combination with truncated and skewed GMMs. When a distribution is asymmetric, the GMDM shows that truncation can be applied to the left side, the right side, or both ends of the distribution. Nonetheless, because the standard GMM assumes symmetry and an unbounded range, the patient's emotions may be classified incorrectly. Thus, the GMM is less likely to be used in a music recommendation system.
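The effect of truncating a Gaussian on one or both sides can be illustrated with the standard truncated normal density, in which the ordinary density is rescaled by the probability mass between the limits. The parameter values are made up, and this single-component sketch is not the full mixture model used in the cited work.

```python
import math

def normal_pdf(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def normal_cdf(z):
    """Standard normal cumulative distribution, via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def truncated_pdf(x, mu, sigma, low=-math.inf, high=math.inf):
    """Density of N(mu, sigma^2) restricted to [low, high].

    Leaving low or high at its default truncates one side only.
    """
    if x < low or x > high:
        return 0.0
    a = (low - mu) / sigma
    b = (high - mu) / sigma
    mass = normal_cdf(b) - normal_cdf(a)   # probability kept after truncation
    return normal_pdf((x - mu) / sigma) / (sigma * mass)

# Made-up example: a standard normal truncated to [-1, 2] (asymmetric limits).
LOW, HIGH, STEPS = -1.0, 2.0, 2000
width = (HIGH - LOW) / STEPS
# Midpoint-rule check that the truncated density still integrates to about 1.
total = sum(truncated_pdf(LOW + (i + 0.5) * width, 0.0, 1.0, LOW, HIGH) * width
            for i in range(STEPS))
print(round(total, 4))
```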

There are many other kinds of recommendation systems, including ones for food, movies, clothing, and more [16–17]. The topic of music recommendation systems using different methodologies is covered in this section.

Rahman et al. [1] developed a music therapy approach that uses machine learning (ML) techniques to address patients' mental health issues effectively. A comparative investigation was conducted on various physiological measures, including Skin Temperature (ST), Electrodermal Activity (EDA), Blood Volume Pulse (BVP), and Pupil Dilation (PD), recorded while participants listened to various musical genres.

Three classifiers, Neural Networks (NN), Support Vector Machines (SVM), and K-Nearest Neighbours (KNN), were used in the investigation to assess performance. The suggested approach was reported to benefit both patients' medical care and musicogenic epilepsy. However, the dataset was biased when the stimuli were labelled, which reduced the machine learning model's predictive ability.

An emotion-based music recommendation and classification framework (EMRCF), proposed by Quasim et al. [2], aids in the highly accurate classification of songs according to an individual's emotion. Every time a user opens the IoT framework on their device, the EMRCF recognises their facial data and plays music based on the category they choose, drawing on information gathered from their playlist history.

The EMRCF used neural networks to achieve a high degree of accuracy in classifying people into categories such as annoyed, pleasant, and relaxed. However, because the EMRCF operates automatically within the Internet of Things, it cannot produce custom soundtracks.

To create an intelligent music recommendation system, Vall et al. [3] proposed a Fast Region Convolutional Neural Network (Fast-RCNN) based on a deep learning technique. SVM is the classification algorithm and the Scale Invariant Feature Transform (SIFT) is the feature extraction algorithm, together providing an intelligent way of suggesting songs. However, tolerance and compatibility tests still need to be performed on the Fast-RCNN model with respect to the data transfer and protocol used in the music recommendation system.

A doubly truncated Gaussian distribution model, as proposed by Krishna et al. [4], can identify an immobilised person's emotions. The main advantage of this model was its asymmetric distribution, which facilitates the elicitation of EEG signal data in either symmetrical or asymmetrical form. The main feature of this method was the nominal scale of measurements that represented the most prevalent emotional states, such as anger, surprise, sadness, happiness, disgust, and fear.

The EEG signal samples, which have an unbounded range, were restricted to finite limits in order to detect emotions. Nevertheless, the doubly truncated Gaussian distribution model has trouble identifying the neutral mood given the person's surroundings.

Abdel-Basset et al. [5] proposed a supervised dual-channel Spatio-Temporal Deep Human Activity Recognition model (ST-DeepHAR), based on the fusion of inertial sensor data using Long Short-Term Memory (LSTM).

ST-DeepHAR has two channels: the first learns the sequential features of the HAR data, and the second is a modified residual network. ST-DeepHAR offers the best performance and, through fine-tuning, also improves feature selection. It improves the efficiency of Internet of Healthcare Things (IoHT) applications. Nonetheless, for IoHT applications such as device inquiry and radar data streams, generative adversarial networks need to be enhanced to handle heterogeneous HAR.

Wen [18] presented an intelligent music recommendation system that makes use of an Internet of Things architecture and deep learning models. A feature extraction algorithm was used to extract mid-level information from scene photos in order to build the system. The Gabor feature technique was used to select the mid-level features, which make it possible to identify and suggest music suitable for indoor environments. The SVM classifier is used for classification, while the SIFT approach was used to extract the features. The intelligent music recommendation system processes data from complex communication systems. Nevertheless, compatibility and tolerance shortcomings were discovered in testing during the SVM classification.

A novel form of music therapy has been introduced by Byrns et al. [19] to treat people suffering from Alzheimer's disease. Patients receive music therapy through a virtual reality setting that resembles a theatre and in which participants perform for a large audience. In order to lessen the patients' negative emotions, the volunteers were told to carry out memory exercises in the setting of music therapy. These kinds of music therapy have been shown to improve disease-affected people's emotions in a virtual reality setting. Additionally, the patients' pleasant emotions are elevated and their memory recall is enabled by this adaptive music treatment. However, more people from other regions need to have access to this kind of music therapy.

Vall et al. [20] presented a hybrid music recommendation system with two features. It combines a feature vector representation with information from music playlists to carry out collaborative filtering.

Based on collaboration patterns, the hybrid recommendation engine filters out songs that are not relevant to the playlist. The music playlists were drawn from two datasets, Art of the Mix and 8tracks, and preprocessed using collaborative filtering. The hybrid music recommendation system used a trained auto-tagger to predict the audio characteristics of the songs in the playlist collection. Nevertheless, there is still room for improvement in the training rate and prediction time of the hybrid music recommendation system.

IV. RESULTS AND ANALYSIS

The many approaches utilised in music therapy help people manage their stress, anxiety, and depression while also significantly enhancing their physical and emotional well-being.

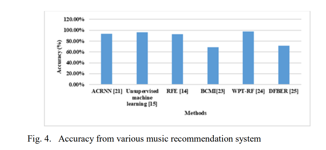

Figure 4 shows a graphical depiction of the accuracy numbers from the current approaches utilised in music recommendation.

A detailed chart of the literature survey, including methodology, advantages, limitations, and performance measures, is presented in Table 1.

Table 1 shows that accuracy is treated as a significant parameter for measuring the effectiveness and efficiency of a music recommendation system.

According to the analysis, the accuracy of music recommendation ranges from 68% to 98%. Nevertheless, this area still needs considerable improvement. Prevalent problems in the current research include irrelevant features, insufficient input data, and limited classification of the subcategories of human emotions, including surprise, anger, and happiness. Future work will therefore focus on ensemble learning in order to advance music selection based on further emotions, such as happiness, anger, and surprise.

The issues encountered when developing music recommendation systems, drawn from the comparative table, are discussed below:

- Because music repetition triggers long-term memories [10], potentially unpleasant feelings, and unwelcome diversions, it has some detrimental effects on stress reduction.

- By gathering patient history data, the music suggestion system can be enhanced with personalised music to aid in patients' well-being and a speedy recovery [9].

- Certain musical genres, along with extremely loud music, may irritate or discomfort certain individuals. This form of music may also trigger strong or negative reactions or evoke unpleasant memories [6].

According to a review of the literature, Brain-Computer Music Interfaces (BCMIs) offer a tool that provides a minimum accuracy of 68% for emotion identification, whereas Wavelet Packet Transform (WPT) Cochlear Filter Bank and Random Forest (RF) Classifier yields a maximum accuracy of 98%.

Conclusion

A music suggestion system helps patients receive mental healthcare, reduces the impact of illness, and detects emotions in people. It also offers music as treatment. This study surveys machine learning-based music recommendation systems for the management of an individual's healthcare. The analysis of relevant works on music recommendation systems includes a taxonomy of music recommendation types and a discussion of machine learning approaches. Lastly, the issues identified in the reviewed publications are stated. Consequently, it seems that machine learning (ML) is a vital new auxiliary tool for music therapy intervention. By discovering potentially predictive components of therapeutic success, machine learning techniques can provide music therapy practitioners with very helpful guidance on music listening.

References

[1] J.S. Rahman, T. Gedeon, S. Caldwell, R. Jones, and Z. Jin, "Towards effective music therapy for mental health care using machine learning tools: human affective reasoning and music genres", Journal of Artificial Intelligence and Soft Computing Research, vol. 11, 2021.

[2] M.T. Quasim, E.H. Alkhammash, M.A. Khan, and M. Hadjouni, "Emotion-based music recommendation and classification using machine learning with IoT Framework", Soft Computing, vol. 25, no. 18, pp. 12249-12260, 2021.

[3] V. Andreu, M. Quadrana, M. Schedl, and G. Widmer, "Order, context and popularity bias in next-song recommendations", International Journal of Multimedia Information Retrieval, vol. 8, no. 2, pp. 101-113, 2019.

[4] N. M. Krishna, K. Sekaran, A. Venkata Naga Vamsi, G.S. Pradeep Ghantasala, P. Chandana, S. Kadry, T. Blažauskas, and R. Damaševičius, "An efficient mixture model approach in brain-machine interface systems for extracting the psychological status of mentally impaired persons using EEG signals", IEEE Access, vol. 7, pp. 77905-77914, 2019.

[5] M. Abdel-Basset, H. Hawash, R. K. Chakrabortty, M. Ryan, M. Elhoseny, and H. Song, "ST-DeepHAR: Deep learning model for human activity recognition in IoHT applications", IEEE Internet of Things Journal, vol. 8, no. 6, pp. 4969-4979, 2020.

[6] V. Doma and M. Pirouz, "A comparative analysis of machine learning methods for emotion recognition using EEG and peripheral physiological signals", Journal of Big Data, vol. 7, no. 1, pp. 1-21, 2020.

[7] S. Markus, "Deep learning in music recommendation systems", Frontiers in Applied Mathematics and Statistics, 44, 2019.

[8] N.V. Kimmatkar and B. Vijaya Babu, "Novel approach for emotion detection and stabilizing mental state by using machine learning techniques", Computers, vol. 10, no. 3, pp. 37, 2021.

[9] S. Hamsa, I. Shahin, Y. Iraqi, and N. Werghi, "Emotion recognition from speech using wavelet packet transform cochlear filter bank and random forest classifier", IEEE Access, vol. 8, pp. 96994-97006, 2020.

[10] D. Ayata, Y. Yaslan, and M. E. Kamasak, "Emotion based music recommendation system using wearable physiological sensors", IEEE Transactions on Consumer Electronics, vol. 64, no. 2, pp. 196-203, 2018.

[11] D. E. Gustavson, P. L. Coleman, J. R. Iversen, H. H. Maes, R.L. Gordon, and M. D. Lense, "Mental health and music engagement: review, framework, and guidelines for future studies", Translational Psychiatry, vol. 11, no. 1, pp. 1-13, 2021.

[12] T. Stegemann, M. Geretsegger, E.P. Quoc, H. Riedl, and M. Smetana, "Music therapy and other music-based interventions in pediatric health care: An overview", Medicines, vol. 6, no. 1, pp. 25, 2019.

[13] S. Siddiqui, R. Nesbitt, M. Zeeshan Shakir, A.A. Khan, A. Ahmed Khan, K. Karam Khan, and Naeem Ramzan, "Artificial neural network (ANN) enabled internet of things (IoT) architecture for music therapy", Electronics, vol. 9, no. 12, 2020.

[14] A. Subasi, T. Tuncer, S. Dogan, D. Tanko, and U. Sakoglu, "EEG-based emotion recognition using tunable Q wavelet transform and rotation forest ensemble classifier", Biomedical Signal Processing and Control, vol. 68, pp. 102648, 2021.

[15] S. M. Sarsam, H. Al-Samarraie, and A. Al-Sadi, "Disease discovery-based emotion lexicon: a heuristic approach to characterise sicknesses in microblogs", Network Modeling Analysis in Health Informatics and Bioinformatics, vol. 9, no. 1, pp. 1-10, 2020.

[16] C. S. Subhadarsinee, S. N. Mohanty, and A. K. Jagadev, "Multimodal trust based recommender system with machine learning approaches for movie recommendation", International Journal of Information Technology, vol. 13, no. 2, pp. 475-482, 2021.

[17] T. Uddin, Md Noor, and C. Bepery, "Framework of dynamic recommendation system for e-shopping", International Journal of Information Technology, vol. 12, no. 1, pp. 135-140, 2020.

[18] X. Wen, "Using deep learning approach and IoT architecture to build the intelligent music recommendation system", Soft Computing, vol. 25, no. 4, pp. 3087-3096, 2021.

[19] B. Alexie, H. Abdessalem, M. Cuesta, M. A. Bruneau, S. Belleville, and C. Frasson, "Adaptive music therapy for alzheimer's disease using virtual reality", in International Journal on Intelligent Tutoring Systems, pp. 214-219, Springer, Cham, 2020.

[20] A. Vall, M. Dorfer, H. Eghbal-Zadeh, M. Schedl, K. Burjorjee, and G. Widmer, "Feature-combination hybrid recommender systems for automated music playlist continuation", User Modeling and User-Adapted Interaction, vol. 29, no. 2, pp. 527-572, 2019.

[21] W. Tao, C. Li, R. Song, J. Cheng, Y. Liu, F. Wan, and X. Chen, "EEG-based emotion recognition via channel-wise attention and self-attention", IEEE Transactions on Affective Computing, 2020.

[22] V. Kasinathan, A. Mustapha, T. S. Tong, M. F. C. A. Rani, and N. A. A. Rahman, "Heartbeats: music recommendation system with fuzzy inference engine", Indonesian J. Electric. Eng. Comput. Sci, vol. 16, pp. 275-282, 2019.

[23] D. Ian, D. Williams, A. Malik, J. Weaver, A. Kirke, F. Hwang, E. Miranda, and S. J. Nasuto, "Personalised, multi-modal, affective state detection for hybrid brain-computer music interfacing", IEEE Transactions on Affective Computing, vol. 11, no. 1, pp. 111-124, 2018.

[24] S. Hamsa, I. Shahin, Y. Iraqi, and N. Werghi, "Emotion recognition from speech using wavelet packet transform cochlear filter bank and random forest classifier", IEEE Access, vol. 8, pp. 96994-97006, 2020.

[25] A. Deger, Y. Yaslan, and M. E. Kamasak, "Emotion based music recommendation system using wearable physiological sensors", IEEE Transactions on Consumer Electronics, vol. 64, no. 2, pp. 196-203, 2018.

[26] Y. P. Pingle and L. K. Ragha, "An in-depth analysis of music structure and its effects on human body for music therapy", Multimed Tools Appl, 2023. https://doi.org/10.1007/s11042-023-17290-w

[27] Y. P. Pingle and L. K. Ragha, "A Review on the use of Machine Learning Techniques in Music Recommendation System for Healthcare Management", 2023 10th International Conference on Computing for Sustainable Global Development (INDIACom), New Delhi, India, 2023, pp. 118-124.

Copyright

Copyright © 2024 Shikha Chaudhary. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET58150

Publish Date : 2024-01-23

ISSN : 2321-9653

Publisher Name : IJRASET
