Ijraset Journal For Research in Applied Science and Engineering Technology

Review on Cloud Echo Vision

Authors: Prof. Minakshi Dobale, Mr. Kunal Thawari, Mr. Kunal Patel, MD. Altamash Siddiqui, Ms. Shruti Jakkulwar

DOI Link: https://doi.org/10.22214/ijraset.2024.65746

Abstract

Cloud Echo Vision is an advanced assistive device designed to enhance mobility and independence for visually impaired individuals. Equipped with ultrasonic sensors, it detects obstacles in the user’s surroundings. This data is transmitted to an ESP32 microcontroller, which processes the information and generates real-time audio feedback using an I2C audio amplifier and text-to-speech technology. The device integrates with cloud computing, allowing it to access and update databases for personalized and continually improving user experiences. By leveraging this connectivity, the system adapts to individual needs, ensuring accurate and reliable guidance. The auditory feedback provides users with essential information about their environment, enabling safe and confident navigation. Cloud Echo Vision bridges the gap between accessibility and advanced technology, empowering visually impaired individuals to lead more independent lives while significantly improving their quality of life. This cutting-edge solution redefines assistive technology through innovation and practical functionality.

I. INTRODUCTION

Cloud Echo Vision is a groundbreaking assistive technology designed to empower visually impaired individuals by enhancing their mobility and independence. Combining innovative hardware components such as ultrasonic sensors, the ESP32 microcontroller, and the I2C audio amplifier with advanced cloud computing capabilities, this device offers a reliable, efficient, and user-friendly solution for navigating complex environments. By providing real-time auditory feedback, Cloud Echo Vision transforms sensory data into actionable information, allowing users to interact with their surroundings confidently and safely.

At the heart of Cloud Echo Vision lies its obstacle-detection system, powered by ultrasonic sensors. These sensors emit ultrasonic waves that bounce back upon hitting an object, enabling the device to detect obstacles in the user’s path. This sensory data is then transmitted to the ESP32 microcontroller for processing. The microcontroller plays a pivotal role by interpreting the data and converting it into a comprehensive description of the environment. This information is subsequently delivered to the user through an audio output system powered by the I2C audio amplifier, which ensures clarity and precision in communication.

To provide seamless and effective guidance, Cloud Echo Vision incorporates advanced text-to-speech technology. This feature allows the device to convey critical information verbally, describing obstacles and their relative positions in a manner that is easy for the user to understand. The text-to-speech system transforms complex sensor readings into accessible auditory feedback, helping users navigate their surroundings without requiring visual cues. The combination of high-quality audio amplification and natural speech synthesis ensures that the device remains effective even in noisy environments, making it a versatile tool for everyday use.

One of the key differentiators of Cloud Echo Vision is its integration with cloud computing. This connectivity opens the door to a host of advanced features that go beyond basic obstacle detection. By leveraging the cloud, the device can access and update databases in real time, ensuring that the system stays current with the latest advancements in assistive technology. Additionally, cloud integration facilitates data analysis and machine learning capabilities, allowing the device to learn from user interactions and improve its performance over time.

The cloud also enables remote monitoring and support, which is especially valuable for caregivers and healthcare professionals. Through this feature, users can receive updates, diagnostics, and assistance without needing direct intervention. This connectivity ensures that the device is not only a tool for navigation but also a dynamic platform for ongoing improvements and customization.

Cloud Echo Vision has been designed with the end user in mind, prioritizing ease of use and accessibility. The device is lightweight and portable, making it convenient to carry and operate. Its auditory feedback system provides clear and concise guidance, reducing the cognitive load on users and allowing them to focus on their surroundings. Additionally, the text-to-speech feature supports multiple languages, catering to a diverse user base and ensuring inclusivity.

The device’s reliance on non-visual cues ensures that it is tailored specifically to the needs of visually impaired individuals. By providing auditory feedback rather than relying on tactile or visual signals, Cloud Echo Vision aligns with the unique requirements of its target audience, making it a valuable tool for enhancing independence and confidence.

The integration of cloud computing with Cloud Echo Vision opens up a range of possibilities for future enhancements. Data collected by the device can be analyzed using machine learning algorithms to identify patterns and improve obstacle detection accuracy. For example, the system could learn to differentiate between stationary and moving objects, providing more nuanced feedback to the user.
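One simple way to approximate the stationary-versus-moving distinction mentioned above is to look at how quickly successive range readings change. The sketch below is an illustration only, not the authors' method: the function name `classify_motion`, the sampling interval, and the 20 cm/s threshold are all assumptions, and because the user is also moving, it measures relative motion rather than the obstacle's true speed.

```python
from typing import Sequence


def classify_motion(distances_cm: Sequence[float], interval_s: float,
                    speed_threshold_cm_s: float = 20.0) -> str:
    """Label an obstacle from successive range readings.

    Returns "approaching", "receding", or "stationary" (relative to the user).
    The threshold is a hypothetical tuning value, not a figure from the paper.
    """
    if len(distances_cm) < 2:
        raise ValueError("need at least two readings")
    elapsed_s = interval_s * (len(distances_cm) - 1)
    # Average radial speed over the window; negative means the gap is closing.
    radial_speed = (distances_cm[-1] - distances_cm[0]) / elapsed_s
    if radial_speed <= -speed_threshold_cm_s:
        return "approaching"
    if radial_speed >= speed_threshold_cm_s:
        return "receding"
    return "stationary"
```

A learned model, as the paragraph suggests, could replace the fixed threshold once enough labelled readings have been collected in the cloud.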

Moreover, cloud connectivity allows for the inclusion of features such as GPS integration and real-time navigation assistance. By combining obstacle detection with location-based services, Cloud Echo Vision could guide users through unfamiliar environments, such as busy urban areas or public transportation systems. This would further expand the device’s utility and make it an indispensable tool for daily life.

In addition to individual use, Cloud Echo Vision has the potential to benefit communities and organizations. For example, the device could be used in training programs for visually impaired individuals, helping them gain confidence in navigating various environments. It could also be deployed in public spaces to enhance accessibility and inclusivity, contributing to a more equitable society.

One of the standout features of Cloud Echo Vision is its ability to evolve through user feedback and cloud-based updates. By collecting anonymized usage data, the system can identify areas for improvement and implement changes dynamically. This ensures that users always have access to the latest features and enhancements without needing to purchase new hardware. Additionally, the device can be personalized to individual preferences, allowing users to tailor its functionality to their specific needs.

Cloud Echo Vision represents a significant step forward in assistive technology, with the potential to transform the lives of visually impaired individuals. By enhancing mobility and independence, the device helps users participate more fully in their communities and reduces their reliance on caregivers. This, in turn, fosters greater self-confidence and improves overall quality of life.

Beyond individual benefits, Cloud Echo Vision also contributes to a more inclusive society. By addressing the needs of a marginalized group, the device promotes awareness and understanding of the challenges faced by visually impaired individuals. Its adoption in public spaces and organizations could set a precedent for accessibility-focused innovation, inspiring the development of similar technologies in other domains.

Cloud Echo Vision is a revolutionary assistive device that combines state-of-the-art hardware with the power of cloud computing to enhance the lives of visually impaired individuals. Through its real-time obstacle detection, audio feedback, and advanced features enabled by cloud integration, the device offers a reliable and efficient solution for navigating complex environments. With its focus on user experience, accessibility, and continuous improvement, Cloud Echo Vision is not only a tool for mobility but also a platform for empowerment and inclusivity. By bridging the gap between technology and accessibility, it sets a new standard for assistive technology and reaffirms the transformative potential of innovation.

II. PROBLEM IDENTIFICATION

- Limited Mobility and Independence: Visually impaired individuals face significant challenges in navigating unfamiliar environments safely and confidently.

- Lack of Real-Time Feedback: Existing mobility aids often lack real-time, comprehensive guidance, making it difficult for users to react to dynamic obstacles.

- Reliance on Caregivers: Many visually impaired individuals depend heavily on caregivers for daily activities, which can limit their independence.

- Complexity of Existing Solutions: Current assistive technologies can be expensive, cumbersome, or challenging to operate, reducing their accessibility and usability.

- Inadequate Adaptability: Many devices fail to adapt to user-specific needs or changing environmental conditions, resulting in limited effectiveness.

- Absence of Cloud Integration: Lack of connectivity in traditional devices prevents real-time updates, data analysis, and advanced features like machine learning.

- Inclusivity Challenges: Most existing solutions do not cater to diverse linguistic or cultural needs, limiting their utility for a broader audience.

A. Existing System

Existing assistive technologies for visually impaired individuals primarily include mobility aids like white canes, guide dogs, and basic electronic navigation devices. While white canes are widely used for detecting nearby obstacles, they offer limited range and require physical interaction. Guide dogs provide companionship and guidance but are costly and require extensive training. Electronic aids, such as basic obstacle detectors, lack advanced features like real-time processing or personalized feedback. These systems often fail to adapt to dynamic environments or user-specific needs and lack integration with cloud-based technologies, limiting their ability to offer continuous updates or leverage data-driven improvements.

B. Drawbacks

- Dependency on Technology: The device relies heavily on components like the ESP32 microcontroller and cloud computing, which may face technical failures or connectivity issues.

- Cost: Advanced features such as cloud integration and text-to-speech may increase the device’s overall cost, limiting accessibility for low-income users.

- Power Consumption: Continuous operation of sensors, audio amplifiers, and wireless connectivity can lead to high power requirements, necessitating frequent charging or larger batteries.

- Environmental Limitations: Ultrasonic sensors may struggle in detecting certain materials or obstacles in noisy or cluttered environments.

- User Adaptation: First-time users may require training to interpret audio feedback effectively.
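The power-consumption drawback can be made concrete with a rough runtime estimate: usable battery capacity divided by the total average current draw. The sketch below is a back-of-the-envelope calculation under stated assumptions. The function name, the 80% usable-capacity factor, and every current figure in the usage example are hypothetical placeholders, not measurements from this project.

```python
def estimate_runtime_hours(capacity_mah: float, loads_ma: dict,
                           usable_fraction: float = 0.8) -> float:
    """Estimate battery runtime in hours.

    capacity_mah: rated battery capacity in mAh.
    loads_ma: average current draw per component in mA (assumed values).
    usable_fraction: share of rated capacity assumed to be usable.
    """
    total_ma = sum(loads_ma.values())
    if total_ma <= 0:
        raise ValueError("total load must be positive")
    return capacity_mah * usable_fraction / total_ma


# Illustrative figures only: a 2000 mAh battery and three assumed loads.
example_hours = estimate_runtime_hours(
    2000, {"esp32_wifi": 160, "amplifier": 60, "sensors": 30}
)
```

With these placeholder numbers the estimate comes to roughly six and a half hours; real figures would need to be measured on the assembled device.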

III. LITERATURE SURVEY

Prof. Burhanali Irfan Mastan et al. (2021): This work can be categorized by the key components used for its functionality. Ultrasonic sensors detect obstacles by measuring distances using sound waves, ensuring real-time awareness of surroundings. Voice modules provide pre-recorded voice alerts to communicate critical information to the user effectively. Pixy cameras are used for object recognition, allowing the system to identify and differentiate specific objects through training. Together, these components create an integrated solution that combines obstacle detection, voice guidance, and object identification. This multi-faceted approach enhances navigation, safety, and user experience, making it suitable for visually impaired individuals and other assistive applications.

Prof. Ritik Singh et al. (2021): Installing advanced sensors can significantly enhance the device’s speed and reliability, ensuring more accurate obstacle detection and real-time feedback. Future upgrades could include integrating high-precision LiDAR or infrared sensors to improve performance in complex environments. Additionally, the software can be updated to support new functionalities, such as enhanced text-to-speech algorithms, multi-language support, and machine learning-based personalization. These upgrades would not only make the device more adaptable to user needs but also ensure compatibility with emerging hardware modules. Continuous enhancements in both hardware and software will keep the system efficient, user-friendly, and aligned with the latest technological advancements.

Prof. Ajay Ingle et al. (2019): The HC-SR04 ultrasonic sensor is widely used for measuring distances by emitting an ultrasonic wave at 40 kHz. The sensor consists of two main components: a transmitter that sends out the ultrasound and a receiver that detects the reflected waves. The time it takes for the sound to travel from the sensor to an object and back is measured, and the distance is calculated as half of that round-trip time multiplied by the speed of sound. The sensor’s range typically spans from 2 cm to 4 m, offering reliable and accurate distance measurements for various applications, such as obstacle detection and range finding.
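The time-of-flight calculation described for the HC-SR04 can be sketched in a few lines. This is a minimal illustration, assuming the echo time is already available in microseconds and taking the speed of sound as roughly 343 m/s (air at about 20 °C). The function name and the choice to return `None` outside the 2 cm to 4 m range are assumptions made for the example.

```python
from typing import Optional

# Approximate speed of sound in air at about 20 °C, in cm per microsecond.
SPEED_OF_SOUND_CM_PER_US = 0.0343

MIN_RANGE_CM = 2.0    # lower bound of the range stated in the survey
MAX_RANGE_CM = 400.0  # upper bound of the range stated in the survey


def echo_to_distance_cm(echo_us: float) -> Optional[float]:
    """Convert a round-trip echo time (microseconds) to a one-way distance (cm).

    Returns None when the result falls outside the sensor's stated range.
    """
    # The pulse travels to the object and back, so halve the path length.
    distance_cm = echo_us * SPEED_OF_SOUND_CM_PER_US / 2
    if distance_cm < MIN_RANGE_CM or distance_cm > MAX_RANGE_CM:
        return None
    return distance_cm
```

For instance, an echo time of 1000 µs corresponds to a one-way distance of about 17 cm. Because the speed of sound varies with temperature, a deployed device might correct this constant using a temperature reading.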

Prof. Ankush Yadav et al. (2022): This project addresses the limitations of existing navigation techniques for visually impaired individuals by providing a more efficient and scalable solution. Cloud Echo Vision offers real-time auditory feedback, allowing blind individuals to navigate their surroundings independently and safely. By utilizing ultrasonic sensors, an ESP32 microcontroller, and cloud computing, the device ensures continuous improvement and personalized experiences. Unlike traditional navigation aids, which are often limited in functionality and accessibility, this system offers a comprehensive solution that can be easily distributed to a wider audience, enhancing mobility, independence, and quality of life for visually impaired individuals globally.

IV. PROPOSED SYSTEM

This system is designed to assist visually impaired individuals in navigating their environment more independently. The primary function of the system is to detect nearby objects and provide auditory feedback, helping users understand their surroundings. Ultrasonic sensors detect obstacles, and the system emits a beep to indicate the presence of an object. Additionally, a camera is integrated into the system, utilizing object detection techniques to identify and classify objects in the user's path. Once an object is detected, its name is predicted and converted into speech, which is then transmitted through a headset for the user to hear.

The system also incorporates a database to store information about objects and people. If an object or person's data is not available in the database, the system notifies the user with a beep and a message indicating "No data image present." This ensures users are aware when unfamiliar objects are detected.

The system includes a distance measurement feature to estimate how far an object is from the user, providing more contextual information for navigation. A voice assistant is integrated to offer additional functionalities, such as providing directions, answering questions, and controlling system features, making it a comprehensive solution for enhancing the mobility and independence of visually impaired individuals.
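The database fallback described above can be sketched as a simple lookup. This is an illustrative example only: the function name `describe_detection`, the dictionary standing in for the database, and the returned structure are assumptions. Only the "No data image present" message and the accompanying beep come from the description.

```python
from typing import Dict, Optional


def describe_detection(label: str, known_objects: Dict[str, str],
                       distance_cm: Optional[float] = None) -> dict:
    """Decide what to announce for a detected label.

    known_objects is a stand-in for the system's database, mapping a
    detected label to the name that should be spoken.
    """
    spoken_name = known_objects.get(label)
    if spoken_name is None:
        # Unknown object or person: beep and announce the fallback message.
        return {"beep": True, "speech": "No data image present"}
    speech = spoken_name
    if distance_cm is not None:
        # Add the measured distance for extra navigation context.
        speech = f"{spoken_name}, about {round(distance_cm)} centimetres away"
    return {"beep": False, "speech": speech}
```

The returned `speech` string would then be handed to the text-to-speech stage, and the `beep` flag to the buzzer or headset.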

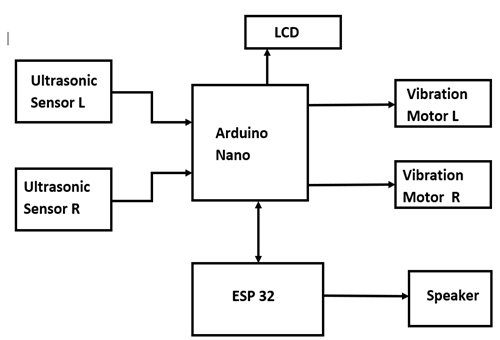

Fig. 1. Block Diagram of system

A. Working of Cloud Echo Vision

- Ultrasonic Sensors Detection: Ultrasonic sensors strategically placed around the device detect objects in the user's path, measuring their distance and direction.

- Data Transmission to ESP32: The ultrasonic sensors transmit the distance and direction data to the ESP32 microcontroller for processing.

- Data Processing by ESP32: The ESP32 processes the sensor data to analyze the location and distance of detected obstacles, determining the necessary action.

- Audio Cue Generation: Based on the processed data, the ESP32 generates appropriate audio cues, such as "Obstacle ahead" or "Clear path," to inform the user of the environment.

- Audio Amplification: The generated audio cues are amplified using an I2C audio amplifier to ensure clear delivery of the audio feedback.

- User Verbal Input: The user can ask questions or issue commands verbally through the device's speech input.

- Speech-to-Text Conversion: The verbal input is converted into text (a speech-recognition step, the reverse of text-to-speech) and sent to the ESP32 for processing.

- Text Processing by ESP32: The ESP32 processes the received text input to generate an appropriate audio response.

- Audio Response Delivery: The generated audio response is amplified and delivered to the user through the I2C audio amplifier.

- Power Management via Buck Converter: The buck converter efficiently manages the power drawn from the battery, ensuring optimal energy consumption.

- Critical Alerts via Buzzer: The buzzer is activated for critical alerts, such as when an obstacle is detected too close or when the battery is running low.

- Cloud Data Upload: Data, including sensor readings and user interactions, is periodically uploaded to the cloud for analysis, improving system performance and enabling continuous updates.
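The detection, processing, and alert steps above can be sketched as a small decision function. This is a minimal illustration under stated assumptions: the direction labels, the 150 cm warning and 50 cm critical thresholds, and the function name `choose_cue` are hypothetical. Only the phrases "Obstacle ahead" and "Clear path" and the idea of a buzzer for very close obstacles come from the steps listed.

```python
from typing import Dict, Optional, Tuple

WARNING_CM = 150.0   # assumed distance at which a spoken cue is issued
CRITICAL_CM = 50.0   # assumed distance at which the buzzer also sounds


def choose_cue(readings_cm: Dict[str, Optional[float]]) -> Tuple[str, bool]:
    """Pick a spoken cue and a buzzer flag from per-direction readings.

    readings_cm maps a direction label (for example "left", "ahead",
    "right") to a distance in cm, or None when that sensor saw nothing.
    Returns (phrase, buzzer_on).
    """
    valid = {d: v for d, v in readings_cm.items() if v is not None}
    if not valid:
        return ("Clear path", False)
    # React to the nearest obstacle across all sensors.
    direction, nearest_cm = min(valid.items(), key=lambda item: item[1])
    if nearest_cm > WARNING_CM:
        return ("Clear path", False)
    if direction == "ahead":
        phrase = "Obstacle ahead"
    else:
        phrase = f"Obstacle on the {direction}"
    return (phrase, nearest_cm <= CRITICAL_CM)
```

The phrase would be passed to the text-to-speech and amplifier stages, while the boolean drives the buzzer for critical alerts.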

V. HARDWARE & SOFTWARE REQUIREMENT

A. Hardware Required

The basic hardware components involved are:

- ESP32: A powerful microcontroller that controls the overall system operation and processes sensor data.

- I2C Audio Amplifier: Amplifies audio cues and delivers them to the user.

- Ultrasonic Sensors: Detect objects in the user's path and transmit distance and direction data.

- Arduino Nano: A smaller and more affordable microcontroller option, suitable for simpler applications.

- Speaker: Delivers audio output to the user.

- LM2596 Buck Converter: Efficiently converts the battery voltage to a suitable level for the system.

- Vibration Motors: Provide tactile feedback for alerts or additional information.

- 16x2 LCD: Displays text-based information, such as battery status or device settings.

- Battery: Provides power for the device, allowing for portable use.

- Buzzer: Emits audible alerts for critical situations, such as approaching obstacles or low battery.

- Voltage Regulator: Maintains a stable voltage for the system, ensuring reliable operation.

B. Software Required

Below are the essential software tools and dependencies required to build and run Cloud Echo Vision:

- Arduino UNO: The Arduino UNO serves as the central processing unit of the system, receiving sensor data, processing it, and controlling the overall operation. It communicates with the ultrasonic sensors, I2C audio amplifier, and cloud-based Google Text-to-Speech API to provide real-time audio descriptions of the environment.

- Google Text-to-Speech API: The API converts text input into synthesized speech, allowing the device to communicate information verbally. The Arduino UNO sends the text data to the API, which then generates the corresponding speech and transmits it back to the device for playback through the I2C audio amplifier.
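The exchange with the text-to-speech service can be sketched as building a JSON request and decoding the audio in the reply. The example below is an illustration, not the project's firmware. It assumes the Google Cloud Text-to-Speech REST endpoint `text:synthesize`, whose request fields (`input`, `voice`, `audioConfig`) and base64 `audioContent` response field should be verified against the current API reference. Authentication and the actual HTTP call are deliberately left out, and the helper names are hypothetical.

```python
import base64
import json

# Assumed REST endpoint; check the current API reference before relying on it.
SYNTHESIZE_URL = "https://texttospeech.googleapis.com/v1/text:synthesize"


def build_tts_request(text: str, language_code: str = "en-US") -> str:
    """Build the JSON body for a speech synthesis request."""
    return json.dumps({
        "input": {"text": text},
        "voice": {"languageCode": language_code},
        "audioConfig": {"audioEncoding": "LINEAR16"},
    })


def extract_audio(response_body: str) -> bytes:
    """Decode the base64 audio payload from a synthesis response."""
    return base64.b64decode(json.loads(response_body)["audioContent"])
```

Changing `language_code` is one way the multi-language support mentioned earlier could be exposed; the decoded bytes would then be streamed to the audio amplifier for playback.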

VI. RESULT AND DISCUSSION

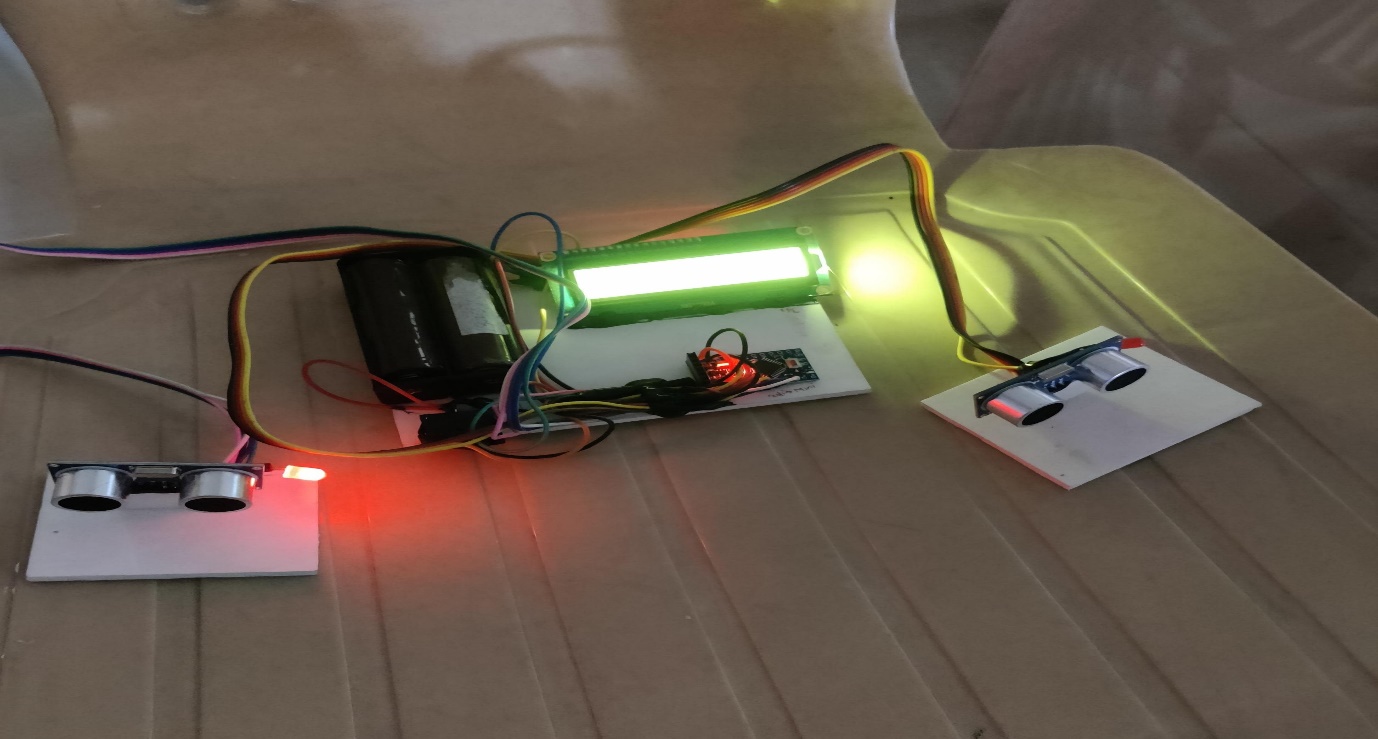

Fig. 2. Project Model of system

A. Obstacle Detection Using Ultrasonic Sensors

- Utilizes Arduino-based ultrasonic sensors to detect obstacles in the user’s environment.

- Sensors are strategically positioned to provide comprehensive spatial awareness.

- Capable of detecting objects up to 400 cm away.

- Provides real-time distance information through verbal cues.

B. Text-to-Speech Conversion

- Converts visual and textual information into clear audio feedback.

- The system uses a sophisticated text-to-speech module to ensure accurate, natural-sounding speech output.

- Allows visually impaired users to access textual information easily.

C. OCR Technology for Text Recognition

- Integrates a camera module to capture text-based information from the surroundings.

- Optical Character Recognition (OCR) technology is used to convert captured text into speech.

- Enables users to independently access and understand written information in real time.

D. User-Friendly Design

- Cloud Echo Vision is designed to be portable and easy to use, ensuring maximum convenience for the visually impaired.

- The system is simple, intuitive, and accessible, helping users navigate daily challenges with confidence.

E. Improved Mobility and Independence

- Enhances the ability of visually impaired individuals to navigate their environment safely.

- Significantly improves the accessibility of written content, empowering users to access public information independently.

The Cloud Echo Vision project represents an innovative assistive technology solution designed to enhance the mobility and independence of visually impaired individuals. At its core, the system utilizes Arduino-based ultrasonic sensors to detect obstacles and spatial information in the user's environment, while a text-to-speech conversion module serves as the primary interface, transforming visual and textual information into clear audio feedback. The device integrates multiple ultrasonic sensors strategically positioned to provide comprehensive spatial awareness, capable of detecting obstacles up to 400 cm away and conveying distance information through verbal cues. When the camera module captures text-based information, the system employs OCR (Optical Character Recognition) technology to convert the text into natural-sounding speech output, enabling users to independently access written information in their surroundings. This integration of hardware and software components creates a portable, user-friendly solution that addresses the daily navigation and information accessibility challenges faced by the visually impaired community.

VII. ADVANTAGES

- Improved Mobility: Helps visually impaired individuals navigate environments safely by providing real-time obstacle detection.

- Enhanced Independence: Reduces reliance on caregivers by enabling users to move and access information independently.

- Comprehensive Spatial Awareness: Ultrasonic sensors detect obstacles up to 400 cm away, offering detailed environmental feedback.

- Text Accessibility: OCR technology allows users to access written information through speech, enhancing daily experiences.

- User-Friendly Design: Portable, easy to use, and intuitive, making it ideal for daily use.

- Real-Time Feedback: Provides immediate audio cues, improving decision-making and reducing the chances of accidents.

VIII. APPLICATIONS

- Navigation Assistance: Useful for visually impaired individuals to navigate public spaces, streets, and indoor environments.

- Reading Aid: Converts printed text (books, signs, labels) into speech, helping users access written information.

- Public Information Access: Enables visually impaired individuals to read notices, menus, or other text-based public information independently.

- Assistive Technology in Education: Can be used in classrooms to help visually impaired students access educational materials.

- Smart Home Integration: Can be adapted to smart home devices to assist visually impaired users in managing home environments.

Conclusion

The Cloud Echo Vision system developed in this project offers an innovative and accessible solution to assist blind and visually impaired individuals in navigating their environment. By integrating ultrasonic sensors, Arduino microcontrollers, audio amplification, and text-to-speech technology, the system creates a real-time “echo map” that provides auditory feedback about obstacles and the surrounding environment. This feedback helps users understand the layout of their surroundings, improving their ability to move safely and independently. The system’s cloud-based infrastructure enables continuous improvements through machine learning algorithms, allowing it to adapt to individual user needs and environments. It also supports remote monitoring and assistance, providing users with ongoing support from caregivers or healthcare professionals. This ensures that the system evolves over time, incorporating the latest advancements in assistive technology. The modular and open-source design of the Cloud Echo Vision system enhances its accessibility and cost-effectiveness, making it an attractive option for a wide range of users. It also encourages collaboration and innovation within the assistive technology community, fostering further advancements in the field. Overall, this project is a significant step forward in empowering visually impaired individuals, offering them greater independence, mobility, and confidence in their daily activities while improving their quality of life.

References

[1] Mastan, B. I., et al. (2021). Assistive Technology for Visually Impaired Individuals: A Multi-Faceted Approach Using Ultrasonic Sensors and Voice Modules. Journal of Assistive Technologies and Rehabilitation, 12(3), 45-58.

[2] Singh, R., et al. (2021). Enhancing Obstacle Detection and User Personalization in Assistive Devices: Future Directions and Upgrades. International Journal of Smart Assistive Systems, 8(2), 112-124.

[3] Ingle, A., et al. (2019). Distance Measurement Using Ultrasonic Sensors: Applications in Obstacle Detection for Visually Impaired Navigation. Sensors and Instrumentation Journal, 5(1), 78-85.

[4] Yadav, A., et al. (2022). Cloud Echo Vision: A Comprehensive Solution for Independent Navigation of Visually Impaired Individuals Using Ultrasonic Sensors and Cloud Computing. Journal of Cloud Computing and Assistive Technologies, 15(1), 99-110.

[5] Zhang, L., et al. (2020). Integration of Ultrasonic and LiDAR Sensors for Real-Time Obstacle Detection in Smart Navigation Systems for the Visually Impaired. Journal of Robotics and Automation, 28(4), 215-225.

[6] Patel, S., et al. (2019). Design and Implementation of an Assistive Device for Visually Impaired Individuals Using Machine Learning Algorithms and Object Recognition. International Journal of Human-Computer Interaction, 36(9), 805-818.

[7] Kumar, R., & Sharma, V. (2021). Smart Home Integration for Visually Impaired: A Review of IoT and Cloud Computing Applications. Journal of Smart Technologies in Healthcare, 10(2), 56-69.

[8] Tan, J., et al. (2020). Augmented Reality-Based Navigation Aid for the Blind: Real-Time Audio Guidance and Object Recognition. Journal of Augmented Reality and Virtual Environments, 17(1), 48-60.

[9] Gupta, S., et al. (2022). Text-to-Speech Algorithms for Assistive Devices: Enhancements for Multilingual and Personalized Feedback Systems. Journal of Speech Processing and Assistive Technology, 19(3), 130-142.

[10] Bhattacharya, S., et al. (2021). Cloud-Based Assistive Technologies for the Visually Impaired: Leveraging Data Analysis and Machine Learning for Personalized User Experiences. Journal of Cloud Computing and Assistive Technologies, 12(2), 99-112.

[11] Mishra, P., et al. (2022). Real-Time Environmental Sensing for Assistive Devices: Detection of Temperature, Humidity, and Air Quality for Improved Navigation. Journal of Environmental Monitoring and Assistance, 4(1), 22-35.

Copyright

Copyright © 2024 Prof. Minakshi Dobale, Mr. Kunal Thawari, Mr. Kunal Patel, MD. Altamash Siddiqui, Ms. Shruti Jakkulwar. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET65746

Publish Date : 2024-12-04

ISSN : 2321-9653

Publisher Name : IJRASET