Ijraset Journal For Research in Applied Science and Engineering Technology

Fake News Detection

Authors: Avishek Agarwala, Yousuf Mahmud Fahim, Mr. Nitin Jain

DOI Link: https://doi.org/10.22214/ijraset.2024.59773

Abstract

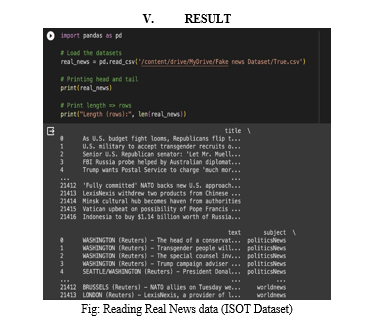

Fake news is a global problem that continues to grow. Many people encounter fake news and, as a result, form new and false perspectives on a subject. News websites and social platforms therefore need a sustainable AI solution. Most existing work in this area relies on older neural network classifiers. Applying a more recent model to a well-regarded dataset such as the ISOT dataset can be a sustainable approach to fake news detection. BERT (Bidirectional Encoder Representations from Transformers) dynamically computes how much weight each input and output element should receive. Combining this technology with existing techniques will help build a sustainable product for controlling fake news on the internet. It will help to detect, eliminate, and, as far as possible, prevent the threats caused by fake news.

I. INTRODUCTION

Fake news, also known as disinformation or misinformation, is false or misleading information that is presented as if it were true. It is often spread through social media and other online platforms, and it can have a significant negative impact on society. Fake news can be used to manipulate public opinion, sow discord, and undermine trust in institutions. It can also have a negative impact on individuals, leading to them making poor decisions or even putting their lives at risk. The spread of fake news has become a major problem in recent years, and there is a growing need for effective methods of detecting and combating it.

In the digital age, where information travels swiftly and boundlessly, the authenticity of news is constantly under siege. Fake news, with its ability to spread rapidly and undetected, has become a significant challenge, eroding trust in the information we encounter daily. Amid this tumult, a powerful technology has emerged: BERT (Bidirectional Encoder Representations from Transformers). BERT, an advanced natural language processing model, can capture language nuances and contextual intricacies, making it a formidable tool in the battle against misinformation.

BERT is pre-trained on vast amounts of text data and can be fine-tuned for specific NLP tasks like text classification, named entity recognition, question answering, and more. During pre-training, BERT learns to predict missing words in a sentence, gaining a deep understanding of language semantics and context. This pre-trained knowledge can then be adapted and fine-tuned for specific tasks, making BERT a versatile and powerful tool in natural language processing applications.
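
The "predict missing words" objective described above can be sketched in a few lines of Python. This is a simplified illustration only: the function name `mask_tokens`, the example sentence, and the whitespace tokenization are assumptions made here, and the original BERT recipe is more involved (it masks about 15% of tokens and sometimes substitutes a random or unchanged token instead of `[MASK]`).

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Hide some tokens and remember the originals as prediction targets.

    Returns (masked_tokens, labels). labels[i] holds the original token
    where a mask was applied and None everywhere else.
    """
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    masked, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK)   # the model only sees the placeholder
            labels.append(tok)    # ...and is trained to recover this word
        else:
            masked.append(tok)
            labels.append(None)   # no loss is computed at this position
    return masked, labels

# Hypothetical example sentence, split on whitespace for simplicity.
tokens = "the senator denied the report on friday".split()
masked, labels = mask_tokens(tokens, mask_prob=0.3, seed=1)
print(masked)
print(labels)
```

During pre-training, the model receives `masked` and is scored on how well it recovers the non-`None` entries of `labels`, using the words on both sides of each gap.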

II. RELATED WORK

A review of fake news detection [5] classifies different types of false information, such as fake news, rumors, clickbait, satire, and hoaxes, with real-life examples. It lists the actors involved in the whole process of fake news propaganda and proposes a three-phase detection model based on the time of detection, together with four different taxonomies, to provide a roadmap for fake news detection. The 3T formula provides a typology, with categories such as misinformation and disinformation, to determine how harmful a news item is, which type of fake news it is, and what its intention is. The time of detection is also surveyed in terms of early, mid-stage, and late detection. The taxonomy of fake news covers how it is presented (image, audio, video, links) and how information is categorized, in order to see which machine learning process has to be applied on a platform to detect each category of fake news.

In this paper, the authors explain fake news detection techniques on social media and how a taxonomy of fake news can be organized by data, feature, application, and model. They identify the psychology behind fake news on news portals and in its social context, and examine how fake news diffusion evolves around applications and how threat decisions are evaluated. Their techniques for recognizing fake news center on Naive Bayes classifiers, support vector machines, and semantic analysis of textual content. The textual content is evaluated at the content level, the user level (based on the utility of users), and the social level. The proposed methods are manual fact-checking and automatic fact-checking for content-based and knowledge-based fake news. Linguistic, visual, social-context, and network-based fake news are also taken into consideration.

In their work on fake news detection, Z Khanam et al. 2021 [4] show how different machine learning approaches can be used. Approaches such as Linear Regression, Random Forest, XGBoost, Naive Bayes classification, K-Nearest Neighbors, Decision Tree, and Support Vector Machine are compared on the benchmark dataset "Liar". The core idea is to compare all the approaches and see which works better on the same preprocessed Liar plus dataset. They use common measures such as confusion matrices and the accuracy of fake message detection to evaluate the approaches on the Liar dataset. The approaches reach around 70-76% accuracy overall, with XGBoost performing better than the others at 76%.

In this paper, the authors address the problem of websites that contain fake news and aim to filter them out so that users avoid being lured by clickbait. The work includes a tool that computes the attributes and produces clickbait data files. They use the WEKA machine learning toolkit to validate website titles and then correlate the attributes with WEKA classifiers, including BayesNet, Logistic, Random Tree, and Naive Bayes. The classifier results are evaluated by precision, recall, F-measure, and ROC. The collected data is focused on fake news, clickbait ads, and viral social media topics.
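
The evaluation measures named in the studies above (confusion matrix, accuracy, precision, recall, F-measure) can be computed from predicted and true labels as in the following minimal Python sketch. The label vectors are invented for illustration and are not results from any of the cited papers; the function names and the choice of `0` as the "fake" class are assumptions made here.

```python
def confusion_counts(y_true, y_pred, positive):
    """Count true/false positives and negatives for one class of interest."""
    tp = fp = tn = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive and truth == positive:
            tp += 1
        elif pred == positive:
            fp += 1
        elif truth == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn

def evaluate(y_true, y_pred, positive):
    """Accuracy, precision, recall and F1, guarding against empty denominators."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred, positive)
    total = tp + fp + tn + fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

# Made-up labels: 1 = real, 0 = fake; "fake" is treated as the positive class.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
scores = evaluate(y_true, y_pred, positive=0)
print(scores)  # every metric is 0.75 for this toy data
```

Accuracy alone can hide poor performance on the minority class, which is why precision, recall, and F-measure are usually reported alongside it.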

In this work, the authors explain how machine learning approaches perform in terms of precision, recall, and F1-score. The approaches are logistic regression (LR), linear SVM (LSVM), multilayer perceptron, and K-nearest neighbors (KNN); ensemble learners, namely random forest (RF), voting classifier (RF, LR, KNN), voting classifier (LR, LSVM, CART), bagging classifier (decision trees), boosting classifier (AdaBoost), and boosting classifier (XGBoost); and benchmark algorithms (Perez-LSVM, Wang-CNN, Wang-Bi-LSTM). They are evaluated on four datasets: the ISOT Fake News Dataset, Kaggle [24] and Kaggle [25] datasets, and a combination of these. On these datasets, XGBoost again performs better than all the other classifiers. The authors also propose that graph theory and machine learning techniques can be employed to detect key sources in the spread of fake news.

III. METHODOLOGY

1. Logistic Regression: Logistic regression is a statistical method used for binary classification, which means it's designed to predict the probability of an instance belonging to one of two classes. The word "logistic" indicates the logistic function, which is a key component of this model. The main goal of logistic regression is to model the relationship between a set of independent variables (features) and the probability of a particular outcome occurring. The logistic regression model uses the logistic function (also called the sigmoid function) to transform a linear combination of input features into a value between 0 and 1.

2. Linear SVM: A Linear Support Vector Machine (Linear SVM) is a powerful supervised machine learning algorithm primarily used for binary classification tasks. The primary objective of a Linear SVM is to find a hyperplane that maximizes the margin, which is the distance between the hyperplane and the nearest data points of each class. Support vectors, the data points closest to the hyperplane, play a crucial role in defining the margin and decision boundary. Linear SVM is particularly effective in high-dimensional spaces, including cases where the number of features exceeds the number of samples. Because the decision boundary depends only on the support vectors, points far from the margin do not affect it. For cases where data is not perfectly separable, a "soft margin" approach allows for some misclassifications. The algorithm can be extended to handle non-linear relationships through the use of the kernel trick, transforming the input space. Linear SVM finds applications in text classification, image recognition, and various fields with high-dimensional data.

3. KNN: K-Nearest Neighbors (KNN) is a simple yet effective supervised machine learning algorithm used for both classification and regression tasks. In KNN, predictions are based on the majority class or average of the K nearest neighbors to a given data point in feature space. The choice of K determines the number of neighbors considered. KNN relies on the assumption that similar instances in feature space have similar labels. The algorithm is non-parametric and instance-based, meaning it doesn't make explicit assumptions about the underlying data distribution. KNN is sensitive to the choice of distance metric, commonly using Euclidean distance. While computationally efficient during training, KNN can be computationally expensive during prediction, especially with large datasets. It is a lazy learner, as it postpones model building until prediction time. KNN is versatile and finds applications in various domains such as image recognition, recommendation systems, and medical diagnosis. The performance of KNN can be influenced by the appropriate choice of K and the feature scaling of the dataset.

4. LSTM: Long Short-Term Memory (LSTM) is a type of recurrent neural network (RNN) architecture designed to address the vanishing gradient problem, allowing for the effective modeling of sequential and time-series data. LSTMs have a more complex memory cell structure compared to traditional RNNs, incorporating gates to control the flow of information within the network. These gates enable LSTMs to selectively store, read, and remove information from their memory cells, making them well-suited for capturing long-term dependencies in sequences. LSTMs are particularly effective in tasks such as natural language processing, speech recognition, and time-series prediction. The architecture mitigates the challenges of training deep networks on sequential data by maintaining a constant error flow. LSTMs excel in learning patterns over extended time lags, making them adept at capturing temporal dependencies in diverse applications.

The ability to retain information over long sequences and handle vanishing gradient issues has contributed to LSTMs' widespread adoption in various fields, demonstrating state-of-the-art performance in numerous sequential data tasks.
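
For the logistic regression item above, the step from a linear combination of features to a probability can be sketched as follows. The weights, bias, and feature values are made-up numbers for illustration, not parameters learned in this work.

```python
import math

def sigmoid(z):
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def predict_proba(weights, bias, features):
    """Probability of the positive class for one example."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return sigmoid(z)

# Hypothetical model with two features.
weights = [1.5, -2.0]
bias = 0.25
features = [1.0, 0.5]

p = predict_proba(weights, bias, features)  # sigmoid(0.75)
label = 1 if p >= 0.5 else 0                # threshold at 0.5
print(round(p, 4), label)
```

Training would adjust `weights` and `bias` to maximize the likelihood of the labeled examples; only the prediction step is shown here.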
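
For the Linear SVM item above, the soft-margin idea can be illustrated with a small sub-gradient descent on the hinge loss. This is a sketch under stated assumptions: the six two-dimensional points, the hyperparameters (`lam`, `lr`, `epochs`), and the function names are all invented here, and production libraries use more refined solvers.

```python
def decision(w, b, x):
    """Signed score of x relative to the hyperplane w.x + b = 0."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=200):
    """Minimise lam/2 * ||w||^2 + hinge loss; labels must be -1 or +1."""
    w = [0.0] * len(X[0])
    b = 0.0
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            if yi * decision(w, b, xi) < 1:
                # Inside the margin or misclassified: push the boundary away.
                w = [wj - lr * (lam * wj - yi * xj) for wj, xj in zip(w, xi)]
                b += lr * yi
            else:
                # Correct with enough margin: only the regulariser acts.
                w = [wj - lr * lam * wj for wj in w]
    return w, b

def predict(w, b, x):
    return 1 if decision(w, b, x) >= 0 else -1

# Toy, linearly separable data (hypothetical feature vectors).
X = [[2, 2], [3, 3], [2.5, 3], [-2, -2], [-3, -2.5], [-2, -3]]
y = [1, 1, 1, -1, -1, -1]

w, b = train_linear_svm(X, y)
print([predict(w, b, x) for x in X])
```

The `lam` term plays the role of the soft-margin trade-off: larger values favor a wider margin at the cost of tolerating more violations.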
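
For the KNN item above, the majority vote among the K nearest neighbors under Euclidean distance looks roughly like this. The two-dimensional feature vectors and the labels are hypothetical; real text features would first need to be extracted and scaled.

```python
import math
from collections import Counter

def knn_predict(train_X, train_y, query, k=3):
    """Label of `query` by majority vote among its k nearest training points."""
    # All the work happens at prediction time: KNN is a lazy learner.
    by_distance = sorted(
        (math.dist(x, query), label) for x, label in zip(train_X, train_y)
    )
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]

# Hypothetical two-feature representation of six labelled articles.
train_X = [[1, 1], [1, 2], [2, 1], [6, 6], [7, 6], [6, 7]]
train_y = ["fake", "fake", "fake", "real", "real", "real"]

print(knn_predict(train_X, train_y, [1.5, 1.5]))
print(knn_predict(train_X, train_y, [6.5, 6.5]))
```

Because every prediction scans the whole training set, the cost grows with the dataset size, which matches the remark above about expensive prediction.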
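
The gating mechanism described for LSTMs can be made concrete with a single scalar cell. In this sketch every weight is a plain number chosen by hand (an assumption for illustration; real LSTMs use learned weight matrices), and the forget and input biases are set so that the cell keeps its memory almost unchanged over many steps.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One time step of a scalar LSTM cell.

    p maps a gate name to (input weight, recurrent weight, bias).
    """
    def pre(gate):
        w, u, b = p[gate]
        return w * x + u * h_prev + b

    f = sigmoid(pre("forget"))        # how much old memory to keep
    i = sigmoid(pre("input"))         # how much new content to write
    o = sigmoid(pre("output"))        # how much memory to expose
    g = math.tanh(pre("candidate"))   # proposed new content
    c = f * c_prev + i * g            # updated cell state
    h = o * math.tanh(c)              # updated hidden state
    return h, c

# Hand-picked parameters: forget gate ~1, input gate ~0.
params = {
    "forget": (0.0, 0.5, 20.0),
    "input": (0.0, 0.5, -20.0),
    "output": (1.0, 0.5, 0.0),
    "candidate": (1.0, 0.5, 0.0),
}

h, c = 0.0, 0.8
for x in [0.3, -0.1, 0.7] * 10:  # 30 time steps of arbitrary input
    h, c = lstm_step(x, h, c, params)
print(c)  # stays very close to the initial 0.8
```

The additive update `c = f * c_prev + i * g` is what lets information, and gradients, pass through many steps without shrinking, in contrast to a plain RNN that repeatedly squashes its state.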

5. Naive Bayes: Naive Bayes is a probabilistic machine learning algorithm based on Bayes' theorem, particularly effective for classification tasks. Despite its simplicity, Naive Bayes often performs well in various domains. Bayes' Theorem, named after Reverend Thomas Bayes, is a fundamental concept in probability theory and statistics. It describes the probability of an event based on prior knowledge of conditions that might be related to the event. The theorem is expressed mathematically as \( P(A|B) = \frac{P(B|A) \cdot P(A)}{P(B)} \), where \( P(A|B) \) is the probability of event A occurring given that B has occurred, \( P(B|A) \) is the probability of B occurring given that A has occurred, \( P(A) \) is the prior probability of A, and \( P(B) \) is the marginal probability of B (the evidence). Bayes' Theorem is widely used in various fields, including machine learning, medical diagnosis, and information retrieval, to update probabilities based on new evidence or observations. The "naive" assumption is that features are conditionally independent, given the class, which simplifies computations. The algorithm calculates the probability of each class for a given set of features and assigns the class with the highest probability. Naive Bayes is computationally efficient and can work with a relatively small amount of training data. It's particularly suitable for high-dimensional datasets, such as text classification. The algorithm is widely used in spam filtering, sentiment analysis, and document categorization. While the independence assumption may not always hold in real-world data, Naive Bayes remains a powerful and interpretable choice for certain applications. Its simplicity and efficiency make it a popular choice for quick and reliable classification tasks.

6. Decision Tree: A Decision Tree is a versatile and interpretable machine learning algorithm used for both classification and regression tasks. It represents a tree-like structure where each internal node denotes a test on an attribute, each branch represents the outcome of the test, and each leaf node holds the prediction or the target value. Decision trees recursively partition the data based on the most significant attribute at each node, aiming to create homogeneous subsets. The splitting process continues until a stopping criterion is met, such as a predefined depth or a minimum number of samples per leaf. Decision trees are easily interpretable, allowing users to understand the decision-making process. They can handle both numerical and categorical data, are resistant to outliers, and require minimal data preprocessing. However, they can be prone to overfitting, which can be mitigated by using techniques like pruning. Popular ensemble methods built on decision trees include Random Forests and Gradient Boosted Trees, which enhance predictive performance by combining multiple trees.

7. Random Forest: Random Forest is an ensemble learning method that constructs multiple decision trees during training and outputs the mode of the classes (classification) or the mean prediction (regression) of the individual trees. The "random" aspect comes from training each tree on a random sample of the data and a random subset of features, and the final prediction is the result of aggregating predictions from multiple trees. This randomness helps improve the model's robustness and generalization.

8. XGBoost: XGBoost or eXtreme Gradient Boosting, is a powerful and efficient machine learning algorithm that belongs to the ensemble learning family. It is particularly effective for regression and classification tasks. XGBoost builds a series of decision trees and combines their predictions to improve accuracy and generalization. One notable feature is its regularization term, which helps prevent overfitting by penalizing complex models. It uses a gradient boosting framework, where each tree corrects the errors of the previous ones. XGBoost is known for its speed and scalability, making it a popular choice for large datasets and competitions like Kaggle. It incorporates a variety of regularization techniques, handles missing data gracefully, and provides a flexible interface for customization. XGBoost has consistently demonstrated state-of-the-art performance in machine learning competitions and real-world applications, earning its reputation as a go-to algorithm for many data scientists and practitioners.
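
For the Naive Bayes item above, the following sketch applies Bayes' theorem with the conditional-independence assumption to word counts. The four training headlines are invented for illustration, and the add-one (Laplace) smoothing and function names are choices made here, not details taken from this work.

```python
import math
from collections import Counter, defaultdict

def train_nb(docs, labels):
    """Collect per-class document counts, per-class word counts and the vocabulary."""
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    vocab = set()
    for doc, label in zip(docs, labels):
        words = doc.lower().split()
        word_counts[label].update(words)
        vocab.update(words)
    return class_counts, word_counts, vocab

def predict_nb(model, doc):
    """Return the class with the highest log posterior (up to a constant)."""
    class_counts, word_counts, vocab = model
    total_docs = sum(class_counts.values())
    scores = {}
    for label, n_docs in class_counts.items():
        score = math.log(n_docs / total_docs)            # log prior P(class)
        total_words = sum(word_counts[label].values())
        for word in doc.lower().split():
            if word in vocab:                            # skip unseen words
                count = word_counts[label][word]
                # log P(word | class) with add-one smoothing
                score += math.log((count + 1) / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)

# Invented training headlines.
docs = [
    "shocking miracle cure doctors hate",
    "you will not believe this shocking secret",
    "government publishes annual budget report",
    "central bank holds interest rates steady",
]
labels = ["fake", "fake", "real", "real"]

model = train_nb(docs, labels)
print(predict_nb(model, "shocking secret cure"))
print(predict_nb(model, "bank budget report"))
```

Working in log space turns the product of per-word probabilities into a sum, which avoids numerical underflow on long documents.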
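
For the Decision Tree item above, choosing "the most significant attribute" at a node is commonly done by minimizing an impurity measure. The sketch below uses Gini impurity, which is one common choice and an assumption here (entropy is another); the two features and their values are hypothetical.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 0 for a pure node, larger for more mixed nodes."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def best_split(X, y):
    """Return (feature index, threshold, weighted impurity) of the best split.

    Rows with value <= threshold go left, the rest go right.
    """
    best = None
    n = len(y)
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            left = [yi for row, yi in zip(X, y) if row[j] <= t]
            right = [yi for row, yi in zip(X, y) if row[j] > t]
            if not left or not right:
                continue  # not a real split
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or score < best[2]:
                best = (j, t, score)
    return best

# Hypothetical features: [exclamation marks in headline, cited sources].
X = [[9, 0], [7, 1], [8, 0], [1, 4], [0, 1], [2, 5]]
y = ["fake", "fake", "fake", "real", "real", "real"]

print(best_split(X, y))  # (0, 2, 0.0): feature 0 separates the classes
```

A full tree applies `best_split` recursively to the left and right subsets until a stopping criterion such as maximum depth is reached.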
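
For the Random Forest item above, the two ingredients are resampling and voting. The sketch below is deliberately simplified and rests on several assumptions: each "tree" is a one-split stump rather than a full tree, each stump sees a random feature subset chosen once per tree, and the data are invented.

```python
import random
from collections import Counter

def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def fit_stump(X, y, features):
    """One-split tree restricted to the given feature indices."""
    majority = Counter(y).most_common(1)[0][0]
    best = None  # (impurity, feature, threshold, left label, right label)
    for j in features:
        values = sorted({row[j] for row in X})
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2  # midpoint between neighbouring values
            left = [yi for row, yi in zip(X, y) if row[j] <= t]
            right = [yi for row, yi in zip(X, y) if row[j] > t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or score < best[0]:
                best = (score, j, t,
                        Counter(left).most_common(1)[0][0],
                        Counter(right).most_common(1)[0][0])
    if best is None:  # no split possible: always predict the majority class
        return lambda row: majority
    _, j, t, left_label, right_label = best
    return lambda row: left_label if row[j] <= t else right_label

def fit_forest(X, y, n_trees=25, n_features=1, seed=0):
    rng = random.Random(seed)
    n = len(X)
    trees = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]         # bootstrap sample
        feats = rng.sample(range(len(X[0])), n_features)   # random feature subset
        trees.append(fit_stump([X[i] for i in idx], [y[i] for i in idx], feats))
    return trees

def predict_forest(trees, row):
    """Majority vote over the individual trees."""
    return Counter(tree(row) for tree in trees).most_common(1)[0][0]

# Invented data: [exclamation marks in headline, cited sources].
X = [[9, 0], [7, 1], [8, 0], [1, 4], [0, 3], [2, 5]]
y = ["fake", "fake", "fake", "real", "real", "real"]

trees = fit_forest(X, y)
print(predict_forest(trees, [8, 1]), predict_forest(trees, [1, 4]))
```

Individual stumps can be wrong when their bootstrap sample is unrepresentative; the vote averages such errors out, which is the source of the robustness mentioned above.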
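
For the XGBoost item above, the regularization term and the "each tree corrects the previous ones" idea can be written compactly. The notation follows the usual presentation of the method and is introduced here for illustration: \( l \) is the loss, \( f_k \) the \( k \)-th tree, \( T \) its number of leaves, \( w_j \) the weight of leaf \( j \), \( \gamma \) and \( \lambda \) regularization strengths, and \( \eta \) the learning rate.

\[
\mathcal{L} = \sum_{i} l\left(y_i, \hat{y}_i\right) + \sum_{k} \Omega(f_k),
\qquad
\Omega(f) = \gamma T + \frac{1}{2} \lambda \sum_{j=1}^{T} w_j^{2}
\]

\[
\hat{y}_i^{(t)} = \hat{y}_i^{(t-1)} + \eta \, f_t(x_i),
\qquad
w_j^{*} = -\frac{G_j}{H_j + \lambda}
\]

Here \( G_j \) and \( H_j \) are the sums of the first and second derivatives of the loss over the training examples that fall in leaf \( j \). A larger \( \lambda \) shrinks the leaf weights toward zero and a larger \( \gamma \) discourages additional leaves, which is how complex models are penalized.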

In this project we use the BERT language model. BERT stands for Bidirectional Encoder Representations from Transformers. It is a state-of-the-art natural language processing (NLP) model developed by Google. It is a deep learning model based on the Transformer architecture, a neural network architecture that has proven highly effective for various NLP tasks. BERT's key innovation is its ability to understand the context of words in a sentence by considering the surrounding words from both the left and right sides. Unlike traditional language models that read text sequentially, either from left to right or right to left, BERT processes the entire input text bidirectionally. This bidirectional context understanding allows BERT to capture complex linguistic patterns and relationships in the data.
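
The bidirectional context described above comes from the self-attention operation inside each Transformer layer. The following is a bare-bones sketch of scaled dot-product self-attention, with strong simplifications that are assumptions of this example: the learned query, key, and value projections are replaced by the identity, there is a single attention head, and the three token vectors are made up.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with Q = K = V = input vectors."""
    d = len(vectors[0])
    outputs, weights = [], []
    for q in vectors:
        # Score this token against every token, to its left and to its right.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d)
                  for k in vectors]
        w = softmax(scores)
        weights.append(w)
        # New representation: attention-weighted mix of all token vectors.
        outputs.append([sum(wj * v[i] for wj, v in zip(w, vectors))
                        for i in range(d)])
    return outputs, weights

# Made-up 2-dimensional embeddings for a three-token input.
tokens = ["bank", "raises", "rates"]
vectors = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]

outputs, weights = self_attention(vectors)
for tok, w in zip(tokens, weights):
    print(tok, [round(x, 3) for x in w])
```

The weights for the middle token are non-zero for both its left and right neighbors, which is the sense in which the model reads the input in both directions at once.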

BERT's effectiveness in fake news detection lies in its intricate understanding of contextual nuances within text. By pre-processing and tokenizing news articles, BERT transforms words into meaningful numerical representations. The model's architecture, comprising multiple transformer layers and attention mechanisms, allows it to capture intricate relationships between words in a sentence. During training, BERT learns to distinguish genuine news from false narratives by considering the bidirectional context of words, understanding how each word relates to every other word in the text. This comprehensive comprehension of language context, coupled with fine-tuning on a dataset of labeled real and fake news articles, enables BERT to make nuanced predictions. When deployed, it analyzes new articles, discerning subtle linguistic cues and inconsistencies that often characterize misinformation. This deep understanding of language nuances, acquired through extensive pre-training and task-specific fine-tuning, empowers BERT to be a potent tool in the battle against fake news, enabling accurate identification and categorization of misleading information.

IV. IMPLEMENTATION

Implementing a robust fake news detection system using the BERT (Bidirectional Encoder Representations from Transformers) model involves a series of intricately designed steps. Firstly, the textual data, often sourced from news articles or social media posts, is meticulously preprocessed. This preprocessing includes tasks such as lowercasing, removing special characters, and tokenization. Labels are assigned to the data, differentiating between real news, typically labeled as 1, and fake news, denoted as 0. The BERT model operates on tokenized inputs; hence, the text data is transformed into numerical tokens using the BERT tokenizer. Subsequently, the dataset is divided into training and testing sets, allowing the model to learn patterns from one subset and validate its performance on another. A pre-trained BERT model for sequence classification is then loaded, often from popular libraries like Hugging Face's Transformers. While BERT is a pre-trained model, fine-tuning is essential to adapt it specifically to the nuances of fake news detection. During the training phase, the model's parameters are optimized iteratively using techniques like backpropagation and gradient descent. The choice of optimizer, learning rate, and the number of training epochs significantly influences the model's effectiveness. Moreover, the model's performance is regularly monitored on a validation dataset, ensuring it doesn’t overfit or underfit the data. Once trained, the model undergoes evaluation on the testing dataset. Metrics like accuracy, precision, recall, and F1-score are employed to comprehensively assess the model's ability to discriminate between real and fake news. Additionally, techniques like cross-validation might be applied for a more robust evaluation. 
The success of this implementation lies not only in the power of the BERT model but also in the meticulousness of data preprocessing, the appropriateness of model fine-tuning, and the thoroughness of evaluation metrics chosen. This methodology ensures a high level of accuracy and reliability in the identification of misinformation in the vast landscape of online content.
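
The first steps of the pipeline described above (cleaning, labeling, and splitting the data) can be sketched with the Python standard library. This is an illustration under stated assumptions: the example texts are invented, the whitespace split stands in for the BERT tokenizer, which actually produces subword tokens, and the function names and the 80/20 split are choices made here. Loading and fine-tuning the pre-trained model itself is not shown.

```python
import random
import re

def preprocess(text):
    """Lowercase, replace special characters with spaces, split into tokens."""
    text = text.lower()
    text = re.sub(r"[^a-z0-9\s]", " ", text)
    return text.split()

def train_test_split(examples, test_fraction=0.2, seed=42):
    """Shuffle a copy of the examples and hold out a fraction for testing."""
    rng = random.Random(seed)
    shuffled = examples[:]
    rng.shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]

# Invented examples; labels follow the convention above: 1 = real, 0 = fake.
raw = [
    ("BREAKING!!! Aliens Land in Paris...", 0),
    ("Central bank holds interest rates steady.", 1),
    ("Doctors HATE this one weird trick!", 0),
    ("Parliament passes the annual budget.", 1),
    ("City council approves new bus routes.", 1),
]

dataset = [(preprocess(text), label) for text, label in raw]
train, test = train_test_split(dataset)
print(dataset[0][0])  # ['breaking', 'aliens', 'land', 'in', 'paris']
print(len(train), len(test))
```

Fixing the random seed keeps the split reproducible, so that later evaluation results can be compared across runs.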

VI. FUTURE ENHANCEMENT

The future scope of fake news detection is promising, and further advancements in machine learning, especially deep learning models, will enhance the accuracy of fake news detection algorithms. Possible enhancements include:

- Integrating text, image, and video analysis to identify inconsistencies across different media formats, making it harder for misinformation to go undetected.
- Developing real-time monitoring systems that can flag potential fake news as it emerges, allowing for quicker responses.
- Implementing blockchain for source verification and to ensure the integrity of news articles, making it harder to manipulate information.
- Analyzing online behavior and social media interactions to identify suspicious patterns and sources of fake news.
- Expanding international cooperation among governments, tech companies, and fact-checking organizations to combat cross-border misinformation.
- Empowering users with tools to verify information and encouraging responsible sharing practices through browser extensions or apps.
- Developing AI models that adhere to ethical guidelines and principles, such as minimizing bias and respecting privacy, in the process of fake news detection.

These developments represent a holistic approach to tackling the ever-evolving challenge of fake news in the digital age.

Conclusion

Detecting fake news is a complex and evolving challenge that requires a multi-faceted approach. The proposed model is just one of many solutions that will help, because there is no one-size-fits-all solution for detecting fake news. Fake news comes in various forms, and it often evolves to bypass detection algorithms. A combination of approaches is necessary to combat it effectively. Machine learning and artificial intelligence play a crucial role in fake news detection. Natural language processing (NLP) models can be trained to identify patterns in language and content that are indicative of misinformation.

Fact-checking organizations and initiatives are essential for verifying the accuracy of news stories. Their work can serve as a valuable resource for confirming or debunking claims. Determining the credibility of the source is important. Reliable news outlets are generally more trustworthy than obscure websites or social media accounts with no established track record. Comparing information from multiple sources can help identify inconsistencies and suspicious claims. Reliable news is typically corroborated by multiple reputable sources. Promoting media literacy and critical thinking skills among the public is essential. Teaching people how to evaluate sources, check for biases, and question information is a fundamental part of fake news prevention.

As fake news detection techniques improve, so do the methods used by purveyors of misinformation. It is a constant arms race, with both sides continually adapting. Encouraging users to report potentially fake news can be an effective way to identify and address misinformation. Many social media platforms have implemented reporting systems. Organizations and platforms involved in fake news detection should be transparent about their methods and criteria for identifying fake news. This helps build trust. Striking a balance between combating fake news and preserving freedom of speech is a challenge. Legislation and ethical guidelines should be considered to prevent overreach. While AI and algorithms can be powerful tools, human expertise is still crucial for making nuanced judgments, especially in cases where context and intent matter. Collaborative efforts among tech companies, fact-checkers, governments, and the public are important for creating a coordinated response to fake news.

In conclusion, detecting fake news is an ongoing battle that requires a combination of technology, human judgment, public education, and collaboration among various stakeholders. There is no one-size-fits-all solution, but by adopting a multi-pronged approach, we can better mitigate the impact of fake news on society.

References

[1] Monther Aldwairi, Ali Alwahedi, "Detecting Fake News in Social Media Networks," The 9th International Conference on Emerging Ubiquitous Systems and Pervasive Networks (EUSPN), 2018.

[2] Iftikhar Ahmad, Muhammad Yousaf, Suhail Yousaf, and Muhammad Ovais Ahmad, "Fake News Detection Using Machine Learning Ensemble Methods," Hindawi, 2020.

[3] Alim Al Ayub Ahmed, Ayman Aljarbouh, Praveen Kumar Donepudi, Myung Suh Choi, "Detecting Fake News using Machine Learning: A Systematic Literature Review."

[4] Z Khanam, B N Alwasel, H Sirafi, and M Rashid, "Fake News Detection Using Machine Learning Approaches," IOP Conf. Series: Materials Science and Engineering, 2021.

[5] Shubhangi Rastogi, Divya Bansal, "A review on fake news detection 3T’s: typology, time of detection, taxonomies," International Journal of Information Security, 2023.

Copyright

Copyright © 2024 Avishek Agarwala, Yousuf Mahmud Fahim, Mr. Nitin Jain. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET59773

Publish Date : 2024-04-03

ISSN : 2321-9653

Publisher Name : IJRASET
