Ijraset Journal For Research in Applied Science and Engineering Technology


Lane Line Detection System for Vehicle Using Python

Authors: Soni Yadav, Kratika Tripathi, Ankan Singh, Vikas Porwal

DOI Link: https://doi.org/10.22214/ijraset.2024.63040


Abstract

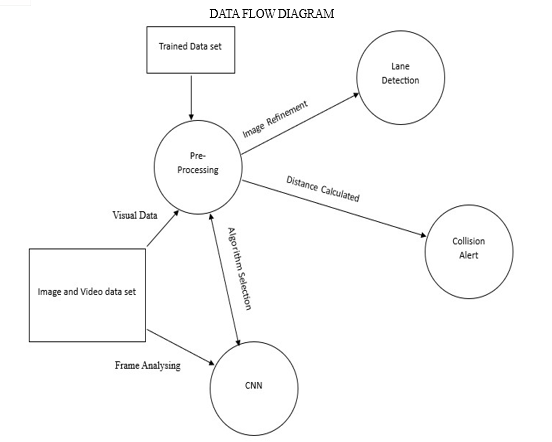

This research presents a real-time collision detection and lane assistance system implemented using Python and OpenCV. The system leverages computer vision techniques to detect vehicles and lane lines, calculate distances to detected objects, and issue timely alerts to prevent collisions. The implementation aims to enhance driver safety by providing real-time feedback about the vehicle's surroundings. This paper details the system's design, methodology, and performance evaluation.


I. INTRODUCTION

In recent years, researchers have explored ways to make cars safer by preventing collisions, and two main types of collision warning systems have emerged: time-based and distance-based. The first, the Safety Time Algorithm, estimates how much time remains before a potential collision and compares it with a safety threshold; its key quantity is the Time to Collision (TTC). The second, the Safety Distance Algorithm, determines the minimum distance that must be kept between the car and an obstacle to avoid a collision under the current conditions.

To implement such a system, we developed a program in Python, a programming language known for its simplicity and flexibility. Python lets programmers express complex ideas clearly in relatively little code, supports several programming styles, and comes with a large library ecosystem, which makes it suitable for a wide range of applications. In our case, it allows potential collisions in the car's lane to be detected efficiently.

The core of our approach applies gradient and HLS (hue, lightness, saturation) thresholding to produce a binary image in which the lane lines stand out. We then use a sliding window search to locate the lane-line pixels and fit the lanes, and the detected lane region is colored for visualization.

Traditional computer vision-based lane detection methods rely primarily on image processing algorithms to extract lane line features. These methods reduce the number of image channels through grayscale conversion, apply edge detection algorithms such as Canny or Sobel, extract the relevant features, and fit the lane lines with models such as cubic polynomials, spline curves, or arc curves. The input to our system consists of video frames recorded by the vehicle's camera, which are processed with the Hough Line Transform for lane mark detection.

A. Background and Motivation

With the increasing number of vehicles on roads, traffic accidents have become a significant public safety concern. Technologies like collision detection and lane assistance systems can mitigate these risks by enhancing driver awareness and reaction times. These systems utilize computer vision to monitor the vehicle's environment and provide real-time alerts to the driver.

B. Objectives of the Collision Alert System

The primary objectives of this research are:

- To design and implement an accurate object detection system using Python and OpenCV.

- To develop reliable techniques for estimating the distance of detected objects.

- To create an effective alert mechanism that warns users of potential collisions in time.
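The objectives above combine distance estimation with an alert. One common way to sketch this, assuming a calibrated camera with a known focal length in pixels and an assumed real vehicle width, is the pinhole (similar triangles) relation Z = f * W / w, followed by the time-based (TTC) and distance-based (safety distance) checks introduced in Section I. All function names, the 2.5 s TTC threshold, the 1.0 s reaction time and the 6.0 m/s² deceleration are illustrative assumptions, not values from this paper.

```python
def estimate_distance(focal_px, real_width_m, width_px):
    """Pinhole-camera (similar triangles) estimate: Z = f * W / w."""
    return focal_px * real_width_m / width_px


def time_to_collision(distance_m, closing_speed_mps):
    """TTC = distance / closing speed; infinite when the gap is not shrinking."""
    if closing_speed_mps <= 0:
        return float("inf")
    return distance_m / closing_speed_mps


def safety_distance(speed_mps, reaction_s=1.0, decel_mps2=6.0):
    """Reaction distance plus braking distance: v * t_r + v^2 / (2 * a)."""
    return speed_mps * reaction_s + speed_mps ** 2 / (2 * decel_mps2)


def should_alert(distance_m, own_speed_mps, closing_speed_mps, ttc_threshold_s=2.5):
    """Alert if the time-based (TTC) or the distance-based check fails."""
    ttc = time_to_collision(distance_m, closing_speed_mps)
    return ttc < ttc_threshold_s or distance_m < safety_distance(own_speed_mps)
```

For example, with an assumed focal length of 800 px, a 1.8 m wide car that appears 72 px wide is estimated at 800 × 1.8 / 72 = 20 m; closing at 10 m/s gives a TTC of 2 s, which is below the assumed 2.5 s threshold and would trigger the alert. Because the real width W is assumed, differences in vehicle size translate directly into distance error.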

C. Scope of the Report

This report focuses on the development of a collision alert system using image processing and computer vision techniques. It includes theoretical foundations, system design, implementation details, and performance evaluation. The scope does not extend to hardware design or the integration of additional sensors beyond the use of a camera.

II. RELATED WORK

A. Image Processing

An important part of lane detection is image processing, which is needed to achieve an accurate result. The first step is grayscale processing, which converts color images into grayscale ones. Grayscale processing prepares the image for the next step, binarization, in which the gray image is turned into a black-and-white image. Many algorithms have been proposed for binarization. In 2017, a new algorithm [9] improved on the traditional approach by using an adaptive threshold instead of a fixed one, which improves binarization performance for old images.

B. Lane detection

The first step of the lane detection stage is to identify the region of the image in which objects are detected, also known as the region of interest (ROI). There are two types of ROI: static and dynamic. In [10], a night image is divided into three static ROIs: a near vision field, a far vision field, and the sky field. The sky field accounts for 5/12 of the image, and the far vision field accounts for half of the near vision field. Most algorithms apply edge detection to the ROI-processed image to extract the essential lane features; Canny edge detection (CED) is the most widely used technique. Once the pixels with large brightness variations have been marked, the Hough transform is used to detect the lane lines. [10] employed the standard Hough transform in its algorithm: for a straight line, the method uses the starting point and the end point to determine the lane line; for a curve, it calculates the bending direction of the curve and determines its shape using a least-squares fit. Wei et al. [14] improved the classic Hough transform by using vanishing points and some pixels around them.

The Hough Transform locates lines by letting every edge point vote for all the lines that could pass through it. A line in the image corresponds to a single point in parameter space, and each edge point in the image corresponds to a line (in the Cartesian slope-intercept parameterization) or a sinusoid (in the polar parameterization) in parameter space. If the lines or sinusoids of several edge points pass through a common point in parameter space, those edge points lie on the same line in the image.
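To make the voting idea concrete, here is a minimal polar Hough transform written with NumPy only. It is a teaching sketch under stated assumptions (one-pixel rho bins, 1° theta bins, no non-maximum suppression), and the function name `hough_lines` is hypothetical; in practice OpenCV's `cv2.HoughLines` or `cv2.HoughLinesP` would be used. Each edge pixel adds one vote along its sinusoid rho = x·cos(theta) + y·sin(theta), and the accumulator cell with the most votes corresponds to the line shared by the most edge points.

```python
import numpy as np


def hough_lines(edge_mask, n_theta=180, top_k=1):
    """Minimal polar Hough transform on a binary edge mask.

    Returns up to top_k (rho, theta_degrees, votes) tuples, strongest first.
    """
    h, w = edge_mask.shape
    diag = int(np.ceil(np.hypot(h, w)))
    thetas = np.deg2rad(np.arange(n_theta) * 180.0 / n_theta)  # [0, pi)
    rhos = np.arange(-diag, diag + 1)                          # 1-pixel bins
    acc = np.zeros((len(rhos), n_theta), dtype=np.int64)

    ys, xs = np.nonzero(edge_mask)
    cos_t, sin_t = np.cos(thetas), np.sin(thetas)
    cols = np.arange(n_theta)
    for x, y in zip(xs, ys):
        # One sinusoid per edge point, quantized to the nearest rho bin.
        r = np.round(x * cos_t + y * sin_t).astype(int) + diag
        acc[r, cols] += 1

    # Highest-voted cells (no suppression of neighboring peaks).
    flat = np.argsort(acc, axis=None)[::-1][:top_k]
    r_idx, t_idx = np.unravel_index(flat, acc.shape)
    return [(int(rhos[i]), float(np.rad2deg(thetas[j])), int(acc[i, j]))
            for i, j in zip(r_idx, t_idx)]
```

For a vertical run of edge pixels at x = 10, every point votes for (rho = 10, theta = 0°), so that cell collects one vote per pixel and is returned as the strongest line.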

C. Lane Departure Recognition

When the distance between the car and the left lane line is much smaller than the distance between the car and the right lane line, the car is drifting to the left; otherwise, it is drifting to the right. The deviation distance of the car can be calculated from the ratio of the deviation distance to the lane width in the image. When the deviation exceeds a selected threshold, the lane departure warning system (LDWS) activates and warns the driver to steer back to a safe position within the lane.
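The departure logic above can be sketched in a few lines. This assumes the camera is mounted on the vehicle's centerline, so the image's center column stands in for the vehicle position, and that the pixel columns of both lane lines at the bottom image row are already known. The 3.7 m lane width, the 0.15 warning ratio and the function name `lane_departure` are assumptions for illustration. The deviation is expressed as a ratio of the lane width in the image, as the text describes, and converted to meters only through the assumed lane width.

```python
def lane_departure(left_x, right_x, image_width, lane_width_m=3.7, warn_ratio=0.15):
    """Return (direction, offset_m, warn) for a vehicle between two lane lines.

    left_x, right_x: pixel columns of the lane lines at the bottom image row.
    Assumes the camera sits on the vehicle centerline (lane_width_m, warn_ratio
    are assumed values).
    """
    lane_px = right_x - left_x
    vehicle_x = image_width / 2.0
    gap_left = vehicle_x - left_x
    gap_right = right_x - vehicle_x

    # Signed deviation from the lane center; positive means right of center.
    deviation_px = vehicle_x - (left_x + right_x) / 2.0
    ratio = deviation_px / lane_px
    offset_m = ratio * lane_width_m

    # The car drifts toward whichever line is closer.
    if gap_left < gap_right:
        direction = "left"
    elif gap_right < gap_left:
        direction = "right"
    else:
        direction = "centered"
    return direction, offset_m, abs(ratio) > warn_ratio
```

For instance, with lane lines at columns 100 and 900 in a 1280-pixel-wide frame, the vehicle sits 140 px right of the lane center, a ratio of 0.175, so the sketch reports a rightward drift and raises a warning.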

III. LITERATURE SURVEY

1. Data Collection and Processing Methods for the Evaluation of Vehicle Road Departure Detection Systems

In recent years, road departure collision avoidance/mitigation systems, referred to here as road departure detection systems (RDDS), have been developed and installed in some production vehicles. To support standardized and objective performance assessments of RDDS, this paper describes the development of data acquisition and data post-processing systems for testing them. Seven parameters are used to describe road departure test scenarios, and the overall structure and specific components of the data acquisition and data post-processing systems for evaluating vehicle RDDS are presented. According to the results, the sensing system and data post-processing system captured all required signals and accurately reproduced the test vehicle's motion profile. Test-track testing under various scenarios demonstrates the effectiveness of the proposed data collection system.

2. A Lane Detection Method for Lane Departure Warning System

The vision-based Lane Departure Warning System (LDWS) is an effective way to prevent single-vehicle road departure accidents. In practice, complex noise makes it very difficult to identify a lane quickly and accurately, so the primary task is to define a set of image processing methods that give fast and accurate results under non-ideal conditions. This paper proposes a lane detection method for the LDWS. Experimental comparisons select the Canny algorithm for edge detection and the Hough transform as an efficient method for straight-line detection. To meet real-time requirements, a Region of Interest (ROI) is defined to reduce noise and speed up processing. Experimental results show that this lane detection method can efficiently and accurately extract lane information from captured road images.

3. Real-time illumination invariant lane detection for lane departure warning system

Lane detection is an important factor in improving driving safety. This paper proposes a real-time, illumination-invariant lane detection method for the Lane Departure Warning System. It has three primary components. First, it detects vanishing points based on a voting map and defines an adaptive region of interest (ROI) to reduce computational complexity. Second, it exploits the distinctive colors of lane markings to achieve illumination-invariant detection of lane marker candidates. Finally, a clustering technique is used to find the main lane from the lane marker candidates. When a lane departure occurs, the system sends a warning signal to the driver. Experimental results show satisfactory performance, with an average detection rate of 93% under a variety of lighting conditions. In addition, the overall process requires only 33 ms per frame.

4. A Robust Lane Detection Method Based on Vanishing Point Estimation Using the Relevance of Line Segments

This article proposes a robust lane detection method based on vanishing point estimation. Estimating the vanishing point helps identify the lane because parallel lines in the 2D projected image converge at the vanishing point. However, for images with complex backgrounds, it is not easy to determine the vanishing point correctly. The authors therefore propose a robust vanishing point estimation method that uses a probabilistic voting procedure based on the intersection points of line segments extracted from the input image. The voting function is defined by the strength of each line segment, which represents the relevance of the extracted segments. Candidate lane segments are then selected by taking geometric constraints into account. Finally, the host lane is detected using a proposed score function designed to remove outliers among the candidate lines. In addition, the detected host lanes are refined using inter-frame similarity, which accounts for the position consistency of the detected host lanes and of the estimated vanishing points in consecutive frames.

In addition, we suggest using a look-up table to reduce the computational cost of the vanishing point estimation process. Experimental results show that the proposed method efficiently estimates the vanishing point and recognizes lanes in a variety of environments.
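A heavily simplified version of the intersection-voting idea can be sketched as below. It is not the authors' probabilistic voting function. As an assumption, each pair of segments votes for the intersection of their extended lines with a weight equal to the product of their lengths (a stand-in for segment "strength"), votes are binned into square cells of a hypothetical `cell` size, and the weighted mean of the winning cell is returned. The segment format `(x1, y1, x2, y2)` matches what `cv2.HoughLinesP` produces.

```python
import itertools
import math


def line_intersection(s1, s2):
    """Intersection of the infinite lines through two segments, or None if parallel."""
    x1, y1, x2, y2 = s1
    x3, y3, x4, y4 = s2
    d = (x1 - x2) * (y3 - y4) - (y1 - y2) * (x3 - x4)
    if abs(d) < 1e-9:
        return None
    a = x1 * y2 - y1 * x2
    b = x3 * y4 - y3 * x4
    return ((a * (x3 - x4) - (x1 - x2) * b) / d,
            (a * (y3 - y4) - (y1 - y2) * b) / d)


def estimate_vanishing_point(segments, width, height, cell=10):
    """Length-weighted grid vote over pairwise intersections of (x1, y1, x2, y2) segments."""
    weight_sum, x_sum, y_sum = {}, {}, {}
    for s1, s2 in itertools.combinations(segments, 2):
        p = line_intersection(s1, s2)
        if p is None or not (0 <= p[0] < width and 0 <= p[1] < height):
            continue  # parallel pair, or intersection outside the image
        # Longer segments count as stronger evidence (assumed weighting).
        w = (math.hypot(s1[2] - s1[0], s1[3] - s1[1])
             * math.hypot(s2[2] - s2[0], s2[3] - s2[1]))
        key = (int(p[0] // cell), int(p[1] // cell))
        weight_sum[key] = weight_sum.get(key, 0.0) + w
        x_sum[key] = x_sum.get(key, 0.0) + w * p[0]
        y_sum[key] = y_sum.get(key, 0.0) + w * p[1]
    if not weight_sum:
        return None
    best = max(weight_sum, key=weight_sum.get)
    # Weighted mean of the intersections that fell into the winning cell.
    return x_sum[best] / weight_sum[best], y_sum[best] / weight_sum[best]
```

An intersection that lies close to a cell border can split its votes between neighboring cells; a fuller implementation would smooth the vote map or use overlapping cells.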

5. A Framework for Camera-Based Real-Time Lane and Road Surface Marking Detection and Recognition

This paper uses a vision-based, integrated framework that combines spatio-temporal incremental clustering with curve fitting and a Grassmann manifold learning technique for lane detection. Its only restriction is that it can recognize only the types of road surface markings present in the training data.
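The curve-fitting step mentioned here, together with the sliding window search introduced in Section I, can be sketched with NumPy. This follows a common histogram-plus-sliding-window formulation and is an assumption, not the method of the cited paper or necessarily the authors' own code: `sliding_window_fit`, the nine windows, the 50-pixel margin and the 50-pixel re-centering threshold are illustrative choices. The input is a binary lane mask, ideally a top-down (bird's-eye) view of the road, and each lane is fitted by least squares as x = a·y² + b·y + c, since lane lines are closer to functions of y than of x.

```python
import numpy as np


def sliding_window_fit(binary, n_windows=9, margin=50, min_pix=50):
    """Find left/right lane pixels with sliding windows; fit x = a*y**2 + b*y + c."""
    h, w = binary.shape
    # Starting columns: histogram peaks of the bottom half, one per image half.
    histogram = binary[h // 2:, :].sum(axis=0)
    mid = w // 2
    bases = [int(np.argmax(histogram[:mid])), int(np.argmax(histogram[mid:])) + mid]

    ys, xs = np.nonzero(binary)
    win_h = h // n_windows
    fits = []
    for x_current in bases:
        found = []
        for win in range(n_windows):
            # Windows are stacked from the bottom of the image upward.
            y_low, y_high = h - (win + 1) * win_h, h - win * win_h
            inside = ((ys >= y_low) & (ys < y_high) &
                      (xs >= x_current - margin) & (xs < x_current + margin))
            idx = np.flatnonzero(inside)
            found.append(idx)
            # Re-center the next window on the mean x of the pixels found here.
            if len(idx) > min_pix:
                x_current = int(np.mean(xs[idx]))
        idx = np.concatenate(found)
        # Second-order least-squares fit of x as a function of y.
        fits.append(np.polyfit(ys[idx], xs[idx], 2) if len(idx) >= 3 else None)
    return fits  # [left_coefficients, right_coefficients]
```

Evaluating each fitted polynomial with `np.polyval` over all image rows gives the lane boundaries that can then be filled to color the lane region.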

6. New Lane Detection and tracking strategy based on vehicle forward monocular camera

This paper presents a new strategy for lane detection and tracking that is suitable as a functional prerequisite for driver assistance system (DAS) features such as lane departure warning. The authors note that the visibility of lane markings can be compromised by several factors, such as the reflectivity of the lane surface, camera glare, shadows, and deteriorated paint.

7. A Portable Vision-Based Real-Time Lane Departure Warning System: Day and Night

This paper proposes an embedded, real-time Lane Departure Warning System based on Advanced RISC Machines (ARM). Its limitation is that the system performs poorly when illumination is insufficient.

8. Automatic Detection and Classification of Road Lane Markings Using Onboard Vehicular Cameras

This is a new approach to road lane marking classification that uses an onboard camera and a Bayesian classifier; however, it is limited in rapidly detecting transitions and in removing isolated misdetections.

9. Lane Departure Identification for Advanced Driver Assistance

This approach reduces the computational time required for the lane departure estimation and reduces the false warnings but does not estimate the real-world coordinates of a vehicle with respect to both lane boundaries.

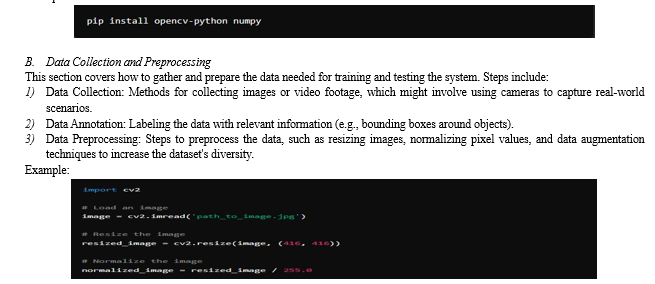

IV. SETTING UP THE ENVIRONMENT FOR CODE

- Installing Python: Instructions for installing the correct version of Python.

- Installing OpenCV: Steps to install OpenCV, a crucial library for image processing and computer vision tasks.

- Additional Libraries: Installation of other necessary libraries and dependencies, such as NumPy, SciPy, and machine learning frameworks (e.g., TensorFlow or PyTorch if deep learning models are used).
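Because the installation steps are described only in outline, a small check like the following can confirm that the listed packages are importable before running the system. It is a sketch using only the standard library; the minimum Python version (3.8) is an assumed placeholder rather than a requirement stated in this paper, and `check_environment` is a hypothetical name. Note that OpenCV is installed from PyPI as `opencv-python` but imported as `cv2`.

```python
import importlib.util
import sys

# Import name -> PyPI package name for the libraries listed above.
REQUIRED = {"cv2": "opencv-python", "numpy": "numpy", "scipy": "scipy"}


def check_environment(required=REQUIRED, min_python=(3, 8)):
    """Return (python_ok, missing_pip_names); min_python is an assumed placeholder."""
    python_ok = sys.version_info[:2] >= min_python
    # find_spec returns None when a top-level module cannot be found.
    missing = [pip_name for module, pip_name in required.items()
               if importlib.util.find_spec(module) is None]
    return python_ok, missing


if __name__ == "__main__":
    ok, missing = check_environment()
    print("Python version OK:", ok)
    if missing:
        print("Install with: pip install " + " ".join(missing))
```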

Example

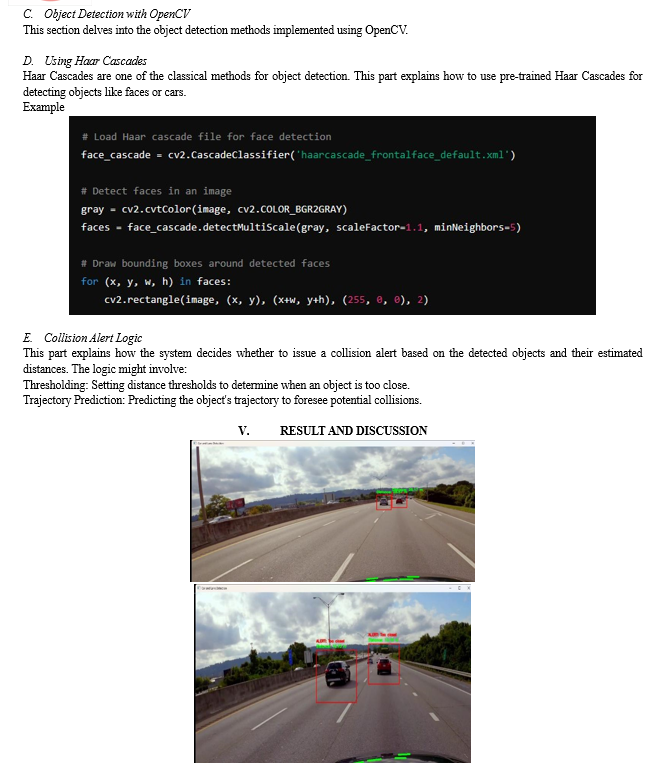

A. Performance Evaluation

In this section, the performance of the collision detection and lane assistance system is evaluated. The system was tested on various video samples to assess its accuracy and real-time processing capabilities. The key findings include:

Accuracy: The car detection and distance estimation functions performed effectively across different lighting conditions and traffic scenarios.

Real-Time Processing: The system was able to process frames in real-time, providing immediate feedback on detected cars and lane lines.
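The real-time claim can be quantified by timing the per-frame processing function. The harness below is a sketch that assumes frames are already decoded in memory (so camera or file I/O is excluded from the measurement), with `measure_throughput` as a hypothetical name. For reference, keeping up with a 30 fps camera leaves a budget of about 1000 / 30 ≈ 33 ms per frame.

```python
import time


def measure_throughput(process_frame, frames, warmup=3):
    """Return (mean milliseconds per frame, frames per second) for process_frame."""
    frames = list(frames)
    for f in frames[:warmup]:
        process_frame(f)  # warm-up calls (caches, lazy initialization) are not timed
    timed = frames[warmup:]
    if not timed:
        raise ValueError("need more frames than warm-up runs")
    start = time.perf_counter()
    for f in timed:
        process_frame(f)
    elapsed = time.perf_counter() - start
    ms_per_frame = 1000.0 * elapsed / len(timed)
    fps = len(timed) / elapsed if elapsed > 0 else float("inf")
    return ms_per_frame, fps
```

Reporting the measured milliseconds per frame alongside the hardware used would make the real-time result reproducible.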

B. Limitations

This subsection discusses the limitations identified during testing:

Lighting Conditions: Poor lighting can affect the accuracy of car detection and lane identification.

Occlusions: Objects blocking the camera's view can hinder the system's ability to detect cars and lanes accurately.

Vehicle Size Variation: Differences in vehicle sizes can impact the precision of distance estimations.

Computational Load: High computational requirements can slow down processing on less powerful hardware, affecting real-time performance.

C. Future Work

Recommendations for future improvements and research directions are provided here:

Advanced Detection Models: Integrating more robust object detection models like YOLO (You Only Look Once) or SSD (Single Shot MultiBox Detector) to improve accuracy.

Sensor Fusion: Combining data from multiple sensors (e.g., LiDAR, radar) to enhance detection reliability and accuracy.

Optimization for Embedded Platforms: Optimizing the system for deployment on embedded platforms to improve performance in real-world applications.

Conclusion

This section summarizes the research and highlights its main achievements and potential areas for further development.

Feasibility: The research demonstrates the feasibility of using Python and OpenCV to develop a real-time collision detection and lane assistance system.

Functionality: The implemented system can detect vehicles, estimate distances, and identify lane lines, issuing timely alerts to enhance driver safety.

Future Enhancements: There are several areas for potential improvement, including the integration of advanced detection models, sensor fusion, and system optimization for embedded platforms.

In conclusion, the study provides a solid foundation for further development of collision detection and lane assistance systems, with the potential to improve road safety and driver assistance technologies.

References

[1] F. Mariut, C. Fosalau and D. Petrisor, "Lane Mark Detection Using Hough Transform", IEEE International Conference and Exposition on Electrical and Power Engineering, pp. 871-875, 2012.

[2] S. Srivastava, R. Singal and M. Lumb, "Efficient Lane Detection Algorithm using Different Filtering Techniques", International Journal of Computer Applications, vol. 88, no. 3, pp. 975-8887, 2014.

[3] A. Borkar, M. Hayes, M. T. Smith and S. Pankanti, "A Layered Approach to Robust Lane Detection at Night", IEEE International Conference and Exposition on Electrical and Power Engineering, Iasi, Romania, pp. 735-739, 2011.

[4] K. Ghazali, R. Xian and J. Ma, "Road Lane Detection Using H-Maxima and Improved Hough Transform", Fourth International Conference on Computational Intelligence, Modelling and Simulation, pp. 2166-6531, 2011.

[5] M. Aly, "Real time Detection of Lane Markers in Urban Streets", IEEE Intelligent Vehicles Symposium, pp. 7-12, 2008.

[6] J. C. McCall and M. M. Trivedi, "Video-based Lane Estimation and Tracking for Driver Assistance: Survey, System, and Evaluation", IEEE Transactions on Intelligent Transportation Systems, vol. 7, pp. 20-37, 2006.

[7] Y. Wang, E. K. Teoh and D. Shen, "Lane Detection and Tracking Using B-Snake", Image and Vision Computing, vol. 22, pp. 269-280, 2004.

[8] A. Broggi and S. Berte, "Vision-based Road Detection in Automotive Systems: a Real-time Expectation-driven Approach", Journal of Artificial Intelligence Research, vol. 3, pp. 325-348, 1995.

[9] M. Bertozzi and A. Broggi, "GOLD: A Parallel Real-time Stereo Vision System for Generic Obstacle and Lane Detection", IEEE Transactions on Image Processing, pp. 62-81, 1998.

[10] J. Kichun, L. Minchul and S. Myoungho, "Road Slope Aided Vehicle Position Estimation System Based on Sensor Fusion of GPS and Automotive Onboard Sensors", IEEE Transactions on Intelligent Transportation Systems, vol. 17, pp. 250-263, 2019.

[11] L. Xu, S. Hu and Q. Luo, "A New Lane Departure Warning Algorithm Considering the Driver's Behavior Characteristics", Mathematical Problems in Engineering, 2018, 2015.

[12] S. Yi, Y. Chen and C. Chang, "A Lane Detection Approach Based on Intelligent Vision", Computers & Electrical Engineering, vol. 42, pp. 23-29, 2015.

[13] H. G. Jung, Y. H. Lee and H. J. Kang, "Sensor Fusion-Based Lane Detection for LKS ACC System", International Journal of Automotive Technology, vol. 10, no. 2, pp. 219-228, 2018.

Copyright

Copyright © 2024 Soni Yadav, Kratika Tripathi, Ankan Singh, Vikas Porwal. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET63040

Publish Date : 2024-06-01

ISSN : 2321-9653

Publisher Name : IJRASET
