IJRASET Journal for Research in Applied Science and Engineering Technology


Sign Language Detection Using Deep Learning

Authors: Mrs. N. Kirthiga, Miriyala Vamsi Krishna, Venkata Naveen Vadlamudi, Makani Venkata Sai Kiran, Dudekula Hussain Vali

DOI Link: https://doi.org/10.22214/ijraset.2024.58630


Abstract

Millions of people worldwide are deaf or hard of hearing (DHH), a condition that makes spoken communication difficult and necessitates the use of sign language. This communication gap frequently hampers access to education and employment opportunities. Although AI-driven technologies have been studied to tackle this problem, no research has specifically examined the intelligent, automatic translation of American Sign Language (ASL) gestures into text in low-resource languages (LRLs), such as Nigerian languages. We propose an end-to-end system for translating ASL: our framework uses a Transformer-based model for ASL-to-Text generation and the "No Language Left Behind" translation model to translate the generated English text into the LRL. We assessed the performance of the ASL-to-Text system. According to a qualitative analysis of the framework, participants were able to understand the translated text and expressed satisfaction with both the ASL-to-Text and Text-to-LRL models. Our framework shows how AI-driven technologies can promote inclusivity in sociocultural interactions and education, particularly for DHH individuals living in low-resource environments. Sign language recognition is crucial for bridging the gap between non-signers and people with hearing or speech impairments. Sentence-level recognition is more useful in real-world situations than isolated word recognition, but it is also more difficult, since individual signs must be accurately identified and continuous, high-quality sign data with distinct features must be collected. We also propose a wearable sign language recognition system that combines body-mounted inertial measurement units with flexible strain sensors to detect hand postures and movement trajectories, and classifies them with a convolutional neural network (CNN). A total of 48 frequently used ASL terms were collected and used to train the CNN model, resulting in an isolated sign language word recognition accuracy of 95.85%.


I. INTRODUCTION

Effective communication is essential in every aspect of life, and especially so for people who are deaf or hard of hearing. Given the rising incidence of hearing loss, it is critical to close the communication gap between the hearing and non-hearing communities, and the importance of communication has led researchers to look for ways to do so. According to the World Health Organisation, nearly 2.5 billion people worldwide are predicted to have hearing loss by 2050, and at least 700 million of them will need hearing rehabilitation. Furthermore, over 1 billion young adults could suffer permanent, preventable hearing loss because of risky listening habits. Many countries and regions use their own sign languages, and American Sign Language (ASL) is one of the most common.

To address this issue, we introduce a new system that uses machine learning and computer vision to translate ASL into text. The goal of this system is to give deaf and hard-of-hearing people an effective and convenient way to communicate with the hearing population. The system focuses on ASL and uses MediaPipe Holistic keypoints to recognize hand gestures; an action detection model built from LSTM layers then predicts the ASL gesture being performed. By combining state-of-the-art technologies with efficient algorithms, the system is a useful tool for improving communication between the deaf and hard-of-hearing community and the hearing population. Although it can be difficult to find a sign language interpreter for every interaction, an electronic ASL translation system can be deployed almost anywhere.

Within artificial intelligence research, computer vision for object detection is a field that is still maturing. Like other sign languages, ASL is specific to a region, and this system presents effective methods for identifying the hand gestures that convey ASL meanings. The system predicts ASL gestures in real time by extracting MediaPipe Holistic keypoints and feeding them to an LSTM-based action detection model.
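The keypoint-plus-LSTM pipeline described above needs each video frame turned into a fixed-length vector, and frames buffered into sequences. The sketch below shows one common way to do this; it assumes the `results` object returned by `Holistic.process()` in MediaPipe's legacy Solutions API, whose `pose_landmarks`, `face_landmarks`, `left_hand_landmarks` and `right_hand_landmarks` fields are `None` when a part is not detected. The 30-frame window and all function names are hypothetical, not taken from the paper.

```python
from collections import deque

import numpy as np

# Landmark counts for MediaPipe Holistic (legacy Solutions API, default settings):
# 33 pose landmarks carry (x, y, z, visibility); 468 face landmarks and
# 21 landmarks per hand carry (x, y, z).
POSE_COUNT, FACE_COUNT, HAND_COUNT = 33, 468, 21
SEQUENCE_LENGTH = 30  # hypothetical number of frames per gesture window


def flatten_landmarks(landmark_list, count, with_visibility=False):
    """Flatten one landmark group, or return zeros when it was not detected."""
    width = 4 if with_visibility else 3
    if landmark_list is None:
        return np.zeros(count * width, dtype=np.float32)
    rows = []
    for lm in landmark_list.landmark:
        row = [lm.x, lm.y, lm.z]
        if with_visibility:
            row.append(lm.visibility)
        rows.append(row)
    return np.asarray(rows, dtype=np.float32).flatten()


def extract_keypoints(results):
    """Concatenate pose, face, left hand and right hand into one vector (1662 values)."""
    return np.concatenate([
        flatten_landmarks(results.pose_landmarks, POSE_COUNT, with_visibility=True),
        flatten_landmarks(results.face_landmarks, FACE_COUNT),
        flatten_landmarks(results.left_hand_landmarks, HAND_COUNT),
        flatten_landmarks(results.right_hand_landmarks, HAND_COUNT),
    ])


def push_frame(window, keypoints):
    """Append a frame; once the window is full, return a (1, T, features) batch."""
    window.append(keypoints)
    if len(window) < window.maxlen:
        return None
    return np.stack(list(window))[np.newaxis, ...]


frame_window = deque(maxlen=SEQUENCE_LENGTH)
```

In a capture loop, each frame's `holistic.process(rgb_frame)` result would go through `extract_keypoints` and `push_frame`, and every full window would be passed to the LSTM model, which is not shown here. Zero-filling undetected parts keeps every vector at 1662 values, which a fixed-size LSTM input requires.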

II. LITERATURE REVIEW

| Author | Title | Methodology | Remarks |
|---|---|---|---|
| Thariq Khalid; Riad Souissi | Deep Learning for Sign Language Recognition: Current Techniques (IEEE) | CNN, RNN | Performed well with an accuracy of 90.3%; the recognition rate of the CNN was 91.33% |
| Abhishek Wahane, Aditya Kochari, Aditya Hundekari | Real-Time Sign Language Recognition using Deep Learning Techniques (IEEE, 2022) | CNN, machine learning algorithms | Uses Google's Inception v3 for transfer learning and achieves an accuracy of 89.91% |
| Teena Varma, Ricketa Baptista | Sign Language Detection using Image Processing and Deep Learning (IJERT, 2020) | CNN | During live-capture testing, the Canny edge algorithm achieved an accuracy of 98% |
| Sakshi Sharma & Sukhwinder Singh | Recognition of Indian Sign Language (ISL) Using Deep Learning Model | CNN | Three datasets were used, with accuracies of 92.43%, 88.01%, and 99.52% |
| Rameshbhai Kothadiya | Sign Language Detection and Recognition Using Deep Learning (MDPI) | LSTM, GRU, RNN, CNN | LSTM followed by GRU achieves an accuracy of 97% |
| Reddygari Sandhya Rani, R. Rumana, R. Prema | Sign Language Recognition for The Deaf and Dumb | CNN, RNN, machine learning | Achieved a final accuracy of 92.0% on the authors' dataset |

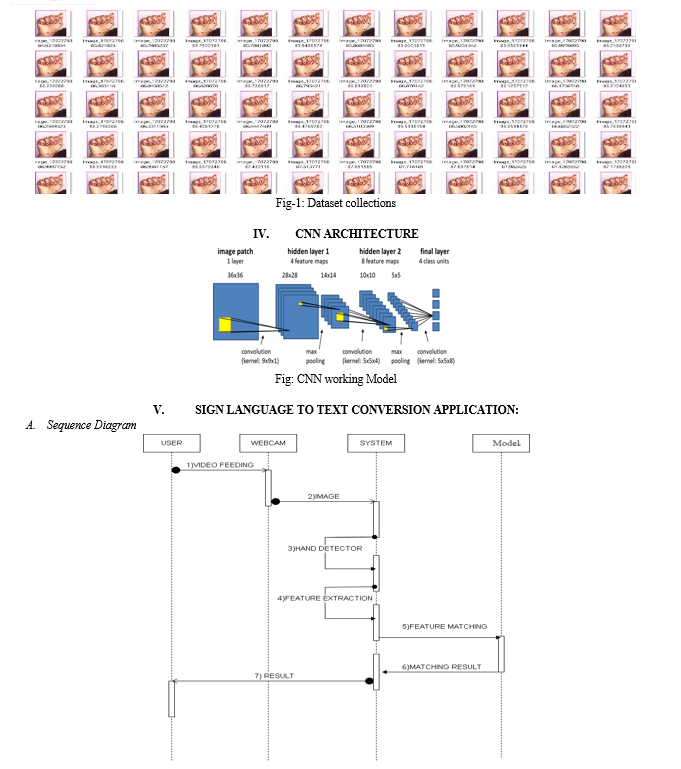

III. METHODOLOGY

A. Data Collection

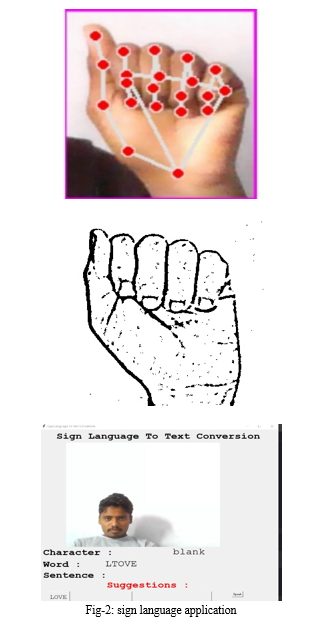

Developing the Sign Language to Text conversion system requires an extensive and varied collection of hand gestures that represent Indian Sign Language. This dataset is gathered using the MediaPipe library and a webcam: MediaPipe places keypoints on the user's hand and tracks hand gestures in real time, while the webcam records the gestures, which are saved as data samples for the dataset. The collected data are used to train and test the machine learning model responsible for identifying hand gestures and translating them into text. To ensure the system's robustness and precision, it is crucial to gather a varied and inclusive dataset that covers a wide range of hand motions and variations in hand movement. Data collection is ongoing, and the dataset is updated regularly so that it appropriately represents Indian Sign Language. With the MediaPipe library and a webcam, we can gather high-quality data samples to create a reliable and accurate sign-language-to-text conversion system.
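The text does not specify how recorded samples are stored. One simple option, assumed here, is to save each recorded gesture as a folder of per-frame NumPy arrays (for example, keypoint vectors produced by MediaPipe) under one directory per label. The directory layout, the label names, and both helper functions are hypothetical.

```python
import os

import numpy as np


def save_sequence(root, label, sequence_id, frames):
    """Save one recorded gesture as root/<label>/<sequence_id>/<frame_index>.npy."""
    seq_dir = os.path.join(root, label, str(sequence_id))
    os.makedirs(seq_dir, exist_ok=True)
    for frame_index, keypoints in enumerate(frames):
        np.save(os.path.join(seq_dir, f"{frame_index}.npy"), keypoints)
    return seq_dir


def load_dataset(root, labels):
    """Load every saved sequence into X (samples, frames, features) and integer labels y."""
    X, y = [], []
    for class_id, label in enumerate(labels):
        label_dir = os.path.join(root, label)
        # Sequence folders are named 0, 1, 2, ... so sort them numerically.
        for seq_name in sorted(os.listdir(label_dir), key=int):
            seq_dir = os.path.join(label_dir, seq_name)
            n_frames = len(os.listdir(seq_dir))
            frames = [np.load(os.path.join(seq_dir, f"{i}.npy")) for i in range(n_frames)]
            X.append(np.stack(frames))
            y.append(class_id)
    return np.stack(X), np.array(y)
```

`np.stack(X)` assumes every recorded sequence has the same number of frames, which holds if each recording uses a fixed window length.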

B. Data Pre-Processing

Pre-processing the hand gesture images is a crucial step in developing the Sign Language to Text Conversion System. Its purpose is to prepare the images so that the machine learning model can more easily recognize hand gestures and convert them into text. The pre-processing stage involves resizing, normalizing, and transforming the hand gesture images so that the model can use them as input. The images are resized to a standard size to make them easier for the model to process. The normalization step aims to eliminate discrepancies in the background, lighting, or colour of the pictures that might otherwise harm the model's performance.

Besides resizing and normalization, the images may also go through a transformation such as cropping or rotation so that the model has a consistent view of the hand gestures. This helps the model identify the hand gestures and lowers the variance in the data. Once pre-processing is finished, the images are ready for training and testing the machine learning model. Because the pre-processed images give the model the information it needs to learn the relationship between hand gestures and the corresponding text, it can recognize gestures and translate them into text with high accuracy. Pre-processing thus enables the Sign Language to Text Conversion System to function as an effective communication tool for deaf and mute people.
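The cropping, rotation, and normalization steps described above can be sketched with NumPy alone. This is an illustrative assumption rather than the authors' pipeline: rotation is limited to 90-degree turns via `np.rot90` (small-angle rotation would typically use OpenCV's `cv2.getRotationMatrix2D` with `cv2.warpAffine`), and normalization is taken to mean per-image standardization, which reduces brightness and contrast differences between pictures.

```python
import numpy as np


def center_crop(image, size):
    """Crop a size x size square from the centre of an H x W x C image."""
    h, w = image.shape[:2]
    top, left = (h - size) // 2, (w - size) // 2
    return image[top:top + size, left:left + size]


def standardize(image, eps=1e-7):
    """Per-image zero mean and unit variance, reducing lighting/contrast differences."""
    image = image.astype(np.float32)
    return (image - image.mean()) / (image.std() + eps)


def preprocess(image, crop_size, quarter_turns=0):
    """Crop, optionally rotate by multiples of 90 degrees, then standardize."""
    cropped = center_crop(image, crop_size)
    rotated = np.rot90(cropped, k=quarter_turns, axes=(0, 1))
    return standardize(rotated)
```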

C. Labelling Text Data

Labelling the hand gestures is an essential step in creating the Sign Language to Text Conversion System. Each hand gesture in the dataset is given a label corresponding to the word or phrase it represents. Labelling is important because it gives the machine learning model the information it needs to identify hand gestures and interpret them as text. The labels follow the standard terminology and grammar of Indian Sign Language, and they are assigned by an expert in Indian Sign Language to ensure that they are accurate and consistent. Although labelling is done manually, it can be partially automated with computer vision techniques. Once labelled, the hand gestures can be used to train and test the machine learning model, which learns the relationship between each gesture and its corresponding text from the labelled data. With appropriately labelled data, the Sign Language to Text Conversion System becomes an effective tool for helping deaf and mute people communicate with others.
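Once gestures are labelled, the labels are usually converted to integer ids and, for a softmax classifier, to one-hot vectors. A minimal NumPy sketch follows, assuming labels are numbered in sorted order; the gesture names in the usage are hypothetical, and `one_hot` mirrors what Keras' `to_categorical` utility produces.

```python
import numpy as np


def build_label_map(labels):
    """Assign each distinct label an integer id, in sorted order."""
    return {label: idx for idx, label in enumerate(sorted(set(labels)))}


def one_hot(ids, num_classes):
    """Convert integer class ids into one-hot rows."""
    ids = np.asarray(ids)
    encoded = np.zeros((ids.size, num_classes), dtype=np.float32)
    encoded[np.arange(ids.size), ids] = 1.0
    return encoded
```

For example, the labels `["thanks", "hello", "hello", "yes"]` give the map `{"hello": 0, "thanks": 1, "yes": 2}`.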

D. Training and Testing

The pre-processed hand gesture images and their associated text labels are fed to the model during the training phase. From this data, the model learns how the hand gestures relate to the text, updating its parameters as it processes more data.
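The paper trains a CNN, which is too large to show here. As a stand-in, the following sketch uses softmax regression in NumPy to illustrate the same idea of updating parameters batch by batch with mini-batch gradient descent on a cross-entropy loss. The model, learning rate, batch size, and epoch count are illustrative assumptions, not the authors' settings.

```python
import numpy as np


def softmax(logits):
    """Row-wise softmax, shifted by the row maximum for numerical stability."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def cross_entropy(probs, y_onehot):
    """Mean categorical cross-entropy over the samples."""
    return float(-np.mean(np.sum(y_onehot * np.log(probs + 1e-12), axis=1)))


def train_softmax(X, y_onehot, epochs=100, lr=0.1, batch_size=32, seed=0):
    """Mini-batch gradient descent; returns weights, bias and the loss after each epoch."""
    rng = np.random.default_rng(seed)
    n_samples, n_features = X.shape
    n_classes = y_onehot.shape[1]
    W = np.zeros((n_features, n_classes))
    b = np.zeros(n_classes)
    losses = []
    for _ in range(epochs):
        order = rng.permutation(n_samples)  # reshuffle every epoch
        for start in range(0, n_samples, batch_size):
            idx = order[start:start + batch_size]
            probs = softmax(X[idx] @ W + b)
            grad_logits = (probs - y_onehot[idx]) / len(idx)  # d(loss)/d(logits)
            W -= lr * X[idx].T @ grad_logits
            b -= lr * grad_logits.sum(axis=0)
        losses.append(cross_entropy(softmax(X @ W + b), y_onehot))
    return W, b, losses
```

A CNN is trained the same way, except that the gradients are obtained by backpropagation through the convolutional layers, which deep learning frameworks compute automatically.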

E. Dataset

The deep learning sign language to text conversion model was trained and tested using the 87,000-image ASL (American Sign Language) dataset. This dataset has 29 labels (A to Z, space, delete, nothing); for training, the letter A is mapped to 0, B to 1, and so on, with nothing mapped to 28.
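The class numbering described above can be written down explicitly. In this sketch the class-name strings are assumptions (the dataset's own folder names may differ), and fixing the order in a list avoids depending on how a data loader happens to sort folder names. The hypothetical `ids_to_text` helper shows one way the three non-letter classes could be interpreted when turning a stream of predictions into text.

```python
import string

# Order given in the text: A..Z -> 0..25, then space, delete, nothing -> 26, 27, 28.
CLASSES = list(string.ascii_uppercase) + ["space", "delete", "nothing"]
LABEL_TO_ID = {name: idx for idx, name in enumerate(CLASSES)}
ID_TO_LABEL = dict(enumerate(CLASSES))


def ids_to_text(ids):
    """Turn predicted class ids into text: letters are appended, space adds ' ',
    delete removes the last character, and nothing is ignored."""
    chars = []
    for class_id in ids:
        name = ID_TO_LABEL[class_id]
        if name == "space":
            chars.append(" ")
        elif name == "delete":
            if chars:
                chars.pop()
        elif name != "nothing":
            chars.append(name)
    return "".join(chars)
```

For example, the id sequence `[7, 8, 26, 0, 27, 28, 1]` (H, I, space, A, delete, nothing, B) yields `"HI B"`.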

Reducing the image size to 64 by 64 pixels accelerated the training process while retaining the details needed for recognition. After the data were loaded into a NumPy array, the pixel values were scaled to the range 0 to 1 to help prevent the exploding-gradient problem commonly encountered in CNN training and transfer learning. The image processing steps are illustrated in Fig. 1.
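As a sketch of the two steps just described (not the authors' code), the functions below resize each image to 64 × 64 with nearest-neighbour sampling and scale pixel values to [0, 1]. In practice a library resizer such as OpenCV's `cv2.resize(image, (64, 64))`, which takes the target size as (width, height), would usually be used; the NumPy version keeps the example dependency-free.

```python
import numpy as np

TARGET_SIZE = 64  # 64 x 64 pixels, as stated in the text


def resize_nearest(image, size=TARGET_SIZE):
    """Nearest-neighbour resize of an H x W x C image to size x size."""
    h, w = image.shape[:2]
    rows = np.arange(size) * h // size  # source row for each output row
    cols = np.arange(size) * w // size  # source column for each output column
    return image[rows[:, None], cols]


def to_unit_range(images):
    """Scale uint8 pixel values from [0, 255] to [0.0, 1.0] as float32."""
    return images.astype(np.float32) / 255.0


def prepare_batch(images):
    """Resize a list of images and stack them into a scaled (N, 64, 64, C) array."""
    return to_unit_range(np.stack([resize_nearest(img) for img in images]))
```

Holding all 87,000 images as 64 × 64 × 3 float32 arrays takes about 4.3 GB (87,000 × 12,288 values × 4 bytes), compared with about 1.1 GB as uint8, so scaling each batch on the fly rather than the whole array up front can save memory.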

VI. RESULTS

We used hold-out validation to check whether our model was overfitting. Overfitting occurs when a model fits the training data well but fails to generalize and make accurate predictions on new data. The data were split into two sets: a training set, used to train the model, and a validation set, used afterwards to evaluate its performance. The model achieved an accuracy of 98.8% on the training set and 98.7% on the validation set; the small gap between the two indicates that the model is not overfitting.

This suggests that the model should achieve an accuracy of about 98.7% on new data from a similar distribution. The model's loss is 0.35%. As shown in Fig. 3, the training and validation losses decreased with
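The train/validation check described in this section can be sketched as follows. The 20% validation fraction, the seed, and the function names are hypothetical; the paper does not state its split ratio. `k_fold_indices` is included for readers who want full k-fold cross-validation, in which every sample is used for validation exactly once.

```python
import numpy as np


def holdout_split(n_samples, val_fraction=0.2, seed=0):
    """Shuffle sample indices and split them into disjoint train/validation sets."""
    order = np.random.default_rng(seed).permutation(n_samples)
    n_val = int(round(n_samples * val_fraction))
    return order[n_val:], order[:n_val]


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train, validation) index arrays; each sample is validated exactly once."""
    order = np.random.default_rng(seed).permutation(n_samples)
    folds = np.array_split(order, k)
    for i in range(k):
        train = np.concatenate([fold for j, fold in enumerate(folds) if j != i])
        yield train, folds[i]


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return float(np.mean(np.asarray(y_true) == np.asarray(y_pred)))


def generalization_gap(train_acc, val_acc):
    """Training minus validation accuracy; a large positive gap suggests overfitting."""
    return train_acc - val_acc
```

With the reported figures, `generalization_gap(0.988, 0.987)` is about 0.001, that is, 0.1 percentage points.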

VII. FUTURE SCOPE

This deep learning model was trained on an American Sign Language dataset. In future work, we can attempt to broaden its scope by training it on British Sign Language, Indian Sign Language, and other sign language datasets. Furthermore, we assumed that the background of the images on which predictions are made would be smooth; this limitation could be addressed by using augmentation techniques that add noise to the training images.
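The noise-based augmentation suggested above could look like the following NumPy sketch. It assumes images already scaled to [0, 1]; the noise level `sigma = 0.05` and the function names are illustrative choices, not values from the paper.

```python
import numpy as np


def add_gaussian_noise(image, sigma=0.05, rng=None):
    """Add zero-mean Gaussian noise to an image scaled to [0, 1], then clip to that range."""
    rng = np.random.default_rng() if rng is None else rng
    noisy = image + rng.normal(0.0, sigma, size=image.shape)
    return np.clip(noisy, 0.0, 1.0).astype(np.float32)


def augment_batch(images, sigma=0.05, seed=0):
    """Return a noisy copy of an (N, H, W, C) batch; the original batch is left unchanged."""
    rng = np.random.default_rng(seed)
    return np.stack([add_gaussian_noise(img, sigma, rng) for img in images])
```

Noise would be applied only to training images, so that validation accuracy still reflects the model's behaviour on unmodified inputs.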

Conclusion

In this work, we have introduced a deep learning model, based on the transfer learning concept, that demonstrated a notable improvement over the current CNN model and achieved high accuracy. We identified the flaws in current deep learning models and created a new one. The model presented in this paper achieved an accuracy of 98.7%, an increase of more than 4%, made possible by VGG16 transfer learning with ImageNet weights. The model's accuracy was further improved by careful experimentation to find suitable hyperparameters. Input images are resized to 64 by 64 pixels. Additionally, we have suggested an application architecture that is designed for simple
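As a worked check of what a 64 × 64 input means for a VGG16 backbone: in Keras such a backbone would typically be created with `tf.keras.applications.VGG16(weights="imagenet", include_top=False, input_shape=(64, 64, 3))`, and with `include_top=False` Keras accepts inputs as small as 32 × 32. VGG16's five convolutional blocks each end in a 2 × 2, stride-2 max-pool, so the spatial size halves five times. The hypothetical helper below computes the resulting feature-map shape; the classifier head the authors placed on top is not described in the text.

```python
# Output channels of VGG16's five convolutional blocks. Each block uses 3x3
# convolutions with 'same' padding (spatial size unchanged) followed by a
# 2x2 max-pool with stride 2 (spatial size halved, rounding down).
VGG16_BLOCK_CHANNELS = (64, 128, 256, 512, 512)


def vgg16_feature_shape(height, width):
    """Shape of VGG16's convolutional output for an input of height x width pixels."""
    for _ in VGG16_BLOCK_CHANNELS:
        height, width = height // 2, width // 2
    return height, width, VGG16_BLOCK_CHANNELS[-1]


def flattened_size(height, width):
    """Number of features after flattening the VGG16 feature map."""
    h, w, c = vgg16_feature_shape(height, width)
    return h * w * c
```

For 64 × 64 inputs the backbone outputs a 2 × 2 × 512 feature map (2,048 values after flattening), compared with 7 × 7 × 512 for the standard 224 × 224 ImageNet input.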


Copyright

Copyright © 2024 Mrs. N. Kirthiga, Miriyala Vamsi Krishna, Venkata Naveen Vadlamudi, Makani Venkata Sai Kiran, Dudekula Hussain Vali. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper ID: IJRASET58630

Publish Date: 2024-02-27

ISSN: 2321-9653

Publisher Name: IJRASET
