Ijraset Journal For Research in Applied Science and Engineering Technology

Iris Unleashed: Revolutionizing Mouse Control through OpenCV

Authors: Farooq Sunar Mahammad, Lakshmi Sravani K, Sandhya B, Neha Maheen A, Manisha M, Dharani T

DOI Link: https://doi.org/10.22214/ijraset.2024.60909

Abstract

Background: In today's world, personal computers have become an essential part of daily routines, serving purposes such as work, education, and entertainment. However, for individuals with limited mobility, traditional input devices such as keyboards and mice can be quite challenging to use. In such cases, alternative input methods that rely on other abilities, such as eye movements, are preferred. Objectives: The proposed system captures images with a camera and uses OpenCV to detect the position of the pupil in the eye. This position serves as a reference point that lets the user control the cursor by moving their eyes left and right. Methods: Building the Iris Mouse (Eye Mouse) system with OpenCV involves integrating several technologies, including image processing algorithms, machine learning models, software development tools, and Python libraries such as cv2 (OpenCV), numpy, mediapipe, math, and pyautogui. Statistical Analysis: By analysing changes in the pupil's position and orientation with image processing algorithms, the system determines the direction and extent of the user's gaze, enabling precise cursor control. Machine learning models trained on labelled data classify eye features and predict gaze direction with higher accuracy. Findings: A mouse control system based on eye tracking is a substantial step toward improving the accessibility and usability of personal computers, particularly for individuals with motor disabilities. The proposed model, which combines computer vision techniques with OpenCV, offers an affordable and adaptable way to control the mouse cursor through eye movements. Applications: Eye-tracking systems serve many purposes across fields including assistive technology, user interface design, psychology, and marketing research.
Improvements: Future eye-tracking research may explore new applications, such as immersive virtual reality experiences, gaze-based interaction in augmented reality, and integration with brain-computer interfaces for enhanced control and communication.

I. INTRODUCTION

In today's world, computers are essential tools that we use every day for work, school, and entertainment. However, for people with limited mobility, traditional input devices such as keyboards and mice can be difficult to use. To address this problem, methods of controlling computers with eye movements have been developed. This paper presents an affordable eye-tracking system that lets users control the mouse cursor. Using a modified webcam, the system captures images of the user's eyes and tracks their movements in real time with OpenCV-based image processing software. By locating the pupil, the system lets users move the cursor smoothly across the screen. The goal of this research is to make technology more accessible for people with disabilities: with eye tracking, people with limited mobility can use computers independently, which promotes inclusivity in today's digital world.

II. LITERATURE SURVEY

Researchers studying human visual perception have examined head pose and eye orientation to understand gaze estimation. Among the various approaches, the "one-circle algorithm" stands out. It estimates eye gaze from a monocular image zoomed in on a single eye. Treating the iris contour as a circle, the method estimates the normal direction of that circle, which represents the eye gaze.

Using basic projective geometry, the elliptical image of the iris is back-projected onto two candidate circles. These are disambiguated by a geometric constraint: the distances between the eyeball's center and the two eye corners must be equal. Because only one eye needs to be imaged, the approach can use higher-resolution iris images captured with zoom-in cameras, which leads to more accurate gaze estimation. A holistic approach that combines head pose determination with gaze estimation is also proposed, in which head pose information guides the search for the eye gaze. Extensive experiments on synthetic and real images statistically validate the robustness of this gaze determination approach.
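The geometry can be made concrete with a minimal Python sketch. It relies on a simplifying weak-perspective (near-orthographic) assumption instead of the full perspective back-projection described above. Under that assumption, a circle tilted by an angle θ about its major axis projects to an ellipse whose semi-axes satisfy b = a·cos θ. The tilt therefore follows from the axis ratio, but the direction of the tilt along the minor axis stays ambiguous. The two returned normals correspond to the two candidate circles. The eye-corner constraint that selects one of them is not implemented here. The function name `iris_normals` and its ellipse inputs are illustrative assumptions.

```python
import math


def iris_normals(a, b, phi):
    """Two candidate gaze normals for an iris circle imaged as an ellipse.

    a, b: semi-major and semi-minor axes of the iris ellipse (pixels), a >= b > 0.
    phi:  angle of the major axis in the image plane, in radians.
    Weak-perspective assumption: b = a * cos(theta), where theta is the tilt of
    the iris plane, and the normal leans toward the minor-axis direction. The
    sign of that lean cannot be recovered from the ellipse alone.
    """
    if not (a >= b > 0):
        raise ValueError("expected a >= b > 0")
    theta = math.acos(b / a)   # tilt of the iris plane away from the camera axis
    psi = phi + math.pi / 2    # image direction of the minor axis
    s, c = math.sin(theta), math.cos(theta)
    # Camera looks along +z, so a gaze straight into the camera is (0, 0, -1).
    n1 = (s * math.cos(psi), s * math.sin(psi), -c)
    n2 = (-s * math.cos(psi), -s * math.sin(psi), -c)
    return n1, n2
```

For example, `iris_normals(10.0, 5.0, 0.0)` describes an iris image half as tall as it is wide. This gives a tilt of 60° and normals of roughly (0, ±0.866, -0.5).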

| S. No. | Authors | Technology | Advantages | Limitations |
|---|---|---|---|---|
| 1 | Hritik Josi, Nitin Waybhase | Informative algorithmic rule | The detected hand is transformed into a binary image. | The system can become slow. |
| 2 | Anadi Mishra, Sultan Faiji, Pragati Verma, Shyam Dwivedi, Rita Pal | cv2.VideoCapture, OpenCV, and MediaPipe | The screen can be controlled by moving a finger, which works as the cursor. | Only the proper hand can be used to perform gestures. |
| 3 | Mohamed Nasol, Mujeeb Rahman, Haya Ansari | MATLAB | Detects eye movement. | Small decrease in accuracy. |
| 4 | Sunil Kumar Beemanapally, Chetan Kumar, Diksha Kumari | Image processing, eye tracking, Hough transform | Clear and concise. | Deforms non-elastically as the pupil changes size. |
| 5 | Khushi Patel, Snehal Solaunde, Shivani Bhong | MediaPipe, OpenCV | Hands-free control. | Privacy concerns. |

III. EXISTING SYSTEM

Existing systems for mouse control typically rely on conventional input devices such as a computer mouse, a touchpad, or a keyboard. However, these devices can be difficult to use for individuals with motor disabilities or limited physical mobility. Specialized input devices are available, but they are often expensive and may not suit every type of impairment. There is therefore a growing need for an affordable and versatile alternative that lets users with motor limitations interact with computers effectively.

In addition, a dynamic computational head compensation model has been developed that automatically adjusts the gaze mapping function whenever the user's head moves.
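The head compensation model itself is not specified here. The sketch below therefore shows a much simpler normalization as a stand-in, not that model: it expresses the pupil center relative to the two eye corners. Coordinates in this frame stay the same when the head translates, rotates within the image plane, or moves closer to or farther from the camera. Out-of-plane head rotation still changes them. The function name is hypothetical, and so is the assumption that the corner and pupil points come from an upstream landmark detector.

```python
def normalized_pupil(pupil, inner_corner, outer_corner):
    """Express the pupil center in a frame attached to the eye corners.

    Returns (u, v): u is the position along the corner-to-corner axis
    (0 at the inner corner, 1 at the outer corner) and v is the signed
    offset perpendicular to that axis. Both are in units of eye width, so
    they are unaffected by head translation, in-plane rotation, and scale.
    """
    ax = outer_corner[0] - inner_corner[0]
    ay = outer_corner[1] - inner_corner[1]
    width_sq = ax * ax + ay * ay
    if width_sq == 0:
        raise ValueError("eye corners coincide")
    px = pupil[0] - inner_corner[0]
    py = pupil[1] - inner_corner[1]
    u = (px * ax + py * ay) / width_sq   # projection onto the eye axis
    v = (ax * py - ay * px) / width_sq   # 2D cross product: perpendicular offset
    return u, v
```

Moving the whole face, scaling it, or rotating it in the image leaves `(u, v)` unchanged. A downstream gaze mapping can therefore stay fixed while the head moves within these limits.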

IV. PROPOSED SYSTEM

The proposed Iris Mouse project addresses the limitations of traditional input devices. Using OpenCV, the system analyzes the real-time video feed from a webcam to detect and track the user's eye movements. These eye movements are translated into cursor movements, replacing the need for a traditional mouse. This hands-free control method has the potential to significantly improve the accessibility and usability of computers for individuals with motor disabilities. In addition, calibration is reduced to a single initial setup for each user.
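One way the single per-user setup could work is sketched below in Python. During calibration, the user looks toward the screen edges while normalized pupil positions are recorded. Afterwards, each new position is mapped linearly onto the screen and clamped to its bounds. The linear mapping, the `Calibration` class, and its method names are assumptions for illustration. The paper does not state which mapping function it uses.

```python
from dataclasses import dataclass


@dataclass
class Calibration:
    """Per-user range of normalized pupil positions, recorded once at setup."""
    u_min: float
    u_max: float
    v_min: float
    v_max: float

    @classmethod
    def from_samples(cls, samples):
        """Build the calibration from (u, v) samples collected while the
        user looks at the screen edges during the initial setup."""
        us = [u for u, _ in samples]
        vs = [v for _, v in samples]
        if max(us) == min(us) or max(vs) == min(vs):
            raise ValueError("calibration samples must span both axes")
        return cls(min(us), max(us), min(vs), max(vs))

    def to_screen(self, u, v, width, height):
        """Linearly map a normalized pupil position to pixel coordinates,
        clamped so the cursor never leaves the screen."""
        fx = (u - self.u_min) / (self.u_max - self.u_min)
        fy = (v - self.v_min) / (self.v_max - self.v_min)
        fx = min(max(fx, 0.0), 1.0)
        fy = min(max(fy, 0.0), 1.0)
        return round(fx * (width - 1)), round(fy * (height - 1))
```

For instance, first build `cal = Calibration.from_samples(samples)`. Then call `cal.to_screen(u, v, 1920, 1080)` on each frame to get a pixel target, which could be passed to a cursor-moving call such as `pyautogui.moveTo(x, y)`.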

V. SYSTEM ARCHITECTURE

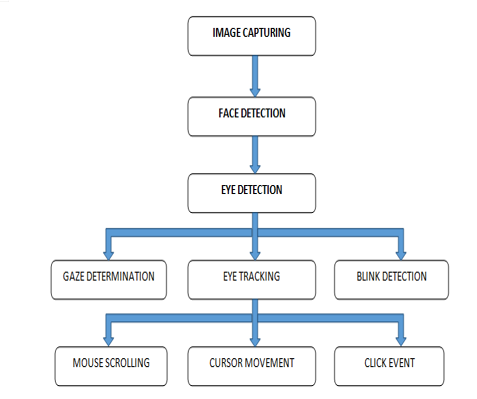

The system uses a customized webcam to capture images of the user's eyes, and OpenCV processes these images in real time. From the eye-movement data, the system calculates the position of the cursor on the screen. A dynamic computational model interprets both eye blinks and pupil movements. The architecture integrates several image processing algorithms to control the cursor precisely, so users can navigate computer interfaces using only their eye movements, which improves accessibility. The design emphasizes both efficiency and accuracy, enabling smooth interaction between the user and the computer.
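The paper does not describe how its model recognizes blinks. A widely used technique that could fill this role is the eye aspect ratio (EAR). EAR is the ratio of the eye's two vertical openings to its horizontal width, and it drops sharply when the lid closes. The Python sketch below computes EAR from six eye-contour points. It reports a blink once the ratio has stayed below a threshold for several consecutive frames and the eye reopens. The 0.2 threshold, the three-frame minimum, and all names are illustrative assumptions that would need tuning per user and camera.

```python
import math


def eye_aspect_ratio(p1, p2, p3, p4, p5, p6):
    """EAR from six eye-contour points given as (x, y) tuples.

    p1 and p4 are the eye corners; p2, p3 lie on the upper lid and p6, p5
    on the lower lid, so that p2-p6 and p3-p5 are the vertical pairs.
    """
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


class BlinkDetector:
    """Report a blink when EAR stays below a threshold for several frames."""

    def __init__(self, threshold=0.2, min_frames=3):
        # Illustrative defaults, not values taken from the paper.
        self.threshold = threshold
        self.min_frames = min_frames
        self.closed_frames = 0

    def update(self, ear):
        """Feed one frame's EAR; return True on the frame the eye reopens
        after having been closed for at least min_frames frames."""
        if ear < self.threshold:
            self.closed_frames += 1
            return False
        blinked = self.closed_frames >= self.min_frames
        self.closed_frames = 0
        return blinked
```

A detected blink could then trigger a click, for example through `pyautogui.click()`. Requiring several closed frames helps prevent brief, involuntary blinks from firing clicks.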

VI. STEPS TO IMPLEMENT PROPOSED MODEL

- Data Gathering: Initially, the system captures live video footage from a webcam to obtain the user's eye movements, which serve as the input data for analysis.

- Preprocessing: The captured video frames undergo preprocessing to enhance their quality and clarity. This preprocessing phase may involve techniques like reducing noise, enhancing contrast, and stabilizing the images to improve accuracy.

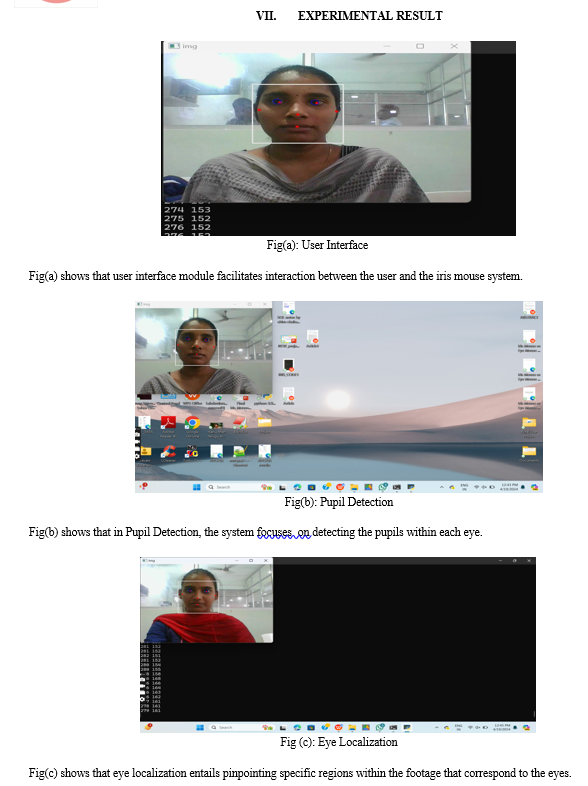
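The paper does not say which filters it applies. The following pure-Python sketch therefore assumes two simple ones: a 3×3 mean filter for noise reduction, followed by a linear contrast stretch to the full 0 to 255 range. Images are plain lists of grayscale rows to keep the example self-contained. In an OpenCV pipeline, `cv2.GaussianBlur` and `cv2.equalizeHist` are the usual built-in counterparts. Image stabilization is not shown.

```python
def box_blur3(img):
    """3x3 mean filter with edge pixels replicated (noise reduction)."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    # Clamp neighbor coordinates so border pixels are reused.
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    total += img[yy][xx]
            out[y][x] = round(total / 9)
    return out


def stretch_contrast(img):
    """Linearly rescale intensities so the darkest pixel becomes 0 and the
    brightest becomes 255 (contrast enhancement)."""
    lo = min(min(row) for row in img)
    hi = max(max(row) for row in img)
    if hi == lo:
        return [row[:] for row in img]  # flat image: nothing to stretch
    scale = 255 / (hi - lo)
    return [[round((p - lo) * scale) for p in row] for row in img]


def preprocess(img):
    """Denoise first, then stretch, so isolated noise does not set the range."""
    return stretch_contrast(box_blur3(img))
```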

- Eye Localization: Utilizing computer vision algorithms, the system identifies and localizes the user's eyes within the video frames. This step entails pinpointing specific regions within the footage that correspond to the eyes.

- Pupil Detection: Once the eyes are identified, the system focuses on detecting the pupils within each eye. This is achieved through specialized image processing methods tailored for pupil detection, such as thresholding and edge detection.

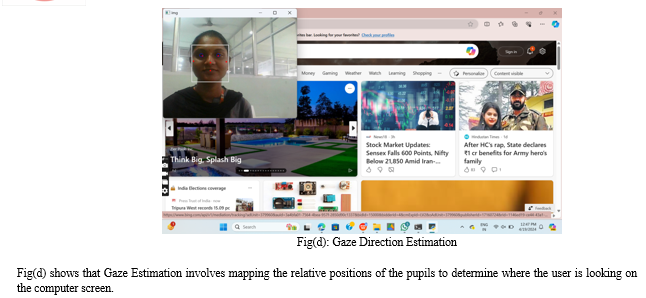
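Thresholding suits pupil detection because the pupil is usually the darkest region of an eye crop. The pure-Python sketch below applies an inverse binary threshold and takes the centroid of the dark pixels via image moments (m10/m00, m01/m00). It assumes the eye region has already been cropped by the localization step, and the default threshold of 40 is a hypothetical value. With OpenCV, the same idea is typically written with `cv2.threshold(..., cv2.THRESH_BINARY_INV)` followed by `cv2.moments`. Edge-detection alternatives such as the Hough transform are not shown.

```python
def pupil_center(eye, threshold=40):
    """Estimate the pupil center in a grayscale eye crop.

    eye: list of rows of 0-255 intensities covering one eye.
    Pixels darker than `threshold` count as pupil (inverse binary threshold);
    the center is the centroid of those pixels, computed from the zeroth-
    and first-order image moments. Returns (x, y) in crop coordinates,
    or None if no pixel is dark enough.
    """
    m00 = m10 = m01 = 0
    for y, row in enumerate(eye):
        for x, value in enumerate(row):
            if value < threshold:
                m00 += 1   # area of the dark region
                m10 += x   # sum of x coordinates
                m01 += y   # sum of y coordinates
    if m00 == 0:
        return None
    return m10 / m00, m01 / m00
```

Adding the crop's offset within the frame converts the result to frame coordinates for the gaze-estimation step.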

- Gaze Direction Estimation: Based on the detected positions of the pupils within the eyes, the system estimates the direction of the user's gaze. This involves mapping the relative positions of the pupils to determine where the user is looking on the computer screen.

- Cursor Manipulation: Using the estimated gaze direction, the system adjusts the position of the cursor on the screen in real time, so that the cursor follows the user's gaze, enabling hands-free cursor control.

- User Interaction: The system enables the user to interact with the computer interface solely through their gaze. This interaction includes actions like clicking, dragging, and scrolling, all of which are executed based on the user's gaze direction.

- Feedback and Calibration: To ensure accurate cursor control, the system provides feedback to the user. Additionally, it may include a calibration process to fine-tune the mapping between the user's gaze and cursor movement, enhancing performance for individual users.

- Integration and Evaluation: Finally, the developed model is integrated into the computer system and rigorously evaluated to assess its functionality, reliability, and usability across different scenarios and environments.
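The steps do not state how raw gaze estimates become a steady cursor position. A simple option, assumed here, is an exponential moving average, sketched below in Python. Each new target is blended with the previous cursor position, which suppresses frame-to-frame jitter at the cost of a little lag. The class name and the default `alpha = 0.3` are hypothetical choices.

```python
class CursorSmoother:
    """Exponential moving average over successive cursor targets.

    alpha = 1 disables smoothing; smaller values smooth more but lag more.
    """

    def __init__(self, alpha=0.3):
        if not 0 < alpha <= 1:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.position = None  # no history until the first target arrives

    def update(self, target):
        """Blend a new (x, y) target into the running position and return
        the smoothed position rounded to whole pixels."""
        if self.position is None:
            self.position = (float(target[0]), float(target[1]))
        else:
            x, y = self.position
            self.position = (x + self.alpha * (target[0] - x),
                             y + self.alpha * (target[1] - y))
        return round(self.position[0]), round(self.position[1])
```

Each smoothed `(x, y)` could be passed to `pyautogui.moveTo(x, y)`. Raising `alpha` makes the cursor more responsive but shakier.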

By adhering to these steps, the system effectively translates the user's eye movements into cursor control, offering an accessible and user-friendly interface for individuals with motor disabilities.
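Putting the steps together, the sketch below shows one possible real-time loop built from libraries the paper lists (cv2, mediapipe, pyautogui). It assumes MediaPipe's legacy Face Mesh solution with `refine_landmarks=True`, which adds iris landmarks, and it takes landmark 473 as one iris center. That index choice, the function name, and the direct mapping from normalized frame coordinates to screen pixels are assumptions, not the authors' implementation. The imports sit inside the function so the definition loads even on a machine without a camera or these packages.

```python
def run_iris_mouse(camera_index=0, max_frames=None):
    """Track one iris with MediaPipe Face Mesh and move the cursor to match.

    Press Esc in the preview window to stop. If max_frames is given, the
    loop also ends after that many frames (useful for a quick test run).
    """
    # Imported lazily so this definition loads without a camera or the packages.
    import cv2
    import mediapipe as mp
    import pyautogui

    screen_w, screen_h = pyautogui.size()
    cap = cv2.VideoCapture(camera_index)
    # refine_landmarks=True adds the iris landmarks (indices 468-477) to the mesh.
    face_mesh = mp.solutions.face_mesh.FaceMesh(max_num_faces=1, refine_landmarks=True)
    frames = 0
    try:
        while cap.isOpened():
            ok, frame = cap.read()
            if not ok:
                break
            frame = cv2.flip(frame, 1)  # flip horizontally so the preview acts like a mirror
            rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)  # MediaPipe expects RGB input
            results = face_mesh.process(rgb)
            if results.multi_face_landmarks:
                # Landmark 473: assumed to be one iris center in the refined mesh.
                iris = results.multi_face_landmarks[0].landmark[473]
                # x and y are normalized to [0, 1] of the frame; scale and clamp to the screen.
                x = min(max(int(iris.x * screen_w), 0), screen_w - 1)
                y = min(max(int(iris.y * screen_h), 0), screen_h - 1)
                pyautogui.moveTo(x, y)
            cv2.imshow("Iris mouse", frame)
            frames += 1
            if cv2.waitKey(1) & 0xFF == 27:  # Esc key
                break
            if max_frames is not None and frames >= max_frames:
                break
    finally:
        cap.release()
        face_mesh.close()
        cv2.destroyAllWindows()
```

Calling `run_iris_mouse()` opens the default camera. Pass `max_frames` for a bounded run. The iris moves over only a small part of the frame, so a practical version would route the landmark through per-user calibration and smoothing rather than scaling it directly to the screen.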

VIII. ACKNOWLEDGEMENT

We are deeply thankful to Dr. Farooq Sunar Mahammad for his support and encouragement. He provided us with the resources and environment needed to pursue academic excellence, and under his mentorship we were able to complete this research successfully.

Conclusion

A mouse control system based on eye tracking is a substantial step toward improving the accessibility and usability of personal computers, particularly for individuals with motor disabilities. The proposed model, which combines computer vision techniques with OpenCV, offers an affordable and adaptable way to control the mouse cursor through eye movements.


Copyright

Copyright © 2024 Farooq Sunar Mahammad, Lakshmi Sravani K, Sandhya B, Neha Maheen A, Manisha M, Dharani T. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET60909

Publish Date : 2024-04-24

ISSN : 2321-9653

Publisher Name : IJRASET
