Ijraset Journal For Research in Applied Science and Engineering Technology

- Home / Ijraset

- On This Page

- Abstract

- Introduction

- Conclusion

- References

- Copyright

The Role of Machine Learning in Natural Language Understanding

Authors: M. Vasuki, M. Muthamizh

DOI Link: https://doi.org/10.22214/ijraset.2023.54611

Certificate: View Certificate

Abstract

This paper shows deeply the algorithms used in Natural Language (NLU) using Machine Learning (ML) in order to develop Natural Language applications like sentimental analysis, text classification and question answering. The paper thoroughly investigates the diverse applications, inherent challenges, and promising future prospects of machine learning in NLU, providing valuable insights into its revolutionary influence on language processing and comprehension.

Introduction

I. INTRODUCTION

The field of Natural Language Understanding (NLU) has been revolutionized by Machine Learning (ML), empowering computers to comprehend and analyze human language with increasing precision and sophistication. NLU encompasses essential tasks like text classification, sentiment analysis, named entity recognition, machine translation, and question answering, which are vital for processing and understanding textual data.

ML algorithms, fueled by extensive labeled datasets, extract patterns and features from examples to generate predictions and derive valuable insights from text. These algorithms excel in capturing intricate relationships, handling ambiguity, and adapting to diverse contexts, enabling machines to comprehend and interpret human language in a more nuanced manner. By integrating ML techniques into NLU, numerous domains have witnessed the emergence of innovative applications, including virtual assistants, chatbots, customer feedback analysis, and information retrieval systems. The relentless advancement of ML continues to inspire researchers and practitioners to explore cutting-edge techniques such as deep learning, transfer learning, and multimodal learning, aiming to further augment the capabilities of NLU systems.

II. BASIC OF MACHINE LEARNING IN NLP

A. Supervised Learning in NLU

Supervised learning in ML for NLU involves training models using labeled data, where each example is associated with a known target or output. This enables the models to learn patterns and relationships between input text and corresponding labels or categories. In NLU, tasks like text classification, named entity recognition, sentiment analysis, and machine translation can benefit from supervised learning algorithms such as SVM, Naive Bayes, and RNNs. These algorithms analyze labeled data, extract relevant features, and build predictive models. The labeled data guides the models to generalize their understanding to new instances. Supervised learning in ML for NLU is crucial for achieving accurate and reliable results, empowering machines to comprehend and interpret human language effectively.

B. Unsupervised learning in NLU.

Unsupervised learning in ML for NLU involves training models without labeled data or explicit target outputs. Instead, the models autonomously learn patterns, structures, and relationships in the input text data. Unsupervised algorithms, like clustering, topic modeling, and autoencoders, are applied to various NLU tasks. These algorithms analyze the data's inherent structures and similarities to uncover hidden patterns and extract meaningful representations. Unsupervised learning in ML for NLU is critical in tasks such as text clustering, anomaly detection, dimensionality reduction, and unsupervised sentiment analysis. By utilizing unsupervised learning techniques, NLU models reveal valuable insights from unstructured text data, support exploratory analysis, and lay the groundwork for subsequent tasks.

C. Deep Learning in NLU.

Deep learning, a subset of Machine Learning (ML) techniques, has revolutionized Natural Language Understanding (NLU) by employing deep neural networks to process and comprehend human language. NLU tasks benefit from various deep learning models, including Recurrent Neural Networks (RNNs), Convolutional Neural Networks (CNNs), and Transformer models, which have demonstrated exceptional performance across different domains. RNNs are specifically designed to handle sequential data and excel in language modeling, machine translation, and sentiment analysis. These networks incorporate recurrent connections that capture dependencies and contextual information within the input sequence. Enhanced variants such as Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) address challenges like the vanishing gradient problem, allowing for improved modeling of long-term dependencies. Although commonly associated with computer vision, CNNs have also proven successful in NLU tasks like text classification and sentiment analysis. In text processing, CNNs employ filters or kernels to extract local patterns or n-grams from the input text. By applying these filters across the entire input, the CNN learns hierarchical representations, effectively extracting crucial features for classification or analysis.

III. PREPARING DATA FOR NLU

A. Data collection and Cleaning

Data collection and cleaning play a crucial role in Machine Learning (ML) for Natural Language Understanding (NLU). These essential steps involve acquiring relevant data and preparing it for training ML models to achieve accurate and reliable outcomes. The process begins with gathering a diverse and representative dataset that aligns with the specific NLU task, sourced from various channels like social media, web scraping, or existing databases. It is crucial to ensure the dataset covers different aspects and variations of the target domain to promote model generalization. Once the data is collected, it undergoes cleaning and preprocessing. Data cleaning involves removing irrelevant or noisy data, correcting inconsistencies, and handling missing values. This step ensures the dataset's quality and appropriateness for training ML models. Additionally, data preprocessing tasks, such as tokenization, stemming, and stop word removal, can be applied to transform the raw text data into a more suitable format for NLU tasks. These preprocessing techniques enhance the model's ability to learn meaningful patterns and relationships from the data.

B. Text Preprocessing Technique in NLU.

Text preprocessing techniques are essential in Machine Learning (ML) for Natural Language Understanding (NLU) as they transform raw text data into a suitable format for analysis and model training. Tokenization is a common technique that breaks text into individual tokens or words. Lowercasing converts text to lowercase, normalizing it and reducing vocabulary size. Stop word removal eliminates commonly used but insignificant words, improving efficiency and reducing noise. Lemmatization and stemming reduce words to their base or root forms, treating related words as a single entity. Removing special characters and punctuation enhances text readability. By employing these preprocessing techniques, NLU systems can better comprehend and process text, yielding more accurate and meaningful results.

C. Feature Extraction and Representation

Feature extraction and representation play a critical role in ML for NLU by converting raw text data into meaningful numerical representations. These representations enable ML algorithms to understand and process the text, uncover patterns, and make accurate predictions. One popular technique for feature extraction in NLU is the Bag-of-Words (BoW) approach, which represents text as word frequencies or presence indicators. Although BoW ignores word order and context, it effectively captures the overall distribution of words in the text, providing insights into word importance based on frequency or presence.

IV. MACHINE LEARNING ALGORITHMS FOR NLU.

A. Traditional Algorithms in Supervised Learning.

Traditional algorithms have had a significant impact on Natural Language Understanding (NLU) and have been instrumental in addressing a wide range of language processing tasks. These algorithms, which stem from classical machine learning and statistical approaches, provide dependable and explainable solutions for NLU challenges.

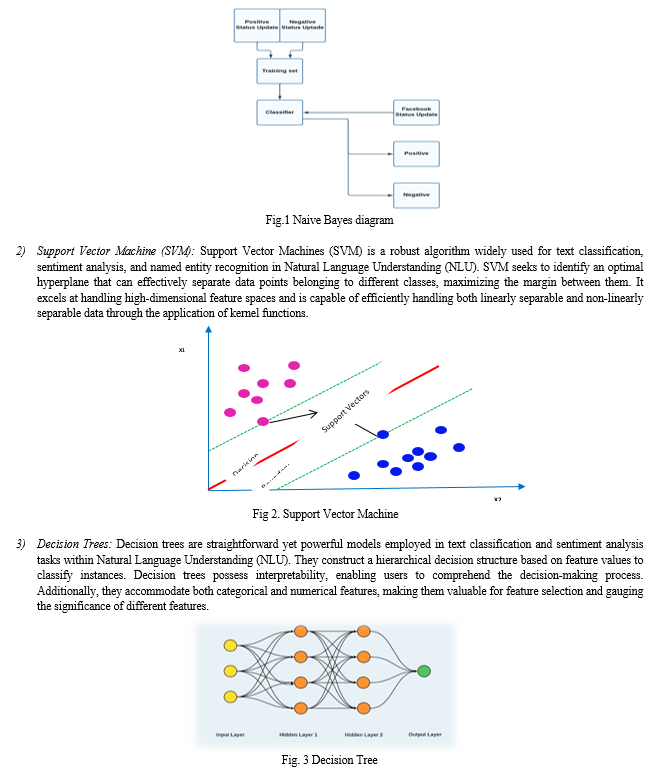

1) Naive Bayes: Naive Bayes is a probabilistic classifier that demonstrates effectiveness in text classification tasks. It operates under the assumption of feature independence and calculates the probability of a document being assigned to a specific class based on the occurrence of words or features. Although Naive Bayes relies on a simplistic assumption, it can deliver rapid and efficient classification outcomes.

- Recurrent Neural Networks (RNN): RNNs are specifically designed to process sequential data and find extensive applications in tasks such as language modelling, machine translation, and sentiment analysis. These networks leverage recurrent connections to capture dependencies and contextual information within the input sequence. Two widely used RNN variants, Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU), effectively tackle the vanishing gradient problem and enhance the modelling of long-term dependencies in the data.

- Convolutional Neural Networks (CNN): Despite their conventional association with computer vision, Convolutional Neural Networks (CNNs) have exhibited exceptional performance in a range of Natural Language Processing (NLP) tasks, including text classification and sentiment analysis. In the domain of NLP, CNNs employ filters or kernels to capture localized patterns or n-grams present in the input text. By systematically applying these filters across the entirety of the input, the CNN learns hierarchical representations, empowering it to extract and exploit critical features that are instrumental in tasks such as classification and analysis. The CNN's capability to discern meaningful patterns within textual data has played a significant role in propelling its triumph in various NLP applications.

- Autoencoders: Autoencoders play a pivotal role as unsupervised models when it comes to acquiring concise representations of textual data. These models consist of an encoder network responsible for compressing the input text into a lower-dimensional latent space, along with a decoder network that reconstructs the original text using the latent representation. Autoencoders find wide application in tasks such as dimensionality reduction, anomaly detection, and text generation. They offer an effective approach for capturing crucial features within the data and hold potential for various natural language processing (NLP) applications.

V. NLU APPLICATIONS AND TASKS

A. Sentiment Analysis

Sentiment analysis aims to determine the sentiment expressed in text, whether it is positive, negative, or neutral. Machine learning models are trained to classify and analyze text sentiment, enabling applications like social media monitoring and customer feedback analysis. It is a widely researched area of NLU that leverages machine learning techniques to determine sentiment in text.

B. Text Classification

Text classification involves the categorization of text documents into pre-defined classes or categories. Through the use of machine learning algorithms, text data can be trained and classified based on labeled examples. This important task finds application in various areas such as spam detection, topic classification, sentiment analysis, and other contexts where the classification of textual information is required.

C. Question Answering

Question answering systems strive to deliver accurate answers to user queries by leveraging a provided context or knowledge base. Through machine learning, models can be trained to comprehend questions and retrieve pertinent information for generating precise responses. This capability finds utility in virtual assistants, search engines, and chatbot applications.

Conclusion

In conclusion, machine learning is crucial for NLU tasks, empowering sophisticated systems to comprehend and process human language. Supervised and unsupervised learning algorithms form the foundation for tasks like sentiment analysis, text classification, and machine translation. Deep learning models, such as RNNs and transformers like BERT, have greatly enhanced NLU capabilities by capturing contextual information and semantic relationships. Ongoing research and advancements in ML techniques continue to drive progress in NLU, enabling more accurate understanding and improved applications in natural language processing, information retrieval, and human-computer interaction.

References

[1] \"Attention Is All You Need\" by Vaswani et al. (2017). Journal: Advances in Neural Information Processing Systems (NeurIPS). [2] \"BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding\" by Devlin et al. (2018). Journal: NAACL-HLT (North American Chapter of the Association for Computational Linguistics: Human Language Technologies). [3] \"GloVe: Global Vectors for Word Representation\" by Pennington et al. (2014). Journal: Empirical Methods in Natural Language Processing (EMNLP). [4] \"Word2Vec\" by Mikolov et al. (2013). Journal: Neural Information Processing Systems (NeurIPS). [5] \"Sequence to Sequence Learning with Neural Networks\" by Sutskever et al. (2014). Journal: Advances in Neural Information Processing Systems (NeurIPS). [6] \"Deep Residual Learning for Image Recognition\" by He et al. (2016). Journal: IEEE Conference on Computer Vision and Pattern Recognition (CVPR). [7] \"A Neural Probabilistic Language Model\" by Bengio et al. (2003). Journal: Journal of Machine Learning Research (JMLR). [8] \"Recurrent Neural Network based Language Model\" by Mikolov et al. (2010). Journal: Interspeech. [9] \"Efficient Estimation of Word Representations in Vector Space\" by Mikolov et al. (2013). Journal: arXiv preprint arXiv:1301.3781. [10] \"Convolutional Neural Networks for Sentence Classification\" by Kim (2014). Journal: Empirical Methods in Natural Language Processing (EMNLP). [11] K. He, X. Zhang, S. Ren, and J. Sun. Delving deep into rectifiers: Surpassing human-level performance on imagenet classification. arXiv preprint arXiv:1502.01852, 2015. [12] K. Heafield, I. Pouzyrevsky, J. H. Clark, and P. Koehn. Scalable modified Kneser-Ney language model estimation. In Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics, pages 690–696, Sofia, Bulgaria, August 2013. [13] R. Kiros, R. Salakhutdinov, and R. Zemel. Multimodal neural language models. In ICML’2014, 2014. [14] R. Jozefowicz, W. Zaremba, and I. Sutskever. An empirical exploration of recurrent network architectures. In Proceedings of the 32nd International Conference on Machine Learning (ICML-15), pages 2342–2350, 2015.

Copyright

Copyright © 2023 M. Vasuki, M. Muthamizh. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Download Paper

Paper Id : IJRASET54611

Publish Date : 2023-07-05

ISSN : 2321-9653

Publisher Name : IJRASET

DOI Link : Click Here

Submit Paper Online

Submit Paper Online