Ijraset Journal For Research in Applied Science and Engineering Technology

Senior-Centric Object Detection for Independent Living

Authors: Prof. Shraddha S. Gugulothu, Ishita Kumbhare, Sejal Birkhede, Tanvee Borse, Shubham Pote, Tanmay Dhole, Vaibhav Gobre

DOI Link: https://doi.org/10.22214/ijraset.2024.61954

Abstract

The global aging of the population is increasing the need for solutions that help seniors preserve their independence. A major obstacle many seniors face is a decline in physical and cognitive abilities, which can make everyday tasks difficult. This study introduces a senior-oriented object detection system to address this problem. The system helps elderly people recognize and locate objects in their living areas using computer vision techniques and machine learning algorithms. It aims to improve seniors' safety and autonomy through real-time support, ultimately leading to a better quality of life. The system is designed around the specific requirements of senior citizens, providing a viable means of fostering independent living in an aging population. With its emphasis on user-centric design, this work is a step toward empowering seniors and improving their well-being.

Introduction

I. INTRODUCTION

The share of elderly people in the population is rising significantly, marking a fundamental change in the global demographic picture. This shift, often called population aging, creates a range of challenges for communities around the world. Among them, protecting the independence and well-being of the elderly is one of the most important. As people age, they often experience physical, mental, and social changes that can affect their ability to live independently and maintain a good standard of living. Interest in using technology to address these challenges for an aging population is growing.

Object recognition systems have proven to be particularly effective tools for meeting the individual needs of the elderly. By detecting and recognizing objects in a given environment, these systems can help the elderly with daily activities, improve safety, and provide quick help in an emergency. Despite these potential benefits, object recognition technology for the elderly still leaves many questions open. Current solutions often lack the user-centered design and customization needed to match the specific requirements and preferences of elderly users.

With this in mind, the present study proposes an object recognition system targeted at the elderly to fill these gaps. The main goal of the research is to create and deploy a reliable, user-friendly system that is suited to the needs and difficulties of the elderly. The proposed system prioritizes simplicity, reliability, and accessibility in order to improve safety, autonomy, and overall quality of life. Our goal is to develop technology-based solutions that address the challenges of population aging by combining theoretical analysis, empirical research, and real-world application.

II. LITERATURE REVIEW

Ripon Patgiri et al. [1] state that quality assurance of blister packages requires defect detection, but manual inspection is inefficient. Their methodology develops an automated defect detection system using YOLOv7 to detect five defect categories: broken pill, crack pill, empty pill, foreign object, and color mismatch.

Fine-tuning improved the precision and recall of the proposed YOLOv7 model by 6% and 5%, respectively. The model also achieves a mAP@0.5 score of 0.962.

K. Elaiyarani et al. [2] state that finding cracks and dents in pressed panel goods is a crucial step in assessing their quality.

The objective of their work is to use a convolutional neural network (CNN) to develop an automated system that accurately recognizes dents on cars or other metal surfaces. The system uses YOLO V3, R-CNN algorithms, and image processing approaches to find dents or cracks in metal or concrete surfaces.

Rohan Chopade et al. [3] state that recognizing cars is vital for control and surveillance systems. Manually reading the number plates of all passing or parked cars is a laborious and complex task for humans. The proposed model, trained on an Indian car number plate dataset, uses YOLOv8 for efficient object detection and ResNet-50 for feature extraction, improving the accuracy and robustness of automatic number plate recognition (ANPR). The system localizes single-line number plates with a success rate of about 98.6% and recognizes characters with a success rate of about 97.81% under widely varying illumination conditions.

P. Pandiaraja et al. [4] describe human-wildlife conflict as the harmful interactions between wild animals and humans, which lead to adverse impacts on individuals, their resources, or the wildlife and its habitat.

Their research uses machine learning techniques, IoT, and the cloud. The model is trained on a dataset, and YOLO v3 is used with a camera for real-time image detection. When an animal enters agricultural land or a residential area, an image is captured and processed, and a flash message is sent to residents and the forest officer informing them of the animal's entry. A speaker placed within range also sounds an alert.

Naresh Kumar Trivedi et al. [5] state that cannabis is a plant species with various subspecies, and that its classification is vital for both medical and regulatory purposes. Their work presents an approach for classifying Thai cannabis plants using a hybrid deep learning model that combines You Only Look Once (YOLO) and Convolutional Neural Networks (CNN).

The proposed model is evaluated against other deep learning models commonly used for image classification and achieves an accuracy of 95.37%.

Shayan Hore et al. [6] present a solution for protecting rural communities in response to the increasing challenge of wild animal intrusions in rural areas.

The proposed system uses cameras and sound recognition technology to detect the presence of potentially dangerous wildlife, and simultaneously emits a loud sound to deter the animals and alert the villagers.

Sagrario Garcia-Garcia and Raúl Pinto-Elías [7] state that human activity recognition (HAR) provides information about situations of interest, making it possible to follow up on the activities carried out. Applications include health (monitoring or diagnosing diseases in vulnerable people), video surveillance (detecting suspicious acts in certain places), human-machine interaction, and robot assistance for people, to mention a few examples.

Their article addresses the implementation of a convolutional neural network (CNN) architecture for identifying activities of interest, as part of a multimodal human activity recognition system.

Xiaodong Yu et al. [8] state that, for an SDSB (Self-Driving Sweeping Bot) to sweep efficiently, visual images of the sweeping targets must first be collected so that the objects to be swept can be distinguished from other noise.

In their work, three categories of target objects (fallen leaves, speed bumps, and manhole covers) are used in the training and validation phases.

To analyse the performance of Yolo v5s on the training and validation sets in detail, the experiments also report several indices as a function of epoch number, including box loss, objectness loss, classification loss, precision, recall, and mean average precision (mAP).

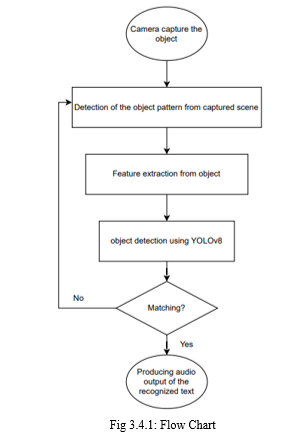

III. METHODOLOGY

A. YOLOv8 in Object Detection: An Introduction

YOLOv8, or You Only Look Once version 8, is a state-of-the-art object detection algorithm widely used in computer vision applications. Developed as an evolution of the YOLO series, it offers significant improvements in accuracy, speed, and robustness compared to its predecessors. YOLOv8 employs a single neural network to predict bounding boxes and class probabilities directly from full images in one evaluation, making it exceptionally fast and efficient for real-time object detection tasks. This architecture enables YOLOv8 to achieve high accuracy while maintaining rapid inference speeds, making it suitable for various applications, including autonomous driving, surveillance, and image analysis.

The architecture employs a series of convolutional layers to extract features from the input image efficiently. From these features, the network predicts candidate bounding boxes with class scores. Non-maximum suppression is then applied to filter redundant bounding boxes, ensuring precise object localization. The remaining bounding boxes are labeled with their corresponding class labels, providing information about the detected objects. Finally, YOLOv8 outputs the labeled bounding boxes along with their associated confidence scores, enabling robust, real-time object detection in various scenarios.
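The non-maximum suppression step described above can be illustrated with a minimal sketch. This is a simplified, class-agnostic greedy variant written for illustration only; it is not the YOLOv8 implementation, and the function names, the `(x1, y1, x2, y2)` box format, and the 0.5 IoU threshold are assumptions.

```python
from typing import List, Sequence, Tuple

# Assumed box format for this sketch: (x1, y1, x2, y2) corner coordinates.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(
    boxes: Sequence[Box], scores: Sequence[float], iou_threshold: float = 0.5
) -> List[int]:
    """Return indices of the boxes to keep, highest score first.

    A box is dropped when it overlaps an already-kept, higher-scoring
    box by more than iou_threshold.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep
```

For example, two heavily overlapping boxes around the same object collapse to the higher-scoring one, while a distant box is kept regardless of its score.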

B. Data Collection and Preliminary Handling

Our method starts with data collection and preliminary handling. We will use the Indoor Object Detection dataset, which is available on Kaggle. This dataset provides an extensive collection of photos and annotations suited to our object detection task. We will use 1349 images of indoor objects for our project. During the initial handling stage, we will preprocess the dataset to ensure uniform image sizes, fix any incorrect or missing annotations, and split the dataset into training, validation, and test sets. This preprocessing phase is essential to ensure the accuracy and consistency of the data used for model training.
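The split into training, validation, and test sets can be sketched as follows. The paper does not state the split ratios, so the 70/20/10 proportions, the fixed seed, and the function name `split_dataset` are assumptions made for illustration.

```python
import random
from typing import Dict, List, Sequence


def split_dataset(
    items: Sequence[str],
    train_frac: float = 0.7,  # assumed ratio, not given in the paper
    val_frac: float = 0.2,    # assumed ratio, not given in the paper
    seed: int = 42,
) -> Dict[str, List[str]]:
    """Shuffle items reproducibly and split them into three disjoint sets.

    Whatever is left after the training and validation shares
    becomes the test set.
    """
    if train_frac <= 0 or val_frac < 0 or train_frac + val_frac >= 1:
        raise ValueError("fractions must leave a non-empty share for testing")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    return {
        "train": shuffled[:n_train],
        "val": shuffled[n_train:n_train + n_val],
        "test": shuffled[n_train + n_val:],
    }
```

With 1349 image names and these assumed ratios, the sketch yields 944 training, 269 validation, and 136 test images. Fixing the seed keeps the split identical across runs, so evaluation results stay comparable.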

C. Model Training

Once the dataset has been preprocessed, model training begins. YOLOv8, a cutting-edge object detection technique, will be used to train our model on the training set from the Indoor dataset.

We will fine-tune the chosen pre-trained model on our dataset in order to benefit from transfer learning and improve performance. To monitor the model's performance and avoid overfitting, several validation tests will be carried out during training.
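One common way to use validation results against overfitting is early stopping: training halts once the validation loss has stopped improving for a set number of epochs. The paper does not say which criterion is used, so the sketch below is only an assumed illustration; the function name and the `patience` value are hypothetical.

```python
from typing import Optional, Sequence


def early_stopping_epoch(
    val_losses: Sequence[float], patience: int = 3
) -> Optional[int]:
    """Return the epoch index at which training would stop, or None.

    Training stops at the first epoch where the validation loss has
    failed to improve on the best value seen so far for `patience`
    consecutive epochs.
    """
    best = float("inf")
    stale_epochs = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best = loss
            stale_epochs = 0
        else:
            stale_epochs += 1
            if stale_epochs >= patience:
                return epoch
    return None
```

A validation loss that keeps rising while the training loss falls is the typical sign of overfitting, which is the situation this check is meant to catch.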

D. Streamlit Application Development

To make the object detection system usable, we will create a Streamlit application after the model has been trained. Streamlit offers a simple, easy-to-use interface for deploying machine learning models. Users of the application will be able to upload photos or use a webcam to identify objects instantly. The application will also improve accessibility and user experience by presenting results and visualizations in an easily understood way.

E. Alert Mechanism

To enhance the functionality of the object detection system, an alert system will be incorporated. When an object is detected, the system will generate a speech output to inform users or caregivers about the detected object. This feature aims to provide timely and clear communication, ensuring that relevant parties are aware of the detected object and can take appropriate actions if necessary.
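As a minimal sketch of the alert step, the function below turns a list of detections into a short sentence that could be handed to a text-to-speech engine. The speech engine itself is left out, and the function name, the `(label, confidence)` input format, and the 0.5 confidence threshold are assumptions rather than details from the paper.

```python
from typing import Iterable, List, Tuple


def build_alert_message(
    detections: Iterable[Tuple[str, float]], min_confidence: float = 0.5
) -> str:
    """Build a spoken-alert sentence from (label, confidence) pairs.

    Low-confidence detections are skipped and repeated labels are
    announced only once, in order of first appearance. An empty string
    means there is nothing to announce.
    """
    labels: List[str] = []
    for label, confidence in detections:
        if confidence >= min_confidence and label not in labels:
            labels.append(label)
    if not labels:
        return ""
    if len(labels) == 1:
        return f"Detected: {labels[0]}."
    return "Detected: " + ", ".join(labels[:-1]) + " and " + labels[-1] + "."
```

Announcing each label once keeps the spoken output short, which matters when the same object is detected in many consecutive frames.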

F. Testing and Evaluation

Finally, our object detection system will undergo extensive testing and assessment to determine its dependability and performance. The test set from the Indoor dataset will be used to assess the F1-score, accuracy, precision, and recall of the model. The Streamlit application will also be put through real-world testing to evaluate its usability and functionality. Feedback from users and caregivers will be gathered to further develop and enhance the system.
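Precision, recall, and F1-score follow directly from the counts of true positives, false positives, and false negatives on the test set. The sketch below shows the arithmetic; the function name is hypothetical, and the counts in the usage note are made-up illustration values, not results from this study.

```python
from typing import Dict


def detection_metrics(tp: int, fp: int, fn: int) -> Dict[str, float]:
    """Compute precision, recall, and F1 from detection counts.

    tp: detections matched to a ground-truth object
    fp: detections with no matching ground-truth object
    fn: ground-truth objects that were missed
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}
```

With hypothetical counts of 8 true positives, 2 false positives, and 2 false negatives, precision, recall, and F1 all come out at 0.8.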

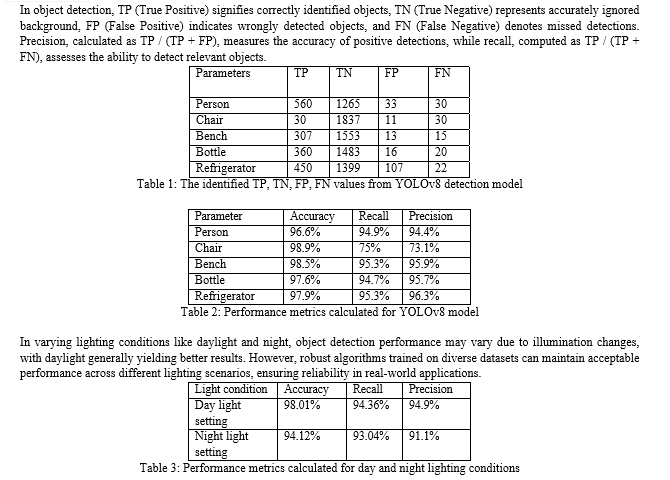

IV. EXPERIMENTAL RESULTS

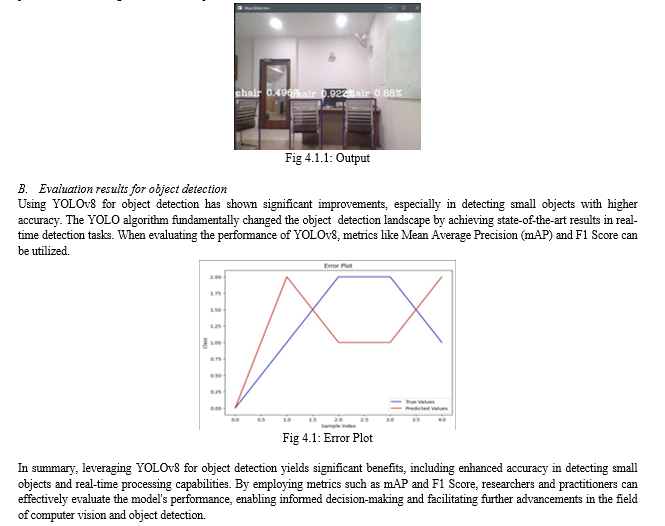

A. Streamlit App for Object Detection Results

A Streamlit application can be built to visualize the detection results, enabling users to upload images or videos and view the detected objects alongside evaluation metrics. Such apps can offer real-time updates and insights into the model's performance, making it easier to interpret and utilize the detection results.

V. FUTURE SCOPE

Several avenues for advancement are open to object detection systems. One is adding environmental sensors to give the detection mechanism context awareness. By drawing on supplementary information from various sensors, such as contextual information about the user, the system can adjust itself dynamically to a variety of circumstances. This flexibility, in turn, has the potential to improve the system's precision and efficiency.

Furthermore, object detection systems have additional opportunities as a result of the confluence of technology and healthcare. These kinds of systems have the potential to develop into strong instruments for remote health management by adding complex health monitoring features. Users, especially the elderly, will be empowered to take proactive measures to protect their well-being by being able to monitor vital signs, sleep quality, and other health data.

Conclusion

In conclusion, the Senior-Centric Object Detection project marks a significant milestone in applying advanced technology to the specific needs of senior citizens. The project has achieved its goals through the creation and deployment of a reliable YOLOv8-based object detection system. The project's results demonstrate how the system can increase seniors' safety and independence and improve their general quality of life. The system is also a useful tool for supporting the well-being of senior citizens because it offers caregivers and family members peace of mind. There is considerable room for improvement in the future. Subsequent work might prioritize widening the range of object classes the system detects, making it relevant in more contexts. There is also potential to improve the system's functionality and user experience through continued work on system integration and new features.

References

[1] Ripon Patgiri; V. Ajantha; S. Bhuvaneswari; V. Subramaniyaswamy. Intelligent Defect Detection System in Pharmaceutical Blisters Using YOLOv7. IEEE Sensors Journal, February 2024.

[2] K. Elaiyarani; B. Nigileeswari; G. Tharagai Rani; J. Rajeswari; S. Vignesh. Machine Learning Based Dent Damage Detection Using Image Processing Methods. IEEE, Vellore, India, February 2024.

[3] Rohan Chopade; Bhakti Ayarekar; Soham Mangore; Ankita Yadav; Uma Gurav. Automatic Number Plate Recognition: A Deep Dive into YOLOv8 and ResNet-50 Integration. IEEE, February 2024.

[4] P. Pandiaraja; U Madhumitha; S Mohan Kumar; N Santhosh. An Analysis of Wild Fauna Trespassing Warning System using CNN and YOLO v3. IEEE, December 2023.

[5] Naresh Kumar Trivedi; Himani Maheshwari; Raj Gaurang Tiwari; Vinay Gautam. Hybrid Deep Learning for Thai Cannabis Plant Classification: YOLO+CNN Approach. IEEE, December 2023.

[6] Shayan Hore; K Deepa Thilak; Silpi Kartheek Achari. Wild Life Detection Providing Security to Villages – YOLO v8. IEEE, December 2023.

[7] Sagrario Garcia-Garcia; Raúl Pinto-Elías. Human Activity Recognition implanting the Yolo models. IEEE, December 2022.

[8] Xiaodong Yu; Ta Wen Kuan; Yuhan Zhang; Taijun Yan. YOLO v5 for SDSB Distant Tiny Object Detection. IEEE, November 2022.

[9] Yonghui Lu; Langwen Zhang; Wei Xie. YOLO-compact: An Efficient YOLO Network for Single Category Real-time Object Detection. IEEE, 2020.

[10] Wenbo Lan; Jianwu Dang; Yangping Wang; Song Wang. Pedestrian Detection Based on YOLO Network Model. IEEE, 2018.

[11] Chaitanya; S Sarath; Malavika; Prasanna; Karthik. Human Emotions Recognition from Thermal Images using Yolo Algorithm. IEEE, 2020.

[12] P Gajalakshmi; J V Satyanarayana; G Venkat Reddy; Sunita Dhavale. Detection of Strategic Targets of Interest in Satellite Images using YOLO. IEEE, 2020.

[13] Vijayakumar Ponnusamy; Amrith Coumaran; Akhash Subramanian Shunmugam; Kritin Rajaram; Sanoj Senthilvelavan. Smart Glass: Real-Time Leaf Disease Detection using YOLO Transfer Learning. IEEE, 2020.

[14] Priyanka Malhotra; Ekansh Garg. Object Detection Techniques: A Comparison. IEEE, 2020.

[15] N. Ragesh; B. Giridhar; D. Lingeshwaran; P. Siddharth; K. P. Peeyush. Deep Learning based Automated Billing Cart. IEEE, 2019.

[16] Rajanikant Tenguria; Saurabh Parkhedkar; Nilesh Modak; Rishikesh Madan; Ankita Tondwalkar. Design framework for general purpose object recognition on a robotic platform. IEEE, 2017.

[17] Naresh Kumar. Machine Intelligence Prospective for Large Scale Video based Visual Activities Analysis. IEEE, 2017.

[18] Joseph Redmon; Santosh Divvala. You Only Look Once: Unified, Real-Time Object Detection. IEEE, 2016.

Copyright

Copyright © 2024 Prof. Shraddha S. Gugulothu, Ishita Kumbhare, Sejal Birkhede, Tanvee Borse, Shubham Pote, Tanmay Dhole, Vaibhav Gobre. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET61954

Publish Date : 2024-05-11

ISSN : 2321-9653

Publisher Name : IJRASET
