Ijraset Journal For Research in Applied Science and Engineering Technology

Signease: Empowering Seamless Communication for the Hearing Impaired

Authors: Dr. S. Brindha, Ms. T. P. Kamatchi, Ms. V. S. Jayani, Ms. S. Sushmitha

DOI Link: https://doi.org/10.22214/ijraset.2024.59956

Abstract

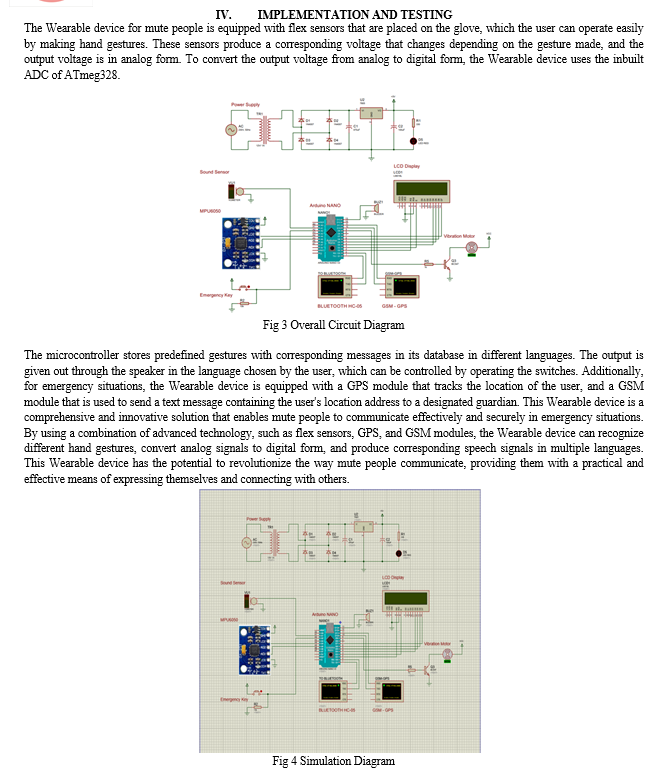

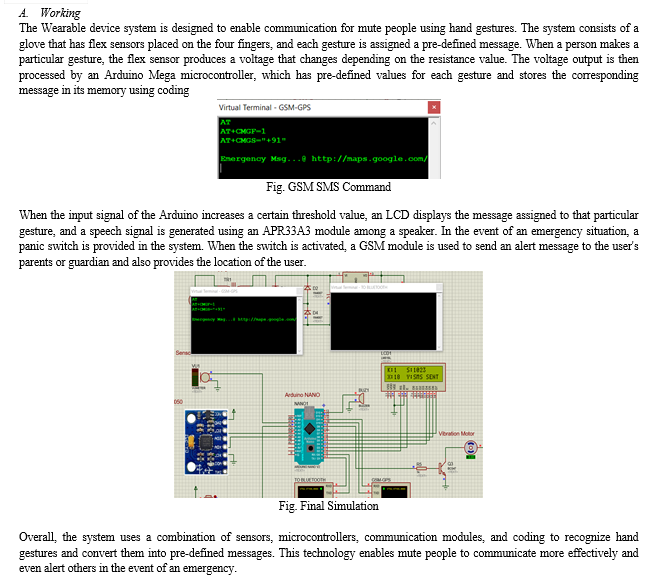

About 2.78% of India's total population cannot speak. Sign language is a nonverbal form of communication used by deaf and mute communities around the world. Most hearing people do not learn sign language, which creates a communication barrier between deaf or mute people and hearing people. Deaf-mute communication therefore requires an interpreter who converts hand gestures into audible speech. Past implementations of this idea relied on image processing, but they were non-portable and too expensive. The proposed system instead uses flex sensors, an accelerometer, and Android technology. It comprises two modules. The first is a hand glove with sensors and a microcontroller that converts hand gestures to audible speech. The second is an Android app that uses the Google Speech API to convert text to speech.

I. INTRODUCTION

The communication barrier faced by the hearing impaired, with over 1.6 million citizens unable to speak and 1.2 million unable to hear in India, highlights the need for accessible communication tools. Current solutions, while existing, are often non-portable, expensive, or one-way, further isolating the disabled from mainstream society. Hand gestures offer an intuitive means of interaction, and gesture recognition technology has shown potential for various applications, including sign language translation. Vision-based techniques, while widely used, are not suitable for mobile applications and may be affected by environmental factors. Inertial sensor technology has emerged as an alternative, providing accurate hand movement capture without interference. By integrating magnetometers, gyroscopes, and accelerometers, drift-free orientation estimation can be achieved, enabling more accurate gesture recognition and translation. This innovative approach holds promise for bridging the communication gap between the hearing impaired and the general population, fostering inclusivity and equal access to communication for all.
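The sensor-fusion idea mentioned above can be illustrated with a complementary filter, one common way to combine a gyroscope (smooth but drifting) with an accelerometer (noisy but drift-free). This is only a minimal sketch: the paper does not say which fusion algorithm is intended, it covers a single tilt axis and leaves out the magnetometer, and the function names, `alpha`, `dt`, and the simulated readings are all assumptions made for illustration.

```python
import math


def accel_tilt_deg(ax, ay, az):
    """Tilt angle in degrees estimated from the gravity vector alone."""
    return math.degrees(math.atan2(ax, math.sqrt(ay * ay + az * az)))


def gyro_only(rates_dps, dt):
    """Integrate the gyroscope rate; any constant bias accumulates as drift."""
    angle = 0.0
    for rate in rates_dps:
        angle += rate * dt
    return angle


def complementary(rates_dps, accels, dt, alpha=0.98):
    """Blend the gyro prediction with the accelerometer angle at each step."""
    angle = accel_tilt_deg(*accels[0])  # initialise from the accelerometer
    for rate, accel in zip(rates_dps[1:], accels[1:]):
        predicted = angle + rate * dt
        angle = alpha * predicted + (1.0 - alpha) * accel_tilt_deg(*accel)
    return angle


# Simulated still hand (assumed data): true tilt is 0 degrees, but the
# gyroscope reports a constant 1 deg/s bias for 10 seconds.
N, DT = 1000, 0.01
rates = [1.0] * N
accels = [(0.0, 0.0, 1.0)] * N

drifted = gyro_only(rates, DT)
fused = complementary(rates, accels, DT)
print(f"gyro only: {drifted:.2f} deg, fused: {fused:.2f} deg")
```

In this simulation the gyro-only estimate keeps growing with time, while the fused estimate stays bounded near the true angle because the accelerometer term continually pulls it back.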

A. Embedded systems

Embedded systems are specialized computer systems designed to perform specific functions within complex devices like cars, with characteristics such as:

- Single Key Function: They run a single program repeatedly, unlike general-purpose computers.

- Direct Interaction: Embedded systems interface with the real world through sensors and user interfaces.

- Tight Boundaries: They operate with limited resources, including power, memory, and interfaces.

- Reactive Principles: Built to serve dedicated functions, they operate based on external stimuli.

- High Reliability: Expected to work continuously for years without issues, even recovering on their own.

In the age of the Internet of Things (IoT), the need for interactions among physical objects has led to challenges in data management, wireless communications, and real-time decision making. Security and privacy concerns have also emerged, driving demand for lightweight cryptographic methods.

To address these needs, we propose a portable hardware system for two-way communication using flex sensors and Android technology. The system converts hand gestures to audible speech for communication between mute and hearing individuals, and converts speech to readable text for communication between deaf and hearing individuals. It includes a hand glove with flex sensors and an Atmel ATmega328 microcontroller, as well as an Android app with Google Speech API integration. The system covers Indian Sign Language and includes a customized section for personal information. A language processing algorithm produces grammatically correct English sentences despite the structural differences between sign language grammar and English grammar.

II. LITERATURE SURVEY

In their 2013 study, authors Lorenzo Chiari and Jorunn L Helbostad address the challenge of falls among older people. They emphasize the necessity of body-worn sensors to enhance the understanding of fall mechanisms and kinematics. The systematic review aims to analyse published studies on fall detection with body-worn sensors. From a collection of 96 records, including journal articles, conference proceedings, and project reports published between 1998 and 2012, the authors extracted and analysed data using SPSS software. Their findings highlight a lack of agreement between methodology and documentation protocols, as well as a dearth of real-world fall recordings. Methodological inconsistencies, such as the absence of an established fall definition and variations in sensor types and specifications, were noted. The study underscores the need for a worldwide research consensus to address key issues in fall detection, including incident verification, guideline establishment, and the development of a common fall definition.

In their 2012 study, authors Wiebren Zijlstra and Jochen Klenk address the persistent challenge of falls among the elderly population. They emphasize the importance of real-time fall detection and prompt communication to telecare centers to facilitate rapid medical assistance, thereby enhancing the sense of security for the elderly and mitigating the negative consequences of falls. The study evaluates the performance of thirteen published fall-detection algorithms on a database comprising 29 real-world falls, collected as part of the SensAction-AAL European project. The findings reveal that while the average specificity (SP) of the algorithms is high (83.0%±30.3%, with a maximum value of 98%), the sensitivity (SE) is considerably lower (57.0%±27.3%, with a maximum value of 82.8%) compared to simulated falls. The study highlights the importance of testing fall-detection algorithms in real-life conditions to develop more effective automated alarm systems with higher acceptance rates. Furthermore, the authors suggest that a large, shared real-world fall database could enhance understanding of the fall process and aid in designing and evaluating high-performance fall detectors.
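For readers unfamiliar with the two metrics quoted above, the standard definitions are given below. The survey text does not spell them out, so the symbols (TP, FN, TN, FP for true positives, false negatives, true negatives, and false positives) are introduced here for illustration.

$$
\mathrm{SE} = \frac{TP}{TP + FN}, \qquad \mathrm{SP} = \frac{TN}{TN + FP}
$$

As a purely illustrative calculation, an algorithm that detects 24 of 29 recorded falls would have $\mathrm{SE} = 24/29 \approx 82.8\%$, which is numerically consistent with the maximum sensitivity reported above, although the text does not state how that figure was obtained.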

In their 2015 paper, authors Jo-Ann Eastwood and Suneil Nyamathi highlight the emergence of remote health monitoring (RHM) as a solution to address the cost burden associated with unhealthy lifestyles and aging populations. They emphasize the importance of enhancing compliance with prescribed medical regimens, particularly in systems utilizing smartphone technology. The paper introduces a technique aimed at improving smartphone battery consumption to enhance users' adherence to remote monitoring systems. The authors deploy WANDA-CVD, an RHM system designed for patients at risk of cardiovascular disease (CVD), which utilizes a wearable smartphone for detecting physical activity. Through an in-lab pilot study and validation in the Women’s Heart Health Study, they demonstrate that the battery optimization technique enhances battery lifetime by an average of 192%, resulting in a 53% increase in compliance. The authors propose that systems like WANDA-CVD have the potential to extend smartphone battery lifetime for RHM systems monitoring physical activity.

III. SYSTEM DESIGN

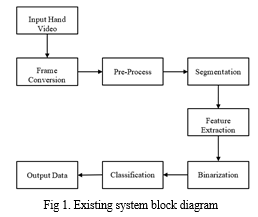

A. Existing system

Existing gesture detection approaches include vision-based, acoustic-based, passive infrared sensor-based, and inertial sensor-based methods. The information these sensors provide for reasoning about the observed space was later integrated into smart environments aimed at delivering assistance services such as continuous diagnosis of users’ health. These smart environments also integrated assistive robotic technologies with sensing networks.

Related wearable sensing work includes a method to assess foot placement during walking using an ambulatory measurement system consisting of orthopaedic sandals equipped with force/moment sensors and inertial sensors. Another example is an inductive sensor for real-time measurement of the distribution of plantar normal and shear forces on a diabetes patient's foot, which can give physicians and patients useful information for preventing foot ulceration.

DISADVANTAGES

- Power is still wasted in the acquisition and processing of the acceleration and pressure signals when the fall detector moves with the wearer during normal physical activities.

- Designs that rely on a passive vibration sensor and a passive tilt sensor consume more power.

B. Proposed system

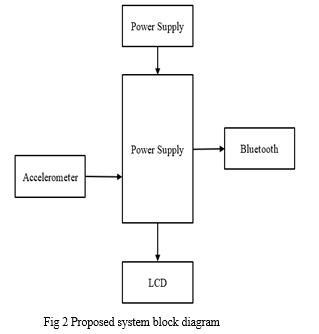

The proposed system supports two-way communication between disabled and hearing people. It consists of two modules: a glove with flex sensors that converts gestures to speech, and an Android app that converts speech to text.

The proposed wearable device converts hand gesture data to a speech signal. The hand glove contains two kinds of sensors, flex sensors and an accelerometer, which convert the gesture into motion data and pass it to a microcontroller. The microcontroller has a Bluetooth module attached, through which the mobile application collects the data from the hand glove and converts it to speech signals.
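The data path described above (glove, microcontroller, Bluetooth link, mobile application) implies that the receiving side must decode each sensor reading before it can be mapped to speech. The paper does not specify a wire format, so the sketch below assumes a hypothetical one: a single text line of five comma-separated integers in the range 0 to 255 (two flex readings followed by the three accelerometer axes). The names `GloveFrame` and `parse_frame` are likewise invented for illustration.

```python
from typing import NamedTuple


class GloveFrame(NamedTuple):
    """One reading from the glove (field layout is an assumption)."""
    flex_thumb: int
    flex_index: int
    accel_x: int
    accel_y: int
    accel_z: int


def parse_frame(line: str) -> GloveFrame:
    """Decode a hypothetical 'f1,f2,ax,ay,az' text line into a GloveFrame."""
    parts = line.strip().split(",")
    if len(parts) != 5:
        raise ValueError(f"expected 5 values, got {len(parts)}")
    values = [int(p) for p in parts]  # raises ValueError on non-numeric input
    for v in values:
        if not 0 <= v <= 255:
            raise ValueError(f"value out of 8-bit range: {v}")
    return GloveFrame(*values)


frame = parse_frame("200,40,128,128,190\n")
print(frame)
```

Rejecting malformed or out-of-range frames early keeps a corrupted Bluetooth packet from being spoken as the wrong letter.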

The wearable device has two kinds of sensors: flex sensors and an accelerometer. The accelerometer output is processed by the tilt detection module, while the flex sensor output, and with it the overall gesture of the hand, is processed by the gesture detection module. When power is on, the position and orientation of the hand are obtained from the data glove, which carries flex sensors on the fingers (thumb and index) and one accelerometer (X, Y, and Z axes). The accelerometer captures the tilt of the palm, and the flex sensors measure the bend of the five fingers while a sign is made. When the user performs a gesture or letter and presses a button, each sensor signal is amplified by its own dedicated amplification circuit and then captured by the microcontroller, which converts the analog signals to digital values through its 8-channel ADC. These values are formatted into a simple state matrix: two values for the flex sensors and one for each axis of the accelerometer. As a result, each letter in the ASL has a specific digital level for the five fingers and the three axes of the accelerometer. Each level is represented by a value between 0 and 255; a tolerance of ±3 levels should be allowed in case the user cannot keep the hand steady.
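The matching step described above, comparing each sensor level against a stored level with a tolerance of ±3, can be sketched as follows. This is an illustration under stated assumptions rather than the authors' implementation: the reference table values are invented, the five-element layout follows the state matrix described in the text (two flex values plus three accelerometer axes) and would need more entries for a glove with five flex sensors, and `scale_adc` assumes that a 10-bit ADC reading is reduced to the 0 to 255 range by dropping the two least significant bits.

```python
from typing import Dict, Optional, Sequence, Tuple

TOLERANCE = 3  # allowed deviation in levels, as stated in the text

# Hypothetical reference levels: (flex 1, flex 2, accel X, accel Y, accel Z).
# These numbers are invented for illustration only.
REFERENCE_GESTURES: Dict[str, Tuple[int, ...]] = {
    "A": (200, 40, 128, 128, 190),
    "B": (60, 220, 128, 100, 180),
}


def scale_adc(raw10: int) -> int:
    """Reduce a 10-bit ADC reading (0..1023) to an 8-bit level (0..255)."""
    if not 0 <= raw10 <= 1023:
        raise ValueError(f"not a 10-bit reading: {raw10}")
    return raw10 >> 2


def matches(reading: Sequence[int], reference: Sequence[int],
            tol: int = TOLERANCE) -> bool:
    """True if every level is within +/- tol of the reference level."""
    return len(reading) == len(reference) and all(
        abs(r - t) <= tol for r, t in zip(reading, reference)
    )


def classify(reading: Sequence[int],
             table: Dict[str, Tuple[int, ...]] = REFERENCE_GESTURES
             ) -> Optional[str]:
    """Return the first gesture whose levels all match, or None."""
    for label, reference in table.items():
        if matches(reading, reference):
            return label
    return None


print(classify((202, 38, 127, 130, 191)))  # slightly unsteady "A"
print(classify((205, 40, 128, 128, 190)))  # first level is 5 off, no match
```

Returning `None` for an unmatched reading lets the application stay silent instead of speaking a wrong letter when the hand is between signs.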

Conclusion

The project aims to bridge communication barriers faced by mute and deaf individuals by implementing a system that converts hand gestures to audible speech and vice versa. Through the integration of Arduino-based gesture recognition and an Android application for speech-to-text conversion, the system provides a user-friendly interface for seamless interaction. By leveraging emerging technologies and innovative design, the project endeavors to empower individuals with speech and hearing impairments, facilitating greater inclusion and accessibility in society. Moving forward, ongoing refinement and testing will be essential to ensure the system's effectiveness and usability in real-world scenarios. Additionally, collaboration with relevant stakeholders and communities will be crucial for gathering feedback and insights to enhance the system's functionality and address user needs. Ultimately, the project represents a significant step towards leveraging technology to promote inclusivity and support the communication needs of diverse individuals.

References

[1] S. N. Robinovitch, F. Feldman, Y. J. Yang, R. Schonnop, P. M. Leung, T. Sarraf, et al., "Video capture of the circumstances of falls in elderly people residing in long-term care: an observational study," Lancet, vol. 381, pp. 47-54, Jan 5 2013.

[2] M. E. Tinetti, W. L. Liu, and E. B. Claus, "Predictors and prognosis of inability to get up after falls among elderly persons," Jama-Journal of the American Medical Association, vol. 269, pp. 65-70, Jan 6 1993.

[3] D. Wild, U. S. L. Nayak, and B. Isaacs, "How dangerous are falls in old-people at home," British Medical Journal, vol. 282, pp. 266-268, 1981.

[4] L. Schwickert, C. Becker, U. Lindemann, C. Marechal, A. Bourke, L. Chiari, et al., "Fall detection with body-worn sensors: a systematic review," Zeitschrift Fur Gerontologie Und Geriatrie, vol. 46, pp. 706-719, Dec 2013.

[5] R. Igual, C. Medrano, and I. Plaza, "Challenges, issues and trends in fall detection systems," Biomedical Engineering Online, vol. 12, p. 66, Jul 6 2013.

[6] F. Bagala, C. Becker, A. Cappello, L. Chiari, K. Aminian, J. M. Hausdorff, et al., "Evaluation of accelerometer-based fall detection algorithms on real-world falls," Plos One, vol. 7, p. e37062, May 16 2012.

[7] N. Alshurafa, J. A. Eastwood, S. Nyamathi, J. J. Liu, W. Y. Xu, H. Ghasemzadeh, et al., "Improving compliance in remote healthcare systems through smartphone battery optimization," IEEE Journal of Biomedical and Health Informatics, vol. 19, pp. 57-63, Jan 2015.

[8] D. M. Karantonis, M. R. Narayanan, M. Mathie, N. H. Lovell, and B. G. Celler, "Implementation of a real-time human movement classifier using a triaxial accelerometer for ambulatory monitoring," IEEE Transactions on Information Technology in Biomedicine, vol. 10, pp. 156-167, Jan 2006.

[9] C. Wang, W. Lu, M. R. Narayanan, S. J. Redmond, and N. H. Lovell, "Low-power technologies for wearable telecare and telehealth systems: A review," Biomedical Engineering Letters, vol. 5, pp. 1-9, 2015.

[10] L. Ren, Q. Zhang, and W. Shi, "Low-power fall detection in home-based environments," in MobileHealth '12, Hilton Head, South Carolina, USA, 2012, pp. 39-44.

[11] S. R. Lord, C. Sherrington, H. B. Menz, and J. C. Close, Falls in older people: risk factors and strategies for prevention. Cambridge, UK: Cambridge University Press, 2007.

Copyright

Copyright © 2024 Dr. S. Brindha, Ms. T. P. Kamatchi, Ms. V. S. Jayani, Ms. S. Sushmitha. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET59956

Publish Date : 2024-04-07

ISSN : 2321-9653

Publisher Name : IJRASET
