Ijraset Journal For Research in Applied Science and Engineering Technology

Signify: An Enhancing ASL Communication Model with Deep Learning

Authors: Vipul Punjabi, Yash Deokar, Rohit Sonawane, Krishna More, Riddesh Suryawanshi

DOI Link: https://doi.org/10.22214/ijraset.2024.65439

Abstract

Technology is now applied to almost every kind of human problem. One of society's fundamental challenges is the wide communication gap between the deaf community and the hearing public. Researchers around the world are developing computer-based American Sign Language (ASL) systems that translate gestures into text or speech. This paper summarizes the current state-of-the-art methodologies used by researchers in the field of ASL systems, from data collection and image processing through to gesture translation. It also discusses the steps followed to develop an ASL system and reviews some of the existing ASL applications.

Introduction

I. INTRODUCTION

The World Health Organization (WHO) estimates that India's deaf and hearing-impaired population is around 63 million. As a result, there is a considerable communication gap between people with hearing loss and the wider public. Sign language plays a significant part in communication between these communities. The main difficulty, however, is understanding and responding appropriately, because many people, whether deaf, hard of hearing, or hearing, are not familiar with sign language. For example, only around 500,000, or roughly 1%, of the 48 million individuals in the United States with hearing loss use sign language. Hearing loss is in fact a spectrum, with different forms of hearing loss and different communication options. Some deaf people use hearing aids, while for others sound amplification is ineffective or unpleasant, and they may use sign language as their primary means of communication. Sign language is regarded as a language in its own right, separate from spoken languages [1].

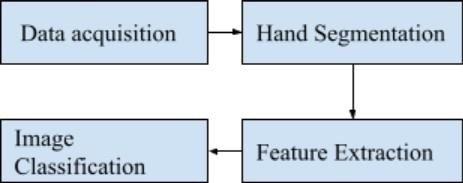

Sign language recognition systems are systems and platforms that capture hand and face motions, recognize them, and translate them into spoken language. Researchers have proposed and built various ASL systems using different approaches and algorithms. The general flow they follow includes four major steps.

The steps are:

- Data Acquisition: Data acquisition is the conversion of real-world signals to the digital domain for display, storage, and analysis.

- Hand Segmentation: Hand segmentation is a technique that isolates the portion of the camera feed that contains the hand.

- Feature Extraction: Feature extraction transforms raw data into numerical features that can be processed while preserving the information in the original dataset.

- Image Classification: Image classification assigns a label or class to an entire image according to properties such as shape and size.

These are the major steps involved in collecting the dataset and building the models. They are discussed in detail in the following sections. This paper is a review of several studies published by researchers around the world.

II. LITERATURE SURVEY

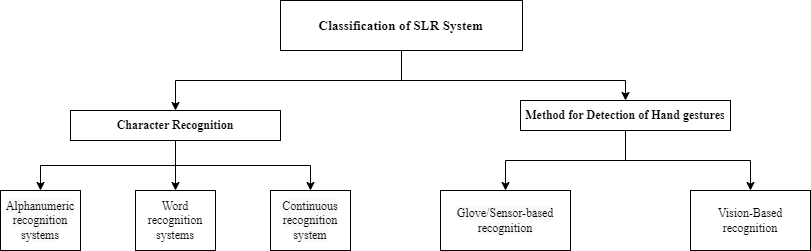

Table 1 lists the most common sign languages and the standard datasets available for them. Table 2 shows some of the most widely used sign language translation software. Two types of databases are used in the development of sign language recognition systems: standard databases and databases created by the researchers themselves. Many academics prefer to train the system using their own datasets, although numerous standard databases for popular sign languages are available. The general classification of ASL systems is shown in Figure 1. Creating a sign language recognition system is a staged process, and many methods and algorithms are applied to make the system effective and accurate.

Figure 1. General classification of ASL systems

Table 1. Popular sign language methods and datasets [11]

| S. No | Sign Language | Abbreviation | Available Standard Datasets |
|---|---|---|---|
| 1 | American Sign Language | ASL | |
| 2 | Indian Sign Language | ISL | RWTH-BOSTON-104 |
| 3 | Brazilian Sign Language | Libras | UCI-Libras Movement Data set |
| 4 | Chinese Sign Language | CSL | CAS-PEAL |
| 5 | Persian Sign Language | PSL | PETS 2002 |
| 6 | Spanish Sign Language | LSE | ARAAC |
| 7 | Irish Sign Language | ISL | ATIS Corpus |
| 8 | Dutch Sign Language | NGT | Corpus NGT |
| 9 | German Sign Language | DGS | |
| 10 | Australian Sign Language | Auslan | UCI Australian Sign Language |

Table 2. Summary of the existing ASL applications

| App Name | Language | Recognizes Alphabets | Recognizes Numbers | Recognizes Words | Recognizes Sentences | Input | Output | Teaching App | Translation App |
|---|---|---|---|---|---|---|---|---|---|
| Mimix 3D | Signing Exact English (SEE) | ✓ | ✓ | ✓ | ✓ | Text/Speech | Gestures | - | ✓ |
| Pro-Deaf Translator | ASL, Brazilian, Portuguese | ✓ | ✓ | ✓ | ✓ | Text | Gestures | ✓ | ✓ |
| The ASL app | ASL | - | - | - | - | - | - | - | ✓ |
| Sanket | ISL | - | - | ✓ | ✓ | Text | Gestures | - | ✓ |
| Indian Sign Language Translator | ISL | ✓ | ✓ | ✓ | ✓ | Speech | Sign language | - | ✓ |
| Sign Language ASL - Pocket Sign | ASL | - | - | - | - | - | - | ✓ | - |
| Deaf & Mute Communication | English | ✓ | ✓ | ✓ | ✓ | Text | Speech | - | ✓ |
| Spread Sign | ASL, British, French, German, etc. | - | - | - | - | - | - | ✓ | - |

The general steps followed for the development of the ASL system are shown in Figure 2.

Figure 2. Steps for developing the ASL system

A. Data/Image Acquisition

In any image processing system, the first stage is image acquisition. The goal of image capture is to convert an optical image (real-world data) into a numerical data array that can later be edited on a computer. A lot of research has been done in the field of ASL, and scholars have created their own standard databases. Researchers use various technologies to capture hand motions when creating datasets. Input from the user can be obtained by a variety of means, including vision-based devices such as cameras and webcams, wearable devices such as sensor gloves and cube gloves, or specific devices such as Microsoft Kinect. The data acquisition procedure is described as "technical work with photographs" and is briefly discussed in [8].
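To make the idea of a "numerical data array" concrete, the following minimal sketch converts a small RGB frame into a grayscale array scaled to the range 0 to 1. The nested-list frame and the function name `to_grayscale` are illustrative assumptions. A real system would read frames from a camera or sensor, and the reviewed papers do not prescribe this particular preprocessing.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each channel in 0-255
Frame = List[List[Pixel]]      # rows of pixels


def to_grayscale(frame: Frame) -> List[List[float]]:
    """Convert an RGB frame to a grayscale array scaled to [0, 1].

    Uses the ITU-R BT.601 luma weights (0.299, 0.587, 0.114).
    """
    return [
        [(0.299 * r + 0.587 * g + 0.114 * b) / 255.0 for (r, g, b) in row]
        for row in frame
    ]


# Hypothetical 2x2 frame standing in for a captured camera image.
frame = [
    [(255, 255, 255), (0, 0, 0)],
    [(255, 0, 0), (0, 0, 255)],
]
gray = to_grayscale(frame)
```

Each entry of `gray` is a single number per pixel, which is the form that the later segmentation and feature extraction stages operate on.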

B. Hand Segmentation

Gesture segmentation is the first step toward visual gesture recognition, which involves detecting, analyzing, and recognizing motions in real-world image sequences. In ASL systems, hand and gesture detection is critical because the outcome of all later processes depends on it. Because there are several different skin tones and segment placements, image segmentation entails training on skin segmentation datasets [2].

The methods in [2], [3], [4], and [10] consist of the following steps:

- The input image is preprocessed, and the region is computed.

- The image's attributes are preprocessed.

- Transliteration, a method of converting an altered image to text, is performed.
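The region computation in the first step can be sketched as follows. This example swaps in a fixed RGB threshold rule for skin detection instead of a model trained on skin segmentation datasets, so it should be read as an illustration only. The thresholds, the function names, and the toy frame are assumptions and are not taken from the cited methods.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each channel in 0-255


def is_skin(pixel: Pixel) -> bool:
    """Heuristic RGB skin test; thresholds are illustrative, not tuned."""
    r, g, b = pixel
    return (
        r > 95 and g > 40 and b > 20
        and max(r, g, b) - min(r, g, b) > 15
        and abs(r - g) > 15
        and r > g and r > b
    )


def skin_mask(frame: List[List[Pixel]]) -> List[List[int]]:
    """Return a binary mask: 1 where the pixel passes the skin test."""
    return [[1 if is_skin(p) else 0 for p in row] for row in frame]


def bounding_box(mask: List[List[int]]) -> Optional[Tuple[int, int, int, int]]:
    """Return (top, left, bottom, right) of the masked region, or None."""
    rows = [i for i, row in enumerate(mask) if any(row)]
    cols = [j for row in mask for j, v in enumerate(row) if v]
    if not rows:
        return None
    return (min(rows), min(cols), max(rows), max(cols))


# Hypothetical 4x4 frame: a skin-colored patch on a blue background.
SKIN, BG = (200, 140, 120), (30, 60, 200)
frame = [
    [BG, BG, BG, BG],
    [BG, SKIN, SKIN, BG],
    [BG, SKIN, SKIN, BG],
    [BG, BG, BG, BG],
]
mask = skin_mask(frame)
box = bounding_box(mask)
```

A fixed rule like this is sensitive to lighting and skin tone, which is one reason the surveyed work trains on dedicated skin segmentation datasets.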

C. Feature Extraction

The input images contain a lot of irrelevant information that does not help with sign language identification, a task that requires a lot of real-time processing. Feature extraction is the process of converting raw data into numerical features that can be processed while preserving the information in the original dataset. It yields better results than simply applying machine learning to raw data. Table 3 summarizes the feature extraction approaches and algorithms employed by researchers, along with their outcomes.
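As a small illustration of turning raw pixels into numerical features, the sketch below reduces a binary hand mask (1 = hand pixel) to a short vector of cell occupancies. The grid-occupancy feature and the name `grid_features` are assumptions chosen for simplicity. The papers summarized in Table 3 use their own, generally richer, feature extractors.

```python
from typing import List


def grid_features(mask: List[List[int]], grid: int = 2) -> List[float]:
    """Split a binary mask into grid x grid cells and return, in row-major
    order, the fraction of foreground pixels in each cell."""
    height, width = len(mask), len(mask[0])
    features = []
    for gi in range(grid):
        r0, r1 = gi * height // grid, (gi + 1) * height // grid
        for gj in range(grid):
            c0, c1 = gj * width // grid, (gj + 1) * width // grid
            cell = [mask[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            features.append(sum(cell) / len(cell) if cell else 0.0)
    return features


# Hypothetical 4x4 mask reduced to a 4-element feature vector.
mask = [
    [1, 1, 0, 0],
    [1, 1, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 0, 0],
]
features = grid_features(mask, grid=2)
```

Sixteen pixel values become four numbers here, which shows the trade-off the paragraph describes: less data to process in real time while keeping the coarse shape of the hand.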

D. Image Classification

Image classification is the process of extracting classes of information from a multiband raster image. The raster produced by image classification can be used to create thematic maps. Classification is crucial in ASL systems because it takes feature-extracted images as input and identifies the correct gesture. The classifier is usually built from a training set of observed data.
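The role of the training set can be illustrated with a k-nearest-neighbors classifier, one of the algorithms listed in Table 3. This is a minimal sketch under stated assumptions: the two-dimensional feature vectors, the labels "A" and "B", and the function name `knn_predict` are hypothetical, and most of the surveyed systems use CNN or LSTM models instead.

```python
import math
from collections import Counter
from typing import Sequence, Tuple


def knn_predict(
    train: Sequence[Tuple[Sequence[float], str]],
    query: Sequence[float],
    k: int = 3,
) -> str:
    """Label a query vector by majority vote among its k nearest
    training vectors (Euclidean distance)."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical feature vectors for two gesture classes.
train = [
    ([0.10, 0.20], "A"), ([0.20, 0.10], "A"), ([0.15, 0.15], "A"),
    ([0.90, 0.80], "B"), ([0.80, 0.90], "B"), ([0.85, 0.85], "B"),
]
label_a = knn_predict(train, [0.12, 0.18])
label_b = knn_predict(train, [0.88, 0.82])
```

The classifier has no parameters to fit; it simply compares a new feature vector with stored examples, so its accuracy depends directly on how well the training set covers each gesture.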

Researchers do not rely on a single means of segmentation; many also use newer technologies such as MediaPipe [10]. MediaPipe is a framework that allows developers to create multi-modal applications. Its pipelines are made up of nodes in a graph, and it represents the body as a skeleton of nodes, edges, and landmarks that track key points. In ASL work, MediaPipe is used to preprocess images in order to obtain multi-hand landmarks. All coordinate points are normalized in three dimensions, and the hand alone accounts for 21 of the key points. Developers use TensorFlow to develop the models. MediaPipe is becoming more popular for ASL development because it makes recording the key points of gestures convenient.
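A common way to prepare such landmarks for a classifier is to make them independent of where the hand sits in the frame and how large it appears. The sketch below does not call MediaPipe. It assumes the 21 (x, y, z) hand landmarks have already been obtained and uses synthetic values in their place. The wrist is landmark 0 in MediaPipe's hand model, while the function name and the choice of normalization are assumptions.

```python
import math
from typing import List, Tuple

Landmark = Tuple[float, float, float]  # (x, y, z)
WRIST = 0  # index of the wrist in MediaPipe's 21-point hand model


def normalize_landmarks(landmarks: List[Landmark]) -> List[float]:
    """Translate so the wrist is the origin, scale so the farthest point
    lies at distance 1, and flatten to a 63-element feature vector."""
    if len(landmarks) != 21:
        raise ValueError("expected 21 hand landmarks")
    wx, wy, wz = landmarks[WRIST]
    shifted = [(x - wx, y - wy, z - wz) for x, y, z in landmarks]
    # Fall back to 1.0 if all points coincide, to avoid dividing by zero.
    scale = max(math.hypot(*p) for p in shifted) or 1.0
    return [coord / scale for p in shifted for coord in p]


# Synthetic landmarks standing in for one detected hand.
hand = [(0.5 + 0.01 * i, 0.5 - 0.02 * i, 0.0) for i in range(21)]
features = normalize_landmarks(hand)
```

The resulting 63 values can be fed to a classifier in place of raw pixels, which is part of what makes landmark-based pipelines convenient.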

III. DISCUSSION ON THE EXISTING SYSTEMS

Existing systems fail for many reasons, and new systems are needed to overcome these problems. The major issue is that most of the applications are sign language teaching apps rather than translation apps that convert gestures into a language understood by everyone. Some applications convert text or speech into sign language but not vice versa. The available applications use ASL and not the native sign language. Native sign languages also receive less attention than ASL; that is, few datasets are available for them.

Apart from this, there is no real-time sign language translator that can be used anytime, anywhere. Some existing systems do not recognize dynamic gestures, and gestures are sometimes recognized incorrectly, which leads to miscommunication or to learning the language incorrectly.

Table 3. Summary of related work analysis

| Ref No | Paper Name | Algorithm & Accuracy | Limitations |
|---|---|---|---|
| 1 | Long Short-Term Memory-based Static and Dynamic Filipino Sign Language Recognition | LSTM Neural Network Accuracy – 98% | The system is not able to recognize rare or complex gestures. |
| 2 | Hand Gesture Based Sign Language Recognition Using Deep Learning | AlexNet Classifier Accuracy – 99% | It is only able to recognize fingerspelling, which is a subset of sign language. |
| 3 | Deep Learning based Sign Language Recognition robust to Sensor Displacement | CNN, LSTM Accuracy – 95.6% | This is only working with large amount of data. |
| 4 | A Review of Segmentation and Recognition Techniques for Indian Sign Language using Machine Learning and Computer Vision | CNN | The current datasets used for ISL recognition and segmentation are limited in size and diversity. |
| 5 | Speech to Sign Language Translation for Indian Languages | Wavelet based MFCC & LSTM Accuracy – 80% | Accuracy for voice input is very less and with lot of noise. |
| 6 | Sign Language to Text Conversion using Deep Learning | CNN Accuracy – 99% | The model accuracy is less with less dataset. |
| 7 | Sign Language Translator using ML | KNN, Decision Tree Classifier, Neural Network Accuracy – 97% | The system does not take into account the context of the signs. This means that the system may not be able to correctly classify a sign if it is performed in a different context. |
| 8 | Sign Language to speech translation using ML | CNN Accuracy – 90% | If the user places the sensor in a different location, the system's performance may degrade. |
| 9 | An improved hand gesture recognition system using key-points and hand bounding boxes | CNN Accuracy – 95% | The proposed method is computationally expensive, especially for large images. |
| 10 | Dataset Transformation System For Sign Language Recognition Based on Image Classification Network | STmap, CNN-RNN Accuracy – 99% | The system converts the skeleton data into an image, called an STmap, before training the image classification network. This conversion process may lead to some loss of information. |

Conclusion

There are more than 300 different sign languages in use around the world. Sign language recognition systems are the subject of extensive research, but numerous obstacles remain in the way of a fully operational ASL system. These obstacles have been described in this paper. The main conclusions of this review are as follows:

- Research is limited by a lack of standard datasets; creating a dependable and widely available database is critical for the advancement of these systems.

- The developed technologies operate in carefully controlled conditions, such as a plain background, a fixed clothing color, and a fixed light intensity.

- The currently available systems focus on static signs; the development of systems for dynamic signing should be prioritized.

- The creation of a real-time, faster, and more accurate system should be prioritized.

- Some distinct signs use nearly the same hand motion, such as the number "3" and the letter "W" in ASL, which makes it difficult for a system to distinguish them reliably.

- The number of sign language translation apps is limited; most applications are educational apps that use video lectures to teach sign languages.

- The translation apps that do exist accept text or speech as input and use a 3D model to translate the text into gestures.

To conclude, only a few papers have succeeded on all fronts, including accuracy, dataset size, and real-time recognition. More research is being conducted every day on technologies that help bridge the gap between the deaf and the hearing population. Future work should focus on real-time ASL systems that also handle dynamic gestures. Systems for native sign languages other than ASL are also much needed. Finally, improving the accuracy of these systems would help prevent the miscommunication that recognition errors can cause.

References

[1] Sahoo, Ashok K., Gouri Sankar Mishra, and Kiran Kumar Ravulakollu. "Sign language recognition: State of the art." ARPN Journal of Engineering and Applied Sciences 9.2 (2014): 116-134.

[2], [3] Radha S. Shirbhate, Vedant D. Shinde, Sanam A. Metkari, Pooja U. Borkar, and Mayuri A. Khandge. "Sign language Recognition Using Machine Learning Algorithm." International Research Journal of Engineering and Technology (IRJET), e-ISSN: 2395-0056 (3, March 2020).

[4] Sharath Kumar. "Sign Language Recognition with Convolutional Neural Network." International Research Journal of Engineering and Technology (IRJET), Volume: 07, Issue: 12, Dec 2020.

[5] NB, Mahesh Kumar. "Conversion of sign language into text." International Journal of Applied Engineering Research 13.9 (2018): 7154-7161.

[6] Mayuresh Keni, Shireen Meher, Aniket Marathe, Amruta Chintawar, and Shweta Ashtekar. "Sign Language Recognition System." International Journal of Engineering Research & Technology (IJERT), ICONET – 2014 (Volume 2, Issue 04).

[7] Mekala, Priyanka, et al. "Real-time sign language recognition based on neural network architecture." 2011 IEEE 43rd Southeastern Symposium on System Theory. IEEE, 2011.

[8] Halder, Arpita, and Akshit Tayade. "Real-time vernacular sign language recognition using mediapipe and machine learning." Journal homepage: www.ijrpr.com ISSN 2582 (2021): 7421.

[9] Duy Khuat, Bach, et al. "Vietnamese sign language detection using Mediapipe." 2021 10th International Conference on Software and Computer Applications. 2021.

[10] Adhikary, Subhangi, Anjan Kumar Talukdar, and Kandarpa Kumar Sarma. "A Vision-based System for Recognition of Words used in Indian Sign Language Using MediaPipe." Sixth International Conference on Image Information Processing (ICIIP). Vol. 6. IEEE, 2021.

[10] Nadgeri, Sulochana, and Dr Kumar. "Survey of Sign Language Recognition System." Available at SSRN 3262581 (2018).

[11] Jan Matuszewski and Marcin Zając. "Recognition of alphanumeric characters using artificial neural networks and MSER algorithm." Radioelectronic Systems Conference, Jachranka, Poland (2019).

[12] Maruyama, Mizuki, et al. "Word-level sign language recognition with multi-stream neural networks focusing on local regions." arXiv preprint arXiv:2106.15989 (2021).

[13] Sharma, S., R. Gupta, and A. Kumar. "Continuous sign language recognition using isolated signs data and deep transfer learning." Journal of Ambient Intelligence and Humanized Computing (2021): 1-12.

Copyright

Copyright © 2024 Vipul Punjabi, Yash Deokar, Rohit Sonawane, Krishna More, Riddesh Suryawanshi. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET65439

Publish Date : 2024-11-21

ISSN : 2321-9653

Publisher Name : IJRASET
