Ijraset Journal For Research in Applied Science and Engineering Technology

Smart Driver Fatigue Detection System Using CNN

Authors: Nikitha N, Keerthan Raj Mateti

DOI Link: https://doi.org/10.22214/ijraset.2023.55112

Abstract

Road accidents frequently result from fatigued or sleepy drivers, and many people are killed worldwide each year as a result. Driver fatigue is currently one of the major causes of accidents. Facial cues such as frequent yawning, frequent eye blinking, and head position can indicate how tired someone is. Because these cues are visible, computer vision is a suitable and practical technology for this problem. The proposed methodology employs Dlib, CNN, and OpenCV. Dlib estimates the positions of 68 (x, y) coordinates that map the facial landmarks on a person’s face. The dataset consists of 2904 images classified as Open Eyes, Closed Eyes, Yawning, or No-Yawning. A convolutional neural network (CNN) determines the mouth and eye states from the Region of Interest (ROI) images. The amount of mouth opening (FOM) and the percentage of eyelid closure over the pupil (PERCLOS) are the measures used to quantify fatigue over time. The proposed system determines whether the driver is sleepy and sounds a warning when it detects closed eyes or yawning for a certain amount of time. The accuracy of the model is discussed.

Introduction

I. INTRODUCTION

These days, fatigued driving contributes significantly to many traffic accidents. A system with an alarm that wakes sleepy drivers and helps them stay focused on the road can help prevent car accidents. To minimize accidents, reduce casualties, and decrease suffering, a technology is being developed to detect driver sleepiness and alert drivers with an alarm. Several strategies must be examined to choose the method that identifies driver sleepiness with the highest accuracy.

The proposed technique provides a live framework that uses a digital camera to capture and evaluate the driver’s eyes and mouth using Python, Dlib, CNN, and OpenCV. In essence, a convolution is a filter that is slid over the input. A CNN’s strength is its ability to perform convolutions, and CNNs are mostly employed for image recognition and classification. They are also widely used in signal processing and image segmentation. The waterfall model, a classic paradigm in the system development life cycle, follows a linear and sequential methodology.
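To make the idea of a filter slid over the input concrete, the following is a minimal sketch in plain Python. It assumes stride 1 and no padding, and the toy image and the 2x2 kernel are hypothetical values chosen for illustration; they are not taken from the trained model.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (lists of lists) with stride 1 and no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply the kernel element-wise with the patch under it and sum.
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# Toy 4x4 "image" with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hypothetical kernel that responds to left-to-right intensity increases.
kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d_valid(image, kernel)
print(feature_map)
```

The feature map is non-zero only where the kernel straddles the edge, which is the sense in which a convolutional layer acts as a learned feature detector.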

A camera is directed at the driver to capture yawning and eye movements, and the driver is alerted by a trigger. The proposed system detects driver fatigue and sounds a warning when the driver’s eyes are found closed, or the driver is found yawning, for a specified number of frames. We created a CNN that was trained on the dataset. The CNN model is then used with OpenCV to analyze the live feed from the camera and classify whether or not the subject is sleepy.

II. LITERATURE REVIEW

A. Facial Detection

The system uses the Raspberry Pi in conjunction with several sensors, such as gas and vibration sensors, to determine sleepiness [1]. A camera that captures their vital signs has been fitted to monitor the driver. The facial image is transferred to the cloud if the eye is closed for a prolonged length of time. The accident is detected by a vibration sensor, and the server is notified by sending its latitude and longitude. The car’s inbuilt IOT modem transmits the vehicle’s position. With the aid of a gas sensor, this technology goes a step further and determines if the driver has drunk alcohol. An alert message is issued to the motorist if it is determined that they are not fit to drive.

The suggested solution uses an infrared camera to detect driver yawning and eye closure for fatigue analysis [2]. The process has four steps: recognizing faces, spotting eyes, spotting mouths, and spotting closed eyes and yawning. It begins with MATLAB analyzing information from an infrared 2D camera. This technique has the benefit of being able to detect yawning and eye closure in low-light situations. When the application detects signs of driver fatigue, such as yawning or eye closure, it draws a red rectangle around the driver’s mouth and eyes.

A system for detecting drowsy drivers is created using Python and the Dlib model [3]. The model is trained to recognize 68 facial landmarks. Facial features are located and detected with the pre-trained Histogram of Oriented Gradients (HOG) facial feature detector from the Dlib package. The histograms are built from the frequency of gradient orientations in localized areas of an image. The detector is used to record the positions of the feature points in the video sequence, and tiredness is identified by monitoring the proportions of the mouth and eyes. The driver is warned if persistent indicators of tiredness are seen over an extended period of time. The Dlib package helps improve the accuracy of facial detection.

This technique uses local binary pattern histograms (LBPH) to recognize faces [4]. The first computational step in LBPH is to create an intermediate image that encodes the original image in binary form. The image is treated as a matrix, and the center value of each neighborhood is selected as the threshold. Each neighboring value is then set to either 0 or 1 by comparison with this threshold. Low-dimensional, statistically independent feature vectors are then developed. This approach only works with smaller data samples.
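The thresholding step of LBPH can be illustrated with a short sketch for a single 3x3 neighborhood. The neighbor ordering (clockwise from the top-left pixel) and the use of "greater than or equal" are common conventions assumed here, not details taken from [4], and the patch values are made up.

```python
def lbp_code(patch):
    """Return the 8-bit local binary pattern code of a 3x3 grayscale patch."""
    center = patch[1][1]
    # Neighbours visited clockwise from the top-left pixel (a convention choice).
    neighbours = [
        patch[0][0], patch[0][1], patch[0][2],
        patch[1][2], patch[2][2], patch[2][1],
        patch[2][0], patch[1][0],
    ]
    code = 0
    for value in neighbours:
        # A neighbour contributes a 1 bit if it is at least as bright as the centre.
        code = (code << 1) | (1 if value >= center else 0)
    return code


# Hypothetical grayscale patch; the centre value 100 acts as the threshold.
patch = [
    [90, 120, 60],
    [70, 100, 110],
    [130, 40, 100],
]
code = lbp_code(patch)
print(format(code, "08b"))
```

In a full LBPH pipeline, one such code would be computed per pixel and the codes would then be histogrammed over image regions to form the feature vector.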

B. Paradigm for Drowsiness Detection

A detailed examination of drivers’ biosignals by electroencephalogram (EEG) is one solution for dealing with drowsiness [5]. EEG is important for assessing sleepiness levels because it displays the electrical activity of the brain. This investigation examines driver behavior through a sleepiness indicator: the brain wave pattern. In this research, an EEG headset connected to the OpenBCI program was used to gather the individuals’ brain signals. A decision tree was used to classify every extracted feature, and the results indicated excellent levels of accuracy. The decision tree model’s outcomes demonstrated a relative alpha power that increased throughout the sleepy session.

Fatigue indicators based on an object detection model are used in driver fatigue detection [6]. The EfficientDet model was used to detect the condition of the mouth and eyes, and the percentage of eye closure (PERCLOS), frequency of yawning, and length of eye closure were used to determine the level of fatigue. EfficientDet is a quick and effective object detection model. The outcomes demonstrate real-time processing, cutting-edge performance, and suitability for practical applications. This technique measures the features accurately thanks to the use of PERCLOS and FOM.

The percentage of eye closure (PERCLOS) is the main emphasis of the system proposed in [7]. PERCLOS establishes a parameter level to identify drowsiness. The Viola-Jones detector is used for detection, and it separates the driver’s face and the eye region from the image. The basic idea behind this technique is that it concentrates on the face region while immediately rejecting non-face regions. AdaBoost first captures the original image before the Viola-Jones technique determines whether or not it contains a face. If it does, the PERCLOS technique is used to identify the eye region and determine whether or not the eye is open. In our study, PERCLOS will be used to collect data on eye closure.

Another model increases tracking precision by detecting drivers’ awareness using video stills of their faces [8]. A secondary function, speed limit suggestion, depends on the driver’s mental condition at the time. Different facial characteristics are assessed to ascertain the driver’s present condition. By integrating the characteristics of the mouth and eyes, the system gives the driver a warning about their level of tiredness as well as a proposed safe speed limit. This technology is important for preventing and reducing the number of fatal accidents that result from drowsy driving, which saves lives and avoids significant property damage. The sleepiness detection method improves classification results by minimizing the number of false positives caused by fluctuations in observed eye blink rates that are linked not to tiredness but to body language or speech.
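As a rough illustration of how PERCLOS can be tracked frame by frame, the sketch below approximates it as the fraction of recent frames in which the eyes were classified as closed. The class name `PerclosMeter`, the window length of 5 frames, and the sample sequence are hypothetical; a real system would choose the window and any alert threshold empirically.

```python
from collections import deque


class PerclosMeter:
    """Fraction of closed-eye frames over a sliding window of recent frames."""

    def __init__(self, window_size):
        # deque with maxlen drops the oldest frame automatically.
        self.window = deque(maxlen=window_size)

    def update(self, eye_closed):
        """Record one frame's eye state and return the current PERCLOS estimate."""
        self.window.append(1 if eye_closed else 0)
        return sum(self.window) / len(self.window)


# Hypothetical per-frame eye states (True means the eyes were classified as closed).
meter = PerclosMeter(window_size=5)
states = [False, False, True, True, True, True, False]
history = [meter.update(state) for state in states]
print(history)
```

The estimate rises as closed-eye frames accumulate and only falls once enough open-eye frames have pushed them out of the window, which makes it less sensitive to single blinks than a per-frame decision.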

dWatch is a mobile device-based solution for real-time driver drowsiness monitoring that integrates physiological measures with the driver’s mobility states for high detection accuracy and little battery usage [9]. In particular, distinct techniques are developed for estimating heart rate variability (HRV) and detecting yawn motions, respectively, based on heart rate readings, and these techniques are integrated with steering wheel motion characteristics extrapolated from motion sensors for fatigue identification. The motion data not only helps with detection but also serves as a trigger for activating the heart rate sensor, which lowers the system’s power usage. To determine the driver’s level of tiredness, an SVM algorithm is employed, with the parameters being the retrieved physiological characteristics and motion features.

C. Algorithms Used for Detection Model

The driver’s facial characteristics are analyzed to determine whether or not they are sleepy [10]. The areas corresponding to the mouth and eyes are retrieved from the video frame. For the purpose of sleepiness detection, these extracted areas are plugged into a hybrid deep-learning model. The modified InceptionV3 network and the long short-term memory (LSTM) network are both incorporated into the suggested model for a hybrid form of deep learning.

A global average pooling layer and dropout have been added to InceptionV3 in order to increase its spatial resilience and prevent it from overfitting to the training data. On the NTHU-DDD dataset, the convolutional neural network, the InceptionV3 model, and the LSTM model are evaluated alongside the suggested hybrid model. The findings of the experiments show that combining the different feature maps makes it possible to isolate useful characteristics.

The system suggests a hierarchical architecture called a hierarchical deep drowsiness detection (HDDD) network, which is comprised of deep networks with divided spatial and temporal phases [11]. The suggested procedure makes use of ResNet to identify the face of the driver, as well as the surrounding illumination and whether or not the driver is wearing glasses. This phase is also responsible for the subsequent stage having a much-increased percentage of eyes and mouths being detected. After that, the LSTM network is utilized so that the temporal information that is present between the frames may be utilized.

According to the literature, the three groups of sleepiness detection approaches are based on driving habits, physiological traits, and computer vision [12]. Due to its low cost and non-intrusive nature, the computer vision approach is the one most frequently used among them. This method examines different images of the driver’s posture, looking for signs of drowsiness such as yawning, head movement, and closed eyes. A thorough comparative analysis found that strategies based on spatial features produced the best results.

To extract the facial area from each frame of a video, the driver’s face and facial landmarks are first detected [13]. A residual-based deep 3D convolutional neural network (CNN) is then built to classify a sequence of the driver’s facial images with a fixed number of frames and produce a sleepiness output probability value. This network was trained using data that was unrelated to the task at hand. The state probability vector of a video, which can contain many different states, is then obtained by concatenating a specific number of output probability values. Finally, a recurrent neural network classifies the resulting probability vector to obtain the driver sleepiness result. The approach provides consistent and reliable results.

A real-time monitoring technique for driving disturbances is built using convolutional neural networks (CNNs) [14]. Modern CNN models such as InceptionV3, VGG16, and ResNet50 are used for detection. The output of the CNN models is assessed using a variety of images of human faces that are either awake or asleep. The highest-performing CNN model among those tested is deployed across more devices, and its performance is reviewed, leveraging the dataset to improve real-time effectiveness. This dataset considerably improves performance by including not just the side view but also the front view of the driver, comparable to real driving situations. Making this work a commodity and producing it on a wide scale would also significantly advance the field.

The system uses a resource-constrained digital video processor platform with a Partial Least Squares (PLS) analysis-based eye state classification algorithm to monitor the status of the eyes at all times while driving [15]. Sleepiness is identified using the percentage of eye closure (PERCLOS) statistic. A cascaded classifier based on Haar features identifies the face in the infrared (IR) image and then the eye inside the face. PLS analysis is used to create a low-dimensional discriminative subspace for the classification of binary eye states.

This subspace has a basic PLS regression score-based classifier that divides the test vector into open and closed categories. The results of the testing conducted on a moving object demonstrate that the suggested approach improves classification accuracy significantly at a frame rate of almost three seconds.

III. PROPOSED SYSTEM

The Drowsiness dataset that is available on the Kaggle website is utilized for the project. Images may be classified as having Open Eyes, Closed Eyes, Yawning, or No-Yawning according to the four categories included in the original dataset.

• There are a total of 1452 photos split across two categories included in the collection.
• There are 726 photos in each category.
• There is no need to rebalance the dataset because it is already in a balanced state.
• Class labels include “Open Eye” and “Closed Eye,” “yawn” and “no yawn,” and “Open Mouth” and “Closed Mouth.”
• The class labels were encoded in such a way that the number 3 indicates Open Eyes, the number 2 represents Closed Eyes, the number 0 represents Yawns, and the number 1 represents No Yawns.
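The label encoding listed above can be written down as a small lookup table. In the sketch below, the string keys such as `"open_eyes"` are hypothetical names chosen for illustration (the actual folder or class names in the Kaggle dataset may differ), and the one-hot helper is an assumption about how labels might be fed to a softmax classifier.

```python
# Encoding as described in the text: 0 = yawn, 1 = no yawn, 2 = closed eyes, 3 = open eyes.
# The string keys are hypothetical; the dataset's own class names may differ.
LABEL_TO_INDEX = {"yawn": 0, "no_yawn": 1, "closed_eyes": 2, "open_eyes": 3}
INDEX_TO_LABEL = {index: label for label, index in LABEL_TO_INDEX.items()}


def encode_labels(labels):
    """Map class names to their integer codes."""
    return [LABEL_TO_INDEX[label] for label in labels]


def one_hot(index, num_classes=4):
    """Return a one-hot vector, a common target format for a softmax output layer."""
    return [1 if i == index else 0 for i in range(num_classes)]


encoded = encode_labels(["open_eyes", "yawn", "closed_eyes"])
print(encoded, one_hot(encoded[0]))
```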

The system is divided into various modules namely Image Capturing Module, Preprocessing Module, Analysis Module and Alarm Activation Module as shown in Fig. 1.

A. Image Capturing Module

To start the video stream, set up a camera so that it continuously searches the stream for faces. Take note of the indices that correspond to the facial landmarks. The landmark coordinates are extracted first, and the extracted coordinates are then used to compute the aspect ratios of the eyes and the mouth. Establish an initial threshold for the eye so that a warning is displayed if the aspect ratio is lower than the threshold.
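The landmark indices mentioned above can be sketched as follows, assuming the standard 68-point annotation used by Dlib's shape predictor (zero-based indices 36 to 41 for the right eye, 42 to 47 for the left eye, and 48 to 67 for the mouth). The helper name `extract_regions` is hypothetical, and dummy coordinates stand in for real detector output so the example runs without a camera or model file.

```python
# Zero-based index ranges in the 68-point facial landmark annotation.
RIGHT_EYE = range(36, 42)
LEFT_EYE = range(42, 48)
MOUTH = range(48, 68)


def extract_regions(landmarks):
    """Split a list of 68 (x, y) landmark tuples into eye and mouth point lists."""
    if len(landmarks) != 68:
        raise ValueError("expected 68 landmarks, got %d" % len(landmarks))
    return {
        "right_eye": [landmarks[i] for i in RIGHT_EYE],
        "left_eye": [landmarks[i] for i in LEFT_EYE],
        "mouth": [landmarks[i] for i in MOUTH],
    }


# Dummy landmarks so the sketch runs without a camera or a Dlib model.
landmarks = [(i, 2 * i) for i in range(68)]
regions = extract_regions(landmarks)
print({name: len(points) for name, points in regions.items()})
```

The extracted point lists are what an aspect-ratio computation or an ROI crop for the CNN would take as input.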

B. Preprocessing Module

To achieve the desired outcome, the Driver Fatigue Detection system must complete a number of stages. As a machine learning and OpenCV project, it relies on several libraries from that domain, such as OpenCV, SciPy, Matplotlib, sklearn, tensorflow, and keras, which are imported first. We then work through the dataset, which includes the categories open eyes, closed eyes, yawn, and no yawn. Following this, we visualize some of the data and preprocess it by labelling the classes. The system employs artificial intelligence techniques to preprocess the image of the driver and isolate the face.

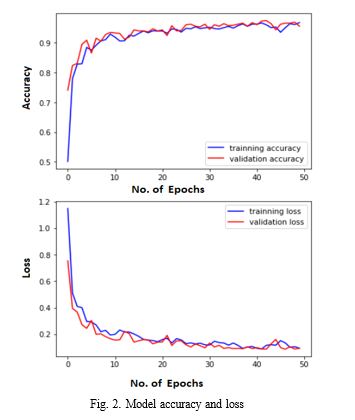

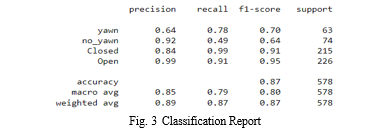

C. Analysis Module

The dataset is then split into a training set and a testing set, and the training set is augmented with additional data so that it is more diverse. Activation, pooling, and an optimizer are all important components in the construction of the CNN model. The model is trained for a total of 50 epochs and then evaluated on the testing set using metrics such as model accuracy, model loss, and the classification report. Each new image is then submitted to the analysis module, which uses deep learning methods to determine the level of fatigue exhibited by the driver at that instant. In this scenario, the analysis module is made up of both a recurrent neural network and a convolutional neural network. This recurrent CNN produces a numerical output that reflects the estimated sleepiness level of the driver, which is used to decide whether the driver is currently showing signs of fatigue.
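The split and augmentation steps can be sketched without any deep learning library. The example below assumes a 20 % test fraction and horizontal flipping as the augmentation; neither value is stated in the text, and the tiny 2x2 "images" are placeholders for real ROI crops.

```python
import random


def train_test_split(samples, test_fraction=0.2, seed=0):
    """Shuffle (image, label) pairs reproducibly and split them into train and test lists."""
    shuffled = samples[:]
    random.Random(seed).shuffle(shuffled)
    n_test = int(round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


def horizontal_flip(image):
    """Mirror an image stored as a list of pixel rows."""
    return [row[::-1] for row in image]


def augment(train_samples):
    """Add a mirrored copy of every training image; labels are unchanged by flipping."""
    flipped = [(horizontal_flip(image), label) for image, label in train_samples]
    return train_samples + flipped


# Placeholder data: ten 2x2 "images" with labels 0..3 (assumed values, not the dataset).
samples = [([[i, i + 1], [i + 2, i + 3]], i % 4) for i in range(10)]
train, test = train_test_split(samples)
train_aug = augment(train)
print(len(train), len(test), len(train_aug))
```

Only the training set is augmented, so the testing set still measures performance on unmodified images.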

D. Alarm Activation Module

The value is then transmitted to the alarm activation module, which is responsible for determining whether or not the associated alarm should be activated. When the eyes are closed, the counter will begin to count down. The warning appears on the screen whenever the user yawns or closes their eyes, whichever comes first. The alarm will sound if the eyes are closed for five frames in a row without being opened.
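The consecutive-frame rule described above can be sketched as a small counter. This version counts up to the five-frame limit rather than down, which is equivalent; the class name `ClosedEyeAlarm` and the sample frame sequence are hypothetical.

```python
class ClosedEyeAlarm:
    """Raise an alert once the eyes have been closed for N consecutive frames."""

    def __init__(self, frames_required=5):
        self.frames_required = frames_required
        self.closed_streak = 0

    def update(self, eyes_closed):
        """Process one frame and return True if the alarm should sound."""
        if eyes_closed:
            self.closed_streak += 1
        else:
            # Any open-eye frame resets the streak, so ordinary blinks are ignored.
            self.closed_streak = 0
        return self.closed_streak >= self.frames_required


# Hypothetical per-frame predictions: a short blink followed by a long closure.
alarm = ClosedEyeAlarm()
frames = [True, True, False, True, True, True, True, True, True]
alerts = [alarm.update(closed) for closed in frames]
print(alerts)
```

The two-frame closure at the start never triggers the alarm, while the later closure triggers it on its fifth consecutive frame and keeps it active until the eyes reopen.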

IV. RESULT AND DISCUSSION

The model was evaluated based on model accuracy, model loss as shown in Fig. 2 and classification report as shown in Fig. 3. The recorded accuracy is 87 %.
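For readers unfamiliar with the quantities in a classification report, the sketch below computes accuracy and per-class precision and recall from lists of true and predicted labels. The label lists are made up for illustration and are not the results reported in this paper.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def precision_recall(y_true, y_pred, label):
    """Precision and recall for one class, treating it as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == label and p == label)
    fp = sum(1 for t, p in pairs if t != label and p == label)
    fn = sum(1 for t, p in pairs if t == label and p != label)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Made-up labels for illustration only; these are not the paper's results.
y_true = [0, 0, 1, 1, 2, 2, 3, 3]
y_pred = [0, 1, 1, 1, 2, 3, 3, 3]
acc = accuracy(y_true, y_pred)
prec_1, rec_1 = precision_recall(y_true, y_pred, label=1)
print(acc, prec_1, rec_1)
```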

To achieve the intended outcomes, each individual component of the system was tested exhaustively before being integrated into the system as a whole. The results of this project are assessed by examining each of the individual modules and the ways in which they communicate with one another. Following the completion of the modelling of the algorithm, the simulation of the method was carried out. Experimentation and modelling have shown that this project is successful in detecting both the eyes and the mouth for the sleepiness detection system. The system is reliable because it can detect the transition from one state of sleepiness to another. The algorithms used in this system can detect changes in both the eyes and the lips in every frame.

Python and OpenCV were used to implement the sleepiness detection system, which included the following steps. During the run, video capture from the camera worked successfully. The captured video was split into individual frames, and those frames were examined separately. Following the successful recognition of the face, the eyes and mouth were then detected.

The eye aspect ratio for each eye can be determined by using the Euclidean distance functions of OpenCV. The function returns a single value, calculated by comparing the distances between the vertical eye landmark points with the distance between the horizontal eye landmark points. After the eye aspect ratio has been calculated, an algorithm can use it together with a threshold to determine whether or not a person is blinking. The ratio is relatively constant while the eyes are open; during a blink it quickly decreases toward zero and then rises again as the eye opens.
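A minimal sketch of the eye aspect ratio follows. It assumes the commonly used six-point formulation, in which the two vertical landmark distances are summed and divided by twice the horizontal distance, and it uses Python's `math.dist` for the Euclidean distance instead of a library routine. The landmark coordinates and the threshold of 0.25 are hypothetical values for illustration.

```python
from math import dist


def eye_aspect_ratio(eye):
    """Eye aspect ratio from six (x, y) landmarks ordered p1..p6 around the eye."""
    p1, p2, p3, p4, p5, p6 = eye
    # Two vertical distances between the upper and lower eyelid landmarks.
    vertical = dist(p2, p6) + dist(p3, p5)
    # One horizontal distance between the eye corners.
    horizontal = dist(p1, p4)
    return vertical / (2.0 * horizontal)


# Hypothetical landmark coordinates for an open and a nearly closed eye.
open_eye = [(0, 0), (2, -2), (4, -2), (6, 0), (4, 2), (2, 2)]
closed_eye = [(0, 0), (2, -0.2), (4, -0.2), (6, 0), (4, 0.2), (2, 0.2)]

EAR_THRESHOLD = 0.25  # assumed value; it would need tuning per camera and driver

ear_open = eye_aspect_ratio(open_eye)
ear_closed = eye_aspect_ratio(closed_eye)
print(ear_open > EAR_THRESHOLD, ear_closed < EAR_THRESHOLD)
```

Because the ratio divides by the eye width, it stays roughly constant when the driver moves closer to or farther from the camera, which is why a fixed threshold is workable.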

If the same eye is closed for many frames in succession, it is considered a sleepy condition; otherwise, it is treated as a regular blink, and the process of capturing a frame and assessing the state of the driver is repeated. If sleepiness is detected, an alert is issued.

Conclusion

The system satisfies its goals and criteria. A real-time technique for detecting eye blinks was presented. We showed that Haar cascade classifiers and regression-based facial landmark detectors are accurate enough to correctly assess positive facial images and the level of eye opening. The Haar cascade algorithm identifies faces and eyes using Haar features, and predefined Haar features are used to detect various objects. Haar features are applied to the image, and the blink frequency is computed using the PERCLOS technique. If the value stays constant for extended durations, the driver is deemed distracted, and an alert is activated. The current technique yields a result that is 87 % accurate.

The model may be progressively enhanced by including more characteristics such as automobile conditions and weather reports, which may significantly increase the accuracy. We want to add a sensor that monitors the driver’s heart rate in order to avoid accidents caused by sudden heart attacks. Using the same model and approach, Netflix and other streaming services could recognize when a user is sleeping and pause the video accordingly. The approach is also applicable to applications that keep users from falling asleep. We may also give the customer an Android application that provides information on his or her degree of tiredness during any trip. Based on the number of frames captured, the user will know their normal state, their drowsy state, and the number of times their eyes blinked.

References

[1] R. K. M, R. V and R. G. Franklin, “Alert System for Driver’s Drowsiness Using Image Processing,” 2019 International Conference on Vision Towards Emerging Trends in Communication and Networking (ViTECoN), 2019, pp. 1-5, doi: 10.1109/ViTECoN.2019.8899627.
[2] W. Tipprasert, T. Charoenpong, C. Chianrabutra and C. Sukjamsri, “A Method of Driver’s Eyes Closure and Yawning Detection for Drowsiness Analysis by Infrared Camera,” 2019 First International Symposium on Instrumentation, Control, Artificial Intelligence, and Robotics (ICA-SYMP), 2019, pp. 61-64, doi: 10.1109/ICA-SYMP.2019.8646001.
[3] S. Mohanty, S. V. Hegde, S. Prasad and J. Manikandan, “Design of Real-time Drowsiness Detection System using Dlib,” 2019 IEEE International WIE Conference on Electrical and Computer Engineering (WIECON-ECE), 2019, pp. 1-4, doi: 10.1109/WIECON-ECE48653.2019.9019910.
[4] C.-C. Lien and P.-R. Lin, “Drowsiness Recognition Using the Least Correlated LBPH,” 2012 Eighth International Conference on Intelligent Information Hiding and Multimedia Signal Processing, 2012, pp. 158-161, doi: 10.1109/IIH-MSP.2012.44.
[5] S. Yaacob, N. I. Z. Muhamad’Arif, P. Krishnan, A. Rasyadan, M. Yaakop and F. Mohamed, “Early driver drowsiness detection using electroencephalography signals,” 2020 IEEE 2nd International Conference on Artificial Intelligence in Engineering and Technology (IICAIET), 2020, pp. 1-4, doi: 10.1109/IICAIET49801.2020.9257833.
[6] R. Ayachi, M. Afif, Y. Said and A. Ben Abdelali, “Drivers Fatigue Detection Using EfficientDet In Advanced Driver Assistance Systems,” 2021 18th International Multi-Conference on Systems, Signals & Devices (SSD), 2021, pp. 738-742, doi: 10.1109/SSD52085.2021.9429294.
[7] K. S. Sankaran, N. Vasudevan and V. Nagarajan, “Driver Drowsiness Detection using Percentage Eye Closure Method,” 2020 International Conference on Communication and Signal Processing (ICCSP), 2020, pp. 1422-1425, doi: 10.1109/ICCSP48568.2020.9182059.
[8] Charlotte Jacobé de Naurois, Christophe Bourdin, Anca Stratulat, Emmanuelle Diaz, Jean-Louis Vercher, Detection and prediction of driver drowsiness using artificial neural network models, Accident Analysis & Prevention, Volume 126, 2019, Pages 95-104, ISSN 0001-4575.
[9] Tianzhang Xing, Qing Wang, Chase Q. Wu, Wei Xi, and Xiaojiang Chen. 2020. DWatch: A Reliable and Low-Power Drowsiness Detection System for Drivers Based on Mobile Devices. ACM Trans. Sen. Netw. 16, 4, Article 37 (November 2020), 22 pages. https://doi.org/10.1145/3407899
[10] Kumar, V., Sharma, S., Ranjeet. Driver drowsiness detection using modified deep learning architecture. Evol. Intel. (2022). https://doi.org/10.1007/s12065-022-00743-w
[11] Jamshidi, S., Azmi, R., Sharghi, M. et al. Hierarchical deep neural networks to detect driver drowsiness. Multimed Tools Appl 80, 16045–16058 (2021). https://doi.org/10.1007/s11042-021-10542-7
[12] Pandey, N.N., Muppalaneni, N.B. A survey on visual and non-visual features in Driver’s drowsiness detection. Multimed Tools Appl 81, 38175–38215 (2022). https://doi.org/10.1007/s11042-022-13150-1
[13] Zhao, L., Wang, Z., Zhang, G. et al. Driver drowsiness recognition via transferred deep 3D convolutional network and state probability vector. Multimed Tools Appl 79, 26683–26701 (2020). https://doi.org/10.1007/s11042-020-09259-w
[14] Minhas, A.A., Jabbar, S., Farhan, M. et al. A smart analysis of driver fatigue and drowsiness detection using convolutional neural networks. Multimed Tools Appl 81, 26969–26986 (2022). https://doi.org/10.1007/s11042-022-13193-4
[15] Selvakumar, K., Jerome, J., Rajamani, K. et al. Real-Time Vision-Based Driver Drowsiness Detection Using Partial Least Squares Analysis. J Sign Process Syst 85, 263–274 (2016). https://doi.org/10.1007/s11265-015-1075-4

Copyright

Copyright © 2023 Nikitha N, Keerthan Raj Mateti. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET55112

Publish Date : 2023-07-30

ISSN : 2321-9653

Publisher Name : IJRASET
