Ijraset Journal For Research in Applied Science and Engineering Technology

Smart Glass for Visually Impaired People

Authors: Dr. Kavya A P, Anoushka Ameet Kurthukoti, Ayeesha Rida Khan A, Devika K S

DOI Link: https://doi.org/10.22214/ijraset.2024.61143

Abstract

This review paper aims to underscore the significance of inclusive technology in enhancing the lives of individuals with visual impairments and examine how smart wearable glasses are considered a potential solution for addressing challenges related to object detection. The blind community faces several obstacles in a world where visual information is dominant. The study examines the body of research on smart glasses and emphasizes how object recognition helps to improve spatial awareness. It highlights the revolutionary effect of these gadgets in promoting independence and enhancing accessibility for blind people by summarizing recent research. This succinct assessment seeks to add to the existing methods of inclusive technology by highlighting the exciting possibilities of smart glasses in empowering the blind population.

I. INTRODUCTION

Visual impairment is a severe disability that affects around 2.2 billion people worldwide. To compound the problem, 90% of these people belong to economically weaker sections of society. The known comprehensive solution costs about $3,000 (approximately Rs. 2,50,000), which only a few of those affected can afford. To improve daily functioning, creative solutions are required. Visual impairment is an emerging public health concern because it makes it difficult for people to recognize faces and navigate around obstacles. These observations are based on data from the World Health Organization. With technology developing at a breakneck pace, we have a unique chance to use innovation to improve the quality of life of those who are blind or visually impaired.

This review paper focuses on smart glasses tailored for those who are blind or visually challenged, with an emphasis on object recognition. Existing smart glasses integrate the following technologies to offer real-time support: the YOLO algorithm, ultrasonic sensors, optical character recognition (OCR), and camera-equipped Raspberry Pi devices. The main objective is to close the gap between the surrounding world and the unique requirements of visually impaired people, promoting independence and improving their quality of life. This paper explores the current development and design of these smart glasses, highlighting how they could improve social interaction and safety for people with vision disabilities.

II. ASSISTIVE DEVICES: TECHNOLOGY DEVELOPMENT FOR THE VISUALLY IMPAIRED

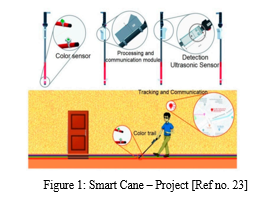

A. Smart Cane

The Smart Cane (Figure 1) integrates obstacle detection via ultrasonic sensors with geolocation capabilities, transmitting location coordinates to an MQTT broker. When obstacles are detected, the system alerts the user through a vibrotactile system whose vibration intensity corresponds to the obstacle's proximity. It also supports navigation by detecting and recognizing colored lines and guiding the user audibly. Real-time audio alerts are generated from the detection and navigation data. This multifunctional device enhances safety and mobility for visually impaired individuals by providing timely feedback and guidance in various environments.
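The proximity-to-vibration mapping and the coordinate message can be illustrated with a minimal Python sketch. The linear mapping, the 200 cm sensor range, and the JSON field names are assumptions made for illustration; the source does not specify them, and the actual publish call to the MQTT broker is left out.

```python
import json


def vibration_duty(distance_cm: float, max_range_cm: float = 200.0) -> float:
    """Map obstacle distance to a motor duty cycle in [0.0, 1.0].

    Closer obstacles give stronger vibration. Anything at or beyond
    max_range_cm (an assumed sensor range) gives no vibration.
    """
    if max_range_cm <= 0:
        raise ValueError("max_range_cm must be positive")
    clamped = min(max(distance_cm, 0.0), max_range_cm)
    return 1.0 - clamped / max_range_cm


def location_payload(latitude: float, longitude: float) -> str:
    """Serialize coordinates as JSON text, ready to hand to an MQTT client.

    The field names "lat" and "lon" are hypothetical.
    """
    return json.dumps({"lat": latitude, "lon": longitude})
```

A linear ramp is only one possible choice; a real cane might use a few discrete intensity levels so that the user can tell them apart more easily.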

III. LITERATURE REVIEW

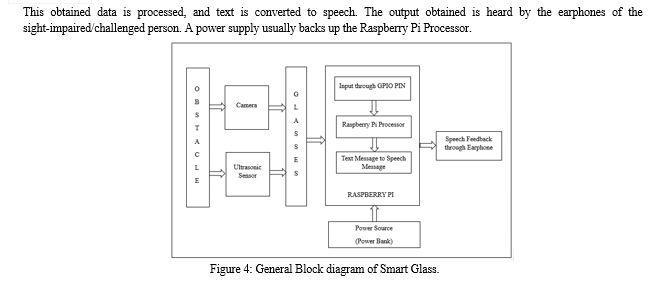

Mr. Harisha G C [1] describes an image processing method that captures images and converts them to text using optical character recognition (OCR). OCR takes photographed or scanned pictures and transforms them into text or another readable format for further processing. The images submitted to OCR can have any resolution. A static image is captured with a camera, which serves as the eye of the Raspberry Pi and is connected to it by a cable; the authors used a Raspberry Pi camera. Once the connection is established, text is extracted from the picture with Tesseract OCR, an open-source library that is compatible with the Raspberry Pi and mainly recognizes English. The command-line tool tesseract-ocr is run on an image captured with a single button press. The picture can be stored in the .png or .jpeg file format. The captured image is converted to text on the Raspberry Pi with the Python Tesseract OCR package and saved. The resulting text is used as the input to the TTS system, which reads it aloud. The clarity of the captured picture depends on the camera being used, which here is the Raspberry Pi camera.
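The capture, OCR, and read-aloud flow described for [1] can be sketched roughly as follows. This is an illustrative sketch, not the authors' code: it assumes the `pytesseract` and Pillow packages for OCR and uses `pyttsx3` as a stand-in TTS engine, since the paper does not name one. The helper names (`read_aloud`, `clean_text`) are hypothetical, and the OCR and speech steps are passed in as functions so they can be swapped or stubbed.

```python
from typing import Callable


def tesseract_ocr(image_path: str) -> str:
    """Extract English text from an image file with Tesseract."""
    from PIL import Image  # imported lazily: only needed on the device
    import pytesseract

    return pytesseract.image_to_string(Image.open(image_path), lang="eng")


def pyttsx3_speak(text: str) -> None:
    """Read text aloud with pyttsx3 (an assumed TTS engine)."""
    import pyttsx3

    engine = pyttsx3.init()
    engine.say(text)
    engine.runAndWait()


def clean_text(raw: str) -> str:
    """Collapse line breaks and repeated spaces left over from OCR."""
    return " ".join(raw.split())


def read_aloud(
    image_path: str,
    ocr: Callable[[str], str] = tesseract_ocr,
    speak: Callable[[str], None] = pyttsx3_speak,
) -> str:
    """Run OCR on a saved image and speak the result if any text was found."""
    text = clean_text(ocr(image_path))
    if text:
        speak(text)
    return text
```

On the device, `read_aloud("capture.png")` would be called after the button press saves the image; the file name here is only an example.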

Mr. Rampur Srinath and Mr. Suhas Bharadwaj R [2] obtain the input from the Pi camera, whose live video stream is fed to the model running on the Raspberry Pi. For the model to identify obstacles, it must first be trained on images of objects. Data augmentation must also be performed for those objects, which entails creating synthetic data from the already available dataset. The model is reported to be a balanced fit. This enables blind people to decide on a course of action when they encounter obstacles. A deep learning model is trained on a custom dataset built with Roboflow, which offers a range of tools for preparing multiple datasets and running diverse data augmentation processes. The model is fed the input video feed and analyzes it to determine whether the images contain objects. PyTorch and YOLO v5 are used to perform prediction and other tasks. The model's output is passed to the pyttsx3 module, which transforms the text output into speech.
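The last step in [2], turning detector output into text for the speech module, might look like the sketch below. The input format (a list of label and confidence pairs), the 0.5 confidence cut-off, and the sentence wording are assumptions for illustration; the paper does not describe them.

```python
from collections import Counter
from typing import Iterable, Tuple


def describe_detections(
    detections: Iterable[Tuple[str, float]], min_confidence: float = 0.5
) -> str:
    """Summarize detections as one short sentence for text-to-speech.

    Detections below min_confidence are ignored so that weak guesses
    are not announced to the user.
    """
    counts = Counter(
        label for label, confidence in detections if confidence >= min_confidence
    )
    if not counts:
        return "No obstacles detected"
    # most_common() lists the most frequent labels first.
    parts = [f"{count} {label}" for label, count in counts.most_common()]
    return "Detected " + ", ".join(parts)
```

The returned string could then be handed to a TTS engine such as pyttsx3, as the paper describes.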

Dr. B. Sudharani [3] The primary goal of this project is to help those who are visually impaired, not by providing exact assistance but by enabling them to become more independent and live more easily. The glasses used in this project carry a camera so they can take images. The glasses can recognize the images and identify each object in them, and they can measure the distance between each object and the blind person. The user hears the information extracted from the captured image as voice output through headphones, which helps blind people recognize the person in front of them.

The system also notifies the user whether an object is far away or very close. In this project, persons are detected using an ultrasonic sensor. When the system finds a person, it takes a picture and searches the pre-established database. If the image matches, the individual's name is spoken through the earphone; otherwise, the system says "unknown person."

A. Narmada [4] implemented a prototype of an intelligent glove, a promising solution that helps individuals with visual impairments detect and track objects in their vicinity. The system uses a Raspberry Pi as the central processing unit and integrates a camera, ultrasonic sensor, speakers, buzzer, and micro-vibrating motor. Object detection and tracking are achieved using a Deep Neural Network (DNN) implemented in OpenCV. The system successfully recognizes various objects and announces their names, providing real-time feedback to the user via the speakers. In addition, obstacle detection using the ultrasonic sensor triggers alerts, enhancing user safety. In future iterations, integrating a GPS module could enhance the system by providing location-based information and navigation assistance. Speech recognition capabilities could also be incorporated to enable hands-free operation, further improving the user experience. Optimizing the system for faster object detection and tracking, potentially by leveraging high-performance graphics processing units (GPUs), would contribute to real-time responsiveness. Overall, the proposed smart glove demonstrates significant potential for assisting visually impaired individuals in navigating their surroundings independently.
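The database lookup described for [3], where a matched face yields a name and anything else yields "unknown person", can be sketched as a nearest-match search. The paper does not say how faces are compared; the sketch assumes each face has already been reduced to a numeric feature vector and uses Euclidean distance with a hypothetical threshold of 0.6.

```python
import math
from typing import Dict, Sequence


def identify(
    features: Sequence[float],
    known_faces: Dict[str, Sequence[float]],
    max_distance: float = 0.6,
) -> str:
    """Return the name of the closest stored face, or "unknown person".

    A stored face only counts as a match if its distance to the query
    is within max_distance (an assumed, tunable threshold).
    """
    best_name, best_distance = "unknown person", max_distance
    for name, reference in known_faces.items():
        distance = math.dist(features, reference)
        if distance <= best_distance:
            best_name, best_distance = name, distance
    return best_name
```

The returned string is what would be spoken through the earphone.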

Aisha Abdulatif [5] proposed a smart glasses robot for blind people that employs Haar-cascade, LBPH, YOLO, and OCR algorithms implemented in Python on a Raspberry Pi. The methodology involves face detection, person identification, object recognition, and text-to-speech conversion using the respective algorithms. The system demonstrated promising accuracy and processing speed across datasets of varying faces, objects, and words. For future work, the author recommends enhancing accuracy by integrating advanced machine learning and deep learning algorithms. Continuously refining the existing algorithms and exploring newer technologies could further improve the smart glasses' capabilities. Incorporating user feedback and considering real-world challenges will contribute to the ongoing development of this assistive technology, making it more effective and user-friendly. Potential expansions into other functionalities that address the diverse needs of the sight-impaired community can also be explored.

Mr. Mukhriddin Mukhiddinov and Mr. Jinsoo Cho [6] propose a smart glass system for blind and visually impaired individuals. The smart glasses capture real-time images and send them to a high-performance artificial intelligence server. The processing involves deep learning models for enhancing low-light images, detecting objects, extracting salient objects, and recognizing text within the images. Results, including audio feedback and tactile graphics, are sent back to the user through the smartphone, ensuring efficient processing and communication. The future work focuses on advancing the smart glass system by leveraging evolving GPU technology and optimizing the deep learning models. Enhancements in sensory inputs, such as additional environmental sensors, can further improve system efficiency. New features, such as expanded object categories for recognition, can broaden the system's applications. Ongoing efforts should prioritize real-time performance improvements, explore energy-efficient solutions, and enhance the user experience to create a more seamless and accessible solution for individuals with visual impairments.

Mr. Yirun Wang and Mr. Xiangzhi Yu [7] The arrangement comprises a Raspberry Pi Zero, an OAK-D-Lite depth camera, a bone conduction headset, and other essential parts. The OAK-D-Lite depth camera takes the picture, and the ESP32 microcontroller in the camera controls the VPU to preprocess it. The preprocessed results are transmitted to the Raspberry Pi Zero, where the corresponding voice matching is carried out. Finally, the speech module on the Raspberry Pi Zero is called, and the bone conduction headset delivers the corresponding speech output. For the obstacle avoidance module and the zebra crossing edge detection module, the trained model is flashed onto the ESP32 so that it can analyze obstacle avoidance and edge detection data. When obstacles ahead or the edge of a zebra crossing are identified, the corresponding voice matching is performed and the voice module is called to produce the corresponding voice output. The shell of the glasses is made of ABS engineering plastic. After the wearing force was examined, silica gel gaskets were inserted at key contact points to improve the fit. The batteries and the Raspberry Pi are arranged symmetrically to ensure the wearer's comfort and slip resistance.

Dr. Vani Priya C H [8] suggested that smart glasses for visually impaired people be developed with a complete, requirements-based methodology. Understanding the problems of visually challenged people and identifying suitable technologies feed into the requirements-gathering process. A literature review examines existing studies on smart glasses, obstacle detection, and facial recognition. System design comprises developing the smart glasses system using a Raspberry Pi, Pi Camera, ultrasonic sensor, and the Haar Cascade algorithm. The hardware implementation includes the Raspberry Pi, Pi Camera, and ultrasonic sensor. The software implementation includes OpenCV for facial recognition, eSpeak for voice output, and IoT functionality. Testing verifies that obstacle detection, facial recognition, and voice output work correctly and reliably. Result analysis assesses the effectiveness of the implementation. The conclusion summarizes the data and examines the potential influence on visually impaired people.
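As a rough illustration of the Haar Cascade step, the sketch below uses the frontal-face cascade file that ships with the `opencv-python` package. It is not taken from [8]: the `scaleFactor` and `minNeighbors` values are common defaults rather than the paper's settings, and the `largest_face` helper, which treats the biggest box as the nearest person, is a hypothetical addition.

```python
def detect_faces(frame):
    """Return face boxes (x, y, w, h) found in a BGR image array."""
    import cv2  # imported lazily so largest_face() works without OpenCV

    cascade = cv2.CascadeClassifier(
        cv2.data.haarcascades + "haarcascade_frontalface_default.xml"
    )
    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    faces = cascade.detectMultiScale(gray, scaleFactor=1.1, minNeighbors=5)
    # Convert NumPy rows to plain tuples of ints.
    return [tuple(int(value) for value in box) for box in faces]


def largest_face(boxes):
    """Pick the box with the largest area, or None if there are no boxes."""
    if not boxes:
        return None
    return max(boxes, key=lambda box: box[2] * box[3])
```

In a full system the selected box would be cropped and passed on to the recognition stage before the name is spoken.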

Mr. N.M. Ramalingeswara Rao [9] aims to support visually handicapped people by using image processing, OCR, and TTS conversion, which includes capturing photos and translating the text in them into audio feedback. A Raspberry Pi camera and Tesseract OCR software for English text conversion handle the image processing and OCR.

Color conversion and thresholding are examples of the image preprocessing procedures applied for effective OCR. Festival TTS on the Raspberry Pi is used for text-to-speech conversion, with audio produced through the built-in audio connection. The block diagram in the paper shows the Raspberry Pi, camera, switch, speaker, and an ultrasonic sensor for obstacle detection. The ultrasonic sensor lets the smart glasses detect obstructions in real time, adding a safety feature. The results reveal effective obstacle detection and recognition of text on objects, books, or papers. In summary, the technology assists visually impaired people by combining image processing, OCR, and TTS with ultrasonic safety features.
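The two preprocessing steps named above, color conversion and thresholding, can be shown on a tiny image held as nested lists. This is a plain-Python sketch for illustration only; a real system would more likely use OpenCV. The luminance weights are the common ITU-R BT.601 values, and the fixed threshold of 128 is an assumption.

```python
def to_grayscale(pixels):
    """Convert rows of (R, G, B) tuples to rows of 0-255 gray levels."""
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for r, g, b in row]
        for row in pixels
    ]


def binarize(gray, threshold=128):
    """Map each gray level to 255 (at or above threshold) or 0 (below it)."""
    return [[255 if value >= threshold else 0 for value in row] for row in gray]
```

Binarizing separates dark text from a light page, which generally makes character shapes easier for an OCR engine to pick out.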

Mr. K. Sundar Srinivas [10] Through obstacle detection and face recognition, the electronic device aims to assist visually challenged persons in social interactions. Ultrasonic sensors are used in pairs as transceivers to measure the distance between the visually challenged person and obstacles. Face recognition technology identifies a person from their face, which is an essential feature for telling people apart. The system block diagram has four main components: a Raspberry Pi 3, an ultrasonic sensor, a Pi camera, and headphones. A camera module, push button, power supply, and earbuds are also included. Testing of the smart glasses for facial recognition and obstacle detection was effective, offering visually impaired people a portable, affordable option. The gadget acts as a walking aid, runs off internet access, and speaks to users to alert them to obstacles. Plans call for adding ultrasonic sensors to identify obstacles, which will help blind people navigate society more confidently.
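An ultrasonic transceiver pair measures distance from the round-trip time of a sound pulse: the pulse travels to the obstacle and back, so the distance is half the travel time multiplied by the speed of sound. A minimal sketch follows, assuming sound travels at about 343 m/s (air at roughly 20 °C) and using a hypothetical 100 cm warning distance; neither value comes from the paper.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # approximate value in air at about 20 degrees C


def echo_to_distance_cm(
    echo_seconds: float, speed: float = SPEED_OF_SOUND_M_PER_S
) -> float:
    """Convert a round-trip echo time in seconds to a one-way distance in cm."""
    if echo_seconds < 0:
        raise ValueError("echo time cannot be negative")
    return echo_seconds * speed * 100.0 / 2.0


def obstacle_alert(distance_cm: float, warn_below_cm: float = 100.0) -> bool:
    """Return True when the obstacle is closer than the warning distance."""
    return distance_cm < warn_below_cm
```

For example, a 10 ms echo corresponds to roughly 171.5 cm, which is outside the assumed warning distance.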

IV. DISCUSSION

This section compares the existing assistive devices and methods for visually impaired people.

Table 1. Comparison of existing methods (Outcome of literature review)

| REFERENCE | METHOD | RESULT | FUTURE SCOPE | DRAWBACK |
|---|---|---|---|---|
| 1 | Tesseract OCR, TTS | Obstacle detection | Object recognition | Image and language limitations |
| 2 | YOLO v5, PyTorch | Successful v5, trained with a dataset | Sensors, models, and GPS | Limited information |
| 3 | OpenCV, NumPy for array operation | Capturing live images | Power management | Cost-effective |
| 4 | OpenCV speak for obstacle detection | Effective capturing of images | Capability, power optimization | Lack of explicit mention |
| 5 | AI, deep learning models | Effective in navigation and recognition | Small object detection | Image pixel values |
| 6 | TTS and YOLOv3 | Successful OCR accuracy at 87% | Video input, hand-written notes | Accuracy limitations |
| 7 | GPS, voice broadcasting | Accuracy based on testing | Better recognition | Limited accuracy |
| 8 | OpenCV, obstacle detection | Cost-effective face recognition | Integration of advanced AI, battery optimization | Cost usability |
| 9 | Deep learning, CNN | Multiple sensors | Navigation assistance | Complexity |
| 10 | OpenCV, Python | Successfully tested RTI | Voice output enhancement | More effect |

- The papers reviewed cover various approaches to image processing, object detection, and recognition. They generally employ technologies such as Tesseract OCR, YOLO v5, PyTorch, OpenCV, gTTS, and deep learning models.

- They succeed in obstacle detection, object recognition, and navigation assistance. However, common challenges include image and language processing limitations, information gaps in certain studies, and the need for accuracy improvement.

- Some papers emphasize the potential for cost-effective enhancements, while others focus on power optimization and complexity issues. Future work involves addressing accuracy limitations, improving cost usability, and handling diverse image scenarios.

- Despite their successes, each paper faces unique drawbacks, such as limited information, accuracy constraints, and complexity challenges.

- The collection reflects a diverse landscape of methodologies, results, and future directions in image processing and recognition.

Conclusion

The research concludes by examining how smart glasses may significantly improve the lives of people with vision impairments and highlighting the importance of inclusive technology. To improve object detection and recognition, the article addresses several methods that use technologies including Raspberry Pi, cameras, ultrasonic sensors, and OCR. By encouraging independence and raising living standards, these advances seek to close the gap between visually impaired and sighted people. The literature review demonstrates the versatility of smart glasses in solving the particular issues encountered by the blind community by highlighting the various methodologies, ranging from image processing and facial recognition to real-time object tracking. The studies highlight how technology helps visually impaired people with safety, social engagement, and spatial awareness. Even with these developments, the review notes that more research and development are required to guarantee a flawless user experience, increase object categories, and maximize real-time performance. This review's investigation of smart glasses offers a potentially fruitful path toward empowering blind people, highlighting the fascinating prospects of inclusive technology for a more open and self-sufficient future.

Author Contributions: Dr. Kavya A. P played a vital role in the success of our combined research efforts through her outstanding support and direction throughout the study process. This included refining research objectives, troubleshooting obstacles, and polishing paper drafts. Anoushka made a major contribution to the research outcomes with her review of papers, which included a thorough evaluation of the previous literature, rigorous data gathering, and perceptive data analysis. Ayeesha made substantial contributions, also by reviewing papers, to the study's conception, design, and interpretation of the data, which are indicative of her in-depth knowledge of the subject matter and technique. Devika had a major impact on the research outcomes through her review of papers, which included careful data processing, perceptive data visualization, and important manuscript changes.

Funding: This research received no external funding.

Conflicts of Interest: The authors declare no conflict of interest.

References

[1] Harisha G C, Smart glass for visually impaired people, International Journal of Innovative Research in Electrical, Electronics, Instrumentation and Control Engineering, ISO 3297:2007, Issue 4, April 2023. DOI: 10.17148/IJIREEICE.2023.1140.

[2] Mr. Rampur Srinath, Mr. Suhas Bharadwaj R, Smart glasses to assist the blind, International Research Journal of Modernization in Engineering Technology and Science, e-ISSN 2582-5208, June 2022.

[3] Dr. B. Sudharani, Smart glasses for visually challenged people using facial recognition, International Journal of Innovative Research in Science and Engineering, Volume 08, Issue 06, 2022, www.ijirse.com.

[4] Ms. A. Narmada, Raspberry Pi-based design and implementation of object detection for visually impaired, ISSN: 2096-3246, Volume 54, Issue 02, July 2022.

[5] Mr. Aisha Abdulatif, Smart Glasses Robot for Blind People Using Raspberry Pi and Python, Journal of Science Computing and Engineering Research (JSCER), Jan-Feb 2022.

[6] Mukhriddin Mukhiddinov and Jinsoo Cho, Smart glass for visually impaired people, Smart Glass System Using Deep Learning for the Blind and Visually Impaired, Electronics, 2021.

[7] Yirun Wang, Xiangzhi Yu, Raspberry Pi and OpenCV Based Mobility Aid Glasses for the Blind, Journal of Physics: Conference Series 2356 (2022) 012024.

[8] Dr. Vani Priya C H, Smart Glasses for Visually Disabled Person, International Journal of Research in Engineering and Science (IJRES), ISSN (Online): 2320-9364 and ISSN (Print): 2320-9356, Issue 7, 2021, www.ijres.org.

[9] K. Sai Sri Harsha, Srikanth, Smart Stick for Visually Impaired with Object Detection using Raspberry Pi, Journal of Emerging Technologies and Innovative Research (JETIR), April 2020, Volume 7, Issue 4, www.jetir.org (ISSN-2349-5162).

[10] Mr. K. Sundar Srinivas, A new method for recognition and obstacle detection for visually challenged using smart glasses powered with Raspberry Pi, International Journal of Engineering Applied Sciences and Technology, 2020, Vol. 5.

[11] Ayat A. Nada, Department of Computers and Systems, Electronics Research Institute, Giza, Egypt, Assistive Infrared Sensor Based Smart Stick for Blind People, IEEE Technically Sponsored Science and Information Conference, 2015.

[12] Arnesh Sen, Kaustav Sen, Jayoti Das, Jadavpur University, Dept. of Physics, Ultrasonic Blind Stick For Completely Blind People To Avoid Any Obstacles, Kolkata, India, 2019.

[13] S. Innet, N. Ritnoom, An Application of Infrared Sensors for Electronic White Stick, Department of Computer and Multimedia Engineering and Department of Electrical Engineering, University of the Thai Chamber of Commerce, Intelligent Signal Processing and Communication System, 2008.

[14] Mrs. S. Divya, Smart Assistance Navigational System for Visually Impaired Individuals, Assistant Professor in the Department of Electrical and Electronics Engineering at Kalasalingam Academy of Research and Education, Virudhungar. IEEE International Conferences, 2019.

[15] Hawra AlSaid, Lina AlKhatib, Aqeela AlOraidh, Shoaa Al Haidar, Abul Bashar, Deep Learning Assisted Smart Glasses as Educational Aid for Visually Challenged, IEEE International Conferences, 2019.

[16] Jung-Hwna Kim, Sun-Kyu Kim, Tea-Min Lee, Yong-Jin Lim and Joonhong Lim, Smart glasses using deep learning and stereo camera, IEEE 8th Global Conference on Consumer Electronics, 2019.

[17] Jyun-You Lin, Chi-Lin Chiang, Meng-Jin-Wu, Chih-Chiung Yao, Ming-Chiao Chen, Smart Glasses Application System for Visually Impaired People Based on Deep Learning, IEEE International Conferences, 2020.

[18] Dakopoulos D, Bourbakis N, Wearable obstacle avoidance electronic travel aids for blind: A survey. IEEE

[19] IEEE International Conferences, 2009

[20] Vargas-Martín F., Peli E. SID Symposium Digest of Technical Papers. Volume 32. Wiley Online Library; Princeton, NJ, USA: 2001. Augmented View for Tunnel Vision: Device Testing by Patients in Real Environments; pp. 602–605. 2012

[21] Balakrishnan G., Sai Narayanan G., Nagarajan R., Yaacob S. Wearable real-time stereo vision for the visually impaired. Advance Online Publication: 2007

[22] Elango P., Murugesan K. CNN-based Augmented Reality Using Numerical Approximation Techniques. J. Signal Image Process. 2010; 1:205–210.

[23] Fiannaca A., Apostolopoulos I., Folmer E. Headlock: A wearable navigation aid that helps blind cane users traverse large open spaces; Proceedings of the 16th international ACM SIGACCESS Conference on Computers & Accessibility; Rochester, NY, USA. 20–22 October 2014; pp. 19–26.

[24] Jorge Rodolfo Beingolea, Miguel A. Zea-Vargas, Renato Huallpa, Xiomara Vilca, Renzo Bolivar and Jorge Rendulich, Assistive Devices: Technology Development for the Visually Impaired, MDPI, 2021.

Copyright

Copyright © 2024 Dr. Kavya A P, Anoushka Ameet Kurthukoti, Ayeesha Rida Khan A, Devika K S. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET61143

Publish Date : 2024-04-27

ISSN : 2321-9653

Publisher Name : IJRASET
