IJRASET Journal for Research in Applied Science and Engineering Technology


Smart Hand Gloves for Deaf and Dumb People

Authors: Shravani Jadhav, Siddhi Mhatre, Riddhi Patil, Prachi Sorte

DOI Link: https://doi.org/10.22214/ijraset.2024.59171


Abstract

Approximately 2.78% of the population in our nation is nonverbal. These people communicate using hand and facial gestures. Mute people communicate through sign language, but most people who are not mute do not know sign language. We therefore propose a new method called “The SPEAKING SYSTEM TO THE MUTE PEOPLE” to help solve this problem. The system is built on accelerometer sensors. For a mute user, every gesture carries a specific meaning. With each movement, the accelerometer sensors register the hand's acceleration and send a signal to the Arduino. The Arduino generates the speech signal by matching the measured motion against the gestures stored in its database. The Guide Me app is used as the system's output. Because gestures fit naturally with people's communication habits, much research has been done on gesture detection using vision-based methods.


I. INTRODUCTION

The 2011 census recorded roughly 1.21 billion Indians. The number of people with disabilities, including vision, hearing, or speech impairments, is rapidly increasing. Across the nation, 2.21% of people are classified as disabled. They find it very hard to communicate and connect with others in society. When they are unable to speak, their means of communication is sign language, which most people do not understand. This keeps people with great potential, wisdom, and insight from being heard. At present, an interpreter has been their only means of communicating with others, and an interpreter can be certified only after completing a nine-month diploma program. This situation greatly hampers the ability of deaf or dumb persons to lead normal lives.

People expect technological advances to make machines and systems more flexible and easier to operate. From the users' point of view, ease of use comes from integrating a fixed part of the system with a mobile one. The user wears an accelerometer-equipped glove and performs hand movements that the system tracks. The device is inexpensive and simple in design. Converting gestures to speech is becoming simpler, and the underlying techniques are improving at a very quick pace. An electronic glove designed for deaf-mute communication lets deaf and dumb people converse easily. Other approaches have also been suggested, such as smartphone apps that recognize their signs and translate them into normal speech, or systems that translate body movements, their nonverbal communication, into speech.

The purpose of this study is to bridge the communication gap between the general population and people with these disabilities, since 2 out of every 100 people in India are deaf or dumb. We propose an approach that helps break down this communication barrier. The proposed approach is cost-effective and efficient, and it is driven entirely by the output of the sensors it employs.

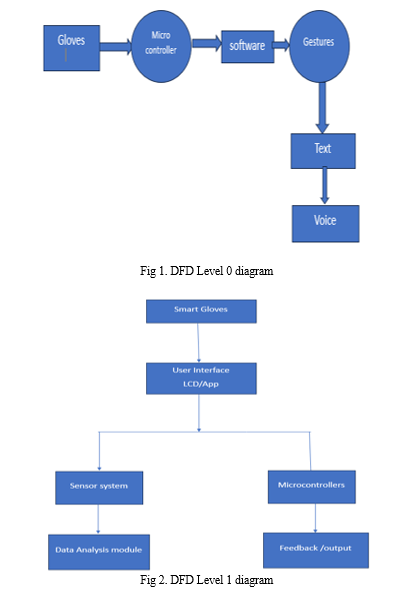

The procedure is as follows:

- Create an algorithm that takes accelerometer input.

- Use this algorithm to match the input gestures against the gesture data already stored in the database.

- Produce the corresponding output voice by processing the input and matching it against the stored gesture codes.
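The three steps above can be sketched as a nearest-template lookup. This is a minimal illustration under stated assumptions, not the authors' Arduino code: it assumes each stored gesture is summarized by a single three-axis accelerometer reading in g, and the `GESTURE_DB` contents, the `max_distance` tolerance, and the function names are all hypothetical.

```python
import math

# Hypothetical gesture database: a representative (x, y, z) reading in g
# for each stored gesture, mapped to the message it should produce.
GESTURE_DB = {
    (0.0, 0.0, 1.0): "Hello",
    (1.0, 0.0, 0.0): "Thank you",
    (0.0, 1.0, 0.0): "I need help",
}


def match_gesture(sample, db=GESTURE_DB, max_distance=0.3):
    """Return the message whose stored reading is closest to `sample`
    (Euclidean distance), or None if nothing lies within `max_distance`."""
    best_message, best_distance = None, math.inf
    for template, message in db.items():
        distance = math.dist(sample, template)
        if distance < best_distance:
            best_message, best_distance = message, distance
    return best_message if best_distance <= max_distance else None


def process(sample):
    """Steps 1-3: take accelerometer input, match it, return the text to speak."""
    message = match_gesture(sample)
    return message if message is not None else "<unrecognized gesture>"


print(process((0.05, -0.02, 0.98)))  # Hello
print(process((0.6, 0.6, 0.0)))      # <unrecognized gesture>
```

Returning `None` beyond a distance cutoff, rather than always picking the nearest entry, keeps an unintended hand movement from being spoken as a word.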

II. LITERATURE SURVEY

The smart glove depends on two factors: sign language and sensor functionality. In vision-based systems, signs are captured by a camera and processed using image-processing techniques. Such a system has an input unit that perceives the signs made by the user, a processing unit made up of microcontrollers, and an output unit containing the display component. There are three types of sign language sensing: hybrid-based, vision-based, and sensor-based. Because cameras are widely available in laptops, they are the main equipment used by vision-based systems. The sensor-based approach, on the other hand, makes use of the glove's sensors [1].

The sensor-based glove must be supplied with the alphabets and numerals. Gestures such as "please", "thank you", and "sorry", along with other behaviors typical of deaf-mute people, can also be added. For the vision-based system, a particular tracking procedure must be followed and part of the raw data must be applied to it; a few algorithms are also used to process that data [2].

A voice synthesizer can be used to translate signs into speech, since sign language is not understood by the average person. This makes it relatively simple for deaf-mute people to communicate their needs, in their native languages, to the general public or to someone whose assistance they need [3].

The sensor-based method is commonly employed because of its high recognition rate and affordability. It also reduces maintenance and risk. The accelerometer records hand movements, while the tactile sensor measures the force exerted by the hand and fingers. This technique is intended for Indian Sign Language [4].

Since most people cannot understand sign language, we have converted sign language into speech using a voice synthesizer. This makes it relatively simple for deaf-mute people to communicate their needs to the general public or to someone whose assistance they need, without any further effort to explain themselves to the average person. It also gives them the self-assurance to succeed in their own lives and to speak in front of others. The only drawback is that they must be proficient in Indian Sign Language to communicate their ideas to someone else. Numerous organizations assist these individuals by providing sign language instruction in their native languages [5].

This paper aids glove design: by studying the problems of earlier gloves, we have created a glove that is more comfortable to wear. It is portable and lightweight, so it can be used anywhere. In larger meetings, it might be the most effective way for people to talk to one another [6].

Previous work has addressed motion detection and the conversion of body movements into sentences. Earlier, Microsoft Kinect, which featured gesture-responsive technology, was used for this purpose; it combines a depth sensor with an RGB camera. Although the proposed method accomplished its goals, it is not a portable system. Gestures captured by an RGB camera with a depth sensor may produce erroneous output, and the results vary with the surrounding conditions [7].

The concept presented here can be integrated into our system for potential users, enabling more precise motion recognition and allowing mobile applications to produce many correct results through the automatic speech recognition (ASR) methodology [8].

The proposed product is portable and fully wireless, and its output can be delivered through speech or an LCD display. Because of its weight, the glove needs to be handled carefully; its components are also somewhat expensive [9].

Gesture-based hand gloves include letters and numbers. This glove also includes some of the fundamental hand motions used by deaf and speech-impaired people, which makes it highly practical for them [9, 10].

III. PROPOSED SYSTEM

A. Hand Gestures

The proposed smart-glove system for deaf and dumb people combines sensor technology with a user-centric design to create a practical tool for communication and accessibility. By bridging the communication gap between people with speech and hearing impairments and the rest of the world, smart gloves have the potential to empower users, promote inclusivity, and foster understanding in society.

The proposed system has three components:

- Input via gestures

- Data processing

- Audio output

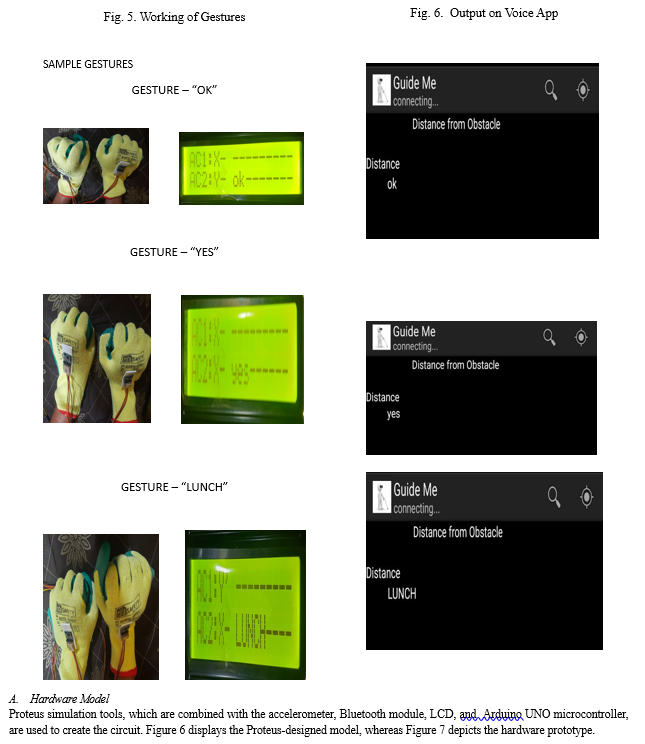

a. Gesture input: Gesture input is provided by accelerometers mounted on the glove, which the user can easily activate through gestures. The resistance values change in response to the user's gesture, and the sensor outputs a voltage that follows those changes.
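For an analog accelerometer, each axis's output voltage is typically linear in acceleration around a zero-g offset. The sketch below converts per-axis voltages to acceleration in g. The paper does not name the sensor part, so the zero-g voltage (1.65 V) and the sensitivity (0.33 V/g) are placeholder assumptions, to be replaced with values from the actual sensor's datasheet or a calibration run.

```python
# Placeholder calibration values (assumptions, not taken from the paper):
ZERO_G_VOLTS = 1.65  # output voltage when the axis experiences 0 g
VOLTS_PER_G = 0.33   # change in output voltage per 1 g


def volts_to_g(volts, zero_g=ZERO_G_VOLTS, sensitivity=VOLTS_PER_G):
    """Convert one axis's analog output voltage to acceleration in g."""
    return (volts - zero_g) / sensitivity


def sample_to_g(volts_xyz):
    """Convert an (x, y, z) tuple of voltages to an (x, y, z) tuple in g."""
    return tuple(volts_to_g(v) for v in volts_xyz)


# Glove lying flat: x and y read about 0 g, z reads about +1 g (gravity).
print(sample_to_g((1.65, 1.65, 1.98)))
```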

b. Processing the data: The Arduino Uno's built-in ADC converts the analog output voltage of the accelerometer sensors into digital form. The microcontroller's database contains predefined gestures and messages in a variety of languages. The Arduino Uno checks whether the accelerometer's input voltage exceeds the threshold value recorded in the database.
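The conversion and threshold check described above can be sketched as follows. The Arduino Uno's ADC is 10-bit, so with the default 5 V reference a reading `counts` corresponds to roughly `counts * 5.0 / 1024` volts. The threshold table, its per-axis layout, and its messages are hypothetical, since the paper does not list the stored thresholds; the sketch is written in Python for illustration rather than as Arduino firmware.

```python
ADC_BITS = 10  # the Arduino Uno's ADC is 10-bit, giving readings 0..1023
V_REF = 5.0    # default analog reference voltage on the Uno, in volts


def adc_to_volts(counts, v_ref=V_REF, bits=ADC_BITS):
    """Convert a raw ADC reading to the input voltage it represents."""
    if not 0 <= counts < 2 ** bits:
        raise ValueError("ADC reading out of range")
    return counts * v_ref / 2 ** bits


# Hypothetical threshold table: (axis index, threshold in volts, message).
THRESHOLD_DB = [
    (0, 2.0, "Water"),
    (1, 2.0, "Food"),
    (2, 2.0, "Help"),
]


def classify(counts_xyz, table=THRESHOLD_DB):
    """Return the message of the first entry whose axis voltage exceeds its
    threshold, or None if no stored threshold is exceeded."""
    volts = [adc_to_volts(c) for c in counts_xyz]
    for axis, threshold, message in table:
        if volts[axis] > threshold:
            return message
    return None


print(classify((512, 300, 300)))  # Water (x axis reads 2.5 V)
```

Because the entries are checked in a fixed order, the earlier entry wins if two axes exceed their thresholds at once; a real gesture database would need an explicit rule for such overlaps.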

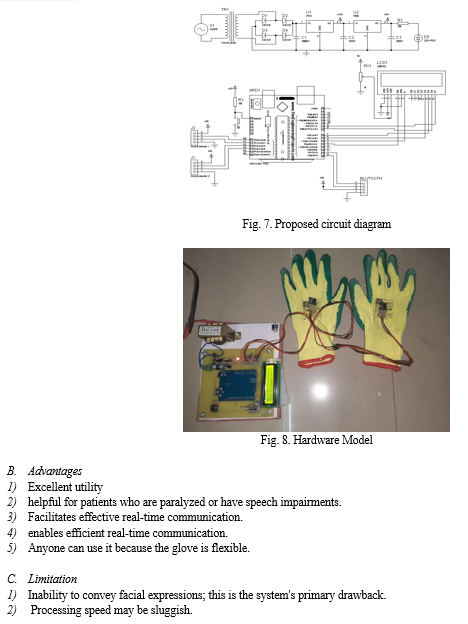

c. Voice output: The Arduino sends its output to an LCD and to a Bluetooth device linked to the speech application. The message assigned to the gesture in the database is then shown on the LCD.
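Before a message is sent out, it has to fit the display and be framed for the Bluetooth link. The sketch below assumes a 16x2 character LCD and a speech app that reads one newline-terminated UTF-8 line per message. Both are assumptions: the paper does not specify the LCD size or the protocol used by the Guide Me app.

```python
import textwrap

LCD_COLS, LCD_ROWS = 16, 2  # assumed 16x2 character LCD


def lcd_lines(message, cols=LCD_COLS, rows=LCD_ROWS):
    """Word-wrap a message into at most `rows` lines, each padded to `cols`
    characters so that stale characters on the LCD are overwritten."""
    lines = textwrap.wrap(message, width=cols)[:rows]
    lines += [""] * (rows - len(lines))
    return [line.ljust(cols) for line in lines]


def bluetooth_frame(message):
    """Encode a message as one newline-terminated UTF-8 line (assumed framing)."""
    return (message.strip() + "\n").encode("utf-8")


for line in lcd_lines("Please call a doctor immediately"):
    print(repr(line))
print(bluetooth_frame("Hello"))
```

Text that does not fit on two rows is truncated on the LCD, while the Bluetooth frame still carries the full message for the speech app.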

B. Methodology

An accelerometer is an instrument that measures the acceleration of a moving object. Accelerometers are commonly used in electronic devices, such as smartphones and smartwatches, to measure physical activity. In sign language recognition, accelerometers can capture the user's hand and arm movements while the user makes signs.
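When the hand is held still, the accelerometer mainly measures gravity, so a reading can be turned into a hand orientation. The sketch below uses the standard roll and pitch formulas for a static three-axis reading in g. How the sensor axes are mounted on the glove is an assumption, and the paper does not state that it computes angles.

```python
import math


def tilt_angles(ax, ay, az):
    """Estimate roll and pitch in degrees from a static (x, y, z) reading in g.

    Assumes the hand is roughly still, so the measured vector is gravity:
        roll  = atan2(ay, az)
        pitch = atan2(-ax, sqrt(ay**2 + az**2))
    """
    roll = math.degrees(math.atan2(ay, az))
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    return roll, pitch


print(tilt_angles(0.0, 0.0, 1.0))  # palm flat: roll and pitch near 0
print(tilt_angles(0.0, 1.0, 0.0))  # hand turned on its side: roll near 90
```

These angles are only meaningful for slow or paused hand positions; during fast movements the reading also contains the hand's own acceleration.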

A common approach to accelerometer-based sign language recognition uses neural networks. First, motion data are collected from a set of users making different sign language signs. These data are then used to train a neural network, which learns the movement patterns associated with different signs. To make signs, the user wears a device equipped with an accelerometer, such as a glove or a wristband.
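The neural-network approach described above can be sketched with NumPy. This is a hypothetical illustration, not the authors' model: it assumes each recorded sign is a window of three-axis samples, summarizes the window by its per-axis mean and standard deviation, and trains a one-hidden-layer network with a softmax output by plain gradient descent. The feature choice, layer sizes, learning rate, synthetic data, and names (`make_features`, `TinyMLP`) are all assumptions.

```python
import numpy as np


def make_features(window):
    """Summarize a (T, 3) window of accelerometer samples as the per-axis
    mean and standard deviation, giving a 6-element feature vector."""
    window = np.asarray(window, dtype=float)
    return np.concatenate([window.mean(axis=0), window.std(axis=0)])


class TinyMLP:
    """One hidden tanh layer and a softmax output, trained with full-batch
    gradient descent on the cross-entropy loss."""

    def __init__(self, n_in, n_hidden, n_classes, seed=0):
        rng = np.random.default_rng(seed)
        self.W1 = rng.normal(0.0, 0.5, (n_in, n_hidden))
        self.b1 = np.zeros(n_hidden)
        self.W2 = rng.normal(0.0, 0.5, (n_hidden, n_classes))
        self.b2 = np.zeros(n_classes)

    def _forward(self, X):
        hidden = np.tanh(X @ self.W1 + self.b1)
        logits = hidden @ self.W2 + self.b2
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        exp = np.exp(logits)
        return hidden, exp / exp.sum(axis=1, keepdims=True)

    def predict_proba(self, X):
        return self._forward(np.asarray(X, dtype=float))[1]

    def predict(self, X):
        return self.predict_proba(X).argmax(axis=1)

    def fit(self, X, y, lr=0.1, epochs=2000):
        X = np.asarray(X, dtype=float)
        targets = np.eye(self.b2.size)[np.asarray(y)]  # one-hot labels
        for _ in range(epochs):
            hidden, probs = self._forward(X)
            d_logits = (probs - targets) / len(X)  # softmax + cross-entropy
            d_hidden = (d_logits @ self.W2.T) * (1.0 - hidden ** 2)  # tanh'
            self.W2 -= lr * (hidden.T @ d_logits)
            self.b2 -= lr * d_logits.sum(axis=0)
            self.W1 -= lr * (X.T @ d_hidden)
            self.b1 -= lr * d_hidden.sum(axis=0)
        return self


# Synthetic stand-in for recorded signs: each "sign" is a different
# steady hand orientation plus sensor noise.
rng = np.random.default_rng(1)
orientations = [(0, 0, 1), (1, 0, 0), (0, 1, 0)]
X, y = [], []
for label, centre in enumerate(orientations):
    for _ in range(10):
        window = np.array(centre) + rng.normal(0.0, 0.05, (20, 3))
        X.append(make_features(window))
        y.append(label)
model = TinyMLP(n_in=6, n_hidden=8, n_classes=3).fit(np.array(X), np.array(y))
print("training accuracy:", (model.predict(np.array(X)) == np.array(y)).mean())
```

Real signs are movements rather than static poses, so a practical system would likely use richer features or a sequence model trained in an ML framework; the sketch only shows the train-then-classify structure.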

The accelerometer records the movements of the gestures and sends them to the neural network for processing. If the neural network detects a sign, it can translate the sign into text or speak it aloud for the user. Accelerometer-based sign language recognition has several advantages. Compared with other approaches, such as the use of cameras, accelerometers are less intrusive and can work in low-light conditions. In addition, a portable device equipped with an accelerometer lets the user communicate more discreetly in public settings.
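The decision described above, producing text or speech only when a sign is actually detected, can be sketched as a confidence threshold on the network's output probabilities, paired with a simple check of whether the hand is moving at all. The 0.8 confidence cutoff, the 0.15 g motion tolerance, and the labels are hypothetical values, not figures from the paper.

```python
import math


def is_moving(sample, tolerance=0.15):
    """Treat an (x, y, z) sample in g as part of a gesture when its magnitude
    differs from the 1 g of gravity by more than `tolerance`."""
    return abs(math.hypot(*sample) - 1.0) > tolerance


def decode_sign(probabilities, labels, min_confidence=0.8):
    """Return the most probable sign's label, or None when the network is not
    confident enough that any known sign was made."""
    if len(probabilities) != len(labels):
        raise ValueError("expected one probability per label")
    best = max(range(len(labels)), key=lambda i: probabilities[i])
    return labels[best] if probabilities[best] >= min_confidence else None


labels = ["hello", "thank you", "help"]
print(decode_sign([0.10, 0.85, 0.05], labels))  # thank you
print(decode_sign([0.40, 0.35, 0.25], labels))  # None
```

Rejecting low-confidence predictions trades a few missed signs for fewer wrong words being spoken, which matters when the output is heard by someone who cannot check it against the sign.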

C. System Design

Figure 3 shows the proposed system and its flow-chart architecture, including the sign-acquisition and signal-conditioning devices.

Conclusion

The proposed approach is user-friendly and highly efficient, and it can be extended to more advanced platforms and processors. It provides a general outline of the solution; additional technology and creativity can turn the basic model into a personalized product. The approach addresses the constant difficulty that deaf and dumb individuals face in their daily lives and enables communication through gestures. The flex sensors on the smart glove let users translate signs into text and audio, removing the barrier that separates the two communities. The system's efficiency could also be improved further. The same method could drive relays for home automation, since the flex sensors in this equipment match different ranges of readings to words and letters. Treating hand gestures as computer command inputs has also prompted a great deal of research in virtual reality. Hardware that can translate hand movements into voice is being developed. The study presented here explains how gestures can be converted into a set of symbols that computers can interpret and process further. In this study, hand movements are translated into voice, but in the same way a wide range of other devices can also be controlled. the normal world.

References

[1] Ahmed, Mohamed Aktham, Bilal Bahaa Zaidan, Aws Alaa Zaidan, Mahmood Maher Salih, and Muhammad Modi bin Lakulu. "A review on systems-based sensory gloves for sign language recognition state of the art between 2007 and 2017." Sensors, Vol. 18, no. 7, p. 2208, 2018.

[2] Pezzuoli, Francesco, Dario Corona, and Maria Letizia Corradini. "Improvements in a wearable device for sign language translation." In International Conference on Applied Human Factors and Ergonomics, pp. 70-81. Springer, Cham, 2019.

[3] Lang, Simon, Marco Block, and Raúl Rojas. "Sign language recognition using Kinect." In International Conference on Artificial Intelligence and Soft Computing, pp. 394-402. Springer, Berlin, Heidelberg, 2012.

[4] Yousaf, Kanwal, Zahid Mehmood, Tanzila Saba, Amjad Rehman, Muhammad Rashid, Muhammad Altaf, and Zhang Shuguang. "A novel technique for speech recognition and visualization based mobile application to support two-way communication between deaf-mute and normal peoples." Wireless Communications and Mobile Computing, Vol. 2018, 2018.

[5] Verma, Pallavi, S. L. Shimi, and Richa Priyadarshani. "Design of Communication Interpreter for Deaf and Dumb Person." International Journal of Science Research, Vol. 4, no. 1, pp. 2640-2643, 2015.

[6] Farooq, Uzma, Ayesha Asmat, Mohd Shafry Bin Moh Rahim, Nabeel Sabir Khan, and Adnan Abid. "A comparison of hardware-based approaches for sign language gesture recognition systems." In 2019 International Conference on Innovative Computing (ICIC), pp. 1-6. IEEE, 2019.

[7] Patel, Dhawal L., Harshal S. Tapase, Paraful A. Landge, Parmeshwar P. More, and A. P. Bagade. "Smart hand gloves for disabled people." International Research Journal of Engineering and Technology (IRJET), Vol. 5, pp. 1423-1426, 2018.

[8] Varghese, Anju, Christy Paul, Dilna Titus, and Amrutha Benny. "Sign Speak Sign Language to Verbal Language." 2017.

[9] Ariesta, Meita Chandra, Fanny Wiryana, and Gede Putra Kusuma. "A Survey of Hand Gesture Recognition Methods in Sign Language Recognition." Pertanika Journal of Science & Technology, Vol. 26, no. 4, 2018.

[10] Shahrukh, J., B. S. Ghousia, J. S. K. Aarthy, and R. Ateequeur. "Wireless Glove for Hand Gesture Acknowledgment: Sign Language to Discourse Change Framework in Territorial Dialect." Robot Autom Eng J, Vol. 3, no. 2, p. 555609, 2018.

[11] Sawant, Shreyashi Narayan. "Sign language recognition system to aid deaf-dumb people using PCA." Int. J. Comput. Sci. Eng. Technol. (IJCSET), Vol. 5, no. 05, 2014.

[12] Varghese, Anju, Christy Paul, Dilna Titus, and Vijith C. "A Survey on Sign Language to Verbal Language Converter." International Journal of Engineering Science and Computing, Vol. 6, Issue No. 9, March 2018.

Copyright

Copyright © 2024 Shravani Jadhav, Siddhi Mhatre, Riddhi Patil, Prachi Sorte. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET59171

Publish Date : 2024-03-19

ISSN : 2321-9653

Publisher Name : IJRASET
