Ijraset Journal For Research in Applied Science and Engineering Technology


Smart Navigation in Assistive Devices: Depth Camera-Driven Stairs Recognition

Authors: Dr Renuka Devi S M

DOI Link: https://doi.org/10.22214/ijraset.2024.65699


Abstract

With almost 8 million blind and visually impaired persons in India, there is an urgent need for autonomous navigation systems to help them. Since most homes have stairs, it is crucial that stairs be detected automatically in real time. This paper focuses on classifying stairs into "up" and "down" categories. The study uses 3D point cloud images taken with an Intel RealSense camera. The Hough transform is applied to the RGB image to locate the step edges, and a 1D feature along the length of the stairs is then extracted from the corresponding depth image. A balanced dataset of 170 point cloud images representing both "up" and "down" stairs was built with the Intel RealSense camera. Experimental results show that the proposed method achieves classification accuracies of 60% with SVM and 85% with MLP, showing the potential of the approach for real-world applications.


I. INTRODUCTION

Worldwide, the number of visually impaired people is estimated at around 285 million out of a total world population of 7.8 billion. This 3.6% of the population needs assistive devices that provide autonomous navigational aid in indoor and outdoor environments [1]. A few research articles [2, 3] give exhaustive surveys, with over a hundred references, of the assistive navigational aids available from 1990 to 2018. These devices typically detect stairs, corridors, walls, objects, plain floor, and open or closed doors indoors, and zebra crossings and landmarks outdoors. Our work addresses indoor detection, specifically the detection of upstairs and downstairs.

With the advent of inexpensive 3D sensors such as the Kinect, ENSENSO, ToF depth cameras and LiDAR cameras, there has been a great deal of research in areas that use 3D point cloud data (PCD) [4, 5]. These areas include 3D model reconstruction, building performance analysis, geometry quality inspection and health care. Qian Wang et al. [6], in a survey with more than two hundred references, found PCD to be important in a wide range of applications. RGB-D cameras that provide PCD are particularly useful in navigational aid algorithms. The prime concern in designing these navigational aids is improving the precision and speed of the algorithm, since the devices are used in real-time applications. In addition to the detections mentioned above, some authors have estimated the distance to the start of the stairs [7], and have made detection possible with a narrow or occluded view [8]. Neural networks [9] and deep learning have been found to give more precise solutions to various engineering problems. This motivated us to explore the use of a fully connected neural network for the problem of stairs and floor detection. Our proposed stair detection method is based on PCD.
First, the step edges are extracted using edge detection, and Hough transform lines are fitted to the stair edges. The depth pixels along the middle (perpendicular) line are then used as features for the classifiers. This work is similar to [10], in which only stairs and crossing detection was performed, using SVM classifiers. The main contribution of our work is the detection of stairs using a fully connected neural network (FCNN) and a comparison of its performance with that of an SVM. Further, since no standard database was available, we collected the database required for our experiments using an Intel RealSense depth camera. Experimental results show that the FCNN performs much better than the SVM in classification accuracy (85% versus 60%). This paper is organized as follows: Section 2 elaborates on the methodology of our approach, Section 3 details the database and the results, and Section 4 presents the conclusion.

II. METHODOLOGY

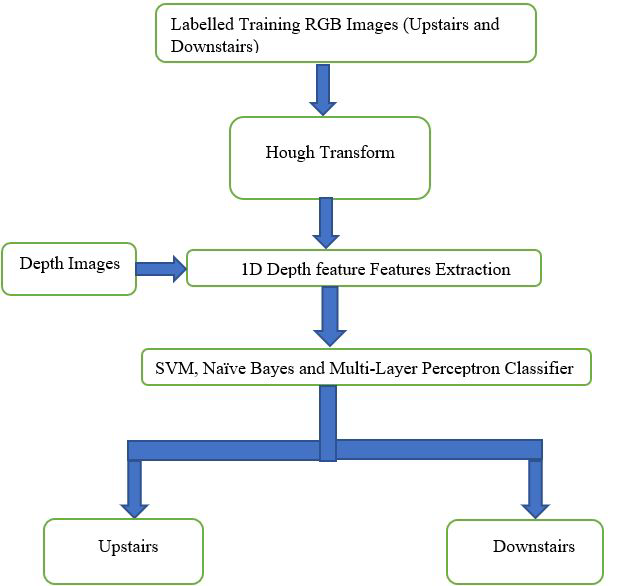

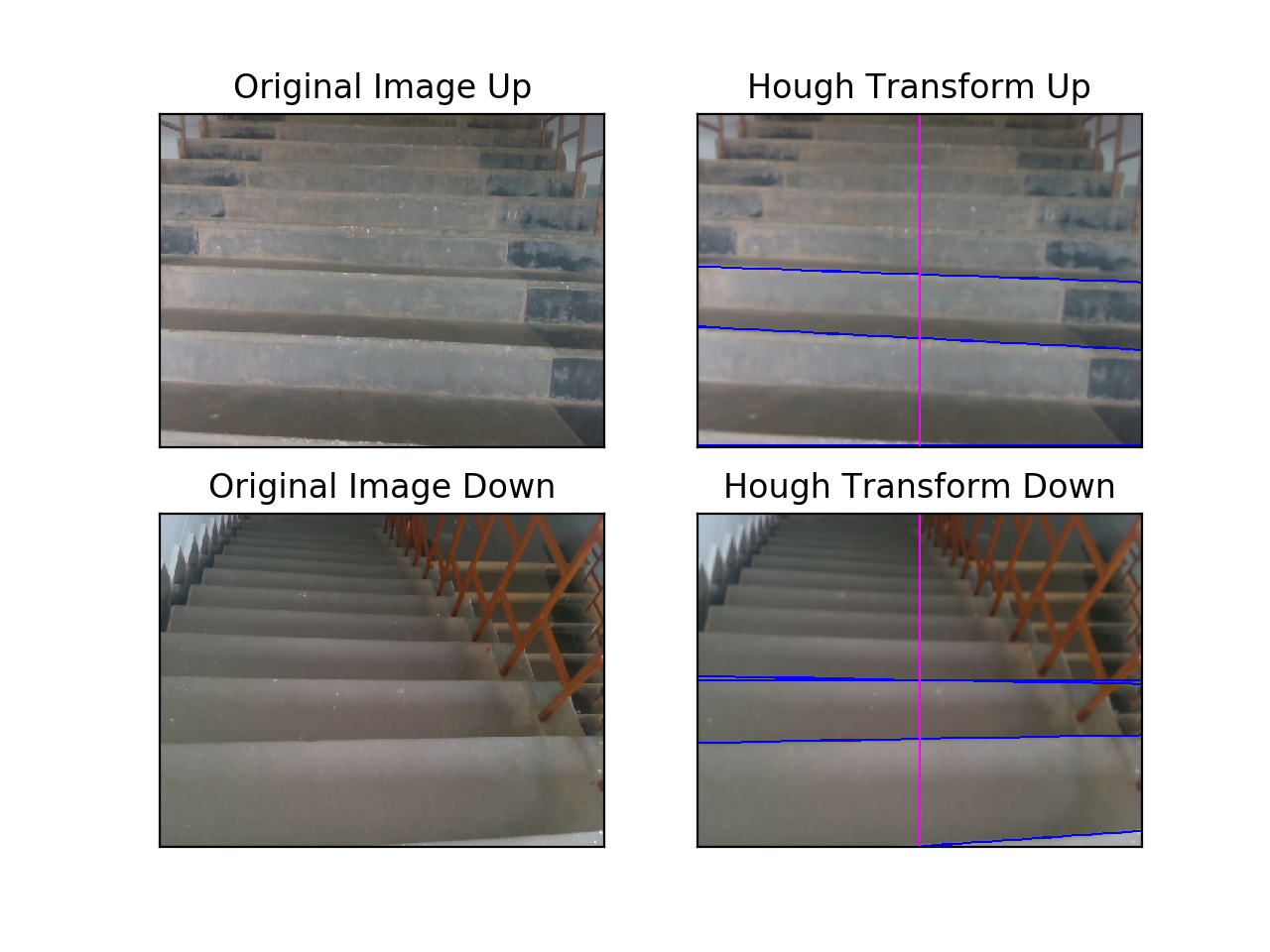

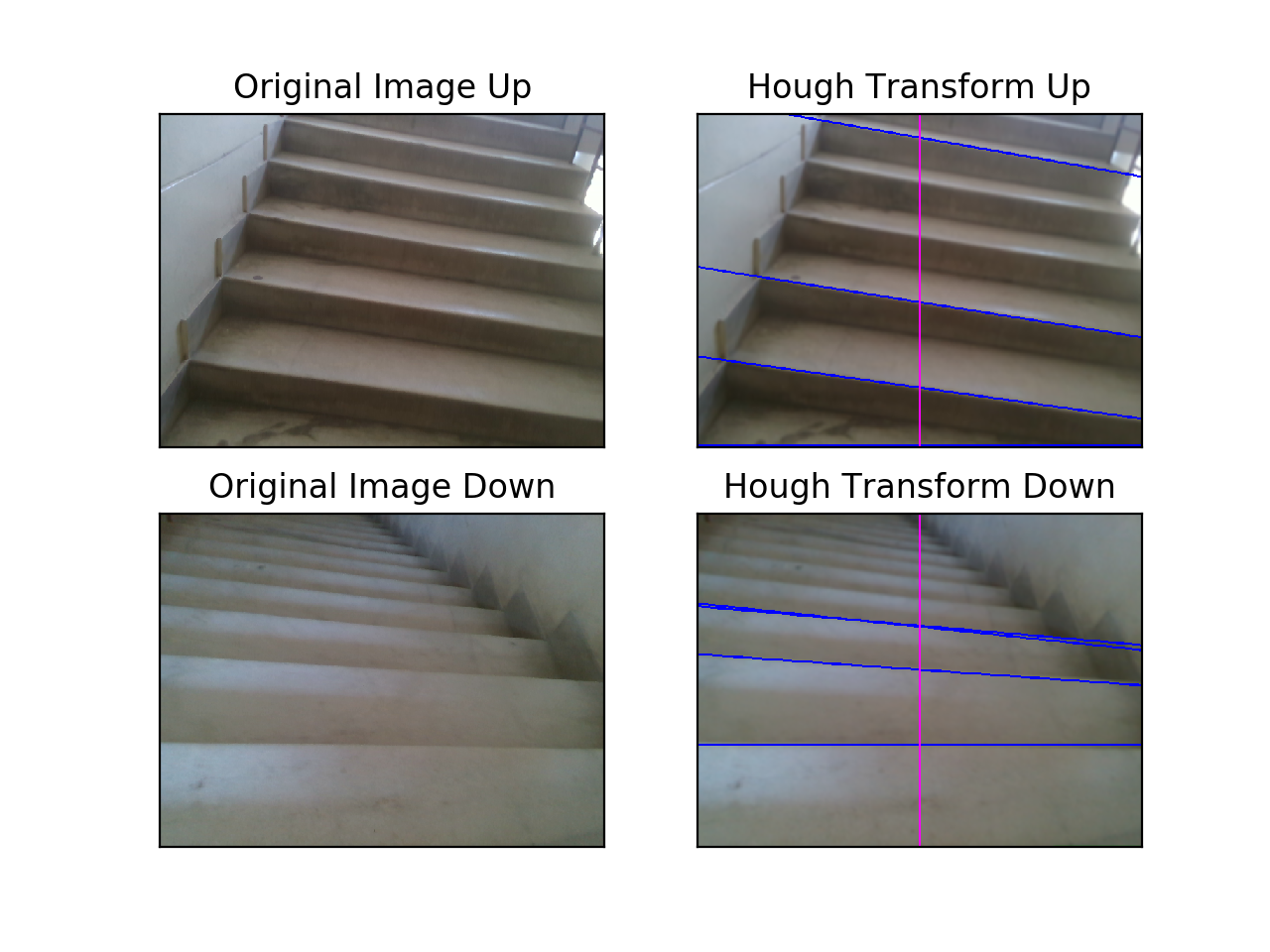

The process flow of our proposed method is shown in Fig 1. Initially, noise in the RGB images is removed by Gaussian blurring. Canny edge detection is then used to obtain an edge map, and the Hough transform is applied to this edge map to detect potential staircase edge lines. Each line through the edge points $(x_i, y_i)$ is represented parametrically as $\rho = x_i \cos\theta + y_i \sin\theta$, where the edge points $(x_i, y_i)$ are known and the line parameters $(\rho, \theta)$ are unknown [11]. The staircase steps in the RGB image thus appear as a set of parallel lines. A line perpendicular to the dominant stair-step edge line is then drawn, shown as the vertical blue line across the steps in Fig 2 and Fig 4.

Fig 1: Flow chart of the approach
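The edge-and-line stage described above can be sketched in NumPy as follows. This is a minimal illustration of standard Hough voting, not the authors' code: it assumes a binary edge map is already available (for example from Canny edge detection after Gaussian blurring, which OpenCV provides as `cv2.GaussianBlur` and `cv2.Canny`), and the function names `hough_lines` and `strongest_line` are hypothetical. In the parameterization $\rho = x\cos\theta + y\sin\theta$, a value of $\theta$ near $90^\circ$ corresponds to a near-horizontal image line, so the optional θ range can mirror the 85° to 100° restriction used in the Results section.

```python
import numpy as np


def hough_lines(edges, theta_range_deg=(0.0, 180.0), theta_step_deg=1.0):
    """Accumulate Hough votes for a binary edge map.

    Every edge pixel (x, y) votes once per theta for the line
    rho = x*cos(theta) + y*sin(theta) passing through it.
    Returns (accumulator, rho_axis, theta_axis_in_radians).
    """
    ys, xs = np.nonzero(edges)  # row index = y, column index = x
    thetas = np.deg2rad(np.arange(theta_range_deg[0], theta_range_deg[1], theta_step_deg))
    h, w = edges.shape
    max_rho = int(np.ceil(np.hypot(h, w)))  # |rho| never exceeds the image diagonal
    rhos = np.arange(-max_rho, max_rho + 1)  # 1-pixel rho bins
    acc = np.zeros((rhos.size, thetas.size), dtype=np.int64)
    # rho for every (edge pixel, theta) pair, rounded to the nearest bin and shifted to a valid index
    rho_idx = np.round(np.outer(xs, np.cos(thetas)) + np.outer(ys, np.sin(thetas))).astype(int) + max_rho
    theta_idx = np.broadcast_to(np.arange(thetas.size), rho_idx.shape)
    # np.add.at adds one vote per pair, even when many pairs land in the same bin
    np.add.at(acc, (rho_idx, theta_idx), 1)
    return acc, rhos, thetas


def strongest_line(acc, rhos, thetas):
    """Return (rho, theta_in_degrees, votes) of the accumulator peak."""
    r, t = np.unravel_index(np.argmax(acc), acc.shape)
    return rhos[r], float(np.rad2deg(thetas[t])), int(acc[r, t])
```

Taking the accumulator peak is a simplification: the method described here selects the longest detected line, which needs segment endpoints (OpenCV's probabilistic `cv2.HoughLinesP` returns these). In practice, `cv2.HoughLines` performs the same voting in optimized native code.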

A. 1-Dimensional Feature Vector:

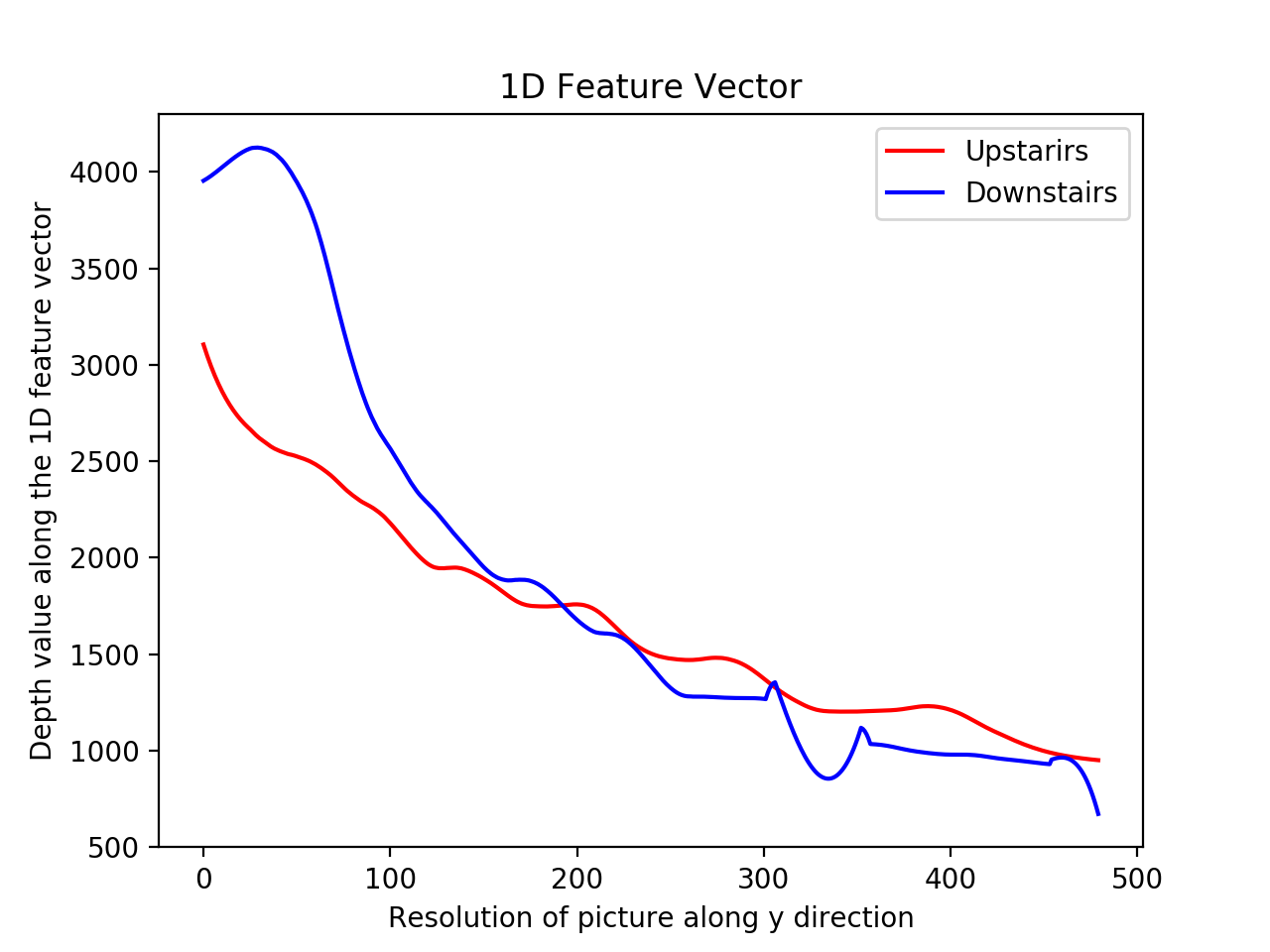

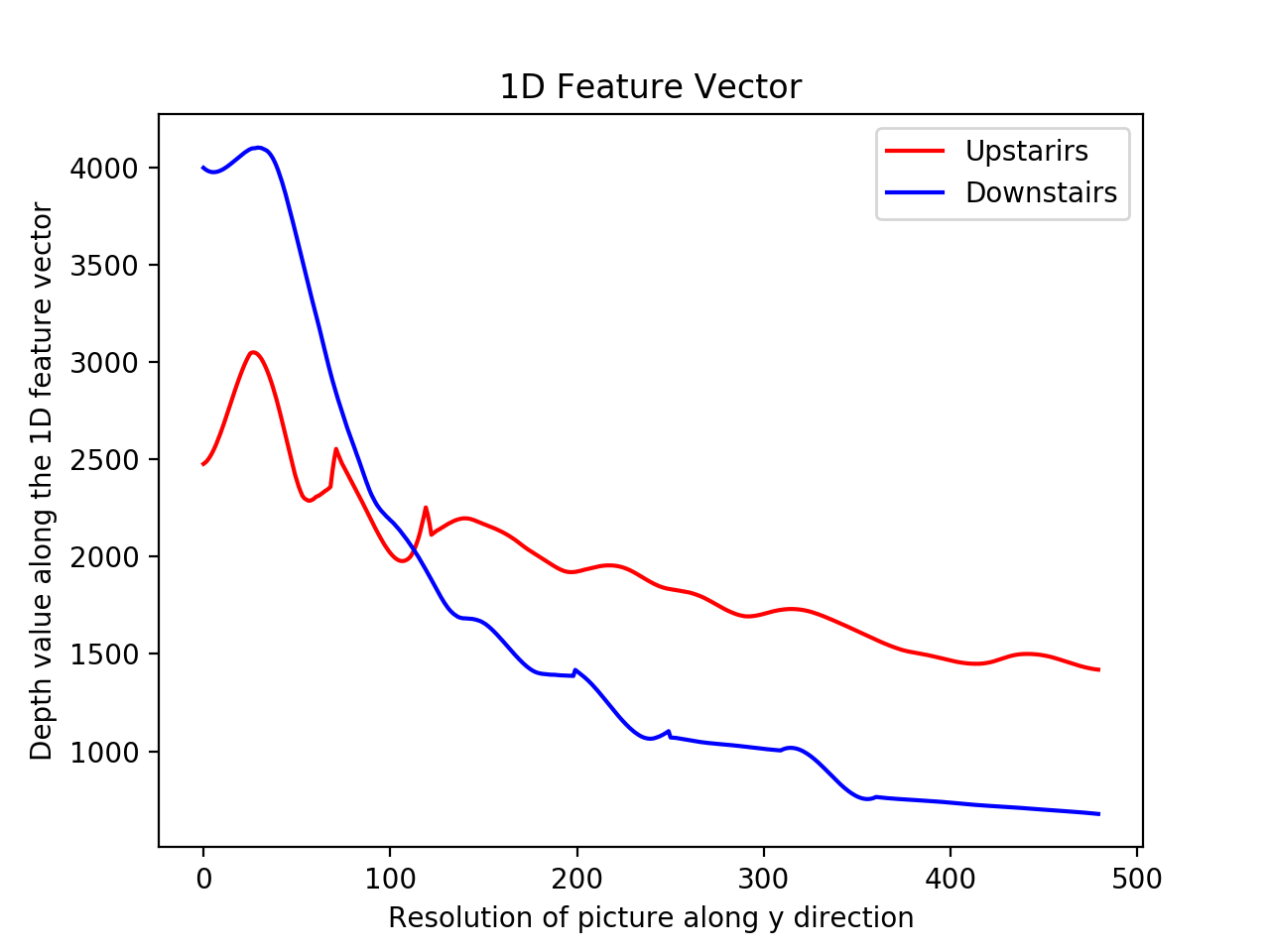

A feature vector is a vector that contains information describing an object's important characteristics. The Hough transform applied in the previous step detects the parallel lines in the color image captured by the Intel RealSense depth camera. The line drawn perpendicular to the longest detected parallel line, through its centre, gives the feature line in the RGB image. The position and orientation of the feature line are used to map it onto the corresponding depth image captured by the camera, from which the one-dimensional feature vector is extracted: plotting the depth-image intensity (the distance from the camera) against position along the feature line gives the one-dimensional feature vector. This depth feature vector is used as the input of a classifier to recognize upstairs and downstairs.
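A minimal sketch of this extraction step, assuming the depth image has already been aligned pixel-for-pixel with the RGB image and that the centre $(x_0, y_0)$ and normal angle $\theta$ of the longest step-edge line come from the Hough stage. The function name `feature_line_profile` is hypothetical, and resampling every profile to a fixed `num_samples` length is an assumption made here so that all images yield equally sized classifier inputs; the text does not say how this is handled.

```python
import numpy as np


def feature_line_profile(depth, center_xy, theta_deg, num_samples=100):
    """Depth values along the line through center_xy perpendicular to a step edge.

    theta_deg is the Hough normal angle of the step edge, so the perpendicular
    (feature) line runs along the direction (cos theta, sin theta).
    The profile is resampled to num_samples points (an assumption) so that
    every image yields a feature vector of the same length.
    """
    h, w = depth.shape
    x0, y0 = center_xy
    dx, dy = np.cos(np.deg2rad(theta_deg)), np.sin(np.deg2rad(theta_deg))
    half = np.hypot(h, w)  # long enough to cross the whole image from the centre
    s = np.linspace(-half, half, int(2 * half) + 1)  # roughly 1-pixel steps along the line
    xs = np.round(x0 + s * dx).astype(int)
    ys = np.round(y0 + s * dy).astype(int)
    inside = (xs >= 0) & (xs < w) & (ys >= 0) & (ys < h)
    profile = depth[ys[inside], xs[inside]].astype(float)
    if profile.size == 0:
        raise ValueError("feature line does not intersect the depth image")
    # Linear interpolation onto a fixed-length grid of positions along the line
    grid = np.linspace(0, profile.size - 1, num_samples)
    return np.interp(grid, np.arange(profile.size), profile)
```

For near-horizontal step edges ($\theta \approx 90^\circ$) the feature line is close to vertical, matching the blue line in the figures, and the samples run from the top of the image to the bottom.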

B. Classifiers

In this paper, we compare the performance of three classifiers, namely a support vector machine (SVM), a naive Bayes (NB) classifier and a multi-layer perceptron (MLP), for classifying upstairs and downstairs.

- Naive Bayes Classifier: Naive Bayes is a generative classifier. It first models the joint distribution $p(x, y)$ of the measured attributes $x$ and the class labels $y$, factorized as $p(x \mid y)\,p(y)$, and then learns the model parameters by maximizing the likelihood $p(y)\prod_j p(x_j \mid y)$, which assumes that the features are conditionally independent given the class. Furthermore, the naive Bayes classifier is stable: its classification result is not significantly changed by noise or corrupted data. Here we used multinomial NB, which models each class-conditional distribution $p(x \mid y)$ as a multinomial distribution.

- Support Vector Machine Classifier: The objective of the support vector machine algorithm is to find a hyperplane in an N-dimensional space (N is the number of features) that distinctly classifies the data points. Hyperplanes are decision boundaries that help classify the data points: data points falling on either side of the hyperplane are attributed to different classes. The SVM finds the hyperplane with the maximum margin, i.e., the maximum distance between the hyperplane and the nearest data points of both classes. Maximizing the margin provides some reinforcement so that future data points can be classified with more confidence. In our case, the feature vectors of all the images in the training set, along with their category labels, are given as input to the classification model. The algorithm finds the best hyperplane, i.e., the one with the maximum margin, and this hyperplane is then used to classify the images in the test set as either upstairs or downstairs.

- Multi-Layer Perceptron: A multi-layer perceptron is a fully connected neural network. It is composed of an input layer that receives the features, an output layer that makes a decision or prediction about the input, and an arbitrary number of hidden layers that form the true computational engine of the MLP. By the universal approximation theorem, an MLP with a single hidden layer, a suitable non-linear activation and enough hidden units can approximate any continuous function on a compact domain to arbitrary accuracy.

In our case, we use an MLP classifier with four hidden layers of 100, 50, 25 and 10 units respectively, with ReLU activations, in addition to the input and output layers.
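The three classifiers can be set up with scikit-learn as sketched below. The choice of library is an assumption, not the authors' code: only the MLP's four hidden layers (100, 50, 25, 10) and ReLU activation come from the text, while the SVM kernel, the input standardization and `max_iter` are illustrative choices, and `make_classifiers` is a hypothetical helper. The text describes the NB model as multinomial while Table 1 labels it Gaussian; scikit-learn's `GaussianNB` can be substituted for `MultinomialNB` if needed.

```python
from sklearn.naive_bayes import MultinomialNB
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC


def make_classifiers(random_state=0):
    """The three classifiers compared in this paper (settings partly assumed)."""
    return {
        # Multinomial NB requires non-negative features; raw depth values satisfy this,
        # so no scaling is applied before it.
        "NB": MultinomialNB(),
        # Kernel and C are not stated in the text; scikit-learn's defaults (RBF, C=1) are assumed.
        "SVM": make_pipeline(StandardScaler(), SVC()),
        # Four hidden layers of 100, 50, 25 and 10 ReLU units, as described above.
        # Standardizing the inputs first is an assumption that helps training converge.
        "MLP": make_pipeline(
            StandardScaler(),
            MLPClassifier(hidden_layer_sizes=(100, 50, 25, 10), activation="relu",
                          max_iter=2000, random_state=random_state),
        ),
    }
```

Each entry exposes the usual `fit(X, y)` and `predict(X)` methods, where `X` holds one 1D depth feature vector per row and `y` holds the up/down labels.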

III. RESULTS

We built a database of 170 images, 85 of upstairs and 85 of downstairs, using an Intel RealSense D435i camera [12, 13]. The dataset contains both tiled and marble stone stairs. Sample images of upstairs and downstairs from the database are shown in Figure 3. We considered images captured in different trials and of different types. The 1D feature vectors extracted from an image are shown in Fig 3. We used 70% of the data for training the classifiers and 30% for testing; 10-fold cross-validation was also performed.
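The evaluation protocol described here, a 70/30 hold-out split plus 10-fold cross-validation, can be sketched with scikit-learn. Stratifying the split by class is an assumption (the text does not say), `evaluate` is a hypothetical helper, and Gaussian NB is used only as a default estimator; any of the three classifiers can be passed in instead.

```python
import numpy as np
from sklearn.base import clone
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.naive_bayes import GaussianNB


def evaluate(X, y, clf=None, random_state=0):
    """Return (hold-out accuracy, array of 10 cross-validation accuracies)."""
    clf = GaussianNB() if clf is None else clf
    # 70/30 hold-out split; stratify keeps the up/down ratio equal in both parts (assumption).
    X_tr, X_te, y_tr, y_te = train_test_split(
        X, y, test_size=0.3, stratify=y, random_state=random_state)
    holdout = clone(clf).fit(X_tr, y_tr).score(X_te, y_te)
    # 10-fold cross-validation on the full set; for classifiers, cv=10 uses stratified folds.
    cv_scores = cross_val_score(clone(clf), X, y, cv=10)
    return holdout, np.asarray(cv_scores)
```

`clone` gives each run a fresh, unfitted copy, so the estimator passed in is never modified.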

In our experiments, the Hough transform θ parameter is restricted to the range 85° to 100° so that the railing and other edges besides the stair step edges (red lines) are not included, as shown in Figure 3. The accuracies of the three classifiers are as follows:

Table 1: Accuracy of the ML algorithms

| | Multilayer Perceptron (MLP) | Support Vector Machine (SVM) | Gaussian Naive Bayes (NB) |
|---|---|---|---|
| Overall | 85% | 60% | 70% |
| Upstairs | 70% | 80% | 40% |
| Downstairs | 100% | 40% | 100% |
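The per-class figures in Table 1 are the fraction of each class's test images classified correctly (the recall of that class). When both classes have the same number of test images, the overall accuracy equals the mean of the two per-class accuracies, and the table is consistent with this: (70% + 100%)/2 = 85% for the MLP, (80% + 40%)/2 = 60% for the SVM and (40% + 100%)/2 = 70% for NB. A small helper for computing these figures from predictions might look like this; its name and the label strings are hypothetical.

```python
import numpy as np


def per_class_accuracy(y_true, y_pred, classes=("upstairs", "downstairs")):
    """Return (overall accuracy, {class: fraction of that class predicted correctly})."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    # Per-class accuracy is the recall of each class: correct predictions among its true samples.
    per_class = {c: float(np.mean(y_pred[y_true == c] == c)) for c in classes}
    overall = float(np.mean(y_pred == y_true))
    return overall, per_class
```

For example, with four test images per class, one upstairs image misclassified and all downstairs images correct, the helper gives 75% and 100% per class and 87.5% overall, again the mean of the two.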

We observe that the SVM is biased towards upstairs, while naive Bayes is biased towards downstairs. The MLP with four hidden layers balances the two classes better than either NB or SVM.

Fig 2: Extraction of 1D feature vector for Tiled stairs

Figure 3: Plot of 1D feature vector for Tiled stairs

In the figures above, Hough lines are shown in blue and the 1D feature vector in pink. For both the tiled and the marble stairs, the upstairs curve of the 1D feature vector plot starts at around 3000 and tapers down more gradually, whereas the downstairs curve starts at around 4000 and falls off faster, with a steeper slope. These differences are what the classifier learns from when the 1D feature vector is given as input to the machine learning classifier [15].

Fig 2: Extraction of 1D feature vector for marble stairs

Figure 3: Plot of 1D feature vector for Tiled stairs

Read Images: Images are read using PIL, the Python Imaging Library, which gives the Python interpreter image editing capabilities. Its Image module provides a number of factory functions, including functions to load images from files and to create new images. PIL is significantly faster than OpenCV and IPP. PIL.Image.open() opens and identifies the given image file.
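A minimal reading sketch using Pillow, the maintained fork of PIL that is still imported as `PIL`. It assumes RGB frames are stored as ordinary image files and depth frames as single-channel 16-bit PNGs holding raw depth values; that storage format and the helper names `read_rgb` and `read_depth` are assumptions, not details from the text.

```python
import numpy as np
from PIL import Image


def read_rgb(path):
    """Load a color image as an H x W x 3 uint8 array."""
    # Image.open is lazy; convert("RGB") forces the pixel data to load and drops any alpha channel.
    with Image.open(path) as img:
        return np.asarray(img.convert("RGB"))


def read_depth(path):
    """Load a single-channel depth image (assumed 16-bit PNG) as an int32 array of raw depth values."""
    with Image.open(path) as img:
        # Casting to int32 gives the same dtype whether Pillow opens the file as "I;16" or "I".
        return np.array(img, dtype=np.int32)
```

Both helpers also accept an open binary file object in place of a path, since `Image.open` does.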

Conclusion

This study focuses on the real-time detection and classification of stairs into "up" and "down" categories, addressing the urgent need for autonomous navigation aids for visually impaired people. By applying the Hough transform to RGB images, the proposed method extracts relevant features from the 3D point cloud data obtained with an Intel RealSense camera. The experimental results show that the MLP performs noticeably better than the SVM, with an accuracy of 85% compared with 60% for the SVM. These results indicate that the proposed method is feasible for practical use and lay the groundwork for future advances in autonomous navigation systems for people with visual impairments. Future research will investigate improving accuracy using more sophisticated deep learning methods and additional datasets.

References

[1] B. Sharma and I. A. Syed, "Where to begin Climbing Computing start of stair position for robotic platforms," 2019 11th International Conference on Computational Intelligence and Communication Networks (CICN), Honolulu, HI, USA, 2019, pp. 110-116.

[2] S. Ponnada, S. Yarramalle and M. R. T. V., "A Hybrid Approach for Identification of Manhole and Staircase to Assist Visually Challenged," IEEE Access, vol. 6, pp. 41013-41022, 2018.

[3] A. L. F. Castro, Y. B. d. Brito, L. A. V. d. Souto and T. P. Nascimento, "A Novel Approach for Natural Landmarks Identification Using RGB-D Sensors," 2016 International Conference on Autonomous Robot Systems and Competitions (ICARSC), Braganca, 2016, pp. 193-198.

[4] A. Sinha, P. Papadakis and M. R. Elara, "A staircase detection method for 3D point clouds," 2014 13th International Conference on Control Automation Robotics & Vision (ICARCV), Singapore, 2014, pp. 652-656.

[5] Á. Csapó, G. Wersényi, H. Nagy et al., "A survey of assistive technologies and applications for blind users on mobile platforms: a review and foundation for research," J Multimodal User Interfaces, vol. 9, pp. 275-286, 2015.

[6] Q. Wang and M.-K. Kim, "Applications of 3D point cloud data in the construction industry: A fifteen-year review from 2004 to 2018," Advanced Engineering Informatics, vol. 39, pp. 306-319, 2019.

[7] M. Young, The Technical Writer's Handbook. Mill Valley, CA: University Science, 1989.

[8] A. Pérez-Yus, G. López-Nicolás and J. J. Guerrero, "Detection and Modelling of Staircases Using a Wearable Depth Sensor," Computer Vision – ECCV 2014, 2015.

[9] R. Munoz, X. Rong and Y. Tian, "Depth-aware indoor staircase detection and recognition for the visually impaired," 2016 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), Seattle, WA, 2016, pp. 1-6.

Copyright

Copyright © 2024 Dr Renuka Devi S M. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET65699

Publish Date : 2024-12-01

ISSN : 2321-9653

Publisher Name : IJRASET
