Ijraset Journal For Research in Applied Science and Engineering Technology

- Home / Ijraset

- On This Page

- Abstract

- Introduction

- Conclusion

- References

- Copyright

Social Engineering Attack in the Era of Generative AI

Authors: Nishant Kumar, Niyati Manojkumar Patel

DOI Link: https://doi.org/10.22214/ijraset.2025.66688

Certificate: View Certificate

Abstract

A significant cybersecurity risk arises when sophisticated artificial intelligence (AI) is combined with social engineering. The aim of this study was to assess how much the sophistication and skills of social engineering are enhanced through advanced AI techniques. The study found that generative AI has revolutionized social engineering in six key areas: multi-channel attack vectors, automated infrastructure for social engineering campaigns, advanced personalization, the development of evasion and obfuscation tactics, and the creation of hyper-realistic content. The study also proposed a framework to recognize the evolving threat landscape, termed the Generative AI Social Engineering Framework. It highlights how structured and organized AI can be leveraged in deceptive social engineering tactics by integrating elements from machine learning, cybersecurity, and human-computer interaction. Besides showing up the vulnerabilities, the research also lays out recommendations to safeguard organizations and individuals in order to attain digital resilience.

Introduction

I. INTRODUCTION

Rapid advances in generative AI applications are creating unparalleled challenges in cybersecurity landscapes. Generative AI technology, which creates extremely realistic text, voice, images, and even video, is revolutionizing the very industries which it threatens by creating avenues for its exploitation by malicious entities [1] [2]. The area at pressing stake for application appears to be social engineering and phishing attempts; this literary genre of the generative AI attack causes the manipulation method to be refined while the victim is deceived into releasing information known to sides apart. The manipulation of one sort or another takes place in one form or the other using social engineering for attack variations-namely, human-to-human, human-to-computer, and computer-to-computer-to fulfill the end placing reliance on the trust factor. Integrating generative AI into cybercriminal activities complicates challenges by enhancing credibility in messaging, mimicking human-like communication, and bypassing conventional detection systems. The ability of AI to replicate trust signals at scale poses significant risks to the digital environment, creating an urgent need for research to address these concerns.

The development of AI and ML technologies has revolutionized various industries, but they have also enabled cybercriminals to execute sophisticated attacks. This has intensified cyber crime, a major global issue. The World Economic Forum highlights cyberspace threats as top global risks, emphasizing the need for effective measures. AI/ML is effective in detecting and mitigating social engineering and phishing attacks, but their use to cause damage is concerning. Large models like GPT-4 and PaLM 2 automate social engineering campaigns, creating convincing phishing attacks. Open-source generative AI tools further exacerbate these risks, necessitating the identification of vulnerabilities and the development of countermeasures.

This study tackles a crucial question: In what ways can generative AI amplify the effectiveness of phishing and social engineering attacks?

By investigating the intersection of AI and cybersecurity, the research seeks to:

- Understand how cybercriminals utilize generative artificial intelligence to evolve and enhance their phishing and social engineering tactics.

- Analyze the impact of these AI-driven attacks on the current cybersecurity framework and introduce new challenges.

- Propose some possible solutions to cope with these advanced threats.

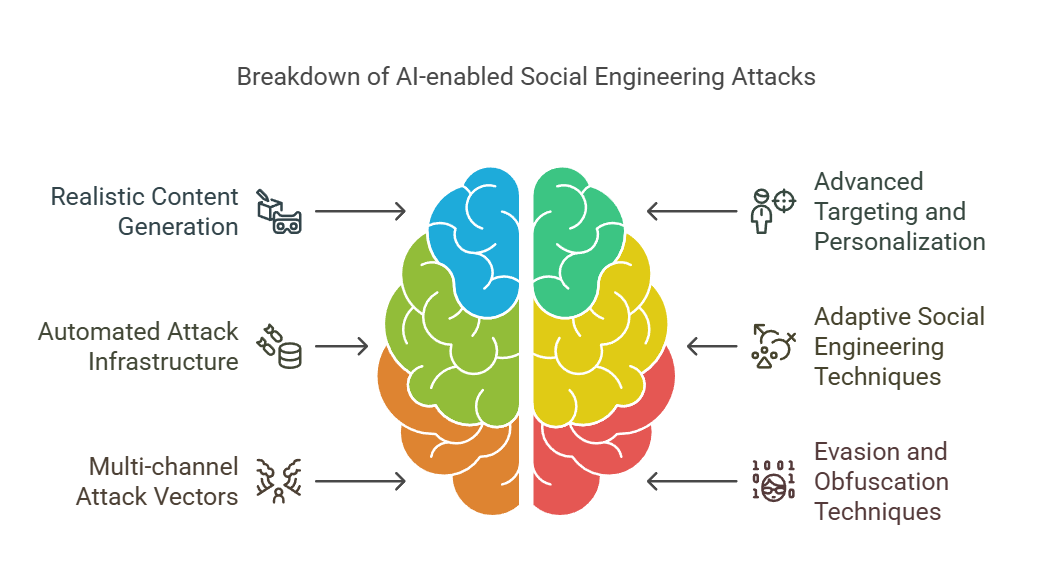

Realistic content creation, enhanced targeting and personalization, automated attack infrastructure, adaptive SE techniques, multi-channel channel attack vectors, and evasion and obfuscation techniques are the six pillars of our platform (see Figure 1). These subsections comprise the section on social engineering assaults allowed by generative AI.

Figure 1: Six-Pillar Structure for SE Attacks Powered by Generative AI

Figure 1: Six-Pillar Structure for SE Attacks Powered by Generative AI

II. METHODOLOGY

In an attempt to solve the issues and problems brought about by the application of generative AI in the context of social engineering (SE) attacks, this work is organized utilizing a systematic, multi-method research strategy. The technique includes the following crucial elements:

- Review: The study embarks on literature review in the area of social engineering (SE) and generative AI to arrive at sensible findings and identify the gaps and emerging trends.

- Capability: Generative AI's role in reinforcing SE strategies is assessed, focusing on the areas of content creation, targeting, automation, and evasion techniques.

- Framework Development: A framework is developed that relates generative AI capabilities to SE tactics, leading up to a 5-stage model for personal-level countermeasures of attack prevention, detection, and mitigation.

III.LITERATURE BACKGROUND

A. Discussion on Social Engineering vs Phishing

Fabrication is the foundation of social engineering and phishing assaults, which are launched when the attacker seems to be a trustworthy entity. Social engineering leverages human psychology and intuition to grab sensitive information and manipulate people into allowing coordinated actions, thereby bypassing any kind of security technology that might be in place. On the other hand, phishing, one example of social engineering, typically includes a fraudulent communication designed to deceive recipients into giving up confidential information such as access credentials or financial details. Various types of social engineering tactics are utilized to exploit victims in diverse scenarios. Table 1 below provides an overview of some common social engineering attack types:

TABLE 1

VARIOUS FORMS OF SOCIAL ENGINEERING ATTACKS

|

Type |

Description |

|

Pretexting |

Attackers fabricate a scenario to manipulate the target into providing information or access. |

|

Shoulder Surfing |

Attackers observe the target’s screen or keystrokes (e.g., passwords, PINs) in public spaces. |

|

Baiting |

Attacker entices the target with an appealing offer (e.g., free software) to compromise security. |

|

Type |

Description |

|

Phishing |

Attackers use deceptive emails to trick targets into revealing sensitive information. |

|

Tailgating |

Attackers gain physical access to restricted areas by exploiting trust, often by following authorized personnel. |

|

Quizzing |

Attackers use fake surveys or quizzes to extract sensitive information from the target. |

|

Scareware |

Attacker deceives the target into purchasing fake antivirus or security software. |

|

Dumpster Diving |

Attackers search through discarded materials, such as trash or recycled documents, to retrieve confidential information. |

|

Watering Hole |

Attacker infects a website frequently visited by the target, delivering malware or phishing attempts. |

|

Social-Media Engineering |

Attackers leverage social platforms to build trust, impersonate individuals, or spread malicious content. |

One of the most common and increasingly popular forms of cyber threats are phishing attacks. Phishing employs phishing attempts on the part of the attackers to declare and imitate genuine organizations, which in turn send fake emails or messages with malware that is likely to be embedded into them [3][4]. With the same aim in mind, it becomes easier to manipulate human behavior to make them give away confidential information or make actions to cause harm. Phishing attacks serve a variety of aims, ranging from infecting devices with malware and obtaining personal data, such as usernames and credit card information, to gaining control of accounts on the internet. It may even induce the receiver directly to commit an unauthorized financial transaction. Thus, phishing usually stands as a harbinger attack, allowing adversaries to access a device or account. Most often, then, the trust relationship within compromised accounts, and contacts are targeted as opportunities to facilitate progress in the aforementioned tasks.Phishing attacks generally have severe consequences, causing financial consequences, identity fraud, delicate information misuse, or very rarely, compromise to the infrastructure. Table 2 gives a more differentiated view of the various types of phishing attacks, each of which uses differences in perception and resources.

TABLE 2

DIFFERENT KINDS OF PHISHING ATTACKS

|

Phishing Type |

Description |

|

Email Phishing |

fraudulent emails that try to fool users into disclosing private information by impersonating trustworthy institutions, such banks or government organizations. |

|

Spear Phishing |

tailored phishing emails that target specific groups or individuals and frequently use personal data to boost their legitimacy. |

|

Whaling |

specialized spear phishing that aims to trick prominent people (such as CEOs and CFOs) into approving financial transactions or disclosing business data. |

|

Smishing |

misleading text messages (SMS) that aim to fool receivers into clicking on harmful links or disclosing private information. |

|

Vishing |

Voice-based phishing attacks conducted over the phone to extract confidential information. |

|

Pharming |

sending visitors to phony websites that imitate genuine ones in an attempt to trick them into entering login credentials or other private data. |

|

Clone Phishing |

A previously delivered legitimate email is cloned and resent with malicious modifications to deceive the recipient. |

|

Social Media Phishing |

Using social media platforms (e.g., Facebook, Instagram) to deceive users into revealing sensitive information or clicking malicious links. |

|

Search Engine Phishing |

Attackers create malicious websites optimized for search engines to attract victims looking for specific products or services. |

|

Pop-Up Phishing |

Pop-up windows that appear on reputable websites and are intended to deceive users into downloading harmful software or inputting private information. |

|

Credential Harvesting |

Fake login pages or portals designed to capture user credentials directly. |

|

Man-in-the-Middle |

Intercepting communications between users and websites to steal sensitive information in real-time. |

So, it can be comprehensively explained that phishing encompasses email phishing, spear phishing, and vishing according to the categories furnished in Table 2. Social engineering, on the contrary, refers to manipulation and deception in all its myriad forms given in Table 1, including pretexting, baiting, and scareware. They both rely on exploiting human vulnerability rather than technical weakness.

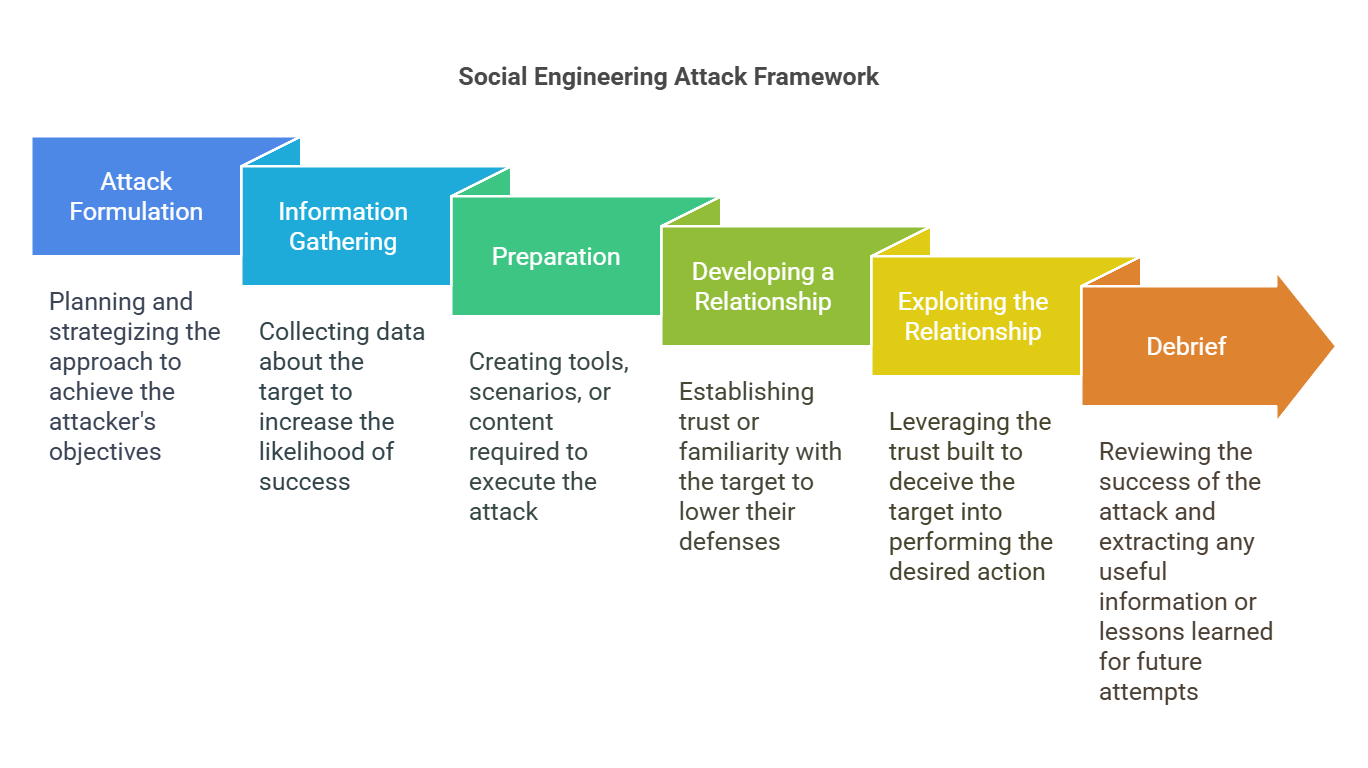

Mouton et al. [5] was able to provide a comprehensive model showing each of the stages involved in social engineering attacks, characterizing unique social engineering attacks from the perspective of a few social engineering incidents.Figure 2 displays the framework's high level model, which depicts the phases of a social engineering attack (attack design, information gathering, planning, connection building, relationship exploitation, and debriefing). In Section IV, this approach is used to help structure our analysis of Gen-AI's potential in social engineering attacks.

Figure 2: Mouton et al.'s Social Engineering Attack Framework was replicated [5].

Figure 2: Mouton et al.'s Social Engineering Attack Framework was replicated [5].

B. Artificial Intelligence Proficiencies

Over the years, the growing power of data, the successes in algorithms, and improvements in hardware have made artificial intelligence (AI) emerge as a potentially revolutionary technology in the making. One particular aspect of AI called machine learning (ML) aims to develop models and algorithms that distinguish patterns, predict future events, and make data-driven decisions. Machine learning itself comes in a variety of patterns, such as reinforcement learning, explicit learning, weak learning, or semi-supervised learning.[6] [7]

Deep learning, a sub-strip of machine learning, enables the training of multiple layers of neural networks. It has radically altered several domains like autonomous systems, natural language processing, and image recognition. Numerous industries, including healthcare, banking, and cybersecurity, are being impacted by AI and ML[8]. These sectors automate procedures, enhance decision-making, and extract knowledge from vast volumes of data by utilizing AI-powered systems.

A novel development in AI is Generative AI, as demonstrated by GAN (Generative Adversarial Network). These things can produce highly realistic artificial content and range from any kind of art, photographs, and videos [9]. Creating a Large Language Model (LLM), like GPT-4 and Palm 2, represents a rich standard for natural language generation, much of which is particularly influenced by the methodologies of the very same deep learning techniques for the development of human-like text . So, while breathtaking in what these technologies can do, they do raise all sorts of ethical and security issues as it becomes increasingly harder to make a distinction between AI-generated content and content that is human-generated. See Table 3 for a Broad Spectrum of AI Fields and Studies.

TABLE 3

IN A NUTSHELL, ARTIFICIAL INTELLIGENCE

|

Learning Type |

Description |

Example in Social Engineering Attack |

|

Supervised Learning |

Learn from labeled data to predict or classify new data. |

Phishing emails trained on known examples to detect and filter malicious emails. |

|

Unsupervised Learning |

Finds patterns in unlabeled data without predefined categories. |

Identifying patterns in user behavior to detect anomalies in network activity. |

|

Semi-Supervised Learning |

Combines labeled and unlabeled data to improve predictions. |

Using a mix of known and unknown attack data to improve fraud detection systems. |

|

Reinforcement Learning |

Learn by interacting with the environment and maximizing rewards. |

Simulating different social engineering attack scenarios to determine the most effective approach. |

|

Deep Learning |

Uses complex neural networks to process large amounts of data. |

Analyzing large datasets of employee interactions to detect phishing vulnerabilities. |

|

Generative AI |

Creates new content like text, images, or videos based on existing data. |

Generating convincing fake profiles or emails to impersonate a legitimate entity. |

The capabilities of AI and ML have paved the way for a range of tools that could be leveraged for social engineering and phishing attacks. These AI techniques enable attackers to craft increasingly sophisticated and convincing attacks. Below (Table 4)is an overview of how AI capabilities could be applied in this context:

TABLE 4

A SYNOPSIS OF POSSIBLE AI SKILLS IN THE SOCIAL ENGINEERING CONTEXT

|

Capabilities |

Explanation |

ML Techniques |

|

Generative AI |

Algorithms that generate new content like text, photos, and videos. Generative AI can create realistic phishing emails, phony profiles, and methods of attack that mimic human speech in social engineering. |

GANs, Transformer models |

|

AI Analysis |

Uses machine learning to analyze data, predict behaviors, and identify vulnerabilities in potential targets, refining attack strategies based on information gathered. |

Machine Learning, Classification, Regression, NLP |

|

AI Scraping |

Involves using automated tools to gather data from online sources such as social media and databases. This helps attackers build detailed profiles of targets. |

Web Scraping Libraries, Data Mining, Clustering Techniques |

|

AI Automation |

Automates repetitive tasks and communication, ensuring consistent interaction and maintaining the momentum of an attack while reducing detection risks. |

Rule-based Systems, Process Automation, Workflow Management |

|

AI Chatbots |

Mimics human speech in order to further the attack by fostering trust, obtaining information, and controlling emotions. |

LLMs, Contextual Chatbot Frameworks, Sequence-to-Sequence Models |

|

AI Coordination |

Coordinates tasks between different AI agents, ensuring smooth transitions and consistency across multiple stages of an attack. |

Multi-agent Systems, Coordination Algorithms, Task Allocation Methods |

|

AI Assessment |

Tracks and evaluates the success of an attack, identifying key outcomes like compromised accounts or data leaks, and refining future attacks based on performance metrics. |

Performance Metrics, Anomaly Detection, A/B Testing |

IV. GENERATIVE AI'S CAPABILITY REVIEW IN SOCIAL ENGINEERING

Generative AI is thought to play an important role in enhancing the effectiveness of SE and phishing attacks with its more convincing, personalized, and much more sophisticated deception. Integrating AI capabilities in various stages of the Social Engineering Attack Lifecycle (introduced by Mouton et al. [5]) could make these attacks more powerful. In this section, the concept of Generative AI and its applications across these Affiliate stages will be examined and specific attention will be given to those stages where the Generative AI could contribute towards the most exciting imaginative pathways.

Table 5 provides an overview of AI intervention at each level of the Social Engineering Attack Lifecycle, along with the relevant AI capabilities used in each stage. The section focuses on enhancing the phases in which generative AI is most widely utilized.

TABLE 5

AI APPLICATION AT EVERY STAGE OF THE PHASES OF THE SE ATTACK

|

AI Utilization of SE Attack |

Application |

AI Capabilities |

AI Role |

|

Formulation of an Attack |

|

|

|

|

Goal Identification |

creating possible attack targets by considering vulnerabilities and intended results. |

Generative AI |

Goal Generation |

|

Target Identification |

examining public data to find possible targets according to their roles and online personas. |

AI Analysis |

Target Detection |

|

Information Gathering |

|

|

|

|

Identify Potential Sources |

searching online and public sources for potential data sources. |

AI Scraping |

Data Collection |

|

Gather Information from Sources |

collecting appropriate information from multiple sources. |

AI Scraping |

Data Collection |

|

Assess Gathered Information |

automating the evaluation and compilation of data from many sources. |

AI Automation |

Data Assessment |

|

Preparation |

|

|

|

|

Combination and Analysis of Gathered Information |

Analyzing and processing gathered data to find trends and weaknesses. |

AI Analysis |

Data Analysis |

|

Development of an Attack Vector |

using the information acquired to create customized attack vectors, such as phishing emails. |

Generative AI |

Attack Design |

|

Develop Relationship |

|

|

|

|

Rapport Building |

conversing with the target in order to establish an emotional connection. |

AI Chatbot |

Rapport Building |

|

Establishment of Communication |

automating the process of reaching the target and keeping in touch. |

AI Automation |

Communication Management |

|

Exploit Relationship |

|

|

|

|

Prime the Target |

creating material that is in keeping with the relationship in order to influence responses. |

Generative AI |

Target Manipulation |

|

Elicitation |

using AI chatbots to gather information or actions by using psychological tactics. |

AI Chatbot |

Information Extraction |

|

Debrief Maintenance |

maintaining the existing relationship by automating recurring interactions. |

AI Automation |

Relationship Sustenance |

|

Transition |

allowing interactions to be seamlessly transferred from one attacker to another. |

AI Coordination |

Coordination of Attack |

A. Realistic Content Generation

Despite the ballooning technology aiming to polarize social feedbacks-LLMs ranging from GPT to BERT-have grown text generation into something with coherent contextual frame and tremendous credibility. LLMs could train on huge datasets that were large enough to capture even the slightest nuance of human language. Synthetic media creations like deep fakes and voice clonings have become increasingly more sophisticated with GANs and autoencoders. Criminals, especially cybercriminals in using these skills, create eerily humane impersonations that, in social engineering attacks, vastly increase their reach and make it almost impossible for the victims to realize fraud.

B. Advanced Targeting and Personalization

Recent research in AI-based profiling and sentiment analysis has enabled attackers to create detailed victim profiles using large datasets and machine learning models. Facilities like facial recognition capability, scraping social media, and the analysis of online behavior from websites like LinkedIn, Facebook, and Instagram have allowed attackers to spy on the daily life and patterns of behavior of individuals to personalize their attacks. In order to perform actual attacks, machine learning algorithms such as k-means clustering or decision trees categorize victims according to their electronic behaviors.A personalized message would very likely succeed in persuading a certain individual a priori, simply because it is a message containing personal information like the person's name or employer, making it all the more hard to be perceived as threatening.

C. Automated Attack Infrastructure

AI researchers are presently focusing on automating attack orchestration, meaning AI systems can automatically detect vulnerable targets and select optimal attack vectors. This process includes reconnaissance automation, where AI performs scanning on public databases and websites for sensitive information, and uses AI to predict which targets are more vulnerable. AI-powered platforms can indeed scale and adapt to attack infrastructures, bringing up completely different tactics as necessary. Automated penetration testing methodologies have proven that AI discovers susceptibility much more rapidly than the human hackers. Research is examining using cloud platforms to scale and coordinate attacks, allowing large-scale, effective, and extremely hard to be tracked without much human intervention.

D. Adaptive Social Engineering Techniques

The advancements in reinforcement learning (RL) and online learning enabled attackers with adaptive social engineering tactics. These systems can monitor a victim's behavior and adjust the attack strategy in real time through various emotional responses or trust levels. AI collects feedback, analyzing responses to emails or phone calls, and refines its approach, moving it closer to success. For example, if the first message is not acted upon, then AI could try a follow-up message with different phrasing or a more direct appeal. Periodic learning and adaptation make social engineering attacks more difficult to predict and counter, while real-time adaptation enhances the attack's authenticity and effectiveness.

E. Multi-channel Attack Vectors

Research in multi-modal AI systems has enabled attackers to integrate communication channels like email, SMS, social media, and voice calls into a unified attack strategy. By using natural language processing and machine learning, these systems ensure consistent messaging across platforms. AI-driven data synthesis techniques maintain coherence across channels, making the attack appear coordinated and credible. Studies show that multi-channel attacks increase success rates by engaging the victim through multiple touchpoints. Cross-platform AI integration research demonstrates how attackers synthesize information from various sources to craft a more convincing narrative, leading victims to perceive the attack as trustworthy.

F. Evasion and Obfuscation Techniques

Research in AI of adversarial machine learning paves way for methods for the attacker to evade conventional causal detection. The adversary examples can trick AI security devices into misthinking their assessment data. A polymorphic attack payload is exacted under intelligence through GANs without a steady form during every execution. Investigative studies into camouflage techniques provide insights regarding camouflage of deceptive patterns and concealment pathways for relaxing the attack as being benign. Consequent to some studies, they have proven that slight modifications to input data lead highly advanced security systems into decision errors. These evasion techniques impair the efficiency of signature or behavior-based defense solutions and lead to a high rate of attack success.

V. FRAMEWORKS AND MODELS

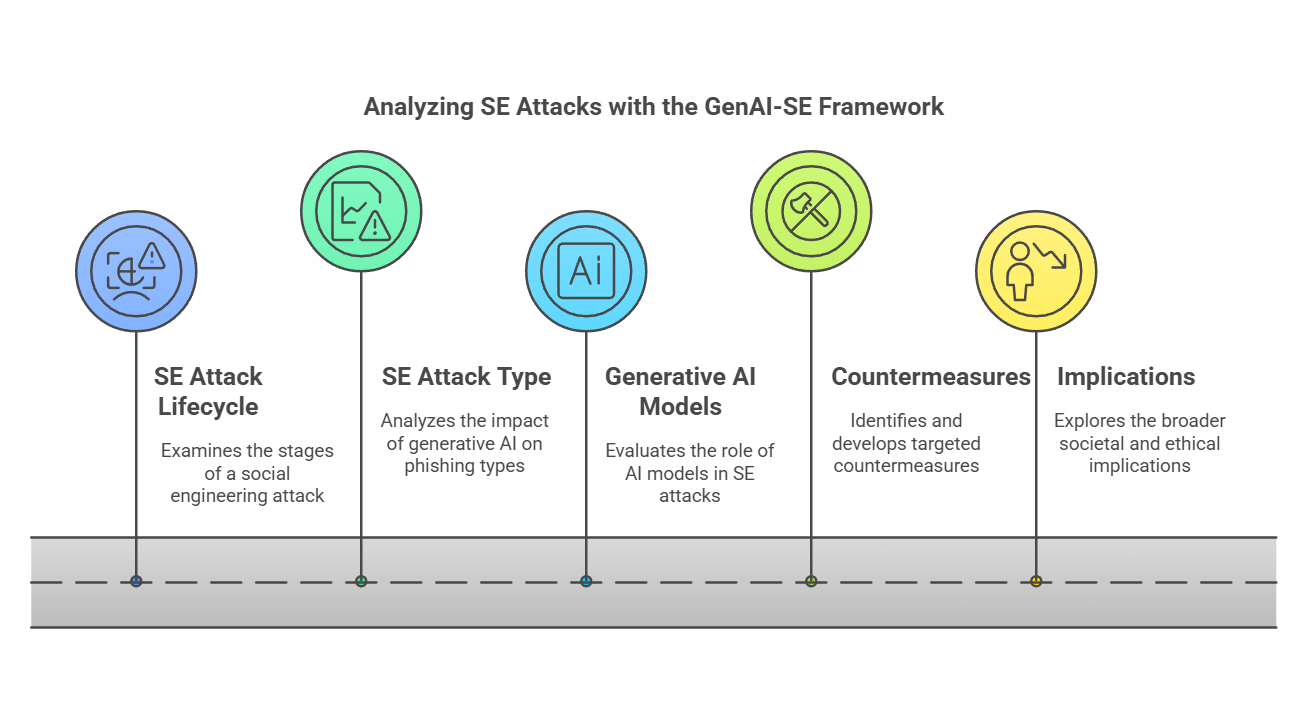

A. Generative AI Social Engineering Framework (GenAI-SE) - An Investigation and Analysis Model

A comprehensive and organized approach for examining the various facets of social engineering (SE) attacks and the developing role of generative artificial intelligence (GenAI) in strengthening these risks is provided by the GenAI-SE Framework (see Figure 3). With the rapid improvement of machine learning models, this paradigm emphasizes the growing hazards posed by generative AI in supplementing SE methods. Furthermore, it offers practical solutions for efficiently mitigating these dangers. The following is a detailed description of how this framework can be used to evaluate various aspects of SE assaults and the capabilities of generative AI:

Figure 3: Generative AI Social Engineering (GenAI-SE) Framework

Figure 3: Generative AI Social Engineering (GenAI-SE) Framework

- SE Attack Lifecycle: This framework would analyze the full SE attack lifecycle from conception and info gathering to preparation, relationship building, exploitation, debriefing, and goal satisfaction.

- SE Attack Types: The model will assess the effects of generative AI-powered SE attacks on various types of phishing, with an emphasis on threat multiplication and cost-effectiveness.

- Generative AI Models: The framework would carry out an evaluation of the generative AI models' benefits and issues, such as ChatGPT and Bard, in the context of SE attacks.

- Countermeasures: The software could help identify and design countermeasures tailored to the defense against SE attacks and could potentially include AI-based solutions.

- Implications: The growing interest would lead the framework to study broader societal, ethical, and legal impacts of the convergence between generative AI and social engineering, blooming bigger than mere threat intelligence or countermeasure exploration.

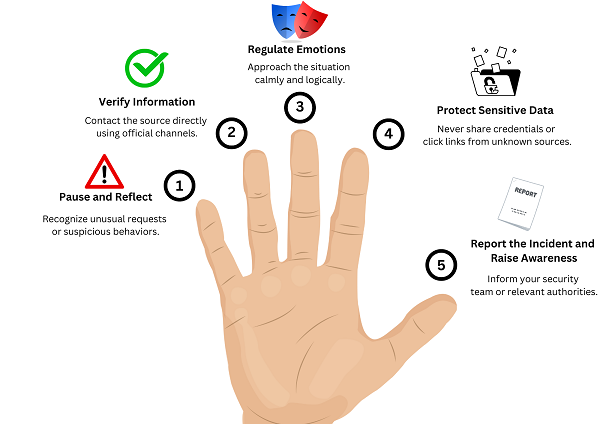

B. Five-Finger Approach to Combating Social Engineering

Moving Forward,this research presents a comprehensive solution for defending against social engineering attacks. By following a structured approach, individuals can effectively protect themselves through 5 key stages (See Figure 4) when confronted with a potential social engineering threat.

- Pause and Reflect (Thumb): Ask yourself if the request seems unusual or out of place. Look for red flags, such as urgency, an unknown sender, or unprofessional language.

- Verify the Information (Index Finger): Contact the sender directly using official channels (e.g., verified phone number or email) and cross-check details with trusted sources (e.g., company websites or databases).

- Regulate Emotions (Middle Finger): Stay calm and avoid impulsive reactions. Take a deep breath and approach the situation logically.

- Don't Give Away Sensitive Information (Ring Finger): Don't give away bank information, PINs, or credentials. Avoid open attachments or click links from anonymous sources without properly checking.

- Report the Incident and Raise Awareness (Pinky Finger): Notify your company’s security team, IT department, or a relevant cybercrime platform about the incident. Share key details such as the sender’s email address, message content, and any suspicious links or attachments. Ensure the incident is documented and spread awareness within the community to stop such threats early, minimizing the number of victims.

Figure 4: Five- Finger Approach to Combating Social Engineering

C. Counter Measures of AI

Cybercrime is a constantly evolving threat, with attackers and defenders locked in a continuous struggle. This makes it challenging to put in place surefire safeguards [10]. Cybersecurity countermeasures can be broadly classified into two categories: human-centered solutions (mostly user education) and technical solutions (such AI/ML and cryptography) [11][12]. Despite the availability of creative solutions, the industry is still concentrating on awareness-raising training initiatives to deal with the "people problem." Businesses of all sizes frequently use these tools, although they are typically useless against sophisticated, customized attacks. In addition to being risky, blaming end users for being targets of these assaults is simplistic. It is assumed that the issue would be resolved by increased awareness and training, yet this is inadequate for a number of reasons.

As AI technology progresses in the mimicking of human conversations, it will increasingly become a challenge to tell what content is real or not. There is always the deep fake becoming an option to make it hard to recognize if a video or audio is a hoax. Moving forward, the practice of truly feminine cyberattacks will require eternal vigilance for most people where they have to be extremely conscious to avoid deception while maintaining trust in the communication channel. In the long run, assessing the effectiveness of security awareness training (SAET) remains a big challenge. When users fall victim to attacks, it is often seen as a failure in training, which perpetuates the false belief that the victim is at fault. Table 6 contains a number of indicative proposals for specific countermeasures for each of the six pillars.

TABLE 6

COUNTERMEASURES FOR AI-GENERATED DECEPTION

|

Category |

Countermeasure |

Explanation |

|

Content Generation |

AI-Generated Content Detection Tools |

Tools like deepfake detection software and AI-generated text identification systems can detect unnatural patterns in content, reducing the impact of synthetic media. |

|

Advanced Targeting and Personalization |

Privacy and Data Protection Regulations |

Enforcing stricter data protection laws (e.g., GDPR) and limiting the amount of personal information available on social media can hinder profiling efforts. |

|

Automated Attack Infrastructure |

AI-Powered Intrusion Detection Systems |

AI-driven IDS can automate threat detection by identifying patterns of suspicious behavior and vulnerabilities, improving response time and detection efficiency. |

|

Category |

Countermeasure |

Explanation |

|

Adaptive Social Engineering Techniques |

Behavioral Analysis and Counteracting AI Systems |

Implement systems that monitor and analyze communication patterns for anomalies, preventing adaptive techniques from exploiting human behavior in real-time. |

|

Multi-channel Attack Vectors |

Cross-Platform Security Protocols |

Implement unified security measures across multiple platforms (e.g., email, social media, SMS) to prevent attackers from exploiting different channels. |

|

Evasion and Obfuscation Techniques |

Adversarial Training for Detection Systems |

Train AI systems to detect and respond to adversarial examples by creating models that can recognize and counteract obfuscation and evasion strategies. |

AI’s role in phishing highlights the need for innovative defenses. Machine learning models could help detect deceptive content in communications, and AI can also be used ethically to create cybersecurity training tools. As AI continues to evolve, it is critical to stay ahead of AI-driven threats and develop strategies to counter them. Mapping AI capabilities to social engineering attacks can reveal new areas for defense and research.

VI. DIRECTIONS FOR FUTURE RESEARCH

The risk posed by the emergence of highly autonomous social engineering bots highlights the urgent need to improve current defenses and create new ones. We suggest the following areas of investigation for further study to address these issues:

- User Awareness and Education: While "awareness training" alone isn’t a cure for AI-driven cyber-attacks, it's essential for combating emerging threats. Training programs, simulations, and intuitive tools can empower users to recognize and respond to new attack vectors effectively.

- Adversarial Machine Learning: Advancing adversarial machine learning techniques is vital for developing resilient AI models that can resist manipulation. Research should focus on algorithms and defenses against social engineering and phishing, strengthening AI systems' reliability in real-world applications.

- Active Deception Defense: Proactive defense strategies, leveraging natural language processing, machine learning-driven anomaly detection, and real-time analysis, are essential to disrupt deceptive attacks like phishing and social engineering. Research should focus on dynamic, real-time defensive tools for swift responses to evolving threats.

- Explainable AI for Threat Detection: Visibility of AI in threat detection is crucial. Explainable AI (XAI) allows security experts to better comprehend AI decision-making, discover flaws, and increase collaboration. Future research should concentrate on creating interpretable and practical XAI frameworks for real-world situations of use.

- Emerging Technologies: As technologies like chatbots, brain-computer interfaces, robotics, and quantum computing evolve, new social engineering vectors are likely to emerge. Research should explore the intersection of these innovations with social engineering tactics, anticipating potential vulnerabilities and creating defense strategies.

- AI-Driven Behavioral Profiling: Finding unusual behaviors becomes crucial as generative AI produces lifelike digital personas. In order to stop social engineering assaults, future research should concentrate on AI models that examine digital traces and behavioral patterns to identify hostile intent early.

Conclusion

Humans have traditionally been the weak link on a cyberchain, with often-severe vulnerability to social engineering (SE) attacks. These attacks, especially when powered by generative AI, are becoming more complex in targeting human behavior by exploiting their confidence. The more advanced AI becomes, the more ability it has to manipulate human decisions. This magnifies the exposure that humans are to cyber threats. It is pivotal to note that, for attacks to be successful on any large scale, they need to leverage the ordinary, less suspicious, segment of humanity, who are likely baited and lured, into their lyrical schemes. In order to make any particular point, it is reasoned that through the incorporation of Generative AI, social engineering and phishing can undergo a guise of transformation. In fact, Generative AIs greatly heighten the ability to employ Machine-Learning with a menacing effect for wisdom extraction from simulations. AI has generated realism through the content that is produced, personalization of campaigns, and automation of attacks on a wider basis. With these, cybercriminals can now carry out industrial-scale attack patterns that wait in the wings of a more sophisticated era for themselves. Therein more powerful AI becomes, and the more accessible with economically embedded misutilization, increased concern has gripped cybersecurity communities, industries, and individuals. Being on the brink of these new threats, we need to put forth efforts in countermeasures development and refinement. An action now is the shield against the inevitability of exponentially growing sophisticated AI-driven cyber threats.

References

[1] Yamin, M. M., Ullah, M., Ullah, H., & Katt, B. (2021). Weaponized AI for cyber attacks. Journal of Information Security and Applications, 57, 102722. https://doi.org/10.1016/j.jisa.2020.102722 [2] Schmitt, M. (2023). Securing the digital world: Protecting smart infrastructures and digital industries with artificial intelligence (AI)-enabled malware and intrusion detection. Journal of Industrial Information Integration, 36, 100520. https://doi.org/10.1016/j.jii.2023.100520 [3] S. Fahl, Web & Mobile Security Knowledge Area, The Cyber Security Body of Knowledge. (2021). www.cybok.org [4] Aleroud, A., & Zhou, L. (2017). Phishing environments, techniques, and countermeasures: A survey. Computers & Security, 68, 160–196. https://doi.org/10.1016/j.cose.2017.04.006 [5] Mouton, F., Malan, M. M., Leenen, L., & Venter, H. (2014). Social engineering attack framework. Information Security for South Africa. https://doi.org/10.1109/issa.2014.6950510 [6] Schmitt, M. (2022). Deep learning in business analytics: A clash of expectations and reality. International Journal of Information Management Data Insights, 3(1), 100146. https://doi.org/10.1016/j.jjimei.2022.100146 [7] Sutton, R., & Barto, A. (1998). Reinforcement Learning: An Introduction. IEEE Transactions on Neural Networks, 9(5), 1054. https://doi.org/10.1109/tnn.1998.712192 [8] LeCun, Y., Bengio, Y., & Hinton, G. (2015). Deep learning. Nature, 521(7553), 436–444. https://doi.org/10.1038/nature14539 [9] I.J. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, Y. Bengio, Generative Adversarial Networks, (2014). http://arxiv.org/abs/1406.2661. [10] Taddeo, M., McCutcheon, T., & Floridi, L. (2019). Trusting artificial intelligence in cybersecurity is a double-edged sword. Nature Machine Intelligence, 1(12), 557–560. https://doi.org/10.1038/s42256-019-0109-1 [11] A. Basit, M. Zafar, X. Liu, A.R. Javed, Z. Jalil, K. Kifayat, A comprehensive survey of AI-enabled phishing attacks detection techniques, Telecommun Syst. 76 (2021) 139–154. https://doi.org/10.1007/s11235-020-00733-2. [12] B. Naqvi, K. Perova, A. Farooq, I. Makhdoom, S. Oyedeji, J. Porras, Mitigation strategies against the phishing attacks: A systematic literature review, Comput Secur. 132 (2023). https://doi.org/10.1016/j.cose.2023.103387.

Copyright

Copyright © 2025 Nishant Kumar, Niyati Manojkumar Patel. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Download Paper

Paper Id : IJRASET66688

Publish Date : 2025-01-26

ISSN : 2321-9653

Publisher Name : IJRASET

DOI Link : Click Here

Submit Paper Online

Submit Paper Online