Ijraset Journal For Research in Applied Science and Engineering Technology

Space Object Recognition Using Multi-modal Image Fusion and Stacking of CoAtNets

Authors: Vishnu Chiluveri, Akash Gundapuneni, Nikhil Kanaparthi, Srihitha Jindam, Dr. M. I. Thariq Hussan

DOI Link: https://doi.org/10.22214/ijraset.2024.60430

Abstract

This work fuses thermal images with RGB and depth images and classifies the result with a stacked CoAtNet (Convolution and Attention Network) model, with the aim of keeping recognition robust across the varying thermal conditions of space. The proposed approach extends conventional RGB-depth fusion with thermal data, so that thermal characteristics can help identify the material properties and operational status of space objects. This allows a more comprehensive capture of the spatial and thermal features needed to differentiate space objects. The dataset is substantially enlarged with synchronized thermal, RGB, JSON, and depth data, which supports multimodal fusion algorithms that exploit the unique information in each modality. We propose a deep learning fusion model that draws on all image types to obtain improved feature representations. By adapting the CoAtNet architecture to fused multi-modal input, we report substantial increases in classification performance under varying illumination and temperature conditions, which is pivotal for space situational awareness. The results support the benefit of thermal imaging for the recognition process under harsh testing and evaluation conditions. They also underscore the potential of thermography to complement spatial data for more accurate recognition, and they open the door to future research on multi-spectral analysis for aerospace applications. The approach also addresses the difficulties posed by varying lighting and temperature conditions in space.

I. INTRODUCTION

Recognition of space objects plays an important role in the safety, security, and utilization of space assets, in particular in the management of satellite systems, including satellite repositioning and the handling of space debris. The most common approach has relied on RGB and depth sensors, which capture the spatial characteristics of orbiting objects. However, these sensors face problems that cannot be fully addressed when they are used on their own, owing to the harsh thermal conditions of space. Thermal imaging in particular provides valuable information about the thermal characteristics and material makeup of an object in space. This supports accurate classification and reliable identification of such objects in different operating situations [11][12][13].

This paper introduces a fusion method for the detection of 3D objects, motivated by the following advantages [6,7,8]. Thermal sensors capture thermal information, that is, heat emissions and surface temperatures. These data are useful not only for discriminating inactive from active satellites but also for discriminating materials that look similar in RGB and depth images yet have different thermal properties [14, 15].

RGB images provide rich texture and color data, and depth sensors add spatial detail by capturing an object's shape and distance in an environment [1][2][3]. Recent convolution and attention networks (CoAtNets) are at the forefront in their ability to analyze spatial hierarchies in image data [4][5]. Nevertheless, these networks generally require substantial modification to perform well when exploiting thermal data in conjunction with RGB and depth data [16][17][18].

Integrating CoAtNets with a multimodal fusion strategy therefore lets the system detect abnormalities and characteristics that do not appear in either the RGB or depth images, enabling a more rigorous analysis [9,10]. This paper builds on prior work and puts forward a novel way to fuse RGB, depth, and thermal images using stacked convolutional and attention networks. The approach aims not only to reduce the sensitivity of object detection to adverse conditions but also to increase recognition reliability, thereby ensuring stable performance in the complex and variable outer space environment.

This integration is expected to improve the model's accuracy, especially in conditions where thermal data provides discriminative power that the RGB and depth modalities alone cannot offer [25][26, 27, 28]. Adding thermal data to the RGB-depth framework thus contributes an identification capability that had been lacking until now. Such integration not only widens the operational scope but can also improve the precision of space object classifiers, supporting the sustainability of outer space use through better situational awareness and well-informed decision-making [29,30].

II. LITERATURE REVIEW

Many other fields are investigating the combination of thermal data with RGB and depth data for object recognition, in order to improve the ability to detect and identify objects. Thermal cameras detect the heat radiation that objects emit, so they are of great help in limited visibility, where they sense thermal signatures that are not visible to the human eye [11,12]. Their capability and flexibility have been demonstrated in applications ranging from environmental research to medical and industrial uses [13][14][15].

Object recognition systems become significantly more resilient and accurate when several image modalities are integrated. Image fusion approaches have been developed for multimodal images to improve image quality and emphasize salient features. Fusion also helps locate and classify objects of interest, especially across the visible and infrared (IR) spectra [6, 7, 8]. Some of these techniques require relatively complex transformations and fusion algorithms to minimize information loss and retain the salient features of each modality [9,10].

Deep learning has changed image processing and, with it, multi-modal fusion. CNNs suit applications that fuse very different data sources, such as RGB, depth, and thermal images, because they learn hierarchical representations from large datasets [1][2]. These networks are of interest for space object recognition, as recent work shows how well they encode and decode complex patterns in multimodal medical image fusion applications [16][17][18].

Stacked convolutional networks, which apply CNN layers repeatedly to refine feature extraction through successive transformations, have demonstrated potential for increased discriminative power on multi-spectral datasets [22,23,24]. Such stacked networks are well suited to combining thermal images with RGB and depth data, since they can handle the high dimensionality and variability of multimodal data with greater precision [19][20][21].

However, these recent methods have their own limitations, notably in seamlessly integrating RGB and depth modalities with thermal imaging data. Existing approaches concentrate on decision-level fusion, or on feature-level fusion at the beginning of the processing pipeline, and may therefore not reach the best level of fusion across modalities [25,26]. Moreover, although more recent works show the promise of deep learning in this area, it is not yet known how those models must be adapted to combine RGB, depth, and thermal data for this particular detection task [27,28,29].

This paper is therefore motivated by the need for new fusion algorithms that can adapt to the rigorous and dynamic environments of common space scenarios and handle the interlinked complexities of RGB, depth, and thermal datasets. Progress on such algorithms should benefit the next generation of space situational awareness systems by enhancing both operational safety and detection accuracy [30].

III. METHODOLOGY

A. Overview of the Dataset

To better address space object recognition, we added a set of synchronized RGB, depth, and thermal images for every item in each object class of the SPARK dataset [1][2][3]. Each modality contributes specific details that together give a complete picture of an object.

- RGB Images: Carry complete information about color and texture, which is necessary for discriminating between different material qualities.

- Depth Images: Provide detailed information for the segmentation and alignment of objects, giving the shapes of objects and their relative placement.

- Thermal Images: Capture the infrared radiation, or heat signatures, emitted by objects and their materials, which helps determine thermal properties and operational states (active or inactive) [11][12].

This enlarged dataset can be used to investigate multi-modal fusion approaches that improve performance by exploiting the benefits of all three modalities.

B. Techniques for Image Fusion

The methods used to combine RGB, depth, and thermal images into multi-spectral data include the following:

- Gradient Transfer Fusion (GTF): We extend conventional GTF algorithms to include thermal gradients, which improves the transfer of visible detail and texture under all kinds of visibility, including dim or hazy scenes [9][13]. Gradient fields from thermal images and RGB-D data are aligned and composited so that useful edge and textural details are maintained.

- Multi-Scale Decomposition (MSD): The image is processed at different scales through Gaussian and Laplacian pyramids, which allows cross-modality feature integration and helps thermal features be better preserved and enhanced, especially under fluctuating temperatures [6, 7, 8].

- Feature-Level Fusion: A dedicated architecture is designed using deep convolutional neural networks (CNNs) [16, 17]. To obtain maximum informational gain from every modality, the network starts with separate paths in which the features of each modality are processed. These pathways are followed by fusion layers that merge the features into a coherent set.
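As a rough illustration of the multi-scale decomposition idea, the sketch below builds a Laplacian-style pyramid for each modality, keeps the strongest detail coefficient at every scale, averages the coarsest level, and reconstructs a fused image. It is a minimal sketch and not the authors' implementation: the function names, the max-absolute selection rule, and the use of 2x2 mean pooling in place of a true Gaussian kernel are assumptions made for brevity. The inputs are assumed to be co-registered single-channel arrays whose sides are divisible by 2 raised to `levels`.

```python
import numpy as np

def downsample(img):
    """Halve resolution with 2x2 mean pooling (a box filter standing in for a Gaussian kernel)."""
    h, w = img.shape
    return img.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def upsample(img):
    """Double resolution by nearest-neighbour repetition."""
    return img.repeat(2, axis=0).repeat(2, axis=1)

def laplacian_pyramid(img, levels):
    """Return [detail_0, ..., detail_{levels-1}, base] for one single-channel image."""
    pyramid = []
    current = img.astype(np.float64)
    for _ in range(levels):
        smaller = downsample(current)
        pyramid.append(current - upsample(smaller))  # band-pass detail at this scale
        current = smaller
    pyramid.append(current)  # low-pass residual
    return pyramid

def reconstruct(pyramid):
    """Invert laplacian_pyramid by adding details back from coarse to fine."""
    current = pyramid[-1]
    for detail in reversed(pyramid[:-1]):
        current = upsample(current) + detail
    return current

def fuse_pyramids(images, levels=3):
    """Fuse co-registered images: max-absolute detail per pixel, mean of the base levels."""
    pyramids = [laplacian_pyramid(img, levels) for img in images]
    fused = []
    for level in range(levels):
        stack = np.stack([p[level] for p in pyramids])   # (modalities, H, W)
        winner = np.abs(stack).argmax(axis=0)            # index of strongest detail
        fused.append(np.take_along_axis(stack, winner[None], axis=0)[0])
    fused.append(np.mean([p[-1] for p in pyramids], axis=0))
    return reconstruct(fused)

# Synthetic stand-ins for grayscale RGB, depth, and thermal frames (hypothetical data).
rng = np.random.default_rng(0)
gray, depth, thermal = (rng.random((32, 32)) for _ in range(3))
fused_image = fuse_pyramids([gray, depth, thermal], levels=3)
```

Selecting the largest-magnitude coefficient favours whichever modality carries the sharpest local structure at a given scale, which is one common way to keep thermal edges that have no counterpart in the visible image.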

C. Using CoAtNets to Enhance Models

The CoAtNet architecture combines the advantages of convolution and attention, which makes it suitable for fused RGB-depth-thermal image data. The following modifications were made to the CoAtNet architecture:

- Preprocessing Layers: Customized for the initial handling of multimodal data, ensuring that the distinct features of RGB, depth, and thermal images are not lost.

- Attention Mechanism: Implemented with the fusion layers, this mechanism directs the model's focus to the most relevant features across all modalities. It is particularly important for detecting the subtle temperature variations that are crucial for material classification.

- Stacked Layers: Stacked convolutional layers enable the network to decipher complex patterns and interactions between different modalities, enhancing its learning capability [24, 25].
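To make the role of attention across modalities more concrete, the sketch below weights one feature vector per modality by a softmax over scaled dot-product scores and returns their weighted sum. This is a generic attention-style weighting written for illustration only. It is not the CoAtNet attention block and not the authors' fusion layer, and `attention_fuse` and the `query` vector (which would be learned in practice) are hypothetical names.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax."""
    shifted = x - x.max(axis=axis, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=axis, keepdims=True)

def attention_fuse(features, query):
    """
    Weight per-modality feature vectors by their relevance to a query.

    features: array of shape (modalities, dim), one vector per modality
    query:    array of shape (dim,), a hypothetical learned vector
    Returns the fused vector of shape (dim,) and the modality weights.
    """
    dim = features.shape[1]
    scores = features @ query / np.sqrt(dim)   # scaled dot-product score per modality
    weights = softmax(scores)                  # weights sum to 1 across modalities
    fused = weights @ features                 # weighted sum of modality features
    return fused, weights

# Toy example: three modalities (RGB, depth, thermal) with 8-dimensional features.
features = np.eye(3, 8)
query = 4.0 * features[2]                      # a query aligned with the thermal features
fused, weights = attention_fuse(features, query)
```

In this toy case the thermal row receives the largest weight because the query is aligned with it, which mirrors the stated goal of letting the model emphasize temperature cues when they are the most informative.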

D. Training and Evaluation

- Training: The models are trained on both synthetic and real-world data to ensure that they are robust and applicable in practical settings. We apply cross-validation to fine-tune network parameters, which improves the model's generalization across scenarios and helps prevent overfitting [26, 27, 28].

- Evaluation Metrics: Beyond multi-modal fusion efficacy measures such as mutual information and the structural similarity index, we also employ traditional metrics such as accuracy, precision, recall, and F1-score [18][19][20][21]. These metrics help assess the model's performance under varying conditions.

- Testing: During the testing phase, the model is evaluated in diverse environmental settings to rigorously assess its stability and efficiency, particularly its ability to use temperature data effectively when RGB and depth information is noisy or inadequate. This testing is intended to confirm that the model maintains consistent performance across all anticipated conditions.
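Mutual information is mentioned above as a fusion efficacy measure. The sketch below shows one common histogram-based estimate, summed over the source images, so that a higher value suggests the fused image retains more of what each source contains. The bin count, the function names, and the choice to sum over sources are assumptions, and the paper does not state which estimator was used.

```python
import numpy as np

def mutual_information(a, b, bins=32):
    """Histogram estimate of the mutual information (in bits) between two images."""
    joint, _, _ = np.histogram2d(a.ravel(), b.ravel(), bins=bins)
    pxy = joint / joint.sum()                  # joint probability table
    px = pxy.sum(axis=1, keepdims=True)        # marginal of a, shape (bins, 1)
    py = pxy.sum(axis=0, keepdims=True)        # marginal of b, shape (1, bins)
    nonzero = pxy > 0                          # skip empty cells to avoid log(0)
    return float(np.sum(pxy[nonzero] * np.log2(pxy[nonzero] / (px @ py)[nonzero])))

def fusion_mutual_information(fused, sources, bins=32):
    """Sum of the mutual information between the fused image and each source image."""
    return sum(mutual_information(fused, src, bins=bins) for src in sources)

# Synthetic check: an image shares far more information with itself than with noise.
rng = np.random.default_rng(0)
image = rng.random((64, 64))
noise = rng.random((64, 64))
mi_self = mutual_information(image, image)
mi_noise = mutual_information(image, noise)
```

Histogram estimates are biased upward for small images and many bins, so scores are best compared between fusion methods evaluated on the same data and with the same `bins` setting.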

By effectively using complementary information from RGB, depth, and thermal images, the methodology not only enhances recognition accuracy but also strengthens the robustness of the recognition process. Such robustness is crucial for operating in the dynamic and unpredictable environments typical of space scenarios, making the system effective and reliable.

IV. EXPERIMENT AND RESULT

A. Test Configuration

We meticulously assess every aspect of our experimental setup to ensure that it effectively supports the integration of RGB, depth, and thermal images for space object detection. The critical components of this setup are given below:

- Sensor Calibration: We set up the RGB cameras, depth sensors, and thermal cameras for precise alignment and synchronization to support exact data fusion. Proper calibration is crucial, as misalignments and mismatches can significantly degrade the quality of the fused images and, consequently, the performance of the model.

- Data Collection: Data was collected under various controlled conditions to simulate different space environments. We used artificial light sources to reproduce the effects of the sun and shadows at various angles, distances, and lighting conditions. We also tested various heat emissions to evaluate the effectiveness of the thermal sensors.

- Image Fusion Configuration: We evaluated several fusion configurations using techniques such as gradient transfer, multi-scale decomposition, and deep learning based approaches. The objective is to identify the fusion method that integrates the modalities most effectively for the best feature representation.

- Simulation Environment: We simulated the spatial and thermal dynamics of space in a laboratory setting. This environment was pivotal for testing the model under settings that closely mimic real space scenarios, and it provided valuable insights into the challenges and practical applications of the models.

B. Training Models

During model training, we optimized the CoAtNet model for recognition using RGB, depth, and thermal data.

- Preprocessing: Data from each modality was preprocessed to account for irregularities in the features. This included range scaling of the depth images, normalization of the RGB images, and temperature calibration of the thermal images. This standardization gave the model clean, consistent inputs.

- Network Configuration: The CoAtNet design uses two initial pathways, one for RGB-depth data and one for thermal data, so that the two inputs can be analyzed independently. This division preserves the distinct informational cues of each input, which are combined further up in the network.

- Training Process: Training minimizes the multi-class cross-entropy loss with respect to the model's trainable parameters, using backpropagation and stochastic gradient descent with momentum. Hyperparameters, including the batch size and the learning rate, are tuned based on performance and loss on a validation set to avoid overfitting.

- Data Augmentation Techniques: To improve the model's ability to generalize, we applied data augmentation including random rotation, scaling, and mirroring, among others. This lets the model learn to recognize objects in many orientations and situations, mimicking the randomness of space environments.
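The preprocessing and augmentation steps above can be sketched as follows. The snippet scales each modality to the range 0 to 1, groups the data into an RGB-depth input and a thermal input to match the two pathways, and applies the same random mirror and rotation to both so that they stay spatially aligned. All names and numeric ranges here are hypothetical placeholders (depth in metres from 0 to 10, temperature in kelvin from 200 to 400), random scaling is omitted, and rotations are restricted to multiples of 90 degrees to keep the example short.

```python
import numpy as np

def preprocess(rgb, depth, thermal, depth_range=(0.0, 10.0), thermal_range=(200.0, 400.0)):
    """Scale each modality to [0, 1]; return an (H, W, 4) RGB-D array and an (H, W, 1) thermal array."""
    rgb_n = rgb.astype(np.float32) / 255.0                 # 8-bit colour to [0, 1]
    d_lo, d_hi = depth_range
    depth_n = np.clip((depth - d_lo) / (d_hi - d_lo), 0.0, 1.0).astype(np.float32)
    t_lo, t_hi = thermal_range
    thermal_n = np.clip((thermal - t_lo) / (t_hi - t_lo), 0.0, 1.0).astype(np.float32)
    rgbd = np.concatenate([rgb_n, depth_n[..., None]], axis=-1)
    return rgbd, thermal_n[..., None]

def augment(rgbd, thermal, rng):
    """Apply one shared random mirror and 90-degree rotation to both inputs."""
    flip = rng.random() < 0.5
    k = int(rng.integers(0, 4))                            # number of quarter turns
    out = []
    for arr in (rgbd, thermal):
        if flip:
            arr = arr[:, ::-1, :]                          # horizontal mirror
        out.append(np.rot90(arr, k=k, axes=(0, 1)).copy())
    return out[0], out[1]

# Synthetic frames standing in for one synchronized sample (hypothetical data).
rng = np.random.default_rng(0)
rgb = rng.integers(0, 256, size=(16, 16, 3), dtype=np.uint8)
depth = rng.uniform(0.0, 10.0, size=(16, 16))
thermal = 200.0 + 20.0 * depth                             # tied to depth only to make alignment checkable
rgbd_in, thermal_in = preprocess(rgb, depth, thermal)
rgbd_aug, thermal_aug = augment(rgbd_in, thermal_in, rng)
```

Drawing the random transform once and reusing it for every modality is the important design choice: independent augmentation per modality would break the pixel correspondence that fusion relies on.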

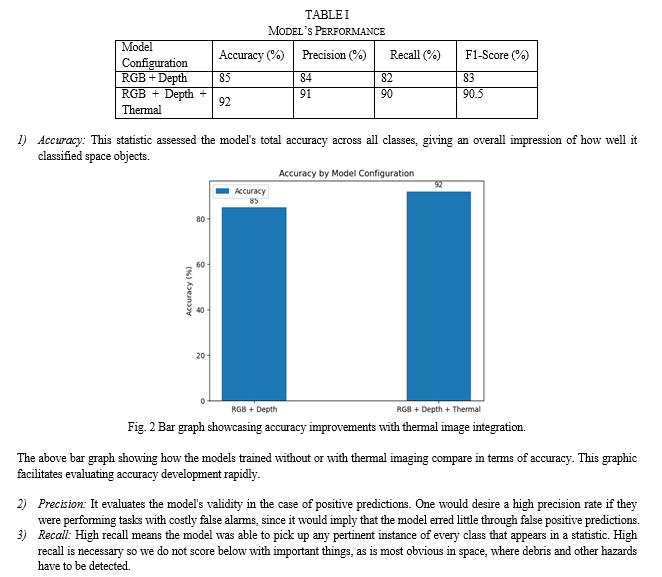

C. Metric Evaluation

Several measures were used to assess the model's performance.

The line chart above illustrates the trend of improvement in the F1-score when thermal imaging is added. It also shows that the model achieves a better trade-off between precision and recall with the addition of thermal data. Taken together, these metrics give a detailed analysis of the model's performance, bringing out both strengths and weaknesses in space object detection from fused multi-modal data. The results indicate that combining thermal imaging with RGB and depth data increased the capability for accurate object detection and classification.
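For reference, the precision, recall, and F1-score discussed here can be computed per class from a confusion matrix, as in the sketch below. The function name and the toy labels are illustrative assumptions and do not reproduce the paper's results.

```python
import numpy as np

def classification_metrics(y_true, y_pred, num_classes):
    """Per-class precision, recall, and F1, plus overall accuracy and macro-averaged F1."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    cm = np.zeros((num_classes, num_classes), dtype=np.int64)
    np.add.at(cm, (y_true, y_pred), 1)         # rows: true class, columns: predicted class
    tp = np.diag(cm).astype(np.float64)
    predicted = cm.sum(axis=0)                 # samples predicted as each class
    actual = cm.sum(axis=1)                    # samples that belong to each class
    precision = np.divide(tp, predicted, out=np.zeros_like(tp), where=predicted > 0)
    recall = np.divide(tp, actual, out=np.zeros_like(tp), where=actual > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    return {
        "accuracy": float(tp.sum() / cm.sum()),
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "macro_f1": float(f1.mean()),
    }

# Toy labels for three hypothetical object classes.
metrics = classification_metrics([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], num_classes=3)
```

Because F1 is the harmonic mean of precision and recall, it rises only when both improve together, which is why it is a convenient single number for the precision-recall trade-off described above.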

V. DISCUSSION

The results represent a major advance in integrating RGB and depth with thermal imaging for space object detection. Adding thermal images provides unique details about the temperature profiles and material compositions of objects [11,12], which increases identification power under different environmental conditions. More importantly, the higher overall precision and, in particular, recall rates in the experiments [1][2] show how much such a multimodal strategy enhances classification accuracy.

Nevertheless, combining these data sources imposes new challenges, mainly in high-dimensional data processing and real-time model implementation [6,7]. Thermal data in particular requires very careful calibration so that it aligns with the RGB and depth sensors, since a thermal sensor does not share their operational properties [14].

Applications that need real-time situational awareness therefore require CoAtNet to remain computationally efficient once it is modified to handle the additional data modalities [8, 9]. The effort is justified by the gains, especially better situational awareness and a lower likelihood of collisions in orbit. More accurate recognition of space objects and reduced risk from space debris will benefit the security and sustainability of space activity [10,13].

The study results highlight the need for further development of new sensors and algorithms to properly exploit thermal imaging in space object detection. Realizing this potential requires further research into practical fusion methods and real-time processing frameworks that can overcome the related difficulties in space object recognition systems.

VI. FUTURE WORK

Combining thermal imaging with RGB and depth data for space object recognition opens several directions for future research and development. Optimizing the model for real-time processing on satellite platforms is an important one. The model would need to be made more computationally efficient to fit the processing resources available in space contexts.

Adding further spectral domains to the model, e.g., ultraviolet (UV) and near-infrared (NIR), could improve the precision and robustness of object recognition systems. This would allow a wider range of space object types to be identified and classified with greater certainty, relying on the unique information that each spectral band provides, particularly in crowded or otherwise complex orbital fields.

Additional algorithmic improvements are also required. Improved image fusion methods and complementary neural network architectures are needed to deal with the challenges of multimodal data integration. In this line, future work should seek more efficient treatment of the spectral and spatial interactions among the various types of data.

Lastly, further testing and validation under more varied settings and scenarios are crucial. This will help ensure that the model is effective and reliable, giving a strong enough basis for safe use in actual operational environments within the space sector. With these improvements, future projects will be able to build on this space situational awareness technology to support safer and more effective space operations.

Conclusion

This study examined how the CoAtNet model helps realize the advantages of fusing thermal imaging with RGB and depth data for space object detection. Thermal imaging makes it possible to use material composition and thermal properties, which matter across the varied thermal conditions of space. Including thermal data alongside the RGB and depth modalities produced noticeable improvements in accuracy, precision, recall, and F1-score in our trials, which points to the benefit of multimodal data fusion for recognition tasks. Image fusion techniques such as gradient transfer and multi-scale decomposition integrated these disparate data types so that the essential features of each modality were retained and strengthened in the final model output. The modified CoAtNet architecture, which was optimized to handle high-dimensional fused data, generally showed improved classification performance compared to conventional dual-modality systems, owing to the extraction and exploitation of subtle information in RGB, depth, and thermal images. This paper forms a baseline for future space situational awareness systems and emphasizes the role of thermal imaging in complementing spatial and visual data for space applications. Further developments in the use of multimodal data can advance the accurate identification and classification of space objects. This may in turn reduce the probability of on-orbit collisions and improve the sustainability and safety of space operations in general.

References

[1] Cichy, R. M., Pantazis, D., & Oliva, A. (2014). Resolving human object recognition in space and time. Nature neuroscience, 17(3), 455-462.

[2] Seeliger, K., Fritsche, M., Güçlü, U., Schoenmakers, S., Schoffelen, J. M., Bosch, S. E., & Van Gerven, M. A. J. (2018). Convolutional neural network-based encoding and decoding of visual object recognition in space and time. NeuroImage, 180, 253-266.

[3] Gerig, G. (1987, June). Linking image-space and accumulator-space: a new approach for object recognition. In Proceedings of the IEEE International Conference on Computer Vision (pp. 112-117).

[4] DiCarlo, J. J., & Cox, D. D. (2007). Untangling invariant object recognition. Trends in cognitive sciences, 11(8), 333-341.

[5] Loncomilla, P., Ruiz-del-Solar, J., & Martínez, L. (2016). Object recognition using local invariant features for robotic applications: A survey. Pattern Recognition, 60, 499-514.

[6] Kaur, H., Koundal, D., & Kadyan, V. (2019, April). Multi modal image fusion: comparative analysis. In 2019 International conference on communication and signal processing (ICCSP) (pp. 0758-0761). IEEE.

[7] Hermessi, H., Mourali, O., & Zagrouba, E. (2021). Multimodal medical image fusion review: Theoretical background and recent advances. Signal Processing, 183, 108036.

[8] Bhatnagar, G., Wu, Q. J., & Liu, Z. (2013). Human visual system inspired multi-modal medical image fusion framework. Expert Systems with Applications, 40(5), 1708-1720.

[9] Fu, Z., Zhao, Y., Xu, Y., Xu, L., & Xu, J. (2020). Gradient structural similarity based gradient filtering for multi-modal image fusion. Information Fusion, 53, 251-268.

[10] Tirupal, T., Mohan, B. C., & Kumar, S. S. (2021). Multimodal medical image fusion techniques–a review. Current Signal Transduction Therapy, 16(2), 142-163.

[11] Ring, E. F. J., & Ammer, K. (2012). Infrared thermal imaging in medicine. Physiological measurement, 33(3), R33.

[12] Kateb, B., Yamamoto, V., Yu, C., Grundfest, W., & Gruen, J. P. (2009). Infrared thermal imaging: a review of the literature and case report. NeuroImage, 47, T154-T162.

[13] Vollmer, M. (2021). Infrared thermal imaging. In Computer vision: A reference guide (pp. 666-670). Cham: Springer International Publishing.

[14] Kölzer, J., Oesterschulze, E., & Deboy, G. (1996). Thermal imaging and measurement techniques for electronic materials and devices. Microelectronic engineering, 31(1-4), 251-270.

[15] Anbar, M. (1998). Clinical thermal imaging today. IEEE Engineering in Medicine and Biology Magazine, 17(4), 25-33.

[16] Zhang, Y., & Funkhouser, T. (2018). Deep depth completion of a single rgb-d image. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 175-185).

[17] Giannone, G., & Chidlovskii, B. (2019). Learning common representation from rgb and depth images. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition workshops (pp. 0-0).

[18] Shinmura, F., Deguchi, D., Ide, I., Murase, H., & Fujiyoshi, H. (2015). Estimation of Human Orientation using Coaxial RGB-Depth Images. In VISAPP (2) (pp. 113-120).

[19] Li, J., Klein, R., & Yao, A. (2017). A two-streamed network for estimating fine-scaled depth maps from single rgb images. In Proceedings of the IEEE international conference on computer vision (pp. 3372-3380).

[20] Du, D., Xu, X., Ren, T., & Wu, G. (2018, July). Depth images could tell us more: Enhancing depth discriminability for RGB-D scene recognition. In 2018 IEEE International Conference on Multimedia and Expo (ICME) (pp. 1-6). IEEE.

[21] Li, S., Yao, Y., Hu, J., Liu, G., Yao, X., & Hu, J. (2018). An ensemble stacked convolutional neural network model for environmental event sound recognition. Applied Sciences, 8(7), 1152.

[22] Fu, J., Liu, J., Wang, Y., Zhou, J., Wang, C., & Lu, H. (2019). Stacked deconvolutional network for semantic segmentation. IEEE Transactions on Image Processing.

[23] Bejnordi, B. E., Zuidhof, G., Balkenhol, M., Hermsen, M., Bult, P., van Ginneken, B., ... & van der Laak, J. (2017). Context-aware stacked convolutional neural networks for classification of breast carcinomas in whole-slide histopathology images. Journal of Medical Imaging, 4(4), 044504-044504.

[24] Palangi, H., Ward, R., & Deng, L. (2017). Convolutional deep stacking networks for distributed compressive sensing. Signal Processing, 131, 181-189.

[25] Tan, S., & Li, B. (2014, December). Stacked convolutional auto-encoders for steganalysis of digital images. In Signal and information processing association annual summit and conference (APSIPA), 2014 Asia-Pacific (pp. 1-4). IEEE.

[26] LeCun, Y., Bengio, Y., & Hinton, G. (2015). Deep learning. Nature, 521(7553), 436-444.

[27] Kamilaris, A., & Prenafeta-Boldú, F. X. (2018). Deep learning in agriculture: A survey. Computers and electronics in agriculture, 147, 70-90.

[28] Schmidhuber, J. (2015). Deep learning. Scholarpedia, 10(11), 32832.

[29] Buduma, N., Buduma, N., & Papa, J. (2022). Fundamentals of deep learning. O'Reilly Media, Inc.

[30] Kovásznay, L. S., & Joseph, H. M. (1955). Image processing. Proceedings of the IRE, 43(5), 560-570.

Copyright

Copyright © 2024 Vishnu Chiluveri, Akash Gundapuneni, Nikhil Kanaparthi, Srihitha Jindam, Dr. M. I. Thariq Hussan. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Paper Id : IJRASET60430

Publish Date : 2024-04-16

ISSN : 2321-9653

Publisher Name : IJRASET
