Ijraset Journal For Research in Applied Science and Engineering Technology


SPARSHA-AR: An Android Application for the Visualization of Aero Engine Component Assemblies and Sub-Assemblies Using an Augmented Reality Environment

Authors: Saban ., Dr. Naveen Kumar S.K

DOI Link: https://doi.org/10.22214/ijraset.2023.54548


Abstract

In this project, we apply the concept of Augmented Reality (AR) to display aero engine component assemblies and sub-assemblies. The components are displayed on an Android smartphone in a more engaging way, and the user can interact with them using an Android smartphone or a Windows PC. The application also displays information about the selected model while simultaneously playing a voice read-out of the model's complete description.


I. INTRODUCTION

The aero engine components designed by GTRE (Gas Turbine Research Establishment) are 3D models that can be viewed in the real world on smartphones using the latest available technology. Most smartphones on the market run the Android OS, and many reputed organizations now develop their own Android applications, either for internal use or for the public. GTRE is one such reputed organization in India and makes use of the latest technologies for various purposes. There is therefore a need for an Android application that can display the 3D models of aero engine component assemblies and sub-assemblies. In this dissertation, an attempt has been made to develop SPARSHA-AR, an Android application for the visualization of aero engine component assemblies and sub-assemblies using an Augmented Reality environment.

This application is an attempt to display aero engine component assemblies and sub-assemblies in an Augmented Reality environment using an Android smartphone. It combines the features of an Android application with augmented reality to display 3D models of aero engine components on the smartphone. The software is also intended to be portable, so that it can run on any high-end smartphone with sufficient processing capability and the Android operating system. The aim of this project is to visualize GTRE's Stereolithography (STL) files, which are first converted to the Filmbox (FBX) file format so that they can be used in our Augmented Reality Android app. Augmented Reality is a relatively recent but already large field. Although AR does not yet have a large market share, most of its current applications have only just emerged from the prototyping stage.
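As a rough illustration related to the STL-to-FBX step, the sketch below inspects a binary STL file before conversion and reports its triangle count and bounding box, which gives an early indication of how heavy a model will be (the Conclusion notes that very large models were the main difficulty). It is a minimal Python sketch rather than the project's C#, it handles only the binary STL variant (not ASCII STL), and the function name `read_binary_stl` is hypothetical; the FBX conversion itself is not shown.

```python
import struct

def read_binary_stl(data: bytes):
    """Parse a binary STL buffer and return (triangle_count, bbox_min, bbox_max)."""
    # Binary STL layout: 80-byte header, little-endian uint32 triangle count,
    # then 50 bytes per triangle: normal (3 float32), three vertices
    # (9 float32) and a uint16 "attribute byte count".
    if len(data) < 84:
        raise ValueError("buffer too short for a binary STL header")
    (count,) = struct.unpack_from("<I", data, 80)
    expected = 84 + 50 * count
    if len(data) < expected:
        raise ValueError(f"expected {expected} bytes, got {len(data)}")
    if count == 0:
        return 0, None, None
    lo = [float("inf")] * 3
    hi = [float("-inf")] * 3
    for i in range(count):
        values = struct.unpack_from("<12fH", data, 84 + 50 * i)
        vertices = values[3:12]  # skip the facet normal, drop the attribute field
        for v in range(3):
            for axis in range(3):
                c = vertices[3 * v + axis]
                lo[axis] = min(lo[axis], c)
                hi[axis] = max(hi[axis], c)
    return count, tuple(lo), tuple(hi)
```

For example, a buffer holding one triangle with vertices (0, 0, 0), (1, 0, 0) and (0, 2, 0) yields `(1, (0.0, 0.0, 0.0), (1.0, 2.0, 0.0))`.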

II. VIRTUAL REALITY

Virtual Reality (VR), also referred to as immersive multimedia or computer-simulated life, replicates an environment that simulates physical presence in places in the real world or in imagined worlds. Virtual reality can recreate sensory experiences, including virtual taste, sight, smell, sound and touch.

III. AUGMENTED REALITY

Augmented Reality (AR) is a direct or indirect live view of a physical, real-world environment whose elements are augmented (or supplemented) by computer-generated sensory input such as video, graphics or GPS data. In general, Augmented Reality may also be referred to as Mixed Reality or Mediated Reality: the combination of virtual objects or a virtual world scene with the real-world scene.

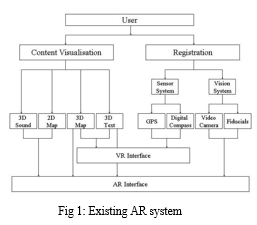

With Augmented Reality (AR) technology, information about the real world can be digitally manipulated. Figure 1 shows the schematic diagram of an existing AR system that combines a Virtual Reality (virtual object) environment with the real-world scene.

A. Aim of Augmented Reality [1]

- To create a system in which the user cannot differentiate between the virtual world and the real world.

- To use virtual content within the real world with a rich user experience.

- To integrate AR into day-to-day activities to reduce human effort.

IV. TYPES OF AUGMENTED REALITY

Augmented Reality can be classified into two types:

- Markerless

- Marker-based

A. Markerless

In markerless AR, the image target is acquired from a cloud database, and augmentation can be achieved at any specific location. The device's location is obtained using GPS; this location data is then matched against the cloud database, and real-time augmentation is achieved.
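The GPS matching step described above can be sketched as a nearest-neighbour search by great-circle (haversine) distance. This is a minimal illustration under stated assumptions, not how any particular SDK implements markerless AR: the anchor records standing in for the cloud database, the 50 m acceptance radius and the function names are all hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in metres

def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two lat/lon points given in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

def match_location(device_lat, device_lon, anchors, radius_m=50.0):
    """Return the name of the nearest anchor within radius_m, or None.

    `anchors` stands in for records fetched from a cloud database:
    a list of (name, lat, lon) tuples (hypothetical schema).
    """
    best_name, best_dist = None, radius_m
    for name, lat, lon in anchors:
        d = haversine_m(device_lat, device_lon, lat, lon)
        if d <= best_dist:
            best_name, best_dist = name, d
    return best_name
```

Only an anchor close enough to the device triggers augmentation; if none lies within the radius, nothing is displayed.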

B. Marker-based

In marker-based AR, an image acts as a marker to which virtual objects are assigned. The image is detected by the camera used by the application. This camera, known as the ARCamera, detects markers that carry virtual data. When a detected marker matches a marker in the application's database, the virtual content appears as if it were present above the image in the real world.

V. PROPOSED SYSTEM

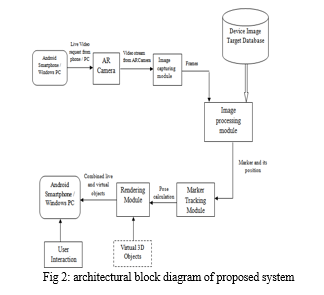

The proposed system is an application for visualizing aero engine components in an Augmented Reality environment using an Android smartphone or a Windows PC. It is a marker-based AR application developed with the Vuforia AR SDK. Figure 2 shows the architecture of the application's AR system.

A. ARCamera

The ARCamera is simply the device camera, activated when the user starts the application. The user only has to start the camera, which then records every frame of the real world like a video. Each camera frame is automatically stored in temporary memory in a device-dependent format.

B. Image Capturing Module

The input to this module is provided by the ARCamera. Every frame captured by the camera is converted into a binary image.
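As a minimal sketch of turning a captured frame into a binary image, the Python function below converts each RGB pixel to luminance with the ITU-R BT.601 weights and applies a single global threshold. The SDK's actual preprocessing is not documented here; the fixed threshold of 128 and the nested-list frame layout are assumptions, and practical trackers often use adaptive thresholds to cope with uneven lighting.

```python
def to_binary(frame_rgb, threshold=128):
    """Convert an RGB frame (rows of (r, g, b) tuples, 0-255) to a binary image.

    Luminance uses the ITU-R BT.601 weights; pixels whose luminance is at or
    above `threshold` become 1, the rest 0.
    """
    binary = []
    for row in frame_rgb:
        out_row = []
        for r, g, b in row:
            luma = 0.299 * r + 0.587 * g + 0.114 * b
            out_row.append(1 if luma >= threshold else 0)
        binary.append(out_row)
    return binary
```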

C. Image Processing Module

The input to this module is the binary image provided by the image capturing module. This binary image is processed by an image processing algorithm built into the SDK, which detects the marker. When a marker is detected, the application searches for a match in the device target database.
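The SDK's recognition algorithm is proprietary, so the sketch below only illustrates the general idea of the database search: a detected binary feature descriptor is compared with each stored target descriptor by Hamming distance, and the closest one within a threshold is taken as the match. Nearest-neighbour matching of binary descriptors is a common technique, but it is an assumption here, not the SDK's documented method; the 8-bit descriptors, target names and `max_distance` threshold are hypothetical (real systems compare many longer descriptors per frame).

```python
def hamming(a: int, b: int) -> int:
    """Number of differing bits between two integer-encoded binary descriptors."""
    return bin(a ^ b).count("1")

def find_target(descriptor: int, database: dict, max_distance: int = 10):
    """Return the target name whose stored descriptor is closest to `descriptor`,
    or None if no stored descriptor lies within `max_distance` bits."""
    best_name, best_dist = None, max_distance + 1
    for name, stored in database.items():
        d = hamming(descriptor, stored)
        if d < best_dist:  # strictly closer, and therefore within max_distance
            best_name, best_dist = name, d
    return best_name
```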

D. Marker Tracking Module

When a marker from the video stream matches one in the database, this module starts calculating the relative pose of the marker in the real world. The relative pose data generated here is the input to the rendering module; hence this module is termed the “heart and soul” of this augmented reality application.
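The relative pose produced by the tracking module can be understood as a rotation R and a translation t that take points from the marker's coordinate frame into the camera's frame. The Python sketch below shows how such a pose, combined with standard pinhole-camera intrinsics, places a marker-space point on the screen. It illustrates how the pose is consumed, not how the SDK estimates it; the function name and the parameter values in the example are hypothetical.

```python
def project_point(point_marker, R, t, fx, fy, cx, cy):
    """Project a 3D point from marker coordinates into pixel coordinates.

    R (3x3 nested lists) and t (3-vector) form the marker-to-camera pose;
    fx, fy, cx, cy are pinhole camera intrinsics in pixels.
    """
    # Camera-space point: X_c = R * X_m + t
    xc = [sum(R[i][j] * point_marker[j] for j in range(3)) + t[i] for i in range(3)]
    if xc[2] <= 0:
        raise ValueError("point is behind the camera")
    # Perspective division followed by the intrinsic mapping to pixels
    u = fx * xc[0] / xc[2] + cx
    v = fy * xc[1] / xc[2] + cy
    return u, v
```

For instance, with an identity rotation, t = (0, 0, 2), fx = fy = 800 and principal point (320, 240), the point (0.1, 0, 0) lands at approximately (360, 240).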

E. Rendering Module

The Rendering Module takes two inputs:

- Relative pose data from the Marker Tracking Module.

- Virtual 3D objects from the application database.

When the pose data confirms a matched marker, the application combines the real world with the virtual world by fetching the corresponding 3D objects from the database and displaying them on the device screen.
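A minimal sketch of the rendering step's bookkeeping, assuming the pose arrives as a 4x4 homogeneous marker-to-camera matrix: the matched target name selects a 3D asset, and the pose is composed with a per-model offset before the model's vertices are transformed. The target names, file names and the `place_model` helper are hypothetical. In a Unity/Vuforia project this composition is typically handled by the scene graph (the model is placed as a child of the image-target object), so explicit code like this would not normally be written.

```python
# Hypothetical mapping from image-target names to 3D model assets.
MODEL_FOR_TARGET = {
    "fan_assembly_marker": "fan_assembly.fbx",
    "combustor_marker": "combustor.fbx",
}

def mat_mul(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]

def transform_vertex(m, v):
    """Apply a 4x4 transform to a 3D vertex, assuming the bottom row is (0, 0, 0, 1)."""
    x, y, z = v
    return tuple(m[i][0] * x + m[i][1] * y + m[i][2] * z + m[i][3] for i in range(3))

def place_model(target_name, pose, model_offset, vertices):
    """Return (asset, camera-space vertices) for a matched target, or None.

    `pose` is the 4x4 marker-to-camera matrix from tracking; `model_offset`
    positions the model relative to the marker (e.g. lifting it above the image).
    """
    asset = MODEL_FOR_TARGET.get(target_name)
    if asset is None:
        return None
    model_view = mat_mul(pose, model_offset)  # offset first, then the marker pose
    return asset, [transform_vertex(model_view, v) for v in vertices]
```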

F. User Interaction

User interaction is enabled through multi-touch gesture support on the Android smartphone. The user can touch the virtual 3D object and rotate it about the X, Y and Z axes.
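One way to turn touch input into rotations about the three axes is sketched below in Python: a one-finger drag rotates the model about X and Y, and a two-finger twist rotates it about Z. The sensitivity constant, sign conventions and function names are assumptions for illustration; the paper does not specify its gesture mapping.

```python
import math

DEGREES_PER_PIXEL = 0.3  # hypothetical drag sensitivity

def drag_to_rotation(dx, dy):
    """Map a one-finger drag (pixels) to rotation increments (degrees) about X and Y.

    Horizontal movement spins the model about its vertical (Y) axis and
    vertical movement tilts it about the X axis; the signs are a convention.
    """
    return {"x": dy * DEGREES_PER_PIXEL, "y": -dx * DEGREES_PER_PIXEL}

def twist_to_rotation(p1_old, p2_old, p1_new, p2_new):
    """Map a two-finger twist to a rotation increment (degrees) about the Z axis."""
    a_old = math.atan2(p2_old[1] - p1_old[1], p2_old[0] - p1_old[0])
    a_new = math.atan2(p2_new[1] - p1_new[1], p2_new[0] - p1_new[0])
    delta = math.degrees(a_new - a_old)
    # Wrap into (-180, 180] so crossing the atan2 branch cut does not cause a jump.
    while delta <= -180:
        delta += 360
    while delta > 180:
        delta -= 360
    return delta
```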

G. Device Target Database

The database containing the image targets must be created and downloaded using the Target Manager on the Vuforia SDK website. This database asset contains an XML configuration file that holds the trackable information. While the application is running, these assets are read by the image processing module through the Vuforia SDK.
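The trackable information in the database's XML file can be inspected with a standard XML parser. The sketch below assumes a layout with `ImageTarget` elements carrying a `name` and a space-separated `size` attribute; the element names in the sample resemble Vuforia device-database exports, but this is an assumption and should be checked against the actual downloaded file. The sample target name and dimensions are hypothetical.

```python
import xml.etree.ElementTree as ET

def list_image_targets(xml_text):
    """Return {target_name: (width, height)} from a device-database XML string.

    Assumes ImageTarget elements with `name` and a space-separated `size`
    attribute, as in SAMPLE below; adjust if the exported file differs.
    """
    root = ET.fromstring(xml_text)
    targets = {}
    for node in root.iter("ImageTarget"):
        width, height = (float(s) for s in node.get("size").split())
        targets[node.get("name")] = (width, height)
    return targets

# Hypothetical sample configuration (names and sizes are illustrative only).
SAMPLE = """<QCARConfig>
  <Tracking>
    <ImageTarget name="fan_assembly_marker" size="247 173" />
  </Tracking>
</QCARConfig>"""
```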

The tools used to develop the application are listed below:

- Tools: Unity 3D, Blender

- SDK: Qualcomm Vuforia AR SDK, Android SDK

- IDE: MonoDevelop

- Programming Language: C#

VI. SOFTWARE REQUIREMENTS

This section describes the system requirements in terms of functionality, constraints, supportability, performance and usability.

A. Specific Requirements

Here we list the functional and non-functional requirements.

1. Functional Requirements

A functional requirement concerns a specific function of the application we want to build. Such requirements may cover technical details, data manipulation and processing, and functionality details.

a. Image Recognition: the process of recognizing predefined 2D image targets using predefined image processing algorithms. It is used to display the 3D objects on the mobile phone's screen.

b. Multi-Touch Gesture Support on Android Smartphones: touch sensors are activated by the touch of a finger. This is used to rotate the augmented 3D objects about the x-, y- and z-axes.

c. Screen Capture: the process of capturing the scene currently displayed on the screen as an image and storing it in device memory. This function allows the captured view of the 3D object to be used when the application is not running.

d. Display Model Information: the application displays information about the 3D model and also plays a voice read-out of the model description.

e. Initialize Camera: the application initializes the camera so that Image Recognition can be performed.

2. Non-functional Requirements

A non-functional requirement describes a required quality of the system, its development process, the service or any other aspect of development, rather than a specific function.

a. Navigation: this function is used to toggle between multiple models within the list of available models.

b. Interface Design: The interface should be intuitive to use and help the user find the desired functions fast.

c. Button Design: buttons should be large enough that the user has no problem navigating the application.
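The Navigation requirement, together with Display Model Information, can be sketched as a small class that cycles through the available models in both directions and exposes the current model's name and description. The class name, model names and descriptions are hypothetical, and the voice read-out (for example, passing the description to a text-to-speech engine) is outside the scope of this sketch.

```python
class ModelNavigator:
    """Cycle through a list of (name, description) models in either direction."""

    def __init__(self, models):
        if not models:
            raise ValueError("at least one model is required")
        self._models = list(models)
        self._index = 0

    @property
    def current(self):
        """The (name, description) pair of the model currently displayed."""
        return self._models[self._index]

    def next_model(self):
        # Wrap around to the first model after the last one.
        self._index = (self._index + 1) % len(self._models)
        return self.current

    def previous_model(self):
        # Python's % keeps the index non-negative, so this wraps to the last model.
        self._index = (self._index - 1) % len(self._models)
        return self.current
```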

VII. TESTING RESULT

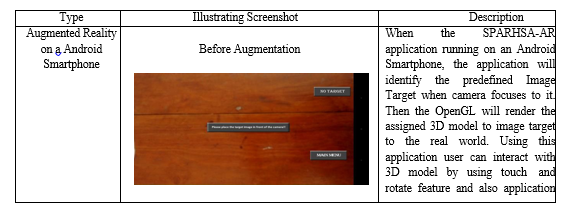

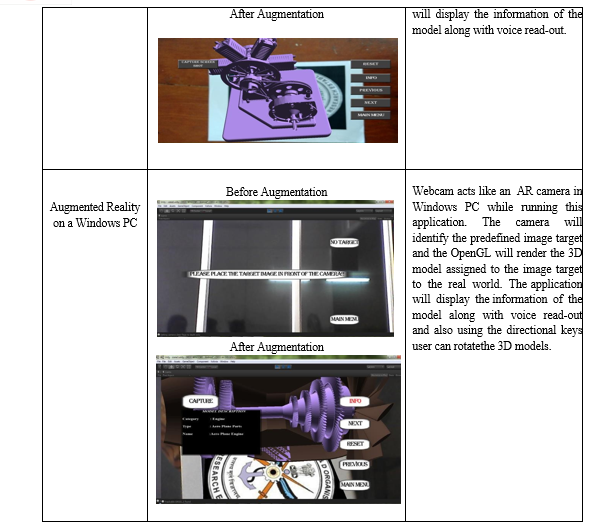

This section gives a general overview of the test specification for “SPARSHA-AR: An Android application for the visualization of aero engine component assemblies and sub-assemblies using an Augmented Reality environment”.

A. Major Constraints

This section lists some constraints that make it almost impossible to perform every test exhaustively.

- The main limitation is time. Since testing is as important as developing the software, a great deal of work has to be done on it.

- There are currently few users of the software who could report bugs while using it.

Testing never ends; it just passes from the developer to the user. Every time the user runs the program, a test is effectively being conducted. Nevertheless, the requirements specified by the user have been satisfied in this phase, and all the tests produced the desired results.

| Test Case | Input | Expected Output | Test Report |
|---|---|---|---|
| 1 | Invalid image target / no image target | The following warning messages are expected: 1. "No Target Found" 2. "Please place the target image in front of the camera." | Success |
| 2 | Valid image target | The following outputs are expected, displayed above the image | Success |

VIII. FUTURE WORK

The additional features and further improvements to be made to the SPARSHA-AR application are listed below:

- This application has been built for Android using Unity 3D. However, Augmented Reality applications for Android can also be developed in Eclipse or Android Studio by plugging Qualcomm Vuforia into the IDE.

- An Augmented Reality app developed using Eclipse/Android Studio can incorporate more native Android features, such as gesture support and sensor functionality.

- This application displays a 3D model when the device recognizes a 2D image target. In future, the reverse process could be implemented: when the device recognizes a real-world 3D model, the application would project a 2D image containing information such as the stress and strain analysis of that model.

- Qualcomm Vuforia supports further attractive features such as virtual buttons, smart terrain, video playback, cloud recognition and object recognition. These features can also be implemented using Unity 3D, whereas the Eclipse plug-in does not support some of them.

- The same application can also be built for iOS smartphones if the iOS SDK is installed on the development system.

- At present, only .fbx, .dae, .3ds, .blend, .obj and .dxf 3D files can be displayed by this application. Future work should therefore support as many other 3D file formats as possible, such as .stl and .vrml.

- Future work should optimize the application for handling large 3D files, so that the application size can be reduced without loss of information.

- Future work could implement the application using DAQRI 4D Studio, Aurasma, Metaio Creator, Layar Creator, Augment Dev, Blippar or EON Reality. These software packages are designed for creating Augmented Reality applications, but most of them are either paid or available only as trial versions.

- The graphical user interface (GUI) of this application is also designed in Unity 3D, so it may not look like a native Android application GUI. In future work, it is therefore recommended to use Eclipse/Android Studio to develop this kind of application with a more attractive GUI or menu system.

- Animation is one of the most attractive features an Augmented Reality application can offer. In future, it is therefore recommended to replace the existing 3D models with animated models so that the application is more engaging than the present version.

IX. RESULT TABLE WITH SCREENSHOTS AND DESCRIPTION

X. ACKNOWLEDGEMENT

We would like to thank Mr. K Vijayananda, Sc ‘F’, GTRE, for providing the opportunity to develop this application using the aero engine components, and for his continuous support and valuable guidance.

Conclusion

In this work, we have described the implementation of Augmented Reality (AR) technology on an Android smartphone, with a run-time environment on a Windows PC. The application is developed for high-end Android smartphones because it is best viewed on a high-density (HD) display. We developed the application using a free-to-use Augmented Reality toolkit, the Qualcomm Vuforia SDK, which is based on computer vision technology. The main challenge during development was displaying the very large 3D models of aero engine components, which were compressed before being used in our application. The application is also larger than typical Android applications. We overcame these problems and difficulties and successfully developed the application with a minimal number of bugs.

References

[1] R. T. Azuma, "A Survey of Augmented Reality," Presence: Teleoperators and Virtual Environments, vol. 6, no. 4, pp. 355-385, Aug. 1997.

[2] S. Karpischek, C. Marforio, M. Godenzi, S. Heuel, and F. Michahelles, "Mobile augmented reality to identify mountains," in Adjunct Proc. of AmI, ETH Zurich, Switzerland, 2009.

[3] J. Hull, "Paper-based augmented reality," in International Conference on Artificial Reality and Telexistence, IEEE, 2007.

[4] The Unity Team, "Unity Scripting Reference," Unity Documentation. http://docs.unity3d.com/Documentation/Manual/index.html

[5] Mashable, "Augmented Reality." http://mashable.com/category/augmented-reality

[6] R. S. Patkar, "Marker Based Augmented Reality Using Android OS," Pune University, ISSN 2277-128X, vol. 3, no. 5, May 2013.

[7] R. Silva, J. C. Oliveira, and G. A. Giraldi, "Introduction to Augmented Reality," National Laboratory for Scientific Computation, Brazil.

[8] S. Siltanen, "Theory and applications of marker-based augmented reality," VTT, ISSN 2242-1203, 2012.

[9] Vuforia Developer Portal.

[10] Raju Rathod, George Philip C., and Vijay Kumar B. P., "Design style for building interior 3D objects using marker based augmented reality."

[11] Toufan D. Tambunan and Heru Nugroho, "Marker textbooks for augmented reality on mobile learning."

[12] Maribeth Gandy Coleman, "Creating augmented reality authoring tools informed by designer workflow and goals."

[13] C. Juan, F. Beatrice, and J. Cano, "An augmented reality system for learning the interior of the human body," in Proc. Eighth IEEE International Conference on Advanced Learning Technologies (ICALT '08), 1-5 July 2008, pp. 186-188, doi: 10.1109/ICALT.2008.121.

[14] "Augmented Reality (Vuforia™) Tools and Resources," n.d. Web. Accessed 22 Nov. 2012.

Copyright

Copyright © 2023 Saban ., Dr. Naveen Kumar S.K. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET54548

Publish Date : 2023-06-30

ISSN : 2321-9653

Publisher Name : IJRASET
