Ijraset Journal For Research in Applied Science and Engineering Technology


Survey Paper on Virtual Reality in Gaming

Authors: Atharva Baikar, Prof. Rajeshwari Dandage

DOI Link: https://doi.org/10.22214/ijraset.2024.65230


Abstract

This paper examines the integration of virtual reality (VR) technology within gaming, focusing on the development of immersive virtual environments that enhance user interaction, presence, and satisfaction. With advancements in VR hardware and software, gaming experiences are becoming more lifelike and socially engaging. The VR-based metaverse concept explored in this research aims to provide interconnected virtual worlds with dynamic, persistent interactions. This paper discusses the benefits of VR in gaming, such as its potential for immersive storytelling, and its challenges, including technical limitations and high costs. The findings offer insights into the potential impact of VR in gaming and entertainment.


I. INTRODUCTION

Virtual reality (VR) is transforming the gaming industry by creating immersive, interactive environments where users can engage in real-time with virtual worlds. This technology, which uses advanced VR headsets and controllers, has the unique ability to immerse players in a sensory-rich experience that far surpasses conventional gaming in terms of engagement and realism. By enveloping players in lifelike simulations, VR gaming bridges the gap between reality and virtuality, offering players a heightened sense of presence that can significantly enhance gameplay satisfaction.

The adoption of VR in gaming addresses some limitations of traditional gaming, which often lacks immersive engagement and restricts social connectivity. Conventional gaming, while visually captivating, typically confines users to screen-based interactions. VR, however, introduces a multi-dimensional experience that allows players to interact physically and socially within the game space. This added dimension encourages a deeper level of emotional involvement and brings a sense of realism to virtual interactions, which could redefine the future of gaming as a more dynamic, experience-driven form of entertainment.

Various studies have highlighted advancements in VR technology that enable more realistic and personalized gaming experiences. Research in VR gaming has shown that users are more engaged when they feel their actions influence the virtual world around them, sparking interest in creating highly responsive and adaptive virtual environments. These studies have also shed light on the technical challenges of VR, such as motion sickness and high hardware costs, as well as limitations in content development that could impact widespread adoption. Despite these challenges, VR's potential to provide more interactive, user-centered gaming experiences is driving ongoing interest and innovation in the field.

The problem definition for VR in gaming centers on overcoming the technical and experiential limitations of existing virtual platforms. VR brings unique challenges, including the need for advanced hardware, the potential for user discomfort during extended play sessions, and the complexity of developing lifelike, engaging environments. This study further explores the scope of VR applications in gaming, with a focus on enhancing user engagement and developing more interactive, lifelike experiences that reflect the diverse needs and interests of modern gamers. By addressing these issues, VR has the potential to bring new forms of entertainment that are as socially meaningful as they are visually impressive, marking a significant evolution in digital interaction.

II. METHODOLOGY

This section describes the technical architecture of VR gaming systems, which comprises a series of interconnected core modules that enable immersive and interactive experiences. The core components of VR systems typically include graphics, AI, and networking modules, each contributing to the system’s ability to create dynamic, persistent virtual worlds where players can engage socially.

User Interface Module: The Unreal Motion Graphics (UMG) module acts as a visual UI designer, allowing developers to create intuitive user interfaces, menus, and HUDs (heads-up displays). This module is integral to providing a seamless and interactive user experience, as it allows players to navigate the VR environment efficiently.

Together, these modules form a cohesive VR architecture that supports real-time, socially interactive experiences, bridging the gap between traditional gaming and fully immersive virtual environments. This architecture is critical to VR gaming as it addresses the technical challenges of creating expansive and lifelike worlds.

A. Requirement Gathering

This initial phase is about understanding what the Virtual Reality project requires.

- Core Module: The foundation of VR gaming architecture involves essential classes and utilities, which manage resources and memory, ensuring efficient and stable gameplay. This module supports all other functionalities, making it crucial for structuring VR experiences.

- Rendering Module: The RenderCore module is responsible for managing graphics rendering processes, including shaders and resource management, to create visually engaging environments. It is essential for delivering high-quality visuals, which are central to VR's immersive potential.

- Artificial Intelligence (AI) Module: AI plays a significant role in VR by supporting non-player characters (NPCs) and enhancing interactivity. The AI module uses algorithms like behavior trees and machine learning to create intelligent, responsive NPCs, making virtual worlds feel more lifelike and adaptive to user actions.

- Networking Module: Multiplayer VR environments rely on a robust networking module to handle client-server communications and online functionalities. This component enables real-time interactions between players, facilitating the social aspects of VR and creating persistent shared spaces.
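The AI module described above mentions behavior trees as one way to drive NPCs. The following is a minimal sketch of that technique in plain Python, not the paper's implementation and not an engine API. The node classes, the `player_distance` blackboard key, the distance thresholds, and the action names are all assumptions chosen for illustration.

```python
from enum import Enum
from typing import Callable, Dict


class Status(Enum):
    SUCCESS = "success"
    FAILURE = "failure"


# The blackboard is a plain dict of facts the NPC knows (hypothetical keys).
Blackboard = Dict[str, object]


class Condition:
    """Leaf node: succeeds when the predicate holds for the blackboard."""

    def __init__(self, predicate: Callable[[Blackboard], bool]):
        self.predicate = predicate

    def tick(self, bb: Blackboard) -> Status:
        return Status.SUCCESS if self.predicate(bb) else Status.FAILURE


class Action:
    """Leaf node: records the chosen action on the blackboard and succeeds."""

    def __init__(self, name: str):
        self.name = name

    def tick(self, bb: Blackboard) -> Status:
        bb["action"] = self.name
        return Status.SUCCESS


class Sequence:
    """Composite: succeeds only if every child succeeds, in order."""

    def __init__(self, *children):
        self.children = children

    def tick(self, bb: Blackboard) -> Status:
        for child in self.children:
            if child.tick(bb) is Status.FAILURE:
                return Status.FAILURE
        return Status.SUCCESS


class Selector:
    """Composite: succeeds at the first child that succeeds."""

    def __init__(self, *children):
        self.children = children

    def tick(self, bb: Blackboard) -> Status:
        for child in self.children:
            if child.tick(bb) is Status.SUCCESS:
                return Status.SUCCESS
        return Status.FAILURE


def build_guard_tree() -> Selector:
    """Hypothetical guard NPC: attack if close, chase if near, else patrol."""
    return Selector(
        Sequence(Condition(lambda bb: bb["player_distance"] < 2.0), Action("attack")),
        Sequence(Condition(lambda bb: bb["player_distance"] < 10.0), Action("chase")),
        Action("patrol"),
    )


def decide(tree: Selector, player_distance: float) -> str:
    """Tick the tree once and return the action it selected."""
    bb: Blackboard = {"player_distance": player_distance}
    tree.tick(bb)
    return str(bb["action"])
```

Calling `decide(build_guard_tree(), 5.0)` selects the chase branch, because the first sequence fails its distance check and the selector falls through to the second. A production tree would usually add a running status for actions that span several frames.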

B. System Architecture

The system architecture of a VR gaming system involves several layers and components that work together to deliver immersive, interactive experiences. Below is an outline of the key components and their interconnections:

- User Interface (UI) Layer: Provides the interface through which players interact with the VR environment. It lets the user access settings, select options, and engage with in-game features such as inventory or mission objectives. Components: Unreal Motion Graphics (UMG), menus, HUDs (heads-up displays), and in-game controls.

- Application Logic Layer: Controls the game’s functionality, ensuring that events and game actions are processed correctly. It incorporates logic for AI, game rules, progression, and interactions, managing non-player characters (NPCs) and player actions. Components: core gameplay mechanics, event handling, AI logic, and game state management.

- Rendering Layer: Responsible for rendering the 3D environment, characters, and objects. The rendering system ensures smooth, high-quality visuals for VR immersion, with optimized shaders, lighting, and textures. It also includes spatial tracking and visual effects that respond to player movements. Components: RenderCore, shaders, graphical resource management, and the display pipeline.

- AI and Behavior Layer: Enhances the world with intelligent NPCs and dynamic interactions. The AI system uses techniques such as behavior trees and learning algorithms to enable NPCs to react adaptively to the player's actions and environmental changes. Components: NPC behaviors, machine learning algorithms, and decision trees.

- Networking Layer: Manages communication between players in multiplayer VR environments. It ensures that players can interact in real time, maintains consistency and synchronization across players’ devices, and supports online features such as multiplayer matches, shared spaces, and social interactions. Components: client-server architecture, peer-to-peer connections, and real-time multiplayer support.

- Resource Management Layer: Manages system resources so that memory and assets are optimized for performance. This layer handles loading and unloading of textures, models, and other assets, keeping gameplay smooth even in large and complex environments. Components: memory and asset management, data storage, and resource allocation.

- VR Hardware Layer: Provides the physical interface through which the player interacts with the VR environment. This layer includes all hardware elements that enable immersion, such as head tracking, hand motion controllers, and haptic feedback systems that simulate tactile sensations. Components: VR headset, motion controllers, tracking sensors, and haptic feedback devices.

- Data Layer (Optional): Handles the storage of user progress, settings, preferences, and multiplayer data. It can include cloud synchronization for saving game progress or analytics systems for gathering data on player behavior to improve the game. Components: cloud storage, player data, save states, and analytics.
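To make the Networking Layer's role in "maintaining consistency and synchronization" more concrete, the sketch below shows one common approach, a server-authoritative snapshot model, simulated in memory without real sockets. It is an illustration under stated assumptions rather than the architecture the paper prescribes. The `Server`, `Client`, and `MoveInput` names and the snapshot dictionary layout are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class MoveInput:
    """Hypothetical movement request sent by a client for one tick."""
    player_id: str
    delta: Vec3


@dataclass
class Server:
    """Holds the authoritative position of every player."""
    positions: Dict[str, Vec3] = field(default_factory=dict)
    tick: int = 0

    def join(self, player_id: str, spawn: Vec3 = (0.0, 0.0, 0.0)) -> None:
        self.positions[player_id] = spawn

    def step(self, inputs: List[MoveInput]) -> dict:
        """Apply all inputs for this tick and return a snapshot to broadcast."""
        for move in inputs:
            if move.player_id not in self.positions:
                continue  # ignore input from players who never joined
            x, y, z = self.positions[move.player_id]
            dx, dy, dz = move.delta
            self.positions[move.player_id] = (x + dx, y + dy, z + dz)
        self.tick += 1
        return {"tick": self.tick, "positions": dict(self.positions)}


@dataclass
class Client:
    """Keeps a local copy of the world, replaced by each newer snapshot."""
    player_id: str
    last_tick: int = 0
    world: Dict[str, Vec3] = field(default_factory=dict)

    def apply_snapshot(self, snapshot: dict) -> None:
        if snapshot["tick"] <= self.last_tick:
            return  # stale or duplicate snapshot, e.g. delivered out of order
        self.last_tick = snapshot["tick"]
        self.world = dict(snapshot["positions"])
```

Because every client replaces its local world with the server's snapshot, all clients that have received the same tick hold identical state. Real VR titles typically add client-side prediction and interpolation on top of this to hide latency.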

C. Algorithm

An important part of the system’s functionality is the algorithm. The following steps outline how the system works:

- Initialize VR System: Set up VR hardware, game state, and environment.

- Capture Player Input: Read player inputs from VR headset and controllers.

- Update Game State: Process player actions and update game objects and NPCs.

- Update AI: Execute AI algorithms for NPCs based on player actions and the environment.

- Synchronize Multiplayer: Communicate with the server to sync game state across players.

- Render Scene: Update and display the virtual environment and objects.

- Manage Resources: Load and unload game assets as required to optimize memory.

- Provide Feedback: Trigger haptic feedback for player interactions.

- Check Exit Conditions: Monitor for game end conditions or user exit requests.
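The steps above can be read as one iteration of a frame loop. The sketch below arranges them in that order using plain Python. It is a simplified illustration, not engine code: the `VRGame` and `Frame` classes are hypothetical, scripted frames stand in for real headset and controller input, and most steps only record that they ran.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Frame:
    """Hypothetical input sample for one frame."""
    trigger_pressed: bool = False
    quit_requested: bool = False


class VRGame:
    def __init__(self, scripted_frames: List[Frame]):
        # Scripted frames stand in for real VR hardware input (assumption).
        self.scripted_frames = list(scripted_frames)
        self.log: List[str] = []
        self.score = 0
        self.haptic_pulses = 0
        self.running = False

    def initialize(self) -> None:
        self.running = True
        self.log.append("initialize")

    def capture_input(self) -> Frame:
        self.log.append("capture_input")
        if self.scripted_frames:
            return self.scripted_frames.pop(0)
        return Frame(quit_requested=True)  # no more input: request exit

    def update_game_state(self, frame: Frame) -> None:
        self.log.append("update_game_state")
        if frame.trigger_pressed:
            self.score += 1

    def update_ai(self, frame: Frame) -> None:
        self.log.append("update_ai")

    def synchronize_multiplayer(self) -> None:
        self.log.append("synchronize_multiplayer")

    def render_scene(self) -> None:
        self.log.append("render_scene")

    def manage_resources(self) -> None:
        self.log.append("manage_resources")

    def provide_feedback(self, frame: Frame) -> None:
        self.log.append("provide_feedback")
        if frame.trigger_pressed:
            self.haptic_pulses += 1  # stand-in for a haptic device call

    def check_exit_conditions(self, frame: Frame) -> None:
        self.log.append("check_exit_conditions")
        if frame.quit_requested:
            self.running = False

    def run(self) -> None:
        """Run the loop in the order of the listed algorithm steps."""
        self.initialize()
        while self.running:
            frame = self.capture_input()
            self.update_game_state(frame)
            self.update_ai(frame)
            self.synchronize_multiplayer()
            self.render_scene()
            self.manage_resources()
            self.provide_feedback(frame)
            self.check_exit_conditions(frame)
```

For example, `VRGame([Frame(trigger_pressed=True), Frame(quit_requested=True)]).run()` executes two full iterations and then stops. A real VR loop would also pace itself to the headset's refresh rate, which this sketch leaves out.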

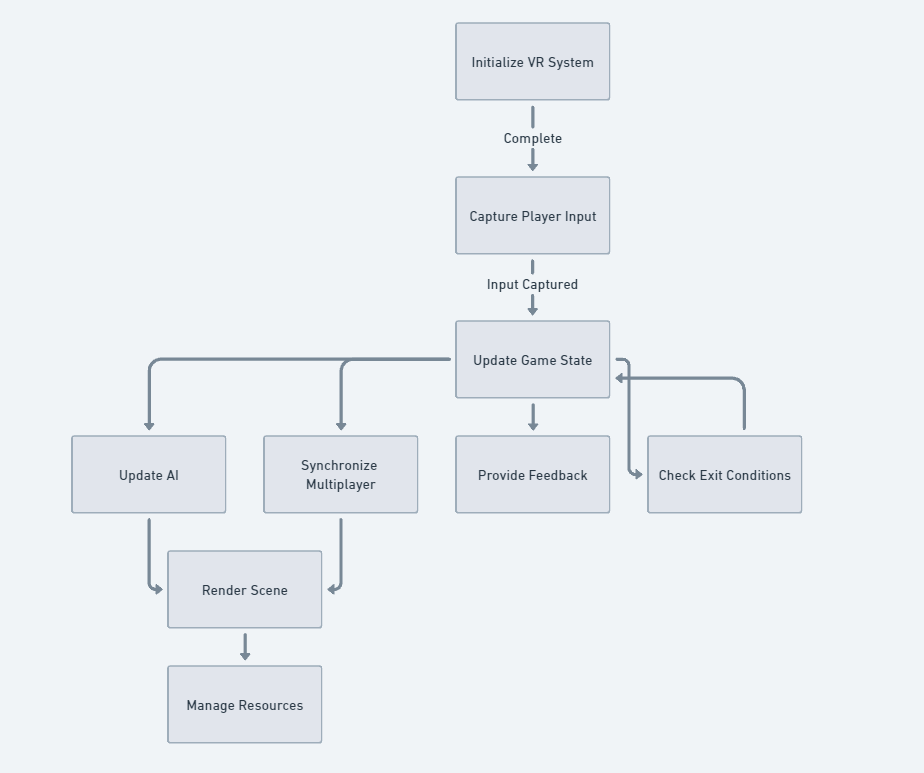

D. Flowchart

Fig. 1 Flowchart

As shown in Fig. 1, the flowchart depicts the sequence of operations in the system, from initialization and player input through to the final display of results. Each step is described below.

- Initialize VR System: The system boots up and establishes connections with all VR hardware, including headsets and controllers. This step ensures all necessary components are properly configured and ready for use.

- Capture Player Input: The system continuously monitors and records all player actions, including head movements, controller inputs, and gestures. This real-time input capture is crucial for maintaining immersion and responsiveness.

- Update Game State: This is the central processing hub that takes the player's input and determines how it affects the game world. It branches out to multiple subsystems to handle different aspects of the game.

- Update AI: The artificial intelligence systems are updated to respond to the current game state and player actions. This includes updating NPC behaviors, enemy movements, and other AI-controlled elements.

- Synchronize Multiplayer: For multiplayer games, this step ensures all players' games are synchronized by sharing relevant data between clients. It maintains consistency in the shared virtual environment.

- Provide Feedback: The system generates appropriate responses to player actions, such as haptic feedback, sound effects, or visual cues. This helps create a more immersive and responsive experience.

- Check Exit Conditions: The system monitors for conditions that would end the current game session or trigger a transition. This could include completing objectives, player death, or manual exit requests.

- Render Scene: The visual elements of the game are processed and displayed to the player through the VR headset. This includes creating the stereoscopic 3D view and applying any visual effects.

- Manage Resources: The system handles memory management, asset loading and unloading, and optimization of system resources. This helps maintain smooth performance and reduces the risk of memory leaks or crashes.
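The Manage Resources step can be illustrated with a small asset cache that loads assets on demand and unloads the least recently used ones when a memory budget is exceeded. This is one possible policy, offered as a sketch under stated assumptions. The paper does not specify an eviction strategy, and the `AssetCache` class, its byte budget, and the `loader` callback are hypothetical.

```python
from collections import OrderedDict
from typing import Callable, List


class AssetCache:
    """Least-recently-used cache with a byte budget (illustrative policy)."""

    def __init__(self, budget_bytes: int, loader: Callable[[str], bytes]):
        self.budget_bytes = budget_bytes
        self.loader = loader  # hypothetical function that reads an asset
        self._assets: "OrderedDict[str, bytes]" = OrderedDict()
        self.used_bytes = 0

    def get(self, name: str) -> bytes:
        """Return the asset, loading it and evicting older assets if needed."""
        if name in self._assets:
            self._assets.move_to_end(name)  # mark as most recently used
            return self._assets[name]
        data = self.loader(name)
        self._assets[name] = data
        self.used_bytes += len(data)
        self._evict()
        return data

    def _evict(self) -> None:
        # Unload oldest assets first; always keep the asset just requested.
        while self.used_bytes > self.budget_bytes and len(self._assets) > 1:
            _, evicted = self._assets.popitem(last=False)
            self.used_bytes -= len(evicted)

    def loaded(self) -> List[str]:
        """Names of resident assets, from least to most recently used."""
        return list(self._assets)
```

With an 8-byte budget and 4-byte assets, requesting `a`, `b`, `a`, then `c` unloads `b`, since `a` was touched more recently. Engines often combine such a policy with streaming based on player position, which is outside the scope of this sketch.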

Conclusion

VR technology is redefining gaming by offering immersive, interactive experiences that engage players in unique ways. With advancements in cloud VR, AI integration, and mixed reality, future developments promise even greater opportunities for personalized and accessible gaming experiences. As the technology continues to evolve, the future of VR gaming will focus on expanding accessibility, enhancing user comfort, and achieving seamless integration across platforms. These innovations will not only enhance the realism of virtual worlds but also ensure that VR gaming becomes more inclusive and enjoyable for a broader audience, paving the way for new, dynamic forms of interactive entertainment.


Copyright

Copyright © 2024 Atharva Baikar, Prof. Rajeshwari Dandage. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Paper Id : IJRASET65230

Publish Date : 2024-11-13

ISSN : 2321-9653

Publisher Name : IJRASET
